Beruflich Dokumente

Kultur Dokumente

TC Verification Final 11nov13

Hochgeladen von

Nguyễn ThaoOriginalbeschreibung:

Originaltitel

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

TC Verification Final 11nov13

Hochgeladen von

Nguyễn ThaoCopyright:

Verfügbare Formate

Verification methods for tropical cyclone forecasts

WWRP/WGNE Joint Working Group on Forecast Verification Research

November 2013

Joint Working Group on Forecast Verification Research

World Weather Research Program and

Working Group on Numerical Experimentation

World Meteorological Organization

Verification methods for tropical cyclone forecasts

Contents

Foreword

1. Introduction

2. Observations and analyses

2.1 Reconnaissance

2.2 Surface networks

2.3 Surface and airborne radar

2.4 Satellite

2.5 Best track

2.6 Observational uncertainty

3

3

6

7

8

10

12

3. Forecasts

12

4. Current verification practice Deterministic forecasts

4.1 Track and storm center verification

4.2 Intensity

4.3 Storm structure

4.4 Verification of weather hazards resulting from TCs

4.4.1 Precipitation

4.4.2 Wind speed

4.4.3 Storm surge

4.4.4 Waves

14

14

18

19

21

21

22

23

24

5. Current verification practice Probabilistic forecasts and ensembles

5.1 Track and storm center verification

5.2 Intensity

5.3 Verification of weather hazards resulting from TCs

5.3.1 Precipitation

5.3.2 Wind speed

5.3.3 Storm surge

5.3.4 Waves

25

25

30

33

33

35

37

38

6. Verification of sub-seasonal, monthly, and seasonal TC forecasts

40

7. Experimental verification methods

7.1 Verification of deterministic and categorical forecasts of extremes

7.2 Spatial verification techniques that apply to TCs

7.3 Ensemble verification methods applicable to TCs

7.3.1 Probability ellipses and cones derived from ensembles

7.3.2 Two-dimensional rank histograms

7.3.3 Ensemble of object properties

7.3.4 Verification of probabilistic forecasts of extreme events

7.4 Verification of genesis forecasts

7.5 Evaluation of forecast consistency

40

41

42

45

45

46

47

47

49

50

8. Comparing Forecasts

52

9. Presentation of verification results

53

Acknowledgments

53

References

54

Appendix 1. Brief description of scores

Appendix 2. Guidelines for computing aggregate statistics

Appendix 3. Inference for verification scores

Appendix 4. Software tools and systems available for TC verification

67

77

79

81

Verification methods for tropical cyclone forecasts

Appendix 5. List of acronyms

Appendix 6. Contributing authors

82

84

Verification methods for tropical cyclone forecasts

Foreword

Tropical cyclones (TCs) are one of the most destructive forces of nature on earth. As such they have

attracted a long tradition of research into their structure, development and movement. This has been

accompanied by an active forecast program in countries which they affected, driven by the need to

protect life and property and to mitigate the impact when land areas and sea assets are threatened.

Numerical weather prediction (NWP) models became the primary track aids for TC prediction about

two decades ago. Due to model improvements and increased resolution in recent years, the model

skill in predicting TC location has also increased greatly (although prediction of TC intensity with

dynamical models remains a challenge). A measure of the transition toward increased importance of

TC prediction with NWP models is represented by the fact that the accuracy of TC prediction has

become an important indicator of the quality of an NWP model. Even NWP centers in countries that

are not affected by TCs have shown increased interest in TC prediction.

All this increase in NWP prediction against a backdrop of a long tradition of operational forecasting has

shone a bright beacon on the verification methods used to evaluate TC forecasts, and led to a request

to the WMO Joint Working Group on Forecast Verification for some recommendations on the

verification of TCs. This document is in answer to that request.

In preparing this document, we quickly realized that the verification of TCs is a very broad subject.

There are many weather and marine parameters to consider, including storm surge and wave height,

storm track and intensity, minimum pressure and maximum wind speed, (land) strike probabilities, and

wind and precipitation for landfalling storms. User needs for TC verification information are rather

diverse, ranging from the modelers need for information on the accuracy of the detailed three

dimensional structures of incipient storms at sea to the disaster planners need for information on the

accuracy of forecasts of landfall timing, location and intensity. It also soon became clear that the

science of TC verification is developing rather quickly at the present time. All of these factors led us to

decide that it would not be wise to make specific pronouncements on recommended verification

methods. Rather, this document should be considered as an annotated (or commented) survey of

verification methods available. When discussing specific methods, we have tried to be clear about the

intended purposes of each.

In order to respect the dichotomy of a long history of traditional verification of manual forecasts and

the recent upsurge of verification methods for NWP forecasts, we have separated current verification

practices and experimental methods into different chapters, even though some of todays experimental

methods may very soon become standard practice. Probabilistic forecasting of TC-generated weather

by NWP models is also progressing rapidly following the development of ensemble forecast systems.

Thus, we describe probabilistic verification methods separately from deterministic forecast verification

methods.

This survey is certainly not exhaustive. While we have tried to include discussion of verification

methods for all of the parameters of interest in TC forecasting, and also for monthly and seasonal TC

forecasts, we have most probably left out some interesting methods. The authors would be happy to

hear from anyone with suggestions for improvements.

Welcome to the world of TC verification!

Verification methods for tropical cyclone forecasts

1. Introduction

Tropical Cyclones (TCs) are both extreme events in the statistical sense and high impact weather

events for any affected area of the world. While they remain over ocean areas, their impact is

confined mainly to aviation and marine transportation, naval operations, offshore drilling operations,

fishing and pleasure boating. While at sea, the risks come mainly from extreme winds and local

waves, and atmospheric turbulence. However once TCs threaten to hit land areas, they become a

much greater hazard. Risks to life and property from landfalling TCs come not only from the extreme

winds, but also from coastal storm surges, rainfall-induced flooding and landslides, and tornadoes.

th

The recommendations that resulted from the 2010 7 WMO International Workshop on Tropical

Cyclones included a specific recommendation focused on verification metrics and methods: "The

IWTCVII recommends that the WMO, via coordination of the WWRP/TCP and WGNE assist in

defining standard metrics by which operational centres classify tropical cyclone formation, structure,

and intensity, and that these metrics serve as a basis to collect verification statistics. These statistics

could be used for purposes including objectively verifying numerical guidance and communicating with

the public." The goal of this document is to facilitate this effort.

Verification of TCs is a multi-faceted problem. Simply put, verification is necessary to understand the

accuracy, consistency, precision, discrimination and utility of current forecasts, and to direct future

development and improvements. As in all verification efforts, it is important to identify the user of the

information and the specific questions of interest about the quality of the forecasts so that the

appropriate methodology can be selected.

Modelers are most likely to be interested in the storm parameters which help them evaluate their

model and identify limitations in order to direct research efforts. They might be interested in the

accuracy of the storm track, an assessment of the storms predicted intensity either in terms of central

mean sea level pressure and/or maximum sustained winds, and in the size of the storm. Modelers

who work on TC modeling in particular would also be interested in storm structure, rapid

intensification, genesis, and other aspects of the TC lifecycle. Some of these parameters are

amenable to verification; however, process studies often are the best approach for many aspects, and

it is not possible to consider that depth of forecast evaluation in this document. To fully assess models,

one must consider their ability to generate storms without too many false alarms, and the ability to

determine the location, intensity and timing of landfall as accurately as possible. Modelers would also

normally be interested in the verification of probabilistic information generated from the model,

including uncertainty cones and, more directly, probabilities obtained from ensembles. For storms that

hit land, the interest shifts to variables that are more directly related to the impacts, such as

quantitative precipitation forecasts (QPF), near-surface winds, and storm surge.

Forecasters are likely to be interested in accuracy information for the same storm-related variables as

modelers, but are perhaps less likely to be interested in verification of the three dimensional structure

as simulated by the model. Forecasters are likely to also be interested in assessments of the

accuracy of processed model output such as storm surges and ocean waves, in addition to

evaluations of surface wind and QPF. Forecasters would also be particularly interested in verification

of landfall timing, location and intensity information, including probabilistic landfall information,

because of its importance in guiding evacuation and other storm preparedness decisions.

Emergency managers and other users of TC forecasts such as business, industry and government

would be expected to be interested in verification information about those storm parameters which

directly impact their decision-making processes (e.g., tides, waves, surge, rainfall). This would include

verification of all forms of information on location, timing and intensity of hazardous winds and surge,

including probability information. It would also include verification of QPF and wind forecasts for

landfalling storms. Compared to forecasters, external stakeholders might be interested in verification

information in different forms, for example warning lead time for specific severe events, or precipitation

categories which are specific to their decision models.

The general public and the media keenly monitor the progress of a TC as it approaches land,

especially when the region likely to be affected is heavily populated and the impact has the potential to

2

Verification methods for tropical cyclone forecasts

be devastating. The human toll, damage to homes, businesses and infrastructure, and disruption to

services are of immediate concern. However, making quantitative forecasts for storm impact is difficult,

and methods for verifying such forecasts are in their infancy. The media and public also take great

interest in the severity of the most intense cyclones, typically comparing them to other extreme TCs

that may have occurred in that region in the last century. When a prediction is for "the worst hurricane

ever to hit" then verification of this prediction is sure to be of interest.

In 2012 a new international journal entitled Tropical Cyclone Research and Review was established by

the ESCAP/WMO Typhoon Committee. In addition to publishing research on tropical cyclones it also

publishes reviews and research on hydrology and disaster risk reduction related to tropical cyclones.

The first issue provides a review of operational TC forecast verification practice in the northwest

Pacific region (Yu et al. 2012).

This document concentrates on quantitative verification methods for several parameters associated

with TCs. Since TCs occur sporadically in space and time, many TC forecast evaluations focus on

individual storm case studies. This report focuses more on quantitative verification methods which can

be used to measure the quality of TC forecasts compiled over many storms. The focus is on forecast

accuracy; economic value in terms of cost/loss analysis is not considered here. Examples from the

literature and from operational practice are included to illustrate relevant verification methodologies.

Reference is frequently made to websites where examples of TC forecasts, observations, and

evaluations can be viewed online. This document does not address the evaluation of TC related

processes such as boundary layer evolution, momentum transport, sea surface cooling and

subsurface thermal structure, etc., which are better addressed by detailed research studies, nor does

it discuss verification of the large-scale fields related to TC prediction such as steering flow or

environmental shear.

Many of the methods described here are the same as methods used for other more common weather

phenomena. However, some special attributes of TC forecasts impact the choices of verification

approaches. For example, TC forecasts typically have small sample sizes due to the relative

infrequency of TCs compared to other weather phenomena. This sample size difference is important

to take into account when verifying TC forecasts. Another special concern is the quantity and quality

of observational datasets available to evaluate TC forecasts. In particular, these datasets are typically

sparse or intermittent and may infer TC characteristics from indirect measurements (e.g., from

satellites) rather than directly measure them. Thus, it is of importance to consider the observations

before discussing the verification approaches.

2. Observations and analyses

Observations of TCs and the weather associated with them come from a variety of sources. Table 1

shows the types of observations that have been used over the years to monitor TCs in the North

Pacific, North Indian Ocean, and Southern Hemisphere regions (Chu et al. 2002). Note that military

aircraft reconnaissance is still undertaken by the U.S. Air Force in other basins, though not shown in

this table. This section briefly discusses the observational data that can be used to verify forecasts of

TCs and associated weather hazards. A summary of observations and analyses is given in Table 2.

More detailed discussions of observations of TCs can be found in WMO (1995) and Chan and Kepert

(2010).

2.1 Reconnaissance

TC aircraft reconnaissance flights provide in situ and remotely-sensed observations of TC structure

and can be used to infer information about important parameters related to TC intensity, development

and motion. Some flights are for research purposes only, but most flights in some basins (e.g.,

Atlantic) are operational and provide consistent reconnaissance information for many storms. The U.S.

rd

Air Force Weather Reserve 53 Weather Reconnaissance Squadron also uses ten Lockheed-Martin

C-130-J aircraft for hurricane location fixing in the Atlantic and Eastern and Central Pacific basins;

these aircraft provide the largest number of TC reconnaissance flights and are a very important source

of information about TC location and intensity. The National Oceanic and Atmospheric Administration

3

Verification methods for tropical cyclone forecasts

Table 1. Significant events affecting TC observations in the western North Pacific, North Indian Ocean

and Southern Hemisphere regions. Thick arrows indicate that the observation source or tool is still in

service. Acronyms are given in Appendix 5. (From Chu et al. 2002)

1900 1910 1920 1930 1940 1950 1960 1970 1980 1990 2000 2010

=Ship logs and land observations

=Transmitted ship and land observations

=Radiosonde network

=Military aircraft reconnaissance===

=Research aircraft reconnaissance

=Radar network

=Meteorological satellites

=Satellite cloud-tracked & watervapor-tracked wind

=SSM/I &QuikSCAT

wind, MODIS

=Omega and GPS dropsondes

=Data buoys

=SST analysis

=Dvorak technique

=DOD TC documentation published (ATR, ATCR)

=McIDAS and other interactive

systems (AFOS, ATCF, AWIPS and

MIDAS, etc.)

1900 1910 1920 1930 1940 1950 1960 1970 1980 1990 2000 2010

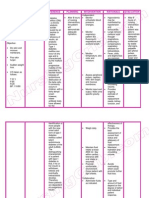

Table 2. Suggested observations and analyses for verifying forecasts of TC variables and associated

hazards. See text for descriptions.

Variable

Suggested observations

Suggested analyses

Position of storm

Reconnaissance flights, visible & IR satellite

Best track, IBTrACS

center

imagery, passive microwave imagery

Intensity maximum

Dropwinsonde, microwave radiometer

Best track, IBTrACS,

sustained wind

Dvorak analysis

Intensity central

Ship, buoy, synop, AWS

IBTrACS, Dvorak

pressure

analysis

Storm structure

Reconnaissance flights, Doppler radar, visible H*Wind, MTCSWA,

& IR satellite imagery, passive microwave

ARCHER

Storm life cycle

NWP model analysis

Precipitation

Wind speed over land

Wind speed over sea

Storm surge

Waves significant

wave height

Waves spectra

Rain gauge, radar, passive microwave,

spaceborne radar

Synop, AWS, Doppler radar

Buoy, ship reports, dropwinsondes,

scatterometer, passive microwave imagers

and sounders

Tide gauge, GPS buoy

Buoy, ship reports, altimeter

Altimeter

Blended gauge-radar,

blended satellite

H*Wind, MTCSWA

Blended analyses

Verification methods for tropical cyclone forecasts

(NOAA) P-3 aircraft have been used for the past 30 years, and since 1996 a Gulfstream IV aircraft has

performed operational synoptic surveillance missions (and, more recently, research missions) to

measure the environments of TCs that have the potential to threaten U.S. coastlines and territories

(Aberson 2010). In addition, Taiwan implemented the Dropwinsonde Observations for Typhoon

Surveillance near the Taiwan Region (DOTSTAR) program in 2003. Research aircraft have been

supported by the U.S. Naval Research Laboratory (the NRL P-3) and the U.S. National Aeronautics

and Space Administration (NASA) (high-altitude aircraft), as well as Canada (a Convair-580 which has

instrumentation focusing on collection of microphysical data), and the Falcon 20 Aircraft of the

Deutsches Zentrum fr Luft-und Raumfart (DLR) which was used for typhoon research in the T-PARC

project in the western Pacific in 2008. Unfortunately, research projects typically focus on a few storms

and do not provide long-term consistent observations of TCs. New observation platforms that may

provide more complete observational coverage for TCs in the future include unmanned aerial

surveillance (UAS) vehicles. However, routine observations are currently only available for TCs that

occur in the Atlantic Basin, with occasional reconnaissance missions in the western, eastern, and

central Pacific basins. In situ data are rarely available for other basins.

Measurements that are available from aircraft reconnaissance missions include flight-level wind

velocity, pressure, temperature, and moisture (e.g., Aberson 2010). Surface wind speeds are

measured by Stepped Frequency Microwave Radiometer (SFMR) and dropwinsondes, and also can

be inferred from flight level winds. In addition, dropwinsondes provide profiles of temperature, wind

velocity, and moisture within and around the TC. On research and NOAA aircraft, Doppler radar and

Doppler wind lidar observations are collected and can provide information regarding winds and

precipitation in the area of the aircraft flight path. Some ocean near-surface measurements are

provided by bathythermographs that can be released from the aircraft as well as from a scanning radar

altimeter (SRA) which provides surface directional wave spectra information. Additional probes on

some of the research aircraft also provide cloud microphysical information, distinguishing the cloud

water contents between ice and liquid particles, and giving measurements of particle sizes. Many of

these datasets are available from the Hurricane Research Division (HRD) at NOAAs Atlantic

Oceanographic and Meteorological Laboratory (AOML; http://www.aoml.noaa.gov/hrd/index.html).

Typically, aircraft observations have not been used for verification, except through their incorporation

in defining the best track (see Section 2.5). However, the observations, particularly from the synoptic

surveillance aircraft, have been found to contribute to improvement in operational numerical weather

prediction of TCs, through their use in defining initial conditions through the data assimilation system

(e.g., Aberson 2010). These observations also have been found to be very valuable for investigating

model or forecast diagnostics and they are the foundation for creation of the Best Track when they are

available (see Section 2.5). However, their potential benefit in forecast verification analyses has not

been fully exploited.

The H*Wind product produced by the HRD is a TC-focused Cressman-like analysis (Cressman 1959)

of surface wind fields that takes into account all available observations ships, buoys, coastal

platforms, surface aviation reports, and aircraft data adjusted to the 10 m above the surface with

consistent time averaging to create 6-hourly guidance on TC wind fields

(http://www.aoml.noaa.gov/hrd/Storm_pages/background.html; Powell 2010, Powell and Houston

1998). Manual quality control procedures are required to create H*Wind analyses and to make them

available to TC forecasters. Each analysis is representative of a 4-6-h period and includes information

about the radius and azimuth of maximum winds and estimates of the extent of operationally

significant wind thresholds (i.e., the wind radii; see Section 2.4). An example of an H*Wind analysis for

Hurricane Katrina is shown in Figure 1. Limitations on H*Wind accuracy are connected to the

availability of appropriate observations and the quality of those observations. Uncertainties associated

with each of the measurements that contribute to H*Wind are detailed in Powell (2010). These

measurements, typically include all available surface weather observations (e.g., ships, buoys, coastal

platforms, surface aviation reports, reconnaissance aircraft data adjusted to the surface). The H*Wind

analyses also make used of various kinds of observations from satellite platforms such as the Tropical

Rainfall Monitoring Mission (TRMM; see Section 2.4). Currently H*Wind analyses require in situ

measurements and/or observations from reconnaissance missions but future global versions may rely

primarily on satellite measurements. It is important to note that because H*Wind is an analysis, it is

unlikely to be able to fully represent the peak winds.

5

Verification methods for tropical cyclone forecasts

Figure 1. Example of an H*Wind analysis from NOAA/AOML/HRD, for Hurricane Katrina (2005)

(http://www.aoml.noaa.gov/hrd/data_sub/wind2005.html).

2.2 Surface networks

Surface networks used for standard meteorological observations provide valuable data for diagnosing

not only the weather associated with a TC, but also to infer the position and intensity of the TC and

significant wave heights These observations are generally available over land, but include data from

buoys and other measuring systems that may extend over coastal waters. Surface networks include

synoptic and automatic weather stations, rain gauge networks, tide gauges, moored buoys, ships, etc.

The surface networks are most useful for landfalling and nearshore TCs, as ships try to avoid these

storms. The surface variables of greatest relevance to TCs include surface pressure, sustained wind

speeds and gust speeds, rain rate and accumulation, storm surge, and significant wave height.

The extreme environment associated with TCs provides many challenges for surface instrumentation.

In the worst case an instrument can be incapacitated or even destroyed by waves, strong winds or

floods, or power outages, so no measurements are recorded. Even when the instrument continues to

record data, the observations may be severely compromised by damage or obstruction due to debris

and structures that may have moved. Rainfall measured by standard tipping bucket rain gauges is

sensitive to wind-related under-catch, which can be significant in TCs (e.g., Duchon and Biddle 2010).

An important limitation of buoy wind speed measurements is sheltering of the buoy in high wave

conditions, which leads to underestimation of high winds at sea (Pierson 1990).

Measurement of wind speed is complicated by the fact that different averaging periods are in use in

different regions of the globe. Although the WMO recommends a standard averaging period of 10 min

for the maximum sustained wind, some countries use 1-, 2-, and 3-min averages, making it necessary

to apply conversion factors in order to compare intensities between regions (Harper et al. 2009, Knaff

and Harper 2010). Powell (2010) provides estimates of wind measurement uncertainties using

propeller and cup anemometers, which range from 5-15% for a variety of averaging periods from 10 s

to 10 min.

Storm surge is the water height above the predicted astronomical tide level due to the surface wind

stress, atmospheric pressure deficit, and momentum transfer from breaking surface wind waves (wave

6

Verification methods for tropical cyclone forecasts

setup). The storm surge can be measured by an offshore GPS buoy or at a tide gauge by subtracting

the astronomical tide from the measured sea level. The maximum storm surge at a particular location

can be estimated from the high water mark following a TC by subtracting from the storm tide the

contributions from the astronomical tide and freshwater flooding. A recent strategy by the US

Geological Survey to place a network of unvented pressure transducers in the path of an oncoming TC

to measure the timing, spatial extent, and magnitude of storm tide has shown promise in providing

comprehensive measurements that can be used to verify forecasts (e.g., Berenbrock et al. 2009).

Sampling issues greatly affect the measurement and representation of important characteristics of

TCs and associated severe weather. Especially for TCs with smaller eyes, the most severe part of the

TC may not pass over any instrument in the network. Estimating maximum winds and central

pressures from surface observations requires assumptions that may lead to erroneous estimates.

Rainfall is highly variable in space and time, and rain gauge networks are generally not dense enough

to adequately capture the intensity structures present in the rain fields. Remotely sensed (radar or

satellite) precipitation fields, particularly if bias-corrected using gauge data, may be preferable for

estimating areal TC rainfall and verifying rain forecasts.

2.3 Surface and airborne radar

Traditionally, weather radar observations have been and continue to be used to evaluate TC

properties (e.g., position, vertical structure, horizontal rainbands, surface rainfall, three dimensional

winds). Land-based weather radar observations are relatively limited because of the infrequent

occurrence of landfalling TCs within range of a coastal or inland radar site once it makes landfall.

However, on the occasion when this does occur, radar observations provide nearly continuous

position information for TCs as they approach the coast and are useful for diagnostic forecast

verification for several impacts associated with TCs. For instance, radar rainfall estimates can be used

to evaluate rainfall forecasts that could provide guidance on the likelihood of TC impacts such as

urban flash flooding and landslides (Kidder et al., 2005). However, radar rainfall estimates from TCs

tend to have large uncertainties (Gourley et al. 2011) due to a variety of factors including, but not

limited to radar calibration, uncertainties in radar reflectivity-rainfall (Z-R) relationships, attenuation of

the radar signal in very heavy rain, and horizontal and vertical sampling variation at varying distances

from the radar (Austin 1987). When using radar rainfall estimates for forecast verification, they should

be combined with a surface reference (e.g., rain gauges) to minimize this uncertainty. Land-based

Doppler radar observations can be useful to evaluate forecasts of wind speed of land-falling TCs;

however, some inferences are required which result in additional uncertainty since (i) the radar

only measures the component of the wind that is directed toward the radar and (ii) the returns need to

be adjusted to the surface.

Use of radar-observed inner-core structures and their temporal evolutions to evaluate a TC model

simulation is still rare in the literature. However, advances in airborne radar observations (e.g., NOAA

tail Doppler observations) over the past 20-30 years have been very useful for providing information

about three-dimensional features in TCs such as concentric and/or double eyewalls, an outward

sloping with height of the maximum tangential wind speed, a layer of radial inflow in the boundary

layer, discrete convective-scale bubbles of more intense upward motion superimposed on the rising

branch of the secondary circulation, convective-scale downdrafts located throughout and below the

core of maximum precipitation in the eyewall, and wind patterns of eyewalls and rainbands (e.g.,

Marks and Houze 1984). Many basic features characterizing a TC, such as the eye, eyewall, spiral

rainbands, and other typical inner-core structures, are reproducible with high-resolution mesoscale

models (Zou et al. 2010). Since the early 1980s, airborne radar observations have been available in

some situations to verify these attributes of the inner core of the storm. Surface-based radar

observations, when TCs pass within the radar swath, have also been used to study the intensification

of TCs associated with small-scale spirals in rainbands (Gall et al., 1998). These kinds of observations

have been used in recent years for advanced data assimilation for TC prediction (e.g., Evenson 1994,

Hamill 2006, Zhang et al. 2011), and would also provide valuable information on TC storm structure

that should be useful for verification studies.

Verification methods for tropical cyclone forecasts

2.4 Satellite

Visible (VIS), water vapor, and infrared (IR) satellite imagery are routinely used in real time and postanalysis to help estimate the position of the center of the low level circulation (the "fix") of a TC,

especially when the TC is over water. High-spatial-resolution imagery from geostationary visible

channels, and from imagers such as the Advanced Very High Resolution Radiometer (AVHRR), the

Visible Infrared Imaging Radiometer Suite (VIIRS), and the Moderate Resolution Imaging

Spectroradiometer (MODIS) on polar orbiting satellites, provide detailed views of cloud-top structure.

Frequent temporal sampling from geostationary satellites, typically 30-60 min and up to 5-15 min

frequency for rapid-scan, allows looping of images to better estimate TC position, motion and wind

velocity. VIS/IR data should be used along with other sources of information (reconnaissance,

microwave imagery, scatterometer, radar, etc.) to avoid ambiguities in the eye position due to thick,

less organized clouds obscuring surface features in early stages of TC development, and when upperlevel cloud features separate and obscure the low-level center (Hawkins et al. 2001; Edson et al.

2006).

Passive microwave imagers such as the Defense Meteorological Satellite Program (DMSP) Special

Sensor Microwave Imager/Sounder (SSM/I, SSMIS), the TRMM Microwave Imager (TMI), the

Advanced Microwave Scanning Radiometer (AMSR-E) on the NASA Earth Observing System (EOS)

satellite, and AMSR2 on the GCOM-W1 satellite, are not strongly affected by cloud droplets and ice

particles, but are sensitive to precipitation. These instruments can "see" through the cloud top into the

TC to characterize the structure of rain bands and the eye wall. These data are extremely useful in

determining the position of the low-level center, and in monitoring structural changes, including during

rapid intensification (Velden and Hawkins 2010).

Because passive microwave sensors are on polar-orbiting satellites, they do not have the same high

temporal frequency as VIS/IR data. To help fill the temporal gaps, Wimmers and Velden (2007)

developed a morphing technique with rotation called Morphed Integrated Microwave Imagery at the

Cooperative Institute for Meteorological Satellite Studies (CIMSS) (MIMIC) that creates a TC-centered

microwave image loop. More recently they developed an improved objective algorithm for resolving

the rotational eye of a TC, called Automated Rotational Center Hurricane Eye Retrieval (ARCHER)

(Wimmers and Velden 2010). Information on eye wall structure and size and cyclone intensity can be

estimated from ARCHER retrievals. The most recent version (v3.0) weights geo-IR and microwave

fixes according to their expected accuracy, favoring the high temporal resolution center fixes from

Geo-IR imagery during more intense stages of the TC for more precise storm-tracking, and the more

accurate but less frequent polar-orbiter microwave imagery during weaker stages (C. Velden, personal

communication).

The satellite-based Dvorak technique is used to estimate the intensity of TCs in Regional Specialized

Meteorological Centers (RSMCs) and TC Warning Centers (TCWCs) around the world. This subjective

technique, described by Dvorak (1984), identifies patterns in cloud features in satellite visible and

enhanced IR imagery, and associates them with phases in the lifecycle of a TC (Velden et al. 2006).

Additional information such as the temperature difference between the warm core and the surrounding

cold cloud tops, derived from IR imagery, can help estimate the intensity, as colder clouds are

generally associated with more intense storms. The Dvorak technique assigns a "T-number" and a

Current Intensity (CI) from 1 (very weak) to 8 (very strong) in increments of 0.5. The T-number and CI

are the same except in the case of a weakening storm, where the CI is higher. A look-up table

associates each T-number with an intensity in terms of maximum sustained wind speed and minimum

central pressure using a wind-pressure relationship. New wind-pressure relationships have been

derived in recent years (Knaff and Zehr 2007; Holland 2008; Courtney and Knaff 2009) and are in use

in some operational centers (Levinson et al. 2010).

An advantage of the Dvorak technique is that, although subjective, it is quite consistent when applied

by skilled analysts (Gaby et al. 1980; Velden et al. 2006). Nevertheless, it is not free from error. Knaff

et al. (2010) compared 20 years of Dvorak analyses to aircraft reconnaissance data from North

Atlantic and Eastern Pacific hurricanes and determined intensity errors as a function of intensity, 12-h

intensity trend, latitude, translation speed, and size. The bias and mean absolute error were most

strongly related to the intensity of the storm itself, and were typically 5-10% of the intensity. The

Advanced Dvorak Technique (ADT; Olander and Velden 2007) is an attempt to minimize variation due

8

Verification methods for tropical cyclone forecasts

to human judgment by using automated techniques to classify cloud patterns and apply the Dvorak

rules.

TC wind fields can be estimated from several types of satellite instruments including scatterometers,

passive microwave imagers, and passive microwave sounders. Scatterometers are satellite-borne

active microwave radars that measure near-surface wind speed and direction over the ocean by

observing backscatter from waves in two directions (note that scatterometer measurements are known

to have a low bias at high wind speeds). Passive microwave near-surface wind estimates exploit the

dependence of ocean emissivity on wind speed and direction. Other sources of information, such as

numerical weather prediction (NWP) model output or best judgment from a forecaster, must be used to

resolve any ambiguities in wind direction estimated from microwave data. Because microwave wind

retrievals are degraded by precipitation, they are more accurate away from the inner core and rain

bands.

For winds above the surface, feature-track winds (also called atmospheric motion vectors, AMV) from

geostationary visible, IR, and water vapor channel data are an important source of wind information at

many levels in the atmosphere (e.g., Velden et al. 2005). Microwave sounders such as the Advanced

Microwave Sounding Unit (AMSU) can be used to estimate two-dimensional height fields from which

lower tropospheric winds can be derived by solving the non-linear balance equation; near-surface

winds can then be estimated using statistical relationships (Bessho et al. 2006). AMSU based intensity

and structure estimates have been available globally since 2003 and operational since 2006. (Demuth

et al. 2004, 2006). Coincident measurements of IR brightness temperature fields and TC wind

structure from aircraft reconnaissance were used by Mueller et al. (2006) and Kossin et al. (2007) to

derive statistical wind algorithms for use with geostationary data.

The best satellite-derived wind estimates are produced by combining independent estimates from all

of the above platforms. Knaff et al. (2011) describe a satellite-based Multi-Platform TC Surface Wind

Analysis system (MTCSWA) that combines scatterometer, SSM/I, AMV, and IR winds into a composite

flight-level (~700 hPa) product, from which near-surface winds can be estimated using surface wind

reduction factors. This algorithm has been implemented in operations at NOAAs National

Environmental Satellite, Data, and Information Service (NESDIS). Evaluation of satellite-derived winds

-1

against H*Wind analyses during 2008-2009 yielded mean absolute wind speed errors of about 5 ms ,

and mean absolute errors in wind radii for gale-force, storm-force, and hurricane-force winds (R34,

R50, and R64, respectively) of roughly 30-40%. Therefore caution should be exercised when using

satellite-only winds to evaluate model errors.

Satellite altimeters are space-borne radars that provide direct measurements of wave height by

relating the shape of the return signal to the height of ocean waves. Wave heights derived from

altimetry compare favorably to those from buoys (e.g., Hwang et al. 1998). Altimetry also provides

estimates of wind speed (through the relationship between wind and wave height) and wave period.

Satellite estimates of precipitation are available from a number of different sensors. IR schemes such

as the Hydro-Estimator (Scofield and Kuligowski 2003) use the relationship between cold cloud-top

temperature and surface rainfall to estimate heavy rain in deep convection, whereas passive

microwave algorithms estimate rainfall more directly from the emission and scattering from hydrometeors (e.g., Kidd and Levizzani 2011). The Tropical Rainfall Measuring Mission (TRMM) satellite,

deployed in 1997 to estimate tropical rainfall, carries a precipitation radar, passive microwave imager

and VIS/IR imager, and is considered to provide the most accurate satellite estimates of heavy rain in

TCs. Direk and Chandraseker (2006) describe several benefits of TRMM precipitation radar

observations, which include the ability to monitor vertical structure of precipitation and evaluate storm

structure over the ocean, and the fact that the footprint of precipitation is sufficiently small to allow the

study of variability of TC vertical structure and rainfall. The disadvantage of TRMM is its relatively

narrow swath (878 km for the microwave imager and 215 km for the precipitation radar) which leads to

incomplete sampling of TCs.

Operational precipitation algorithms such as the TRMM Multisatellite Precipitation Analysis (TMPA)

(Huffman et al. 2007), NOAAs Climate Prediction Center (CPC) MORPHing technique (CMORPH;

Joyce et al. 2004) and the Global Satellite Mapping for Precipitation (GSMaP; Ushio et al. 2009) blend

observations from TRMM, passive microwave sensors, and geostationary IR. This will continue to be

9

Verification methods for tropical cyclone forecasts

the paradigm for future rainfall measurement with the Global Precipitation Measurement (GPM)

mission (Kubota et al. 2010). Chen et al. (2013a, b) performed a comprehensive evaluation of TMPA

rainfall estimates for tropical cyclones in the western Pacific and making landfall in Australia. They

found that the satellite estimates had good skill overall, particularly nearer the eye wall and in stronger

cyclones, but underestimated rainfall amounts over islands and land areas with significant topography.

More recently, spaceborne radar observations of clouds have become available to evaluate TC

forecasts. Starting in 2006 the CloudSat satellite cloud radar has provided new possibilities for

retrievals of hurricane properties. One of the earlier studies by Luo et al. (2008) showed the utility of

using CloudSat observations for estimating cloud top heights for hurricane evaluation. Some

advantages of the CloudSat approach include the availability of high spatial resolution combined with

rainfall and ice-cloud information and the availability of retrievals over both land and water surfaces

with similar accuracies, thus allowing one to monitor hurricane property changes during landfall. One

significant limitation of CloudSat observations is the lack of significant spatial coverage available from

scanning radars and passive instruments. The nadir pointing Cloud Profiling Radar (CPR) provides

only instantaneous vertical cross sections of hurricanes during CloudSat overpasses (Matrosov

2011).

Flood inundation and detailed assessments of TC damage can be made from very high resolution

satellite imagery. The finest resolution of MODIS is 250 m, while Landsat spatial resolution is 30 m.

Many commercial satellites make measurements at finer than 1 meter resolution for applications such

as agricultural monitoring, homeland security, and infrastructure planning. Because the satellite

overpasses are infrequent (typically several days), it is difficult to use these data quantitatively for

verifying TC forecasts.

2.5 Best track

The best track is a subjective assessment of a TCs center location, intensity, and structure along its

path over its lifetime that is specified by trained, experienced, analysts at operational tropical cyclone

warning centers using all observations (both in situ and remotely sensed) available at the time of the

analysis (typically some period of time often several months or longer after the TC occurrence). It

should be noted that several best track datasets are available in the western North Pacific, which are

always somewhat different from each other and can be considered to represent a special type of

observational uncertainty (Lee et al. 2012). A best track typically consists of center positions,

1

maximum surface wind speeds , and minimum central pressures, and may include quadrant radii of

34-, 50-, and 64-kt winds, at specific time intervals (e.g., every 6 h) during the life cycle of a tropical or

subtropical cyclone (note that some quantities, such as wind radii, may not be available at times when

the TC observations are inadequate). The best track often will differ from the operational or working

track that is estimated in real time. In addition the best track may be a subjectively smoothed

representation of the storm history over its lifetime, in which short-term variations in position or

intensity that cannot be resolved in 6-hourly increments have been deliberately removed. Thus, the

location of a strong TC with a well-defined eye might be known with great accuracy at a particular

time, but the best track may identify a location that is somewhat offset from this precise location if that

location is not representative of the overall storm track. The operational track analyses that are

created during the lifetime of a storm typically follow the observed position of the storm more closely

than the best track analyses, because unrepresentative behavior is difficult to assess in real time;

(Cangialosi and Franklin 2013); in contrast, operational intensity estimates may be less representative

in real time than after the fact due to the large uncertainties associated with estimating intensity (J.

Franklin, personal communication).

Figure 2 shows an example of the observations used to determine the best track intensity for a

particular storm (Hurricane Igor) that occurred in 2010 in the western Atlantic basin. Note the large

Note that different criteria are used by different centers to define maximum winds; for example NHC

defines intensity to be the maximum sustained wind speed measured over 1 min. In contrast, the

WMO recommends a standard averaging period of 10 min for the maximum sustained wind.

10

Verification methods for tropical cyclone forecasts

variability in the wind speed measurements that were available at some times (particularly at later

stages of the TCs life cycle), which were used to create the best track estimates of the maximum wind

speed values. Its important to note that it is likely that most of these measurements were not made in

the most severe region of the TC and thus do not directly reflect the peak wind; thus, in creating the

best track, the peak must be inferred from the accumulation of information.

Figure 2. Example of aircraft, surface, and satellite observations used to determine the best track for

Hurricane Igor, 8-21 September 2010. The solid vertical line corresponds to time of landfall (from

Pasch and Kimberlain 2011).

Naturally, as an analysis product, and as shown in Figure 2, the intensity and track location estimates

associated with the best track have some uncertainty associated with them. Studies by Torn and

Snyder (2012) and Landsea and Franklin (2013) provide some estimates of this uncertainty, which are

highly relevant for use of the best track estimates in verification applications. Specifically, Torn and

Snyder (2012) used both subjective and objective approaches to estimate the best track uncertainty in

both the Pacific and Atlantic basins; Landsea and Franklin (2013) relied on subjective estimates of

uncertainty for the Atlantic basin, obtained from hurricane specialists at the U.S. National Hurricane

Center (NHC). An important finding in both of these studies is that track position uncertainty is greater

for weak storms than for intense storms. Torn and Snyder (2012) estimated average best track

position errors of approximately 35 nm for tropical storms, 25 nm for category 1 and 2 hurricanes, and

20 nm for major hurricanes (with storm categories defined on the Saffir-Simpson scale, a major

hurricane has an intensity greater than 95 kt; Simpson 1974). Landsea and Franklin (2013) estimated

average track errors between 35 nm (for tropical storms, with only satellite data available for the

analysis) and 8 nm (for major hurricanes and landfalling TCs, when both satellite and aircraft

observations are available). With respect to intensity, Torn and Snyder (2012) estimated an average

uncertainty of approximately 10 kt for tropical storms, and 12 kt for hurricanes. Landsea and Franklin

(2013) estimated average maximum wind speed uncertainties between 8 and 12 kt for tropical storms

and Category 1 and 2 hurricanes, increasing to between 10 and 14 kt for major hurricanes, depending

on the observations available for creating the best track. These studies suggest that the uncertainty in

the best track information should be taken into account when conducting verification analyses for TC

11

Verification methods for tropical cyclone forecasts

forecasts; determining how to use this information is still a research question.

observation uncertainty are further discussed in the following section.

Implications of

The International Best Track Archive for Climate Stewardship (IBTrACS) combines track and intensity

estimates from several RSMCs and other agencies to provide a central repository of track data that is

easy to use (Knapp et al. 2010). Each record in IBTrACS provides information on the mean and interagency variability of location, maximum sustained wind, and central pressure. This information can be

used both for forecast verification and for investigating trends in cyclone frequency and intensity.

These data are freely available online at http://www.ncdc.noaa.gov/ibtracs/.

2.6 Observational uncertainty

As seen in the sections above, the observations and analyses available for verifying TC forecasts are

all subject to varying amounts of uncertainty. Nevertheless, even imperfect observations can still be

extremely useful in verification.

Verification statistics are sensitive to observational error, which artificially increases the estimated

error of deterministic forecasts, and affects the estimated reliability and resolution of probabilistic

forecasts (Bowler 2008). Biases in observations can lead to wrong conclusions, and should be

removed prior to their use in verification. This is often difficult to do, as it requires (usually prior)

calibration against a more accurate dataset. In the absence of perfect observations (a "gold standard")

it is impossible to determine the true forecast error. However, relative errors (e.g., for comparing

competing forecast systems) can still be determined.

Steps should be taken to reduce the observational uncertainty where possible. In addition to bias

removal, other error reduction strategies include data assimilation (fusion) and aggregation of multiple

samples. The H*Wind analysis (see Section 2.1) is an example of a product that combines

observations from multiple sources to reduce the overall error, and potentially could be useful for

evaluating a wind field forecast. For evaluating the forecast TC location and intensity, the best track is

preferred due to the larger variety of measurements used to create the best track, along with the

expertise of the analyst, which would reduce the uncertainty. NWP data assimilation combines many

types of conventional and remotely sensed observations with a model-based first guess to produce a

spatially and temporally consistent analysis on the model grid. Because these analyses are not

independent of the underlying model, they are less suitable for verification of NWP model forecasts as

they may lead to underestimation of the true error. Possible ways around this issue include using an

analysis associated with a different model, or using an average analysis from several models.

When random errors are large, observations can be averaged in space and/or time to reduce the

error. Recall that the variance of the average of N independent samples from a given population is 1/N

times the variance of the individual samples. Of course the forecasts being verified against averaged

observations must be averaged in an identical manner.

If the remaining uncertainty after treatment of the data is still unacceptably large then the data should

not be used for verification.

3. Forecasts

A variety of types of TC forecasts are available around the world. Official forecasts provided by the

RSMCs and TCWCs consist of human-generated track and intensity information, along with other

attributes of the forecast storm (e.g., radii associated with various maximum wind speed thresholds of

64, 50, and 34 kt). Modern efforts at producing guidance for forecasting TCs include statistical

methods for forecasting track, intensity, structure, and phase, such as the Climate and Persistence

(CLIPER) model for track prediction (Neumann 1972, Aberson 1998, Heming and Goerss 2010),

which is based on a combination of climatology and persistence, and the Statistical Hurricane Intensity

Forecast (SHIFOR) model for predicting intensity (Knaff et al. 2003). Statistical-dynamical models

such as the Statistical Hurricane Intensity Prediction Scheme (SHIPS) and its Northwest Pacific and

12

Verification methods for tropical cyclone forecasts

Southern Hemisphere version (STIPS) (DeMaria et al. 2005, Sampson and Knaff 2009) and the

Logstic Growth Equation Model (LGEM; DeMaria 2009) are also commonly used to predict TC

intensity.

NWP models, including both global and regional systems, also provide predictions of TCs. For

example, the Global Forecast System (GFS) of the U.S. National Centers for Environmental Prediction

(NCEP), the U.S. Navy Global Environmental Model (NAVGEM), the United Kingdom (U.K.) Met Office

global model, the Global Spectral Model of the Japan Meteorological Agency, the European Center for

Medium Range Weather Forecasting (ECMWF) global model, and the Canadian Global Environmental

Multiscale (GEM) model all provide forecasts of TCs (Heming and Goerss 2010). Others include

models from the China Meteorological Administration, the Korean Meteorological Administration, and

the Shanghai Typhoon Institute. In addition to track and intensity forecasts, many of these global

prediction systems are able to provide predictions of TC genesis. Examples of mesoscale models

tailored to provide TC forecasts include the limited-area Geophysical Fluid Dynamics Laboratory

(GFDL) hurricane model, the French Aire Limite, Adaptation dynamique, Dveloppement

InterNational (ALADIN) model, the Australian Community Climate and Earth-System Simulator

(ACCESS) TC model, the Hurricane Weather Research and Forecasting (HWRF) model and the U.S.

Navys Coupled Ocean-Atmosphere Mesoscale Prediction System for TCs (COAMPS-TC). New

research is leading to development of new mesoscale and global prediction systems for TCs as well

as ongoing improvements in existing systems. In addition, in recent years both global and regional

ensemble prediction systems have been developed to predict TC activity and the uncertainty

associated with those predictions.

To create and evaluate a TC forecast from an NWP model, it is necessary to post-process the model

output fields from the model to identify the TC circulation and obtain a forecast of track, intensity,

structure, and phase. Many models take this step internally, with the tracking algorithm tuned to

remove model-dependent biases. Use of an external tracker can be especially useful for comparative

verification by allowing use of a consistent algorithm on forecasts from different models. In general, the

vortex trackers identify and follow the TC track using several fields from the NWP output. One of the

more commonly used trackers was developed at NOAA/NCEP, has been enhanced by NOAA/GFDL,

and is available and maintained as a community code by the U.S. Developmental Testbed Center

(DTC; http://www.dtcenter.org/HurrWRF/users/downloads/index.tracker.php). The GFDL TC tracker

(Marchok 2002) is designed to produce a track based on an average of the positions of five different

primary parameters (MSLP, 700- and 850-hPa relative vorticity, 700 and 850 hPa geopotential height)

and two secondary parameters (minimum in wind speed at 700 and 850 hPa). See Appendix 4 for

additional information about the GFDL tracking algorithm. Other tracking algorithms have been

developed by the UK Met Office and ECMWF (e.g., van der Grin 2002), and many NWP models

include internal tracking algorithms. Not all vortex trackers utilize the same fields (or weight them

equivalently) in identifying and following TCs. Thus, to eliminate the tracking algorithm as a source of

differences in model performance, it is necessary to apply a common tracking algorithm when

comparing the TC forecasts from two or more NWP models (Heming and Goerss 2010).

TC forecasts are often accompanied by uncertainty information. This can be based on historical error

statistics, or increasingly, on ensemble prediction. The ensemble can be derived from forecasts from

multiple models, as is standard practice at many operational TC forecast centers (Goerss 2000, 2007),

or it can be generated using a NWP ensemble prediction system (EPS) (e.g., van der Grijn et al.

2005). Lagged ensemble forecasts can be created by combining the latest ensemble forecast with

output from the previous run, thus increasing the ensemble size and improving the forecast

consistency. Many of the global models mentioned earlier are used in ensemble prediction systems,

and are also included in the THORPEX Interactive Global Grand Ensemble (TIGGE; Bougeault et al.

2010). In recent years the "ensemble of ensembles" approach of the TIGGE project is encouraging

research into optimal use of multi-ensemble forecasts (e.g., Majumdar and Finocchio 2010). Gridded

ensemble forecasts and TC track forecasts can be freely downloaded from the TIGGE archives at

ECMWF, NCAR, and CMA (http://tigge.ecmwf.int).

An ensemble TC forecast is made by applying a tracker to each ensemble member individually. This

gives distributions of the properties of the ensemble members (position, central pressure, maximum

wind speed, etc.). The ensemble mean, or consensus, is obtained by averaging the TC properties of

the ensemble members. Note that the ensemble mean for wind speed and precipitation will be biased

13

Verification methods for tropical cyclone forecasts

low because these variables are not distributed normally, and also because of the (usually) lower

resolution of ensemble forecasts; post-processing to correct bias is strongly advisable. Usually a

forecast TC must be present in a certain fraction of possible ensemble members, and weights may

sometimes be applied to the different ensemble members to reflect their relative accuracy (Vijaya

Kumar et al. 2003, Elsberry et al. 2008, Qi et al. 2013). Deterministic forecasts based on ensembles

can be verified using the methods described in Section 4, whereas the ensemble and probabilistic

forecasts can be evaluated using methods described in Section 5.

When evaluating the performance of forecasts, a standard of comparison is often very valuable to

provide more meaningful information regarding the relative performance of a forecasting system. Use

of a standard of comparison is also needed to compute skill scores. Typical standards of comparison

for TC forecasts include a climatology-persistence forecast (e.g., CLIPER) for track (Neumann 1972,

Jarvinen and Neumann 1979, Aberson 1998, Cangialosi and Franklin 2013) and an analogous

climatology-persistence forecast such as SHIFOR for intensity (DeMaria et al. 2006, Knaff et al. 2003,

Cangialosi and Franklin 2013).

Impact forecasts also are of interest when evaluating the overall performance of a TC forecast.

Gridded forecasts for weather related hazards such as heavy precipitation, damaging winds, and

storm surge are often based on output from NWP models and EPSs. These may be fed directly into

impacts models for inundation and flooding, landslides, damage to buildings and infrastructure, etc.

Verification of weather hazards is addressed in this document, but verification of downstream impacts

is outside the scope of this document.

4. Current practice in TC verification deterministic forecasts

In this section, each TC-related variable that is and has been frequently or routinely verified is

discussed, along with applicable measures. Since many of the variables are derived, and their

observed counterparts are often also inferred rather than directly measured, data sources and their

characteristics are also discussed for each. Ensemble forecasts of TCs are relatively new, and

verification methods for these are still in the experimental phase. Verification of ensemble TC

forecasts is therefore discussed in Section 5.

Current practices for evaluation of deterministic TC forecasts including storm center location,

intensity, precipitation and other variables are mainly limited to standard verification methods for

deterministic forecasts. These are briefly surveyed here for the various TC parameters and weather

hazards, with reference to more complete discussions elsewhere.

WMO (2009) provides a set of recommendations for verifying model QPFs. In particular, verification

methods for evaluations against point observations (user focus) and for evaluations based on

observations upscaled to the model grid (model focus) are both included in that document. It also

provides a prioritized set of diagnostics and scores for verifying categorical forecasts (for example, rain

meeting or exceeding specified accumulation thresholds) and forecasts of continuous variables like

rain amount, shown in Table 3. These scores are described in Appendix 1, repeated from WMO

(2009), and apply not only to precipitation but also to other variables such as storm center location,

intensity, wind speed, storm surge water level, and significant wave height.

4.1 Track and storm center verification

For all TCs in the Atlantic and Eastern North Pacific Basins, the U.S. NHC conducts routine verification

of storm center location and intensity (e.g., Cangialosi and Franklin 2013). This verification is carried

out post-storm, using best track estimates. Methods used by NHC are described in some detail at

http://www.nhc.noaa.gov/verification, and reports on verification results for each seasons forecasts

are available at http://www.nhc.noaa.gov/pastall.shtml. The Joint Typhoon Warning Center (JTWC)

produces similar forecast verification reports following each typhoon season (e.g., Angove and Falvey

2011; http://www.usno.navy.mil/JTWC/annual-tropical-cyclone-reports). Other centres and forecasting

groups also compute summaries of track and intensity errors; for example, the UK Met Office posts

14

Verification methods for tropical cyclone forecasts

reports

on

track

intensity

statistics

on

http://www.metoffice.gov.uk/weather/tropicalcyclone/verification).

their

web

site

(see

Table 3. Recommended scores for verifying deterministic forecasts (from WMO 2009). The questions

answered by each measure are described in Appendix 1, along with the formulas needed for their

computation.

Scores for categorical

Scores for forecasts of

Diagnostics

(binary) forecasts

continuous variables

Mandatory

Hits, misses, false alarms,

correct rejections

Highly

Frequency bias (FB)

Mean value

Maps of observed

recommended Percent correct (PC)

Sample standard deviation

and forecast

Probability of detection (POD)

Median value (conditional on

values

Scatter plots

False alarm ratio (FAR)

event)

Gilbert Skill Score (GSS; also

Mean error (ME)

known as Equitable Threat

Root mean square error

Score)

(RMSE)

Correlation coefficient (r)

Recommended Probability of false detection

Interquartile range (IQR)

Time series of

(POFD)

Mean absolute error (MAE)

obs. and

Threat score (TS)

Mean square error (MSE)

forecast mean

Hanssen and Kuipers score

Root mean square factor

values

Histograms

(HK)

(RMSF)

Exceedance

Heidke skill score (HSS)

Rank correlation coefficient

probability

Odds ratio (OR)

(rs)

MAE skill score

Quantile-quantile

Odds ratio skill score (ORSS)

MSE skill score

plots

Extremal dependence index

Linear error in probability

(EDI)

space (LEPS)

Forecast track error is defined as the great-circle difference between a TCs forecast center position

and the best-track position at the verification time. This is a vector quantity, which is sometimes

decomposed into components of along- and cross-track error, with respect to the observed best track.

Figure 3 shows a schematic of the computation of the various track errors. Along-track errors are

important indicators of whether a forecasting system is moving a storm too slowly or too quickly,

whereas the cross-track error indicates displacement to the right or left of the observed track. The two

components can also be interpreted as errors in where the TC is heading (cross-track error) and when

it will arrive (along-track error).

Track errors are often presented as mean errors for a large sample of TCs, as in Figure 4, which

shows trends in NHC track errors over time (Cangialosi and Franklin 2011). Alternatively, track errors

can be analyzed for a single storm, but the impact of the small sample size must be taken into account

in interpreting the results. A new approach called the Track Forecast Integral Deviation (TFID)

integrates the track error over an entire forecast period (see Section 7.2).

15

Verification methods for tropical cyclone forecasts

Figure 3: Schematic of computation of track errors, including overall error (green), cross-track, and

along-track errors. (After J. Franklin).

Figure 4. Trends in mean track error for NHC TC forecasts for the Atlantic Basin (from Cangialosi and

Franklin 2011).

As noted in Section 3, a standard of comparison or reference forecast must be used to evaluate

forecast skill. Figure 5 shows an example of a skill diagram for NHC track forecasts, compared against

CLIPER (see Appendix 1 for the definition of a generalized skill score). Note that both Figure 4 and

Figure 5 indicate improvements in performance of NHC track forecasts over time. However, Figure 5

accounts for variations in performance that may be due to variations in the difficulty (e.g., the

predictability) of the forecasting situations in a given year.

16

Verification methods for tropical cyclone forecasts

Figure 5. Official NHC track skill trends for Atlantic hurricanes, compared to CLIPER (from Cangialosi

and Franklin 2011).

When looking more closely at the performance of track forecasts, distributional approaches can be

valuable. Such approaches include the use of box plots to highlight the distributions of the errors in the

forecasts, as in Figure 6. In this figure one obvious characteristic demonstrated is the increase in the

variability of the errors with increasing lead time. It is also possible to see some minor differences in

the performance of the two models. One noticeable difference between the distributions for the two

models is the apparent greater frequency of outlier values for Model 2. Displays like this (and other

analyses) also make it possible to assess whether the difference in performance of two models is

statistically significant (note that the time correlation of the performance differences must be taken into

account in doing these types of assessments, as in Aberson and DeMaria 1994, Gilleland 2010). Many

other types of displays could also be used to examine track errors in greater detail, for example, by

examining the combined direction and magnitude of the errors in a scatterplot around the storm

location, or conditioning track error distributions by the stage of the storm development.

Model 1

Model 2

Figure 6. Example of the use of box plots to represent the distributions of track errors for TC forecasts.

Black and red box plots represent the track errors from forecasts formulated by two different versions

th

th

of a model. Central box area represents the 0.25 to 0.75 quantile values of a distribution, horizontal

line inside box is the median value, and whisker ends represent the smallest and largest values that

th

are not outliers. Outliers (defined as 1.5 *IQR (interquartile range) lower than the 0.25 quantile or

17

Verification methods for tropical cyclone forecasts

th

1.5*IQR higher than the 0.75 quantile) are represented by the circles. Notches surrounding the

median values represent approximate 95% confidence intervals on the median values. The sample

sizes are given at the top of the diagram; the samples are homogeneous for each lead time. (From

Developmental Testbed Center 2009).

Timing and location of landfall (and, perhaps more importantly, the impacts of landfall) are two

variables related to the TC prediction that are of importance for emergency managers and disaster

management planners; errors in these forecasts can have large impacts on the welfare of the general

public through their impact on civil defense planning and implementation. TC landfall location and

timing can be evaluated using standard verification measures and approaches for deterministic

variables. However, the conclusions that can be drawn from such evaluations are often limited due to

the small number of TCs that actually make landfall or are predicted to make landfall. For example,

Powell and Aberson (2001) found that only 13% of TC predictions between 1976 and 2000 in the

Atlantic Basin included a forecast TC location in which the TC would be expected to make landfall.

They also investigated a variety of approaches for defining and comparing forecast and observed

landfall, which provide meaningful information about the landfall position and timing errors. In

particular, certain nuances of the forecasting situation must be taken into account, such as the

occurrence of near misses and landfall forecasts that are not associated with a landfall event. Even

when forecasts and observations agree on a cyclone center not making landfall, the weather

associated with a cyclone passing close to the coast can still have a high impact on coastal populations and environments.

4.2 Intensity

As noted in Section 2.5, TC intensity is often represented by the maximum surface wind speed

averaged over a particular time interval. Alternatively, it may be based on a minimum surface pressure

estimate inside the storm. Thus, standard verification approaches for continuous variables are

appropriate for evaluation of both types of TC intensity forecasts. In general, intensity errors are

summarized using both the raw errors and absolute values of the errors. Most commonly, the means

of each of these two parameters are presented in TC intensity forecast evaluations. The mean value of

the raw errors provides an estimate of the bias in the forecast intensity values, whereas the mean of

the absolute errors indicates the average magnitude of the error. Figure 7 shows a typical display of

absolute intensity errors for NHC forecasts.

Figure 7. As in Figure 4 for NHC intensity forecasts (from Cangialosi and Franklin 2011).

As with the storm center (track) forecast errors, it is beneficial to compare the errors against a

standard of comparison to measure skill. An example of this kind of comparison is presented in

Cangialosi and Franklin (2011). In addition, a great deal can be learned about the forecast

18

Verification methods for tropical cyclone forecasts

performance by looking beyond the average intensity errors and investigating distributions of errors

(e.g., Developmental Testbed Center 2009, Moskaitis 2008). For example, Moskaitis (2008)

demonstrates the benefits of a distributions-oriented approach to evaluation of TC intensity forecasts,

which provides more information about characteristics of the relationship between forecast and

observed intensity. In addition, box plots similar to those shown in Fig. 6 can also be used to

represent the distributions of intensity errors, as well as the distributions of differences in errors when

comparing two forecasting systems (see Section 8).

It is important to note that the traditional approach to evaluating TC track and intensity (as described

here) ignores possible misses and false alarms that might be associated with the forecasts

especially with forecasts based on results from NWP models. In particular, these models may

produce TCs that do not exist in the Best Track data (e.g., that are projected to continue to exist after

the actual storm has dissipated) and should be counted as false alarms. And it is possible that a

storm can be projected to weaken and dissipate at a time that is earlier than the actual time of

dissipation; in this case, a miss should be counted. Approaches to appropriately handling these

situations in TC verification studies were identified by Aberson (2008) who suggested using an m x m

contingency table showing counts associated with different combinations of forecast and observed

intensity, including cells for situations when either the forecast storm and/or the observed storm

dissipated. An example of the application of this idea in model evaluation is Yu et al. (2013b), who

extended the technique to be based on the contingency table for TC category forecasts. From a table

like this, contingency table statistics like FAR and POD could be computed to measure the impact of

false alarms and misses; Aberson suggests the use of the Heidke Skill Score to evaluate the accuracy

of the forecasts, including the dissipation category.

Other variables related to intensity are also of interest for many applications. For example, forecasters

are concerned about rapid changes in intensity either increasing or weakening. Typically this

characteristic is measured by setting a threshold for a change in intensity over a 24h period. Normally

this variable is treated as a Yes/No phenomenon (i.e., either the rapid change occurred or it did not

occur, and it either was or was not forecast). In that case, basic categorical verification approaches as

outlined in Appendix 1 can be applied to compute statistics such as probability of detection (POD) and

false alarm ratio (FAR). An alternative approach that might provide more complete information about

the forecasts ability to capture these events would involve evaluating the timing and intensity change

errors associated with these forecasts.

4.3 Storm structure

The size of a TC is typically given by "wind-speed radii", expressed as the distance from the center of

the TC to the maximum extent of winds exceeding 34, 50, and 64 kt (i.e., R34, R50, and R64). NHC

predicts these values for four quadrants surrounding the TC (NE, SE, SW, NW), and also estimates

them from available observations in post-storm best track analyses. However, NHC does not consider

the observed wind radii to be accurate enough for reliable quantitative verification of TC size forecasts.

As noted in Section 2.1, HRD produces a surface wind analysis called H*Wind using virtually all