Technical Brief: System Scalability

Dell XC Web-scale Converged Appliance

Powered by Nutanix software

Introduction

Dell XC Series Web-scale Converged Appliances

integrate Nutanix web-scale software and Dell's proven

storage and x86 server platform to provide enterprise-class features for virtualized environments. As a highly

differentiated converged infrastructure solution, the

XC Series consolidates compute and storage into a single

appliance enabling application and virtualization teams to

quickly and simply deploy new workloads. This solution

enables data center capacity to be easily expanded one

node at a time, delivering linear and predictable scale-out with pay-as-you-grow flexibility.

Data management in distributed systems

A key tenet in the design of massively scalable systems

is ensuring that each participating node manages only a

bounded amount of state, independent of cluster size.

Accomplishing this requires that there be no master node

responsible for maintaining all data and metadata in the

clustered system. This concept is paramount in truly

scalable architectures, and one that is very difficult to

retrofit into legacy architectures.

Nutanix Distributed File System (NDFS) efficiently manages

all types of data in order to scale out capacity linearly for

both small and large-size clusters, and without loss of

node or cluster performance.

Configuration Data

NDFS stores cluster configuration data using a very

small in-memory database backed by solid-state drives

(SSDs). Three copies of this configuration database are

maintained in the cluster at all times. Importantly, there

are strict upper bounds that are honored to ensure that

this database never exceeds a few megabytes in size.

Even for a hypothetical million-node cluster, the

database holding configuration data (such as identity of

participating nodes, health status of services, and so on)

for the entire cluster would only be a few megabytes in

size. In the event that one of the three participating nodes

fails or becomes unavailable, any other node in the cluster

can be seamlessly converted to a configuration node.

Metadata

The most important and complex part of a file system is its

metadata. In a scalable file system, the amount of metadata

can potentially get very large. Further complicating the task,

it is not possible to hold the metadata centrally in a few

designated nodes or in memory.

NDFS employs multiple NoSQL concepts to scale the

storage and management of metadata. For example, the

system implements a NoSQL database called Cassandra

to maintain key-value pairs, where the key is the offset in a

particular virtual disk and the value represents the physical

locations of the replicas of that data in the cluster.

When a key needs to be stored, a consistent hash is used

to calculate the locations where the key and value will

be stored in the cluster. The consistent hash function is

responsible for uniformly distributing the load of storing

keys in the cluster. As the cluster grows or shrinks, the

ring self-heals and rebalances key storage responsibility

among the participating nodes. This ensures that every

node will be responsible for managing roughly the same

amount of metadata.
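
The key placement described above can be illustrated with a minimal consistent-hash ring. This is a sketch under stated assumptions, not NDFS or Cassandra code: the class name `HashRing`, the use of SHA-256, the 64 virtual nodes per physical node, and the `vdisk:offset` key format are all hypothetical choices made for the example.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a position on the ring (a 64-bit integer)."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class HashRing:
    """Toy consistent-hash ring with virtual nodes (hypothetical, not NDFS code)."""

    def __init__(self, nodes, vnodes=64, replicas=3):
        self.vnodes = vnodes      # ring positions per physical node (assumption)
        self.replicas = replicas  # copies of each key-value pair
        self._points = []         # sorted ring positions
        self._owner = {}          # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            point = _hash(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = node

    def remove_node(self, node):
        self._points = [p for p in self._points if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def locate(self, vdisk_id, offset):
        """Return the distinct nodes responsible for this (vDisk, offset) key."""
        start = bisect.bisect(self._points, _hash(f"{vdisk_id}:{offset}"))
        found = []
        # Walk clockwise from the key's position, collecting distinct owners.
        for step in range(len(self._points)):
            node = self._owner[self._points[(start + step) % len(self._points)]]
            if node not in found:
                found.append(node)
            if len(found) == self.replicas:
                break
        return found


ring = HashRing([f"node-{i}" for i in range(8)])
owners = ring.locate("vdisk-1", 4096)  # three distinct node names
```

When a node joins or leaves this toy ring, only the keys whose owner list includes that node change hands; every other key stays where it was, which is the property that keeps rebalancing cost bounded as the cluster grows or shrinks.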

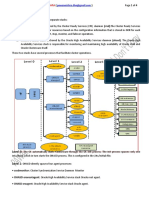

Virtual machine data and I/O

Every XC converged appliance includes a Controller Virtual

Machine (CVM) to handle all data I/O operations for the

local hypervisor and guest VMs, and to serve as a gateway

to NDFS. This n-way controller model means the number

of CVMs scales evenly with the number of XC converged

appliances in the cluster, thus eliminating the possibility of

controller bottlenecks that occur in traditional arrays.

The NDFS architecture ensures that the amount of data

stored on a cluster node is directly proportional to the

amount of storage space on that node, including both

SSD and HDD capacities. This by definition is bounded

and does not depend on the size of the cluster. A system

component called Curator, which is responsible for keeping

the cluster running smoothly, runs background map-reduce

tasks periodically to check for uneven disk utilization on the

nodes in the cluster in a distributed manner.
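
The utilization check described above can be sketched as a small map and reduce pair. This is an illustration only: the function names, the 10 percent tolerance, and the sample capacities are assumptions, and the brief does not describe how Curator actually decides that utilization is uneven.

```python
def map_utilization(node, used_gb, capacity_gb):
    """Map step, run per node: report the fraction of local capacity in use."""
    return node, used_gb / capacity_gb


def reduce_imbalance(reports, tolerance=0.10):
    """Reduce step: compare each node with the cluster mean (tolerance is assumed)."""
    utilization = dict(reports)
    mean = sum(utilization.values()) / len(utilization)
    over = sorted(n for n, u in utilization.items() if u > mean + tolerance)
    under = sorted(n for n, u in utilization.items() if u < mean - tolerance)
    return mean, over, under


# Hypothetical sample: four nodes with 1000 GB each (SSD plus HDD combined).
reports = [
    map_utilization("node-a", 900, 1000),
    map_utilization("node-b", 550, 1000),
    map_utilization("node-c", 400, 1000),
    map_utilization("node-d", 600, 1000),
]
mean, over, under = reduce_imbalance(reports)
# over == ["node-a"] and under == ["node-c"]: candidates for moving data from a to c
```

Each node contributes one small record regardless of cluster size, so the per-node work in the map step stays constant as nodes are added.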

When a VM creates data, NDFS keeps one copy resident

on the local node for optimal performance, and distributes

a redundant copy across other nodes in the cluster.

Distributed replication enables quick recovery in the

event of a disk or node failure. All remote XC converged

appliances participate in the replication. This enables

higher I/O for larger clusters as there are more nodes

handling the replication.
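
The write placement described above can be sketched as follows. The brief states only that one copy stays local and a redundant copy is distributed across other nodes; the random choice of the remote node, the function name `place_replicas`, and the sample sizes are assumptions made for illustration.

```python
import random
from collections import Counter


def place_replicas(local_node, nodes, rng, copies=2):
    """Keep the first copy on the writing VM's node; put the rest on remote nodes."""
    remote = [n for n in nodes if n != local_node]
    # Random remote selection is an assumption, not the documented NDFS policy.
    return [local_node] + rng.sample(remote, copies - 1)


rng = random.Random(0)
nodes = [f"node-{i}" for i in range(8)]

# 1000 writes issued by a VM running on node-0.
placements = [place_replicas("node-0", nodes, rng) for _ in range(1000)]

# How many of node-0's extents each remote node holds a second copy of.
remote_load = Counter(p[1] for p in placements)
```

Because the second copies are spread over every remote node, a failure of node-0 can be repaired by all surviving nodes in parallel, and adding nodes adds more participants to both replication and recovery.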

If a VM moves to another node in response to a High

Availability (HA) or VM movement event, Curator

automatically migrates hot data to the node where the

VM is running. NDFS enables all of the cluster's storage

resources to be available to any host or any VM, but

without requiring all data to be local to that node.

Scalable vDisk-level locks

In traditional, non-converged architectures, I/O from a

single VM may arrive via multiple interfaces due to network

multi-pathing and controller load balancing. This forces

legacy file systems to use fine-grained locks to avoid non-stop transitions of ownership of locks between controllers

on writes, and massive chatter of invalidations on the

network. Such use of fine-grained locks in architectures

employing n-way controllers imposes scalability

bottlenecks that are nearly impossible to overcome.

NDFS eliminates this impediment to system scalability by

implementing locks sparingly, and only at a VM's file level

(vDisk on NDFS). NDFS queries the hypervisor and gathers

information on all the VMs running on the host, as well as

the files backing the virtual disks of the VMs. Each virtual disk,

or any other large file, is converted into a Nutanix vDisk that

is managed as a first-class citizen in the file system.

I/O for a particular VM is served by the local Controller

VM that is running on the host. That local Controller VM

acquires the lock for all of the virtual disks backing the VM.

Because virtual disks are not typically shared with other

hosts, NDFS simply uses a vDisk-level lock. As such, there

are no invalidations or cache coherency issues. Even with

a very large number of VMs, NDFS effectively manages

locks with minimal overhead to ensure that the system can

still scale linearly.
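
A minimal sketch of vDisk-level locking, assuming a simple ownership table, shows why the lock count tracks the number of virtual disks rather than the number of blocks. The class `VDiskLockTable` and its methods are hypothetical and do not reflect how NDFS stores or exchanges lock state.

```python
class VDiskLockTable:
    """Toy lock table with one lock per vDisk (hypothetical, not NDFS code)."""

    def __init__(self):
        self._owner = {}  # vDisk name -> Controller VM holding its lock

    def acquire(self, vdisk, cvm):
        """Grant the lock if it is free or already held by this Controller VM."""
        return self._owner.setdefault(vdisk, cvm) == cvm

    def acquire_for_host(self, cvm, vms):
        """Lock every vDisk backing the VMs that run on this CVM's host."""
        results = [self.acquire(vdisk, cvm) for vdisks in vms.values() for vdisk in vdisks]
        return all(results)

    def migrate(self, vdisks, new_cvm):
        """Hand the locks to another Controller VM when a VM changes hosts."""
        for vdisk in vdisks:
            self._owner[vdisk] = new_cvm

    def owner(self, vdisk):
        return self._owner.get(vdisk)

    def lock_count(self):
        return len(self._owner)


locks = VDiskLockTable()
host_a_vms = {"vm-1": ["vm-1-disk0", "vm-1-disk1"], "vm-2": ["vm-2-disk0"]}
locks.acquire_for_host("cvm-a", host_a_vms)

# A second controller cannot take a vDisk that cvm-a already owns.
contended = locks.acquire("vm-1-disk0", "cvm-b")  # False

# vm-2 moves to the host served by cvm-b; only its vDisk locks change hands.
locks.migrate(host_a_vms["vm-2"], "cvm-b")
```

With a single owner per vDisk, every write to that vDisk passes through one controller, so there is nothing to invalidate on other controllers and no per-block lock traffic.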

Scalable alerting, monitoring and reporting

Alerts

One of the more common oversights in designing scalable

systems is failing to build troubleshooting facilities that scale with

the system. When cluster services become unstable, it is

even more important to have an alert and event recording

system that does not buckle due to non-scalability.

NDFS implements a completely scalable alert system

supported by a service running on every node. All events

and alerts are recorded into a strictly consistent distributed

NoSQL database that is accessible via any node in the

cluster. Alerts and events are indexed at scale within

the NoSQL database. They are easily accessed either via

the GUI or through a standards-based REST API.

Statistics and visualization

All distributed systems require reliable, live statistical

monitoring and reporting. NDFS scales the statistics-gathering component to provide near real-time insights

to the cluster administrator. This is accomplished by

implementing a scale-out statistics database that leverages

the NoSQL key-value store.

Each host in the Dell XC cluster runs an agent that gathers

local statistics and periodically updates the NoSQL store.

When the GUI requests data in a sorted fashion (like the

top 10 CPU consuming VMs), the request is sent using a

map-reduce framework to all hosts in the cluster to get

live information in a scalable fashion. Each host is only

responsible for serving requests for local stats.
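
The sorted query described above can be sketched as a map step that runs on each host and a reduce step that merges the partial results. The function names and the generated sample readings are illustrative assumptions; the brief does not specify the actual interfaces.

```python
import heapq


def map_local_top(local_stats, n):
    """Runs on one host: return its n busiest VMs as (vm, cpu_percent) pairs."""
    return heapq.nlargest(n, local_stats.items(), key=lambda item: item[1])


def reduce_top(partials, n):
    """Runs on the requester: merge per-host results into the cluster-wide top n."""
    merged = (item for partial in partials for item in partial)
    return heapq.nlargest(n, merged, key=lambda item: item[1])


# Hypothetical sample: 4 hosts with 25 VMs each and distinct CPU readings.
hosts = {
    f"host-{h}": {f"vm-{h}-{v}": ((h * 25 + v) * 37) % 101 for v in range(25)}
    for h in range(4)
}

partials = [map_local_top(stats, 10) for stats in hosts.values()]
top10 = reduce_top(partials, 10)
```

Each host returns at most ten entries no matter how many VMs it runs, so the merge step handles ten entries per host rather than one entry per VM in the cluster.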

Nutanix software also provides cluster-wide statistics,

which are handled by a dynamically elected leader in the

cluster. Strict limits are enforced on the number of such

cluster-wide statistics to ensure overall scalability.

Avoiding single points of failure

A key NDFS design principle is to not require any fixed

special nodes to maintain cluster operation and services.

There are a few operations in the clustered environment,

however, which embody the notion of a leader. It is

necessary that this leader not be statically assigned,

otherwise that node reduces to a special node and

introduces a potential single-point-of-failure, which

inhibits scalability.

To overcome these drawbacks, Nutanix software

implements a dynamic leader election scheme. For all

functions and administrative roles in the cluster, services

on all hosts volunteer to be elected as leader. A leader

is elected efficiently using the distributed configuration

service implemented in the system.

It is not necessary, however, for the leaders of all

functions to be co-located on any given node. In fact, the

leaders are randomly distributed in order to spread the

leadership load among cluster nodes. When an elected

leader fails, either due to the failure of the service or the

host itself, a new leader is elected automatically from

among the healthy nodes in the cluster. This occurs in

a sub-second timeframe for clusters of all sizes. In other

words, there is no correlation between the time duration

to elect a new leader upon failure and the number of

nodes in a cluster. The newly elected leader automatically

assumes the responsibilities of the previous leader.
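
The election scheme can be sketched with an in-memory stand-in for the configuration service. Everything here is hypothetical: the `ConfigService` class, its atomic claim operation, and the role names are assumptions chosen to show the idea, not the interfaces of the Nutanix software.

```python
import random


class ConfigService:
    """In-memory stand-in for the distributed configuration service (assumption)."""

    def __init__(self):
        self._leader = {}  # role -> node currently holding leadership

    def try_claim(self, role, node):
        """Atomically claim a vacant role; return True if this node is the leader."""
        return self._leader.setdefault(role, node) == node

    def leader(self, role):
        return self._leader.get(role)

    def expire(self, node):
        """Drop every role held by a failed node and report which were vacated."""
        vacated = [role for role, holder in self._leader.items() if holder == node]
        for role in vacated:
            del self._leader[role]
        return vacated


def elect(config, role, healthy_nodes, rng):
    """Every healthy node volunteers in random order; the first claim wins."""
    candidates = list(healthy_nodes)
    rng.shuffle(candidates)
    for node in candidates:
        if config.try_claim(role, node):
            return node
    return config.leader(role)


rng = random.Random(7)
config = ConfigService()
nodes = [f"node-{i}" for i in range(5)]

# Independent elections per role, so leaders need not share a node.
leaders = {role: elect(config, role, nodes, rng) for role in ("curator", "stats", "alerts")}

# The node leading "curator" fails; only the roles it held are re-elected.
failed = leaders["curator"]
healthy = [n for n in nodes if n != failed]
for role in config.expire(failed):
    leaders[role] = elect(config, role, healthy, rng)
```

In this sketch a vacant role is filled by the first successful claim, so the work to replace a leader does not grow with the number of volunteering nodes.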

Strict consistency at scale using Paxos

Most NoSQL implementations sacrifice strict consistency

to gain better availability. For example, Facebook's

Cassandra and Amazon's Dynamo provide only eventual

consistency, which is not a viable option for true file

systems. Eventual consistency implies that if a piece

of data is written to one node in a cluster, the data will

become visible to another node in the cluster only

eventually. There is no guarantee that the data will be

immediately visible.

Paxos is a widely used protocol that builds consensus

among nodes in clustered systems. In the NDFS metadata

store, for each key there is a set of three nodes that

might have the latest value of the data. NDFS runs the

Paxos algorithm to obtain consensus on the latest value

for the key being requested. Paxos guarantees that the

most recent version of the data will get consensus. Strict

consistency is guaranteed even though the underlying

data store is based on NoSQL. For any key, the consensus

needs to be formed between only three nodes regardless

of cluster size.
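
The consensus step can be illustrated with a textbook single-decree Paxos round among the three replicas of one key. This is a simplified sketch, not the NDFS implementation: the class and function names, the integer proposal numbers, and the sample value strings are assumptions, and real deployments also need durable state, retries, and unique proposal numbers per proposer.

```python
class Acceptor:
    """One of the three replicas that may hold the latest value for a key."""

    def __init__(self):
        self.promised = 0     # highest proposal number promised so far
        self.accepted = None  # (number, value) of the last accepted proposal

    def prepare(self, n):
        """Phase 1: promise to ignore proposals numbered below n."""
        if n > self.promised:
            self.promised = n
            return True, self.accepted
        return False, None

    def accept(self, n, value):
        """Phase 2: accept the value unless a higher number was promised."""
        if n >= self.promised:
            self.promised = n
            self.accepted = (n, value)
            return True
        return False


def propose(reachable, n, value, replica_count=3):
    """Run one round against the reachable replicas; return the chosen value or None."""
    quorum = replica_count // 2 + 1
    replies = [acceptor.prepare(n) for acceptor in reachable]
    promises = [prior for ok, prior in replies if ok]
    if len(promises) < quorum:
        return None
    # If any replica already accepted a value, the proposer must adopt the newest one.
    earlier = [prior for prior in promises if prior is not None]
    if earlier:
        value = max(earlier, key=lambda prior: prior[0])[1]
    acks = sum(1 for acceptor in reachable if acceptor.accept(n, value))
    return value if acks >= quorum else None


a, b, c = Acceptor(), Acceptor(), Acceptor()

# A first writer reaches replicas a and b and gets its value chosen.
first = propose([a, b], 1, "extent on node-2, node-5")

# A second writer reaches b and c with a different value, but learns the chosen one.
second = propose([b, c], 2, "extent on node-7, node-9")

# A proposer using a stale number is rejected outright.
stale = propose([a, c], 1, "extent on node-1, node-4")
```

Here `second` equals `first` because replica b reports the value it already accepted, and `stale` is `None`. The quorum is two of three replicas in every round, so the cost of agreeing on one key does not depend on how many nodes the cluster contains.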

The ability to achieve strict consistency using a scalable

NoSQL database enables NDFS to be a linearly scalable

file system.

Scalable map-reduce framework

For relatively mundane work that must be performed

by a typical file system, it is far more efficient and

scalable to complete common tasks in the background.

User performance benefits by offloading tasks from

the active I/O path to background processes. Typical

examples include disk scrubbing, disk balancing, offline

compression, metadata scrubbing and calculation of

free space.

NDFS implements a map-reduce framework, called

Curator, which performs these cluster-wide tasks at scale

without impacting scalability. Since all nodes participate and each

handles a part of the Curator responsibility, performance

scales linearly as the size of the cluster grows.

Each node is responsible for a bounded number of map-reduce tasks, which are processed by all cluster nodes

in phases. A coordinating node is randomly elected and

performs only the lightweight tasks of coordinating nodes

in the cluster.

While eventual consistency works well for some systems, it would cause

corruption in a storage file system. It may therefore appear to follow

that NoSQL systems are ill suited for

building scalable file systems. NDFS, however, achieves

strict consistency on top of NoSQL by implementing a

distributed version of the Paxos algorithm.

Learn More at Dell.com/XCconverged.

© 2015 Dell Inc. All rights reserved. Dell, the DELL logo, and the DELL badge are trademarks of Dell Inc. Other trademarks and trade names

may be used in this document to refer to either the entities claiming the marks and names or their products. Dell disclaims proprietary

interest in the marks and names of others. This document is for informational purposes only. Dell reserves the right to make changes

without further notice to any products herein. The content provided is as is and without express or implied warranties of any kind.

TB_Nutanix_System_Scalability_040915
