International Journal of Computer Science Trends and Technology (IJCST) Volume 5 Issue 1, Jan Feb 2017

RESEARCH ARTICLE OPEN ACCESS

A Study on Data Compression Using Huffman Coding Algorithms

D.Jasmine Shoba [1], Dr.S.Sivakumar [2]

Research Scholar [1], Assistant Professor [2]

Department of Computer Science [1]

Department of Computer Applications [2]

Thanthai Hans Roever College, Perambalur

Tamil Nadu India

ABSTRACT

Data reduction is one of the data preprocessing techniques that can be applied to obtain a reduced representation of the data set that is much smaller in volume, yet closely maintains the integrity of the original data. That is, mining on the reduced data set should be more efficient yet produce the same analytical results. Data compression is useful here: encoding mechanisms are used to reduce the data set size. In data compression, data encoding or transformations are applied so as to obtain a reduced or compressed representation of the original data. Huffman coding is a successful compression method used originally for text compression. Huffman's idea is, instead of using a fixed-length code such as 8-bit extended ASCII or EBCDIC for each symbol, to represent a frequently occurring character in a source with a shorter codeword and to represent a less frequently occurring one with a longer codeword.

Keywords:- Huffman Coding, Data Comparison, Data decompression

ISSN: 2347-8578 www.ijcstjournal.org Page 58

I. INTRODUCTION

Data compression is one of the most widespread applications in computer technology. The algorithms and methods used depend strongly on the type of data, i.e. whether the data is static or dynamic, and on the content, which can be any combination of text, images, numeric data or unrestricted binary data. Compression and decompression techniques play a major role in the data transmission process. The best compression technique among the three algorithms has to be identified for handling text data files. This analysis may be performed by comparing the measures of compression and decompression.

II. RELATED WORKS

Both the JPEG and JPEG 2000 image compression standards can achieve a great compression ratio; however, neither of them takes advantage of the local characteristics of the given image effectively. A new image compression algorithm proposed by Huang [2] is called Shape-Adaptive Image Compression, abbreviated as SAIC. Instead of taking the whole image as an object and utilizing transform coding, quantization, and entropy coding to encode this object, the SAIC algorithm segments the whole image into several objects, and each object has its own local characteristic and color. Because of the high correlation of the color values in each image segment, the SAIC can achieve a better compression ratio and quality than conventional image compression algorithms.

We also briefly introduce the technique that utilizes the statistical characteristics for image compression. The new image compression algorithm called Shape-Adaptive Image Compression, which is proposed by Huang [1], takes advantage of the local characteristics for image compaction. The SAIC compensates for the shortcoming of JPEG, which regards the whole image as a single object and does not take advantage of the characteristics of image segments.

Huffman coding refers to the use of a variable-length code table for encoding a source symbol (such as a character in a file), where the variable-length code table has been derived in a particular way based on the estimated probability of occurrence for each possible value of the source symbol. Huffman coding is based on the frequency of occurrence of a data item. The principle is to use a lower number of bits to encode the data that occurs more frequently [3].

The DCT separates the image into parts of different frequencies. Higher frequencies represent quick changes between image pixels and low frequencies represent gradual changes between image pixels. In order to perform the DCT on an image, the image should be divided into 8 × 8 or 16 × 16 blocks [4].

Run-length coding (RLC) is based on the idea of replacing a long sequence of the same symbol with a shorter sequence. The DC coefficients are coded separately from the AC ones. A DC coefficient is coded by DPCM, which is a lossless data compression technique, while the AC coefficients are coded using the RLC algorithm. The DPCM algorithm records the difference between the DC coefficients of the current block and the previous block [5].
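The two ideas in this paragraph can be illustrated with a minimal Python sketch. It is not the JPEG procedure itself: the run-length coder below is the generic "symbol plus run length" form, and the DPCM predictor is assumed to start at 0. The function names and the sample values are hypothetical.

```python
def run_length_encode(symbols):
    """Collapse each run of identical symbols into a (symbol, count) pair."""
    runs = []
    for s in symbols:
        if runs and runs[-1][0] == s:
            runs[-1] = (s, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((s, 1))               # start a new run
    return runs


def dpcm_encode(dc_values):
    """Record each DC value as its difference from the previous block's DC value.

    Assumption: the predictor for the first block is 0.
    """
    diffs, previous = [], 0
    for dc in dc_values:
        diffs.append(dc - previous)
        previous = dc
    return diffs


def dpcm_decode(diffs):
    """Invert dpcm_encode by accumulating the differences (lossless)."""
    values, previous = [], 0
    for d in diffs:
        previous += d
        values.append(previous)
    return values


if __name__ == "__main__":
    print(run_length_encode([0, 0, 0, 5, 0, 0]))
    print(dpcm_encode([52, 55, 54, 54]))
```

Because neighbouring blocks tend to have similar DC values, the differences are usually small numbers, which a later entropy coder such as Huffman coding can represent with short codewords.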

III. RESEARCH METHODOLOGY

This section describes the research methodology of the proposed system. We took different datasets and conducted various experiments to determine the performance of the proposed system.

Figure 1. Architecture of research methodology

3.1 Huffman Coding Technique

A more sophisticated and efficient lossless compression technique is known as "Huffman coding", in which

the characters in a data file are converted to a binary code, where the most common characters in the file have the

shortest binary codes, and the least common have the longest [9]. To see how Huffman coding works, assume that a

text file is to be compressed, and that the characters in the file have the following frequencies:

A: 29 B: 64 C: 32 D: 12 E: 9 F: 66 G: 23

In practice, we need the frequencies for all the characters used in the text, including all letters, digits, and

punctuation, but to keep the example simple we'll just stick to the characters from A to G. The first step in building a

Huffman code is to order the characters from highest to lowest frequency of occurrence as follows:

    66    64    32    29    23    12     9
     F     B     C     A     G     D     E

First, the two least-frequent characters are selected, logically grouped together, and their frequencies added.

In this example, the D and E characters have a combined frequency of 21:

                                      |
                                   +--+--+
                                   | 21  |
                                   |     |
    66    64    32    29    23    12     9
     F     B     C     A     G     D     E

This begins the construction of a "binary tree" structure. We now again select the two elements with the lowest frequencies, regarding the D-E combination as a single element. In this case, the two elements selected are G and the D-E combination. We group them together and add their frequencies. This new combination has a frequency of 44:

                                 |
                             +---+----+
                             |   44   |
                             |        |
                             |     +--+--+
                             |     | 21  |
                             |     |     |
    66    64    32    29    23    12     9
     F     B     C     A     G     D     E

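We continue in the same way to select the two elements with the lowest frequency, group them together, and add their frequencies, until we run out of elements.

In the third iteration, the two lowest frequencies are those of C (32) and A (29), which combine to 61. The remaining iterations merge 44 with 61 (giving 105), B with F (giving 130), and finally 105 with 130 at the root (giving 235). The final binary tree will be as follows: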

                 |
        +--------+--------+
        |0                |1
        |                 |
        |           +-----+------+
        |           |0           |1
        |           |            |
        |           |        +---+----+
        |           |        |0       |1
        |           |        |        |
     +--+--+     +--+--+     |     +--+--+
     |0    |1    |0    |1    |     |0    |1
     |     |     |     |     |     |     |
     F     B     C     A     G     D     E

Tracing down the tree gives the "Huffman codes", with the shortest codes assigned to the characters with the

greatest frequency:

F: 00

B: 01

C: 100

A: 101

G: 110

D: 1110

E: 1111
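To see what these codes save, the following short Python sketch totals the encoded size for the example frequencies above and compares it with fixed-length alternatives. The 3-bit comparison is an added assumption (3 bits is the minimum fixed length that can distinguish seven symbols); the 8-bit case corresponds to extended ASCII.

```python
# Frequencies and Huffman codes copied from the worked example.
freqs = {"A": 29, "B": 64, "C": 32, "D": 12, "E": 9, "F": 66, "G": 23}
codes = {"F": "00", "B": "01", "C": "100", "A": "101",
         "G": "110", "D": "1110", "E": "1111"}

total_symbols = sum(freqs.values())
# Each symbol contributes (frequency x codeword length) bits.
huffman_bits = sum(freqs[s] * len(codes[s]) for s in freqs)
fixed_3bit_bits = 3 * total_symbols   # assumed minimal fixed-length code
fixed_8bit_bits = 8 * total_symbols   # 8-bit extended ASCII

print("symbols:", total_symbols)
print("Huffman bits:", huffman_bits)
print("3-bit fixed:", fixed_3bit_bits, " 8-bit fixed:", fixed_8bit_bits)
print("average bits per symbol:", round(huffman_bits / total_symbols, 2))
```

For these frequencies the Huffman encoding needs 596 bits for 235 characters, about 2.54 bits per character, compared with 705 bits at 3 bits per character and 1880 bits at 8 bits per character. The cost of storing the code table itself is not counted here.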

The Huffman codes won't get confused in decoding. The best way to see that this is so is to envision the

decoder cycling through the tree structure, guided by the encoded bits it reads, moving from top to bottom and then

back to the top. As long as bits constitute legitimate Huffman codes, and a bit doesn't get scrambled or lost, the

decoder will never get lost, either.
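The reason the decoder cannot get confused is that no codeword is the beginning of another codeword (the prefix property), since every symbol sits at a leaf of the tree. A small Python check, with a hypothetical helper name, confirms this for the codes listed above:

```python
from itertools import permutations


def is_prefix_free(codewords):
    """Return True if no codeword is a prefix of a different codeword."""
    return not any(b.startswith(a) for a, b in permutations(codewords, 2))


codes = {"F": "00", "B": "01", "C": "100", "A": "101",
         "G": "110", "D": "1110", "E": "1111"}

print(is_prefix_free(codes.values()))   # the example codes
print(is_prefix_free(["0", "01"]))      # a deliberately ambiguous pair
```

The second call shows a counterexample: with the codewords 0 and 01, a decoder that reads a 0 cannot tell whether a symbol has ended.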

3.2 Algorithm: Huffman encoding

Step 1: Build a binary tree where the leaves of the tree are the symbols in the alphabet.

Step 2: Label each edge of the tree with a 0 or a 1.

Step 3: Derive the Huffman code from the Huffman tree.

INPUT : a sorted list of one-node binary trees (t1, t2, ..., tn) for alphabet (s1, ..., sn) with frequencies (w1, ..., wn)

OUTPUT : a Huffman code with n code words
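The three steps can be sketched in Python as follows. This is one possible implementation, not necessarily the one used in the system described here: it keeps the trees in a priority queue (`heapq`) instead of a re-sorted list, and it places the heavier subtree on the 0 branch, a convention chosen only so that the output matches the worked example in Section 3.1. The function name `huffman_codes` is hypothetical.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Return {symbol: bit string} for a dict mapping symbols to frequencies."""
    tiebreak = count()  # unique counter so the heap never compares trees
    # Heap entry: (weight, tiebreak, tree); a tree is a symbol or a (left, right) pair.
    heap = [(w, next(tiebreak), sym) for sym, w in freqs.items()]
    heapq.heapify(heap)
    if not heap:
        return {}
    if len(heap) == 1:                      # single-symbol alphabet
        return {heap[0][2]: "0"}

    # Step 1: repeatedly merge the two lowest-weight trees.
    while len(heap) > 1:
        w_light, _, light = heapq.heappop(heap)
        w_heavy, _, heavy = heapq.heappop(heap)
        # Assumed convention: heavier subtree on the left (0), lighter on the right (1).
        heapq.heappush(heap, (w_light + w_heavy, next(tiebreak), (heavy, light)))

    # Steps 2 and 3: label edges 0/1 and read the codes off the root-to-leaf paths.
    codes = {}

    def walk(tree, prefix):
        if isinstance(tree, tuple):
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:
            codes[tree] = prefix

    walk(heap[0][2], "")
    return codes


if __name__ == "__main__":
    example = {"A": 29, "B": 64, "C": 32, "D": 12, "E": 9, "F": 66, "G": 23}
    for symbol, code in sorted(huffman_codes(example).items()):
        print(symbol, code)
```

Swapping the 0 and 1 labels at any node yields a different but equally short code; only the codeword lengths are determined by the frequencies in this example.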

3.3 Algorithm: Huffman decoding

Step 1: Read the coded message bit by bit. Starting from the root, traverse one edge down the tree to a child according to the bit value: if the current bit is 0, move to the left child; otherwise, move to the right child.

Step 2: Repeat this process until a leaf is reached. When a leaf is reached, output the decoded character and restart the traversal from the root.

Step 3: Repeat this read-and-move procedure until the end of the message.

INPUT : a Huffman tree and a 0-1 bit string of encoded message

OUTPUT: decoded string
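A minimal Python sketch of this decoding loop is shown below. It assumes the tree is rebuilt from the code table of Section 3.1 and represented as nested dictionaries keyed by "0" (left) and "1" (right); the helper names `build_tree` and `huffman_decode` and the sample message are hypothetical.

```python
CODES = {"F": "00", "B": "01", "C": "100", "A": "101",
         "G": "110", "D": "1110", "E": "1111"}


def build_tree(codes):
    """Rebuild the Huffman tree as nested dicts; leaves are the symbols."""
    root = {}
    for symbol, bits in codes.items():
        node = root
        for bit in bits[:-1]:
            node = node.setdefault(bit, {})   # create inner nodes as needed
        node[bits[-1]] = symbol               # last edge leads to the leaf
    return root


def huffman_decode(bits, root):
    """Walk the tree bit by bit, emitting a symbol at each leaf."""
    decoded, node = [], root
    for bit in bits:
        node = node[bit]                      # 0 -> left child, 1 -> right child
        if not isinstance(node, dict):        # leaf reached
            decoded.append(node)
            node = root                       # restart from the root
    if node is not root:
        raise ValueError("bit string ends in the middle of a codeword")
    return "".join(decoded)


if __name__ == "__main__":
    tree = build_tree(CODES)
    encoded = "".join(CODES[ch] for ch in "FACADE")
    print(encoded, "->", huffman_decode(encoded, tree))
```

The final check rejects a message that stops partway through a codeword, which corresponds to the "scrambled or lost bit" case mentioned in Section 3.1.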

IV. RESULT AND DISCUSSION

This system consists of three major modules: compression process, comparison factors, and reports. The first module, compression process, compresses the given input text file and decompresses the compressed file. The second module, comparison factors, evaluates the Huffman coding technique based on the calculated metrics, namely compression ratio, transmission time and memory utilization. The third module, reports, is used to view the various reports about the analysis process.
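The paper does not give formulas for these metrics, so the following Python sketch rests on stated assumptions: compression ratio is taken as compressed size divided by original size, in percent, and transmission time as the number of bits divided by a link rate. The function names, the 9600 bit/s link, and the choice to ignore the stored code table are all hypothetical. Memory utilization is not sketched because its definition is not recoverable from the text.

```python
import math


def compression_ratio_percent(original_bytes, compressed_bytes):
    """Assumed definition: compressed size as a percentage of the original size."""
    return 100.0 * compressed_bytes / original_bytes


def transmission_time_seconds(size_bytes, link_bits_per_second):
    """Assumed definition: time to send the data over a link of the given rate."""
    return 8 * size_bytes / link_bits_per_second


if __name__ == "__main__":
    # Example from Section 3.1: 235 characters at one byte each, 596 encoded bits.
    original = 235
    compressed = math.ceil(596 / 8)           # round up to whole bytes
    print(compressed, "bytes after compression")
    print(round(compression_ratio_percent(original, compressed), 1), "% of original")
    print(transmission_time_seconds(compressed, 9600), "s at 9600 bit/s")
```

Under these assumptions a lower percentage means better compression; some authors instead report the inverse ratio or the space saving, so the definition should be checked before comparing figures across papers.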

The input file to be compressed using the Huffman technique is selected from a specific location and displayed in the list box, as shown in Figure 2.

Figure 2. Huffman Technique Running window

4.1 Text Decompression

Text decompression takes the compressed file and expands it into its original form, so the decompression part provides the original file as output. That is, it converts an input data stream into another data stream that has the original size of the input file. The decompression process is activated through the decompression button and uses Huffman coding. The decompressed text file is stored in a separate file and displayed in the list box, as shown in Figure .


Figure 3. Time efficiency

Figure 4. Compression ratio & Memory Occupied

The above graph shows the comparison of compression ratios and memory occupied for the existing systems and the proposed system. In the graph, the horizontal axis represents the length of the input string in bytes and the vertical axis represents the compression ratio in percent.

V. CONCLUSION

In this paper, Huffman coding compression techniques are compared. The system uses three metrics, namely compression ratio, transmission time and memory utilization, to compare and analyze the results. It is found that the Huffman coding technique shows better compression performance. Surely this technique will open a scope in the field of text compression, where every bit of information is significant.

REFERENCES

[1] Jian-Jiun Ding and Tzu-Heng Lee, "Shape-Adaptive Image Compression", Master's Thesis, National Taiwan University, Taipei, 2008.

[2] Jian-Jiun Ding and Jiun-De Huang, "Image Compression by Segmentation and Boundary Description", Master's Thesis, National Taiwan University, Taipei, 2007.

[3] M. Sharma, "Compression Using Huffman Coding", International Journal of Computer Science and Network Security, Vol. 10, No. 5, May 2010.

[4] B. O'Hanen and M. Wisan, "JPEG Compression", December 16, 2005.

[5] G. Blelloch, "Introduction to Data Compression", Carnegie Mellon University, September 2010.

Das könnte Ihnen auch gefallen

- (IJCST-V12I1P4) :M. Sharmila, Dr. M. NatarajanDokument9 Seiten(IJCST-V12I1P4) :M. Sharmila, Dr. M. NatarajanEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I6P8) :subhadip KumarDokument7 Seiten(IJCST-V11I6P8) :subhadip KumarEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I5P4) :abhirenjini K A, Candiz Rozario, Kripa Treasa, Juvariya Yoosuf, Vidya HariDokument7 Seiten(IJCST-V11I5P4) :abhirenjini K A, Candiz Rozario, Kripa Treasa, Juvariya Yoosuf, Vidya HariEighthSenseGroupNoch keine Bewertungen

- (IJCST-V12I1P2) :DR .Elham Hamed HASSAN, Eng - Rahaf Mohamad WANNOUSDokument11 Seiten(IJCST-V12I1P2) :DR .Elham Hamed HASSAN, Eng - Rahaf Mohamad WANNOUSEighthSenseGroupNoch keine Bewertungen

- (IJCST-V12I1P7) :tejinder Kaur, Jimmy SinglaDokument26 Seiten(IJCST-V12I1P7) :tejinder Kaur, Jimmy SinglaEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I6P5) :A.E.E. El-Alfi, M. E. A. Awad, F. A. A. KhalilDokument9 Seiten(IJCST-V11I6P5) :A.E.E. El-Alfi, M. E. A. Awad, F. A. A. KhalilEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I4P12) :N. Kalyani, G. Pradeep Reddy, K. SandhyaDokument16 Seiten(IJCST-V11I4P12) :N. Kalyani, G. Pradeep Reddy, K. SandhyaEighthSenseGroupNoch keine Bewertungen

- (Ijcst-V11i4p1) :fidaa Zayna, Waddah HatemDokument8 Seiten(Ijcst-V11i4p1) :fidaa Zayna, Waddah HatemEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P25) :pooja Patil, Swati J. PatelDokument5 Seiten(IJCST-V11I3P25) :pooja Patil, Swati J. PatelEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I4P5) :P Jayachandran, P.M Kavitha, Aravind R, S Hari, PR NithishwaranDokument4 Seiten(IJCST-V11I4P5) :P Jayachandran, P.M Kavitha, Aravind R, S Hari, PR NithishwaranEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P7) :nikhil K. Pawanikar, R. SrivaramangaiDokument15 Seiten(IJCST-V11I3P7) :nikhil K. Pawanikar, R. SrivaramangaiEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I4P15) :M. I. Elalami, A. E. Amin, S. A. ElsaghierDokument8 Seiten(IJCST-V11I4P15) :M. I. Elalami, A. E. Amin, S. A. ElsaghierEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I4P9) :KiranbenV - Patel, Megha R. Dave, Dr. Harshadkumar P. PatelDokument18 Seiten(IJCST-V11I4P9) :KiranbenV - Patel, Megha R. Dave, Dr. Harshadkumar P. PatelEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P21) :ms. Deepali Bhimrao Chavan, Prof. Suraj Shivaji RedekarDokument4 Seiten(IJCST-V11I3P21) :ms. Deepali Bhimrao Chavan, Prof. Suraj Shivaji RedekarEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I4P3) :tankou Tsomo Maurice Eddy, Bell Bitjoka Georges, Ngohe Ekam Paul SalomonDokument9 Seiten(IJCST-V11I4P3) :tankou Tsomo Maurice Eddy, Bell Bitjoka Georges, Ngohe Ekam Paul SalomonEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P11) :Kalaiselvi.P, Vasanth.G, Aravinth.P, Elamugilan.A, Prasanth.SDokument4 Seiten(IJCST-V11I3P11) :Kalaiselvi.P, Vasanth.G, Aravinth.P, Elamugilan.A, Prasanth.SEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P13) : Binele Abana Alphonse, Abou Loume Gautier, Djimeli Dtiabou Berline, Bavoua Kenfack Patrick Dany, Tonye EmmanuelDokument31 Seiten(IJCST-V11I3P13) : Binele Abana Alphonse, Abou Loume Gautier, Djimeli Dtiabou Berline, Bavoua Kenfack Patrick Dany, Tonye EmmanuelEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P24) :R.Senthilkumar, Dr. R. SankarasubramanianDokument7 Seiten(IJCST-V11I3P24) :R.Senthilkumar, Dr. R. SankarasubramanianEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P20) :helmi Mulyadi, Fajar MasyaDokument8 Seiten(IJCST-V11I3P20) :helmi Mulyadi, Fajar MasyaEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P9) :raghu Ram Chowdary VelevelaDokument6 Seiten(IJCST-V11I3P9) :raghu Ram Chowdary VelevelaEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P12) :prabhjot Kaur, Rupinder Singh, Rachhpal SinghDokument6 Seiten(IJCST-V11I3P12) :prabhjot Kaur, Rupinder Singh, Rachhpal SinghEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P17) :yash Vishwakarma, Akhilesh A. WaooDokument8 Seiten(IJCST-V11I3P17) :yash Vishwakarma, Akhilesh A. WaooEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I2P19) :nikhil K. Pawanika, R. SrivaramangaiDokument15 Seiten(IJCST-V11I2P19) :nikhil K. Pawanika, R. SrivaramangaiEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P5) :P Adhi Lakshmi, M Tharak Ram, CH Sai Teja, M Vamsi Krishna, S Pavan MalyadriDokument4 Seiten(IJCST-V11I3P5) :P Adhi Lakshmi, M Tharak Ram, CH Sai Teja, M Vamsi Krishna, S Pavan MalyadriEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P8) :pooja Patil, Swati J. PatelDokument5 Seiten(IJCST-V11I3P8) :pooja Patil, Swati J. PatelEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P4) :V Ramya, A Sai Deepika, K Rupa, M Leela Krishna, I Satya GirishDokument4 Seiten(IJCST-V11I3P4) :V Ramya, A Sai Deepika, K Rupa, M Leela Krishna, I Satya GirishEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I2P18) :hanadi Yahya DarwishoDokument32 Seiten(IJCST-V11I2P18) :hanadi Yahya DarwishoEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P1) :Dr.P.Bhaskar Naidu, P.Mary Harika, J.Pavani, B.Divyadhatri, J.ChandanaDokument4 Seiten(IJCST-V11I3P1) :Dr.P.Bhaskar Naidu, P.Mary Harika, J.Pavani, B.Divyadhatri, J.ChandanaEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedDokument4 Seiten(IJCST-V11I3P2) :K.Vivek, P.Kashi Naga Jyothi, G.Venkatakiran, SK - ShaheedEighthSenseGroupNoch keine Bewertungen

- (IJCST-V11I2P17) :raghu Ram Chowdary VelevelaDokument7 Seiten(IJCST-V11I2P17) :raghu Ram Chowdary VelevelaEighthSenseGroupNoch keine Bewertungen

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- Difference EquationsDokument193 SeitenDifference EquationsYukta Modernite Raj100% (1)

- TIMO Selection Primary 5Dokument2 SeitenTIMO Selection Primary 5Qurniawan Agung Putra100% (5)

- Sachi Nandan Mohanty, Pabitra Kumar Tripathy - Data Structure and Algorithms Using C++ - A Practical Implementation-Wiley-Scrivener (2021)Dokument403 SeitenSachi Nandan Mohanty, Pabitra Kumar Tripathy - Data Structure and Algorithms Using C++ - A Practical Implementation-Wiley-Scrivener (2021)Mia ShopNoch keine Bewertungen

- Gaussian Beam OpticsDokument16 SeitenGaussian Beam OpticsModyKing99Noch keine Bewertungen

- Cambridge International General Certificate of Secondary EducationDokument16 SeitenCambridge International General Certificate of Secondary Educationprathamkumar8374Noch keine Bewertungen

- Ignazio Basile, Pierpaolo Ferrari (Eds.) - Asset Management and Institutional Investors-Springer International Publishing (2016) PDFDokument469 SeitenIgnazio Basile, Pierpaolo Ferrari (Eds.) - Asset Management and Institutional Investors-Springer International Publishing (2016) PDFrhinolovescokeNoch keine Bewertungen

- Playing With Blends in CoreldrawDokument12 SeitenPlaying With Blends in CoreldrawZubairNoch keine Bewertungen

- Basis Path TestingDokument4 SeitenBasis Path TestingKaushik MukherjeeNoch keine Bewertungen

- Interpreting and Using Statistics in Psychological Research 1St Edition Christopher Test Bank Full Chapter PDFDokument41 SeitenInterpreting and Using Statistics in Psychological Research 1St Edition Christopher Test Bank Full Chapter PDFhungden8pne100% (12)

- Irodov Fundamental Laws of Mechanics PDFDokument139 SeitenIrodov Fundamental Laws of Mechanics PDFsedanya67% (3)

- Strategies For Administrative Reform: Yehezkel DrorDokument17 SeitenStrategies For Administrative Reform: Yehezkel DrorNahidul IslamNoch keine Bewertungen

- Elasticity & Oscillations: Ut Tension, Sic Vis As Extension, So Force. Extension Is Directly Proportional To ForceDokument11 SeitenElasticity & Oscillations: Ut Tension, Sic Vis As Extension, So Force. Extension Is Directly Proportional To ForceJustin Paul VallinanNoch keine Bewertungen

- Digital SignatureDokument49 SeitenDigital SignatureVishal LodhiNoch keine Bewertungen

- HaskellDokument33 SeitenHaskellanon_889114720Noch keine Bewertungen

- Landing Gear Design and DevelopmentDokument12 SeitenLanding Gear Design and DevelopmentC V CHANDRASHEKARANoch keine Bewertungen

- DD2434 Machine Learning, Advanced Course Assignment 2: Jens Lagergren Deadline 23.00 (CET) December 30, 2017Dokument5 SeitenDD2434 Machine Learning, Advanced Course Assignment 2: Jens Lagergren Deadline 23.00 (CET) December 30, 2017Alexandros FerlesNoch keine Bewertungen

- Lesson Plan Junior High School 7 Grade Social ArithmeticDokument9 SeitenLesson Plan Junior High School 7 Grade Social ArithmeticSafaAgritaNoch keine Bewertungen

- Lecture 10Dokument19 SeitenLecture 10M.Usman SarwarNoch keine Bewertungen

- Lessons For The Young Economist Robert P MurphyDokument422 SeitenLessons For The Young Economist Robert P Murphysleepyninjitsu100% (1)

- Wingridds Users GuideDokument360 SeitenWingridds Users GuidedardNoch keine Bewertungen

- Effect of Axial Load Mode Shapes A N D Frequencies BeamsDokument33 SeitenEffect of Axial Load Mode Shapes A N D Frequencies BeamsYoyok SetyoNoch keine Bewertungen

- STFTDokument5 SeitenSTFTclassic_777Noch keine Bewertungen

- Symmetry in The Music of Thelonious Monk PDFDokument97 SeitenSymmetry in The Music of Thelonious Monk PDFMicheleRusso100% (1)

- Math10 q2 Mod4 Provingtheorems v5Dokument26 SeitenMath10 q2 Mod4 Provingtheorems v5Mikaela MotolNoch keine Bewertungen

- MTH302 Solved MCQs Alotof Solved MCQsof MTH302 InonefDokument26 SeitenMTH302 Solved MCQs Alotof Solved MCQsof MTH302 InonefiftiniaziNoch keine Bewertungen

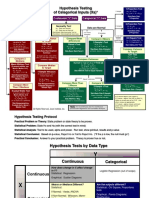

- Hypothesis Testing Roadmap PDFDokument2 SeitenHypothesis Testing Roadmap PDFShajean Jaleel100% (1)

- Design and Simulation of A Iris Recognition SystemDokument50 SeitenDesign and Simulation of A Iris Recognition SystemLateef Kayode Babatunde100% (3)

- Urdaneta City UniversityDokument2 SeitenUrdaneta City UniversityTheodore VilaNoch keine Bewertungen

- Quantum Games and Quantum StrategiesDokument4 SeitenQuantum Games and Quantum StrategiesReyna RamirezNoch keine Bewertungen

- Bike Talk KTH 2006Dokument66 SeitenBike Talk KTH 2006jdpatel28Noch keine Bewertungen