Some Important Notes

Uploaded by pben


Stats

Friday, April 22, 2016 10:19 AM

yarn queue -status dev

yarn queue -status <queuename> - Gives the statistics of the queue.

For Maven projects, all dev teams should use the Hortonworks repository instead of the public Apache repository:

<repositories>
  <repository>
    <releases>
      <enabled>true</enabled>
      <updatePolicy>always</updatePolicy>
      <checksumPolicy>warn</checksumPolicy>
    </releases>
    <snapshots>
      <enabled>false</enabled>
      <updatePolicy>never</updatePolicy>
      <checksumPolicy>fail</checksumPolicy>
    </snapshots>
    <id>HDPReleases</id>
    <name>HDP Releases</name>
    <url>http://repo.hortonworks.com/content/repositories/releases/</url>
    <layout>default</layout>
  </repository>
</repositories>

Hi All,

Please find attached the script for backing up an HBase table and recreating it with pre-splits. Please follow the steps below. The example commands use an HBase table named master:TEST_ADD with two columns, A and B.

Back up the data

o pig -f BackUpHBaseInHdfs.pig -param TABLENAME=master:TEST_ADD -param columnsList=cf1:A,cf1:B -param ColumnNameWithType=A:chararray,B:chararray -param outputDir=/dev/tset/raw/hbase/TEST_ADD

Validate the data generated in the given output folder

Drop the HBase table

Create the HBase table with pre-splits and Snappy compression

o create 'master:TEST_ADD', {NAME => 'cf1', COMPRESSION => 'SNAPPY'}, {SPLITS => ['00000', '00001', '00002', '00003', '00004', '00005', '00006', '00007', '00008', '1','2','3','4','5','6','7','8','9','a','c','e','g', 'l', 'j', 'k', 'm', 'o', 'q', 's', 'u', 'w', 'y']}

Reload the data

o pig -f LoadHbaseFromBackup.pig -param TABLENAME=master:TEST_ADD -param columnsList=cf1:A,cf1:B -param ColumnNameWithType=A:chararray,B:chararray -param outputDir=/dev/tset/raw/hbase/TEST_ADD
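The long `create` statement in the steps above is easy to mistype, so it may help to generate it. The following is a minimal sketch in Python, not part of the attached scripts: the helper name `hbase_create_statement` is hypothetical, and it assumes single-family tables with string split keys. It sorts and de-duplicates the split points before emitting the HBase shell command.

```python
def hbase_create_statement(table, family, splits, compression="SNAPPY"):
    """Return an HBase shell `create` command with pre-split regions.

    Hypothetical helper for illustration; it only builds the command text.
    """
    # Sort and de-duplicate the split points. For ASCII keys, Python's
    # string ordering matches the byte ordering HBase uses for row keys.
    keys = sorted(set(splits))
    quoted = ", ".join("'{}'".format(k) for k in keys)
    return (
        "create '{t}', {{NAME => '{f}', COMPRESSION => '{c}'}}, "
        "{{SPLITS => [{s}]}}"
    ).format(t=table, f=family, c=compression, s=quoted)


# Split points copied from the example command in the notes.
splits = (
    ["0000{}".format(i) for i in range(9)]
    + list("123456789")
    + list("aceg")
    + list("ljkmoqsuwy")
)
statement = hbase_create_statement("master:TEST_ADD", "cf1", splits)
print(statement)
```

The printed line can be pasted into `hbase shell`. With 32 split points the table starts with 33 regions.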

Daily Commands Page 7

Hbase commands:

Thursday, March 03, 2016 2:03 PM

Create pre-split tables as shown below:

create 'lineofservice:ln_of_srvc_ofrg_instnc', 'cf1', SPLITS => ['1','2','3','4','5','6','7','8','9','a','c','e','g','l','j','k','m','o','q','s','u','w','y']

create 'party:NPANXX', {NAME => 'cf1', COMPRESSION => 'SNAPPY'}, {SPLITS => ['00000', '00001', '00002', '00003', '00004', '00005', '00006', '00007', '00008', '1','2','3','4','5','6','7','8','9','a','c','e','g', 'l', 'j', 'k', 'm', 'o', 'q', 's', 'u', 'w', 'y']}

Hbase Limit command:

scan 'hbase_table', {LIMIT => 5}

snapshot 'sourceTable', 'sourceTable-Snapshot'

clone_snapshot 'sourceTable-Snapshot', 'newTable'

Example:

snapshot 'DEV_RPX.PAG_ADM.NGP_REF', 'DEV_RPX.PAG_ADM.NGP_REF_SNAP'

clone_snapshot 'DEV_RPX.PAG_ADM.NGP_REF_SNAP' , 'DEV_RPX.PAG_ADM.NGP_REF_BKP'
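The snapshot name passed to `clone_snapshot` has to match the one given to `snapshot` exactly, including case. As a small sketch (the helper name `snapshot_and_clone` and the `_SNAP` suffix convention are assumptions taken from the example above, not an established tool), the two shell commands can be generated from one place so the names cannot drift apart:

```python
def snapshot_and_clone(source_table, backup_table, suffix="_SNAP"):
    """Return the HBase shell commands to snapshot a table and clone it.

    Hypothetical helper: the snapshot name is derived once and reused,
    so both commands always refer to the same snapshot.
    """
    snapshot_name = source_table + suffix
    return [
        "snapshot '{}', '{}'".format(source_table, snapshot_name),
        "clone_snapshot '{}', '{}'".format(snapshot_name, backup_table),
    ]


commands = snapshot_and_clone(
    "DEV_RPX.PAG_ADM.NGP_REF", "DEV_RPX.PAG_ADM.NGP_REF_BKP"
)
for line in commands:
    print(line)
```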

HBase commands:

get 'DEV_NCS.CPN_OWN.SKU_REF','984216:1900-01-01 12:00:00','CF1:SKU_ID'

get '<tablename>','<rowkey>','CF1:<columnname>'

deleteall 'DEV_NCS.CPN_OWN.SKU_REF','rowkey'

Daily Commands Page 8

Hive commands:

Thursday, March 03, 2016 2:04 PM

alter table dimnpanxx drop partition (partdate=20150120031606);

ALTER TABLE sku_ref DROP PARTITION (stagingpartdate > '0');

ALTER TABLE partner_ref DROP PARTITION (stagingpartdate=20160318045645);

ALTER TABLE event_adjustment ADD PARTITION (stagingpartdate=20160415052530) LOCATION '/dev/rpx_event_adm_staging/event_adjustment/20160415052530';
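When several staging partitions need to be re-added, the statement can be built from its parts. This is a sketch only: the function name `add_partition_ddl` is hypothetical, and the directory layout `<base_dir>/<table>/<partdate>` is an assumption inferred from the single example above.

```python
def add_partition_ddl(table, partdate, base_dir):
    """Build an ALTER TABLE ... ADD PARTITION statement for a staging table.

    Assumes (from the one example in these notes) that partition data
    lives under <base_dir>/<table>/<partdate>.
    """
    location = "{}/{}/{}".format(base_dir.rstrip("/"), table, partdate)
    return (
        "ALTER TABLE {t} ADD PARTITION (stagingpartdate={p}) "
        "LOCATION '{loc}';"
    ).format(t=table, p=partdate, loc=location)


ddl = add_partition_ddl(
    "event_adjustment", "20160415052530", "/dev/rpx_event_adm_staging"
)
print(ddl)
```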

set mapred.job.queue.name=dev;

Daily Commands Page 9

Pig

Thursday, March 03, 2016 2:04 PM

export PIG_OPTS="-Dhive.metastore.uris=thrift://devehdp004.unix.gsm1900.org:9083 -Dmapred.job.queue.name=dev"

export PIG_CLASSPATH=/usr/hdp/current/hive-webhcat/share/hcatalog/*:/usr/hdp/2.2.4.2-2/hive/lib/*

pig -useHCatalog

REGISTER /usr/hdp/2.2.4.2-2/hbase/lib/*.jar;

REGISTER /usr/hdp/current/hive-webhcat/share/hcatalog/*.jar;

REGISTER /usr/hdp/2.2.4.2-2/hive/lib/*.jar;

REGISTER /usr/local/share/eit_hadoop/applications/idw/Finance_Retrofit/piggybank.jar;

REGISTER /usr/local/share/eit_hadoop/applications/idw/Finance_Retrofit/idwudf-1.0.jar;

Daily Commands Page 10

Oozie

Wednesday, March 16, 2016 2:59 PM

Killing an Oozie job:

oozie job -oozie http://devehdp004.unix.gsm1900.org:11000/oozie -kill 0000683-160123215237769-oozie-oozi-W

Daily Commands Page 11

Sqoop commands

Tuesday, March 22, 2016 10:35 AM

Connecting to mysql db

mysql --host=devehdp004.unix.gsm1900.org --user=sqoop --password=sqoop

Deleting a Sqoop job in DEV

sqoop job --meta-connect "jdbc:mysql://devehdp004.unix.gsm1900.org:3306/sqoop?

user=sqoop&password=sqoop" --delete hdp_event_account_transaction_event_disp_EXPORT

sqoop job --meta-connect 'jdbc:mysql://devehdp004.unix.gsm1900.org:3306/sqoop?

user=sqoop&password=sqoop' --show hdp_SAMSON_REFERENCE_SOC_IDW_IMPORT

sqoop job --meta-connect 'jdbc:mysql://prdehdp079.unix.gsm1900.org:3306/sqoop?

user=sqoop&password=sqoopaqmin123' --delete hdp_product_offering_samson_disp_EXPORT

Daily Commands Page 12

Phoenix

Thursday, April 21, 2016 12:30 PM

Below are the instructions for using the Phoenix client on a Hadoop server. The error message you are seeing appears because the client is unable to read the HBase configs.

export HBASE_CONF_PATH=/etc/hbase/conf:/etc/hadoop/conf

cd /usr/hdp/current/phoenix-client/bin

./sqlline.py devehdp001,devehdp002,devehdp003:2181:/hbase-secure

Note: Make sure you have a valid Kerberos ticket before starting the sqlline client.

#command

kinit <NTID>@GSM1900.ORG
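The launch steps above can also be wrapped in a small script. The sketch below is an assumption about how one might do that, not an official Phoenix tool: the helper name `sqlline_invocation` is hypothetical, and the paths, port and znode are the ones quoted in these notes. It only builds the command line and environment; the actual launch is left as a comment because it needs a cluster and a valid Kerberos ticket.

```python
import os

# Location of the Phoenix client scripts, as given in the notes above.
PHOENIX_BIN = "/usr/hdp/current/phoenix-client/bin"


def sqlline_invocation(zk_hosts, zk_port=2181, znode="/hbase-secure"):
    """Return (argv, env) for starting sqlline.py against a secured HBase."""
    env = dict(os.environ)
    # Without this variable the client cannot find the HBase/Hadoop configs.
    env["HBASE_CONF_PATH"] = "/etc/hbase/conf:/etc/hadoop/conf"
    # Connection string format: host1,host2,host3:port:/znode
    quorum = "{}:{}:{}".format(",".join(zk_hosts), zk_port, znode)
    argv = [PHOENIX_BIN + "/sqlline.py", quorum]
    return argv, env


argv, env = sqlline_invocation(["devehdp001", "devehdp002", "devehdp003"])
print(" ".join(argv))
# To start the client (run kinit first):
#   import subprocess
#   subprocess.call(argv, env=env)
```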

[sparepa@devehdp006 bin]$ ./sqlline.py devehdp001,devehdp002,devehdp003:2181:/hbase-secure

Setting property: [isolation, TRANSACTION_READ_COMMITTED]

issuing: !connect jdbc:phoenix:devehdp001,devehdp002,devehdp003:2181:/hbase-secure none none org.apache.phoenix.jdbc.PhoenixDriver

Connecting to jdbc:phoenix:devehdp001,devehdp002,devehdp003:2181:/hbase-secure

Connected to: Phoenix (version 4.2)

Driver: PhoenixEmbeddedDriver (version 4.2)

Autocommit status: true

Transaction isolation: TRANSACTION_READ_COMMITTED

Building list of tables and columns for tab-completion (set fastconnect to true to skip)...

134/134 (100%) Done

Done

sqlline version 1.1.2

0: jdbc:phoenix:devehdp001,devehdp002,devehdp>

Commands:

!tables

!describe <tablename>

Daily Commands Page 13

Issues:

Friday, April 22, 2016 2:51 PM

First:

Did you run this job with the property below added? Any job that runs longer than 24 hours fails because its delegation token is cancelled. If you still see the same issue after adding the property, the change has to be made at the cluster level to take effect.

<property>

<name>mapreduce.job.complete.cancel.delegation.tokens</name>

<value>false</value>

</property>

Second:

Set the following properties to 900000 (milliseconds, i.e. 15 minutes):

1. hbase.rpc.timeout

2. hbase.client.scanner.timeout.period
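For reference, the two timeout settings can be written out as `<property>` blocks in the same form as the delegation-token property shown earlier. This is a minimal sketch using Python's standard library; the helper name `property_xml` is hypothetical, and where the blocks are placed (job configuration or hbase-site.xml) depends on the setup and is not specified in these notes.

```python
import xml.etree.ElementTree as ET


def property_xml(name, value):
    """Render one Hadoop-style <property> element as a string."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(prop, encoding="unicode")


TIMEOUT_MS = 900000  # 15 minutes, expressed in milliseconds

for key in ("hbase.rpc.timeout", "hbase.client.scanner.timeout.period"):
    print(property_xml(key, TIMEOUT_MS))
```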

Third:

Daily Commands Page 14

Cluster Details:

Monday, April 18, 2016 2:33 PM

Production details:

211 datanodes

24 core

126 GB ram

111 hbase region servers

Each data node size: 24TB (of which we use 2TB for Harddisk root)

Total hdfs storage is 3.6PB

Versions:

Hortonworks (HDP) version: 2.2.4

Hadoop: 2.6

Pig: 0.14

Hive: 0.14

Oozie: 4.1

Hbase: 0.98.4

Server n Logins n Softwares Page 15

QA Details

Saturday, March 05, 2016 11:38 AM

QA Cluster Details

IP Address: 10.158.163.15

Hostname: qatehdp005.unix.gsm1900.org

Login: NT id and password

Kerberos problem: run kinit aakula@GSM1900.ORG and enter the password when prompted

Server n Logins n Softwares Page 16

ABC Details

Friday, March 11, 2016 11:27 AM

Dev Details:

Dev UI URL: http://devbeam002.unix.gsm1900.org:8080/abcapp/login#

Credentials: dadmin/abcuiadmin2016

Dev Balance Check Logs URL: http://devbeam002.unix.gsm1900.org:8080/abc/balanceCheckLogs

Dev Control Definition URL: http://devbeam002.unix.gsm1900.org:8080/abc/controlDefinitions

MySql details:

Hostname : devehdp006.unix.gsm1900.org

Port : 3306

DB Name : demo_abc_platform

Username : readonlyabc

Password: readonly123

Username Password Role Comments

financeretrofitui retrofitui2016 ROLE_UI_EDIT User to be used for all UI operations

financeretrofitimport retrofitimport2016 ROLE_DADMIN User to be used to test the Export/Import functionality to DevInt environment

http://devbeam002.unix.gsm1900.org:8080/abc_devint/import

financeretrofitws retrofitws2016 ROLE_USER To be used by the Job for integration with ABC in the Dev Env(Replacement for the generic TestUser

account

QA Details:

QA UI URL: http://qatbeam001.unix.gsm1900.org:8080/abc_qat

Credentials: dadmin/abcuiadmin2016

QA Balance Check Logs URL: https://qatbeam001.unix.gsm1900.org:8443/abc_qat/balanceCheckLogs

QA Control Definition URL: https://qatbeam001.unix.gsm1900.org:8443/abc_qat/controlDefinitions

For Testing:

For control check service testing, you can look into the following runtime table:

CONTROL_DETAILS

For balance check service testing, you can look into the following runtime tables:

BALANCE_CHECK_LOG

MISMATCH_CHECK_LOG

MISMATCH_JOB_STATUS_CHECK

You can take an individual process ID from the table LOAD_RPOCESS_AUDIT_STATS and use it to link to the runtime tables above.

Notes:

ABC ERD pdf:

https://tmobileusa.sharepoint.com/teams/da/DM/PR207463/PL/000 IDW Architecture Design and Development/Frameworks/ABC/design/abc_ERD_20151101.pdf

Server n Logins n Softwares Page 17

Sqoop

Friday, March 11, 2016 3:45 PM

Sqoop Metastore

1) Login to devehdp004

2) mysql -u sqoop -p

3) password: sqoop

Server n Logins n Softwares Page 18

Softwares

Friday, March 11, 2016 4:18 PM

Git: https://git-scm.com/downloads

Server n Logins n Softwares Page 19

Github Details

Monday, March 14, 2016 1:54 PM

View Pre-Prep DEV: https://gitenterprise.unix.gsm1900.org/orgs/IDW-DATA-PRE-PREPARATION/teams/pre-prep-dev

View Preparation-DEV: https://gitenterprise.unix.gsm1900.org/orgs/IDW-DATA-PREPARATION/teams/preparation-dev

View dispatch-jobs-DEV: https://gitenterprise.unix.gsm1900.org/orgs/IDW-DATA-DISPATCH/teams/dispatch-jobs-dev

Read more about team permissions here: https://help.github.com/enterprise/2.4/user/articles/what-are-the-different-access-permissions

Git Commands:

Right-click -> Git Bash Here

git config --global user.name aakula

git config --global user.email anusha.akula@T-Mobile.com

git pull

git sync

Jenkins:

URL: http://prdcicd005.unix.gsm1900.org:8080/login?from=%2F

Username: aakula

Password: NT password

Goto - Finance_Retrofit -> Build with Parameters ->

Nexus:

URL: http://prdcicd003.unix.gsm1900.org:8081/nexus

ID: aakula

Password: password

Server n Logins n Softwares Page 20

Edge node details

Wednesday, March 16, 2016 2:49 PM

IP Address: 10.158.31.206

Username: hdpsrvc

Password: G+j4y=z6s@ef-uh_che4ut&7bE?5xa

Server n Logins n Softwares Page 21

Dev Details

Monday, April 18, 2016 2:29 PM

Ambari Dev Link:

http://devehdp004.unix.gsm1900.org:8080

Username/password: ambari/ambari

Server n Logins n Softwares Page 22

Pig

Tuesday, March 22, 2016 12:06 PM

http://blog.cloudera.com/blog/2015/07/how-to-tune-mapreduce-parallelism-in-apache-pig-jobs/ - PIG performance

https://www.xplenty.com/blog/2014/05/improving-pig-data-integration-performance-with-join/ - Good PIG performance

http://hortonworks.com/blog/pig-performance-and-optimization-analysis/ - PIG performance

http://pig.apache.org/docs/r0.9.1/perf.html - Good PIG performance

Learning Pages Page 23

Hive

Friday, March 25, 2016 2:56 PM

http://sanjivblogs.blogspot.com/2015/05/10-ways-to-optimizing-hive-queries.html - Hive

https://www.linkedin.com/pulse/orc-files-storage-index-big-data-hive-mich-talebzadeh-ph-d- -> ORC file format

https://code.facebook.com/posts/229861827208629/scaling-the-facebook-data-warehouse-to-300-pb/ - ORC Performance

https://snippetessay.wordpress.com/2015/07/25/hive-optimizations-with-indexes-bloom-filters-and-statistics/ - Performance of hive

https://www.linkedin.com/pulse/hive-functions-udfudaf-udtf-examples-gaurav-singh - Hive UDF example

Learning Pages Page 24

Hbase

Friday, March 25, 2016 4:50 PM

http://phoenix.apache.org/presentations/OC-HUG-2014-10-4x3.pdf - Phoenix

https://www.mapr.com/blog/in-depth-look-hbase-architecture - MapR Hbase Architecture

Learning Pages Page 25

Misc

Sunday, March 06, 2016 1:30 PM

http://www.cs.brandeis.edu/~rshaull/cs147a-fall-2008/hadoop-troubleshooting/ - Troubleshooting

http://0x0fff.com/hadoop-mapreduce-comprehensive-description/ - MapReduce

http://0x0fff.com/spark-architecture-video/ - Spark Architecture

Learning Pages Page 26

Phoenix

Tuesday, May 17, 2016 2:36 PM

Phoenix grammar:

https://phoenix.apache.org/language/index.html#create_view

https://phoenix.apache.org/faq.html - FAQs

https://phoenix.apache.org/Phoenix-in-15-minutes-or-less.html - Phoenix in 15 mins

http://kubilaykara.blogspot.com/2015/07/query-existing-hbase-tables-with-sql.html

https://community.hortonworks.com/questions/12538/phoenix-storage-in-pig.html - Reading a Phoenix table from Pig

https://phoenix.apache.org/pig_integration.html - Pig integration

Learning Pages Page 27

Spark

Monday, May 23, 2016 3:12 PM

https://github.com/JerryLead/SparkInternals

https://github.com/JerryLead/SparkInternals/blob/master/EnglishVersion/3-JobPhysicalPlan.md

Learning Pages Page 28

Das könnte Ihnen auch gefallen

- US Internal Revenue Service: F1040sei - 1992Dokument2 SeitenUS Internal Revenue Service: F1040sei - 1992IRSNoch keine Bewertungen

- 1481546265426Dokument3 Seiten1481546265426api-370784582Noch keine Bewertungen

- H2o Portable Steam Cleaner ManualDokument2 SeitenH2o Portable Steam Cleaner ManualMnk Lte100% (1)

- Lucky Tiger Casino Card Authentication: XX XXXXDokument1 SeiteLucky Tiger Casino Card Authentication: XX XXXXบ่จัก ดอกNoch keine Bewertungen

- Exemption Certificate - SalesDokument2 SeitenExemption Certificate - SalesExecutive F&ADADUNoch keine Bewertungen

- 2016 Individual (Brading, Anthony H. & Amy N.) GovernmentDokument49 Seiten2016 Individual (Brading, Anthony H. & Amy N.) GovernmentAnonymous 9coWUONoch keine Bewertungen

- Aaron Berg w2Dokument2 SeitenAaron Berg w2kevin kuhnNoch keine Bewertungen

- Direct Deposit Enrollment Form: Account Information AmountDokument1 SeiteDirect Deposit Enrollment Form: Account Information AmountClifton WilsonNoch keine Bewertungen

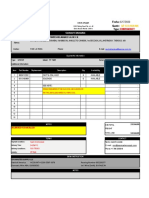

- Make Necessary Changes in Only Orange Colored Fields.: Proceed To Calculation Will Be Initially "No"Dokument36 SeitenMake Necessary Changes in Only Orange Colored Fields.: Proceed To Calculation Will Be Initially "No"xakilNoch keine Bewertungen

- Earnings StatementDokument2 SeitenEarnings StatementRielzaruxo Ka Rioelzarux Ko XNoch keine Bewertungen

- Annu Acc StatementDokument1 SeiteAnnu Acc StatementAHMAD ANTOINE DELAINENoch keine Bewertungen

- Benefits Guide Current PDFDokument32 SeitenBenefits Guide Current PDFzachNoch keine Bewertungen

- Reset Office Email Password in 3 Easy StepsDokument4 SeitenReset Office Email Password in 3 Easy StepsJojoNoch keine Bewertungen

- VA Benefits Reference GuideDokument8 SeitenVA Benefits Reference GuidegaydendNoch keine Bewertungen

- Monthly Bank Statement SummaryDokument4 SeitenMonthly Bank Statement SummaryClifton WilsonNoch keine Bewertungen

- Two Wheeler Insurance Policy DetailsDokument4 SeitenTwo Wheeler Insurance Policy DetailsShashanth Kumar (CS - OMTP)Noch keine Bewertungen

- MicroMacro Mobile Pass API GuideDokument33 SeitenMicroMacro Mobile Pass API Guideapple payNoch keine Bewertungen

- 2019 Chandler D Form 1040 Individual Tax Return - Records-ALDokument7 Seiten2019 Chandler D Form 1040 Individual Tax Return - Records-ALwhat is thisNoch keine Bewertungen

- 34 Wihh 504331 H 0714320240524151104202Dokument3 Seiten34 Wihh 504331 H 0714320240524151104202jamelmhunt22Noch keine Bewertungen

- Bank StatementDokument2 SeitenBank Statementapi-404952982Noch keine Bewertungen

- Dell RG2 01 LatitudeDokument5 SeitenDell RG2 01 LatitudeMichelle McknightNoch keine Bewertungen

- Ahtasham Ahmed Case - CompressedDokument19 SeitenAhtasham Ahmed Case - CompressedarsssyNoch keine Bewertungen

- Amanda K Scott 3535 W Cambridge AVE Fresno, CA 93722-6561Dokument6 SeitenAmanda K Scott 3535 W Cambridge AVE Fresno, CA 93722-6561Amanda Scott100% (1)

- SoapDokument88 SeitenSoapdoris marcaNoch keine Bewertungen

- Corporate Income Tax Return: Paradise Produce Dist., Inc. 59-3024048Dokument31 SeitenCorporate Income Tax Return: Paradise Produce Dist., Inc. 59-3024048kevin kuhnNoch keine Bewertungen

- Chapter 4Dokument6 SeitenChapter 4Ashanti T Swan0% (1)

- Chrome Operating SystemDokument29 SeitenChrome Operating SystemSai SruthiNoch keine Bewertungen

- PayDay Loan Terms N CDokument8 SeitenPayDay Loan Terms N CBlakeNoch keine Bewertungen

- Please To Do Not Use The Back ButtonDokument2 SeitenPlease To Do Not Use The Back ButtonDavid MillerNoch keine Bewertungen

- UFCW 1776 2012 Financials Wendell YoungDokument38 SeitenUFCW 1776 2012 Financials Wendell YoungbobguzzardiNoch keine Bewertungen

- Monetary Determination Pandemic Unemployment Assistance: Michael L PresleyDokument3 SeitenMonetary Determination Pandemic Unemployment Assistance: Michael L PresleyDylan VanslochterenNoch keine Bewertungen

- Nairn Letter and Check From School001Dokument1 SeiteNairn Letter and Check From School001Killi Leaks100% (1)

- Self Cert FormDokument2 SeitenSelf Cert Formkevin kuhnNoch keine Bewertungen

- Former Mayor Bill White's 2009 Tax ReturnDokument43 SeitenFormer Mayor Bill White's 2009 Tax ReturnTexas WatchdogNoch keine Bewertungen

- Attention:: Employer W-2 Filing Instructions and Information WWW - Socialsecurity.gov/employerDokument11 SeitenAttention:: Employer W-2 Filing Instructions and Information WWW - Socialsecurity.gov/employerHot HeartsNoch keine Bewertungen

- Transcripts 1Dokument6 SeitenTranscripts 1hojohn22003Noch keine Bewertungen

- Emergency Replacement Parts for Grove RT 760E CraneDokument1 SeiteEmergency Replacement Parts for Grove RT 760E CraneraulNoch keine Bewertungen

- EDC Profit and LossDokument7 SeitenEDC Profit and LossCody WommackNoch keine Bewertungen

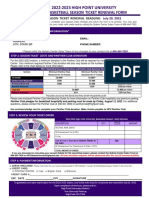

- Basketball Season Tickets 2022-2023 ProjectDokument1 SeiteBasketball Season Tickets 2022-2023 Projectapi-620442382Noch keine Bewertungen

- May 30 Provider Relief Fund FAQ RedlineDokument23 SeitenMay 30 Provider Relief Fund FAQ RedlineRachel Cohrs100% (1)

- File Your NJ Tax Return Online or by E-FileDokument68 SeitenFile Your NJ Tax Return Online or by E-FileStephen HallickNoch keine Bewertungen

- Cash FlowDokument1 SeiteCash Flowpawan_019Noch keine Bewertungen

- BMO World Elite Mastercard Benefits Guide enDokument11 SeitenBMO World Elite Mastercard Benefits Guide enTonyNoch keine Bewertungen

- Tabliga Gerwin Andres Agusti Marissa Calzado: Application For Vehicle FinancingDokument3 SeitenTabliga Gerwin Andres Agusti Marissa Calzado: Application For Vehicle FinancingJerikah Jec HernandezNoch keine Bewertungen

- Book Agadir to Rabat flight with Air ArabiaDokument2 SeitenBook Agadir to Rabat flight with Air ArabiaCubecraft GamesNoch keine Bewertungen

- INTERNATIONAL BUSINESS MACHINES CORP 10-K (Annual Reports) 2009-02-24Dokument289 SeitenINTERNATIONAL BUSINESS MACHINES CORP 10-K (Annual Reports) 2009-02-24http://secwatch.com100% (6)

- Pepco Payment Receipt 10/1/2019Dokument1 SeitePepco Payment Receipt 10/1/2019Ace MereriaNoch keine Bewertungen

- Desmand Whitson 7423 Groveoak Dr. Orlando, Florida 32810: ND NDDokument2 SeitenDesmand Whitson 7423 Groveoak Dr. Orlando, Florida 32810: ND NDRed RaptureNoch keine Bewertungen

- W2 W2taxdocument 2023Dokument3 SeitenW2 W2taxdocument 2023sywwvpdnp7Noch keine Bewertungen

- Jones: AlayaDokument6 SeitenJones: AlayaAlaya JonesNoch keine Bewertungen

- Navig8 Almandine - Inv No 2019-002 - Santa Barbara Invoice + Voucher PDFDokument2 SeitenNavig8 Almandine - Inv No 2019-002 - Santa Barbara Invoice + Voucher PDFAnonymous MoQ28DEBPNoch keine Bewertungen

- W4 Miguel MarcanoDokument4 SeitenW4 Miguel MarcanoNatasha GuzmanNoch keine Bewertungen

- zq610-zq620 User ManualDokument125 Seitenzq610-zq620 User ManualPiedmontNoch keine Bewertungen

- Confirmation Number and Date for Illinois Business RegistrationDokument7 SeitenConfirmation Number and Date for Illinois Business RegistrationMohammed HussainNoch keine Bewertungen

- FDA II LLC Lease AgreementDokument40 SeitenFDA II LLC Lease AgreementJulian CuellarNoch keine Bewertungen

- Payments 2019 RD - PA.SVDokument1.658 SeitenPayments 2019 RD - PA.SVMarco GuardadoNoch keine Bewertungen

- Green Knight Economic Development Corporation IRS Form 990 For FY2015, Showing $20K in Community Grants On $1.8m of RevenueDokument27 SeitenGreen Knight Economic Development Corporation IRS Form 990 For FY2015, Showing $20K in Community Grants On $1.8m of RevenueDickNoch keine Bewertungen

- NCJC 561348186 - 200712 - 990Dokument28 SeitenNCJC 561348186 - 200712 - 990A.P. DillonNoch keine Bewertungen

- Take notes anywhere with OneNoteDokument5 SeitenTake notes anywhere with OneNoteDarshan RathodNoch keine Bewertungen

- SDSA56 Installation GuideDokument11 SeitenSDSA56 Installation Guidefil453Noch keine Bewertungen

- 9 Module9 RPM Package ManagementDokument2 Seiten9 Module9 RPM Package ManagementpbenNoch keine Bewertungen

- Scan Doc by CamScannerDokument1 SeiteScan Doc by CamScannerpbenNoch keine Bewertungen

- Nagios 3Dokument330 SeitenNagios 3venukumar.L100% (4)

- Sqoop Interview QuestionsDokument5 SeitenSqoop Interview QuestionsAntonioNoch keine Bewertungen

- Jenkins TutorialDokument137 SeitenJenkins TutorialdasreNoch keine Bewertungen

- Panasonic XDP Flex ButtonsDokument3 SeitenPanasonic XDP Flex ButtonsBrana MitrovićNoch keine Bewertungen

- Realloc in CDokument2 SeitenRealloc in CKrishna ShankarNoch keine Bewertungen

- C++ Copy Constructors ExplainedDokument2 SeitenC++ Copy Constructors ExplainedSanjay MunjalNoch keine Bewertungen

- Create App With Your Own SkillsDokument17 SeitenCreate App With Your Own Skillsnaresh kolipakaNoch keine Bewertungen

- Computer NetworksDokument47 SeitenComputer NetworksJeena Mol AbrahamNoch keine Bewertungen

- Swot AnalysisDokument4 SeitenSwot AnalysisAnonymous Cy9amWA9Noch keine Bewertungen

- Final Exam Review Answers Database Design Sections 16 - 18 Database Programming Section 1Dokument8 SeitenFinal Exam Review Answers Database Design Sections 16 - 18 Database Programming Section 1Grigoras AlexandruNoch keine Bewertungen

- Explains-Complete Process of Web E - Way BillDokument9 SeitenExplains-Complete Process of Web E - Way BillNagaNoch keine Bewertungen

- 01 Smart Forms343411328079517Dokument117 Seiten01 Smart Forms343411328079517Kishore ReddyNoch keine Bewertungen

- Registered variables and data typesDokument17 SeitenRegistered variables and data typestrivediurvishNoch keine Bewertungen

- Open Source Linux Apache Mysql PHPDokument27 SeitenOpen Source Linux Apache Mysql PHPcharuvimal786Noch keine Bewertungen

- Alberta HighSchool 2007 Round 1Dokument2 SeitenAlberta HighSchool 2007 Round 1Weerasak Boonwuttiwong100% (1)

- Master BB 2017 VersionDokument29 SeitenMaster BB 2017 VersionSN SharmaNoch keine Bewertungen

- Newton Raphson MethodDokument36 SeitenNewton Raphson MethodAkansha YadavNoch keine Bewertungen

- Practical Programming 09Dokument257 SeitenPractical Programming 09Mayank DhimanNoch keine Bewertungen

- WellCost Data SheetDokument4 SeitenWellCost Data SheetAmirhosseinNoch keine Bewertungen

- Hanumanth Hawaldar: Managing Technology Business DevelopmentDokument6 SeitenHanumanth Hawaldar: Managing Technology Business DevelopmentSachin KaushikNoch keine Bewertungen

- Introduction To PayPal For C# - ASPDokument15 SeitenIntroduction To PayPal For C# - ASPaspintegrationNoch keine Bewertungen

- Get The Most Out of Your Storage With The Dell EMC Unity XT 880F All-Flash Array - InfographicDokument1 SeiteGet The Most Out of Your Storage With The Dell EMC Unity XT 880F All-Flash Array - InfographicPrincipled TechnologiesNoch keine Bewertungen

- ADFS Design and Deployment GuideDokument277 SeitenADFS Design and Deployment GuideKaka SahibNoch keine Bewertungen

- Vindaloo VoIP Announced To Offer VoIP Development in KamailioDokument2 SeitenVindaloo VoIP Announced To Offer VoIP Development in KamailioSandip PatelNoch keine Bewertungen

- EE323 Assignment 2 SolutionDokument5 SeitenEE323 Assignment 2 SolutionMuhammadAbdullahNoch keine Bewertungen

- Labureader Plus 2: Semi-Automated Urine Chemistry AnalyzerDokument2 SeitenLabureader Plus 2: Semi-Automated Urine Chemistry AnalyzerCARLOSNoch keine Bewertungen

- C# - Loops: Loop Control StatementsDokument2 SeitenC# - Loops: Loop Control Statementspbc3199Noch keine Bewertungen

- Addendum Proyek Konstruksi Jalan Di Kota Palangka: Analisis Faktor Penyebab, Akibat, Dan Proses Contract RayaDokument13 SeitenAddendum Proyek Konstruksi Jalan Di Kota Palangka: Analisis Faktor Penyebab, Akibat, Dan Proses Contract RayaFahmi AnwarNoch keine Bewertungen

- Oracle Taleo Recruiting: Requisition Management Training Guide - Section 2Dokument50 SeitenOracle Taleo Recruiting: Requisition Management Training Guide - Section 2krishnaNoch keine Bewertungen

- MP 8253,54 Timer SlidesDokument30 SeitenMP 8253,54 Timer SlidesAnuj GuptaNoch keine Bewertungen

- TuxGuitar Tutorial PDFDokument22 SeitenTuxGuitar Tutorial PDFWilfred DoelmanNoch keine Bewertungen

- Calculator TricksDokument13 SeitenCalculator TricksChandra Shekhar Shastri0% (1)

- Runway Friction Measurement Techniques and VariablesDokument15 SeitenRunway Friction Measurement Techniques and VariablesjaffnaNoch keine Bewertungen