
International Journal of Engineering Trends and Applications (IJETA) Volume 4 Issue 3, May-Jun 2017

RESEARCH ARTICLE OPEN ACCESS

Web Redundancy Data Handling Using IR and Data Mining Algorithm

SekharBabu.Boddu [1], Prof.RajasekharaRao.Kurra [2]

Research Scholar [2], Dept. CSE, SCSVMV University, Kanchipuram, Chennai, India

Asst. Professor [1], Dept. CSE, KLUniversity

Professor [2] & Director, Dept. CSE, Usharama Engg College, AP, India

ABSTRACT

The aim of web mining is to discover and retrieve useful and interesting patterns from a large dataset, and there has been huge interest in it. Web data contains different kinds of information, including web document data, web structure data, web log data, and user profile data. Information Retrieval (IR) is the area concerned with retrieving information about a subject from a collection of data objects. IR is different from Data Retrieval, which in the context of documents consists mainly of determining which documents of the collection contain the keywords of a user query; IR deals with finding the information needed by the user. The WWW has distinctive properties: it is extremely complex, massive in size, and highly dynamic in nature. Owing to this unique nature, the ability to search and retrieve information from the Web efficiently and effectively is a challenging task, especially when the goal is to realize its full potential. With powerful workstations and parallel processing technology, efficiency is not a bottleneck.

Keywords:- IR, URL, HTTP, web content

I. INTRODUCTION

Duplicate content is content that appears on the Internet in more than one place. A "place" is defined as a location with a unique website address (URL), so if the same content appears at more than one web address, you have duplicate content. While not technically a penalty, duplicate content can still sometimes impact search engine rankings. When there are multiple pieces of, as Google calls it, "appreciably similar" content in more than one location on the Internet, it can be difficult for search engines to decide which version is more relevant to a given search query. Duplicate content can present three main issues for search engines:

1. They don't know which version(s) to include in or exclude from their indices.
2. They don't know whether to direct the link metrics (trust, authority, anchor text, link equity, etc.) to one page, or keep them separated between multiple versions.
3. They don't know which version(s) to rank for query results.

When duplicate content is present, site owners can suffer rankings and traffic losses. These losses often stem from two main problems:

1. To provide the best search experience, search engines will rarely show multiple versions of the same content, and thus are forced to choose which version is most likely to be the best result. This dilutes the visibility of each of the duplicates.
2. Link equity can be further diluted because other sites have to choose between the duplicates as well. Instead of all inbound links pointing to one piece of content, they link to multiple pieces, spreading the link equity among the duplicates. Because inbound links are a ranking factor, this can then impact the search visibility of a piece of content.

In the vast majority of cases, website owners don't intentionally create duplicate content. But that doesn't mean it's not out there. In fact, by some estimates, up to 29% of the web is actually duplicate content. Let's take a look at some of the most common ways duplicate content is unintentionally created:

1. URL variations

URL parameters, such as click tracking and some analytics code, can cause duplicate content issues. This can be a problem caused not only by the parameters themselves, but also by the order in which those parameters appear in the URL itself. For example:

www.widgets.com/blue-widgets?color=blue is a duplicate of www.widgets.com/blue-widgets

www.widgets.com/blue-widgets?color=blue&cat=3 is a duplicate of www.widgets.com/blue-widgets?cat=3&color=blue

Similarly, session IDs are a common duplicate content creator. This occurs when each user that visits a website is assigned a different session ID that is stored in the URL. Printer-friendly versions of content can also cause duplicate content issues when multiple versions of the pages get

ISSN: 2393-9516 www.ijetajournal.org Page 39


indexed. One lesson here is that, when possible, it's often beneficial to avoid adding URL parameters or alternate versions of URLs (the information those contain can usually be passed through scripts).

2. HTTP vs. HTTPS or WWW vs. non-WWW pages

If your site has separate versions at "www.kluniversity.in" and "kluniversity.in" (with and without the "www" prefix), and the same content lives at both versions, you've effectively created duplicates of each of those pages. The same applies to sites that maintain versions at both http:// and https://. If both versions of a page are live and visible to search engines, you may run into a duplicate content issue.

3. Scraped or copied content

Content includes not only blog posts or editorial content, but also product information pages. Scrapers republishing your blog content on their own sites may be a more familiar source of duplicate content, but there's a common problem for e-commerce sites as well: product information. If many different websites sell the same items, and they all use the manufacturer's descriptions of those items, identical content winds up in multiple locations across the web.

There are some steps you can take to proactively address duplicate content issues, and ensure that visitors see the content you want them to.

Use 301s: If you've restructured your site, use 301 redirects ("RedirectPermanent") in your .htaccess file to smartly redirect users, Googlebot, and other spiders. (In Apache, you can do this with an .htaccess file; in IIS, you can do this through the administrative console.)

Be consistent: Try to keep your internal linking consistent. For example, don't link to http://www.example.com/page/ and http://www.example.com/page and http://www.example.com/page/index.htm.

Use top-level domains: To help search engines serve the most appropriate version of a document, use top-level domains whenever possible to handle country-specific content. A search engine is more likely to know that http://www.example.de contains Germany-focused content, for instance, than http://www.example.com/de or http://de.example.com.

Syndicate carefully: If you syndicate your content on other sites, Google will always show the version it thinks is most appropriate for users in each given search, which may or may not be the version you'd prefer. However, it is helpful to ensure that each site on which your content is syndicated includes a link back to your original article. You can also ask those who use your syndicated material to use the noindex meta tag to prevent search engines from indexing their version of the content.

Use Search Console to tell Google how you prefer your site to be indexed: You can tell Google your preferred domain (for example, http://www.example.com or http://example.com).

Minimize boilerplate repetition: For instance, instead of including lengthy copyright text on the bottom of every page, include a very brief summary and then link to a page with more details. In addition, you can use the Parameter Handling tool to specify how you would like Google to treat URL parameters.

Avoid publishing stubs: Users don't like seeing "empty" pages, so avoid placeholders where possible. For example, don't publish pages for which you don't yet have real content. If you do create placeholder pages, use the noindex meta tag to block these pages from being indexed.

Understand your content management system: Make sure you're familiar with how content is displayed on your web site. Blogs, forums, and related systems often show the same content in multiple formats. For example, a blog entry may appear on the home page of a blog, in an archive page, and in a page of other entries with the same label.

Minimize similar content: If you have many pages that are similar, consider expanding each page or consolidating the pages into one. For instance, if you have a travel site with separate pages for two cities, but the same information on both pages, you could either merge the pages into one page about both cities or you could expand each page to contain unique content about each city.

Google does not recommend blocking crawler access to duplicate content on your website, whether with a robots.txt file or other methods. If search engines can't crawl pages with duplicate content, they can't automatically detect that these URLs point to the same content and will therefore effectively have to treat them as separate, unique pages. A better solution is to allow search engines to crawl these URLs, but mark them as duplicates by using the rel="canonical" link element, the URL parameter handling tool, or 301 redirects. In cases where duplicate content leads to Google crawling too much of your website, you can also adjust the crawl rate setting in Search Console.

Duplicate content on a site is not grounds for action on that site unless it appears that the intent of the duplicate content is to


be deceptive and manipulate search engine results. If your site suffers from duplicate content issues and you don't follow the advice listed above, Google generally does a good job of choosing a version of the content to show in its search results. However, if a review indicated that you engaged in deceptive practices and your site has been removed from the search results, review your site carefully and consult the Webmaster Guidelines for more information. Once you've made your changes and are confident that your site no longer violates the guidelines, submit your site for reconsideration.

The purpose of a web crawler used by a search engine is to provide local access to the most recent versions of possibly all web pages. This means that the Web should be crawled regularly and the collection of pages updated accordingly. Given the huge size of the text repository, the need for regular updates poses another challenge for web crawler designers. The problem is the high cost of updating indices. A common solution is to append the new versions of web pages without deleting the old ones. This increases the storage requirements but also allows the crawler repository to be used for archival purposes. In fact, there are crawlers that are used just for the purpose of archiving the web. Crawling of the Web also involves interaction with web page developers. As Brin and Page mention in a paper about their search engine Google, they were getting e-mail from people who noticed that somebody (or something) visited their pages. To facilitate this interaction there are standards that allow web servers and crawlers to exchange information. One of them is the robot exclusion protocol. A file named robots.txt that lists all path prefixes of pages that crawlers should not fetch is placed in the HTTP root directory of the server and read by the crawlers before crawling of the server tree. Usually, crawling precedes the phase of web page evaluation and ranking, as the latter comes after indexing and retrieval of web documents. However, web pages can be evaluated while being crawled. Thus, we get some type of enhanced crawling that uses page ranking methods to focus on interesting parts of the Web and avoid fetching irrelevant or uninteresting pages.

The web user information needs are represented by keyword queries, and thus document relevance is defined in terms of how close a query is to documents found by the search engine. Because web search queries are usually incomplete and ambiguous, many of the documents returned may not be relevant to the query. However, once a relevant document is found, a larger collection of possibly relevant documents may be found by retrieving documents similar to the relevant document. This process, called similarity search, is implemented in some search engines (e.g., Google) as an option to find pages similar or related to a given page. The intuition behind similarity search is the cluster hypothesis in IR, stating that documents similar to relevant documents are also likely to be relevant. In this section we discuss mostly approaches to similarity search based on the content of the web documents. Document similarity can also be considered in the context of the web link structure.

The vector space model defines documents as vectors (or points) in a multidimensional Euclidean space where the axes (dimensions) are represented by terms. Depending on the type of vector components (coordinates), there are three basic versions of this representation: Boolean, term frequency (TF), and term frequency-inverse document frequency (TFIDF). The Boolean representation is simple, easy to compute, and works well for document classification and clustering. However, it is not suitable for keyword search because it does not allow document ranking. Therefore, we focus here on the TFIDF representation.

In the term frequency (TF) approach, the coordinates of the document vector $\vec{d}_j$ are represented as a function of the term counts, usually normalized with the document length. For each term $t_i$ and each document $d_j$, the $TF(t_i, d_j)$ measure is computed. Let $n_{ij}$ be the number of occurrences of term $t_i$ in document $d_j$ and $m$ the number of terms. The measure can be computed in different ways; for example:

Using the sum of term counts over all terms (the total number of terms in the document):

$$TF(t_i, d_j) = \begin{cases} 0 & \text{if } n_{ij} = 0 \\ \dfrac{n_{ij}}{\sum_{k=1}^{m} n_{kj}} & \text{if } n_{ij} > 0 \end{cases}$$

Using the maximum of the term count over all terms in the document:

$$TF(t_i, d_j) = \begin{cases} 0 & \text{if } n_{ij} = 0 \\ \dfrac{n_{ij}}{\max_{k} n_{kj}} & \text{if } n_{ij} > 0 \end{cases}$$

In the Boolean and TF representations, each coordinate of a document vector is computed locally, taking into account only the particular term and document. This means that all axes are considered to be equally important. However, terms that occur frequently in documents may not be related to the content of the document. This is the case with the term "program" in our department example. Too many vectors have 1s (in the Boolean case) or large values (in TF) along this axis. This in turn increases the size of the resulting set and makes document ranking difficult if this term is used in the query. The same effect is caused by stop words such as a, an, the, on, in, and at, and is one reason to eliminate them from the corpus.

The basic idea of the inverse document frequency (IDF) approach is to scale down the coordinates for some axes, corresponding to terms that occur in many documents. For each term $t_i$ the IDF measure is computed from the inverse of the proportion of documents where $t_i$ occurs with respect to the total number of documents in the collection. Let $D = \bigcup_{j=1}^{n} d_j$ be the


document collection and $D_{t_i}$ the set of documents where term $t_i$ occurs. That is, $D_{t_i} = \{d_j \mid n_{ij} > 0\}$. As with TF, there are a variety of ways to compute IDF; some take the simple fraction $|D|/|D_{t_i}|$, and others take its logarithm:

$$IDF(t_i) = \log\frac{|D|}{|D_{t_i}|}$$

Cosine Similarity

The query-to-document similarity which we have explored so far is based on the vector space model. Both queries and documents are represented by TFIDF vectors, and similarity is computed using the metric properties of vector space. It is also straightforward to compute similarity between documents. Moreover, we may expect that document-to-document similarity would be more accurate than query-to-document similarity because queries are usually too short, so their vectors are extremely sparse in the highly dimensional vector space. In similarity search we are not concerned with a small query vector, so we are free to use more (or all) dimensions of the vector space to represent our documents. In this respect it will be interesting to investigate how the dimensionality of vector space affects the similarity search results. Generally, several options can be explored:

1. Using all terms from the corpus. This is the easiest option but may cause problems if the corpus is too large (such as the document repository of a web search engine).
2. Selecting terms with high TF scores (usually based on the entire corpus). This approach prefers terms that occur frequently in many documents and thus makes documents look more similar. However, this similarity is not indicative of document content.
3. Selecting terms with high IDF scores. This approach prefers more document-specific terms and thus better differentiates documents in vector space. However, it results in extremely sparse document vectors, so that similarity search is too restricted to closely related documents.
4. Combining the TF and IDF criteria. For example, a preference may be given to terms that maximize the product of their TF (on the entire corpus) and IDF scores. As this is the same type of measure used in the vector coordinates (the difference is that the TF score in the vector is taken on the particular document), the vectors will be better populated with nonzero coordinates.

II. JACCARD SIMILARITY

There is an alternative to cosine similarity, which appears to be more popular in the context of similarity search (we discuss the reason for this later). It takes all terms that occur in the documents but uses the simpler Boolean document representation. The idea is to consider only the nonzero coordinates (i.e., those that are 1) of the Boolean vectors. The approach uses the Jaccard coefficient, which is generally defined (not only for Boolean vectors) as the proportion of coordinates that are nonzero in both vectors among the coordinates that are nonzero in at least one of them:

$$sim(d_1, d_2) = \frac{|T(d_1) \cap T(d_2)|}{|T(d_1) \cup T(d_2)|}$$

where $T(d)$ is the set of terms that occur in document $d$.

Two simple heuristics may drastically reduce the number of candidate pairs of documents to be compared:

1. Frequent terms that occur in many documents (say, more than 50% of the collection) are eliminated because they cause even loosely related documents to look similar.
2. Only documents that share at least one term are used to form a pair.

The Jaccard coefficient on two sets can be estimated by representing them as smaller sets called sketches, which are then used instead of the original documents to compute the Jaccard coefficient. Sketches are created by choosing a random permutation, which is used to generate a sample for each document. Most important, sketches have a fixed size for all documents. In a large document collection each document can be represented by its sketch, thus substantially reducing the storage requirements as well as the running time for precomputing similarity between document pairs. The method was evaluated by large-scale experiments with clustering of all documents on the Web [8]. Used originally in a clustering framework, the method also suits the similarity search setting very well.

So far we have discussed two approaches to document modelling: the TFIDF vector and set representations. Both approaches try to capture document semantics using the terms that documents contain as descriptive features and ignoring any information related to term positions, ordering, or structure. The only relevant information used for this purpose is whether or not a particular term occurs in the documents (the set-of-words approach) and the frequency of its occurrence (the bag-of-words approach). For example, the documents "Mahesh loves Jhanaki" and "Jhanaki loves Mahesh" are identical, because they include the same words with the same counts, although they have different meanings. The idea behind this representation is that content is identified with topic or area but not with meaning (that is why these approaches are also called syntactic). Thus, we can say that the topic of both documents is people and love, which is the meaning of the terms that occur in the documents.

There is a technique that extends the set-of-words approach to sequences of words. The idea is to consider the document as a sequence of words (terms) and extract from this sequence short subsequences of fixed length called n-grams or shingles. The document is then represented as a set of such n-grams. For example, the document "Mahesh loves Jhanaki" can be represented by the set of 2-grams {[Mahesh, loves], [loves, Jhanaki]}


and Jhanaki loves Mahesh by {[Jhanaki, loves], Existing system precision average

[loves, Mahesh]}. Now these four 2-grams are the is=0.35

features that represent our documents. In this

representation the documents do not have any

overlap. We have already mentioned n-grams as a

technique for approximate string matching but they

are also popular in many other areas where the task

is detecting sub sequences such as spelling

correction, speech recognition, and character

recognition. Shingled document representation can

be used for estimating document resemblance. Let

us denote the set of shingles of size w contained in

document d as S(d,w). That is, the set S(d,w)

contains all w-grams obtained from document d.

Note that T (d) = S(d,1), because terms are in fact

1-grams. Also, S(d,|d|) = d (i.e., the document itself

is aw-gram, wherevw is equal to the size of the

document). The resemblance between documents

d1 and d2 is defined by the Jaccard coefficient

computed with shingled documents: Although the

number of shingles needed to represent each

document is roughly the same as the number of

terms needed for this purpose, the storage

requirements for shingled document representation Proposed IR System precsisoin average is=0.59

increase substantially. A straightforward

representation of w-word shingles as integers with

a fixed number of bits results in a w-fold increase

in storage. we can also eliminate those that are too

similar in terms of resemblance [with large values

of rw(d1,d2)]. In this way, duplicates or near

duplicates can be eliminated from the similarity

search results.

rw(d1,d2) = |S(d1,w) S(d2,w)| |S(d1,w)

S(d2,w)|

re(d1,d2) = |L(d) L(d2)| |L(d1) L(d2)|

L(d) is a smaller set of shingles called a sketch of

document d.

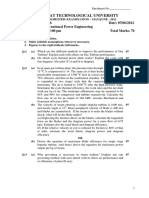

Result:
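As an illustration of the shingle-based resemblance $r_w$ and the sketch-based estimate $r_e$ described in Section II, the following is a minimal Python sketch, not the authors' implementation. The function names are hypothetical, documents are assumed to be whitespace-tokenized strings, and a SHA-1 hash ordering is assumed as a stand-in for the random permutation used to build sketches.

```python
import hashlib


def shingles(text, w):
    """Return S(d, w): the set of w-word shingles (as tuples) of a document."""
    words = text.split()
    return {tuple(words[i:i + w]) for i in range(len(words) - w + 1)}


def jaccard(a, b):
    """Jaccard coefficient |A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def resemblance(d1, d2, w):
    """r_w(d1, d2): Jaccard coefficient of the two shingle sets."""
    return jaccard(shingles(d1, w), shingles(d2, w))


def sketch(shingle_set, size):
    """L(d): the `size` shingles with the smallest hash values.

    Assumption: ordering by SHA-1 digest plays the role of the random
    permutation; every document is sampled with the same ordering.
    """
    def digest(shingle):
        return hashlib.sha1(" ".join(shingle).encode("utf-8")).hexdigest()

    return set(sorted(shingle_set, key=digest)[:size])


def estimated_resemblance(d1, d2, w, size):
    """r_e(d1, d2): Jaccard coefficient computed on fixed-size sketches."""
    return jaccard(sketch(shingles(d1, w), size),
                   sketch(shingles(d2, w), size))


if __name__ == "__main__":
    d1 = "Mahesh loves Jhanaki"
    d2 = "Jhanaki loves Mahesh"
    print(resemblance(d1, d2, 1))  # same terms, so the term sets coincide
    print(resemblance(d1, d2, 2))  # no 2-gram in common
```

For the two example documents from the text, `resemblance(d1, d2, 1)` is 1.0 and `resemblance(d1, d2, 2)` is 0.0, which matches the observation that the 2-gram representations do not overlap. A near-duplicate filter could then drop any result whose resemblance to an already returned document exceeds a chosen threshold; the threshold itself is a tuning parameter that the paper does not specify.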

III. CONCLUSION

Web data contains redundant data. By using IR and RDF techniques, we can handle redundant web data with indexed keywords and query optimization concepts through a search engine interface. After applying TF and IDF weighting, cosine and Jaccard similarities, and document resemblance, the average precision improved over the existing system (from 0.35 to 0.59), which indicates that similar data was handled effectively.
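The TF and IDF weighting and the cosine similarity referred to above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the system evaluated in the paper: documents are assumed to be pre-tokenized lists of terms, TF is normalized by document length, IDF is taken as $\log(|D|/|D_{t_i}|)$, and all function names are hypothetical.

```python
import math
from collections import Counter


def tf(doc_tokens):
    """TF(t, d): term count divided by the total number of terms in d."""
    counts = Counter(doc_tokens)
    total = sum(counts.values())
    return {term: n / total for term, n in counts.items()}


def idf(corpus_tokens):
    """IDF(t) = log(|D| / |D_t|), D_t being the documents that contain t."""
    n_docs = len(corpus_tokens)
    doc_freq = Counter(term for doc in corpus_tokens for term in set(doc))
    return {term: math.log(n_docs / n) for term, n in doc_freq.items()}


def tfidf_vectors(corpus_tokens):
    """One sparse TFIDF vector (dict term -> weight) per document."""
    weights = idf(corpus_tokens)
    return [{term: value * weights[term] for term, value in tf(doc).items()}
            for doc in corpus_tokens]


def cosine(u, v):
    """Cosine of the angle between two sparse vectors; 0.0 for a zero vector."""
    dot = sum(u[term] * v[term] for term in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    corpus = [["web", "mining", "web"], ["web", "search"], ["data", "mining"]]
    vectors = tfidf_vectors(corpus)
    print(cosine(vectors[0], vectors[1]))
```

Note that a term occurring in every document receives an IDF of zero under this definition and therefore drops out of the similarity, which is the scaling-down effect that the IDF approach is meant to achieve for very frequent terms.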


REFERENCES

[1] Hassan F. Eldirdiery, A. H. Ahmed, "Detecting and Removing Noisy Data on Web Document using Text Density Approach," International Journal of Computer Applications (0975-8887), Volume 112, No. 5, February 2015.

[2] Shalaka B. Patil, Rushali A. Deshmukh, "Enhancing Content Extraction from Multiple Web Pages by Noise Reduction," International Journal of Scientific & Engineering Research, Volume 6, Issue 7, July 2015, ISSN 2229-5518.

[3] Rajni Sharma, Max Bhatia, "Eliminating the Noise from Web Pages using Page Replacement Algorithm," International Journal of Computer Science and Information Technologies, Vol. 5 (3), 2014, pp. 3066-3068.

[4] Hui Xiong, Gaurav Pandey, Michael Steinbach, and Vipin Kumar, "Enhancing Data Analysis with Noise Removal," IEEE Transactions on Knowledge and Data Engineering.

[5] Rekha Garhwal, "Improving Privacy in Web Mining by Eliminating Noisy Data & Sessionization," International Journal of Latest Trends in Engineering and Technology (IJLTET).

[6] Erdinc Uzun et al., "A Hybrid Approach for Extracting Informative Content from Web Pages," Information Processing and Management: an International Journal, Volume 49, Issue 4, July 2013, pp. 928-944.

[7] Shine N. Das et al., "Eliminating Noisy Information in Web Pages using Featured DOM Tree," International Journal of Applied Information Systems (IJAIS), ISSN 2249-0868, Foundation of Computer Science FCS, New York, USA, Volume 2, No. 2, May 2012.

[8] Xin Qi and JianPeng Sun, "Eliminating Noisy Information in Webpage through Heuristic Rules," 2011 International Conference on Computer Science and Information Technology (ICCSIT 2011).

[9] Thanda Htwe, "Cleaning Various Noise Patterns in Web Pages for Web Data Extraction," International Journal of Network and Mobile Technologies, ISSN 1832-6758 Electronic Version, Vol. 1, Issue 2, November 2010.

[10] Byeong Ho Kang and Yang Sok Kim, "Noise Elimination from the Web Documents by Using URL Paths and Information Redundancy."

[11] Thanda Htwe, Nan Saing Moon Kham, "Extracting Data Region in Web Page by Removing Noise using DOM and Neural Network," 2011 3rd International Conference on Information and Financial Engineering.

[12] Tieli Sun, Zhiying Li, Yanji Liu, Zhenghong Liu, "Algorithm Research for the Noise of Information Extraction Based Vision and DOM Tree," International Symposium on Intelligent Ubiquitous Computing and Education, pp. 81-84, May 2009.

[13] Jinbeom Kang, Joongmin Choi, "Block Classification of a Web Page by Using a Combination of Multiple Classifiers," Fourth International Conference on Networked Computing and Advanced Information Management, pp. 290-295, September 2008.

[14] Lan Yi, "Eliminating Noisy Information in Web Pages for Data Mining," 2003.

[15] Bassma S. Alsulami, Maysoon F. Abulkhair, and Fathy E. Eassa, "Near Duplicate Document Detection Survey," International Journal of Computer Science & Communication Networks, Vol. 2, No. 2, pp. 147-151, 2011.

[16] Huda Yasin and Mohsin Mohammad Yasin, "Automated Multiple Related Documents Summarization via Jaccard's Coefficient," International Journal of Computer Applications, Vol. 13, No. 3, pp. 12-15, 2011.

[17] Syed Mudhasir, Y., Deepika, J., Sendhilkumar, S., Mahalakshmi, G. S., "Near-Duplicates Detection and Elimination Based on Web Provenance for Effective Web Search," International Journal on Internet and Distributed Computing Systems (IJIDCS), Vol. 1, No. 1-5, 2011.

[18] Kanhaiya Lal and N. C. Mahanti, "A Novel Data Mining Algorithm for Semantic Web Based Data Cloud," International Journal of Computer Science and Security (IJCSS), Volume 4, Issue 2, pp. 160-175, 2010.

[19] Ranjna Gupta, Neelam Duhan, Sharma, A. K., and Neha Aggarwal, "Query Based Duplicate Data Detection on WWW," International Journal on Computer Science and Engineering, Vol. 02, No. 04, pp. 1395-1400, 2010.

[20] Alpuente, M. and Romero, D., "A Tool for Computing the Visual Similarity of Web Pages," Applications and the Internet (SAINT), pp. 45-51, 2010.

[21] PrasannaKumar, J. and Govindarajulu, P., "Duplicate and Near Duplicate Documents Detection: A Review," European Journal of Scientific Research, Vol. 32, No. 4, pp. 514-527, 2009.

[22] Poonkuzhali, G., Thiagarajan, G., and Sarukesi, K., "Elimination of Redundant Links in Web Pages - Mathematical Approach," World Academy of Science, Engineering and Technology, No. 52, p. 562, 2009.


- Default Implementations or State. Java Collections Offer Good Examples of This (MapDokument9 SeitenDefault Implementations or State. Java Collections Offer Good Examples of This (MapSrinivasa Reddy KarriNoch keine Bewertungen

- GAM Authoritative Sources in A Hyperlinked Environment. Journal of The ACMDokument29 SeitenGAM Authoritative Sources in A Hyperlinked Environment. Journal of The ACMlakshmi.vnNoch keine Bewertungen

- Web Crawler Research PaperDokument6 SeitenWeb Crawler Research Paperfvf8zrn0100% (1)

- Unit 7: Web Mining and Text MiningDokument13 SeitenUnit 7: Web Mining and Text MiningAshitosh GhatuleNoch keine Bewertungen

- 5.web Crawler WriteupDokument7 Seiten5.web Crawler WriteupPratik BNoch keine Bewertungen

- Google ScholarlyDokument8 SeitenGoogle ScholarlyHusseinZahraNoch keine Bewertungen

- A Two Stage Crawler On Web Search Using Site Ranker For Adaptive LearningDokument4 SeitenA Two Stage Crawler On Web Search Using Site Ranker For Adaptive LearningKumarecitNoch keine Bewertungen

- Explores The Ways of Usage of Web Crawler in Mobile SystemsDokument5 SeitenExplores The Ways of Usage of Web Crawler in Mobile SystemsInternational Journal of Application or Innovation in Engineering & ManagementNoch keine Bewertungen

- Web Crawler Assisted Web Page Cleaning For Web Data MiningDokument75 SeitenWeb Crawler Assisted Web Page Cleaning For Web Data Miningtheviper11Noch keine Bewertungen

- DataminingDokument21 SeitenDatamining21BCS119 S. DeepakNoch keine Bewertungen

- A Keyword Focused Web Crawler Using Domain Engineering and OntologyDokument3 SeitenA Keyword Focused Web Crawler Using Domain Engineering and OntologyGhiffari AgsaryaNoch keine Bewertungen

- Semantic Web Unit - 1 & 2Dokument16 SeitenSemantic Web Unit - 1 & 2pavanpk0812Noch keine Bewertungen

- Literature Review-2Dokument6 SeitenLiterature Review-2salamudeen M SNoch keine Bewertungen

- Deep Crawling of Web Sites Using Frontier Technique: Samantula HemalathaDokument11 SeitenDeep Crawling of Web Sites Using Frontier Technique: Samantula HemalathaArnav GudduNoch keine Bewertungen

- Site ScraperDokument10 SeitenSite ScraperpantelisNoch keine Bewertungen

- Lecture 28: Web Security: Cross-Site Scripting and Other Browser-Side ExploitsDokument48 SeitenLecture 28: Web Security: Cross-Site Scripting and Other Browser-Side Exploitsabdel_lakNoch keine Bewertungen

- Unit 7: Web Mining and Text MiningDokument13 SeitenUnit 7: Web Mining and Text MiningAshitosh GhatuleNoch keine Bewertungen

- Web Search EngineDokument26 SeitenWeb Search Enginepradhanritesh6Noch keine Bewertungen

- CALA An Unsupervised URL-based Web Page Classification SystemDokument13 SeitenCALA An Unsupervised URL-based Web Page Classification Systemsojovey836Noch keine Bewertungen

- Searching For Hidden-Web Databases: Luciano Barbosa Lab@sci - Utah.edu Juliana Freire Juliana@cs - Utah.eduDokument6 SeitenSearching For Hidden-Web Databases: Luciano Barbosa Lab@sci - Utah.edu Juliana Freire Juliana@cs - Utah.edurafael100@gmailNoch keine Bewertungen

- Conclusion For SrsDokument5 SeitenConclusion For SrsLalit KumarNoch keine Bewertungen

- Online Banking Loan Services: International Journal of Application or Innovation in Engineering & Management (IJAIEM)Dokument4 SeitenOnline Banking Loan Services: International Journal of Application or Innovation in Engineering & Management (IJAIEM)International Journal of Application or Innovation in Engineering & ManagementNoch keine Bewertungen

- 4-11-2017 Lect I Web Page Designing (Refersher Course)Dokument74 Seiten4-11-2017 Lect I Web Page Designing (Refersher Course)shailesh shahNoch keine Bewertungen

- Lab Manual: Web TechnologyDokument39 SeitenLab Manual: Web TechnologySalah GharbiNoch keine Bewertungen

- How Internet Search Engines WorkDokument6 SeitenHow Internet Search Engines WorklijodevasiaNoch keine Bewertungen

- Web Search Engines: Part 1Dokument6 SeitenWeb Search Engines: Part 1Pratik VanNoch keine Bewertungen
