Hypervisor- vs. Container-based Virtualization

Michael Eder
Advisor: Holger Kinkelin
Seminar Future Internet WS2015/16
Lehrstuhl Netzarchitekturen und Netzdienste
Fakultät für Informatik, Technische Universität München
Email: edermi@in.tum.de

ABSTRACT

For a long time, the term virtualization implied hypervisor-based virtualization. In the past few years, however, container-based virtualization has matured, and Docker in particular has gained a lot of attention. Hypervisor-based virtualization provides strong isolation of a complete operating system, whereas container-based virtualization strives to isolate processes from other processes at little resource cost. This paper differentiates hypervisor-based and container-based virtualization and describes the mechanisms behind Docker and LXC. It shows the path from a simple chroot via a container framework to a ready-to-use container management solution and takes a look at the security of containers in general. The paper gives an overview of the two virtualization approaches and their advantages and disadvantages.

Keywords

virtualization, Docker, Linux containers, LXC, hypervisor, security

1. INTRODUCTION

This paper compares two virtualization approaches: hypervisor-based and container-based virtualization. Container-based virtualization became popular when Docker [1], a free tool to create, manage and distribute containers, gained a lot of attention by combining different technologies into a powerful virtualization software. By contrast, hypervisor-based virtualization is the long-established heavyweight of virtualization: it is widely used and has been around for decades. Each technology has advantages over the other, and both come with tradeoffs that have to be taken into account before deciding which of the two better fits one's needs. The paper introduces hypervisor-based and container-based virtualization in Section 2, describes advantages and disadvantages in Section 3, and goes deeper into container-based virtualization and the technologies behind it in Section 4. There is already a lot of literature about hypervisor-based virtualization, whereas container-based virtualization only became popular in the last few years and fewer papers cover it, so the main focus of this paper is container-based virtualization. Another focus is Docker, introduced in Section 4.3, because it has developed rapidly over the last two years and gained a lot of attention in the community. To round the paper out, Section 5 elaborates on general security risks of container-based virtualization and Docker and on possible ways to deal with them. Section 6 gives a brief overview of related work on this topic.

2. DISTINCTION: HYPERVISOR VS. CONTAINER-BASED VIRTUALIZATION

When talking about virtualization, the technology most people refer to is hypervisor-based virtualization. The hypervisor is software that abstracts from the hardware. Every piece of hardware required for running software has to be emulated by the hypervisor. Because the complete hardware of a computer is emulated, it is usual to talk about virtual machines or virtual computers. It is common to be able to access real hardware through an interface provided by the hypervisor, for example to access files on a physical device like a CD or a flash drive or to communicate with the network. Inside this virtual computer, an operating system and software can be installed and used as on any normal computer. The hardware running the hypervisor is called the host (and its operating system the host operating system), whereas all emulated machines running on it are referred to as guests and their operating systems as guest operating systems. Nowadays, hypervisors usually ship with utility software that allows convenient access to all of the hypervisor's functions. This improves ease of operation and may add functionality that is not strictly part of the hypervisor's job, for example snapshots and graphical interfaces. Two types of hypervisors can be distinguished: type 1 and type 2. Type 1 hypervisors run directly on the hardware (hence often called bare-metal hypervisors); they do not require an operating system and bring their own drivers. Type 2 hypervisors require a host operating system whose capabilities they use to perform their operations. Well-known hypervisors or virtualization products are:

- KVM [2], a kernel module for the Linux kernel that gives access to the virtualization capabilities of real hardware, together with the emulator usually used with KVM, qemu [3], which emulates the rest of the hardware (type 2),
- Xen [4], a free hypervisor running directly on hardware (type 1),
- Hyper-V [5], a hypervisor from Microsoft integrated into various Windows versions (type 1),
- VMware Workstation [6], a proprietary virtualization software from VMware (type 2),
- VirtualBox [7], a free, platform-independent virtualization solution from Oracle (type 2).
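The KVM entry in the list above depends on the CPU exposing hardware virtualization support. As a minimal sketch that is not part of the original paper, the following Python snippet looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) flags in `/proc/cpuinfo`-style text and checks whether the `/dev/kvm` device node exists. The function names and the way the result is reported are illustrative assumptions, and the check is only meaningful on an x86 Linux host.

```python
import os


def hardware_virt_flags(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo-style text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # On x86 Linux the relevant lines look like "flags : fpu vme ... vmx ..."
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            found |= {"vmx", "svm"} & set(values.split())
    return found


def kvm_device_present(path="/dev/kvm"):
    """True if the KVM device node exists, which usually means the kvm module is loaded."""
    return os.path.exists(path)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            flags = hardware_virt_flags(f.read())
    except OSError:
        flags = set()  # not a Linux host, or /proc is not mounted
    print("hardware virtualization flags:", sorted(flags) or "none found")
    print("/dev/kvm present:", kvm_device_present())
```

If neither flag is present, an emulator such as qemu can still run guests, but it has to emulate the CPU in software instead of using the hardware support that KVM exposes.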

Seminars FI / IITM WS 15/16, 1 doi: 10.2313/NET-2016-07-1_01

Network Architectures and Services, July 2016

From now on, the term hypervisor refers to type 2 hypervisors unless stated otherwise.

Container-based virtualization does not emulate an entire computer. Instead, the operating system provides certain features to the container software in order to isolate processes from other processes and containers. Because the Linux kernel provides many capabilities for isolating processes, it is required by all solutions this paper deals with. Other operating systems may provide similar mechanisms, for example FreeBSD's jails [8] or Solaris Zones. Because there is no full emulation of hardware, software running in containers has to be compatible with the host system's kernel and CPU architecture. A very descriptive picture for container-based virtualization is that containers are like firewalls between processes [9]. A similar metaphor for hypervisor-based virtualization is that of processes running on different machines that are connected to and supervised by a central instance, the hypervisor.

3. USE CASES AND GOALS OF BOTH VIRTUALIZATION TECHNOLOGIES

Hypervisor-based and container-based virtualization come with different tradeoffs, and each pursues different goals. There are also different use cases for virtualization in general, and both approaches therefore have particular strengths and weaknesses with respect to specific use cases. Because both abstract from the hardware, virtualized systems of either type are easy to migrate and allow better resource utilization, leading to lower costs and energy savings.

3.1 Hypervisor-based virtualization

Hypervisor-based virtualization makes it possible to fully emulate another computer, and therefore to emulate other types of devices (for example a smartphone), other CPU architectures or other operating systems. This is useful, for example, when developing applications for mobile platforms: the developer can test the application on the development system without needing physical access to a target device. Another common use case is running virtual machines whose guest operating system differs from that of the host. Some users need special software that does not run on their preferred operating system; virtualization makes it possible to run nearly any required environment, and the software in it, independently of the host system. Because of the abstraction from the hardware, it is easier to create, deploy and maintain images of the system. In the case of hardware incidents, a virtual machine can be moved to another system with very little effort, in the best case even without the user noticing that there was a migration. Hypervisor-based virtualization takes advantage of modern CPU capabilities. This allows the virtual machine and its applications to directly access the CPU in an unprivileged mode [10], resulting in performance improvements without sacrificing the security of the host system.

Hypervisors may sit directly on the hardware (type 1) or on a host operating system (type 2). Figure 1 shows where the hypervisor is located in this hierarchy. With a type 1 hypervisor, all operating systems in Figure 1 would be guests, whereas a type 2 hypervisor would be on the same level as other userspace applications, with the host operating system (not shown in the figure) and the real hardware on the layers below it.

Figure 1: Scheme of hypervisor-based virtualization. Hardware available to guests is usually emulated.

3.2 Container-based virtualization

Container-based virtualization uses kernel features to create an isolated environment for processes. In contrast to hypervisor-based virtualization, containers do not get their own virtualized hardware but use the hardware of the host system. Therefore, software running in containers communicates directly with the host kernel and has to be able to run on the operating system (see Figure 2) and CPU architecture of the host. Not having to emulate hardware and boot a complete operating system enables containers to start in a few milliseconds and to be more efficient than classical virtual machines. Container images are usually smaller than virtual machine images because they do not need to contain everything required to run an operating system, i.e. device drivers, a kernel or an init system. This is one of the reasons why container-based virtualization has become more popular over the last few years. The small resource footprint allows better performance at both small and large scale, and there are still relatively strong security benefits.

Shipping containers is a very interesting approach in software development: developers do not have to set up their machines by hand; instead, a build system image containing all tools and configuration required for working on a project can be distributed. If an update is required, only one image has to be regenerated and distributed. This approach eases the management of different projects on a developer's machine because dependency conflicts are avoided and different projects are easily kept separate.
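The compatibility requirement from Section 3.2 (a container has to match the host's operating system and CPU architecture because nothing is emulated in between) can be expressed as a small check. The sketch below is an illustration only: the function names, the alias table and the idea of describing an image by an `(os, architecture)` pair are assumptions made for this example and are not taken from the paper or from any particular container tool.

```python
import platform

# Common spellings of the same CPU architecture (illustrative, not exhaustive).
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def normalize_arch(name):
    """Map different spellings of an architecture name to one canonical form."""
    key = name.lower()
    return _ARCH_ALIASES.get(key, key)


def can_run_natively(image_os, image_arch, host_os=None, host_arch=None):
    """True if a container built for (image_os, image_arch) matches the host.

    Containers share the host kernel, so both the operating system and the
    CPU architecture have to match. Host values default to the local machine.
    """
    host_os = (host_os or platform.system()).lower()
    host_arch = normalize_arch(host_arch or platform.machine())
    return image_os.lower() == host_os and normalize_arch(image_arch) == host_arch


if __name__ == "__main__":
    print("linux/amd64 runs here:", can_run_natively("linux", "amd64"))
```

A hypervisor does not have this restriction: because it emulates the hardware, a guest built for a different operating system or architecture can still be run, at the cost of the emulation overhead.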


Figure 2: Scheme of container-based virtualization. Hardware and kernel of the host are used; containers run in userspace, separated from each other.

4. FROM CHROOT OVER CONTAINERS TO DOCKER

Container-based virtualization uses many capabilities the kernel provides in order to isolate processes or to achieve other goals that are helpful for this purpose. Most solutions build upon the Linux kernel, and because this paper focuses on LXC and Docker, it takes a closer look at the features the Linux kernel provides for virtualizing applications in containers. Because the kernel provides most of the required capabilities, container toolkits usually do not have to implement them again, and the kernel code is already used by other software that is considered stable and is in production use. If security problems are found in the kernel mechanisms, fixes are distributed with kernel updates, meaning that the container software does not need to be patched and users only have to keep their kernel up to date.

4.1 chroot

The change root mechanism changes the root directory of a process and all of its subprocesses. Chroot is used to restrict filesystem access to a single folder, which the target process and its subprocesses treat as the root folder (/). On Linux, this is not considered a security feature because it is possible for a user to escape the chroot [11].

Apart from that, chroot may prevent erroneous software from accessing files outside of the chroot, and even though it is possible to escape the chroot, it makes it harder for attackers to get access to the complete filesystem. Chroot is often used for testing software in a somewhat isolated environment and to install or repair the system. Chroot does not provide any process isolation beyond changing the root directory of a process to a different directory somewhere in the filesystem.

Compared to a normal execution of processes, putting them into a chroot is a rather weak guarantee that they are not able to access places of the filesystem they are not supposed to access.

4.2 Linux containers

Linux containers [12] (LXC) is a project that aims to build a toolkit to isolate processes from other processes. As said, chroot was not developed as a security feature and it is possible to escape the chroot. LXC tries to create environments (containers) that are supposed to be escape-proof for a process and its child processes, and to protect the system even if an attacker manages to escape the container. Apart from that, it provides an interface for setting fine-grained limits on the resource consumption of containers. Containers fully virtualize network interfaces and make sure that kernel interfaces may only be accessed in secure ways. The following subsections show the most important isolation mechanisms in greater detail. The name Linux containers (LXC) may be a little confusing: it is a toolkit to create containers on Linux, but there are of course other ways to achieve the same goal with utilities other than LXC and to create isolated processes or groups of them (containers) under Linux. Because LXC is a popular toolkit, most statements in this paper about Linux containers apply to LXC, too, but we use the term LXC to refer specifically to the popular implementation and the term Linux containers to refer to the general concept of process isolation on Linux as described in this paper. The capabilities introduced in the following are difficult to use: proper configuration requires reading a lot of documentation and takes much time to set up. Deploying complex configurations of system-level tools is a hard task. Easily adapting the configuration to other environments and different needs without endangering the security of the existing solution is nearly impossible without a toolkit providing access to those features. LXC is a toolkit that tries to fulfill these requirements.

4.2.1 Linux kernel namespaces

So far, processes are only chrooted; they still see other processes and are able to see information like user and group IDs, access the hostname and communicate with others. The goal is to create an environment for a process that gives it access to a special copy of this information, which does not need to be the same as what other processes see. The easy approach of simply preventing the process from accessing this information may crash it or lead to wrong behaviour. The kernel provides a feature called namespaces (in fact, there are several different namespaces [13]) to meet these demands. Kernel namespaces make it possible to isolate processes, groups of processes and even complete subsystems like interprocess communication or the network subsystem of the kernel. They are a flexible mechanism to separate processes from each other by creating different namespaces for processes that need to be separated. The kernel allows passing global resources such as network devices or process/user/group IDs into namespaces and manages synchronization of those resources. It is possible to create namespaces containing processes whose process ID (PID) is already in use on the host system or in other containers. This simplifies migration of a suspended container because the PIDs of the container are independent of the PIDs of the host system. User namespaces "isolate security-related identifiers and attributes, in particular, user IDs and group IDs [...], the root directory, keys [...], and capabilities" [14]. Combining all these features makes it possible to isolate processes in a way that


- process IDs inside a namespace are isolated from process IDs of other namespaces and are unique per namespace, but processes in different namespaces may have the same process ID,
- global resources are accessible via an API provided by the kernel if desired,
- there is abstraction from users, groups and other security-related information of the host system and the containers.

In fact, kernel namespaces are the foundation of process separation and therefore one of the key concepts for implementing container-based virtualization. They provide many features required for building containers and have been available since kernel 2.6.26, which means they have been around for several years and are already used in production [15].

4.2.2 Control groups

Control groups (further referred to as cgroups) are a feature that is not mandatory for isolating processes from other processes. First and foremost, cgroups are a mechanism to track processes and process groups including forked processes [16]. In the first instance, they neither solve a problem related to process isolation nor make the isolation stronger, but the hooks they provide allow other subsystems to extend this functionality and to implement fine-grained resource control and limitation that is more flexible than other tools trying to achieve a similar goal. The ability to assign resources to processes and process groups and to manage those assignments makes it possible to plan and control the use of containers without a single container wasting physical resources without limit. In the same way, it is possible to guarantee that resources do not become unavailable because other processes claim them for themselves. At first this might look like a feature primarily targeting hosts that serve multiple different users (e.g. shared web hosting), but it is also a powerful mechanism to mitigate Denial of Service (DoS) attacks. DoS attacks do not compromise the system; they try to generate a useless workload that prevents a service from fulfilling its task by overstressing it. A container behaving differently than expected may have an unknown bug, be under attack or even be controlled by an attacker, and may consume a lot of physical resources. Limiting its access to those resources from the very beginning avoids outages and may save time, money and the nerves of all those involved. As said, controlling resources is not a feature required for isolation, but it is essential in order to compete with hypervisor-based virtualization. It is very common to restrict resource usage in hypervisor-based virtualization, and being able to do the same with containers allows better utilization of the given resources.

4.2.3 Mandatory Access Control

On Linux (and Unix), everything is a file, and every file carries information about which user and which group it belongs to and what the owner, the owning group and everybody else on the system is allowed to do with it. Doing in this context means reading, writing or executing. This Discretionary Access Control (DAC) decides whether access to a resource is granted solely on the basis of information about users and groups. By contrast, Mandatory Access Control (MAC) does not decide on those characteristics. Mandatory Access Control is a set of concepts defining the management of access control that has gained more and more attention over the past years. Such systems are generally needed to improve security, and in this case MAC is used to harden the access control mechanisms of Linux/Unix. When a resource is requested, authorization rules (policies) are checked instead, and access is granted if the requirements defined by the policy are met. There are different approaches to implementing such a system; the three big ones are SELinux [17], AppArmor [18] and grsecurity's RBAC [19]. MAC policies are often used to restrict access to resources that are sensitive and do not need to be accessed in a certain context. For example, the chsh¹ userspace utility on Linux has the setuid bit set. This means that a regular user is allowed to run this binary and the binary can elevate itself to run with root privileges in order to do its job. If a bug is found in the binary, it may be possible to execute malicious code with root privileges as a normal user. A MAC policy could allow the binary to elevate its rights in order to do its legitimate job but deny everything that root can do and the binary does not need to do. In this case, there is no use case for the chsh binary to do networking, but it is allowed to because it can do anything root can do. A policy denying access to network-related system components does not affect the binary's legitimate operation, but if the binary were exploited by an attacker, he would not be able to use it to sniff traffic on active network interfaces or to change the default gateway to his own box in order to capture all packets, because the MAC policy denies the chsh tool access to the network subsystem. There are many examples where bugs in software let attackers access sensitive information or perform malicious activities that the attacked process does not require, e.g. CVE-2007-3304², a bug in the Apache webserver that allows an attacker to send signals to other processes and that is already mitigated by enabling SELinux in enforcing mode [10]. Network services like web or mail servers in particular, but also connected systems like database servers, receive untrusted data and are potential attack targets. Restricting their access to resources to the minimum they require increases the security of the system and all connected systems. Applying these security improvements to containers adds another layer of protection to mitigate attacks against the host and other containers from inside a container.

¹ chsh allows users to change their login shell. This is a task every user is allowed to do on their own, but it requires modification of the file /etc/passwd, which is writable by root only. chsh allows safe access to the file and lets users change their own login shell, but not the login shells of other users.

² Common Vulnerabilities and Exposures is a standard that assigns publicly known vulnerabilities a unique number in order to make it easy to talk about a certain vulnerability and to avoid misunderstandings caused by confusing different vulnerabilities.

4.3 Docker

LXC is a toolkit for working with containers that saves users from having to work with low-level mechanisms. It creates an interface to all the features the Linux kernel provides while flattening the learning curve for users. Without LXC, a user needs to spend a lot of time reading the kernel documentation in order to understand and use the provided features to set up a container by hand. LXC automates the management of containers and enables users to build their own container solution that is customizable and fits even exotic needs. For those who simply want to run processes in an isolated environment without spending too much time figuring out how the toolkit works, this is still impractical. This is where Docker [1] comes in: it is a command line tool for creating, managing and deploying containers. It requires very little technical background compared to using LXC for the same task and provides various features that are essential for working with containers in a production environment. Docker started in March 2013 and has received a lot of attention since then, resulting in rapid growth of the project. By combining technologies for isolating processes with various other useful tools, Docker makes it easier to create container images based on other container images. It gives the user access to a public repository on the internet called Docker Hub [20], which contains hundreds of ready-to-use images that can be downloaded and started with a single command. Users can change and extend those images and share their improvements with others. There is also an API that allows the user to interact with Docker remotely. Docker brings many improvements compared to using LXC, but it also introduces new attack vectors on a less technical layer, because users and their behaviour now play a bigger part in the security of the complete system. Before discussing that in greater detail, some major improvements Docker introduced over LXC are outlined.

4.3.1 One tool to rule them all

LXC is a powerful tool enabling a wide range of setups, and because of that it is hard to get to grips with for anyone who is not deep into the topic and the tools. Docker aims to simplify the workflow. The Docker command line tool is the interface through which the user interacts with the Docker daemon. It is a single command with memorable parameter names that gives the user access to all features. Depending on the environment, little to no configuration is required to pull (download) images from the online repository (Docker Hub) and run them on the system. Although it is not required, it is of course possible to create a configuration file for Docker and for specific images in order to ease administration, set specific parameters and automate the build process of new images.

4.3.2 Filesystem layers generated and distributed independently

One major improvement Docker introduced over classical hypervisor-based virtualization is the use of filesystem layers. Filesystem layers can be thought of as different parts of a filesystem, i.e. the root filesystem of a container and filesystems containing application-specific files. There may be different filesystem layers, for example one for the base system, which may always be required, and additional layers containing the files of e.g. a webserver. This means that a ready-to-run webserver can be distributed as an independent filesystem layer. By way of illustration, assume a web service consisting of an SQL database server like MySQL, a webserver like Apache, the programming language and framework the web application is built on, like Python with Django, and a mail transfer agent like sendmail. Of course it is possible to a certain extent to split those tools and run them in different environments (mail, web and database may run on completely different machines); this example assumes that the setup is the developer's setup and differs from the deployment in production use. After installing Docker, it is possible to fetch the filesystem layers of all of those tools and combine them into one image that runs all the software mentioned above and can be used to develop the web app. The web app itself may then become the content of a new filesystem layer so that it can be deployed in the same manner later on. If a new version of one of the components is released and the developer wants to update the local copy, only the updated layer has to be fetched. The user's configuration usually remains in a separate layer and is not affected by the update. Because there is no need to fetch the data of the other layers again, space and bandwidth are saved. If another user wants to use the web app the developer pushed to the Docker Hub but prefers PostgreSQL over MySQL and nginx over the Apache webserver, and the web app is capable of working with those alternatives, he may use the respective filesystem layers of the software he wants to use.

4.3.3 Docker Hub

As said, one of the novelties Docker introduced to the virtualization market was the Docker Hub [20]. It is one of those things nobody demanded because it simply was not there, and everybody was fine with reading documentation and creating virtual machines and container images from scratch over and over again. The Docker Hub is a web service that is fully integrated into the Docker software and allows users to fetch ready-to-run images. Images created or modified by users may be shared on the Docker Hub, too. As a result, the Docker Hub contains many images of different software in different configurations, and every user can fetch the images and their documentation, use and improve them, and get in touch with other users by commenting on images and collaborating on them. The Docker Hub can be accessed via a web browser, too. To ease choosing the right image for frequently used software like Wordpress, MongoDB and node.js, the Docker Hub allows such projects to mark their images as official images that come directly from declared project members. The ability for everyone to push their images to the Docker Hub has led to great diversity and a lot of software being available as container images. Even rather complex or very specific configurations of some programs can be found there. The Hub also introduces some security problems that are covered in Section 5. Users are not tied to the Docker Hub. It is possible to set up private registries that can be used natively in the same way but are, for example, only accessible to authenticated users in a company network, or to build a public infrastructure that is independent of the existing one.

4.4 Managing great numbers of containers

Running containers is cheap in terms of resource consumption. Building large-scale services on container infrastructure may involve thousands of containers located on different machines all over the world. Today this may still be a corner case, but some companies are already facing management problems because they have too many containers to administer by hand. Google introduced Kubernetes [21] to ease administration, group containers and automate working with groups of containers.
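The update scenario from Section 4.3.2 can be sketched in a few lines of Python. This is a simplified model, not Docker's actual implementation: layers are identified here by a SHA-256 digest of their content, and the names `layer_digest`, `layers_to_fetch` and the sample layer contents are assumptions made for illustration. The point is only that a client that already holds some layers locally has to download just the ones it is missing.

```python
import hashlib


def layer_digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 digest of its content."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def layers_to_fetch(image_layers, local_cache):
    """Return the digests from image_layers that are not in local_cache, in order."""
    return [digest for digest in image_layers if digest not in local_cache]


# Hypothetical layer contents standing in for real filesystem archives.
base = layer_digest(b"base system")
db_v1 = layer_digest(b"database server, old release")
db_v2 = layer_digest(b"database server, new release")
web = layer_digest(b"webserver")
app = layer_digest(b"web app")

old_image = [base, db_v1, web, app]
new_image = [base, db_v2, web, app]  # only the database layer changed

cache = set(old_image)  # layers already present on the developer's machine
missing = layers_to_fetch(new_image, cache)
print(len(missing), "of", len(new_image), "layers need to be downloaded")
```

Swapping one component for an alternative, as in the PostgreSQL and nginx example, works the same way in this model: the image's layer list changes in the affected positions, and every unchanged layer is served from the local cache.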

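Before turning to security, the namespace mechanism from Section 4.2.1 can be made tangible. On Linux, each process exposes the namespaces it belongs to as symbolic links under `/proc/<pid>/ns`, and two processes are in the same namespace of a given type when the corresponding links point to the same target. The sketch below only reads these links; the helper names are illustrative assumptions, and on systems without `/proc` the functions return empty results.

```python
import os


def namespaces_of(pid="self"):
    """Map namespace type (e.g. 'pid', 'net', 'uts') to its identifier string.

    The identifier looks like 'pid:[4026531836]'. Returns an empty dict if
    /proc/<pid>/ns is unavailable (non-Linux host, unknown PID, no permission).
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:
        return result
    for name in entries:
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            pass  # entry vanished or is not readable; skip it
    return result


def shared_namespaces(pid_a, pid_b):
    """Return the namespace types in which both processes share the same namespace."""
    a, b = namespaces_of(pid_a), namespaces_of(pid_b)
    return sorted(name for name in a if name in b and a[name] == b[name])


if __name__ == "__main__":
    for name, ident in sorted(namespaces_of().items()):
        print(f"{name:18s} {ident}")
```

Comparing a process on the host with one running inside a container would typically show different identifiers for namespace types such as `pid`, `net` and `mnt`, which is the isolation that the security discussion in the next section builds on.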

5. SECURITY OF CONTAINER-BASED VS. HYPERVISOR-BASED VIRTUALIZATION

Hypervisor-based virtual machines are often used to introduce another layer of security by isolating resources exposed to attackers from other resources that need to be protected. "Properly configured containers have a security profile that is slightly more secure than multiple applications on a single server and slightly less secure than KVM virtual machines." [10] The better security profile compared to multiple applications running next to each other results from the already mentioned mechanisms that make it harder to escape from an isolated environment, but all processes in all containers still run together with other processes under the host kernel. Because KVM is a hypervisor emulating complete hardware and running another operating system inside, it is considered even harder to escape this environment, which results in slightly higher security for hypervisor-based virtualization.

It is possible to combine both technologies, and due to the small resource footprint and the additional security layer of container-based virtualization, this is considered a good idea.

Nevertheless, container-based virtualization is not proven to be secure, and container breakouts have already happened in the past [22].

Multiple security issues arise from the spirit of sharing images. For a long time, Docker did not have sufficient mechanisms to check the integrity and authenticity of downloaded images, that is, to verify that the authors of an image are indeed who they claim to be and that the image has not been modified unnoticed. On the contrary, the verification system itself exposed attack surface, and it may have been possible for attackers to modify images and pass Docker's verification mechanisms [23]. Docker addressed these issues by integrating a new mechanism called Content Trust that allows users to verify the publisher of images [24]. There is nothing like the Docker Hub for hypervisor-based virtualization software, meaning that it is usual to build virtual machines from scratch. In the case of Linux, on the one hand it is common that packages are signed and that their authenticity and integrity can be validated; on the other hand, each virtual machine contains a lot of software that needs to be taken care of by hand all the time.

One of the biggest problems of Docker is that it is hard to come to grips with the large number of images containing security vulnerabilities. The origin of this problem is that images on the Docker Hub are not updated automatically; every update has to be built by hand instead. This is largely a social problem of people neither updating their software nor updating the containers they pushed to the Docker Hub in the past. It is a somewhat harder problem than the same phenomenon of outdated software on a virtual machine: there is actually no update available on the Docker Hub, because even the latest image contains vulnerabilities. On a virtual machine running an operating system that is still maintained, someone needs to log in and install the security updates, which may be forgotten or simply ignored for various reasons, but building the fixed container as a user is usually far more effort.

A study [25] found that "Over 30% of Official Images in Docker Hub Contain High Priority Security Vulnerabilities". The numbers were generated by a tool scanning the images listed on the Docker Hub for known vulnerabilities. More than 60% of official images, meaning images that come from the official developers of the projects contained in the container, contained medium or high priority vulnerabilities. Analyzing the vulnerabilities of all images on the Hub showed that 75% contained vulnerabilities considered medium or high priority issues. Overcoming these problems requires continuous analysis of the containers, which means scanning them for security problems regularly and informing their creators and users about the problems found. In this respect it may be easier to tackle the problem of outdated containers, because storing them centrally makes it possible to statically scan all images in the repository.

Mr. Hayden shows how to build a secure LXC container from scratch that can be used as a foundation for further modifications [10].

6. RELATED WORK

One of the use cases for hypervisor- and container-based virtualization is improving security by isolating processes that are untrusted or that are connected to other systems and expose attack surface, e.g. a webserver on a machine connected to the internet. Of course, there are other ways to improve security that may be applied in addition to virtualization.

The already described Mandatory Access Control (see Section 4.2.3) is a step towards hardening the operating system. Hardening means adding new mechanisms or improving existing ones in order to make it harder to attack systems successfully. On Linux, the grsecurity [19] project is well known for its patch set, which adds and improves many kernel features, for example MAC through its RBAC system, Address Space Layout Randomization (ASLR) and many other features making it harder to attack the kernel.

Another approach to separating applications can be found in the Qubes OS [26] project. It builds an operating system based on Linux and the X11 window system on top of XEN and allows the creation of AppVMs that run applications in dedicated virtual machines, somewhat similar to the container approach, but with hypervisor-based virtualization instead of mechanisms built into a standard Linux kernel. According to the project homepage, it is even possible to run Microsoft Windows based application virtual machines.

MirageOS [27] is also an operating system built on top of XEN, but deploying applications on MirageOS means deploying a specialized kernel containing the application. Those unikernels [28] contain only what is needed to perform their single task. Because they contain no features that are not absolutely required, unikernels are usually very small and performant. MirageOS is a library operating system that may be used to create such unikernels. Probably the biggest weakness of this approach is that applications need to be written specifically to be used with unikernels.

7. CONCLUSION

Container-based virtualization is a lightweight alternative to hypervisor-based virtualization for those who do not require or wish to have separate emulated hardware and a separate operating system kernel, a system in a system. There are many scenarios where speed, simplicity and only the need to isolate processes are prevalent, and container-based virtualization fulfills these needs. By using mostly features that have been present in the Linux kernel for several years, container-based virtualization builds on a mature codebase that is already running on the users' machines and is kept up to date by the Linux community. Docker in particular introduced new concepts that had not previously been associated or used together with virtualization. Giving users the social aspect of working together, sharing and reusing the work of other users is definitely one of Docker's recipes for success and a completely new idea in the area of virtualization. Because it is relatively easy to run a huge number of containers, there are already tools for managing large groups of containers.

When it comes to security, there is no need for a "vs." in the title of this paper. Generally, it is of course possible to run containers on an already (hypervisor-) virtualized computer or to run your hypervisor in your container. The hypervisor layer is considered a thicker layer of security than the application of the mechanisms described above. Nevertheless, applying these lightweight mechanisms adds additional security at almost no resource cost.

Both approaches, and of course their combination, are hardware-independent, allow better resource utilization, improve security and ease management.

8. REFERENCES

[1] Docker project homepage, https://www.docker.com/, Retrieved: September 13, 2015

[2] KVM project homepage, http://www.linux-kvm.org/page/Main_Page, Retrieved: September 16, 2015

[3] qemu project homepage, http://wiki.qemu.org/Main_Page, Retrieved: September 16, 2015

[4] XEN project homepage, http://www.xenproject.org/, Retrieved: September 16, 2015

[5] Microsoft TechNet Hyper-V overview, https://technet.microsoft.com/en-us/library/hh831531.aspx, Retrieved: September 16, 2015

[6] VMware homepage, http://www.vmware.com/, Retrieved: September 16, 2015

[7] VirtualBox project homepage, https://www.virtualbox.org/, Retrieved: September 16, 2015

[8] M. Riondato: FreeBSD Handbook, Chapter 14. Jails, https://www.freebsd.org/doc/handbook/jails.html, Retrieved: September 26, 2015

[9] E. Windisch: On the Security of containers, https://medium.com/@ewindisch/on-the-security-of-containers-2c60ffe25a9e, Retrieved: August 18, 2015

[10] M. Hayden: Securing Linux containers, https://major.io/2015/08/14/research-paper-securing-linux-containers/, Retrieved: August 18, 2015

[11] chroot (2) manpage, release 4.02 of Linux man-pages project, http://man7.org/linux/man-pages/man2/chroot.2.html, Retrieved: September 16, 2015

[12] LXC project homepage, https://linuxcontainers.org/, Retrieved: September 13, 2015

[13] namespaces (7) manpage, release 4.02 of Linux man-pages project, http://man7.org/linux/man-pages/man7/namespaces.7.html, Retrieved: September 16, 2015

[14] user namespaces (7) manpage, release 4.02 of Linux man-pages project, http://man7.org/linux/man-pages/man7/user_namespaces.7.html, Retrieved: September 16, 2015

[15] Docker Docs: Docker Security, https://docs.docker.com/articles/security/, Retrieved: August 18, 2015

[16] P. Menage, P. Jackson, C. Lameter: Linux Kernel Documentation, https://www.kernel.org/doc/Documentation/cgroups/cgroups.txt, Retrieved: September 13, 2015

[17] SELinux project homepage, http://selinuxproject.org/page/Main_Page, Retrieved: September 26, 2015

[18] AppArmor project homepage, http://wiki.apparmor.net/index.php/Main_Page, Retrieved: September 26, 2015

[19] grsecurity project homepage, https://grsecurity.net/, Retrieved: September 16, 2015

[20] Docker Hub, https://hub.docker.com/, Retrieved: September 26, 2015

[21] Kubernetes project homepage, http://kubernetes.io/, Retrieved: September 16, 2015

[22] J. Turnbull: Docker container Breakout Proof-of-Concept Exploit, https://blog.docker.com/2014/06/docker-container-breakout-proof-of-concept-exploit/, Retrieved: August 18, 2015

[23] J. Rudenberg: Docker Image Insecurity, https://titanous.com/posts/docker-insecurity, Retrieved: August 18, 2015

[24] D. Monica: Introducing Docker Content Trust, https://blog.docker.com/2015/08/content-trust-docker-1-8/, Retrieved: August 18, 2015

[25] Jayanth Gummaraju, Tarun Desikan and Yoshio Turner: Over 30% of Official Images in Docker Hub Contain High Priority Security Vulnerabilities, http://www.banyanops.com/blog/analyzing-docker-hub/, Retrieved: August 18, 2015

[26] Qubes OS project homepage, https://www.qubes-os.org/, Retrieved: September 16, 2015

[27] MirageOS project homepage, https://mirage.io/, Retrieved: September 16, 2015

[28] XEN Unikernels wiki page, http://wiki.xenproject.org/wiki/Unikernels, Retrieved: September 16, 2015


Das könnte Ihnen auch gefallen

- Anatomy of A Linux Hypervisor: An Introduction To KVM and LguestDokument7 SeitenAnatomy of A Linux Hypervisor: An Introduction To KVM and LguestFelipe BaltorNoch keine Bewertungen

- IP Multimedia Subsystem A Complete Guide - 2020 EditionVon EverandIP Multimedia Subsystem A Complete Guide - 2020 EditionNoch keine Bewertungen

- Virtual IzationDokument92 SeitenVirtual IzationnkNoch keine Bewertungen

- Parallel and High Performance Programming with Python: Unlock parallel and concurrent programming in Python using multithreading, CUDA, Pytorch and Dask. (English Edition)Von EverandParallel and High Performance Programming with Python: Unlock parallel and concurrent programming in Python using multithreading, CUDA, Pytorch and Dask. (English Edition)Noch keine Bewertungen

- Telco Cloud - 04. Introduction To Hypervisor, Docker & ContainerDokument8 SeitenTelco Cloud - 04. Introduction To Hypervisor, Docker & Containervikas_sh81Noch keine Bewertungen

- What Is Virtualization?Dokument22 SeitenWhat Is Virtualization?muskan guptaNoch keine Bewertungen

- Lcu14 500armtrustedfirmware 140919105449 Phpapp02 PDFDokument10 SeitenLcu14 500armtrustedfirmware 140919105449 Phpapp02 PDFDuy Phuoc NguyenNoch keine Bewertungen

- L Libvirt PDFDokument11 SeitenL Libvirt PDFmehdichitiNoch keine Bewertungen

- CPP Interview QuestionsDokument4 SeitenCPP Interview QuestionsRohan S Adapur 16MVD0071Noch keine Bewertungen

- System On A Chip: From Wikipedia, The Free EncyclopediaDokument16 SeitenSystem On A Chip: From Wikipedia, The Free EncyclopediaAli HmedatNoch keine Bewertungen

- Application Base: Technical ReferenceDokument18 SeitenApplication Base: Technical ReferencetoantqNoch keine Bewertungen

- Introduction To Software Engineering PDFDokument150 SeitenIntroduction To Software Engineering PDFThe Smart Boy KungFuPawnNoch keine Bewertungen

- MCBSC and MCTC ArchitectureDokument6 SeitenMCBSC and MCTC ArchitectureAmol PatilNoch keine Bewertungen

- LTE Express: End-to-End LTE Network: HSS MME OCS IP Network IP Mobile Core Application ServerDokument1 SeiteLTE Express: End-to-End LTE Network: HSS MME OCS IP Network IP Mobile Core Application Serverdtheo00Noch keine Bewertungen

- Securing Linux: Presented By: Darren MobleyDokument23 SeitenSecuring Linux: Presented By: Darren MobleyLila LilaNoch keine Bewertungen

- VIT ProjectDokument28 SeitenVIT ProjectSaurabh SinghNoch keine Bewertungen

- Autosar Prs SomeipprotocolDokument50 SeitenAutosar Prs Someipprotocolsurya jeevagandanNoch keine Bewertungen

- Capgemini Engineering 5g Solutions NGNDokument5 SeitenCapgemini Engineering 5g Solutions NGNAhmed YakoutNoch keine Bewertungen

- Kubernetes - VSphere Cloud ProviderDokument36 SeitenKubernetes - VSphere Cloud ProvidermosqiNoch keine Bewertungen

- Virtualization Concepts and Applications: Yash Jain Da-Iict (DCOM Research Group)Dokument58 SeitenVirtualization Concepts and Applications: Yash Jain Da-Iict (DCOM Research Group)Santosh Aditya Sharma ManthaNoch keine Bewertungen

- The Command Pattern: CSCI 3132 Summer 2011Dokument29 SeitenThe Command Pattern: CSCI 3132 Summer 2011kiranshingoteNoch keine Bewertungen

- Open Ran Radio Verification: Viavi Oneadvisor 800Dokument15 SeitenOpen Ran Radio Verification: Viavi Oneadvisor 800Vamsi JadduNoch keine Bewertungen

- Virtualization: Spreading The Word " "Dokument39 SeitenVirtualization: Spreading The Word " "Nisar AhmadNoch keine Bewertungen

- Reducing Boot Time: Techniques For Fast BootingDokument27 SeitenReducing Boot Time: Techniques For Fast BootingHARDIK PADHARIYANoch keine Bewertungen

- Jaimin-Boot Time Optimization in Embedded Linux PDFDokument23 SeitenJaimin-Boot Time Optimization in Embedded Linux PDFjaimin23100% (1)

- 1.4.2 The Fetch-Execute Cycle PDFDokument7 Seiten1.4.2 The Fetch-Execute Cycle PDFtalentNoch keine Bewertungen

- IoT - Solution R&SDokument12 SeitenIoT - Solution R&SAlfred NgNoch keine Bewertungen

- NCNF: From "Network On A Cloud" To "Network Cloud": The Evolution of Network VirtualizationDokument6 SeitenNCNF: From "Network On A Cloud" To "Network Cloud": The Evolution of Network VirtualizationnobinmathewNoch keine Bewertungen

- Command and Adapter PatterDokument74 SeitenCommand and Adapter PatterYakub ShahNoch keine Bewertungen

- v16 5g Archi TechnicalDokument36 Seitenv16 5g Archi Technicalkk lNoch keine Bewertungen

- Sriov Vs DPDKDokument28 SeitenSriov Vs DPDKshilpee 90Noch keine Bewertungen

- Embedded Linux Workshop On Blueboard-AT91: B. Vasu DevDokument30 SeitenEmbedded Linux Workshop On Blueboard-AT91: B. Vasu DevJOHNSON JOHNNoch keine Bewertungen

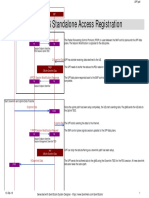

- UPF Interactions: 5G Standalone Access Registration: 1:PFCP Session Modification RequestDokument2 SeitenUPF Interactions: 5G Standalone Access Registration: 1:PFCP Session Modification RequestBabu MarannanNoch keine Bewertungen

- L2, L3 Protocol Testing Training in Chennai PDFDokument2 SeitenL2, L3 Protocol Testing Training in Chennai PDFShashank PrajapatiNoch keine Bewertungen

- 4G Emerging Trends & Market Scenario in IndiaDokument13 Seiten4G Emerging Trends & Market Scenario in IndiaPawan Kumar ThakurNoch keine Bewertungen

- COM416 Cloud Computing & VirtualizationDokument42 SeitenCOM416 Cloud Computing & VirtualizationkennedyNoch keine Bewertungen

- En DC Secondary Node Addition Core Network DetailsDokument6 SeitenEn DC Secondary Node Addition Core Network DetailsTalent & Tech Global InfotechNoch keine Bewertungen

- ETSI TS 123 501: 5G System Architecture For The 5G System (3GPP TS 23.501 Version 15.2.0 Release 15)Dokument219 SeitenETSI TS 123 501: 5G System Architecture For The 5G System (3GPP TS 23.501 Version 15.2.0 Release 15)Gabriel Rodriguez GigireyNoch keine Bewertungen

- VMware State of Kubernetes 2020 EbookDokument12 SeitenVMware State of Kubernetes 2020 EbookEdilson AzevedoNoch keine Bewertungen

- Network Scalability: © 2012 Vmware Inc. All Rights ReservedDokument51 SeitenNetwork Scalability: © 2012 Vmware Inc. All Rights ReservedwgaiottoNoch keine Bewertungen

- O RAN - Wg1.o RAN Architecture Description v02.00Dokument28 SeitenO RAN - Wg1.o RAN Architecture Description v02.00ibrahimmohamed87Noch keine Bewertungen

- IMS Core Emulator - Cosmin CabaDokument23 SeitenIMS Core Emulator - Cosmin CabaCosmin CabaNoch keine Bewertungen

- C++ Questions: KeralamDokument31 SeitenC++ Questions: Keralamsai5charan5bavanasiNoch keine Bewertungen

- SJ-20130408140048-019-ZXUR 9000 GSM (V6.50.103) Software Version Management Operation Guide - 519349Dokument37 SeitenSJ-20130408140048-019-ZXUR 9000 GSM (V6.50.103) Software Version Management Operation Guide - 519349fahadmalik89Noch keine Bewertungen

- Agile IMS Network Infrastructure For Session DeliveryDokument9 SeitenAgile IMS Network Infrastructure For Session Deliverylong.evntelecomNoch keine Bewertungen

- HC Architecture Today's Business 370127 PDFDokument14 SeitenHC Architecture Today's Business 370127 PDFpermasaNoch keine Bewertungen

- SDP Codec Selection Resource AllocationDokument2 SeitenSDP Codec Selection Resource AllocationZteTems OptNoch keine Bewertungen

- 02-NB-IoT Air Interface ISSUEDokument90 Seiten02-NB-IoT Air Interface ISSUEThang DangNoch keine Bewertungen

- Signaling in A 5G WorldDokument35 SeitenSignaling in A 5G WorldlaoaaNoch keine Bewertungen

- AstrixRadioPlanning v1.2 PDFDokument16 SeitenAstrixRadioPlanning v1.2 PDFKashifManzoorHussainNoch keine Bewertungen

- Kubernetes Vs VM PresentationDokument10 SeitenKubernetes Vs VM PresentationArde1971Noch keine Bewertungen

- Containerization Using Docker2Dokument63 SeitenContainerization Using Docker2AyushNoch keine Bewertungen

- Nokia Networks IMS Portfolio PDFDokument11 SeitenNokia Networks IMS Portfolio PDFVilasak ItptNoch keine Bewertungen

- 5G Global Unique Temporary Identifier (5G Function (AMF) To The UEDokument3 Seiten5G Global Unique Temporary Identifier (5G Function (AMF) To The UEVishal VenkataNoch keine Bewertungen

- ZXG10 ISMG Server (SBCX) Installation and Commissioning Guide (Professional) - R3.4Dokument171 SeitenZXG10 ISMG Server (SBCX) Installation and Commissioning Guide (Professional) - R3.4tongai_mutengwa5194Noch keine Bewertungen

- VSICM51 M07 VMManagementDokument73 SeitenVSICM51 M07 VMManagementwgaiottoNoch keine Bewertungen

- Internet Architecture and Performance MetricsDokument14 SeitenInternet Architecture and Performance MetricsnishasomsNoch keine Bewertungen

- NOKIA RNC in WBTS SwapOutDokument12 SeitenNOKIA RNC in WBTS SwapOutMahon Ri MaglasangNoch keine Bewertungen

- OpenText Document Management EDOCS DM 5 3 1 Suite Patch 6 Release NotesDokument49 SeitenOpenText Document Management EDOCS DM 5 3 1 Suite Patch 6 Release NotesaminymohammedNoch keine Bewertungen

- Notes-Chapter 1Dokument5 SeitenNotes-Chapter 1Echa LassimNoch keine Bewertungen

- Using The KneeboardDokument2 SeitenUsing The KneeboardDenis CostaNoch keine Bewertungen

- Comptia Linux Guide To Linux Certification 4th Edition Eckert Test BankDokument10 SeitenComptia Linux Guide To Linux Certification 4th Edition Eckert Test BankCherylRandolphpgyre100% (17)

- Panasonic Sa Ak230 PDFDokument94 SeitenPanasonic Sa Ak230 PDFCOL. CCEENoch keine Bewertungen

- Developing and Testing Phased Array Ultrasonic TransducersDokument27 SeitenDeveloping and Testing Phased Array Ultrasonic TransducersscribdmustaphaNoch keine Bewertungen

- Treinamento KACE SMADokument183 SeitenTreinamento KACE SMAValériaNoch keine Bewertungen

- SAP S - 4HANA Migration Cockpit - Deep Dive LTMOM For File - StagingDokument114 SeitenSAP S - 4HANA Migration Cockpit - Deep Dive LTMOM For File - StagingNrodriguezNoch keine Bewertungen

- BSC Card Overview and FunctionDokument35 SeitenBSC Card Overview and Functionkamal100% (1)

- Ex 3300Dokument263 SeitenEx 3300simplyredwhiteandblueNoch keine Bewertungen

- Elektor USA - November 2014 PDFDokument92 SeitenElektor USA - November 2014 PDFJean-Pierre DesrochersNoch keine Bewertungen

- Sony HVR HD1000 V1 PDFDokument410 SeitenSony HVR HD1000 V1 PDFHeNeXeNoch keine Bewertungen

- GE Digital Internship Cum PPO Recruitment Drive PPT On 8th June'23 For 2024 Graduating BatchDokument14 SeitenGE Digital Internship Cum PPO Recruitment Drive PPT On 8th June'23 For 2024 Graduating BatchrandirajaNoch keine Bewertungen

- B.E. (2019 Pattern) Endsem Exam Timetable For May-2024Dokument26 SeitenB.E. (2019 Pattern) Endsem Exam Timetable For May-2024omchavan9296Noch keine Bewertungen

- Work Instructions: Adjustable Igniter Replacement Capstone Model C200 Microturbine /C1000 Series Purpose and ScopeDokument12 SeitenWork Instructions: Adjustable Igniter Replacement Capstone Model C200 Microturbine /C1000 Series Purpose and ScopeJefferson Mosquera PerezNoch keine Bewertungen

- Antonio Garcia ResumeDokument2 SeitenAntonio Garcia Resumeapi-518075335Noch keine Bewertungen

- Assessment-1 Airline DatabaseDokument2 SeitenAssessment-1 Airline DatabaseD. Ancy SharmilaNoch keine Bewertungen

- Java Fundamentals 7-1: Classes, Objects, and Methods Practice ActivitiesDokument4 SeitenJava Fundamentals 7-1: Classes, Objects, and Methods Practice Activities203 057 Rahman QolbiNoch keine Bewertungen

- 2012 Conference NewsfgfghsfghsfghDokument3 Seiten2012 Conference NewsfgfghsfghsfghabdNoch keine Bewertungen

- Odi 12c Getting Started GuideDokument114 SeitenOdi 12c Getting Started GuidemarcellhkrNoch keine Bewertungen

- EmpTech11 Q1 Mod2 Productivity-Tools Ver3Dokument74 SeitenEmpTech11 Q1 Mod2 Productivity-Tools Ver3Frances A. PalecNoch keine Bewertungen

- Patel - Spacecraft Power SystemsDokument734 SeitenPatel - Spacecraft Power SystemsThèm Khát100% (1)

- Module 8 Final Collaborative ICT Development 3Dokument24 SeitenModule 8 Final Collaborative ICT Development 3Sir OslecNoch keine Bewertungen

- Telenor Call, SMS & Internet Packages - Daily, Weekly & Monthly Bundles (June, 2022)Dokument16 SeitenTelenor Call, SMS & Internet Packages - Daily, Weekly & Monthly Bundles (June, 2022)Yasir ButtNoch keine Bewertungen

- AmpliTube 4 User ManualDokument102 SeitenAmpliTube 4 User Manualutenteetico100% (1)

- P2M BookletDokument20 SeitenP2M BookletAkaninyeneNoch keine Bewertungen

- TC Electronic Support - Which Expression Pedal To Use With TC Devices-1Dokument3 SeitenTC Electronic Support - Which Expression Pedal To Use With TC Devices-1sarag manNoch keine Bewertungen

- Symantec Netbackup ™ For Vmware Administrator'S Guide: Unix, Windows, and LinuxDokument118 SeitenSymantec Netbackup ™ For Vmware Administrator'S Guide: Unix, Windows, and Linuxlyax1365Noch keine Bewertungen

- 2004 CatalogDokument80 Seiten2004 CatalogberrouiNoch keine Bewertungen

- A122 DVB-T2 Spec PDFDokument187 SeitenA122 DVB-T2 Spec PDFWilson OrtizNoch keine Bewertungen

- iPhone Unlocked for the Non-Tech Savvy: Color Images & Illustrated Instructions to Simplify the Smartphone Use for Beginners & Seniors [COLOR EDITION]Von EverandiPhone Unlocked for the Non-Tech Savvy: Color Images & Illustrated Instructions to Simplify the Smartphone Use for Beginners & Seniors [COLOR EDITION]Bewertung: 5 von 5 Sternen5/5 (2)

- iPhone 14 Guide for Seniors: Unlocking Seamless Simplicity for the Golden Generation with Step-by-Step ScreenshotsVon EverandiPhone 14 Guide for Seniors: Unlocking Seamless Simplicity for the Golden Generation with Step-by-Step ScreenshotsBewertung: 5 von 5 Sternen5/5 (3)

- RHCSA Red Hat Enterprise Linux 9: Training and Exam Preparation Guide (EX200), Third EditionVon EverandRHCSA Red Hat Enterprise Linux 9: Training and Exam Preparation Guide (EX200), Third EditionNoch keine Bewertungen

- Kali Linux - An Ethical Hacker's Cookbook - Second Edition: Practical recipes that combine strategies, attacks, and tools for advanced penetration testing, 2nd EditionVon EverandKali Linux - An Ethical Hacker's Cookbook - Second Edition: Practical recipes that combine strategies, attacks, and tools for advanced penetration testing, 2nd EditionBewertung: 5 von 5 Sternen5/5 (1)

- Linux For Beginners: The Comprehensive Guide To Learning Linux Operating System And Mastering Linux Command Line Like A ProVon EverandLinux For Beginners: The Comprehensive Guide To Learning Linux Operating System And Mastering Linux Command Line Like A ProNoch keine Bewertungen

- Linux Shell Scripting Cookbook - Third EditionVon EverandLinux Shell Scripting Cookbook - Third EditionBewertung: 4 von 5 Sternen4/5 (1)

- Hacking : The Ultimate Comprehensive Step-By-Step Guide to the Basics of Ethical HackingVon EverandHacking : The Ultimate Comprehensive Step-By-Step Guide to the Basics of Ethical HackingBewertung: 5 von 5 Sternen5/5 (3)

- Mastering Swift 5 - Fifth Edition: Deep dive into the latest edition of the Swift programming language, 5th EditionVon EverandMastering Swift 5 - Fifth Edition: Deep dive into the latest edition of the Swift programming language, 5th EditionNoch keine Bewertungen

- MAC OS X UNIX Toolbox: 1000+ Commands for the Mac OS XVon EverandMAC OS X UNIX Toolbox: 1000+ Commands for the Mac OS XNoch keine Bewertungen

- Linux: A Comprehensive Guide to Linux Operating System and Command LineVon EverandLinux: A Comprehensive Guide to Linux Operating System and Command LineNoch keine Bewertungen

- Excel : The Ultimate Comprehensive Step-By-Step Guide to the Basics of Excel Programming: 1Von EverandExcel : The Ultimate Comprehensive Step-By-Step Guide to the Basics of Excel Programming: 1Bewertung: 4.5 von 5 Sternen4.5/5 (3)

- Mastering Linux Security and Hardening - Second Edition: Protect your Linux systems from intruders, malware attacks, and other cyber threats, 2nd EditionVon EverandMastering Linux Security and Hardening - Second Edition: Protect your Linux systems from intruders, malware attacks, and other cyber threats, 2nd EditionNoch keine Bewertungen

- React.js for A Beginners Guide : From Basics to Advanced - A Comprehensive Guide to Effortless Web Development for Beginners, Intermediates, and ExpertsVon EverandReact.js for A Beginners Guide : From Basics to Advanced - A Comprehensive Guide to Effortless Web Development for Beginners, Intermediates, and ExpertsNoch keine Bewertungen

- Azure DevOps Engineer: Exam AZ-400: Azure DevOps Engineer: Exam AZ-400 Designing and Implementing Microsoft DevOps SolutionsVon EverandAzure DevOps Engineer: Exam AZ-400: Azure DevOps Engineer: Exam AZ-400 Designing and Implementing Microsoft DevOps SolutionsNoch keine Bewertungen

- Hello Swift!: iOS app programming for kids and other beginnersVon EverandHello Swift!: iOS app programming for kids and other beginnersNoch keine Bewertungen

- RHCSA Exam Pass: Red Hat Certified System Administrator Study GuideVon EverandRHCSA Exam Pass: Red Hat Certified System Administrator Study GuideNoch keine Bewertungen

![iPhone Unlocked for the Non-Tech Savvy: Color Images & Illustrated Instructions to Simplify the Smartphone Use for Beginners & Seniors [COLOR EDITION]](https://imgv2-2-f.scribdassets.com/img/audiobook_square_badge/728318688/198x198/f3385cbfef/1714829744?v=1)