Beruflich Dokumente

Kultur Dokumente

Frisch-Waugh Theorem Example

Hochgeladen von

Franco ImperialOriginalbeschreibung:

Originaltitel

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

Frisch-Waugh Theorem Example

Hochgeladen von

Franco ImperialCopyright:

Verfügbare Formate

Example of Frisch-Waugh Theorem

The Frisch-Waugh theorem says that the multiple regression coefficient of any single

variable can also be obtained by first netting out the effect of other variable(s) in the

regression model from both the dependent variable and the independent variable.

^

1 = ( X 1 ' M 2 ' M 2 X 1 ) 1 X 1 ' M 2 ' M 2 y = ( X 1 ' X 1 ) 1 X 1 ' y ( X 1 ' X 1 ) 1 X 1 ' X 2 2

In practice this means regressing the residuals when y is regressed on X2 , (M2y), on the

residuals from when X1 is regressed on X2, (M2X1)

First regress the dependent variable on the X2 variable (in this case London)

. reg grosspay london

Source | SS df MS Number of obs = 12266

-------------+------------------------------ F( 1, 12264) = 18.73

Model | 2998066.02 1 2998066.02 Prob > F = 0.0000

Residual | 1.9629e+09 12264 160055.968 R-squared = 0.0015

-------------+------------------------------ Adj R-squared = 0.0014

Total | 1.9659e+09 12265 160287.359 Root MSE = 400.07

------------------------------------------------------------------------------

grosspay | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

london | 50.70394 11.7154 4.33 0.000 27.73992 73.66797

_cons | 232.5472 3.821297 60.86 0.000 225.0568 240.0375

------------------------------------------------------------------------------

. predict uhat1, resid

and save the residuals (named uhat1). This gives M2y

Next regress the X1 variable on the X2 variable and save these residuals (named uhat2).

This gives M2X1

. reg age london

Source | SS df MS Number of obs = 12266

-------------+------------------------------ F( 1, 12264) = 21.36

Model | 2681.55785 1 2681.55785 Prob > F = 0.0000

Residual | 1539528.39 12264 125.532322 R-squared = 0.0017

-------------+------------------------------ Adj R-squared = 0.0017

Total | 1542209.95 12265 125.740722 Root MSE = 11.204

------------------------------------------------------------------------------

age | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

london | -1.516403 .3280945 -4.62 0.000 -2.15952 -.8732865

_cons | 40.01296 .107017 373.89 0.000 39.80318 40.22273

------------------------------------------------------------------------------

. predict uhat2, resid

Now regress the residuals 2y on the residuals M2X1

. reg uhat1 uhat2

Source | SS df MS Number of obs = 12266

-------------+------------------------------ F( 1, 12264) = 2.96

Model | 473241.472 1 473241.472 Prob > F = 0.0855

Residual | 1.9625e+09 12264 160017.382 R-squared = 0.0002

-------------+------------------------------ Adj R-squared = 0.0002

Total | 1.9629e+09 12265 160042.92 Root MSE = 400.02

------------------------------------------------------------------------------

uhat1 | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

uhat2 | .5544311 .3223961 1.72 0.086 -.077516 1.186378

_cons | 2.02e-07 3.61187 0.00 1.000 -7.079833 7.079834

and check that this is the same as in the multiple regression (it is)

. reg grosspay age london

Source | SS df MS Number of obs = 12266

-------------+------------------------------ F( 2, 12263) = 10.85

Model | 3471307.55 2 1735653.78 Prob > F = 0.0000

Residual | 1.9625e+09 12263 160030.429 R-squared = 0.0018

-------------+------------------------------ Adj R-squared = 0.0016

Total | 1.9659e+09 12265 160287.359 Root MSE = 400.04

------------------------------------------------------------------------------

grosspay | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

age | .5544311 .3224092 1.72 0.086 -.0775417 1.186404

london | 51.54468 11.72466 4.40 0.000 28.5625 74.52687

_cons | 210.3628 13.45452 15.64 0.000 183.9898 236.7357

In practice you will hardly ever use the residual approach to obtain multiple regression

coefficients (one exception to this is if the X2 variables capture seasonal or time effects, in

which case you can think of the 2-step process as an alternative method of de-

seasonalising or detrending both dependent and control variables such as exhibit strong

trends or seasonality as in the road accidents data below). The usefulness of this exercise

is that it helps makes clearer the partialing out nature of multiple regression.

100000 90000

road accidents each quarter

70000 80000

60000

1984 1988 1992 1996 2000

1983.25 1987.25 1991.25 1995.25 1999.25

time

v

twoway (line acc time), xlabel(1983.25(4)2000) xmlabel(1984(4)2000) xline(1984 1988 1992) ytitle(road accidents

each quarter)

Another way to think abour partialling out is to recognise that the multivariate OLS estimate of any

single variable can always be written as

^ Cov( M 2 X k , Y )

k = (see lecture notes and exercise 1)

Var (M 2 X k )

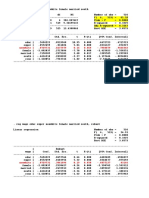

Consider an OLS regression of food share expenditure on total expenditure and age

use "C:\qea\food.dta", clear

The multivariate regression model is given by

. reg foodsh expnethsum age

Source | SS df MS Number of obs = 200

-------------+------------------------------ F( 2, 197) = 29.37

Model | 4290.50386 2 2145.25193 Prob > F = 0.0000

Residual | 14387.5993 197 73.0334989 R-squared = 0.2297

-------------+------------------------------ Adj R-squared = 0.2219

Total | 18678.1031 199 93.8598148 Root MSE = 8.546

------------------------------------------------------------------------------

foodsh | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

expnethsum | -.0128509 .0021607 -5.95 0.000 -.0171119 -.0085898

age | .1023938 .0418339 2.45 0.015 .019894 .1848935

_cons | 18.78057 2.657014 7.07 0.000 13.54072 24.02041

------------------------------------------------------------------------------

We are interested in understanding the estimated OLS coefficient on age in a

multiple regression

Partialling out the effect of total expenditure on the food share

. reg foodsh expnethsum

Source | SS df MS Number of obs = 200

-------------+------------------------------ F( 1, 198) = 51.46

Model | 3852.96953 1 3852.96953 Prob > F = 0.0000

Residual | 14825.1336 198 74.8744122 R-squared = 0.2063

-------------+------------------------------ Adj R-squared = 0.2023

Total | 18678.1031 199 93.8598148 Root MSE = 8.653

------------------------------------------------------------------------------

foodsh | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

expnethsum | -.0147015 .0020494 -7.17 0.000 -.018743 -.01066

_cons | 24.87616 .9376504 26.53 0.000 23.0271 26.72522

------------------------------------------------------------------------------

saving the residual

. predict yhat2 if e(sample), resid

Partialling out the effect of total expenditure on age

. reg age expnethsum

Source | SS df MS Number of obs = 200

-------------+------------------------------ F( 1, 198) = 27.63

Model | 5823.33649 1 5823.33649 Prob > F = 0.0000

Residual | 41731.6185 198 210.76575 R-squared = 0.1225

-------------+------------------------------ Adj R-squared = 0.1180

Total | 47554.955 199 238.969623 Root MSE = 14.518

------------------------------------------------------------------------------

age | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

expnethsum | -.0180738 .0034385 -5.26 0.000 -.0248545 -.0112931

_cons | 59.53094 1.573165 37.84 0.000 56.42863 62.63324

------------------------------------------------------------------------------

and saving this residual

. predict xhat2 if e(sample), resid

then regressing the first residual on the second (in a univariate model) we see

the estimated OLS estimate on age from the multiple regression above

. reg yhat2 xhat2

Source | SS df MS Number of obs = 200

-------------+------------------------------ F( 1, 198) = 6.02

Model | 437.534334 1 437.534334 Prob > F = 0.0150

Residual | 14387.5994 198 72.6646436 R-squared = 0.0295

-------------+------------------------------ Adj R-squared = 0.0246

Total | 14825.1338 199 74.4981597 Root MSE = 8.5244

------------------------------------------------------------------------------

yhat2 | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

xhat2 | .1023938 .0417281 2.45 0.015 .0201051 .1846824

_cons | 6.26e-09 .602763 0.00 1.000 -1.188659 1.188659

------------------------------------------------------------------------------

. predict ahat if e(sample)

(option xb assumed; fitted values)

. sort xhat2

Graphing this relationship

. two (scatter yhat2 xhat2) (line ahat xhat2)

30

20

10

0

-10

-20

-40 -20 0 20 40

Residuals

Residuals Fitted values

Das könnte Ihnen auch gefallen

- 4.2 Tests of Structural Changes: X y X yDokument8 Seiten4.2 Tests of Structural Changes: X y X ySaifullahJaspalNoch keine Bewertungen

- Homework 5Dokument5 SeitenHomework 5Sam GrantNoch keine Bewertungen

- DIAGNOSTIC TESTSDokument3 SeitenDIAGNOSTIC TESTSAlanazi AbdulsalamNoch keine Bewertungen

- Nu - Edu.kz Econometrics-I Assignment 5 Answer KeyDokument6 SeitenNu - Edu.kz Econometrics-I Assignment 5 Answer KeyAidanaNoch keine Bewertungen

- Solutions of Wooldridge LabDokument19 SeitenSolutions of Wooldridge LabSayed Arafat ZubayerNoch keine Bewertungen

- ECO311 StataDokument111 SeitenECO311 Stataсимона златкова100% (1)

- Chapter 4: Answer Key: Case Exercises Case ExercisesDokument9 SeitenChapter 4: Answer Key: Case Exercises Case ExercisesPriyaprasad PandaNoch keine Bewertungen

- Regression analysis of factors impacting economic growthDokument7 SeitenRegression analysis of factors impacting economic growthHector MillaNoch keine Bewertungen

- Autocorrelation Graphs and TablesDokument4 SeitenAutocorrelation Graphs and Tablescosgapacyril21Noch keine Bewertungen

- Testing EndogeneityDokument3 SeitenTesting Endogeneitysharkbait_fbNoch keine Bewertungen

- Regression Analysis IntroductionDokument10 SeitenRegression Analysis IntroductionArslan TariqNoch keine Bewertungen

- Hasil Regress Mi Pengeluaran DummyDokument11 SeitenHasil Regress Mi Pengeluaran Dummypurnama mulia faribNoch keine Bewertungen

- Omitted Variable TestsDokument4 SeitenOmitted Variable TestsAndrew TandohNoch keine Bewertungen

- Lab 12Dokument13 SeitenLab 12Muhammad HuzaifaNoch keine Bewertungen

- Re: ST: Panel Data-FIXED, RANDOM EFFECTS and Hausman Test: Sindijul@msu - Edu Statalist@hsphsun2.harvard - EduDokument5 SeitenRe: ST: Panel Data-FIXED, RANDOM EFFECTS and Hausman Test: Sindijul@msu - Edu Statalist@hsphsun2.harvard - EduAdalberto Calsin SanchezNoch keine Bewertungen

- Heteroskedasticity Test AnswersDokument10 SeitenHeteroskedasticity Test AnswersRoger HughesNoch keine Bewertungen

- Correlation Analysis of Manufacturing Pharmacy Subject and Internship PerformanceDokument2 SeitenCorrelation Analysis of Manufacturing Pharmacy Subject and Internship PerformanceApril Mergelle LapuzNoch keine Bewertungen

- Tutorials2016s1 Week9 AnswersDokument4 SeitenTutorials2016s1 Week9 AnswersyizzyNoch keine Bewertungen

- Statistical Inference 1. Determinant of Salary of Law School GraduatesDokument3 SeitenStatistical Inference 1. Determinant of Salary of Law School GraduatesToulouse18Noch keine Bewertungen

- InterpretationDokument8 SeitenInterpretationvghNoch keine Bewertungen

- Ekonometrika TM9: Purwanto WidodoDokument44 SeitenEkonometrika TM9: Purwanto WidodoEmbun PagiNoch keine Bewertungen

- Auto Quarter DataDokument6 SeitenAuto Quarter DatavghNoch keine Bewertungen

- How To Evaluate and Check RegressionDokument4 SeitenHow To Evaluate and Check RegressionSuphachai SathiarmanNoch keine Bewertungen

- Answear 6Dokument2 SeitenAnswear 6Bayu GiriNoch keine Bewertungen

- Stata Result EbafDokument6 SeitenStata Result EbafRija DawoodNoch keine Bewertungen

- Question 09Dokument8 SeitenQuestion 09huzaifa anwarNoch keine Bewertungen

- Modelo ARMA JonathanDokument7 SeitenModelo ARMA JonathanJosé Tapia BvNoch keine Bewertungen

- Lecture 9 - Slides - Multiple Regression and Effect On Coefficients PDFDokument127 SeitenLecture 9 - Slides - Multiple Regression and Effect On Coefficients PDFSyed NoamanNoch keine Bewertungen

- Ak 3Dokument14 SeitenAk 3premheenaNoch keine Bewertungen

- Example of 2SLS and Hausman TestDokument4 SeitenExample of 2SLS and Hausman TestuberwudiNoch keine Bewertungen

- Econometrics Pset #1Dokument5 SeitenEconometrics Pset #1tarun singhNoch keine Bewertungen

- Auto StataDokument8 SeitenAuto Statacosgapacyril21Noch keine Bewertungen

- ECMT1020 - Week 06 WorkshopDokument4 SeitenECMT1020 - Week 06 Workshopperthwashington.j9t23Noch keine Bewertungen

- Nonwhite - .0729731 .4437979 0.16 0.869 - .7988879 .9448342Dokument11 SeitenNonwhite - .0729731 .4437979 0.16 0.869 - .7988879 .9448342TrầnMinhChâuNoch keine Bewertungen

- Modelo ProbabilidadDokument4 SeitenModelo Probabilidada292246Noch keine Bewertungen

- Stata Textbook Examples Introductory Econometrics by Jeffrey PDFDokument104 SeitenStata Textbook Examples Introductory Econometrics by Jeffrey PDFNicol Escobar HerreraNoch keine Bewertungen

- Instrumental Variables Stata Program and OutputDokument10 SeitenInstrumental Variables Stata Program and OutputBAYU KHARISMANoch keine Bewertungen

- Centeno - Alexander PSET2 LBYMET2 FinalDokument11 SeitenCenteno - Alexander PSET2 LBYMET2 FinalAlex CentenoNoch keine Bewertungen

- PE Test Compares Linear & Log-Linear ModelsDokument3 SeitenPE Test Compares Linear & Log-Linear ModelsrcretNoch keine Bewertungen

- Nu - Edu.kz Econometrics-I Assignment 7 Answer KeyDokument6 SeitenNu - Edu.kz Econometrics-I Assignment 7 Answer KeyAidanaNoch keine Bewertungen

- QM 4 Nonlinear Regression Functions 1Dokument37 SeitenQM 4 Nonlinear Regression Functions 1Darkasy EditsNoch keine Bewertungen

- Millsite-Retail 2-10-19Dokument4 SeitenMillsite-Retail 2-10-19CarlNoch keine Bewertungen

- ch2 CapmDokument4 Seitench2 CapmvghNoch keine Bewertungen

- Log Bebas EDA Mas BayuDokument11 SeitenLog Bebas EDA Mas BayuNasir AhmadNoch keine Bewertungen

- Heckman Selection ModelsDokument4 SeitenHeckman Selection ModelsrbmalasaNoch keine Bewertungen

- Stata 2Dokument11 SeitenStata 2Chu BundyNoch keine Bewertungen

- Econometria 2 Tarea 2Dokument11 SeitenEconometria 2 Tarea 2ARTURO JOSUE COYLA YNCANoch keine Bewertungen

- Hetero StataDokument2 SeitenHetero StataGucci Zamora DiamanteNoch keine Bewertungen

- SOCY7706: Longitudinal Data Analysis TechniquesDokument12 SeitenSOCY7706: Longitudinal Data Analysis TechniquesHe HNoch keine Bewertungen

- Saad AkhtarDokument48 SeitenSaad AkhtarAmmarNoch keine Bewertungen

- HeteroskedasticityDokument12 SeitenHeteroskedasticityMNoch keine Bewertungen

- Practical software training in econometricsDokument41 SeitenPractical software training in econometricsahma ahmaNoch keine Bewertungen

- Autocorrelation STATA ResultsDokument18 SeitenAutocorrelation STATA Resultscosgapacyril21Noch keine Bewertungen

- 2SLS Klein Macro PDFDokument4 Seiten2SLS Klein Macro PDFNiken DwiNoch keine Bewertungen

- Financial Econometrics Tutorial Exercise 4 SolutionsDokument5 SeitenFinancial Econometrics Tutorial Exercise 4 SolutionsParidhee ToshniwalNoch keine Bewertungen

- ASEU TEACHERFILE WEB 3936427542519593610.ppt 1608221424Dokument9 SeitenASEU TEACHERFILE WEB 3936427542519593610.ppt 1608221424Emiraslan MhrrovNoch keine Bewertungen

- Chapter 4 Ramsey's Reset Test of Functional Misspecification (EC220)Dokument10 SeitenChapter 4 Ramsey's Reset Test of Functional Misspecification (EC220)fayz_mtjkNoch keine Bewertungen

- Factors Affecting Birth WeightDokument14 SeitenFactors Affecting Birth WeightNghĩa Nguyễn Thị MinhNoch keine Bewertungen

- ch2 WagesDokument5 Seitench2 WagesvghNoch keine Bewertungen

- Allen Latta's Blog On Private Equity - Allen Latta's Thoughts On Private Equity, EtcDokument7 SeitenAllen Latta's Blog On Private Equity - Allen Latta's Thoughts On Private Equity, EtcFranco ImperialNoch keine Bewertungen

- Types of IntangiblesDokument12 SeitenTypes of IntangiblesFranco ImperialNoch keine Bewertungen

- Dividend S Policy: - Legal RestrictionsDokument1 SeiteDividend S Policy: - Legal RestrictionsFranco ImperialNoch keine Bewertungen

- Simulating Stock Prices Using Geometric Brownian Motion - EvidenceDokument27 SeitenSimulating Stock Prices Using Geometric Brownian Motion - EvidenceFranco ImperialNoch keine Bewertungen

- In This Chapter, Look For The Answers To These QuestionsDokument8 SeitenIn This Chapter, Look For The Answers To These QuestionsFranco ImperialNoch keine Bewertungen

- EuroDokument125 SeitenEuroFranco ImperialNoch keine Bewertungen

- Frisch-Waugh Theorem ExampleDokument4 SeitenFrisch-Waugh Theorem ExampleFranco ImperialNoch keine Bewertungen

- Frisch-Waugh Theorem ExampleDokument4 SeitenFrisch-Waugh Theorem ExampleFranco ImperialNoch keine Bewertungen

- Ateneo de Manila University: John Gokongwei School of ManagementDokument4 SeitenAteneo de Manila University: John Gokongwei School of ManagementFranco ImperialNoch keine Bewertungen

- The Pricing of Options and Corporate Liabilities PDFDokument19 SeitenThe Pricing of Options and Corporate Liabilities PDFFranco ImperialNoch keine Bewertungen

- Simulating Stock Prices Using Geometric Brownian Motion - EvidenceDokument27 SeitenSimulating Stock Prices Using Geometric Brownian Motion - EvidenceFranco ImperialNoch keine Bewertungen

- Transformations of VariablesDokument5 SeitenTransformations of VariablesFranco ImperialNoch keine Bewertungen

- Monopolistic Competition: Comparing Perfect & Monop. Competition Comparing Monopoly & Monop. CompetitionDokument4 SeitenMonopolistic Competition: Comparing Perfect & Monop. Competition Comparing Monopoly & Monop. CompetitionFranco ImperialNoch keine Bewertungen

- Consumers, Producers, and The Efficiency of Markets: Welfare Economics Willingness To Pay (WTP)Dokument10 SeitenConsumers, Producers, and The Efficiency of Markets: Welfare Economics Willingness To Pay (WTP)Franco ImperialNoch keine Bewertungen

- The Theory of Consumer Choice: in This Chapter, Look For The Answers To These QuestionsDokument10 SeitenThe Theory of Consumer Choice: in This Chapter, Look For The Answers To These QuestionsFranco ImperialNoch keine Bewertungen

- Lecture 06MP 10-11 1sDokument10 SeitenLecture 06MP 10-11 1sFranco ImperialNoch keine Bewertungen

- Lecture 09MP 10-11 1s PDFDokument7 SeitenLecture 09MP 10-11 1s PDFFranco ImperialNoch keine Bewertungen

- What Is Poverty Threshold? What Is Food Threshold?: Poverty and Inequality: A National ConcernDokument8 SeitenWhat Is Poverty Threshold? What Is Food Threshold?: Poverty and Inequality: A National ConcernFranco ImperialNoch keine Bewertungen

- Production Function Marginal Costs Short Run Long RunDokument8 SeitenProduction Function Marginal Costs Short Run Long RunFranco ImperialNoch keine Bewertungen

- Lecture 12MP 10-11 1s PDFDokument4 SeitenLecture 12MP 10-11 1s PDFFranco ImperialNoch keine Bewertungen

- Lecture 11MP 10-11 1sDokument7 SeitenLecture 11MP 10-11 1sFranco ImperialNoch keine Bewertungen

- Supply, Demand, and Government Policies: in This Chapter, Look For The Answers To These QuestionsDokument6 SeitenSupply, Demand, and Government Policies: in This Chapter, Look For The Answers To These QuestionsFranco ImperialNoch keine Bewertungen

- Monopoly: in This Chapter, Look For The Answers To These QuestionsDokument6 SeitenMonopoly: in This Chapter, Look For The Answers To These QuestionsFranco ImperialNoch keine Bewertungen

- The Theory of Consumer Choice: in This Chapter, Look For The Answers To These QuestionsDokument10 SeitenThe Theory of Consumer Choice: in This Chapter, Look For The Answers To These QuestionsFranco ImperialNoch keine Bewertungen

- CarDokument5 SeitenCarFranco ImperialNoch keine Bewertungen

- Lecture 05MP 10-11 1sDokument6 SeitenLecture 05MP 10-11 1sFranco ImperialNoch keine Bewertungen

- Firms in Competitive Markets: Introduction: A Scenario Characteristics of Perfect CompetitionDokument7 SeitenFirms in Competitive Markets: Introduction: A Scenario Characteristics of Perfect CompetitionFranco ImperialNoch keine Bewertungen

- Oligopoly: Between Monopoly and CompetitionDokument7 SeitenOligopoly: Between Monopoly and CompetitionFranco ImperialNoch keine Bewertungen

- Monopolistic Competition: Comparing Perfect & Monop. Competition Comparing Monopoly & Monop. CompetitionDokument4 SeitenMonopolistic Competition: Comparing Perfect & Monop. Competition Comparing Monopoly & Monop. CompetitionFranco ImperialNoch keine Bewertungen

- Botnets: The Dark Side of Cloud Computing: by Angelo Comazzetto, Senior Product ManagerDokument5 SeitenBotnets: The Dark Side of Cloud Computing: by Angelo Comazzetto, Senior Product ManagerDavidLeeCoxNoch keine Bewertungen

- Astera Event CatalogDokument19 SeitenAstera Event CatalogedgarNoch keine Bewertungen

- Applause Whitepaper Embracing Agile PDFDokument13 SeitenApplause Whitepaper Embracing Agile PDFMark BryantNoch keine Bewertungen

- How To Make Digital Clock in ExcelDokument10 SeitenHow To Make Digital Clock in ExcelSaidNoch keine Bewertungen

- 7code PresentationDokument14 Seiten7code PresentationNicuMardariNoch keine Bewertungen

- Sample Resume For Radio InternshipDokument7 SeitenSample Resume For Radio Internshipkpcvpkjbf100% (2)

- REMOTE LAB EXAM INSTRUCTIONSDokument32 SeitenREMOTE LAB EXAM INSTRUCTIONSManoranjan PandaNoch keine Bewertungen

- UWRC Saturn-Megadrive ManualDokument5 SeitenUWRC Saturn-Megadrive ManualMiguel Arcangel Mejias MarreroNoch keine Bewertungen

- 2022 Oct - CSC128 Quiz - QDokument4 Seiten2022 Oct - CSC128 Quiz - QAidil Ja'afarNoch keine Bewertungen

- Flow Chart SymbolsDokument7 SeitenFlow Chart SymbolsAbhishekNoch keine Bewertungen

- Android and Bluetooth Based Voice Controlled Wireless Smart Home SystemDokument2 SeitenAndroid and Bluetooth Based Voice Controlled Wireless Smart Home SystemAshley MartinezNoch keine Bewertungen

- Evolution of AiDokument3 SeitenEvolution of AiromohacNoch keine Bewertungen

- 20.1Dokument25 Seiten20.1Hoson AkNoch keine Bewertungen

- Solutions Partner Benefits GuideDokument23 SeitenSolutions Partner Benefits GuiderabihNoch keine Bewertungen

- Comp Net Amsterdam 2000Dokument284 SeitenComp Net Amsterdam 2000GeraldoadriNoch keine Bewertungen

- Fingerprint Door Lock Using Arduino: Technical ReportDokument54 SeitenFingerprint Door Lock Using Arduino: Technical ReportAbbaNoch keine Bewertungen

- Mathematics For Ntse Questions - MMDokument127 SeitenMathematics For Ntse Questions - MMKavyansh NagNoch keine Bewertungen

- Word Web - Semantic MapDokument1 SeiteWord Web - Semantic Mapmaryclaire comediaNoch keine Bewertungen

- STD 22 Compact Gyro Compass and STD 22 Gyro Compass: Type 110 - 233 NG002Dokument50 SeitenSTD 22 Compact Gyro Compass and STD 22 Gyro Compass: Type 110 - 233 NG002KOSTAS THOMASNoch keine Bewertungen

- Arduino Nano DHT11 Temperature and Humidity VisualDokument12 SeitenArduino Nano DHT11 Temperature and Humidity Visualpower systemNoch keine Bewertungen

- A Brief Introduction To Apache SparkDokument10 SeitenA Brief Introduction To Apache SparkVenkatesh NarisettyNoch keine Bewertungen

- 2.marketing Analytics Notes BookDokument231 Seiten2.marketing Analytics Notes BookSandeep KaurNoch keine Bewertungen

- 161 PDFsam Book Adams-Tutorial-3rdEditionDokument192 Seiten161 PDFsam Book Adams-Tutorial-3rdEditionShevon FernandoNoch keine Bewertungen

- MDOP Datasheet DRTDokument2 SeitenMDOP Datasheet DRTmichaelknightukNoch keine Bewertungen

- 7011B-34B Im 73892-012 PDFDokument510 Seiten7011B-34B Im 73892-012 PDFVaishali LakhnotaNoch keine Bewertungen

- Guidelines for filling ABAP code review checklistDokument42 SeitenGuidelines for filling ABAP code review checklistSunil UndarNoch keine Bewertungen

- x530L-52GPX: Stackable Intelligent Layer 3 SwitchDokument7 Seitenx530L-52GPX: Stackable Intelligent Layer 3 SwitchnisarahmedgfecNoch keine Bewertungen

- Multiple Choice Quiz 2Dokument5 SeitenMultiple Choice Quiz 2Shahid Ali Murtza0% (1)

- جمال علوي السيرة الذاتية Gamal Ahmed Ahmed Abdullah Alawi CVDokument16 Seitenجمال علوي السيرة الذاتية Gamal Ahmed Ahmed Abdullah Alawi CVdistNoch keine Bewertungen

- FIST For Engineering SciencesDokument46 SeitenFIST For Engineering Sciencesripsearch92Noch keine Bewertungen