REMO review ---- Binod Kumar

Strong points:

The paper presents a redundant-execution approach to fault tolerance with minimal overhead. Errors are detected by exploiting both spatial and temporal redundancy: spatial redundancy is provided by a simple in-order checker module, and temporal redundancy by re-computation on the original functional units. Although the paper lacks novelty, since this idea has already been attempted in one form or another, it contributes by keeping the power, area and performance overheads to a minimum. Because re-execution of instructions is initiated only after the dependencies between them have been resolved, the redundant execution is expected to incur a very low performance penalty. The authors claim that the proposed technique has very low detection latency and a degree of fault coverage comparable to a full dual modular redundant architecture. The key results of the proposed technique are an area increase of only 0.4%, a power overhead of about 9%, and a negligible performance penalty in fault-free runs (when no recovery is needed).
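The low-penalty argument rests on the checker re-executing instructions whose operand values are already known. A minimal sketch of that idea follows; `RetiredOp`, `OPS` and `check_retired` are hypothetical names chosen for illustration, not taken from the paper, and the model assumes each retiring instruction carries its captured operand values and the main core's result. Because no recomputation waits on another, every check is independent of the others.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

MASK32 = 0xFFFFFFFF

# Hypothetical ALU operations shared by the main core and the checker.
OPS: Dict[str, Callable[[int, int], int]] = {
    "add": lambda a, b: (a + b) & MASK32,
    "sub": lambda a, b: (a - b) & MASK32,
    "and": lambda a, b: a & b,
    "xor": lambda a, b: a ^ b,
}


@dataclass
class RetiredOp:
    """Hypothetical record of one instruction as it retires from the main core."""
    opcode: str
    src1: int    # operand value captured when the main core executed the op
    src2: int    # operand value captured when the main core executed the op
    result: int  # result produced by the main out-of-order core


def check_retired(ops: List[RetiredOp]) -> List[int]:
    """Recompute every retired op from its captured operands and return the
    indices where the recomputed value disagrees with the main core's result.

    Each check reads only its own record, so no check waits on another one;
    a hardware checker could therefore verify several ops per cycle.
    """
    return [i for i, op in enumerate(ops)
            if OPS[op.opcode](op.src1, op.src2) != op.result]


if __name__ == "__main__":
    trace = [RetiredOp("add", 3, 4, 7),
             RetiredOp("xor", 7, 1, 6),
             RetiredOp("sub", 6, 2, 4)]
    print(check_retired(trace))   # [] for the fault-free run
    trace[1].result ^= 1 << 5     # single-bit flip in the main core's result
    print(check_retired(trace))   # [1]
```

A real checker would also verify loads, stores and branch outcomes; the sketch covers only simple ALU operations.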

Weak points:

It is a common issue with many fault-tolerant architecture papers that they do not perform fault-injection experiments to evaluate their proposal, and this paper suffers from the same problem. The authors claim that "The simulation results show almost full soft error coverage...". Without a fault-injection experiment this claim is not very convincing, even though the authors discuss three cases of fault occurrence in Section IV. The assumption that the instruction decoder lies outside the SoR (sphere of replication) means that errors in the decoder are not covered by the redundancy and can have a severe impact. How does fault recovery proceed in such a scenario? The authors consider fault coverage only for single bit flips. Although the single-bit-flip model is usually sufficient for soft errors, the authors should still comment on how the architecture behaves under double or triple bit flips.
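The kind of fault-injection experiment asked for above can be sketched as a small statistical campaign. The model is a hypothetical toy, not REMO itself: a 32-bit `add` is computed by a main copy and a redundant copy, and one bit flip is injected either into one copy's result (inside the SoR) or into an operand before it reaches both copies (standing in for a decoder fault outside the SoR). The site names, trial count and seed are all assumptions.

```python
import random
from typing import Dict, Tuple

WIDTH = 32
MASK = (1 << WIDTH) - 1


def add(a: int, b: int) -> int:
    """The protected operation: a 32-bit wrap-around add."""
    return (a + b) & MASK


def inject_once(rng: random.Random, site: str) -> Tuple[bool, bool]:
    """Inject one single-bit flip at `site`; return (detected, corrupted).

    site == "result":  flip a bit of the main copy's result (inside the SoR).
    site == "operand": flip a bit of an operand before it is handed to both
                       copies, as a decoder fault outside the SoR would.
    """
    a, b = rng.getrandbits(WIDTH), rng.getrandbits(WIDTH)
    golden = add(a, b)
    flip = 1 << rng.randrange(WIDTH)
    if site == "result":
        main, redundant = add(a, b) ^ flip, add(a, b)
    elif site == "operand":
        bad_a = a ^ flip              # both copies consume the same bad operand
        main, redundant = add(bad_a, b), add(bad_a, b)
    else:
        raise ValueError(f"unknown injection site: {site}")
    return main != redundant, main != golden


def campaign(site: str, trials: int = 10_000, seed: int = 1) -> Dict[str, float]:
    """Run `trials` independent injections and report outcome fractions."""
    rng = random.Random(seed)
    detected = silent = 0
    for _ in range(trials):
        was_detected, was_corrupted = inject_once(rng, site)
        if was_detected:
            detected += 1
        elif was_corrupted:
            silent += 1               # wrong output that the comparison missed
    return {"detected": detected / trials, "silent": silent / trials}


if __name__ == "__main__":
    for site in ("result", "operand"):
        print(site, campaign(site))
```

In this toy every result-site flip is detected and every operand-site flip silently corrupts the output, which illustrates why a decoder outside the SoR needs an explicit argument. A real campaign would inject into RTL or microarchitectural state and classify outcomes as masked, detected, or silent data corruption.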

Disagreement:

From a performance perspective, reusing the same functional units for temporal redundancy may not always help when programs contain a large fraction of floating-point instructions, because the original and the redundant executions then compete for the same (typically fewer and longer-latency) floating-point units. The assumption that memory state can be recovered to a checkpoint taken as far back as 1 billion instructions is a very optimistic one. The authors state that "A single bit flip in any of the field of ROB would result in incorrect result of either the verifier or the main OOO processor but not both." For double-bit flips, however, an incorrect result may appear in both. How are such cases handled?
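The single- versus double-flip argument can be checked exhaustively under a simplified, hypothetical model: the main OOO core and the verifier each read their own `width`-bit copy of a ROB field, and the comparison misses an error only when both copies end up identical but wrong. The field width, the stored value and the function names are assumptions made for illustration.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def flip_sites(width: int) -> List[Tuple[int, int]]:
    """All (copy, bit) positions: copy 0 is read by the main core, copy 1 by the verifier."""
    return [(copy, bit) for copy in (0, 1) for bit in range(width)]


def outcome(value: int, flips) -> str:
    """Apply the given bit flips to two copies of `value` and classify the result."""
    copies = [value, value]
    for copy, bit in flips:
        copies[copy] ^= 1 << bit
    if copies[0] != copies[1]:
        return "detected"            # main core and verifier disagree
    if copies[0] != value:
        return "escaped"             # both wrong in the same way
    return "masked"                  # flips cancelled out


def tally(width: int = 8, value: int = 0xA5, flips_per_trial: int = 1) -> Dict[str, int]:
    """Exhaustively enumerate every set of distinct flip sites of the given size."""
    counts = {"detected": 0, "escaped": 0, "masked": 0}
    for flips in combinations(flip_sites(width), flips_per_trial):
        counts[outcome(value, flips)] += 1
    return counts


if __name__ == "__main__":
    print("single:", tally(flips_per_trial=1))
    print("double:", tally(flips_per_trial=2))
```

For `width = 8` every single flip is detected, while 8 of the 120 double flips (those hitting the same bit in both copies) escape, a fraction of 1/(2*width - 1). These numbers are a property of this toy model only, not of REMO.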

Suggestions for improvement:

The authors should have experimented with different replay buffer sizes and reported their impact on the performance penalty. Fault-injection experiments should be performed to estimate fault coverage accurately. A quantitative comparison with state-of-the-art schemes is also necessary. Even if the comparison is made only with DIVA (which uses a complete in-order core as the verifier), the authors could bring out the contribution of the time-replay part alone to fault tolerance.
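A replay-buffer sensitivity study could start from a simple queueing model. This is a hypothetical sketch, not the paper's simulator: the main core tries to push retired instructions into a replay buffer of `buffer_size` entries, the checker drains at most `drain_per_cycle` entries per cycle, and a cycle counts as a stall whenever some retired instructions cannot be buffered. The bursty trace is made up.

```python
from typing import Dict, List


def stall_cycles(retire_trace: List[int], buffer_size: int, drain_per_cycle: int) -> int:
    """Count cycles in which the main core cannot hand all retired
    instructions to the replay buffer (hypothetical queueing model)."""
    occupancy = 0  # entries waiting in the replay buffer for the checker
    pending = 0    # retired instructions the core could not yet buffer
    stalls = 0
    for retiring in retire_trace:
        occupancy -= min(occupancy, drain_per_cycle)   # checker frees entries first
        pending += retiring
        accepted = min(pending, buffer_size - occupancy)
        occupancy += accepted
        pending -= accepted
        if pending:
            stalls += 1                                 # retirement blocked this cycle
    return stalls


def sweep(sizes: List[int], retire_trace: List[int], drain_per_cycle: int) -> Dict[int, int]:
    """Stall cycles for each candidate replay buffer size."""
    return {size: stall_cycles(retire_trace, size, drain_per_cycle) for size in sizes}


if __name__ == "__main__":
    # Made-up bursty trace: 4 instructions/cycle for 4 cycles, then 4 idle cycles.
    trace = [4, 4, 4, 4, 0, 0, 0, 0] * 2
    print(sweep([2, 4, 8, 16], trace, drain_per_cycle=2))
```

With this trace the stall count falls from 14 cycles at 2 entries to 0 at 16 entries, even though the average retire rate equals the drain rate; burstiness, not average throughput, drives the required buffer size in this model.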

Points which are not clear:

How do the authors calculate or estimate that the checkpoint overhead ranges from 30 to 50 cycles? For temporal redundancy in the case "A single bit flips in FU", the authors claim that "Within this time span even multi-cycle fault is expected to decay, ...". What is the reasoning behind that? How is performance affected if the transient fault has not decayed?
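One way to frame the question about fault decay is to compare the fault's duration with the gap between the original execution and its replay on the same functional unit. The sketch below assumes, hypothetically and pessimistically, that a transient fault active during both executions corrupts them identically, so the comparison cannot see it; the function names and cycle numbers are illustrative only.

```python
def fault_active(t: int, start: int, duration: int) -> bool:
    """True if a transient fault on the functional unit is active at cycle t."""
    return start <= t < start + duration


def classify(fault_start: int, duration: int, t_exec: int, gap: int) -> str:
    """Classify one instruction executed at `t_exec` and replayed `gap` cycles
    later on the same functional unit. Pessimistic assumption: a fault that is
    active during both executions corrupts them identically."""
    first = fault_active(t_exec, fault_start, duration)
    second = fault_active(t_exec + gap, fault_start, duration)
    if first and second:
        return "escaped"      # both copies agree on the same wrong value
    if first or second:
        return "detected"     # exactly one copy is corrupted
    return "unaffected"


if __name__ == "__main__":
    gap = 10
    for duration in (1, 5, 10, 11, 20):
        print(duration, classify(fault_start=0, duration=duration, t_exec=0, gap=gap))
```

Under this model a fault that starts at the original execution is caught only if it lasts at most `gap` cycles, so a claim that faults "decay" within the time span amounts to assuming such a bound on fault duration.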

Points to be discussed in class:

Are the read ports of the ROB and ARF sufficient for the verifier's accesses? This doubt arises because, during re-execution, the verifier reads its operands from the ROB and ARF in addition to the reads made by the main pipeline.
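The read-port question can be made concrete with a per-cycle count. In this hypothetical sketch the main pipeline has priority on `ports` shared read ports, the verifier's operand reads use whatever ports remain, and unserved verifier reads are deferred to later cycles; the policy, the function name and the read counts are assumptions.

```python
from typing import List, Tuple


def verifier_port_stalls(main_reads: List[int], verifier_reads: List[int],
                         ports: int) -> Tuple[int, int]:
    """Return (cycles with unserved verifier reads, reads still unserved at the end).

    Hypothetical policy: the main pipeline always gets its reads first and the
    verifier uses whatever read ports remain in that cycle.
    """
    backlog = 0
    short_cycles = 0
    for main, verifier in zip(main_reads, verifier_reads):
        backlog += verifier                 # new verifier reads join the queue
        free = max(0, ports - main)         # ports left after the main pipeline
        backlog -= min(backlog, free)
        if backlog:
            short_cycles += 1
    return short_cycles, backlog


if __name__ == "__main__":
    main_reads = [4, 4, 2, 0]
    verifier_reads = [2, 2, 2, 2]
    print(verifier_port_stalls(main_reads, verifier_reads, ports=4))
    print(verifier_port_stalls(main_reads, verifier_reads, ports=6))
```

Feeding per-cycle read counts from a simulator into such a model would show directly whether extra read ports, or a delay on the verifier side, are needed.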

The paper states that checkpoints are taken at intervals of one billion instructions. While it is certainly possible to restore architectural state that far back, restoring memory that far back may not be feasible.
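A back-of-the-envelope estimate shows why memory is the worrying part. Assuming, hypothetically, that 10% of instructions are stores and that each undo-log entry holds an 8-byte address plus 8 bytes of old data, a naive log that records every store needs about 1.6 GB per billion-instruction interval, whereas a register checkpoint of, say, 64 eight-byte registers is only 512 bytes. Logging only the first write to each address per interval bounds the log by the written footprint instead. All parameters and function names below are assumptions.

```python
from typing import Iterable

ENTRY_BYTES = 16  # assumed: 8-byte address + 8 bytes of old data


def naive_undo_log_bytes(interval_instructions: int, stores_per_100: int,
                         entry_bytes: int = ENTRY_BYTES) -> int:
    """One log entry per store over the whole checkpoint interval."""
    stores = interval_instructions * stores_per_100 // 100
    return stores * entry_bytes


def first_write_log_bytes(store_addresses: Iterable[int],
                          entry_bytes: int = ENTRY_BYTES) -> int:
    """Log only the first write to each address within the interval."""
    return len(set(store_addresses)) * entry_bytes


def register_checkpoint_bytes(num_regs: int = 64, reg_bytes: int = 8) -> int:
    """Architectural register state, tiny by comparison."""
    return num_regs * reg_bytes


if __name__ == "__main__":
    print(naive_undo_log_bytes(1_000_000_000, stores_per_100=10))  # 1600000000
    print(register_checkpoint_bytes())                             # 512
    print(first_write_log_bytes([0, 8, 0, 16, 8]))                 # 48
```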

The recovery mechanism should be explained in more detail.

Das könnte Ihnen auch gefallen

- ServerAdmin v10.6Dokument197 SeitenServerAdmin v10.6कमल कुलश्रेष्ठNoch keine Bewertungen

- Cisco Next-Generation Security Solutions All-In-One Cisco ASA Firepower Services, NGIPS, and AMPDokument547 SeitenCisco Next-Generation Security Solutions All-In-One Cisco ASA Firepower Services, NGIPS, and AMProdion86% (7)

- ClearCase Concepts GuideDokument194 SeitenClearCase Concepts Guideapi-3728519100% (2)

- Column EfficiencyDokument8 SeitenColumn Efficiencynebulakers100% (1)

- C++ Programming ExercisesDokument6 SeitenC++ Programming Exercisesragunath32527117Noch keine Bewertungen

- CRC CalculationDokument8 SeitenCRC CalculationsuneeldvNoch keine Bewertungen

- Mms PaperDokument14 SeitenMms Papernom3formoiNoch keine Bewertungen

- Recvf BinodKumarDokument2 SeitenRecvf BinodKumarBINOD KUMARNoch keine Bewertungen

- Temperature Hotspot Than in A Conventional Design. This Is Not Always True As in ThisDokument2 SeitenTemperature Hotspot Than in A Conventional Design. This Is Not Always True As in ThisBINOD KUMARNoch keine Bewertungen

- Dependency-Based Automatic Parallelization of Java CodeDokument13 SeitenDependency-Based Automatic Parallelization of Java CodeGeorge-Alexandru IoanaNoch keine Bewertungen

- Backwards-Compatible Bounds Checking For Arrays and Pointers in C ProgramsDokument14 SeitenBackwards-Compatible Bounds Checking For Arrays and Pointers in C Programspix3l_2Noch keine Bewertungen

- A Study On Rapidly-Growing Random TreesDokument3 SeitenA Study On Rapidly-Growing Random TreeskfuScribdNoch keine Bewertungen

- SPE-182704-MS - A Novel IPR Calculation Technique To Reduce Oscillations in Time-Lagged Network-Reservoir Coupled Modeling Using Analytical Scaling and Fast Marching Method PDFDokument12 SeitenSPE-182704-MS - A Novel IPR Calculation Technique To Reduce Oscillations in Time-Lagged Network-Reservoir Coupled Modeling Using Analytical Scaling and Fast Marching Method PDFDenis GontarevNoch keine Bewertungen

- In-Line Interrupt Handling and Lock-Up Free Translation Lookaside Buffers (TLBS)Dokument16 SeitenIn-Line Interrupt Handling and Lock-Up Free Translation Lookaside Buffers (TLBS)snathickNoch keine Bewertungen

- Missing Topic of PCDokument4 SeitenMissing Topic of PCHarseerat SidhuNoch keine Bewertungen

- Region-Based Compilation: An Introduction to a New TechniqueDokument11 SeitenRegion-Based Compilation: An Introduction to a New Techniqueayesha_princessNoch keine Bewertungen

- jucs-29-8-892_article-94462Dokument19 Seitenjucs-29-8-892_article-94462Mohamed EL GHAZOUANINoch keine Bewertungen

- Compile-Time Stack Requirements Analysis With GCCDokument13 SeitenCompile-Time Stack Requirements Analysis With GCCapi-27351105100% (2)

- R2 - On Tolerating Faults in Naturally Redundant AlgorithmsDokument10 SeitenR2 - On Tolerating Faults in Naturally Redundant AlgorithmsN EMNoch keine Bewertungen

- Assembly Line Balancing Literature ReviewDokument5 SeitenAssembly Line Balancing Literature Reviewaflstquqx100% (1)

- A Rapid Robust Fault Detection Algorithm For Flight Control ReconfigurationDokument290 SeitenA Rapid Robust Fault Detection Algorithm For Flight Control ReconfigurationAliNoch keine Bewertungen

- Cprog Report CA-2Dokument10 SeitenCprog Report CA-2TitaniumNoch keine Bewertungen

- Floating-Point To Fixed-Point Code Conversion With Variable Trade-Off Between Computational Complexity and Accuracy LossDokument6 SeitenFloating-Point To Fixed-Point Code Conversion With Variable Trade-Off Between Computational Complexity and Accuracy LossMohan RajNoch keine Bewertungen

- LQR ThesisDokument7 SeitenLQR Thesisstephaniemoorelittlerock100% (2)

- Driels Fundamentals of Manipulator CalibrationDokument342 SeitenDriels Fundamentals of Manipulator CalibrationKozhevnikovNoch keine Bewertungen

- Software Power Optimization Via Post-Link-Time Binary RewritingDokument7 SeitenSoftware Power Optimization Via Post-Link-Time Binary Rewritingsweet2shineNoch keine Bewertungen

- Instruction-Level Parallelism For Recongurable ComputingDokument10 SeitenInstruction-Level Parallelism For Recongurable ComputingThiyagu RajanNoch keine Bewertungen

- Solutions Ch4Dokument7 SeitenSolutions Ch4Sangam JindalNoch keine Bewertungen

- Downtime Data Collection and Analysis for Valid Simulation ModelsDokument7 SeitenDowntime Data Collection and Analysis for Valid Simulation Modelsram_babu_59Noch keine Bewertungen

- Code-Coverage Analysis: Structural Testing and Functional TestingDokument4 SeitenCode-Coverage Analysis: Structural Testing and Functional TestingKapildevNoch keine Bewertungen

- Volatiles Are MiscompiledDokument10 SeitenVolatiles Are Miscompileddnn38hdNoch keine Bewertungen

- 1Dokument11 Seiten1aiadaiadNoch keine Bewertungen

- Prototyping A Concurrency ModelDokument10 SeitenPrototyping A Concurrency ModelKornél IllésNoch keine Bewertungen

- 255 - For Ubicc - 255Dokument6 Seiten255 - For Ubicc - 255Ubiquitous Computing and Communication JournalNoch keine Bewertungen

- Compiler Technology For Future MicroprocessorsDokument52 SeitenCompiler Technology For Future MicroprocessorsBiswajit DasNoch keine Bewertungen

- Generating A Periodic Pattern For VLIWDokument18 SeitenGenerating A Periodic Pattern For VLIWanon_817055971Noch keine Bewertungen

- Repairable Cell-Based Chip Design For Simultaneous Yield Enhancement and Fault DiagnosisDokument6 SeitenRepairable Cell-Based Chip Design For Simultaneous Yield Enhancement and Fault DiagnosisYosi SoltNoch keine Bewertungen

- JavaScript Documentations AdjacentDokument9 SeitenJavaScript Documentations Adjacentgdub171Noch keine Bewertungen

- Crude Oil Fiscal Sampling (Class 5070)Dokument6 SeitenCrude Oil Fiscal Sampling (Class 5070)yoyokpurwantoNoch keine Bewertungen

- Bayesian, Linear-Time CommunicationDokument7 SeitenBayesian, Linear-Time CommunicationGathNoch keine Bewertungen

- Object-Oriented Programming Using Parallel Computing to Optimize Algorithm EfficiencyDokument6 SeitenObject-Oriented Programming Using Parallel Computing to Optimize Algorithm EfficiencypeNoch keine Bewertungen

- Inventory Levels On Throughput: The Effect of Work-In-Process and Lead TimesDokument6 SeitenInventory Levels On Throughput: The Effect of Work-In-Process and Lead TimesTino VelazquezNoch keine Bewertungen

- Twelve WaysDokument4 SeitenTwelve Waysde7yT3izNoch keine Bewertungen

- The Power of Ten - Rules For Developing Safety Critical CodeDokument6 SeitenThe Power of Ten - Rules For Developing Safety Critical CodeMassimo LanaNoch keine Bewertungen

- Sound Approximation of Programs with Elementary FunctionsDokument25 SeitenSound Approximation of Programs with Elementary FunctionsLovinf FlorinNoch keine Bewertungen

- 04 - Deep-Learning-Based Surrogate Model For Reservoir Simulation With Time-Varying Well ControlsDokument20 Seiten04 - Deep-Learning-Based Surrogate Model For Reservoir Simulation With Time-Varying Well ControlsAli NasserNoch keine Bewertungen

- Conflict-Aware Optimal Scheduling of Prioritised Code Clone RefactoringDokument20 SeitenConflict-Aware Optimal Scheduling of Prioritised Code Clone Refactoringer.manishgoyal.ghuddNoch keine Bewertungen

- Mf195 Preliminary ReportDokument4 SeitenMf195 Preliminary ReportqwertyNoch keine Bewertungen

- Accepted Manuscript: Swarm and Evolutionary Computation BASE DATADokument44 SeitenAccepted Manuscript: Swarm and Evolutionary Computation BASE DATANasir SayyedNoch keine Bewertungen

- A Case of RedundancyDokument4 SeitenA Case of RedundancyAnhar RisnumawanNoch keine Bewertungen

- Reference Paper 1Dokument10 SeitenReference Paper 1Sailesh KhandelwalNoch keine Bewertungen

- The Practical Problems of Assembly Line BalancingDokument2 SeitenThe Practical Problems of Assembly Line BalancingMartin PedrozaNoch keine Bewertungen

- Inlining of Virtual Methods: AbstractDokument21 SeitenInlining of Virtual Methods: AbstractfirefoxextslurperNoch keine Bewertungen

- Acs Iecr 5b00480 PDFDokument11 SeitenAcs Iecr 5b00480 PDFAvijit KarmakarNoch keine Bewertungen

- Chap2 SlidesDokument127 SeitenChap2 SlidesDhara RajputNoch keine Bewertungen

- File PDFDokument6 SeitenFile PDFKamran MalikNoch keine Bewertungen

- A Case For Voice-over-IPDokument4 SeitenA Case For Voice-over-IPMatheus Santa LucciNoch keine Bewertungen

- A VAR: Computer Package Optimal Multi-Objective Planning in Large Scale Power SystemsDokument7 SeitenA VAR: Computer Package Optimal Multi-Objective Planning in Large Scale Power SystemsWilliam Paul MacherlaNoch keine Bewertungen

- Itanium Processor SeminarDokument30 SeitenItanium Processor SeminarDanish KunrooNoch keine Bewertungen

- Scimakelatex 23918 NoneDokument6 SeitenScimakelatex 23918 None51pNoch keine Bewertungen

- Scientific Paper On BobDokument8 SeitenScientific Paper On Bobarule123Noch keine Bewertungen

- Scimakelatex 32961 Peter+Rabbit Gumby Carlos+danger Andrew+breitbart Vladimir+putinDokument3 SeitenScimakelatex 32961 Peter+Rabbit Gumby Carlos+danger Andrew+breitbart Vladimir+putinAMERICAblogNoch keine Bewertungen

- Schwartz - An Alternative Method For System SizingDokument4 SeitenSchwartz - An Alternative Method For System SizingRyanRamroopNoch keine Bewertungen

- 01 TutorialDokument5 Seiten01 TutorialBINOD KUMARNoch keine Bewertungen

- 2015fall EE669 Course Description PolicyDokument3 Seiten2015fall EE669 Course Description PolicyNehruBodaNoch keine Bewertungen

- Datacenter Simulation Methodologies: Getting Started with MARSSx86 and DRAMSim2Dokument52 SeitenDatacenter Simulation Methodologies: Getting Started with MARSSx86 and DRAMSim2BINOD KUMARNoch keine Bewertungen

- Illusionist BinodKumarDokument2 SeitenIllusionist BinodKumarBINOD KUMARNoch keine Bewertungen

- Strong Points:: REMO Review - Binod KumarDokument1 SeiteStrong Points:: REMO Review - Binod KumarBINOD KUMARNoch keine Bewertungen

- Pepsc BinodKumarDokument1 SeitePepsc BinodKumarBINOD KUMARNoch keine Bewertungen

- Heterogeneous PostClassDokument1 SeiteHeterogeneous PostClassBINOD KUMARNoch keine Bewertungen

- Mascar BinodKumarDokument1 SeiteMascar BinodKumarBINOD KUMARNoch keine Bewertungen

- Illusionist BinodKumarDokument2 SeitenIllusionist BinodKumarBINOD KUMARNoch keine Bewertungen

- Blank BinodKumarDokument1 SeiteBlank BinodKumarBINOD KUMARNoch keine Bewertungen

- List of Latex Mathematical Symbols - OeiswikiDokument6 SeitenList of Latex Mathematical Symbols - OeiswikiBINOD KUMARNoch keine Bewertungen

- Scheduling BinodKumarDokument1 SeiteScheduling BinodKumarBINOD KUMARNoch keine Bewertungen

- OpenSPARC Primer 1Dokument10 SeitenOpenSPARC Primer 1BINOD KUMARNoch keine Bewertungen

- 3.1. If Statements: 3.1.1. Simple ConditionsDokument24 Seiten3.1. If Statements: 3.1.1. Simple ConditionsBINOD KUMARNoch keine Bewertungen

- Automatic Line Breaking of Long Lines of Text? - Tex..Dokument3 SeitenAutomatic Line Breaking of Long Lines of Text? - Tex..BINOD KUMARNoch keine Bewertungen

- Python L CalculatorDokument7 SeitenPython L CalculatorBINOD KUMARNoch keine Bewertungen

- Two Dimensional Array in Python - Stack Over OwDokument4 SeitenTwo Dimensional Array in Python - Stack Over OwBINOD KUMARNoch keine Bewertungen

- Tests: Implicit CombinationalDokument8 SeitenTests: Implicit CombinationalBINOD KUMARNoch keine Bewertungen

- CCS TivaWareDokument26 SeitenCCS TivaWareJoginder YadavNoch keine Bewertungen

- Livro - Mastering Simulink PDFDokument9 SeitenLivro - Mastering Simulink PDFGusttavo Hosken Emerich Werner GrippNoch keine Bewertungen

- BluetoothDokument3 SeitenBluetoothJennifer PowellNoch keine Bewertungen

- Fryrender Swap 1.1 User ManualDokument11 SeitenFryrender Swap 1.1 User ManualrikhardbNoch keine Bewertungen

- 2 - 7 Multiprocessor ConfigurationsDokument15 Seiten2 - 7 Multiprocessor ConfigurationsrakkkkNoch keine Bewertungen

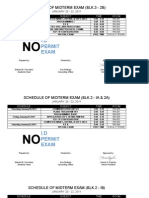

- Schedule of PRELIM ExamDokument4 SeitenSchedule of PRELIM Examdagz10131979Noch keine Bewertungen

- A Practical Guide To Adopting The Universal Verification Methodology (Uvm) Second EditionDokument2 SeitenA Practical Guide To Adopting The Universal Verification Methodology (Uvm) Second EditionDitzsu tzsuDiNoch keine Bewertungen

- Xerox Standard AccountingDokument2 SeitenXerox Standard AccountingDionisius BramedyaNoch keine Bewertungen

- SCJP Questions Set 1Dokument11 SeitenSCJP Questions Set 1rajuks1991Noch keine Bewertungen

- Java SwingDokument15 SeitenJava SwingSonamNoch keine Bewertungen

- Shell CommandsDokument4.346 SeitenShell Commandsgeek_sbcNoch keine Bewertungen

- A Challenge Called Boot Time - WitekioDokument5 SeitenA Challenge Called Boot Time - Witekiomrme44Noch keine Bewertungen

- ASIC Design and Verification Resume Govinda KeshavdasDokument1 SeiteASIC Design and Verification Resume Govinda Keshavdasgovindak14Noch keine Bewertungen

- DHTMLX GridDokument7 SeitenDHTMLX Gridrhinowar99Noch keine Bewertungen

- Configuring and Deploying The Dell Equal Logic Multipath I O Device Specific Module (DSM) in A PS Series SANDokument18 SeitenConfiguring and Deploying The Dell Equal Logic Multipath I O Device Specific Module (DSM) in A PS Series SANAndrew J CadueNoch keine Bewertungen

- Case Study-Face AnimationDokument5 SeitenCase Study-Face AnimationvigneshNoch keine Bewertungen

- Thesis On Sequential Quadratic Programming As A Method of OptimizationDokument24 SeitenThesis On Sequential Quadratic Programming As A Method of OptimizationNishant KumarNoch keine Bewertungen

- Survey On Stereovision Based Disparity Map Using Sum of Absolute DifferenceDokument2 SeitenSurvey On Stereovision Based Disparity Map Using Sum of Absolute DifferenceInternational Journal of Innovative Science and Research TechnologyNoch keine Bewertungen

- K Avian Pour 2017Dokument4 SeitenK Avian Pour 2017Adeel TahirNoch keine Bewertungen

- DBMS Mini ProjectDokument45 SeitenDBMS Mini ProjectKaushalya Munuswamy100% (1)

- Archmodels Vol.03 PDFDokument5 SeitenArchmodels Vol.03 PDFMarius DumitrescuNoch keine Bewertungen

- RredisDokument16 SeitenRredisJohn PerkinsonNoch keine Bewertungen

- Book Shop Management SystemDokument18 SeitenBook Shop Management SystemAkhil SinghNoch keine Bewertungen

- VNX DP Upgrading Disk FirmwareDokument5 SeitenVNX DP Upgrading Disk Firmwarevijayen123Noch keine Bewertungen

- CS8492-Database Management Systems-2017r Question BankDokument24 SeitenCS8492-Database Management Systems-2017r Question BankJai ShreeNoch keine Bewertungen