GPUImage Swift Tutorial

Uploaded by Rodrigo


I always find it more effective to learn new programming concepts by building projects using them, so I decided to do

the same for Apple's new Swift language. In the original Objective-C version of GPUImage, you had to manually subclass a filter type that

matched the number of input textures in your shader. The framework uses platform-independent types where possible. When alpha is 1, NewColor is

equal to TopColor. All that you need to do then is set your inputs to that filter like you

would any other, by adding your new BasicOperation as a target of an image source. Oddly, I've used GPUImage in other projects without any

issue, and can't think what I have done differently this time--I'm sure it's something very small that I'm missing, but I can't seem to put my finger on

it. Now if only you could do something to this photo to shoot it through the stratosphere. You can find Jack on LinkedIn or Twitter. Here you

treat alpha as a float between 0 and 1. Generics allow you to create type-specific classes and functions from a more general base, while preserving

types throughout. Now you're ready to blend Ghosty into your image, which makes this the perfect time to go over blending. The color on top uses

a formula and its alpha value to blend with the color behind it.

PyNewb, did you get it to work? I had to hand-edit the module's mapping file in order to get this to

work, but all of that is now incorporated into the GPUImage GitHub repository. I believe that this performance was achieved by downsampling the

image, blurring at a smaller radius, and then upsampling the blurred image. A popular optimization is to use premultiplied alpha. Each byte stores a

component, or channel. The setup for the new one is much easier to read, as a result. In terms of Core Graphics, this includes the current fill color,

stroke color, transforms, masks, where to draw and much more. There are still some effects that are better to do with Core Graphics. Now,

replace the first line in processImage: However, since their alpha value is 0, they are actually transparent. Gaussian and box blurs now

automatically downsample and upsample for performance. Compare that to the previous use of addTarget, and you can see how this new syntax

is easier to follow. To test this code, add this code to the bottom of processUsingPixels: I look forward to the day when all it takes are a few

"apt-get"s to pull the right packages, a few lines of code, and a "swift build" to get up and running with GPU-accelerated machine vision on a

Raspberry Pi. As you can see, ImageProcessor is a singleton object that calls -processUsingPixels: So how do you assign a pixel if it has a

background color and a "semi-transparent" color on top of it? Notice how the outer pixels have a brightness of 0, which means they should be

black. The older version of the framework will remain up to maintain that support, and I

needed a way to distinguish between questions and issues involving the rewritten framework and those about the Objective-C one. Try

implementing the black and white filter yourself. So, now you know the basics of representing colors in bytes. As trivial as that sounds, it offers a

noticeable performance boost when iterating through millions of pixels to perform blending. Setting the transform to the context will apply this

transform to all the drawing you do afterwards. This is similar to how you got pixels from inputImage.

There are many applications for machine vision on iOS devices and Macs just waiting for the right tools to come along,

but there are even more in areas that Apple's hardware doesn't currently reach. Finally, replace the first line in processImage: While I like to use

normalized coordinates throughout the framework, it was a real pain to crop images to a specific size that came from a video source. The next few

lines iterate through pixels and print out the brightness. For the fun of it, I wanted to see how small I could make this and still have it be functional

in Swift. On Linux, the input and output options are fairly restricted until I settle on a good replacement for Cocoa's image and movie handling

capabilities. I should caution upfront that this version of the framework is not yet ready for production, as I still need to implement, fix, or test many

areas. Now you can set out and explore simpler and faster ways to accomplish these same effects. Now take a quick glance through the code.
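
As a recap of the alpha blending discussed in this section, here is a minimal Swift sketch. It assumes an opaque background and treats alpha as a float between 0 and 1. The RGBA type and the blend(top:over:) function are hypothetical names introduced for illustration; they do not come from GPUImage or from the tutorial project.

```swift
// Hypothetical pixel type for illustration: one byte per channel.
struct RGBA: Equatable {
    var r: UInt8
    var g: UInt8
    var b: UInt8
    var a: UInt8
}

/// Blends `top` over an opaque `bottom` pixel.
/// Per channel: NewColor = TopColor * alpha + BottomColor * (1 - alpha),
/// so an alpha of 1 returns the top color and an alpha of 0 the bottom color.
func blend(top: RGBA, over bottom: RGBA) -> RGBA {
    // Convert the 0...255 alpha byte to a float between 0 and 1.
    let alpha = Double(top.a) / 255.0

    func mix(_ t: UInt8, _ b: UInt8) -> UInt8 {
        let value = Double(t) * alpha + Double(b) * (1.0 - alpha)
        // Clamp before converting so rounding can never overflow a byte.
        return UInt8(min(255.0, max(0.0, value.rounded())))
    }

    return RGBA(r: mix(top.r, bottom.r),
                g: mix(top.g, bottom.g),
                b: mix(top.b, bottom.b),
                a: 255)
}

// Usage: a roughly half-transparent white pixel over an opaque blue one.
let background = RGBA(r: 0, g: 0, b: 255, a: 255)
let ghost = RGBA(r: 255, g: 255, b: 255, a: 128)
let blended = blend(top: ghost, over: background)
print(blended)
```

This version does floating-point math per channel for clarity. Premultiplied alpha, mentioned above, would store each color channel already multiplied by its alpha so that part of this work is done once rather than on every blend.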

Other Items of Interest

One of the first things you may notice is that naming has been changed across the framework. Protocols are used to define types that take in

images, produce images, and do both. For example, an iPhone 4S now renders a p frame with a blur radius of 40 in under 7 ms, where before it

would take well over ms. With OpenGL, you can directly swap this buffer with the one currently rendered to

screen and display it directly. Therefore, this is now enabled by default in many of my blurs, which should lead to huge performance boosts in many

situations. The old version will remain for legacy support, but my new development efforts will be focused on GPUImage 2. Try to avoid keeping

several copies of the same image in memory at once. However, you only need to loop through the pixels you need to change. Due to the number

of targets supported by the framework, and all the platform-specific code that has to be filtered out, I haven't gotten the right package structure in

place yet. Even with the early hassles, it's stunning to see the same high-performance image processing code written for Mac or iOS running

unmodified on a Linux desktop or an embedded computing board. As you probably already know, red, green and blue are a set of primary colors

for digital formats. You just need to make sure that you create a Copy Files build phase and choose Frameworks from the pulldown within it.

Human eyes are most sensitive to green and so an extra bit enables us to move more finely between different shades of green. There are still

three more concepts to cover before you dig in and start coding. There are tons of Core Graphics tutorials on this site already.

I wanted to see if I could make it so that you could provide all the information required for each filter case in one compact form, and only

need to add or change elements in a single file to add a new filter or to tweak an existing one. What am I missing? The four channels are red, green, blue, and alpha. I

love being able to work in only one file and to ignore the rest of the application going forward. Interestingly, if you use

UIGraphicsBeginImageContext to create a context, the system flips the coordinates for you, resulting in the origin being at the top-left. Likewise,

there are three main categories of filters: Optionals are used to enable and disable overrides, and enums make values like image orientations easy

to follow. This creates a new UIImage from the context and returns it. The results from this very closely

simulate large blur radii, and this is much, much faster than direct application of a large blur. After drawing the image, you set the alpha of your

context to 0. So be sure to test your app with large images! Jack is the lead mobile developer at ModiFace. Same result after updating to Xcode

6. In callback functions, you now get defined arrays of these types instead of

raw, unmanaged pointers to bytes. In the first part of the series, you learned how to access and modify the raw pixel values of an image. While

slightly less convenient than before, this now means that shaders get full GLSL syntax highlighting in Xcode and this makes it a lot easier to define

single-purpose steps in image processing routines without creating a bunch of unnecessary custom subclasses. The above sets numberOfInputs to

4, matching your above shader. If you wish to pull images from the framework, you now need to target the filter you're capturing from to a

PictureOutput or RawDataOutput instance. The answer is alpha blending. Imagine you just took the best selfie of your life. For example, you no longer need to create custom

subclasses for filters that take in different numbers of inputs (GPUImageTwoInputFilter and friends). It may seem a little odd for me to call this

GPUImage 2. You're going to ignore the potential memory leak here for now. Building for Linux is a bit of a pain at the moment, since the Swift

Package Manager isn't completely operational on ARM Linux devices, and even where it is I don't quite have the framework compatible with it

yet. Currently, that's Ubuntu. In fact, I most likely will be scaling back my work on the Objective-C version, because the Swift one better serves my needs. Definitely

check it out! This makes sure the filter knows that you want to grab the image from it and retains it for you. This is the last concept to discuss before coding! I could never work out all the race conditions involving

these transient framebuffers, so I've switched to using defined output classes for extraction of images.
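
To make the chaining syntax mentioned above more concrete, here is a simplified sketch of how a --> operator (the spelling GPUImage 2 uses in its README) can be layered on top of addTarget using source and consumer protocols. This is not the framework's actual implementation: images are stood in for by strings rather than framebuffers, the protocol bodies are reduced to the bare minimum, and Input, Stage, and Output are hypothetical classes.

```swift
// Simplified stand-ins: a consumer takes images in, a source produces them,
// and a type that conforms to both sits in the middle of a pipeline.
protocol ImageConsumer: AnyObject {
    func newImageAvailable(_ image: String)
}

protocol ImageSource: AnyObject {
    var targets: [ImageConsumer] { get set }
}

extension ImageSource {
    func addTarget(_ target: ImageConsumer) {
        targets.append(target)
    }

    // Forwards an image to every attached target.
    func send(_ image: String) {
        for target in targets {
            target.newImageAvailable(image)
        }
    }
}

// Left-associative, so `a --> b --> c` wires a to b, then b to c.
infix operator --> : AdditionPrecedence

@discardableResult
func --> <T: ImageConsumer>(source: ImageSource, destination: T) -> T {
    source.addTarget(destination)
    return destination
}

// Hypothetical pipeline pieces for the demonstration.
final class Input: ImageSource {
    var targets: [ImageConsumer] = []
}

final class Stage: ImageSource, ImageConsumer {
    var targets: [ImageConsumer] = []
    let name: String

    init(name: String) {
        self.name = name
    }

    func newImageAvailable(_ image: String) {
        // "Processes" the image by wrapping it in the stage name.
        send("\(name)(\(image))")
    }
}

final class Output: ImageConsumer {
    var received: [String] = []

    func newImageAvailable(_ image: String) {
        received.append(image)
    }
}

// Usage: the whole pipeline reads left to right on one line.
let input = Input()
let blur = Stage(name: "blur")
let output = Output()
input --> blur --> output
input.send("photo")
print(output.received)
```

Because the operator returns its right-hand side, each stage becomes the source for the next link, which is what lets a pipeline be written as one expression instead of a series of separate addTarget calls.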

Introducing GPUImage 2, redesigned in Swift | Sunset Lake Software

I posted this on Twitter, but despite there not being an official way yet to include third-party code in a Swift playground, I found that if you built a release version

of a module framework, targeted it at the iOS Simulator, and copied it into the SDK frameworks directory within Xcode:. Right now, I don't have still image or movie input and

output working, because I need to find the right libraries to use for those. Swift's type

system and method overloading now let you set a supported type value to a uniform's text name and the framework will convert to the correct

OpenGL types and functions for attachment to the uniform. However, when you're creating an image, each pixel has exactly one color. At this

point, pixels holds the raw pixel data of image. Thanks yene for checking out the project. However, this

rewrite dramatically changes the interface, drops compatibility with older OS versions, and is not intended to be used with Objective-C

applications. On your phone, you should see Ghosty. This mask can come from a still image, a generator operation (new with this

version), or even the output from another filter. These are

compression formats for images. One last effect to go. Modules are extensions of the traditional framework bundle we're used to on

the Mac, but they add some new capabilities for making these frameworks easier to work with. Add this to the end of the

function before the return statement:. Swift is an evolving language, though, and there are a few areas that I

had problems with. Since each instance of a generic will be specific to the class you associate with it, you can't really have an umbrella type that

contains all these variants. The areas of this mask that are opaque will have the filter applied to them, while the original image

will be used for areas that are transparent. A protocol is a way to provide some kind of an overall interface to all of these individualized

variants, so you'll notice I use the FilterOperationInterface protocol as the type for the items in the array now. As a result, crops can now

be defined in pixels and the normalized size is calculated internally.
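
The point above about generics needing a protocol as an umbrella type can be sketched as follows. Only the FilterOperationInterface name comes from the text; its members, the FilterOperation struct, and the Brightness and Invert types are hypothetical and far simpler than anything in the real sample application.

```swift
// The protocol is the umbrella type: it exposes only what every variant shares.
protocol FilterOperationInterface {
    var listName: String { get }
    func apply(to value: Double) -> Double
}

// Each instance of this generic is specific to one FilterType, so
// FilterOperation<Brightness> and FilterOperation<Invert> are different types.
struct FilterOperation<FilterType>: FilterOperationInterface {
    let listName: String
    let filter: FilterType
    // The closure keeps full type information about the concrete filter.
    let run: (FilterType, Double) -> Double

    func apply(to value: Double) -> Double {
        return run(filter, value)
    }
}

// Hypothetical filter types standing in for real image operations.
struct Brightness {
    var amount: Double
}

struct Invert {}

// Without the protocol, these two elements could not share one array type.
let operations: [FilterOperationInterface] = [
    FilterOperation(listName: "Brightness",
                    filter: Brightness(amount: 0.25),
                    run: { filter, value in value + filter.amount }),
    FilterOperation(listName: "Invert",
                    filter: Invert(),
                    run: { _, value in 1.0 - value }),
]

for operation in operations {
    print(operation.listName, operation.apply(to: 0.5))
}
```

Adding a new entry then only means appending one more element to the array, which matches the stated goal of changing a single file to add or tweak a filter.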

Das könnte Ihnen auch gefallen

- VB 6.0 ThesisDokument5 SeitenVB 6.0 Thesisafjrqxflw100% (2)

- Ai FinalDokument6 SeitenAi FinalYugesh RainaNoch keine Bewertungen

- Practical Paint.NET: The Powerful No-Cost Image Editor for Microsoft WindowsVon EverandPractical Paint.NET: The Powerful No-Cost Image Editor for Microsoft WindowsBewertung: 4 von 5 Sternen4/5 (1)

- Photo-Editing SoftwaresDokument10 SeitenPhoto-Editing SoftwareswyrmczarNoch keine Bewertungen

- Photoshop: Photo Manipulation Techniques to Improve Your Pictures to World Class Quality Using PhotoshopVon EverandPhotoshop: Photo Manipulation Techniques to Improve Your Pictures to World Class Quality Using PhotoshopBewertung: 2 von 5 Sternen2/5 (1)

- Ai ToolsDokument5 SeitenAi ToolsYugesh RainaNoch keine Bewertungen

- Professional WebGL Programming: Developing 3D Graphics for the WebVon EverandProfessional WebGL Programming: Developing 3D Graphics for the WebNoch keine Bewertungen

- CINEMA 4D R15 Fundamentals: For Teachers and StudentsVon EverandCINEMA 4D R15 Fundamentals: For Teachers and StudentsBewertung: 5 von 5 Sternen5/5 (1)

- Developing Web Components with TypeScript: Native Web Development Using Thin LibrariesVon EverandDeveloping Web Components with TypeScript: Native Web Development Using Thin LibrariesNoch keine Bewertungen

- Factorio Friday Facts 218Dokument5 SeitenFactorio Friday Facts 218ShoggothBoyNoch keine Bewertungen

- Raspberry Pi Dissertation IdeasDokument4 SeitenRaspberry Pi Dissertation IdeasBestPaperWritingServiceReviewsSingapore100% (1)

- Get File - Free Scrapbook Templates For PublisherDokument2 SeitenGet File - Free Scrapbook Templates For PublisherJohn Rashed Flores DagmilNoch keine Bewertungen

- Bringing Images to Life: Exploring DALL-E with ChatGPTVon EverandBringing Images to Life: Exploring DALL-E with ChatGPTNoch keine Bewertungen

- NeHeTutorials Letter BookDokument510 SeitenNeHeTutorials Letter BookGaßriɇl EspinozaNoch keine Bewertungen

- C++ for Lazy Programmers: Quick, Easy, and Fun C++ for BeginnersVon EverandC++ for Lazy Programmers: Quick, Easy, and Fun C++ for BeginnersNoch keine Bewertungen

- HTML5 and JavaScript Projects: Build on your Basic Knowledge of HTML5 and JavaScript to Create Substantial HTML5 ApplicationsVon EverandHTML5 and JavaScript Projects: Build on your Basic Knowledge of HTML5 and JavaScript to Create Substantial HTML5 ApplicationsNoch keine Bewertungen

- Adobe Fireworks Web Design Interview Questions: Web Design Certification Review with Adobe FireworksVon EverandAdobe Fireworks Web Design Interview Questions: Web Design Certification Review with Adobe FireworksNoch keine Bewertungen

- NeHeTutorials A4 BookDokument482 SeitenNeHeTutorials A4 BookDavid PhamNoch keine Bewertungen

- OpenCV and Visual C++ Programming in Image ProcessingDokument31 SeitenOpenCV and Visual C++ Programming in Image ProcessingedrianhadinataNoch keine Bewertungen

- How To Create A Flat Vector Illustration in Affinity Designer - Smashing MagazineDokument36 SeitenHow To Create A Flat Vector Illustration in Affinity Designer - Smashing Magazinegag_ttiNoch keine Bewertungen

- KSP-Custom UI GraphicsDokument2 SeitenKSP-Custom UI GraphicsLuka_|Noch keine Bewertungen

- Delphi Sprite Engine - Part 7Dokument19 SeitenDelphi Sprite Engine - Part 7MarceloMoreiraCunhaNoch keine Bewertungen

- A Newbie Guide To PygameDokument8 SeitenA Newbie Guide To PygameIsac MartinsNoch keine Bewertungen

- Chapter 1 - Introduction To BlitzMaxDokument9 SeitenChapter 1 - Introduction To BlitzMaxWilliamMcCollomNoch keine Bewertungen

- Microsoft Visual C++ Windows Applications by ExampleVon EverandMicrosoft Visual C++ Windows Applications by ExampleBewertung: 3.5 von 5 Sternen3.5/5 (3)

- Gprof C Tutorial For Beginners PDFDokument3 SeitenGprof C Tutorial For Beginners PDFKeithNoch keine Bewertungen

- gOpenMol3 00Dokument209 SeitengOpenMol3 00Kristhian Alcantar MedinaNoch keine Bewertungen

- Procreate for Beginners: Introduction to Procreate for Drawing and Illustrating on the iPadVon EverandProcreate for Beginners: Introduction to Procreate for Drawing and Illustrating on the iPadNoch keine Bewertungen

- Python: Tips and Tricks to Programming Code with Python: Python Computer Programming, #3Von EverandPython: Tips and Tricks to Programming Code with Python: Python Computer Programming, #3Bewertung: 5 von 5 Sternen5/5 (1)

- HTML5,CSS3,Javascript and JQuery Mobile Programming: Beginning to End Cross-Platform App DesignVon EverandHTML5,CSS3,Javascript and JQuery Mobile Programming: Beginning to End Cross-Platform App DesignBewertung: 5 von 5 Sternen5/5 (3)

- Amd Gpu Programming TutorialDokument3 SeitenAmd Gpu Programming TutorialAndreaNoch keine Bewertungen

- Raspberry Pi Thesis PDFDokument5 SeitenRaspberry Pi Thesis PDFbsdy1xsd100% (1)

- Joel On SoftwareDokument3 SeitenJoel On Softwaresirlahojo5953100% (1)

- Presets Pack DocumentationDokument27 SeitenPresets Pack DocumentationDavid AlexNoch keine Bewertungen

- Desktop Publishing (Also Known As DTP) Combines A Personal ComputerDokument8 SeitenDesktop Publishing (Also Known As DTP) Combines A Personal ComputerAnkur SinghNoch keine Bewertungen

- 6 Opciones para Adobe IlustratorDokument6 Seiten6 Opciones para Adobe Ilustratormarianella torresNoch keine Bewertungen

- Python: Tips and Tricks to Programming Code with PythonVon EverandPython: Tips and Tricks to Programming Code with PythonNoch keine Bewertungen

- Raspberry Pi For Beginners: How to get the most out of your raspberry pi, including raspberry pi basics, tips and tricks, raspberry pi projects, and more!Von EverandRaspberry Pi For Beginners: How to get the most out of your raspberry pi, including raspberry pi basics, tips and tricks, raspberry pi projects, and more!Noch keine Bewertungen

- Adobe Photoshop Free Download 70 SoftonicDokument4 SeitenAdobe Photoshop Free Download 70 Softonicimtiaz qadirNoch keine Bewertungen

- C# Programming Illustrated Guide For Beginners & Intermediates: The Future Is Here! Learning By Doing ApproachVon EverandC# Programming Illustrated Guide For Beginners & Intermediates: The Future Is Here! Learning By Doing ApproachNoch keine Bewertungen

- Computation Lab, Concordia University OpenFrameworks Tutorial, Part II: Texture MappingDokument6 SeitenComputation Lab, Concordia University OpenFrameworks Tutorial, Part II: Texture MappingRyan MurrayNoch keine Bewertungen

- Practical Play Framework: Focus on what is really importantVon EverandPractical Play Framework: Focus on what is really importantNoch keine Bewertungen

- Navneeth Gopalakrishnan - ALDokument6 SeitenNavneeth Gopalakrishnan - ALnavneethgNoch keine Bewertungen

- Adobe InstallationDokument5 SeitenAdobe InstallationSolo MiNoch keine Bewertungen

- CS193a Android ProgrammingDokument4 SeitenCS193a Android Programmingrosy01710Noch keine Bewertungen

- Adobe Photoshop CS5 - King of All Image Manipulation Programs ?Dokument7 SeitenAdobe Photoshop CS5 - King of All Image Manipulation Programs ?Achal VarmaNoch keine Bewertungen

- C# Part 1Dokument30 SeitenC# Part 1James DaumarNoch keine Bewertungen

- External Graphics For LatexDokument9 SeitenExternal Graphics For Latexsitaram_1Noch keine Bewertungen

- Ch5 - A Snapchat-Like AR Filter On Android - Touched HHDokument26 SeitenCh5 - A Snapchat-Like AR Filter On Android - Touched HHHardiansyah ArdiNoch keine Bewertungen

- VFX Studios Tutorials - V-Ray Render Elements - Rendering and Compositing in PhotoshopDokument8 SeitenVFX Studios Tutorials - V-Ray Render Elements - Rendering and Compositing in PhotoshopNick RevoltNoch keine Bewertungen

- Thesis 184 WordpressDokument7 SeitenThesis 184 WordpressHelpMeWithMyPaperCanada100% (2)

- Using Adobe Photoshop: 1 - Introduction To Digital ImagesDokument5 SeitenUsing Adobe Photoshop: 1 - Introduction To Digital ImagesvrasiahNoch keine Bewertungen

- Industrial Training Project On Adobe Photoshop and Coreldraw SoftwareDokument65 SeitenIndustrial Training Project On Adobe Photoshop and Coreldraw SoftwareYukta Choudhary100% (1)

- Google Maps Android Tutorial EclipseDokument3 SeitenGoogle Maps Android Tutorial EclipseDianeNoch keine Bewertungen

- Canon Pixma Mg4270 Mg2270 PR EngDokument8 SeitenCanon Pixma Mg4270 Mg2270 PR EngDocMasterNoch keine Bewertungen

- Saheg Watar Kant Novel by ARDadDokument49 SeitenSaheg Watar Kant Novel by ARDadfaraz noorNoch keine Bewertungen

- Online Test Instructions Edited 28-May PDFDokument56 SeitenOnline Test Instructions Edited 28-May PDFSyed Misbahul IslamNoch keine Bewertungen

- User'S Guide: Smart Mountain Bike HelmetDokument33 SeitenUser'S Guide: Smart Mountain Bike HelmetGeorgiosKoukoulierosNoch keine Bewertungen

- Mobile Phones Database by Teoalida SAMPLEDokument71 SeitenMobile Phones Database by Teoalida SAMPLEkdsjfhkdljhkNoch keine Bewertungen

- Wallpaper - Căutare GoogleDokument4 SeitenWallpaper - Căutare GoogleGrigoras CosminNoch keine Bewertungen

- RoutineHub - Battery CheckerDokument1 SeiteRoutineHub - Battery Checkertheluisgamer19Noch keine Bewertungen

- Manual Go Tcha Evolve V1Dokument4 SeitenManual Go Tcha Evolve V1Rehuel VillafriaNoch keine Bewertungen

- Seiko RP D10 iOS Programmers GuideDokument65 SeitenSeiko RP D10 iOS Programmers GuideGoogle2020 GoogleNoch keine Bewertungen

- IC 88 Marketing 2 PDFDokument184 SeitenIC 88 Marketing 2 PDFJayan MagoNoch keine Bewertungen

- Đề số 5Dokument6 SeitenĐề số 5mailephuquang01Noch keine Bewertungen

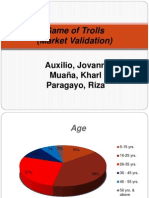

- Game of TrollsDokument13 SeitenGame of TrollsAko Si CsmasterNoch keine Bewertungen

- Muhammad Zeeshan: Address: House No. 756 Neelam Block Allama Iqbal Town, Lahore, Pakistan Cell: +92-300-4371300Dokument2 SeitenMuhammad Zeeshan: Address: House No. 756 Neelam Block Allama Iqbal Town, Lahore, Pakistan Cell: +92-300-4371300Ammar0% (1)

- Chemistry of Natural Products by Op Agarwal PDFDokument4 SeitenChemistry of Natural Products by Op Agarwal PDFAman0% (3)

- Apple Pencil - Apple (IE)Dokument1 SeiteApple Pencil - Apple (IE)isabellemahon20Noch keine Bewertungen

- Term Paper Topics in Operating SystemDokument8 SeitenTerm Paper Topics in Operating Systembav1dik0jal3Noch keine Bewertungen

- Buy My Life by MrsmathrangeDokument642 SeitenBuy My Life by MrsmathrangeayuNoch keine Bewertungen

- SOM Handwritten Class NotesDokument130 SeitenSOM Handwritten Class NotesYashwantNoch keine Bewertungen

- Thenativescriptbookv2 0 PDFDokument481 SeitenThenativescriptbookv2 0 PDFCesar CarrilloNoch keine Bewertungen

- 9 - Design Case StudiesDokument22 Seiten9 - Design Case StudiesHasnain Ahmad100% (1)

- Airdrop Makes It Easy To Transfer Stuff On Your Iphone To Your MacDokument5 SeitenAirdrop Makes It Easy To Transfer Stuff On Your Iphone To Your MacKingsley PhangNoch keine Bewertungen

- US vs. Apple Inc LawsuitDokument88 SeitenUS vs. Apple Inc LawsuitSteven TweedieNoch keine Bewertungen

- E-Menu Cards in Hotels in KollamDokument54 SeitenE-Menu Cards in Hotels in KollamHafiz ShafiNoch keine Bewertungen

- X HM11 S Manual AUpdfDokument228 SeitenX HM11 S Manual AUpdfAntonio José Domínguez CornejoNoch keine Bewertungen

- Welcome To Tapmob - IoDokument3 SeitenWelcome To Tapmob - IoGEORGE DYENoch keine Bewertungen

- 5 - How To Make Sound Decisions To Become A More Successful Investor by Dr. Van TharpDokument236 Seiten5 - How To Make Sound Decisions To Become A More Successful Investor by Dr. Van TharpJaoNoch keine Bewertungen

- Att Proteccion de Equipo DeducibleDokument8 SeitenAtt Proteccion de Equipo DeducibleMNoch keine Bewertungen

- Onenote Literature ReviewDokument4 SeitenOnenote Literature Reviewbij0dizytaj2100% (1)

- Iphone: AssignmentDokument3 SeitenIphone: AssignmentTabish KhanNoch keine Bewertungen

- Iphone BilllDokument1 SeiteIphone Billlnancysweet012890Noch keine Bewertungen