L4 Speeding Up Execution

General Aspects of Computer Organization

(Lecture-4)

R S Ananda Murthy

Associate Professor and Head

Department of Electrical & Electronics Engineering,

Sri Jayachamarajendra College of Engineering,

Mysore 570 006

R S Ananda Murthy General Aspects of Computer Organization

Specific Learning Outcomes

After completing this lecture, the student should be able to:

Explain the meaning of data path cycle.

List the RISC design principles followed to improve processor performance.

State the meaning of parallelism and why it is adopted in modern computer architectures.

Explain how pipelining can speed up program execution.

Explain the meaning of superscalar architecture.

Data Path Inside the CPU

[Figure: data path inside the CPU. Labels: Registers, ALU Input Register (A, B), ALU Input Bus, ALU, ALU Output Register (A+B).]

Feeding two operands to the ALU and storing the output of the ALU in an internal register is called a data path cycle.

A faster data path cycle results in faster program execution.

Multiple ALUs operating in parallel allow more data path operations to complete in the same time.
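The data path cycle described above can be sketched in a few lines of Python. This is only an illustrative model, not the lecture's own material: the register names (`R0`, `R1`, `R2`), the dictionary used as a register file, and the function `data_path_cycle` are all assumptions made for the example.

```python
import operator


def data_path_cycle(registers, src_a, src_b, dest, alu_op=operator.add):
    """Run one data path cycle on a register file held as a dict (hypothetical model)."""
    # Step 1: copy the two operands into the ALU input registers.
    alu_in_a = registers[src_a]
    alu_in_b = registers[src_b]
    # Step 2: the ALU combines the operands; the result sits in the ALU output register.
    alu_out = alu_op(alu_in_a, alu_in_b)
    # Step 3: store the ALU output back into an internal register.
    registers[dest] = alu_out
    return alu_out


regs = {"R0": 0, "R1": 5, "R2": 7}
data_path_cycle(regs, "R1", "R2", "R0")
print(regs["R0"])  # 12
```

Each call corresponds to one trip of the operands through the ALU and back, so the time for one call stands in for the length of a data path cycle.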

RISC Design Speeds Up Program Execution

Most manufacturers today implement the following features in their processors to improve performance:

All instructions are directly executed by hardware instead of being interpreted by a microprogram.

Maximize the rate at which instructions are issued by adopting parallelism.

Use simple fixed-length instructions to speed up decoding.

Avoid performing arithmetic and logical operations directly on data present in the memory, i.e., only LOAD and STORE instructions should be executed with reference to memory.

Provide plenty of registers inside the CPU.
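Two of the principles above, fixed-length instructions and memory access only through LOAD and STORE, can be illustrated with a toy interpreter. The 32-bit format used here (four 8-bit fields), the opcode numbers, and the function names are hypothetical and chosen only for this sketch; they do not correspond to any real instruction set.

```python
# Hypothetical 32-bit instruction format: opcode | a | b | c, 8 bits per field.
LOAD, STORE, ADD = 1, 2, 3


def encode(opcode, a, b=0, c=0):
    """Pack four 8-bit fields into one fixed-length 32-bit word."""
    return (opcode << 24) | (a << 16) | (b << 8) | c


def decode(word):
    """Fixed field positions make decoding a matter of shifts and masks."""
    return (word >> 24) & 0xFF, (word >> 16) & 0xFF, (word >> 8) & 0xFF, word & 0xFF


def run(program, memory, num_registers=8):
    """Execute the program; only LOAD and STORE touch memory."""
    regs = [0] * num_registers
    for word in program:
        opcode, a, b, c = decode(word)
        if opcode == LOAD:        # regs[a] <- memory[b]
            regs[a] = memory[b]
        elif opcode == STORE:     # memory[b] <- regs[a]
            memory[b] = regs[a]
        elif opcode == ADD:       # regs[a] <- regs[b] + regs[c], registers only
            regs[a] = regs[b] + regs[c]
        else:
            raise ValueError(f"unknown opcode {opcode}")
    return memory


memory = [20, 22, 0]
program = [
    encode(LOAD, 1, 0),     # R1 <- memory[0]
    encode(LOAD, 2, 1),     # R2 <- memory[1]
    encode(ADD, 3, 1, 2),   # R3 <- R1 + R2
    encode(STORE, 3, 2),    # memory[2] <- R3
]
run(program, memory)
print(memory[2])  # 42
```

Adding two values held in memory takes four simple instructions here, which is the trade the load/store rule makes: more instructions, but each one is easy to decode and execute directly in hardware.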

Parallelism for Faster Execution

Instruction execution can be made faster only up to a certain limit by increasing the processor clock frequency, because a higher clock frequency increases the power loss in the chip.

Consequently, modern computer architects adopt some kind of parallelism, that is, doing more operations simultaneously, to improve performance.

The kinds of parallelism employed in computer architecture are:

Instruction Level Parallelism (ILP): pipelining and superscalar architectures.

Processor Level Parallelism (PLP): SIMD or vector processors, multiprocessors, and multicomputers.
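The clock-frequency limit mentioned on this slide can be made concrete with the usual first-order model of dynamic (switching) power in CMOS logic. This is a textbook approximation rather than something stated in the lecture; the symbols $\alpha$ (activity factor), $C$ (switched capacitance), $V$ (supply voltage) and $f$ (clock frequency) are introduced here for illustration.

```latex
P_{\text{dynamic}} \approx \alpha \, C \, V^{2} f
```

With $\alpha$, $C$ and $V$ held fixed, power grows in proportion to $f$. In practice a higher $f$ often also requires a higher $V$, so power tends to rise faster than linearly, which is why parallelism is preferred over ever higher clock rates.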

Pipelining for High Performance

The number of stages in a pipeline varies depending upon the hardware design of the CPU.

Each stage in a pipeline is executed by a dedicated hardware unit inside the CPU.

Each stage in a pipeline takes the same amount of time to complete its task.

Hardware units of different stages in a pipeline can work concurrently.

Operation of the hardware units is synchronized by the clock signal.

To implement pipelining, instructions must be of fixed length and have the same instruction cycle time.

Pipelining requires sophisticated techniques to be implemented in the compiler.

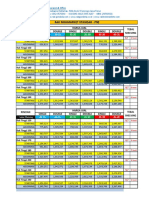

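A 4-Stage Pipeline

Instruction execution over time (columns are clock cycles; T is the period of the clock signal and n is the number of stages in the pipeline):

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| I1 | F1 | D1 | E1 | W1 | | | |
| I2 | | F2 | D2 | E2 | W2 | | |
| I3 | | | F3 | D3 | E3 | W3 | |
| I4 | | | | F4 | D4 | E4 | W4 |

F: Fetch instruction

D: Decode and get operands

E: Execute the instruction

W: Write result at destination

Hardware stages in the pipeline: F (fetch instruction) -> B1 -> D (decode and get operands) -> B2 -> E (execute operation) -> B3 -> W (write results)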

B1, B2, and B3 are storage buffers.

Information is passed from one stage to the next through storage buffers.

Time taken to execute each instruction is $nT$.

Processor bandwidth is $1/(T \times 10^{6})$ MIPS (Million Instructions Per Second), with $T$ in seconds.
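The timing relations above can be checked with a short Python sketch of an ideal pipeline with no stalls. The function names and the 2 ns clock period in the usage lines are assumptions made for illustration; the stage letters F, D, E, W follow the slide.

```python
def pipeline_schedule(num_instructions, stages=("F", "D", "E", "W")):
    """Return {instruction: {clock_cycle: stage}} for an ideal, stall-free pipeline."""
    schedule = {}
    for i in range(1, num_instructions + 1):
        # Instruction i enters the first stage in cycle i and advances one stage per cycle.
        schedule[f"I{i}"] = {i + s: f"{name}{i}" for s, name in enumerate(stages)}
    return schedule


def total_cycles(num_instructions, num_stages):
    """The first instruction needs n cycles; each later one finishes one cycle after it."""
    return num_stages + num_instructions - 1


def bandwidth_mips(clock_period_s):
    """One instruction completes per clock period once the pipeline is full."""
    return 1.0 / (clock_period_s * 1e6)


# Print the occupancy chart for four instructions over seven clock cycles.
for name, row in pipeline_schedule(4).items():
    print(name, " ".join(row.get(cycle, "--") for cycle in range(1, 8)))

print(total_cycles(4, 4))     # 7
print(bandwidth_mips(2e-9))   # about 500 MIPS for an assumed T = 2 ns
```

Each instruction still takes $nT$ from fetch to write, but because a new instruction starts every $T$, four instructions finish in 7 cycles instead of 16.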

Superscalar Architecture

[Figure: dual five-stage pipeline. S1: a shared instruction fetch unit, feeding two parallel pipelines, each with S2: instruction decode unit, S3: operand fetch unit, S4: instruction execution unit, S5: write back unit.]

Superscalar architecture has multiple pipelines as shown above.

In the above example, a single fetch unit fetches a pair of instructions together and puts each one into its own pipeline, complete with its own ALU for parallel operation.

The compiler must ensure that the two instructions fetched do not conflict over resource usage.
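The pairing constraint above can be sketched as a register-conflict check between two instructions. This is a simplified, conservative model and not the lecture's method: the `Instr` record, the register names, and the function `can_issue_together` are hypothetical, and only register usage is checked (other shared resources, such as memory ports, are ignored).

```python
from collections import namedtuple

# Hypothetical instruction record: one destination register and a tuple of source registers.
Instr = namedtuple("Instr", ["op", "dest", "srcs"])


def can_issue_together(first, second):
    """Return True if the pair has no register conflict (first precedes second)."""
    # Read-after-write: second reads the register that first writes.
    raw = first.dest is not None and first.dest in second.srcs
    # Write-after-write: both write the same register.
    waw = first.dest is not None and first.dest == second.dest
    # Write-after-read: second overwrites a register that first still reads.
    war = second.dest is not None and second.dest in first.srcs
    return not (raw or waw or war)


add = Instr("ADD", "R1", ("R2", "R3"))
sub = Instr("SUB", "R4", ("R5", "R6"))
mul = Instr("MUL", "R7", ("R1", "R2"))

print(can_issue_together(add, sub))  # True: no shared registers
print(can_issue_together(add, mul))  # False: MUL needs the result of ADD
```

A compiler for such a machine would reorder instructions so that adjacent pairs pass a check of this kind as often as possible.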

Superscalar Architecture with Five Functional Units

[Figure: single pipeline with five functional units. S1: instruction fetch unit, S2: instruction decode unit, S3: operand fetch unit, S4: functional units (ALU, ALU, LOAD, STORE, Floating Point), S5: write back unit.]

Nowadays the word superscalar is used to describe processors that issue multiple instructions, often four to six, in a single clock cycle.

Superscalar processors generally have one pipeline with multiple functional units as shown above.
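A rough Python sketch can show how several functional units let more than one instruction issue per clock cycle. The unit counts follow the figure (two ALUs, one LOAD, one STORE, one floating-point unit); everything else is an assumption for illustration: the function `issue_in_order` is hypothetical, every unit is assumed to finish in one cycle, and data dependencies between instructions are ignored.

```python
from collections import Counter

# Unit counts taken from the figure; names are labels chosen for this sketch.
UNITS = {"ALU": 2, "LOAD": 1, "STORE": 1, "FP": 1}


def issue_in_order(unit_sequence, units=UNITS):
    """Group instructions (given by the unit each needs) into per-cycle issue groups."""
    cycles, current, busy = [], [], Counter()
    for unit in unit_sequence:
        if unit not in units:
            raise ValueError(f"unknown functional unit {unit!r}")
        if busy[unit] >= units[unit]:
            # The required unit is already taken this cycle: close the group
            # and start issuing in the next cycle (strict program order).
            cycles.append(current)
            current, busy = [], Counter()
        current.append(unit)
        busy[unit] += 1
    if current:
        cycles.append(current)
    return cycles


stream = ["ALU", "ALU", "LOAD", "ALU", "FP", "STORE", "LOAD", "LOAD"]
for cycle, group in enumerate(issue_in_order(stream), start=1):
    print(cycle, group)
```

Under these assumptions the eight instructions issue in three cycles; a real processor would also have to respect data dependencies and unit latencies, so actual issue rates are lower.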

License

This work is licensed under a Creative Commons Attribution 4.0 International License.
