AI Safety Levels

Uploaded by Turchin Alexei

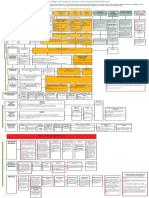

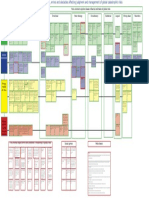

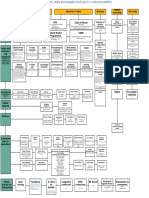

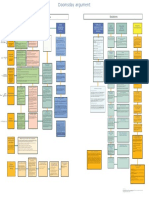

Levels of defense in AI safety

Levels Global solutions Local solutions

Level 0. Preliminary measures

Preparation:

- Funding of the AI safety research
- Attracting the minds
- Creation of AI safety theory
- AI safety guidelines and mindset

Prevention of the Strong AI creation:

- Restriction by law
- Destruction of AI labs
- Promotion of AI safety idea everywhere
- Slowing AI development and theory

- Limiting AI scale and impact
- Manipulating AI arms race
- Creating many AIs for balancing?
- Preventing self-improvement of an AI
- Messy AIs and uploads

Level 1. Safe AI design

Solving AI alignment problem:

- EthicsNet dataset competition
- Correct decision theory
- Provably safe AI design
- Human emulation
- Human self-improving
- Installing correct goals
- CEV
- AI constitution
- Vague values

Solving other AI problem:

- Taking over the world
- Creating AI police
- First AI creators will implement safe AI theory

Level 2. Catching treacherous turn

Control systems:

- Safely interruptible agents; Corrigibility
- Red button and other internal constraints
- Circuit-breakers

Testing:

- Testing in simulation
- Testing in small tasks
- Narrow AI to control AI

Level 3. AI boxing

Information limiting:

- Disconnection from internet
- Physically remote place

Level 4. Stopping AI in the wild

Instruments to affect mature AI:

- Messaging to future Young AI
- Utility monsters and landmines

Hopes to survive AI:

- Humans in simulation
- Humans as slaves
- AI will abandon Earth

Created by Alexey Turchin, 2017, CC4.0

See also my other maps on the topic:

- AI failures modes
- AI safety solution

and articles:

- Message to any future AI
- Catching treacherous turn
- Global and local solutions of AI safety
- Human upload based AI Nanny

MIRI strategy (as I understand it) marked in yellow

Time
