Beruflich Dokumente

Kultur Dokumente

Defect Residual Metrics

Hochgeladen von

sujadilip0 Bewertungen0% fanden dieses Dokument nützlich (0 Abstimmungen)

63 Ansichten2 SeitenDefect Residual Metrics

Copyright

© © All Rights Reserved

Verfügbare Formate

DOCX, PDF, TXT oder online auf Scribd lesen

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenDefect Residual Metrics

Copyright:

© All Rights Reserved

Verfügbare Formate

Als DOCX, PDF, TXT herunterladen oder online auf Scribd lesen

0 Bewertungen0% fanden dieses Dokument nützlich (0 Abstimmungen)

63 Ansichten2 SeitenDefect Residual Metrics

Hochgeladen von

sujadilipDefect Residual Metrics

Copyright:

© All Rights Reserved

Verfügbare Formate

Als DOCX, PDF, TXT herunterladen oder online auf Scribd lesen

Sie sind auf Seite 1von 2

Residual defects is one of the most important factors that

allow one to decide if a piece of software is ready to be released.

In theory, one can find all the defects and count

them, however it is impossible to find all the defects within

a reasonable amount of time. Estimating defect density can

become difficult for high reliability software, since remaining

defects can be extremely hard to test for. One possible

way is to apply the exponential SRGM and thus estimate the

total number of defects present at the beginning of testing.

Here we show the problems with this approach and present

a new approach based on software test coverage. Software

test coverage directly measures the thoroughness of testing

avoiding the problem of variations of test effectiveness. We

apply this model to actual test data to project the residual

number of defects. The results show that this method results

in estimates that are more stable than the existing methods.

This method is easier to understand and the convergence to

the estimate can be visually observed.

1. Introduction

The estimated number of remaining defects in a piece

of code is often used as an acceptance criterion. Some recent

publications suggest that leading edge software development

organizations typically achieve a defect density of

about 2.0 defects/KLOC [1]. Some organizations now target

even lower defect densities. The NASA Space Shuttle

Avionics software with an estimated defect density of 0.1

defects /KLOC is regarded to be an example of what can

be currently achieved by the best methods [5]. A low defect

density can be quite expensive to achieve, the Space

Shuttle code has been reported to have cost about $1,000 per

line of code. The cost of fixing a defect later can be several

orders of magnitude higher than during development,

yet a program must be shipped by some deadline dictated by

market considerations. This makes the estimation of defect

density a very important challenge. One conceivableway of

knowing the exact defect density of a program is to actually

find all remaining defects. This is obviously infeasible for

any commercial product. Even if resources are available, it

will take a prohibitive amount of time to find all bugs in a

large program [2]. Sampling based methods have been suggested

for estimating the number of remaining defects. Mc-

Connell [14] has given a method that involves using two independent

testing activities, perhaps by two different teams

of testers. This and other sampling technique assume that

faults found have the same testability as faults not found.

However, in actual practice, the faults not found represent

faults that are harder to find [13]. Thus such techniques are

likely to yield an estimate of faults that are relatively easier

to find and thus less than the true number. Fault seeding

methods [14] suffer from similar problems.

It is possible to estimate the defect density based on

past experience using empirical models like the Rome Lab

model [7] or themodel proposed by Malaiya andDenton [9].

The estimates obtained by such models can be very useful

for initial planning, however these models are not expected

to be accurate enough to compare with methods involving

actual test data.

Another possible way to estimate the number of faults is

by using the exponential SRGM. It assumes that the failure

intensity is given by

_(t) = _E

0 _E

1 e_E

(1)

1t

It can be shown that the parameters _E

and

0 _E

depend

1

on system and test process characteristics. Specifically,

0

_E

represents the total number of defects that would be eventually

found. We can estimate the number of remaining defects

by subtracting the number of defects found from the

value of _E

obtained by fitting the model to the data.

0

We applied this technique to several data sets, figure 1

show results typical of this technique. Several things are

worth noting about this plot. First, testing continued to reveal

defects even after this technique predicted that there

where no remaining defects. Second, the value of _E

never

0

stabilizes. Towards the end of testing _E

takes on a value

0

very close to the number of defects already found, and thus

provides no useful information.

An SRGM relates the number of defects found to testing

time spent. In actual practice, the defect finding rate will depend

on test effectiveness and can vary depending on the test

input selection strategy. A software test coverage measure

(like block, branch, and P-use coverage etc.) directly measures

the extent to which the software under test has been

exercised. Thus we expect that a suitably chosen test coverage

measure will correlate better with the number of defects

encountered. The relationship between test coverage

and the number of defects found has been investigated by

Piwowarski, Ohba and Caruso [16], Hutchins, Goradia and

Ostrand [6],Malaiya et al [11], Lyu, Horgan and London [8]

and Chen, Lyu and Wong [3].

In the next two sections, a model for defect density in

terms of test coverage is introduced and its applicability

is demonstrated using test data. Section 4 presents a twoparameter

approximation of the model. Finally we present

some observations on this new approach.

Das könnte Ihnen auch gefallen

- Mitsubishi forklift manual pdf downloadDokument3 SeitenMitsubishi forklift manual pdf downloadDwi Putra33% (12)

- Angus SolutionDokument8 SeitenAngus SolutionBen Karthiben NathanNoch keine Bewertungen

- IPTC 12029 Selection Criteria For Artificial Lift Technique in Bokor FieldDokument13 SeitenIPTC 12029 Selection Criteria For Artificial Lift Technique in Bokor FieldJean Carlos100% (1)

- Furuno GMDSS Installation Manual PDFDokument64 SeitenFuruno GMDSS Installation Manual PDFEric PskdNoch keine Bewertungen

- DDNS Management System User's Manual V1.0 - 20120301Dokument7 SeitenDDNS Management System User's Manual V1.0 - 20120301judapiesNoch keine Bewertungen

- Software Testing: A Guide to Testing Mobile Apps, Websites, and GamesVon EverandSoftware Testing: A Guide to Testing Mobile Apps, Websites, and GamesBewertung: 4.5 von 5 Sternen4.5/5 (3)

- Discrete Time NHPP Models For Software Reliability Growth PhenomenonDokument8 SeitenDiscrete Time NHPP Models For Software Reliability Growth PhenomenonAmit PatilNoch keine Bewertungen

- Semi-Proving Method for Program VerificationDokument17 SeitenSemi-Proving Method for Program VerificationsvksolaiNoch keine Bewertungen

- StreckerMemonICST2008Dokument10 SeitenStreckerMemonICST2008lthanhlongNoch keine Bewertungen

- Gaining Confidence in Random Test Selection for Modified SoftwareDokument10 SeitenGaining Confidence in Random Test Selection for Modified SoftwareBogdan NicaNoch keine Bewertungen

- Evaluating and Improving Fault LocalizationDokument12 SeitenEvaluating and Improving Fault LocalizationJavid Safiullah 100Noch keine Bewertungen

- Are Testing True Reliability?Dokument7 SeitenAre Testing True Reliability?rai2048Noch keine Bewertungen

- Mutation Operators For Spreadsheets: Robin Abraham and Martin Erwig, Member, IEEEDokument17 SeitenMutation Operators For Spreadsheets: Robin Abraham and Martin Erwig, Member, IEEEkiterotNoch keine Bewertungen

- Mythical Unit Test Coverage: KeywordsDokument9 SeitenMythical Unit Test Coverage: Keywordsor_rsNoch keine Bewertungen

- Classifying Generated White-Box Tests: An Exploratory Study: D Avid Honfi Zolt An MicskeiDokument42 SeitenClassifying Generated White-Box Tests: An Exploratory Study: D Avid Honfi Zolt An MicskeiIntan PurnamasariNoch keine Bewertungen

- Estimating Handling Time of Software DefectsDokument14 SeitenEstimating Handling Time of Software DefectsCS & ITNoch keine Bewertungen

- Optimizing Testing Efficiency With Error-Prone Path Identification and Genetic AlgorithmsDokument10 SeitenOptimizing Testing Efficiency With Error-Prone Path Identification and Genetic AlgorithmsIrtiqa SaleemNoch keine Bewertungen

- MOdule 1Dokument82 SeitenMOdule 1Lakshmisha.R.A. Lakshmisha.R.A.Noch keine Bewertungen

- Software VulnarabilityDokument10 SeitenSoftware VulnarabilityShubham ShuklaNoch keine Bewertungen

- Successive Sampling and Software ReliabiDokument36 SeitenSuccessive Sampling and Software ReliabiDr.Arpita LakhreNoch keine Bewertungen

- Structbbox 2Dokument21 SeitenStructbbox 2api-3806986Noch keine Bewertungen

- Impact of Pair Programming On Thoroughness and Fault Detection Effectiveness of Unit Test SuitesDokument20 SeitenImpact of Pair Programming On Thoroughness and Fault Detection Effectiveness of Unit Test SuitesSquishyPoopNoch keine Bewertungen

- Software Testing: Gregory M. Kapfhammer Department of Computer Science Allegheny CollegeDokument44 SeitenSoftware Testing: Gregory M. Kapfhammer Department of Computer Science Allegheny Collegeyashodabai upaseNoch keine Bewertungen

- Assessing Software Reliability Using Modified Genetic Algorithm: Inflection S-Shaped ModelDokument6 SeitenAssessing Software Reliability Using Modified Genetic Algorithm: Inflection S-Shaped ModelAnonymous lPvvgiQjRNoch keine Bewertungen

- A Bayesian Model For Controlling Software Inspections SW - InspectionDokument10 SeitenA Bayesian Model For Controlling Software Inspections SW - Inspectionjgonzalezsanz8914Noch keine Bewertungen

- White Paper on Defect Clustering and Pesticide ParadoxDokument10 SeitenWhite Paper on Defect Clustering and Pesticide ParadoxShruti0805Noch keine Bewertungen

- Optimal Release Time For Software Systems Considering Cost, Testing-Effort, and Test EfficiencyDokument9 SeitenOptimal Release Time For Software Systems Considering Cost, Testing-Effort, and Test EfficiencyClarisa HerreraNoch keine Bewertungen

- Software Testing Defect Prediction Model - A Practical ApproachDokument5 SeitenSoftware Testing Defect Prediction Model - A Practical ApproachesatjournalsNoch keine Bewertungen

- A Novel Deep Diverse Prototype Forest Algorithm (DDPF) Based Test Case Selection & PrioritizationDokument15 SeitenA Novel Deep Diverse Prototype Forest Algorithm (DDPF) Based Test Case Selection & PrioritizationAngel PushpaNoch keine Bewertungen

- Software Testing Process FrameworkDokument54 SeitenSoftware Testing Process FrameworkDbit DocsNoch keine Bewertungen

- 1401 5830 PDFDokument14 Seiten1401 5830 PDFSachin WaingankarNoch keine Bewertungen

- Testing and DebuggingDokument90 SeitenTesting and DebuggingNalini RayNoch keine Bewertungen

- Automatic Test Software: Yashwant K. MalaiyaDokument15 SeitenAutomatic Test Software: Yashwant K. MalaiyaVan CuongNoch keine Bewertungen

- Theis ProposalDokument6 SeitenTheis ProposaliramNoch keine Bewertungen

- Estimation of Software Defects Fix Effort Using Neural NetworksDokument2 SeitenEstimation of Software Defects Fix Effort Using Neural NetworksSpeedSrlNoch keine Bewertungen

- Winsem2018-19 Ite2004 Eth Sjtg05 Vl2018195004497 Reference Material II Myths - PrinciplesDokument36 SeitenWinsem2018-19 Ite2004 Eth Sjtg05 Vl2018195004497 Reference Material II Myths - PrinciplesShaurya KumarNoch keine Bewertungen

- Principles of Testing Terminology and DefinitionsDokument126 SeitenPrinciples of Testing Terminology and DefinitionsAliNoch keine Bewertungen

- How Effective Mutation Testing Tools Are? An Empirical Analysis of Java Mutation Testing Tools With Manual Analysis and Real FaultsDokument31 SeitenHow Effective Mutation Testing Tools Are? An Empirical Analysis of Java Mutation Testing Tools With Manual Analysis and Real FaultssaadiaNoch keine Bewertungen

- jucs-29-8-892_article-94462Dokument19 Seitenjucs-29-8-892_article-94462Mohamed EL GHAZOUANINoch keine Bewertungen

- Ijcet: International Journal of Computer Engineering & Technology (Ijcet)Dokument10 SeitenIjcet: International Journal of Computer Engineering & Technology (Ijcet)IAEME PublicationNoch keine Bewertungen

- Software Testing Effort Estimation With Cobb-Douglas Function A Practical ApplicationDokument5 SeitenSoftware Testing Effort Estimation With Cobb-Douglas Function A Practical ApplicationInternational Journal of Research in Engineering and TechnologyNoch keine Bewertungen

- Achieving and Assuring High Availability: 1 OverviewDokument6 SeitenAchieving and Assuring High Availability: 1 OverviewlakshmiepathyNoch keine Bewertungen

- Functional Testing Interview QuestionsDokument18 SeitenFunctional Testing Interview QuestionsPrashant GuptaNoch keine Bewertungen

- Kuhn 2011Dokument1 SeiteKuhn 2011mheba11Noch keine Bewertungen

- ObjDokument44 SeitenObjapi-3733726Noch keine Bewertungen

- Software Failure and Classification of Software FaultsDokument12 SeitenSoftware Failure and Classification of Software FaultsGesse SantosNoch keine Bewertungen

- Software TestingDokument18 SeitenSoftware TestingtechzonesNoch keine Bewertungen

- 08 00 SW Testing OverviewDokument79 Seiten08 00 SW Testing OverviewDileepNoch keine Bewertungen

- Localization of Fault Based On Program SpectrumDokument9 SeitenLocalization of Fault Based On Program SpectrumseventhsensegroupNoch keine Bewertungen

- Software testin-WPS OfficeDokument2 SeitenSoftware testin-WPS OfficeSubah SrinivasanNoch keine Bewertungen

- Art of Software Defect Association & Correction Using Association Rule MiningDokument11 SeitenArt of Software Defect Association & Correction Using Association Rule MiningIAEME PublicationNoch keine Bewertungen

- Time: 3hours Max - Marks:70 Part-A Is Compulsory Answer One Question From Each Unit of Part-BDokument16 SeitenTime: 3hours Max - Marks:70 Part-A Is Compulsory Answer One Question From Each Unit of Part-BRamya PatibandlaNoch keine Bewertungen

- Considering Fault Removal Efficiency in Software Reliability AssessmentDokument7 SeitenConsidering Fault Removal Efficiency in Software Reliability AssessmentDebapriya MitraNoch keine Bewertungen

- 22MCA344 Software Testing Module2Dokument311 Seiten22MCA344 Software Testing Module2Ashok B PNoch keine Bewertungen

- Top Software Testing Interview Questions And AnswersDokument5 SeitenTop Software Testing Interview Questions And AnswersRufiah BashaNoch keine Bewertungen

- PrinciplesDokument3 SeitenPrinciplesrsnaik28Noch keine Bewertungen

- PrinciplesDokument3 SeitenPrinciplesGaurangNoch keine Bewertungen

- Publication 2 (Final)Dokument7 SeitenPublication 2 (Final)Ranjana DalwaniNoch keine Bewertungen

- 105 PDFDokument6 Seiten105 PDFInternational Journal of Scientific Research in Science, Engineering and Technology ( IJSRSET )Noch keine Bewertungen

- Mutation TesingmutationDokument249 SeitenMutation TesingmutationKhushboo Khanna100% (1)

- Unit - III Cyclomatic Complexity Measure, Coverage Analysis, Mutation TestingDokument3 SeitenUnit - III Cyclomatic Complexity Measure, Coverage Analysis, Mutation Testingbright.keswaniNoch keine Bewertungen

- Structural Coverage MetricsDokument19 SeitenStructural Coverage MetricsRamu BanothNoch keine Bewertungen

- Defect Prediction in Software Development & MaintainenceVon EverandDefect Prediction in Software Development & MaintainenceNoch keine Bewertungen

- IdeaDokument1 SeiteIdeasujadilipNoch keine Bewertungen

- Agile Model Benefits Customer Satisfaction Through Frequent DeliveryDokument1 SeiteAgile Model Benefits Customer Satisfaction Through Frequent DeliverysujadilipNoch keine Bewertungen

- Kanban Is Based On 3 Basic PrinciplesDokument2 SeitenKanban Is Based On 3 Basic PrinciplessujadilipNoch keine Bewertungen

- How To Implement ChangeDokument1 SeiteHow To Implement ChangesujadilipNoch keine Bewertungen

- Leadership Is Not A PositionDokument9 SeitenLeadership Is Not A PositionsujadilipNoch keine Bewertungen

- Service ReportingDokument1 SeiteService ReportingsujadilipNoch keine Bewertungen

- Machine LearningDokument1 SeiteMachine LearningsujadilipNoch keine Bewertungen

- Pattern AnalysisDokument1 SeitePattern AnalysissujadilipNoch keine Bewertungen

- Get Rita RMP in AmazonDokument1 SeiteGet Rita RMP in AmazonsujadilipNoch keine Bewertungen

- Key To Validate The SolutionDokument1 SeiteKey To Validate The SolutionsujadilipNoch keine Bewertungen

- Worksheet in Agile For PBDokument6 SeitenWorksheet in Agile For PBsujadilipNoch keine Bewertungen

- Worksheet in Agile For PBDokument6 SeitenWorksheet in Agile For PBsujadilipNoch keine Bewertungen

- Worksheet in Agile For PBDokument6 SeitenWorksheet in Agile For PBsujadilipNoch keine Bewertungen

- Residual Defect Desity - New Approach PDFDokument8 SeitenResidual Defect Desity - New Approach PDFsujadilipNoch keine Bewertungen

- Difference Between The Acquirer and Issuer: Acquiring BankDokument1 SeiteDifference Between The Acquirer and Issuer: Acquiring BanksujadilipNoch keine Bewertungen

- Project Brief Template Date: 1 March 2006Dokument4 SeitenProject Brief Template Date: 1 March 2006sujadilipNoch keine Bewertungen

- Difference Between The Acquirer and Issuer: Acquiring BankDokument1 SeiteDifference Between The Acquirer and Issuer: Acquiring BanksujadilipNoch keine Bewertungen

- Digital Transformation ChallengesDokument2 SeitenDigital Transformation ChallengessujadilipNoch keine Bewertungen

- Key To Validate The SolutionDokument1 SeiteKey To Validate The SolutionsujadilipNoch keine Bewertungen

- Sample Games: 15. Ibble Dibble (A Burnt Cork)Dokument2 SeitenSample Games: 15. Ibble Dibble (A Burnt Cork)sujadilipNoch keine Bewertungen

- Sample Games: 15. Ibble Dibble (A Burnt Cork)Dokument2 SeitenSample Games: 15. Ibble Dibble (A Burnt Cork)sujadilipNoch keine Bewertungen

- Kanban Is Based On 3 Basic PrinciplesDokument2 SeitenKanban Is Based On 3 Basic PrinciplessujadilipNoch keine Bewertungen

- Classic party games for kids and adultsDokument2 SeitenClassic party games for kids and adultssujadilipNoch keine Bewertungen

- Classic party games for kids and adultsDokument2 SeitenClassic party games for kids and adultssujadilipNoch keine Bewertungen

- Key To Validate The SolutionDokument1 SeiteKey To Validate The SolutionsujadilipNoch keine Bewertungen

- Classic party games for kids and adultsDokument2 SeitenClassic party games for kids and adultssujadilipNoch keine Bewertungen

- Key To Validate The SolutionDokument1 SeiteKey To Validate The SolutionsujadilipNoch keine Bewertungen

- Solutioning Certification ClueDokument1 SeiteSolutioning Certification CluesujadilipNoch keine Bewertungen

- IE Compatibility Changes by VersionDokument2 SeitenIE Compatibility Changes by VersionsujadilipNoch keine Bewertungen

- TNK500P Taneko Industrial Generator (TNK JKT)Dokument2 SeitenTNK500P Taneko Industrial Generator (TNK JKT)Rizki Heru HermawanNoch keine Bewertungen

- QlikView Business Intelligence Tool OverviewDokument11 SeitenQlikView Business Intelligence Tool OverviewMithun LayekNoch keine Bewertungen

- Contact-Molded Reinforced Thermosetting Plastic (RTP) Laminates For Corrosion-Resistant EquipmentDokument8 SeitenContact-Molded Reinforced Thermosetting Plastic (RTP) Laminates For Corrosion-Resistant EquipmentQUALITY MAYURNoch keine Bewertungen

- Assist. Prof. DR - Thaar S. Al-Gasham, Wasit University, Eng. College 136Dokument49 SeitenAssist. Prof. DR - Thaar S. Al-Gasham, Wasit University, Eng. College 136Hundee HundumaaNoch keine Bewertungen

- Dod P 16232FDokument24 SeitenDod P 16232FArturo PalaciosNoch keine Bewertungen

- Fsls 11.10 Adminguide EngDokument67 SeitenFsls 11.10 Adminguide Engsurender78Noch keine Bewertungen

- Hdpe Alathon H5520 EquistarDokument2 SeitenHdpe Alathon H5520 EquistarEric Mahonri PereidaNoch keine Bewertungen

- Documentation For: Bank - MasterDokument6 SeitenDocumentation For: Bank - MastervijucoolNoch keine Bewertungen

- 4 LoopsDokument30 Seiten4 LoopsThirukkuralkaniNoch keine Bewertungen

- Arduino Uno Schematic Annotated1Dokument1 SeiteArduino Uno Schematic Annotated1matthewwu2003100% (1)

- CST Design Studio - WorkflowDokument102 SeitenCST Design Studio - WorkflowHeber Bustos100% (7)

- Performance-Creative Design Concept For Concrete InfrastructureDokument11 SeitenPerformance-Creative Design Concept For Concrete InfrastructureTuan PnNoch keine Bewertungen

- Preparatory Year Program Computer Science (PYP 002)Dokument34 SeitenPreparatory Year Program Computer Science (PYP 002)Hassan AlfarisNoch keine Bewertungen

- Ibm Lenovo Whistler Rev s1.3 SCHDokument52 SeitenIbm Lenovo Whistler Rev s1.3 SCH1cvbnmNoch keine Bewertungen

- Catalogo Recordplus General ElectricDokument12 SeitenCatalogo Recordplus General ElectricDruen Delgado MirandaNoch keine Bewertungen

- Soil CompactionDokument13 SeitenSoil Compactionbishry ahamedNoch keine Bewertungen

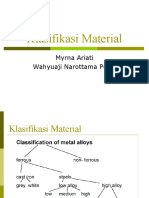

- Klasifikasi Material: Myrna Ariati Wahyuaji Narottama PutraDokument49 SeitenKlasifikasi Material: Myrna Ariati Wahyuaji Narottama Putrachink07Noch keine Bewertungen

- Control your ship with Kobelt electronic controlsDokument36 SeitenControl your ship with Kobelt electronic controlsBERANGER DAVESNE DJOMALIA SIEWENoch keine Bewertungen

- Sustainable Transport Development in Nepal: Challenges and StrategiesDokument18 SeitenSustainable Transport Development in Nepal: Challenges and StrategiesRamesh PokharelNoch keine Bewertungen

- Davao October 2014 Criminologist Board Exam Room AssignmentsDokument113 SeitenDavao October 2014 Criminologist Board Exam Room AssignmentsPRC Board0% (1)

- EN 12663-1 - 2010 - IndiceDokument6 SeitenEN 12663-1 - 2010 - IndiceOhriol Pons Ribas67% (3)

- M103C 10/11 Meter Maximum Beam AntennaDokument9 SeitenM103C 10/11 Meter Maximum Beam AntennaRádio Técnica AuroraNoch keine Bewertungen

- PBM and PBZ Crown Techniques ComparedDokument6 SeitenPBM and PBZ Crown Techniques ComparedDonanguyenNoch keine Bewertungen

- History of JS: From Netscape to Modern WebDokument2 SeitenHistory of JS: From Netscape to Modern WebJerraldNoch keine Bewertungen

- VELUXDokument16 SeitenVELUXEko SalamunNoch keine Bewertungen