International Journal of Electronics, Electrical and Computational System

IJEECS

ISSN 2348-117X

Volume 6, Issue 4

April 2017

Artificial Neural Network Optimization by a Hybrid IWD-PSO

Approach for Iris Classification

Jaspreet Kaur, Ashima Kalra

Electronics & Communication Engineering, Chandigarh Engineering College, Landran, INDIA

ABSTRACT

Classifying and identifying types on the basis of individual attributes and behavior is a first step and a major goal in the behavioral sciences. Contemporary statistical techniques do not always provide adequate results. A feed-forward artificial neural network (ANN) is a computational model inspired by the structure of the human brain. The main objective of this paper is to present the procedure for developing an ANN-based classifier that categorizes the Iris dataset. The problem concerns the recognition of Iris plant species on the basis of the following plant measurements: sepal length, sepal width, petal length, and petal width. This paper presents a new hybrid of PSO (particle swarm optimization) and IWD (intelligent water drop), the hybrid IWD-PSO approach, for optimizing an ANN for Iris classification, and compares its performance with that of the individual PSO and IWD approaches. The simulation results show that the hybrid IWD-PSO approach performs better than stand-alone IWD and PSO in terms of accuracy and SSE with respect to the number of hidden nodes.

Keywords

Swarm Intelligence, Artificial Neural Network, Feed-forward Neural Network, Particle Swarm Optimization Approach, Intelligent Water Drop Approach.

1. INTRODUCTION

This section consists of two subsections. Subsection 1.1 gives a brief overview of artificial neural networks, their characteristics and applications. Subsection 1.2 introduces swarm intelligence.

1.1 Artificial Neural Network

An artificial neural network (ANN) is a simplified model of the human nervous system. It is composed of many artificial neurons that together achieve the required functionality, and it acts as an approximating function that maps inputs to outputs [9]. Its learning capability and adaptability to data sets make it relevant in various fields. The output of an ANN is a function of its inputs and weight values. Let $X_i$ be the inputs and $W_i$ the weights; the output Y of the ANN is then given as:

$$Y = \sum_{i=1}^{n} X_i W_i \qquad (1)$$

An ANN consists of three kinds of layers: input, hidden and output. The number of hidden layers may vary, and each layer consists of n neurons. Figure 1 shows the ANN system. The output of hidden neuron j is given as:

$$h_j = \sum_{i=1}^{n} x_i w_{ij} \qquad (2)$$

where $w_{ij}$ is the weight between input i and hidden neuron j. The final output Y at the output layer is obtained from the weighted sum of the hidden-neuron outputs, which is then passed through an activation function. The weighted output of the hidden layer is given as:

$$Y_i = \sum_{j=1}^{n} h_j v_j \qquad (3)$$

where $v_j$ are the hidden-to-output layer weights. $Y_s$ is the summation of the hidden layer outputs and is given as:

$$Y_s = \sum_{j=1}^{n} Y_j \qquad (4)$$

The final output using the sigmoid function is given as:

$$Y = \frac{1}{1 + e^{-Y_s}} \qquad (5)$$

The number of output neurons depends on the output variables to be mapped.
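As a minimal sketch of Eqs. (1) to (5), the Python snippet below computes the weighted input sum of each hidden neuron, combines the hidden outputs with the hidden-to-output weights and applies the sigmoid. It reads the equations for a single output neuron and omits bias terms; the function names and the toy weights are assumptions made for illustration and are not taken from the authors' MATLAB implementation.

```python
import math

def hidden_outputs(x, w):
    """Weighted input sum of each hidden neuron: h_j = sum_i x_i * w[i][j]."""
    n_hidden = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(n_hidden)]

def sigmoid(z):
    """Logistic activation used in Eq. (5)."""
    return 1.0 / (1.0 + math.exp(-z))

def network_output(x, w, v):
    """Weighted sum of hidden outputs (Y_s) passed through the sigmoid."""
    h = hidden_outputs(x, w)
    y_s = sum(h_j * v_j for h_j, v_j in zip(h, v))
    return sigmoid(y_s)

# One Iris-like sample (sepal length, sepal width, petal length, petal width)
x = [5.1, 3.5, 1.4, 0.2]
# Hypothetical weights: 4 inputs x 2 hidden neurons, then 2 hidden-to-output weights
w = [[0.1, -0.2], [0.0, 0.3], [-0.1, 0.1], [0.2, 0.0]]
v = [0.5, -0.5]

y = network_output(x, w, v)
print(round(y, 4))  # 0.53
```

With these toy values the hidden sums are 0.41 and 0.17, so the pre-activation is 0.12 and the sigmoid output is roughly 0.53.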

Figure 1. Artificial Neural Network system [5]

1.1.1 Features and characteristics of an effective ANN

It learns in the presence of noise.

It derives its computing power from its massively parallel distributed structure and its ability to learn and generalize.

Adaptivity: adapting the synaptic weights to changes in the surrounding environment

Fault tolerance

Evidential response

Uniformity of analysis and design

VLSI implementability

Neurobiological analogy

1.1.2 Applications of Artificial Neural Network

Pattern Classification

Clustering/Categorization

Function Approximation

Prediction/Forecasting

Stock market prediction

Travelling salesman’s problem

Medicine Applications

Image Compression

Industrial applications

Employee selection and hiring

1.2 Swarm Intelligence

Swarm intelligence (SI) approaches are meta-heuristic algorithms inspired by the collective intelligent behavior of groups of homogeneous insects, birds, etc. The difficulties of applying classical optimization techniques to wide-ranging engineering problems have contributed to the evolution of alternative solutions. Traditional optimization techniques are often trapped in local optima when solving problems with a large number of variables, a wide search space, etc. To overcome these problems, researchers have suggested SI approaches for obtaining a global optimum solution. A rich survey of the available classical and swarm approaches can be found in the literature [3] [4]. SI algorithms simulate the behavior of a group of biological entities, which is guided by learning, adaptation and evolution. Artificial neural networks are models inspired by biological neural networks. To improve the prediction accuracy of an ANN, we need to arrive at optimal values for ANN parameters such as the number of neurons in the input, output and hidden layers, the weight values and the activation function [1].

2. RESEARCH METHODOLOGY

We used a feed-forward neural network to classify the Iris data set, one of the benchmark data sets used to illustrate approaches to classification problems. We implemented PSO, IWD and the new hybrid IWD-PSO approach to optimize the ANN. The proposed approach is implemented in MATLAB (nntool toolbox) using the scaled conjugate gradient (trainscg) training function.

2.1 Iris Dataset

The Iris classification problem is a well-known benchmark classification problem and is used here to test the performance of the proposed hybrid approach. The Iris dataset consists of 150 samples divided into three classes: Setosa, Versicolor, and Virginica [2]. Each class accounts for 50 samples. This gives a matrix of size 150 × 3, which is used throughout the study. Every sample has four attributes:

a. Sepal Length,

b. Sepal Width,

c. Petal Length,

d. Petal Width.

2.2 Proposed ANN and proposed optimization approach

2.2.1 Proposed ANN architecture: We used ANNs with a single hidden layer and the structure 4-S-3 to solve this classification problem, where S is the number of hidden nodes, with S = 2, 3, 4, ..., 15. Figure 2 shows the proposed ANN system.

Figure 2. Proposed 4-S-3 ANN Architecture [2]
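To make the size of the search space for a 4-S-3 network concrete, the sketch below counts the connection weights and unpacks a flat candidate vector into the two weight matrices. It assumes that each candidate solution encodes all weights as one flat vector and that bias terms are omitted; neither detail is stated in the text, and the names `n_weights` and `decode_weights` are hypothetical.

```python
def n_weights(n_hidden, n_in=4, n_out=3):
    """Number of connection weights in an n_in-S-n_out network without biases."""
    return n_in * n_hidden + n_hidden * n_out

def decode_weights(flat, n_hidden, n_in=4, n_out=3):
    """Split a flat candidate vector into input-hidden (w) and hidden-output (v) weights."""
    if len(flat) != n_weights(n_hidden, n_in, n_out):
        raise ValueError("vector length does not match the network size")
    w = [flat[i * n_hidden:(i + 1) * n_hidden] for i in range(n_in)]
    offset = n_in * n_hidden
    v = [flat[offset + j * n_out:offset + (j + 1) * n_out] for j in range(n_hidden)]
    return w, v

# Search-space dimension for every hidden-layer size used in the study
sizes = {s: n_weights(s) for s in range(2, 16)}
print(sizes[2], sizes[15])  # 14 105

# Decode a dummy vector for S = 5 (35 weights)
w, v = decode_weights(list(range(35)), n_hidden=5)
print(len(w), len(w[0]), len(v), len(v[0]))  # 4 5 5 3
```

Under these assumptions the optimizer searches a 7S-dimensional space, from 14 dimensions at S = 2 to 105 at S = 15.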

2.2.2 Proposed hybrid IWD-PSO Algorithm: No single approach can completely solve all optimization problems. Combining existing approaches is one way to increase the likelihood of attaining a global optimum. IWD provides good-quality results using average values [6]. It has also been shown that IWD is capable of searching for the global optimum and has the property of convergence in value when compared with other methods. It is also flexible in dynamic environments, and pop-up threats are easily incorporated. PSO is one of the most widely used approaches in hybrid methods owing to its simplicity and convergence speed [7] [8]. To address the aforementioned problem, a hybrid algorithm of IWD and PSO (IWD-PSO) is proposed. In essence, the hybrid IWD-PSO combines the social communication ability of PSO with the global search capability of IWD. The pseudocode of the proposed algorithm is given below [10] [11]:

a) Input: Problem data set i.e. Iris data set

b) Output: An optimal solution

Formulate the optimization problem as a fully connected graph.

Initialize the static parameters, i.e. the parameters that are not changed during the search process.

c) Stopping Criterion: Set a fixed number of generations/iterations.

Initialize the dynamic parameters, i.e. the parameters that are changed during the search process.

d) Initialization Phase: Initialize the population/solutions.

Variables are initialized in the range [lb, ub].

Create Nodes in Graph.

Update the visited node list of each IWD to include the nodes just visited.

Complete the partial solution vectors for all the IWDs by visiting the next nodes in the graph using the probability function:

$$P_i^k(j) = \frac{f(\mathrm{soil}(i,j))}{\sum_{l \notin N_{visit}} f(\mathrm{soil}(i,l))} \qquad (6)$$

Update the velocity of kth IWD after moving from current node to next node using:

$$V_k(t+1) = V_k(t) + \frac{a_v}{b_v + c_v \cdot \mathrm{soil}(i,j)} \qquad (7)$$

Compute the amount of soil removed from the local path between the current node and the next visited node, and update the soil loaded with the kth IWD:

$$\Delta\mathrm{soil}(i,j) = \frac{a_s}{b_s + c_s \cdot \mathrm{time}(i,j; V_k(t+1))} \qquad (8)$$

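$$\mathrm{soil}_k = \mathrm{soil}_k + \Delta\mathrm{soil}(i,j) \qquad (9)$$

Update the soil of the path from the current node to the next node:

$$\mathrm{soil}(i,j) = (1-\rho_n)\cdot\mathrm{soil}(i,j) - \rho_n\cdot\Delta\mathrm{soil}(i,j) \qquad (10)$$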

e) Iteration Best solution: find the Ibest solution from the solutions of all IWD as:

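$$I_{best} = \underset{\forall T_{iwd}}{\arg\min/\max}\; q(T_{iwd}) \qquad (11)$$

Update the soils on the paths that form the current iteration-best solution as:

$$\mathrm{soil}(i,j) = (1+\rho_{iwd})\cdot\mathrm{soil}(i,j) - \rho_{iwd}\cdot\mathrm{soil}_{k}^{best}\cdot\frac{1}{q(I_{best})} \qquad (12)$$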

f) Total global best solution: update the total best/global best solution as:

$$T_{best} = \begin{cases} T_{best} & \text{if } q(T_{best}) > q(I_{best}) \\ I_{best} & \text{otherwise} \end{cases} \qquad (13)$$

Increment the iteration number by:

IterCount = IterCount + 1 (14)

The PSO algorithm is then applied to the total (global) best solution.
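The per-move updates in Eqs. (6) to (10) can be sketched in Python as follows. The text does not define f(soil) or time(i, j; V), so the snippet assumes a commonly used form in the IWD literature: f is the inverse of a small constant plus the soil (shifted when any candidate soil is negative), and the travel time is a heuristic distance `hud` divided by the updated velocity. The function names, `eps`, `hud` and the initial path soil of 10 are hypothetical; the remaining defaults follow the parameter values reported for the experiments.

```python
import random

def select_next(soil_row, visited, rng, eps=0.01):
    """Eq. (6): choose an unvisited node with probability proportional to f(soil(i, j)).

    Assumes at least one node is still unvisited.
    """
    candidates = [j for j in range(len(soil_row)) if j not in visited]
    lowest = min(soil_row[j] for j in candidates)
    shift = lowest if lowest < 0 else 0.0  # keep the shifted soil non-negative
    # Assumed f: paths with less soil are more attractive
    weights = [1.0 / (eps + soil_row[j] - shift) for j in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

def move_drop(vel, soil_drop, soil_ij, a_v=1.0, b_v=0.01, c_v=1.0,
              a_s=1.0, b_s=0.01, c_s=1.0, rho_n=0.9, hud=1.0):
    """Apply Eqs. (7)-(10) for one move of a water drop along edge (i, j)."""
    vel_new = vel + a_v / (b_v + c_v * soil_ij)            # Eq. (7)
    travel_time = hud / vel_new                            # assumed definition of time
    d_soil = a_s / (b_s + c_s * travel_time)               # Eq. (8)
    soil_drop_new = soil_drop + d_soil                     # Eq. (9)
    soil_ij_new = (1 - rho_n) * soil_ij - rho_n * d_soil   # Eq. (10)
    return vel_new, soil_drop_new, soil_ij_new

rng = random.Random(0)
next_node = select_next([5.0, 1.0, 3.0, -2.0], visited={0}, rng=rng)

# InitVel = 100 and InitSoilIWD = 4 as in the experiments; path soil 10 is hypothetical
vel_new, soil_drop_new, soil_ij_new = move_drop(vel=100.0, soil_drop=4.0, soil_ij=10.0)
print(next_node, vel_new, soil_drop_new, soil_ij_new)
```

Note that with a local update rate of 0.9 the path soil can become negative after a single move, which is why the selection function shifts the soil values before inverting them.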

2.2.3 Criteria for Evaluating Performance: Performance is evaluated on the basis of the sum of squared errors (SSE) and the accuracy rate. The accuracy rate is the proportion of objects that the classifier classifies correctly and is computed as follows:

$$\text{Accuracy} = \frac{\text{Number of correctly classified objects by the classifier}}{\text{Number of objects in the dataset}} \qquad (15)$$

These criteria are commonly used to assess how well an algorithm works. For the best approach, the accuracy rate should be maximal and the SSE minimal.
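Both criteria can be computed in a few lines. The sketch below assumes one-hot target vectors over the three classes and takes the predicted class to be the output neuron with the largest value; the SSE is taken as the sum of squared differences over all outputs and samples. These conventions, like the toy data, are assumptions for illustration and are not spelled out in the text.

```python
def sse(targets, outputs):
    """Sum of squared errors over all samples and output neurons."""
    return sum((t - o) ** 2
               for t_row, o_row in zip(targets, outputs)
               for t, o in zip(t_row, o_row))

def accuracy(targets, outputs):
    """Eq. (15): correctly classified samples divided by all samples."""
    correct = sum(1 for t_row, o_row in zip(targets, outputs)
                  if t_row.index(max(t_row)) == o_row.index(max(o_row)))
    return correct / len(targets)

# Four toy samples with one-hot targets and hypothetical network outputs
targets = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
outputs = [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.1, 0.6, 0.3], [0.3, 0.5, 0.2]]

print(accuracy(targets, outputs))       # 0.75
print(round(sse(targets, outputs), 2))  # 1.4
```

Here the third sample is misclassified, so three of four samples are correct, and the squared errors of the four samples (0.02, 0.14, 0.86 and 0.38) add up to 1.40.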

3. EXPERIMENTAL RESULTS

To test and evaluate a proposed algorithm for training ANNs, experiments are commonly performed on synthetic and real (benchmark) problem sets. In the following experiment, we used a classification example to compare the performance of the IWD, PSO and IWD-PSO algorithms in training ANNs.

For the ANN trained by IWD, the velocity updating parameters are set to $a_v$ = 1, $b_v$ = 0.01 and $c_v$ = 1, and the soil updating parameters are set to $a_s$ = 1, $b_s$ = 0.01 and $c_s$ = 1. The local soil updating parameter is set to $\rho_n$ = 0.9 and the global soil updating parameter to $\rho_{IWD}$ = 0.9. The initial velocity of every IWD and the initial soil loaded with every IWD are user defined and are set to InitVel = 100 and InitSoilIWD = 4, respectively.

For the ANN trained by PSO, the maximum number of iterations is set to 50, the inertia weight is set to 1, and the correction parameters c1 and c2 are set to 2. For the ANN trained by the hybrid IWD-PSO, the parameter settings are the same as for the individual algorithms.
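For reference, a standard PSO loop with the reported settings (50 iterations, inertia weight 1, c1 = c2 = 2) can be sketched as below. The swarm size, the search bounds, the position clamping and the sphere function used in place of the network's SSE are assumptions chosen to keep the example self-contained; the text does not specify them, and this is not the authors' MATLAB code.

```python
import random

def pso(objective, dim, n_particles=20, iters=50, w=1.0, c1=2.0, c2=2.0,
        lb=-1.0, ub=1.0, seed=0):
    """Minimize `objective` with a basic global-best PSO."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda k: pbest_val[k])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for k in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive pull (personal best) + social pull (global best)
                vel[k][d] = (w * vel[k][d]
                             + c1 * r1 * (pbest[k][d] - pos[k][d])
                             + c2 * r2 * (gbest[d] - pos[k][d]))
                # Keep the particle inside the assumed bounds [lb, ub]
                pos[k][d] = min(ub, max(lb, pos[k][d] + vel[k][d]))
            val = objective(pos[k])
            if val < pbest_val[k]:
                pbest[k], pbest_val[k] = pos[k][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[k][:], val
    return gbest, gbest_val

def sphere(p):
    """Toy objective standing in for the SSE of a network."""
    return sum(x * x for x in p)

best, best_val = pso(sphere, dim=3)
print(best, best_val)
```

In the hybrid scheme described above, a loop of this kind would be started from the best solution found by IWD rather than from purely random positions.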

The MATLAB version used is R2013a. Of the 150 samples, 70% are used for training, 15% for validation and 15% for testing. The network architecture is 4-S-3, i.e. the input layer has 4 nodes, the hidden layer has S nodes with S = 2, 3, ..., 15, and the output layer has 3 nodes. Every procedure was run five times in succession, and the mean values of these five results are shown in Table 1.
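The 70/15/15 split of the 150 samples can be illustrated with the short sketch below. It assumes a random shuffle and rounds the training and validation counts down, leaving the remainder for testing; the exact rounding used by the MATLAB toolbox may differ, and the function name `split_indices` is hypothetical.

```python
import random

def split_indices(n_samples=150, train_pct=70, val_pct=15, seed=0):
    """Shuffle sample indices and split them into training, validation and test sets."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    n_train = n_samples * train_pct // 100   # integer arithmetic avoids float rounding
    n_val = n_samples * val_pct // 100
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]             # remainder goes to testing
    return train, val, test

train, val, test = split_indices()
print(len(train), len(val), len(test))  # 105 22 23
```

Because 15% of 150 is 22.5, the validation and test sets cannot both hold exactly 15% of the data; under the rounding assumed here they hold 22 and 23 samples.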

Figure 3(a-f) shows the convergence of IWD-ANN, PSO-ANN, and IWD-PSO ANN based on the average SSE values for S = 10, 11, 12, 13, 14, and 15. The figure indicates that IWD-PSO ANN achieves a trade-off between avoiding premature convergence and exploring the whole search space for all of these numbers of hidden nodes. Figures 3a and 3e, for S = 10 and S = 14 respectively, show that the hybrid approach gives a lower SSE than the IWD-ANN and PSO-ANN approaches. The lowest SSE for IWD-PSO ANN is 3.8085 (at S = 14, Table 1).

Figure 4(a-b) shows the testing and training accuracy, respectively, for all three approaches. IWD-PSO ANN has a better accuracy rate than PSO-ANN and IWD-ANN for most values of S (Table 1). Its best training accuracy is 0.995, compared with 0.992 for IWD-ANN and 0.989 for PSO-ANN.

These results indicate that IWD-PSO ANN solves the Iris classification problem more reliably and accurately than PSO-ANN and IWD-ANN. This improvement is attributed to the proposed hybrid IWD-PSO algorithm.

4. CONCLUSIONS AND FUTURE SCOPE

Various enhancements have been made in the area of optimization in order to achieve a minimum sum of squared errors while maintaining the reliability and accuracy of the optimization approaches, but the results of previous work are not fully satisfactory. In this study, we proposed a new hybrid IWD-PSO approach based on the IWD and PSO approaches. This approach combines the PSO algorithm's convergence speed with the IWD algorithm's global search capability. It therefore offers a trade-off between avoiding premature convergence and exploring the whole search space, and it yields better search results. IWD, PSO and IWD-PSO are used as optimization approaches for ANNs. The comparison shows that IWD-PSO-ANN outperforms IWD-ANN and PSO-ANN in terms of SSE and accuracy rate for most of the tested numbers of hidden nodes. It can be concluded that the proposed hybrid IWD-PSO approach is suitable for use as an

optimization approach for ANNs. The results of the present study also show that a comparative analysis of different optimization approaches is helpful in enhancing the performance of a neural network. In future work, the hybrid IWD-PSO approach can be applied to further optimization problems.

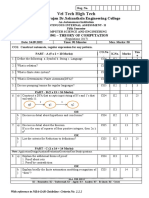

Table 1. Comparison of the performance of the PSO-ANN, IWD-ANN and IWD-PSO-ANN in the Iris classification problem

| Hidden Neurons (S) | Algorithm | SSE | Training Accuracy | Testing Accuracy |
|---|---|---|---|---|
| 2 | IWD | 4.3271 | 0.940 | 0.956 |
| 2 | PSO | 4.5906 | 0.951 | 0.926 |
| 2 | IWD-PSO | 4.2219 | 0.976 | 0.982 |
| 3 | IWD | 4.1049 | 0.974 | 0.970 |
| 3 | PSO | 3.9423 | 0.974 | 0.986 |
| 3 | IWD-PSO | 4.0707 | 0.984 | 0.987 |
| 4 | IWD | 4.2825 | 0.983 | 0.979 |
| 4 | PSO | 5.1209 | 0.967 | 0.980 |
| 4 | IWD-PSO | 4.1487 | 0.974 | 0.986 |
| 5 | IWD | 4.1607 | 0.978 | 0.967 |
| 5 | PSO | 4.2199 | 0.973 | 0.988 |
| 5 | IWD-PSO | 4.0709 | 0.985 | 0.999 |
| 6 | IWD | 4.1368 | 0.977 | 0.974 |
| 6 | PSO | 4.2064 | 0.973 | 0.998 |
| 6 | IWD-PSO | 4.0520 | 0.980 | 0.994 |
| 7 | IWD | 4.0530 | 0.973 | 0.985 |
| 7 | PSO | 4.0570 | 0.975 | 0.985 |
| 7 | IWD-PSO | 3.9484 | 0.978 | 0.995 |
| 8 | IWD | 3.8659 | 0.973 | 0.935 |
| 8 | PSO | 3.8851 | 0.989 | 0.936 |
| 8 | IWD-PSO | 3.8622 | 0.991 | 0.937 |
| 9 | IWD | 3.8559 | 0.992 | 0.933 |
| 9 | PSO | 3.9790 | 0.972 | 0.881 |
| 9 | IWD-PSO | 3.8365 | 0.995 | 0.961 |
| 10 | IWD | 3.9365 | 0.973 | 0.990 |
| 10 | PSO | 4.0809 | 0.966 | 0.942 |
| 10 | IWD-PSO | 3.8390 | 0.976 | 0.999 |
| 11 | IWD | 4.0952 | 0.973 | 0.995 |
| 11 | PSO | 3.8868 | 0.974 | 0.972 |
| 11 | IWD-PSO | 3.8483 | 0.976 | 0.998 |
| 12 | IWD | 3.9037 | 0.971 | 0.951 |
| 12 | PSO | 4.0293 | 0.973 | 0.881 |
| 12 | IWD-PSO | 3.9036 | 0.975 | 0.994 |
| 13 | IWD | 4.0735 | 0.970 | 0.903 |
| 13 | PSO | 3.9731 | 0.973 | 0.960 |
| 13 | IWD-PSO | 3.9097 | 0.976 | 0.984 |
| 14 | IWD | 4.0435 | 0.978 | 0.954 |
| 14 | PSO | 3.9355 | 0.964 | 0.953 |
| 14 | IWD-PSO | 3.8085 | 0.979 | 0.997 |
| 15 | IWD | 3.8211 | 0.981 | 0.954 |
| 15 | PSO | 3.9518 | 0.987 | 0.934 |
| 15 | IWD-PSO | 3.8185 | 0.987 | 0.977 |
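The SSE column of Table 1 can be checked with a few lines of Python. The values below are copied from the table; the script itself is only an illustrative cross-check and is not part of the original experiments.

```python
# (S, IWD SSE, PSO SSE, IWD-PSO SSE), copied from Table 1
SSE_ROWS = [
    (2, 4.3271, 4.5906, 4.2219),
    (3, 4.1049, 3.9423, 4.0707),
    (4, 4.2825, 5.1209, 4.1487),
    (5, 4.1607, 4.2199, 4.0709),
    (6, 4.1368, 4.2064, 4.0520),
    (7, 4.0530, 4.0570, 3.9484),
    (8, 3.8659, 3.8851, 3.8622),
    (9, 3.8559, 3.9790, 3.8365),
    (10, 3.9365, 4.0809, 3.8390),
    (11, 4.0952, 3.8868, 3.8483),
    (12, 3.9037, 4.0293, 3.9036),
    (13, 4.0735, 3.9731, 3.9097),
    (14, 4.0435, 3.9355, 3.8085),
    (15, 3.8211, 3.9518, 3.8185),
]

# Hidden-layer sizes where the hybrid has the strictly lowest SSE
hybrid_wins = [s for s, iwd, pso, hyb in SSE_ROWS if hyb < min(iwd, pso)]
exceptions = [s for s, _, _, _ in SSE_ROWS if s not in hybrid_wins]

# Configuration with the lowest hybrid SSE
best_s, best_sse = min(((s, hyb) for s, _, _, hyb in SSE_ROWS), key=lambda p: p[1])

print(len(hybrid_wins), "of", len(SSE_ROWS))  # 13 of 14
print(exceptions)                             # [3]
print(best_s, best_sse)                       # 14 3.8085
```

The hybrid has the lowest SSE for every tested S except S = 3, where PSO-ANN is lower, and its overall minimum of 3.8085 occurs at S = 14.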

Figure 3 (a-f). Convergence of IWD-ANN, PSO-ANN, and IWD-PSO-ANN in the Iris classification problem with S = 10, 11, 12, 13, 14, and 15.

Figure 4 (a-b). Accuracy rate for IWD-ANN, PSO-ANN, and IWD-PSO-ANN in the Iris classification problem for S = 2 to 15.

ACKNOWLEDGMENT

The authors would like to thank the Direction of Research & Innovation Centre in CEC-ECE Department of

CGC Landran for the special support that made possible the preparation of this paper.

REFERENCES

[1] M. Swain, S.K. Dash, S. Dash, A. Mohapatra, “An Approach for Iris Plant Classification using Neural Network”, International Journal on Soft Computing (IJSC), Vol. 3, pp. 79-89, February 2012.

[2] J.F. Chen, Q. Hung Do, H.N. Hsieh, “Training Artificial Neural Networks by a Hybrid PSO-CS Algorithm”, MDPI Algorithms, Vol. 8, pp. 292-308, 2015.

[3] A. Kalra, S. Kumar, S.S. Waliya, “ANN Training: A Survey of classical and Soft Computing Approaches”, International Journal of Control Theory and Applications, Vol. 9, pp. 715-736, Dec. 2016.

[4] A. Kalra, S. Kumar, S.S. Waliya, “ANN Training: A Review of Soft Computing Approaches”, International Journal of Electrical & Electronics Engineering, Vol. 2, Spl. Issue 2, pp. 193-205, 2015.

[5] M. Rostami, M. Piroozbakht, “Comparing of Three Meta-heuristic Algorithm for Artificial Neural Network Training with Case Study of Stock Price Forecasting”, International Journal of Advanced Studies in Computer Science and Engineering (IJASCSE), Vol. 4, pp. 25-34, 2015.

[6] S. Pothumani, J. Sridhar, “A Survey on Applications of IWD Algorithm”, International Journal of Innovative Research in Computer and Communication Engineering, Vol. 3, pp. 1-6, 2015.

[7] D.P. Rini, S.M. Shamsuddin, S.S. Yuhaniz, “Particle Swarm Optimization: Technique, System and Challenges”, International Journal of Computer Applications, Vol. 14, pp. 0975-8887, January 2011.

[8] Qinghai Bai, “Analysis of Particle Swarm Optimization Algorithm”, Computer and Information Science, Vol. 3, No. 1, pp. 180-184, Feb. 2010.

[9] I.A. Basheer, M. Hajmeer, “Artificial neural networks: fundamentals, computing, design, and application”, Journal of Microbiological Methods, Vol. 43, pp. 3-31, 2000.

[10] H.S. Hosseini, “The intelligent water drops algorithm: a nature-inspired swarm-based optimization algorithm”, International Journal of Bio-Inspired Computation, Vol. 1, pp. 71-79, 2009.

[11] H.S. Hosseini, “An approach to continuous optimization by Intelligent Water Drop Algorithm”, Elsevier Procedia - Social and Behavioural Sciences, pp. 224-229, 2011.
