Java Streams, Part 2: Aggregating with Streams

Slice, dice, and chop data with ease

Brian Goetz, Java Language Architect, Oracle

July 06, 2016 (First published May 09, 2016)

Explore the Java™ Streams library, introduced in Java SE 8, in this series by Java Language

Architect Brian Goetz. By taking advantage of the power of lambda expressions, the

java.util.stream package makes it easy to run functional-style queries on collections,

arrays, and other data sets. In this installment, learn how to use the java.util.stream

package to aggregate and summarize data efficiently.

Part 1 in the Java Streams series introduced you to the java.util.stream library added in Java

SE 8. This second installment focuses on one of the most important and flexible aspects of the

Streams library — the ability to aggregate and summarize data.

The "accumulator antipattern"

The first example in Part 1 performed a simple summation with Streams, as shown in Listing 1.

Listing 1. Computing an aggregate value declaratively with Streams

int totalSalesFromNY
    = txns.stream()
          .filter(t -> t.getSeller().getAddr().getState().equals("NY"))
          .mapToInt(t -> t.getAmount())
          .sum();

Listing 2 shows how this example might be written the "old way."

Listing 2. Computing the same aggregate value imperatively

int sum = 0;
for (Txn t : txns) {
    if (t.getSeller().getAddr().getState().equals("NY"))
        sum += t.getAmount();
}

© Copyright IBM Corporation 2016 Trademarks

Java Streams, Part 2: Aggregating with Streams Page 1 of 12

developerWorks® ibm.com/developerWorks/

About this series

With the java.util.stream package, you can concisely and declaratively express

possibly-parallel bulk operations on collections, arrays, and other data sources. In this series

by Java Language Architect Brian Goetz, get a comprehensive understanding of the Streams

library and learn how to use it to best advantage.

Part 1 offers several reasons why the new way is preferable, despite being longer than the old

way:

• The code is clearer because it is cleanly factored into the composition of simple operations.

• The code is expressed declaratively (describing the desired result) rather than imperatively (a

step-by-step procedure for how to compute the result).

• This approach scales more cleanly as the query being expressed gets more complicated.

Some additional reasons apply to the particular case of aggregation. Listing 2 is an illustration

of the accumulator antipattern, where the code starts out declaring and initializing a mutable

accumulator variable (sum) and then proceeds to update the accumulator in a loop. Why

is this bad? First, this style of code is difficult to parallelize. Without coordination (such as

synchronization), every access to the accumulator would be a data race (and with coordination,

the contention resulting from coordination would more than undermine the efficiency gain available

from parallelism).

Another reason why the accumulator approach is less desirable is that it models the computation

at too low a level — at the level of individual elements, rather than on the data set as a whole.

"The sum of all the transaction amounts" is a more abstract and direct statement of the goal

than "Iterate through the transaction amounts one by one in order, adding each amount to an

accumulator that has been previously initialized to zero."

So, if imperative accumulation is the wrong tool, what's the right one? In this specific problem,

you've seen a hint of the answer — the sum() method — but this is merely a special case of a

powerful and general technique, reduction. Reduction is simple, flexible, and parallelizable, and

operates at a higher level of abstraction than imperative accumulation.

Reduction

Reduction (also known as folding) is a technique from functional programming that abstracts over

many different accumulation operations. Given a nonempty sequence X of elements x1, x2, ..., xn

of type T and a binary operator on T (represented here by *), the reduction of X under * is defined

as:

(x1 * x2 * ... * xn)

When applied to a sequence of numbers using ordinary addition as the binary operator, reduction

is simply summation. But many other operations can be described by reduction. If the binary

operator is "take the larger of the two elements" (which could be represented in Java by the

lambda expression (x,y) -> Math.max(x,y), or more simply as the method reference Math::max),

reduction corresponds to finding the maximal value.

By describing an accumulation as a reduction instead of with the accumulator antipattern, you

describe the computation in a more abstract and compact way, as well as a more parallel-

friendly way — provided your binary operator satisfies a simple condition: associativity. Recall that

a binary operator * is associative if, for any elements a, b, and c:

((a * b) * c) = (a * (b * c))

Associativity means that grouping doesn't matter. If the binary operator is associative, the

reduction can be safely performed in any order. In a sequential execution, the natural order

of execution is from left to right; in a parallel execution, the data is partitioned into segments,

each segment is reduced separately, and the results are combined. Associativity ensures that these

two approaches yield the same answer. This is easier to see if the definition of associativity is

expanded to four terms:

(((a * b) * c) * d) = ((a * b) * (c * d))

The left side corresponds to a typical sequential computation; the right side corresponds to a

partitioned execution that would be typical of a parallel execution where the input sequence is

broken into parts, the parts reduced in parallel, and the partial results combined with *. (Perhaps

surprisingly, * need not be commutative, though many operators commonly used for reduction,

such as plus and max, are. An example of a binary operator that's associative but not

commutative is string concatenation.)
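To make the grouping argument concrete, the following sketch reduces the same list of strings in two ways: strictly left to right, and as two halves that are reduced separately and then combined. The class and method names (AssociativityDemo, reduceLeftToRight, reducePartitioned) are invented for this illustration, and the fixed midpoint split is an assumption; a real parallel stream chooses its own split points.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.BinaryOperator;

final class AssociativityDemo {

    // Sequential shape: (((a * b) * c) * d); assumes a nonempty list
    static <T> T reduceLeftToRight(List<T> xs, BinaryOperator<T> op) {
        T result = xs.get(0);
        for (int i = 1; i < xs.size(); i++) {
            result = op.apply(result, xs.get(i));
        }
        return result;
    }

    // Partitioned shape: ((a * b) * (c * d)); assumes at least two elements
    static <T> T reducePartitioned(List<T> xs, BinaryOperator<T> op) {
        int mid = xs.size() / 2;
        T left = reduceLeftToRight(xs.subList(0, mid), op);
        T right = reduceLeftToRight(xs.subList(mid, xs.size()), op);
        return op.apply(left, right);
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("a", "b", "c", "d");
        BinaryOperator<String> concat = String::concat;

        // Concatenation is associative, so both groupings agree
        System.out.println(reduceLeftToRight(words, concat));
        System.out.println(reducePartitioned(words, concat));

        // It is not commutative: swapping the operands changes the result
        System.out.println(concat.apply("a", "b").equals(concat.apply("b", "a")));
    }
}
```

Both reductions keep the elements in their original order, which is why commutativity is not needed: only the grouping changes, never the order of the operands.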

The Streams library has several methods for reduction, including:

Optional<T> reduce(BinaryOperator<T> op)

T reduce(T identity, BinaryOperator<T> op)

The simpler of these methods takes only an associative binary operator and computes the

reduction of the stream elements under that operator. The result is described as an Optional; if the

input stream is empty, the result of reduction is also empty. (If the input has only a single element,

the result of the reduction is that element.) If you had a collection of strings, you could compute the

concatenation of the elements as:

Optional<String> concatenated = strings.stream().reduce(String::concat);

With the second of the two method forms, you provide an identity value, which is also used as the

result if the stream is empty. The identity value must satisfy the constraints that for all x:

identity * x = x

x * identity = x

Not all binary operators have identity values, and when they do, they might not yield the results

that you're looking for. For example, when computing maxima, you might be tempted to use the

value Integer.MIN_VALUE as your identity (it does satisfy the requirements). But the result when

that identity is used on an empty stream might not be what you want; you wouldn't be able to tell

the difference between an empty input and a nonempty input containing only Integer.MIN_VALUE.

(Sometimes that's not a problem, and sometimes it is — which is why the Streams library leaves it

to the client to specify, or not specify, an identity.)

For string concatenation, the identity is the empty string, so you could recast the previous example

as:

String concatenated = strings.stream().reduce("", String::concat);

Similarly, you could describe integer summation over an array as:

int sum = IntStream.of(ints).reduce(0, (x, y) -> x + y);

(In practice, though, you'd use the IntStream.sum() convenience method.)
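The trade-off between the two reduce() forms on empty input, described above for maxima, can be seen directly in a small sketch. The class and method names here (IdentityDemo, maxOrEmpty, maxOrMinValue) are hypothetical and exist only for this illustration.

```java
import java.util.Optional;
import java.util.stream.Stream;

final class IdentityDemo {

    // One-argument form: an empty stream yields an empty Optional
    static Optional<Integer> maxOrEmpty(Stream<Integer> values) {
        return values.reduce(Integer::max);
    }

    // Two-argument form: an empty stream yields the identity value
    static int maxOrMinValue(Stream<Integer> values) {
        return values.reduce(Integer.MIN_VALUE, Integer::max);
    }

    public static void main(String[] args) {
        // Empty input is distinguishable with the Optional-returning form
        System.out.println(maxOrEmpty(Stream.empty()).isPresent());

        // With an identity, these two inputs produce the same answer
        System.out.println(maxOrMinValue(Stream.empty()));
        System.out.println(maxOrMinValue(Stream.of(Integer.MIN_VALUE)));
    }
}
```

Which form is appropriate depends on whether the caller needs to tell "no elements" apart from "the smallest possible element."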

Reduction needn't apply only to integers and strings; it can be applied in any situation where you

want to reduce a sequence of elements down to a single element of that type. For example, you

can compute the tallest person via reduction:

Comparator<Person> byHeight = Comparator.comparingInt(Person::getHeight);

BinaryOperator<Person> tallerOf = BinaryOperator.maxBy(byHeight);

Optional<Person> tallest = people.stream().reduce(tallerOf);

If the provided binary operator isn't associative, or the provided identity value isn't actually an

identity for the binary operator, then when the operation is executed in parallel, the result might be

incorrect, and different executions on the same data set might produce different results.
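One way to see why a bogus identity goes wrong is to simulate a partitioned execution by hand. The sketch below assumes a made-up "identity" of 10 for addition and a fixed split into two segments; both are illustrative choices, and a real parallel stream decides for itself how many segments to use, which is why its results can vary from run to run.

```java
import java.util.Arrays;
import java.util.List;

final class BadIdentityDemo {

    // Hypothetical "identity" that is not an identity for addition: 10 + x != x
    static final int BAD_IDENTITY = 10;

    // What a sequential reduce(BAD_IDENTITY, Integer::sum) computes
    static int sequential(List<Integer> xs) {
        return xs.stream().reduce(BAD_IDENTITY, Integer::sum);
    }

    // A hand-rolled two-segment execution: each segment starts from the
    // identity, and the partial results are then combined with the operator
    static int twoSegments(List<Integer> xs) {
        int mid = xs.size() / 2;
        int left = xs.subList(0, mid).stream().reduce(BAD_IDENTITY, Integer::sum);
        int right = xs.subList(mid, xs.size()).stream().reduce(BAD_IDENTITY, Integer::sum);
        return Integer.sum(left, right);
    }

    public static void main(String[] args) {
        List<Integer> xs = Arrays.asList(1, 2, 3);
        System.out.println(sequential(xs));   // 16: the bogus identity is added once
        System.out.println(twoSegments(xs));  // 26: it is added once per segment
    }
}
```

With a true identity (0 for addition), both methods would return 6 regardless of how the input is split.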

Mutable reduction

Reduction takes a sequence of values and reduces it to a single value, such as the sequence's

sum or its maximal value. But sometimes you don't want a single summary value; instead, you

want to organize the results into a data structure like a List or Map, or reduce it to more than one

summary value. In that case, you should use the mutable analogue of reduce, called collect.

Consider the simple case of accumulating elements into a List. Using the accumulator antipattern,

you might write it this way:

ArrayList<String> list = new ArrayList<>();
for (Person p : people)
    list.add(p.toString());

Just as reduction is a better alternative to accumulation when the accumulator variable is a

simple value, there's also a better alternative when the accumulator result is a more complex data

structure. The building blocks of reduction are an identity value and a means of combining two

values into a new value; the analogues for mutable reduction are:

• A means of producing an empty result container

• A means of incorporating a new element into a result container

• A means of merging two result containers

These building blocks can be easily expressed as functions. The third of these functions enables

mutable reduction to occur in parallel: You can partition the data set, produce an intermediate

accumulation for each section, and then merge the intermediate results. The Streams library has a

collect() method that takes these three functions:

<R> R collect(Supplier<R> resultSupplier,
              BiConsumer<R, T> accumulator,
              BiConsumer<R, R> combiner)
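As a sketch of how the three functions fit together, the list-building loop shown earlier can be written with the three-argument collect(). The names CollectToListDemo and describeAll are invented for this example, and a generic List<?> stands in for the article's collection of Person objects.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class CollectToListDemo {

    // Collect the string form of each element without an external accumulator
    static List<String> describeAll(List<?> items) {
        return items.stream()
                    .map(Object::toString)
                    .collect(ArrayList::new,      // produce an empty result container
                             ArrayList::add,      // incorporate one element
                             ArrayList::addAll);  // merge two result containers
    }

    public static void main(String[] args) {
        System.out.println(describeAll(Arrays.asList(1, 2.5, "three")));
    }
}
```

No mutable variable is visible to the calling code; the library creates and owns every container it fills.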

In the preceding section, you saw an example of using reduction to compute string concatenation.

That idiom produces the correct result, but because strings are immutable in Java and

concatenation entails copying the whole string, it will have O(n²) runtime (some strings will be

copied many times). You can express string concatenation more efficiently by collecting into a

StringBuilder:

StringBuilder concat = strings.stream()
                              .collect(() -> new StringBuilder(),
                                       (sb, s) -> sb.append(s),
                                       (sb, sb2) -> sb.append(sb2));

This approach uses a StringBuilder as the result container. The three functions passed to

collect() use the default constructor to create an empty container, the append(String) method to

add an element to the container, and the append(StringBuilder) method to merge one container

into another. This code is probably better expressed using method references rather than lambdas:

StringBuilder concat = strings.stream()
                              .collect(StringBuilder::new,
                                       StringBuilder::append,
                                       StringBuilder::append);

Similarly, to collect a stream into a HashSet, you could do this:

Set<String> uniqueStrings = strings.stream()
                                   .collect(HashSet::new,
                                            HashSet::add,
                                            HashSet::addAll);

In this version, the result container is a HashSet rather than a StringBuilder, but the approach

is the same: Use the default constructor to create a new result container, the add() method to

incorporate a new element to the set, and the addAll() method to merge two sets. It's easy to see

how to adapt this code to any other sort of collection.

You might think, because a mutable result container (a StringBuilder or HashSet) is being used,

that this is also an example of the accumulator antipattern. However, that's not the case. The

analogue of the accumulator antipattern in this case would be:

Set<String> set = new HashSet<>();

strings.stream().forEach(s -> set.add(s));

Just as reduction can parallelize safely provided the combining function is associative and free of

interfering side effects, mutable reduction with Stream.collect() can parallelize safely if it meets

certain simple consistency requirements (outlined in the specification for collect()). The key

difference is that, with the forEach() version, multiple threads are trying to access a single result

container simultaneously, whereas with parallel collect(), each thread has its own local result

container, the results of which are merged afterward.
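The thread-local-container behavior can be exercised with a parallel stream. This is a minimal sketch with invented names (ParallelCollectDemo, remaindersInParallel); it shows only the safe collect() form, because the unsafe forEach() variant has no reliable outcome to demonstrate.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.stream.IntStream;

final class ParallelCollectDemo {

    // Each partial result goes into its own HashSet; the library merges
    // the partial sets with addAll, so no set is shared while it is filled
    static Set<Integer> remaindersInParallel(int upperBound, int modulus) {
        return IntStream.range(0, upperBound)
                        .parallel()
                        .map(i -> i % modulus)
                        .boxed()
                        .collect(HashSet::new, HashSet::add, HashSet::addAll);
    }

    public static void main(String[] args) {
        System.out.println(remaindersInParallel(100_000, 7));
    }
}
```

HashSet is not thread-safe, yet this code needs no synchronization, because collect() never lets two threads touch the same container at the same time.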

Collectors

The relationship among the three functions passed to collect() (creating, populating, and

merging result containers) is important enough to be given its own abstraction, Collector, along

with a corresponding simplified version of collect(). The string-concatenation example can be

rewritten as:

String concat = strings.stream().collect(Collectors.joining());

And the collect-to-set example can be rewritten as:

Set<String> uniqueStrings = strings.stream().collect(Collectors.toSet());

The Collectors class contains factories for many common aggregation operations, such as

accumulation to collections, string concatenation, reduction and other summary computation,

and the creation of summary tables (via groupingBy()). Table 1 contains a partial list of built-in

collectors, and if these aren't sufficient, it's easy to write your own (see the "Custom collectors"

section).

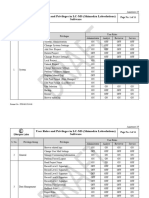

Table 1. Built-in collectors

| Collector | Behavior |
| --- | --- |
| toList() | Collect the elements to a List. |
| toSet() | Collect the elements to a Set. |
| toCollection(Supplier<Collection>) | Collect the elements to a specific kind of Collection. |
| toMap(Function<T, K>, Function<T, V>) | Collect the elements to a Map, transforming the elements into keys and values according to the provided mapping functions. |
| summingInt(ToIntFunction<T>) | Compute the sum of applying the provided int-valued mapping function to each element (also versions for long and double). |
| summarizingInt(ToIntFunction<T>) | Compute the sum, min, max, count, and average of the results of applying the provided int-valued mapping function to each element (also versions for long and double). |
| reducing() | Apply a reduction to the elements (usually used as a downstream collector, such as with groupingBy) (various versions). |
| partitioningBy(Predicate<T>) | Divide the elements into two groups: those for which the supplied predicate holds and those for which it doesn't. |
| partitioningBy(Predicate<T>, Collector) | Partition the elements, and process each partition with the specified downstream collector. |
| groupingBy(Function<T,U>) | Group elements into a Map whose keys are the provided function applied to the elements of the stream, and whose values are lists of elements that share that key. |
| groupingBy(Function<T,U>, Collector) | Group the elements, and process the values associated with each group with the specified downstream collector. |
| minBy(Comparator<T>) | Compute the minimal value of the elements according to the provided comparator (also maxBy()). |
| mapping(Function<T,U>, Collector) | Apply the provided mapping function to each element, and process with the specified downstream collector (usually used as a downstream collector itself, such as with groupingBy). |
| joining() | Assuming elements of type String, join the elements into a string, possibly with a delimiter, prefix, and suffix. |
| counting() | Compute the count of elements. (Usually used as a downstream collector.) |
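A few of the collectors in Table 1 can be tried on a small word list. The sketch below is illustrative only; the class name BuiltInCollectorsDemo, its method names, and the sample words are assumptions made for this example.

```java
import java.util.Arrays;
import java.util.IntSummaryStatistics;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

final class BuiltInCollectorsDemo {

    static final List<String> WORDS = Arrays.asList("pear", "fig", "banana", "kiwi");

    // joining(delimiter, prefix, suffix)
    static String joined() {
        return WORDS.stream().collect(Collectors.joining(", ", "[", "]"));
    }

    // toMap(keyMapper, valueMapper): word -> length (keys must be unique)
    static Map<String, Integer> lengths() {
        return WORDS.stream().collect(Collectors.toMap(w -> w, String::length));
    }

    // partitioningBy(predicate, downstream): count short and long words
    static Map<Boolean, Long> shortVersusLong() {
        return WORDS.stream()
                    .collect(Collectors.partitioningBy(w -> w.length() <= 4,
                                                       Collectors.counting()));
    }

    // summarizingInt(mapper): count, sum, min, max, and average in one pass
    static IntSummaryStatistics lengthStats() {
        return WORDS.stream().collect(Collectors.summarizingInt(String::length));
    }

    public static void main(String[] args) {
        System.out.println(joined());
        System.out.println(lengths());
        System.out.println(shortVersusLong());
        System.out.println(lengthStats());
    }
}
```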

Grouping the collector functions together into the Collector abstraction is syntactically simpler, but

the real benefit comes from when you start to compose collectors together — such as when you

want to create complex summaries such as those created by the groupingBy() collector, which

collects elements into a Map according to a key derived from the element. For example, to create a

Map of transactions over $1,000, keyed by seller:

Map<Seller, List<Txn>> bigTxnsBySeller =
    txns.stream()
        .filter(t -> t.getAmount() > 1000)
        .collect(groupingBy(Txn::getSeller));

Suppose, though, that you don't want a List of transactions for each seller, but instead the

largest transaction from each seller. You still want to key the result by seller, but you want to do

further processing of the transactions associated with that seller, to reduce it down to the largest

transaction. You can use an alternative version of groupingBy() that, rather than collecting the

elements for each key into a list, feeds them to another collector (the downstream collector). For

your downstream collector, you can choose a reduction such as maxBy():

Map<Seller, Optional<Txn>> biggestTxnBySeller =
    txns.stream()
        .collect(groupingBy(Txn::getSeller,
                            maxBy(comparing(Txn::getAmount))));

Here, you group the transactions into a map keyed by seller, but the value of that map is the result

of using the maxBy() collector to collect all the sales by that seller. If you want not the largest

transaction by seller, but the sum, you could use the summingInt() collector:

Map<Seller, Integer> salesBySeller =
    txns.stream()
        .collect(groupingBy(Txn::getSeller,
                            summingInt(Txn::getAmount)));

To get a multilevel summary, such as sales by region and seller, you can simply use another

groupingBy collector as the downstream collector:

Map<Region, Map<Seller, Integer>> salesByRegionAndSeller =
    txns.stream()
        .collect(groupingBy(Txn::getRegion,
                            groupingBy(Txn::getSeller,
                                       summingInt(Txn::getAmount))));
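The grouping queries above can be run end to end against a small in-memory data set. The article does not show its Txn, Seller, or Region types, so this sketch substitutes a hypothetical Txn class in which seller and region are plain strings; the sample data is likewise made up.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.stream.Collectors;

final class GroupingDemo {

    // Hypothetical stand-in for the article's Txn type
    static final class Txn {
        private final String region;
        private final String seller;
        private final int amount;

        Txn(String region, String seller, int amount) {
            this.region = region;
            this.seller = seller;
            this.amount = amount;
        }

        String getRegion() { return region; }
        String getSeller() { return seller; }
        int getAmount() { return amount; }
    }

    static final List<Txn> TXNS = Arrays.asList(
            new Txn("East", "alice", 1200),
            new Txn("East", "alice", 300),
            new Txn("East", "bob", 700),
            new Txn("West", "carol", 2500));

    // Total sales per seller
    static Map<String, Integer> salesBySeller() {
        return TXNS.stream()
                   .collect(Collectors.groupingBy(Txn::getSeller,
                                                  Collectors.summingInt(Txn::getAmount)));
    }

    // Largest transaction per seller; maxBy() wraps each value in an Optional
    static Map<String, Optional<Txn>> biggestTxnBySeller() {
        return TXNS.stream()
                   .collect(Collectors.groupingBy(Txn::getSeller,
                                                  Collectors.maxBy(Comparator.comparingInt(Txn::getAmount))));
    }

    // Two-level summary: region, then seller
    static Map<String, Map<String, Integer>> salesByRegionAndSeller() {
        return TXNS.stream()
                   .collect(Collectors.groupingBy(Txn::getRegion,
                                                  Collectors.groupingBy(Txn::getSeller,
                                                                        Collectors.summingInt(Txn::getAmount))));
    }

    public static void main(String[] args) {
        System.out.println(salesBySeller());
        System.out.println(salesByRegionAndSeller());
    }
}
```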

To pick an example from a different domain: To compute a histogram of word frequencies in a

document, you can split the document into lines with BufferedReader.lines(), break it up into a

stream of words by using Pattern.splitAsStream(), and then use collect() and groupingBy()

to create a Map whose keys are words and whose values are counts of those words, as shown in

Listing 3.

Listing 3. Computing a word-count histogram with Streams

Pattern whitespace = Pattern.compile("\\s+");
Map<String, Long> wordFrequencies =
    reader.lines()
          .flatMap(s -> whitespace.splitAsStream(s))
          .collect(groupingBy(String::toLowerCase,
                              Collectors.counting()));
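For experimentation, the histogram computation can be wrapped in a method and fed from a string. In this sketch the class name WordCountDemo and the sample text are assumptions, and the filter() step is an addition not present in the listing: splitting a blank line or a line with leading whitespace can produce an empty string, which would otherwise be counted as a word.

```java
import java.io.BufferedReader;
import java.io.StringReader;
import java.util.Map;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

final class WordCountDemo {

    private static final Pattern WHITESPACE = Pattern.compile("\\s+");

    // Counts words case-insensitively; empty fragments are skipped
    static Map<String, Long> wordFrequencies(BufferedReader reader) {
        return reader.lines()
                     .flatMap(WHITESPACE::splitAsStream)
                     .filter(word -> !word.isEmpty())
                     .collect(Collectors.groupingBy(word -> word.toLowerCase(),
                                                    Collectors.counting()));
    }

    public static void main(String[] args) {
        BufferedReader reader =
                new BufferedReader(new StringReader("the cat\nThe dog and the bird"));
        System.out.println(wordFrequencies(reader));
    }
}
```

The counts are Long values because counting() produces a Long.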

Custom collectors

While the standard set of collectors provided by the JDK is fairly rich, it's also easy to write

your own collector. The Collector interface, shown in Listing 4, is fairly simple. The interface

is parameterized by three types — the input type T, the accumulator type A, and the final return

type R (A and R are often the same) — and the methods return functions that look similar to the

functions accepted by the three-argument version of collect() illustrated earlier.

Listing 4. The Collector interface

public interface Collector<T, A, R> {
    /** Return a function that creates a new empty result container */
    Supplier<A> supplier();

    /** Return a function that incorporates an element into a container */
    BiConsumer<A, T> accumulator();

    /** Return a function that merges two result containers */
    BinaryOperator<A> combiner();

    /** Return a function that converts the intermediate result container
        into the final representation */
    Function<A, R> finisher();

    /** Special characteristics of this collector */
    Set<Characteristics> characteristics();
}

The implementation of most of the collector factories in Collectors is trivial. For example, the

implementation of toList() is:

return new CollectorImpl<>(ArrayList::new,
                           List::add,
                           (left, right) -> { left.addAll(right); return left; },
                           CH_ID);

This implementation uses ArrayList as the result container, add() to incorporate an element, and

addAll() to merge one list into another, indicating through the characteristics that its finish function

is the identity function (which enables the stream framework to optimize the execution).

As you've seen before, there are some consistency requirements that are analogous to the

constraints between the identity and the accumulator function in reduction. These requirements

are outlined in the specification for Collector.

As a more complex example, consider the problem of creating summary statistics on a data set.

It's easy to use reduction to compute the sum, minimum, maximum, or count of a numeric data

set (and you can compute average from sum and count). It's harder to compute them all at once,

in a single pass on the data, using reduction. But you can easily write a Collector to do this

computation efficiently (and in parallel, if you like).

The Collectors class contains a summarizingInt() factory method that returns a collector producing an

IntSummaryStatistics, which does exactly what you want: compute sum, min, max, count, and

average in one pass. The implementation of IntSummaryStatistics is trivial, and you can easily

write your own similar collectors to compute arbitrary data summaries (or extend this one).

Listing 5 shows the IntSummaryStatistics class. The actual implementation has more detail

(including getters to return the summary statistics), but the heart of it is the simple accept() and

combine() methods.

Listing 5. The IntSummaryStatistics class used by the summarizingInt()

collector

public class IntSummaryStatistics implements IntConsumer {
    private long count;
    private long sum;
    private int min = Integer.MAX_VALUE;
    private int max = Integer.MIN_VALUE;

    public void accept(int value) {
        ++count;
        sum += value;
        min = Math.min(min, value);
        max = Math.max(max, value);
    }

    public void combine(IntSummaryStatistics other) {
        count += other.count;
        sum += other.sum;
        min = Math.min(min, other.min);
        max = Math.max(max, other.max);
    }

    // plus getters for count, sum, min, max, and average
}

As you can see, this is a pretty simple class. As each new data element is observed, the various

summaries are updated in the obvious way, and two IntSummaryStatistics holders are combined

in the obvious way. The implementation of Collectors.summarizingInt(), shown in Listing 6, is

similarly simple; it creates a Collector that incorporates an element by applying an integer-valued

extractor function and passing the result to IntSummaryStatistics.accept().

Listing 6. The summarizingInt() collector factory

public static <T>
Collector<T, ?, IntSummaryStatistics> summarizingInt(ToIntFunction<? super T> mapper) {
    return new CollectorImpl<T, IntSummaryStatistics, IntSummaryStatistics>(
        IntSummaryStatistics::new,
        (r, t) -> r.accept(mapper.applyAsInt(t)),
        (l, r) -> { l.combine(r); return l; },
        CH_ID);
}
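The accept() and combine() methods of the JDK's java.util.IntSummaryStatistics can also be called directly, which makes the merge step easy to inspect. The sketch below summarizes two segments independently and then combines them, roughly as a parallel collect might; the class name, method name, and the two-segment split are assumptions made for illustration.

```java
import java.util.IntSummaryStatistics;

final class SummaryCombineDemo {

    // Summarize each segment on its own, then merge the second into the first
    static IntSummaryStatistics summarizeInTwoSegments(int[] first, int[] second) {
        IntSummaryStatistics left = new IntSummaryStatistics();
        for (int value : first) {
            left.accept(value);
        }
        IntSummaryStatistics right = new IntSummaryStatistics();
        for (int value : second) {
            right.accept(value);
        }
        left.combine(right);
        return left;
    }

    public static void main(String[] args) {
        IntSummaryStatistics stats =
                summarizeInTwoSegments(new int[] {4, 8}, new int[] {15, 1});
        System.out.println(stats);
    }
}
```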

The ease of composing collectors (which you saw with the groupingBy() examples) and the ease

of creating new collectors combine to make it possible to compute almost arbitrary summaries of

stream data while keeping your code compact and clear.
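Application code cannot use the JDK-internal CollectorImpl class seen in the listings, but the public Collector.of() factory accepts the same building blocks. As a minimal sketch, the following collector averages an int-valued property using a two-element long array as its container. The names AveragingCollectorDemo and averaging are hypothetical, and the JDK already offers Collectors.averagingInt() for real use; the point here is only to show a finisher that converts the container type into a different result type.

```java
import java.util.function.ToIntFunction;
import java.util.stream.Collector;
import java.util.stream.Stream;

final class AveragingCollectorDemo {

    // Container layout: slot 0 holds the running sum, slot 1 the element count
    static <T> Collector<T, long[], Double> averaging(ToIntFunction<? super T> mapper) {
        return Collector.of(
                () -> new long[2],                                        // supplier
                (acc, t) -> { acc[0] += mapper.applyAsInt(t); acc[1]++; }, // accumulator
                (a, b) -> { a[0] += b[0]; a[1] += b[1]; return a; },      // combiner
                acc -> acc[1] == 0 ? 0.0 : (double) acc[0] / acc[1]);     // finisher
    }

    public static void main(String[] args) {
        double average = Stream.of("a", "bbb", "cc")
                               .collect(AveragingCollectorDemo.<String>averaging(String::length));
        System.out.println(average);
    }
}
```

Returning 0.0 for an empty stream is a design choice made in this sketch; a collector could equally return an Optional to signal that no elements were seen.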

Conclusion to Part 2

The facilities for aggregation are one of the most useful and flexible parts of the Streams library.

Simple values can be easily aggregated, sequentially or in parallel, using reduction; more complex

summaries can be created via collect(). The library ships with a simple set of basic collectors that

can be composed together to perform more complex aggregations, and you can easily add your

own collectors to the mix.

In Part 3, dig into the internals of Streams to understand how to use the library most efficiently

when performance is critical.

Related topics

• Package documentation for java.util.stream

• Functional Programming in Java: Harnessing the Power of Lambda Expressions (Venkat Subramaniam, Pragmatic Bookshelf, 2014)

• Mastering Lambdas: Java Programming in a Multicore World (Maurice Naftalin, McGraw-Hill Education, 2014)

• Should I return a Collection or a Stream?

• RxJava library

About the author

Brian Goetz

Brian Goetz is the Java Language Architect at Oracle and has been a professional

software developer for nearly 30 years. He is the author of the best-selling book Java

Concurrency In Practice (Addison-Wesley Professional, 2006).

© Copyright IBM Corporation 2016

(www.ibm.com/legal/copytrade.shtml)

Trademarks

(www.ibm.com/developerworks/ibm/trademarks/)

Java Streams, Part 2: Aggregating with Streams Page 12 of 12

Das könnte Ihnen auch gefallen

- Thermal Energy Storage For Space Cooling R1 PDFDokument37 SeitenThermal Energy Storage For Space Cooling R1 PDFhaysamNoch keine Bewertungen

- IEMTCModule7 FinalDokument34 SeitenIEMTCModule7 FinaljokishNoch keine Bewertungen

- 25-01-10 Networking Event EMS FinalDokument41 Seiten25-01-10 Networking Event EMS FinalajeeshsivanNoch keine Bewertungen

- SubootDokument3 SeitenSubootStephen TellisNoch keine Bewertungen

- Energy Savings Using Tiered Trim and RespondDokument249 SeitenEnergy Savings Using Tiered Trim and Respondrshe0025004Noch keine Bewertungen

- Efficiency Vermont Stehmeyer Napolitan Ashrae Guideline 36 PDFDokument170 SeitenEfficiency Vermont Stehmeyer Napolitan Ashrae Guideline 36 PDFZoidberg12100% (1)

- Guideline AccreditationDokument16 SeitenGuideline AccreditationakanuruddhaNoch keine Bewertungen

- Typescript TutorialDokument35 SeitenTypescript TutorialNguyen Minh Dao100% (1)

- Hajj Guide For PilgrimsDokument116 SeitenHajj Guide For PilgrimsQanberNoch keine Bewertungen

- Converged NetworksDokument10 SeitenConverged Networksganjuvivek85Noch keine Bewertungen

- AHRI Guideline T 2002Dokument16 SeitenAHRI Guideline T 2002trangweicoNoch keine Bewertungen

- GIS Fundamentals Chapter-3Dokument44 SeitenGIS Fundamentals Chapter-3Asif NaqviNoch keine Bewertungen

- Core Java by Venu Kumaar PDFDokument287 SeitenCore Java by Venu Kumaar PDFAk ChowdaryNoch keine Bewertungen

- AHU Operational Control Spreadsheet TrainingDokument17 SeitenAHU Operational Control Spreadsheet TrainingSilver ManNoch keine Bewertungen

- Final Report On Chat Application Using MernDokument50 SeitenFinal Report On Chat Application Using MernwatahNoch keine Bewertungen

- Data CenterDokument8 SeitenData CenterSalman RezaNoch keine Bewertungen

- Energy Savings With Chiller Optimization System - BrochureDokument8 SeitenEnergy Savings With Chiller Optimization System - BrochuretachetNoch keine Bewertungen

- Course Content: Fundamentals of HVAC ControlsDokument39 SeitenCourse Content: Fundamentals of HVAC ControlsMuhammad AfzalNoch keine Bewertungen

- Historic HotelDokument7 SeitenHistoric Hoteljeffrey SouleNoch keine Bewertungen

- Tear Cooling Tower Energy Operating Cost SoftwareDokument9 SeitenTear Cooling Tower Energy Operating Cost Softwareforevertay2000Noch keine Bewertungen

- Technology Transfer For The Ozone Layer Lessons For Climate Change PDFDokument452 SeitenTechnology Transfer For The Ozone Layer Lessons For Climate Change PDFSM_Ing.Noch keine Bewertungen

- Tropical Hospital Design For MalaysiaDokument47 SeitenTropical Hospital Design For MalaysiaAlex ChinNoch keine Bewertungen

- Simple ABC ModelDokument65 SeitenSimple ABC Modelalfaroq_almsryNoch keine Bewertungen

- Technical Support Document 50 Energy SavDokument145 SeitenTechnical Support Document 50 Energy SavmarkNoch keine Bewertungen

- Birst Why On-Demand BI PDFDokument7 SeitenBirst Why On-Demand BI PDFAnonymous YwWQW5jj7Noch keine Bewertungen

- Green Cloud ComputingDokument9 SeitenGreen Cloud ComputingJohn SinghNoch keine Bewertungen

- PEB Cost ComparisionDokument4 SeitenPEB Cost ComparisionPrathamesh PrathameshNoch keine Bewertungen

- Evaporative Cooling SystemDokument8 SeitenEvaporative Cooling SystemAshok KumarNoch keine Bewertungen

- Building HVAC Control Systems - Role of Controls and OptimizationDokument6 SeitenBuilding HVAC Control Systems - Role of Controls and OptimizationMohammad Hafiz OthmanNoch keine Bewertungen

- TD00583Dokument440 SeitenTD00583pacoNoch keine Bewertungen

- Building Management System-UOM - MSC - Part - 1Dokument22 SeitenBuilding Management System-UOM - MSC - Part - 1Uwan KivinduNoch keine Bewertungen


