
Cohen Summary Psych Assessment PDF

Uploaded by gerielle mayo


lOMoARcPSD|2606599

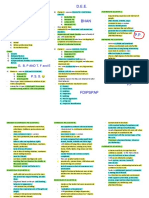

Cohen-summary psych assessment

Bachelor of Science in Psychology (Polytechnic University of the Philippines)

Distributing prohibited | Downloaded by Esereht Oporto (thereseoporto@gmail.com)


CHAPTER 1

Psychological Testing and Assessment

>The roots of contemporary psychological testing and assessment can be found in early twentieth-century France.

In 1905, Alfred Binet and a colleague published a test designed to help place Paris schoolchildren in appropriate classes.

>Testing was the term used to refer to everything from the administration of a test (as in “Testing in progress”) to the interpretation of a test score.

-During World War I, testing gained a powerful foothold in the vocabulary of professionals and laypeople.

-By World War II, a semantic distinction between testing and a more inclusive term, assessment, began to emerge.

-psychological assessment: the gathering and integration of psychology-related data for the purpose of making a psychological evaluation that is accomplished through the use of tools such as tests, interviews, case studies, behavioral observation, and specially designed apparatuses and measurement procedures.

-psychological testing: the process of measuring psychology-related variables by means of devices or procedures designed to obtain a sample of behavior.

APPROACHES TO ASSESSMENT

1. collaborative psychological assessment: the assessor and assessee may work as “partners” from initial contact through final feedback.

..A collaborative approach that includes an element of therapy as part of the process is called therapeutic psychological assessment. Here, therapeutic self-discovery and new understandings are encouraged throughout the assessment process.

2. dynamic assessment refers to an interactive approach to psychological assessment that usually follows a model of (1) evaluation, (2) intervention of some sort, and (3) evaluation. It is most typically employed in educational settings. Intervention between evaluations, sometimes even between individual questions posed or tasks given, might take many different forms, depending upon the purpose of the dynamic assessment (Haywood & Lidz, 2007). For example, an assessor may intervene in the course of an evaluation of an assessee’s abilities with increasingly more explicit feedback or hints.

The Tools of Psychological Assessment

>A test may be defined simply as a measuring device or procedure. When the word test is preceded by a modifier, it refers to a device or procedure designed to measure a variable related to that modifier.

>A psychological test refers to a device or procedure designed to measure variables related to psychology. A psychological test almost always involves analysis of a sample of behavior.

>The behavior sample could be elicited by the stimulus of the test itself, or it could be naturally occurring behavior (under observation).

A. content (subject matter): even two psychological tests purporting to measure the same thing (for example, personality) may differ widely in item content.

B. format pertains to the form, plan, structure, arrangement, and layout of test items as well as to related considerations such as time limits. Format is also used to refer to the form in which a test is administered: computerized, pencil-and-paper, or some other form.


C. administration procedures (some tests are administered on a one-to-one basis and may require an active and knowledgeable test administrator).

D. scoring and interpretation procedures.

>>A score is a code or summary statement, usually but not necessarily numerical in nature, that reflects an evaluation of performance on a test, task, interview, or some other sample of behavior.

>>Scoring is the process of assigning such evaluative codes or statements to performance on tests, tasks, interviews, or other behavior samples.

.. A cut score is a reference point, usually numerical, derived by judgment and used to divide a set of data into two or more classifications (e.g., cut scores are used by employers as aids to decision making about personnel hiring and advancement). Sometimes, no formal method is used to arrive at a cut score. Some teachers use an informal “eyeball” method.

E. technical quality: more commonly, reference is made to what is called the psychometric soundness of a test, a notion grounded in PSYCHOMETRICS.

>>psychometrics may be defined as the science of psychological measurement.

>>psychometric soundness refers to how consistently and how accurately a psychological test measures what it purports to measure.

>>utility refers to the usefulness or practical value that a test or assessment technique has for a particular purpose.
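A short Python sketch can illustrate the cut score described under scoring and interpretation. The cut values and category labels below are assumptions made for illustration, as is the convention that a score exactly at a cut falls into the higher category. None of them come from the text, and in practice cut scores are set by judgment.

```python
from bisect import bisect_right


def classify(score, cut_scores, labels):
    """Place a score into one of len(cut_scores) + 1 classifications.

    cut_scores must be sorted in ascending order. A score equal to a cut
    score falls into the higher category (an assumed convention).
    """
    if len(labels) != len(cut_scores) + 1:
        raise ValueError("need exactly one more label than cut scores")
    return labels[bisect_right(cut_scores, score)]


# Hypothetical single cut score of 70 dividing applicants into two groups.
print(classify(82, [70], ["below cut", "meets cut"]))  # meets cut
print(classify(64, [70], ["below cut", "meets cut"]))  # below cut

# Hypothetical pair of cut scores producing three classifications.
print(classify(60, [60, 80], ["low", "middle", "high"]))  # middle
```

Because the function takes a list of cuts, a single cut gives two classifications and more cuts give more. This matches the "two or more classifications" in the definition.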

TOOLS OF MEASUREMENT

1. interview: the word conjures images of face-to-face talk, but the interviewer takes note of not only the content of what is said but also the way it is being said (both verbal and nonverbal behavior). The interview is a method of gathering information through direct communication involving reciprocal exchange.

>>An interview may be used to help human resources professionals make more informed recommendations about the hiring, firing, and advancement of personnel.

>>panel interview: more than one interviewer participates in the personnel assessment. An advantage of this approach, which has also been referred to as a board interview, is that any idiosyncratic biases of a lone interviewer will be minimized by the use of two or more interviewers.

A disadvantage of the panel interview relates to its utility; the cost of using multiple interviewers may not be justified, especially when the return on this investment is questionable.

2. Portfolio: samples of one’s ability and accomplishment. People in many fields keep files of their work products (paper, canvas, film, video, audio, or some other medium).

3. Case history data refers to records, transcripts, and other accounts in written, pictorial, or other form that preserve archival information, official and informal accounts, and other data and items relevant to an assessee.

>>Work samples, artwork, doodlings, and accounts and pictures pertaining to interests and hobbies are yet other examples.

>>Case history data may provide information about neuropsychological functioning prior to the occurrence of a trauma or other event that results in a deficit.

>>Case history data can offer insight into a student’s current academic or behavioral standing. Case history data is also useful in making judgments concerning future class placements.

>>Case history data might shed light on how one individual’s personality and a particular set of environmental conditions combined to produce a successful world leader.

4. Behavioral Observation: monitoring the actions of others or oneself by visual or electronic means while recording quantitative and/or qualitative information regarding the actions.

>>Behavioral observation may be used as a tool to help identify people who demonstrate the abilities required to perform a particular task or job.

>>naturalistic observation: observers venture outside of the confines of clinics, classrooms, workplaces, and research laboratories in order to observe the behavior of humans in a natural setting.

5. Role play may be defined as acting an improvised or partially improvised part in a simulated situation. A role-play test is a tool of assessment wherein assessees are directed to act as if they were in a particular situation (for example, to assess grocery shopping skills).

>>Individuals being evaluated in a corporate, industrial, organizational, or military context for managerial or leadership ability are routinely placed in role-play situations.

Computers as Tools

>>Scoring may be done on-site (local processing).

>>(central processing) If processing occurs at a central location, test-related data may be sent to and returned from this central facility by means of phone lines (teleprocessing), by mail, or by courier.

>>simple scoring report: simply records and tallies the score or scores.

>>extended scoring report: includes statistical analyses of the testtaker’s performance.

>>interpretive report: distinguished by its inclusion of numerical or narrative interpretive statements in the report.

>>At the high end of interpretive reports is what is sometimes referred to as a consultative report. This type of report, usually written in language appropriate for communication between assessment professionals, may provide expert opinion concerning analysis of the data.

>>integrative report: designed to integrate data from sources other than the test itself (such as medication records or behavioral observation data) into the interpretive report.

>>CAPA (computer assisted psychological assessment): the assistance computers provide to the test user, not the testtaker.

>>CAT (computer adaptive testing): the computer’s ability to tailor the test to the testtaker’s ability or testtaking pattern (for example, on a computerized test of academic abilities, the computer might be programmed to switch from testing math skills to English skills after three consecutive failures on math items).
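The branching rule in the CAT example switches from math to English items after three consecutive failures. It can be sketched as a function over a sequence of scored math responses. The function name and the representation of responses as booleans are assumptions for illustration only. Operational adaptive tests typically use more elaborate item-selection rules.

```python
from typing import Optional, Sequence


def switch_point(math_results: Sequence[bool], limit: int = 3) -> Optional[int]:
    """Return the index of the math item after which testing switches to
    English items, or None if the testtaker never fails `limit` math items
    in a row.

    Each element of math_results is True for a correct response and False
    for a failure, in the order the items were administered.
    """
    consecutive_failures = 0
    for index, correct in enumerate(math_results):
        # A correct answer resets the streak; a failure extends it.
        consecutive_failures = 0 if correct else consecutive_failures + 1
        if consecutive_failures == limit:
            return index
    return None


responses = [True, False, False, True, False, False, False, True]
print(switch_point(responses))  # 6: the third failure in a row is item index 6
```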

-testtaker: anyone who is the subject of an assessment or an evaluation can be a testtaker or an assessee.

-psychological autopsy may be defined as a reconstruction of a deceased individual’s psychological profile on the basis of archival records, artifacts, and interviews previously conducted with the deceased assessee or with people who knew him or her.

-rapport may be defined as a working relationship between the examiner and the examinee.

-alternate assessment is typically accomplished by means of some accommodation made to the assessee. In the context of psychological testing and assessment, accommodation may be defined as the adaptation of a test, procedure, or situation, or the substitution of one test for another, to make the assessment more suitable for an assessee with exceptional needs.

-Alternate assessment is an evaluative or diagnostic procedure or process that varies from the usual, customary, or standardized way a measurement is derived either by virtue of some special accommodation made to the assessee or by means of alternative methods designed to measure the same variable(s).

CHAPTER 2

culture-specific tests: in an attempt to “isolate” the cultural variable, tests designed for use with people from one culture but not from another.

SOME ISSUES REGARDING CULTURE AND ASSESSMENT

Verbal Communication

>Language, the means by which information is communicated, is a key yet sometimes overlooked variable in the assessment process.

Nonverbal communication and behavior

>>Facial expressions, finger and hand signs, and shifts in one’s position in space may all convey messages.

Standards of evaluation

>>Remember the story of a contest.

TESTS AND GROUP MEMBERSHIP

What happens when groups systematically differ in terms of scores on a particular test? The answer, in a word, is conflict.

>In vocational assessment, test users are sensitive to legal and ethical mandates concerning the use of tests with regard to hiring, firing, and related decision making.

>affirmative action refers to voluntary and mandatory efforts undertaken by federal, state, and local governments, private employers, and schools to combat discrimination and to promote equal opportunity in education and employment for all. (Affirmative action seeks to create equal opportunity actively, not passively.)

In assessment, one way of implementing affirmative action is by altering test scoring procedures according to set guidelines. For example, an individual’s score on a test could be revised according to the individual’s group membership.

LEGAL AND ETHICAL CONSIDERATIONS

>Laws are rules that individuals must obey for the good of the society as a whole, or rules thought to be for the good of society as a whole.

>ethics is a body of principles of right, proper, or good conduct.

>A code of professional ethics is recognized and accepted by members of a profession; it defines the standard of care expected of members of that profession.


>>minimum competency testing programs: formal testing programs designed to be used in decisions regarding various aspects of students’ education. The data from such programs were used in decision making about grade promotions, awarding of diplomas, and identification of areas for remedial instruction.

>>Truth-in-testing legislation: the primary objective of these laws was to provide testtakers with a means of learning the criteria by which they are being judged.

>Litigation: rules governing citizens’ behavior stem not only from legislatures but also from interpretations of existing law in the form of decisions handed down by courts.

Litigation has sometimes been referred to as “judge-made law” because it typically comes in the form of a ruling by a court.

>A psychologist acting as an expert witness in criminal litigation may testify on matters such as the competence of a defendant to stand trial.

Test-user qualifications

Level A: Tests or aids that can adequately be administered, scored, and interpreted with the aid of the manual and a general orientation to the kind of institution or organization in which one is working (for instance, achievement or proficiency tests).

Level B: Tests or aids that require some technical knowledge of test construction and use and of supporting psychological and educational fields such as statistics, individual differences, psychology of adjustment, personnel psychology, and guidance (e.g., aptitude tests and adjustment inventories applicable to normal populations).

Level C: Tests and aids that require substantial understanding of testing and supporting psychological fields together with supervised experience in the use of these devices (for instance, projective tests, individual mental tests).

Testing people with disabilities

Challenges include (1) transforming the test into a form that can be taken by the testtaker, (2) transforming the responses of the testtaker so that they are scorable, and (3) meaningfully interpreting the test data.

THE RIGHTS OF TESTTAKERS

The right of informed consent: testtakers have a right to know why they are being evaluated, how the test data will be used, and what (if any) information will be released to whom. Testtakers should be told (1) the general purpose of the testing, (2) the specific reason it is being undertaken in the present case, and (3) the general type of instruments to be administered.

>>If a testtaker is incapable of providing an informed consent to testing, such consent may be obtained from a parent or a legal representative.

>>On deception: (a) do not use deception unless it is absolutely necessary, (b) do not use deception at all if it will cause participants emotional distress, and (c) fully debrief participants.

The right to be informed of test findings

>>In the past, testers were advised to keep information about test results superficial and to focus only on “positive” findings.

>>If the test results, findings, or recommendations made on the basis of test data are voided for any reason (such as irregularities in the test administration), testtakers have a right to know that as well.

The right to privacy and confidentiality


>>The privacy right “recognizes the freedom of the individual to pick and choose for himself the time, circumstances, and particularly the extent to which he wishes to share or withhold from others his attitudes, beliefs, behavior, and opinions”.

>>privileged information: information that is protected by law from disclosure in a legal proceeding (compare a person's right to refuse to answer a question put to them on the grounds that the answer might be self-incriminating).

>>Confidentiality concerns matters of communication outside the courtroom; privilege protects clients from disclosure in judicial proceedings.

>>In some rare instances, the psychologist may be ethically (if not legally) compelled to disclose information if that information will prevent harm either to the client or to some endangered third party.

>>The ruling in Jaffee affirmed that communications between a psychotherapist and a patient were privileged in federal courts. The HIPAA privacy rule cited Jaffee and defined psychotherapy notes as “notes recorded (in any medium) by a health care provider who is a mental health professional documenting or analyzing the contents of conversation during a private counseling session or a group, joint, or family counseling session and that are separated from the rest of the individual’s medical record.”

The right to the least stigmatizing label

CHAPTER 3

A Statistics Refresher

SCALES OF MEASUREMENT

>measurement: the act of assigning numbers or symbols to characteristics of things (people, events, whatever) according to rules.

>A scale is a set of numbers (or other symbols) whose properties model empirical properties of the objects to which the numbers are assigned.

.. discrete scale: values fall into separate, distinct categories (e.g., female or male)

.. continuous scale exists when it is theoretically possible to divide any of the values of the scale.

>error refers to the collective influence of all of the factors on a test score or measurement beyond those specifically measured by the test or measurement.


1. Nominal scales are the simplest form of measurement. These scales involve classification or categorization based on one or more distinguishing characteristics.

2. Ordinal scales permit classification and, in addition, rank ordering on some characteristic.

>>Alfred Binet, a developer of the intelligence test that today bears his name, believed strongly that the data derived from an intelligence test are ordinal in nature.

>>Rokeach Value Survey (uses an ordinal form of measurement): a list of personal values, such as freedom, happiness, and wisdom, is put in order according to their perceived importance to the testtaker.

>>“Intelligence, aptitude, and personality test scores are, basically and strictly speaking, ordinal.”

3. Interval scales contain equal intervals between numbers. Each unit on the scale is exactly equal to any other unit on the scale.

>>With interval scales, we have reached a level of measurement at which it is possible to average a set of measurements and obtain a meaningful result.

4. A ratio scale has a true zero point. All mathematical operations can meaningfully be performed because there exist equal intervals between the numbers on the scale as well as a true or absolute zero point.

DESCRIBING DATA

>>A distribution may be defined as a set of test scores arrayed for recording or study.

>>A raw score is a straightforward, unmodified accounting of performance that is usually numerical, such as a simple tally, as in number of items responded to correctly on an achievement test.

>>In a frequency distribution, all scores are listed alongside the number of times each score occurred. The scores might be listed in tabular or graphic form.

>>simple frequency distribution: indicates that individual scores have been used and the data have not been grouped (e.g., 85, 89, 87, 90).

>>grouped frequency distribution: test-score intervals, also called class intervals, replace the actual test scores.
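Here is a minimal Python sketch of the two kinds of frequency distribution. The score list extends the four example scores above with invented values. The class-interval width of 5 is also an arbitrary choice. Both exist only to illustrate the tallying.

```python
from collections import Counter


def simple_frequency_distribution(scores):
    """List each observed score with the number of times it occurred,
    highest score first."""
    return sorted(Counter(scores).items(), reverse=True)


def grouped_frequency_distribution(scores, low, width, n_intervals):
    """Replace individual integer scores with class intervals of equal width,
    starting at `low`, and count the scores falling in each interval."""
    table = []
    for k in range(n_intervals):
        start = low + k * width
        end = start + width - 1
        count = sum(1 for s in scores if start <= s <= end)
        table.append((f"{start}-{end}", count))
    return table


scores = [85, 89, 87, 90, 89, 76, 81, 89]  # illustrative data
print(simple_frequency_distribution(scores))
# [(90, 1), (89, 3), (87, 1), (85, 1), (81, 1), (76, 1)]
print(grouped_frequency_distribution(scores, low=75, width=5, n_intervals=4))
# [('75-79', 1), ('80-84', 1), ('85-89', 5), ('90-94', 1)]
```

Grouping hides the detail that three of the five scores in the 85-89 interval are 89. This is the trade-off between a simple and a grouped distribution.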

>>A graph is a diagram or chart composed of lines, points, bars, or other symbols that describe and illustrate data.

1. histogram: a graph with vertical lines drawn at the true limits of each test score (or class interval), forming a series of contiguous rectangles.

2. bar graph: the rectangular bars typically are not contiguous.

3. frequency polygon: a continuous line connects the points where test scores or class intervals (as indicated on the X-axis) meet frequencies (as indicated on the Y-axis).

>>normal or bell-shaped curve

MEASURES OF CENTRAL TENDENCY

>>A measure of central tendency is a statistic that indicates the average or midmost score between the extreme scores in a distribution.

>>In special instances, such as when there are only a few scores and one or two of the scores are extreme in relation to the remaining ones, a measure of central tendency other than the mean may be desirable.


1. arithmetic mean (average): equal to the sum of the observations (or test scores in this case) divided by the number of observations

.. appropriate for interval or ratio data when the distributions are believed to be approximately normal

.. the most stable and useful measure of central tendency

2. median: defined as the middle score in a distribution

.. The median is a statistic that takes into account the order of scores and is itself ordinal in nature.

3. mode: the most frequently occurring score in a distribution of scores

.. In a bimodal distribution, there are two scores that occur with the highest frequency.

.. The mode is the only measure of central tendency that can be used with nominal data.

.. The mode is useful in analyses of a qualitative or verbal nature.
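Python's standard library can compute the three measures of central tendency. The data are illustrative assumptions. The second list adds one extreme score to a small set. It shows why a measure other than the mean may be preferable when there are few scores and one is extreme. `statistics.multimode` requires Python 3.8 or later.

```python
import statistics

scores = [85, 89, 87, 90, 89, 76, 81, 89]  # illustrative data

# Arithmetic mean: sum of the observations divided by their number.
mean = sum(scores) / len(scores)
# Median: the middle score (average of the two middle scores when n is even).
median = statistics.median(scores)
# Mode(s): every score tied for the highest frequency; two values = bimodal.
modes = statistics.multimode(scores)
print(mean, median, modes)  # 85.75 88.0 [89]

# A few scores with one extreme value pull the mean but barely move the median.
small_sample = [85, 89, 87, 90, 20]
print(sum(small_sample) / len(small_sample), statistics.median(small_sample))  # 74.2 87
```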

MEASURES OF VARIABILITY

>>Variability is an indication of how scores in a distribution are scattered or dispersed.

>>measures of variability: statistics that describe the amount of variation in a distribution

.. include the range, the interquartile range, the semi-interquartile range, the average deviation, the standard deviation, and the variance.

1. The range of a distribution is equal to the difference between the highest and the lowest scores.

2. quartiles: the dividing points between the four quarters in the distribution

.. A quartile refers to a specific point, whereas a quarter refers to an interval.

>>>The interquartile range is a measure of variability equal to the difference between Q3 and Q1. Like the median, it is an ordinal statistic.

>>>semi-interquartile range: equal to the interquartile range divided by 2.

>>> lack of symmetry is referred to as skewness

3. average deviation: the mean of the absolute values of the deviation scores (each score minus the mean).

4. standard deviation: a measure of variability equal to the square root of the average squared deviations about the mean (we use the square of each deviation score).

>>>The variance is equal to the arithmetic mean of the squares of the differences between the scores in a distribution and their mean.
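The measures of variability listed above can be sketched in Python as follows. Two choices here are assumptions rather than prescriptions from the text. First, variance divides by n. This is the population form, matching "arithmetic mean of the squares" above; sample estimates often divide by n - 1. Second, quartiles use `statistics.quantiles` with its default "exclusive" method. Several quartile conventions exist, and they can give slightly different values.

```python
import math
import statistics


def describe_variability(scores):
    n = len(scores)
    mean = sum(scores) / n
    # Q1, Q2 (the median), Q3 using the default "exclusive" method.
    q1, _, q3 = statistics.quantiles(scores, n=4)
    deviations = [x - mean for x in scores]
    variance = sum(d * d for d in deviations) / n  # population variance
    return {
        "range": max(scores) - min(scores),
        "interquartile_range": q3 - q1,
        "semi_interquartile_range": (q3 - q1) / 2,
        # Mean of the absolute deviation scores.
        "average_deviation": sum(abs(d) for d in deviations) / n,
        "variance": variance,
        "standard_deviation": math.sqrt(variance),
    }


print(describe_variability([2, 4, 4, 4, 5, 5, 7, 9]))
# {'range': 7, 'interquartile_range': 2.5, 'semi_interquartile_range': 1.25,
#  'average_deviation': 1.5, 'variance': 4.0, 'standard_deviation': 2.0}
```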

Skewness: the nature and extent to which symmetry is absent. Skewness is an indication of how the measurements in a distribution are distributed.

a. positive skew: relatively few of the scores fall at the high end of the distribution. A positive skew may indicate that the test was too difficult.

b. negative skew: relatively few of the scores fall at the low end of the distribution. A negative skew may indicate that the test was too easy.
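The text does not spell out a way to quantify skewness. One common choice is the moment coefficient of skewness: the mean cubed deviation divided by the standard deviation cubed (population form). Its sign matches the descriptions above. It is positive when a few scores trail off at the high end and negative when a few trail off at the low end. The score sets below are invented for illustration.

```python
def skewness(scores):
    """Moment coefficient of skewness (population form): m3 / m2 ** 1.5."""
    n = len(scores)
    mean = sum(scores) / n
    m2 = sum((x - mean) ** 2 for x in scores) / n
    if m2 == 0:
        raise ValueError("skewness is undefined when all scores are equal")
    m3 = sum((x - mean) ** 3 for x in scores) / n
    return m3 / m2 ** 1.5


hard_test = [20, 22, 25, 25, 28, 30, 85]  # most scores low: possibly too difficult
easy_test = [95, 92, 90, 97, 94, 40]      # most scores high: possibly too easy
print(skewness(hard_test) > 0, skewness(easy_test) < 0)  # True True
```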


CHAPTER 4

Of Tests and Testing

SOME ASSUMPTIONS ABOUT PSYCHOLOGICAL TESTING AND ASSESSMENT

Assumption 1: Psychological Traits and States Exist

>>A trait has been defined as “any distinguishable, relatively enduring way in which one individual varies from another”.

>>For our purposes, a psychological trait exists only as a construct, an informed, scientific concept developed or constructed to describe or explain behavior.

>>We can’t see, hear, or touch constructs, but we can infer their existence from overt behavior.

.. Overt behavior refers to an observable action or the product of an observable action, including test- or assessment-related responses.

Assumption 2: Psychological Traits and States Can Be Quantified and Measured.

>>cumulative scoring: inherent in cumulative scoring is the assumption that the more the testtaker responds in a particular direction as keyed by the test manual as correct or consistent with a particular trait, the higher that testtaker is presumed to be on the targeted ability or trait.
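Cumulative scoring can be sketched as counting the responses that match a scoring key. The item labels, the key, and the two response sets below are hypothetical. A real key would come from the test manual.

```python
def cumulative_score(responses, key):
    """Add one point for each item answered in the keyed direction.

    responses and key both map item labels to answers. Items missing from
    the responses earn no point.
    """
    return sum(1 for item, keyed in key.items() if responses.get(item) == keyed)


# Hypothetical true/false key: False means the item is keyed in the
# "no" direction for the targeted trait.
key = {"item1": True, "item2": False, "item3": True, "item4": True}

testtaker_a = {"item1": True, "item2": False, "item3": True, "item4": False}
testtaker_b = {"item1": False, "item2": True, "item3": True, "item4": False}

print(cumulative_score(testtaker_a, key))  # 3
print(cumulative_score(testtaker_b, key))  # 1
```

Under the cumulative-scoring assumption, testtaker_a would be presumed higher than testtaker_b on the targeted trait.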

Assumption 3: Test-Related Behavior Predicts Non-Test-Related Behavior

>>domain sampling may refer to either (1) a sample of behaviors from all possible behaviors that could conceivably be indicative of a particular construct or (2) a sample of test items from all possible items that could conceivably be used to measure a particular construct.

>>In some forensic (legal) matters, psychological tests may be used not to predict behavior but to postdict it, that is, to aid in the understanding of behavior that has already taken place.

Assumption 4: Tests and Other Measurement Techniques Have Strengths and Weaknesses

Assumption 5: Various Sources of Error Are Part of the Assessment Process

>>In this context, error traditionally refers to something that is more than expected; it is actually a component of the measurement process. Error refers to a long-standing assumption that factors other than what a test attempts to measure will influence performance on the test.

>>error variance: the component of a test score attributable to sources other than the trait or ability measured.

potential sources of error variance

1. measuring instruments themselves

2. assessees themselves are sources of error variance

3. Assessors, too, are sources of error variance

>>In the classical or true score theory of measurement, an assumption is made that each testtaker has a true score on a test that would be obtained but for the random action of measurement error.
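Classical test theory is often summarized as X = T + E: an observed score X is the true score T plus random error E. The simulation below is a sketch under assumptions the text does not state. Error is drawn from a normal distribution with mean zero and a chosen standard deviation. It is drawn independently on each hypothetical administration. Averaged over many administrations, the random errors tend to cancel, and the mean observed score approaches the true score.

```python
import random
import statistics


def simulate_observed_scores(true_score, error_sd, n_administrations, seed=0):
    """Return observed scores X = T + E for repeated hypothetical administrations.

    E is assumed to be normally distributed with mean 0 and SD error_sd.
    A fixed seed makes the simulation reproducible.
    """
    rng = random.Random(seed)
    return [true_score + rng.gauss(0, error_sd) for _ in range(n_administrations)]


observed = simulate_observed_scores(true_score=100, error_sd=5, n_administrations=10_000)
print(round(statistics.mean(observed), 1))   # close to the true score of 100
print(round(statistics.stdev(observed), 1))  # close to the error SD of 5
```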

Assumption 6: Testing and Assessment Can Be Conducted in a Fair and Unbiased Manner


Assumption 7: Testing and Assessment Benefit Society

What’s a “Good Test”?

>>psychometric soundness of tests = reliability + validity

>>reliability involves the consistency of the measuring tool: a reliable tool yields the same numerical measurement every time it measures the same thing under the same conditions.

>>A test is valid if it does, in fact, measure what it purports to measure.

>>norms: used to compare the performance of the testtaker with the performance of other testtakers. Norms are the test performance data of a particular group of testtakers that are designed for use as a reference when evaluating or interpreting individual test scores.

1. race norming: the controversial practice of norming on the basis of race or ethnic background

2. user norms or program norms, which “consist of descriptive statistics based on a group of testtakers in a given period of time rather than norms obtained by formal sampling methods”

Types of Norms

1. Percentile norms are the raw data from a test’s standardization sample converted to percentile form.

>>Percentage correct refers to the distribution of raw scores, more specifically, to the number of items that were answered correctly multiplied by 100 and divided by the total number of items.

2. Age norms: also known as age-equivalent scores, age norms indicate the average performance of different samples of testtakers who were at various ages at the time the test was administered.

3. Grade norms: designed to indicate the average test performance of testtakers in a given school grade; a convenient, readily understandable gauge of how one student’s performance compares with that of fellow students in the same grade.

>>>>Both grade norms and age norms are referred to more generally as developmental norms, a term applied broadly to norms developed on the basis of any trait, ability, skill, or other characteristic that is presumed to develop, deteriorate, or otherwise be affected by chronological age, school grade, or stage of life.

4. National norms are derived from a normative sample that was nationally representative of the population at the time the norming study was conducted.

5. National anchor norms provide some stability to test scores by anchoring them to other test scores.

6. Subgroup norms

7. Local norms: typically developed by test users themselves; they provide normative information with respect to the local population’s performance on some test.

>>The normative sample is that group of people whose performance on a particular test is analyzed for reference in evaluating the performance of individual testtakers; its data serve as a reference when evaluating or interpreting individual test scores.

>>Normative data provide a standard with which the results of measurement can be compared.
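A percentile is relative to other testtakers, while percentage correct is relative to the items. A short Python sketch can show the contrast. Percentile rank here follows one common definition: the percentage of scores in the normative sample that fall below a given raw score. Other conventions also count half of any tied scores. The normative sample and raw scores are invented for illustration.

```python
def percentile_rank(raw_score, normative_sample):
    """Percentage of scores in the normative sample that fall below raw_score."""
    below = sum(1 for s in normative_sample if s < raw_score)
    return 100 * below / len(normative_sample)


def percentage_correct(n_correct, n_items):
    """Items answered correctly, multiplied by 100, divided by total items."""
    return n_correct * 100 / n_items


normative_sample = [12, 15, 15, 18, 20, 22, 25, 27, 30, 33]  # hypothetical raw scores
print(percentile_rank(25, normative_sample))  # 60.0
print(percentage_correct(45, 50))             # 90.0
```

A percentile rank says nothing about how many items were answered correctly. Percentage correct, in turn, says nothing about standing relative to the normative sample.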

Norm-Referenced versus Criterion-Referenced Evaluation

A. norm-referenced testing and assessment: a method of evaluation and a way of deriving meaning from test scores by evaluating an individual testtaker’s score and comparing it to scores of a group of testtakers

... A common goal of norm-referenced tests is to yield information on a testtaker’s standing or ranking relative to some comparison group of testtakers.


B. Criterion-referenced testing and assessment may be defined as a method of evaluation and a way of deriving meaning from test scores by evaluating an individual’s score with reference to a set standard.

... The usual area of focus is the testtaker’s performance.

... A criterion is a standard on which a judgment or decision may be based.

... Because criterion-referenced tests are frequently used to gauge achievement or mastery, they are sometimes referred to as mastery tests. The criterion-referenced approach has enjoyed widespread acceptance in the field of computer-assisted education programs. In such programs, mastery of segments of materials is assessed before the program user can proceed to the next level...

“Content-referenced interpretations are those where the score is directly interpreted in terms of performance at each point on the achievement continuum being measured. Criterion-referenced interpretations are those where the score is directly interpreted in terms of performance at any given point on the continuum of an external variable. An external criterion variable might be grade averages or levels of job performance.”
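A criterion-referenced decision compares a score with a set standard rather than with other testtakers. The mastery check in computer-assisted education described above is one example. The 80% mastery criterion and the unit numbering below are hypothetical values chosen for illustration. Exact fractions are used so that a score sitting exactly at the criterion is not misjudged by floating-point rounding.

```python
from fractions import Fraction

MASTERY_CRITERION = Fraction(4, 5)  # hypothetical: 80% correct required for mastery


def has_mastered(n_correct, n_items, criterion=MASTERY_CRITERION):
    """Criterion-referenced judgment: does the score meet the set standard?"""
    return Fraction(n_correct, n_items) >= criterion


def next_unit(current_unit, n_correct, n_items):
    """Advance to the next unit only after mastery of the current one."""
    return current_unit + 1 if has_mastered(n_correct, n_items) else current_unit


print(next_unit(3, 8, 10))  # 4: 8/10 meets the criterion
print(next_unit(3, 7, 10))  # 3: repeat unit 3
```

Unlike a percentile rank, this decision would not change even if every other testtaker also scored 8 out of 10.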

Sampling to Develop Norms

1. stratified sampling: helps prevent sampling bias

2. stratified-random sampling: every member of the population has the same chance of being included in the sample

3. purposive sample: a sample is selected because it is believed to be representative of the population

... Manufacturers of products frequently use purposive sampling when they test the appeal of a new product in one city or market and then make assumptions about how that product would sell nationally.

4. incidental sample or convenience sample: a sample that is convenient or available for use; it highlights the important distinction between what is ideal and what is practical in sampling
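Stratified-random sampling can be sketched as grouping the population into strata and drawing a simple random sample from each, in proportion to the stratum's size. Several parts of this sketch are assumptions for illustration: proportional allocation, the hypothetical urban/rural population, and rounding of each stratum's share. When shares do not divide evenly, the rounded stratum sizes may not sum exactly to the requested sample size.

```python
import random
from collections import defaultdict


def stratified_random_sample(population, stratum_of, sample_size, seed=None):
    """Draw a proportionally allocated random sample from each stratum.

    stratum_of maps a population member to its stratum label. Each stratum
    contributes round(sample_size * stratum_size / population_size) members.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for member in population:
        strata[stratum_of(member)].append(member)

    sample = []
    for members in strata.values():
        k = round(sample_size * len(members) / len(population))
        sample.extend(rng.sample(members, k))  # random within the stratum
    return sample


# Hypothetical population of 100 people: 60 urban, 40 rural.
population = [(i, "urban" if i < 60 else "rural") for i in range(100)]
sample = stratified_random_sample(population, lambda m: m[1], sample_size=10, seed=1)
print(sum(1 for _, region in sample if region == "urban"))  # 6
print(sum(1 for _, region in sample if region == "rural"))  # 4
```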

>>standardization or test standardization: the process of administering a test to a representative sample of testtakers for the purpose of establishing norms

... A test is said to be standardized when it has clearly specified procedures for administration and scoring, typically including normative data.

>>Standards: “the standard against which all similar tests are judged.”

>>Standard error of the difference: a statistic used to estimate how large a difference between two scores should be before the difference is considered statistically significant
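The text does not give a formula here. One common classical-test-theory formulation assumes both scores are on the same scale, with standard deviation SD and reliabilities r1 and r2. It first computes each standard error of measurement, SEM = SD * sqrt(1 - r). It then combines them as SE_diff = sqrt(SEM1^2 + SEM2^2), which equals SD * sqrt(2 - r1 - r2). The 1.96 multiplier assumes a two-tailed test at the .05 level with normally distributed errors. The SD, reliabilities, and score differences below are hypothetical.

```python
import math


def standard_error_of_measurement(sd, reliability):
    return sd * math.sqrt(1 - reliability)


def standard_error_of_difference(sd, r1, r2):
    """Combine the SEMs of two scores expressed on the same scale."""
    sem1 = standard_error_of_measurement(sd, r1)
    sem2 = standard_error_of_measurement(sd, r2)
    return math.sqrt(sem1 ** 2 + sem2 ** 2)


def difference_is_significant(score_a, score_b, se_diff, z=1.96):
    """Treat the difference as significant if it exceeds z standard errors."""
    return abs(score_a - score_b) > z * se_diff


se_diff = standard_error_of_difference(sd=10, r1=0.90, r2=0.90)
print(round(se_diff, 2))                             # 4.47
print(difference_is_significant(115, 100, se_diff))  # True: 15 > 8.77
print(difference_is_significant(105, 100, se_diff))  # False: 5 < 8.77
```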

>>fixed reference group scoring system: the distribution of scores obtained on the test from one group of testtakers is used as the basis for the calculation of test scores for future administrations of the test (e.g., the SAT)

Central to psychological testing and assessment are inferences (deduced conclusions) about how some things (such as traits, abilities, or interests) are related to other things (such as behavior).

>>correlation is an expression of the degree and direction of correspondence between two things

>>coefficient of correlation (r): expresses a linear relationship between two (and only two) variables, usually continuous in nature

.. positively (or directly) correlated: two variables simultaneously increase or simultaneously decrease

.. negative (or inverse) correlation: occurs when one variable increases while the other variable decreases


.. If a correlation coefficient is zero, then no linear relationship exists between the two variables (a curvilinear relationship may still be present).

techniques to measure correlation

1. Pearson r: the most widely used index of correlation (Karl Pearson)

>used when the relationship between the variables is linear and the two variables being correlated are continuous

>coefficient of determination (r²): an indication of how much variance is shared by the X- and the Y-variables

>>also called the Pearson product-moment coefficient of correlation

2. Spearman’s rho: also called a rank-order correlation coefficient or a rank-difference correlation coefficient (Charles Spearman)

>frequently used when the sample size is small and especially when both sets of measurements are in ordinal (or rank-order) form
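Both indices can be computed from first principles, as in the Python sketch below. Spearman's rho is computed here as a Pearson r on ranks, with tied values receiving the average of their ranks, which is one standard way to handle ties. The function names (`pearson_r`, `ranks`, `spearman_rho`) and the data are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1  # average rank of the tied run
        i = j + 1
    return result


def spearman_rho(x, y):
    """Spearman's rho, computed as Pearson r on the two sets of ranks."""
    return pearson_r(ranks(x), ranks(y))


hours = [1, 2, 3, 4, 5]       # hypothetical hours of study
score = [52, 55, 61, 70, 90]  # hypothetical test scores
r = pearson_r(hours, score)
print(round(r, 3), round(r ** 2, 3))  # r and the coefficient of determination r^2
print(spearman_rho(hours, score))     # 1.0: the rank orders agree perfectly
```

Squaring r gives the coefficient of determination, the proportion of variance the two variables share.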

Graphic Representations of Correlation

a. scatterplot: a simple graphing of the coordinate points for values of the X-variable and the Y-variable

... Scatterplots are useful in revealing the presence of curvilinearity in a relationship

... curvilinearity in this context refers to an “eyeball gauge” of how curved a graph is.

>>outlier: an extremely atypical point located at a relatively long distance from the rest of the coordinate points in a scatterplot. In some cases, outliers are simply the result of administering a test to a very small sample of testtakers
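The two cautions above, curvilinearity and outliers, can be demonstrated numerically. In this Python sketch with made-up data, a perfect U-shaped relationship yields a Pearson r of zero, and adding one extreme point to a very small sample turns a weak correlation into a strong one.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# 1) A perfect but curvilinear (U-shaped) relationship: y = x squared.
x = [-3, -2, -1, 0, 1, 2, 3]
y = [v * v for v in x]
r_curve = pearson_r(x, y)
print(r_curve)  # 0.0: r detects no linear trend even though y depends entirely on x

# 2) One outlier in a very small sample.
x2 = [1, 2, 3, 4, 5, 20]
y2 = [3, 1, 4, 2, 3, 20]
r_without = pearson_r(x2[:-1], y2[:-1])  # first five points only
r_with = pearson_r(x2, y2)               # all six points, including (20, 20)
print(round(r_without, 2), round(r_with, 2))  # 0.14 0.97
```

Plotting both data sets as scatterplots would reveal at a glance what the single coefficient hides.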

3. Regression: defined broadly as the analysis of relationships among variables for the purpose of understanding how one variable may predict another

A. Simple regression involves one independent variable (X), typically referred to as the predictor variable, and one dependent variable (Y), typically referred to as the outcome variable

... The regression line is the line of best fit: the straight line that, in one sense, comes closest to the greatest number of points on the scatterplot of X and Y.
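The line of best fit can be found by ordinary least squares: slope b = Σ(X − X̄)(Y − Ȳ) / Σ(X − X̄)², intercept a = Ȳ − bX̄. The Python sketch below uses hypothetical test scores and GPAs and is only an illustration of the calculation.

```python
def simple_regression(x, y):
    """Least-squares intercept a and slope b for the line Y' = a + bX."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    a = my - b * mx  # the line passes through the point of means
    return a, b


# Hypothetical data: entrance-test score (X, predictor) and first-year GPA (Y, outcome).
test = [50, 60, 70, 80, 90]
gpa = [2.0, 2.4, 2.9, 3.1, 3.6]
a, b = simple_regression(test, gpa)
predicted = a + b * 75  # predicted GPA for a testtaker who scored 75
print(round(a, 3), round(b, 3), round(predicted, 2))
```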

B. Multiple regression: the use of more than one score to predict Y

... The multiple regression equation takes into account the intercorrelations among all the variables involved
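For the two-predictor case, the way the multiple regression equation accounts for intercorrelations can be seen in the standardized (beta) weights, which depend on each predictor's correlation with Y and on the predictors' correlation with each other. Below is a Python sketch of the textbook two-predictor formulas; the function name and correlation values are hypothetical.

```python
def standardized_betas(r_y1, r_y2, r_12):
    """Beta weights for predicting Y from X1 and X2 (all variables as z-scores).

    r_y1, r_y2: each predictor's correlation with the outcome Y
    r_12: the correlation between the two predictors
    """
    denom = 1 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom  # X1's contribution net of its overlap with X2
    beta2 = (r_y2 - r_y1 * r_12) / denom  # X2's contribution net of its overlap with X1
    r_squared = beta1 * r_y1 + beta2 * r_y2  # proportion of Y variance explained
    return beta1, beta2, r_squared


# Uncorrelated predictors: each beta equals that predictor's correlation with Y.
print(standardized_betas(0.5, 0.4, 0.0))
# Correlated predictors: both betas shrink because X1 and X2 overlap.
print(standardized_betas(0.5, 0.4, 0.3))
```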

Inference from Measurement

Correlation, regression, and multiple regression are all statistical tools used to help ensure that predictions or inferences drawn from test data are reasonable and as accurate as is technically possible.

1. meta-analysis refers to a family of techniques used to statistically combine information across studies to produce single estimates of the statistics being studied


A key advantage of meta-analysis over simply reporting a range of findings is that, in meta-analysis, more weight can be given to studies that have larger numbers of subjects.
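The weighting idea can be sketched in Python as a sample-size-weighted mean of correlations. This is a deliberately simple illustration with hypothetical studies; published meta-analyses commonly use more refined weights (e.g., inverse-variance weights, often after converting r to Fisher's z).

```python
def weighted_mean_r(studies):
    """Mean correlation across studies, weighting each r by its sample size n."""
    total_n = sum(n for _, n in studies)
    return sum(r * n for r, n in studies) / total_n


studies = [(0.20, 100), (0.60, 300)]  # hypothetical (r, n) pairs
print(weighted_mean_r(studies))       # about 0.5; the unweighted mean would be 0.4
```

The larger study pulls the combined estimate toward its own result.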

CHAPTER 5

Reliability

>>reliability is a synonym for dependability or consistency

>>in the language of psychometrics, reliability refers to consistency in measurement

>>reliability coefficient: an index of reliability, a proportion that indicates the ratio between the true score variance on a test and the total variance.

>>classical test theory: a score on an ability test is presumed to reflect not only the testtaker’s true score on the ability being measured but also error

>>variance (σ²): the standard deviation squared. This statistic is useful because it can be broken into components. Variance from true differences is true variance, and variance from irrelevant, random sources is error variance
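In classical test theory this decomposition is written σ² = σ²_tr + σ²_e, and the reliability coefficient is σ²_tr / σ². The Python simulation below, with hypothetical standard deviations of 8 (true) and 4 (error), shows the ratio estimated from simulated scores landing near the theoretical value 64/80 = .80. The function name and parameters are illustrative only.

```python
import random
import statistics


def simulate_reliability(true_sd, error_sd, n=20000, seed=0):
    """Simulate observed = true + error; return (estimated, theoretical) reliability."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(100, true_sd) for _ in range(n)]
    observed = [t + rng.gauss(0, error_sd) for t in true_scores]  # random error, mean 0
    estimated = statistics.pvariance(true_scores) / statistics.pvariance(observed)
    theoretical = true_sd ** 2 / (true_sd ** 2 + error_sd ** 2)
    return estimated, theoretical


est, theo = simulate_reliability(true_sd=8, error_sd=4)
print(round(est, 3), theo)  # est should be close to theo = 64 / 80 = 0.8
```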

Let’s emphasize here that a systematic source of error would not affect score consistency.

SOURCES OF ERROR VARIANCE

A. Test construction

1. item sampling or content sampling: refers to variation among items within a test as well as to variation among items between tests (e.g., differences are sure to be found in the way the items are worded and in the exact content sampled)

…A challenge in test development is to maximize the proportion of the total variance that is true variance and to minimize the proportion of the total variance that is error variance.

B. Test administration

1. test environment: the room temperature, the level of lighting, and the amount of ventilation and noise

2. testtaker variables (emotional problems, physical discomfort, lack of sleep)

>>transient error: a source of error attributable to variations in the testtaker’s feelings, moods, or mental state over time

3. Examiner-related variables (the examiner’s physical appearance and demeanor, even the presence or absence of an examiner)

C. Test scoring and interpretation

RELIABILITY ESTIMATES


1. Test-Retest Reliability: using the same instrument to measure the same thing at two points in time

>>an estimate of reliability obtained by correlating pairs of scores from the same people on two different administrations of the same test

>>appropriate when evaluating a test that purports to measure something that is relatively stable over time (e.g., a personality trait)

>>coefficient of stability: the name often given to a test-retest estimate when the interval between testing is greater than six months. The longer the time that passes, the greater the likelihood that the reliability coefficient will be lower

>>test-retest reliability may be most appropriate in gauging the reliability of tests that employ outcome measures such as reaction time or perceptual judgments (including discriminations of brightness, loudness, or taste)

2. Parallel-Forms and Alternate-Forms Reliability

>>the degree of relationship between alternate or parallel forms of a test (alternate-forms or parallel-forms reliability) is often termed the coefficient of equivalence

>>Parallel forms of a test exist when, for each form of the test, the means and the variances of observed test scores are equal

>>Alternate forms are simply different versions of a test that have been constructed so as to be parallel. An alternate-forms reliability estimate is based on the correlation between the two total scores on the two forms

…An estimate of the reliability of a test can be obtained without developing an alternate form of the test and without having to administer the test twice to the same people. Deriving this type of estimate entails an evaluation of the internal consistency of the test items, so it is referred to as an internal consistency estimate of reliability or as an estimate of inter-item consistency.

3. Split-Half Reliability

>>split-half reliability is obtained by correlating two pairs of scores obtained from equivalent halves of a

single test administered once

>> reliability estimate is based on the correlation between scores on two halves of the test

>>It is a useful measure of reliability when it is impractical or undesirable to assess reliability with two tests or to administer a test twice

>>Simply dividing the test in the middle is not recommended because this procedure would likely spuriously raise or lower the reliability coefficient

>>acceptable ways to split a test: randomly assign items to one or the other half, or assign odd-numbered items to one half and even-numbered items to the other (the latter yields odd-even reliability)

>>In general, a primary objective in splitting a test in half for the purpose of obtaining a split-half reliability estimate is to create what might be called “mini-parallel-forms,” with each half equal to the other in format, stylistic, statistical, and related aspects
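An odd-even split followed by the Spearman-Brown correction for a test of double length, 2r / (1 + r), can be sketched in Python as follows. The function names and the item matrix (four testtakers, four dichotomously scored items) are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def odd_even_split_half(item_matrix):
    """item_matrix: one row of item scores per testtaker.

    Returns (correlation between the half-test scores,
             Spearman-Brown estimate for the full-length test)."""
    odd = [sum(row[0::2]) for row in item_matrix]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in item_matrix]  # items 2, 4, 6, ...
    r_half = pearson_r(odd, even)
    return r_half, 2 * r_half / (1 + r_half)


# Hypothetical 0/1 scores: 4 testtakers x 4 items
scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
r_half, r_full = odd_even_split_half(scores)
print(round(r_half, 3), round(r_full, 3))  # 0.818 0.9
```

The correction is needed because each half is only half as long as the test actually administered.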

The Spearman-Brown formula

>>allows a test developer or user to estimate internal consistency reliability from a correlation of two halves of a test

>>Because the reliability of a test is affected by its length, a formula is necessary for estimating the reliability of a test that has been shortened or lengthened

>>when correcting a split-half correlation, Spearman-Brown estimates are based on a test that is twice as long as the original half test

>>A Spearman-Brown formula could also be used to determine the number of items needed to attain a desired level of reliability
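The general Spearman-Brown formula is r_SB = n·r_xy / (1 + (n − 1)·r_xy), where n is the factor by which test length changes; solving it for n gives the lengthening factor needed to reach a target reliability. Below is a Python sketch with illustrative values (the function names and the 20-item example are hypothetical).

```python
def spearman_brown(r_xy, n):
    """Estimated reliability after changing test length by a factor of n
    (n = 2 doubles the test; n = 0.5 halves it)."""
    return n * r_xy / (1 + (n - 1) * r_xy)


def length_factor_needed(r_xy, r_desired):
    """Factor by which test length must change to move reliability from r_xy to r_desired."""
    return r_desired * (1 - r_xy) / (r_xy * (1 - r_desired))


print(round(spearman_brown(0.70, 2), 3))       # 0.824
factor = length_factor_needed(0.70, 0.90)
print(round(factor, 2), "times as many items")  # 3.86 times as many items
# e.g., a hypothetical 20-item test would need about 20 * 3.86, or roughly 77 items
```

The formula assumes the added items are comparable to the existing ones.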

>>internal consistency estimates of reliability, such as those obtained with the Spearman-Brown formula, are inappropriate for measuring the reliability of heterogeneous tests and speed tests

…Inter-item consistency refers to the degree of correlation among all the items on a scale. It is calculated from a single administration of a single form of a test. An index of inter-item consistency, in turn, is useful in assessing the homogeneity of the test.

.. homogeneity = unifactorial: the test measures a single trait. The more homogeneous a test is, the more inter-item consistency it can be expected to have

.. heterogeneity: the test measures different factors

The Kuder-Richardson formulas (G. Frederic Kuder and M. W. Richardson)

>>Kuder-Richardson formula 20 (KR-20), so named because it was the twentieth formula developed in a series

>>used for tests with dichotomous items (items may vary in difficulty)

>>KR-21 (equal difficulty): formula that may be used if there is reason to assume that all the test items have approximately the same degree of difficulty (now outdated)
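KR-20 is commonly written as r = [k/(k − 1)]·[1 − Σpq / σ²], where k is the number of items, p the proportion passing each item, q = 1 − p, and σ² the variance of total scores. The Python sketch below uses population variance (dividing by N) for σ²; conventions differ across sources, so treat it as an illustration. The KR-21 shortcut replaces Σpq with a term based only on the mean total score, which assumes equal item difficulty. The data are hypothetical.

```python
def kr20(item_matrix):
    """KR-20 for 0/1 items; item_matrix has one row per testtaker."""
    n = len(item_matrix)
    k = len(item_matrix[0])
    totals = [sum(row) for row in item_matrix]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n  # population variance
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in item_matrix) / n  # proportion passing item j
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


def kr21(k, mean_total, var_total):
    """KR-21 shortcut: needs only k, the mean, and the variance of total scores."""
    return (k / (k - 1)) * (1 - mean_total * (k - mean_total) / (k * var_total))


scores = [  # hypothetical 0/1 responses: 4 testtakers x 4 items
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(scores), 3))       # 0.867
print(round(kr21(4, 2.0, 2.5), 3))  # 0.8 (mean total = 2.0, variance = 2.5)
```

Here the items differ in difficulty (p ranges from .25 to .75), and KR-21 comes out lower than KR-20.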

Coefficient alpha

>>this formula yields an estimate of the mean of all possible split-half coefficients (each corrected by the Spearman-Brown formula)

>>appropriate for use on tests containing nondichotomous items

>>Unlike a Pearson r, which may range in value from -1 to +1, coefficient alpha typically ranges in value from 0 to 1

…coefficient alpha is calculated to help answer questions about how similar sets of data are, on a scale from 0 (absolutely no similarity) to 1 (perfectly identical)
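Coefficient alpha generalizes KR-20 to items that are not scored 0/1: α = [k/(k − 1)]·[1 − Σσ²_i / σ²], with σ²_i the variance of item i and σ² the variance of total scores. In the Python sketch below (population variances, hypothetical data), applying alpha to 0/1 items gives the same value as KR-20, since a 0/1 item's variance is pq.

```python
def pvariance(values):
    """Population variance (divide by N)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def cronbach_alpha(item_matrix):
    """Coefficient alpha; item_matrix has one row of item scores per testtaker."""
    k = len(item_matrix[0])
    item_vars = [pvariance([row[j] for row in item_matrix]) for j in range(k)]
    total_var = pvariance([sum(row) for row in item_matrix])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


binary = [[1, 1, 1, 1], [1, 1, 1, 0], [1, 0, 0, 0], [0, 0, 0, 0]]
likert = [[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5], [1, 2, 1]]  # hypothetical 1-5 ratings
print(round(cronbach_alpha(binary), 3))  # 0.867, the KR-20 value for these 0/1 items
print(round(cronbach_alpha(likert), 3))
```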

4. Measures of Inter-Scorer Reliability

inter-scorer reliability: the degree of agreement or consistency between two or more scorers (or judges or raters) with regard to a particular measure

>>Perhaps the simplest way of determining the degree of consistency among scorers in the scoring of a test is to calculate a coefficient of correlation (coefficient of inter-scorer reliability)

| Type of reliability | Number of testing sessions | Number of test forms | Sources of error variance | Statistical procedures |
|---|---|---|---|---|
| Test-retest | 2 | 1 | Administration | Pearson r or Spearman rho |
| Alternate-forms | 1 or 2 | 2 | Test construction or administration | Pearson r or Spearman rho |
| Internal consistency | 1 | 1 | Test construction | Pearson r between equivalent test halves with Spearman-Brown correction, or Kuder-Richardson for dichotomous items, or coefficient alpha for multipoint items |
| Inter-scorer | 1 | 1 | Scoring and interpretation | Pearson r or Spearman rho |

THE NATURE OF THE TEST

Homogeneity versus heterogeneity of test items

Dynamic versus static characteristics

>> dynamic characteristic: a trait, state, or ability presumed to be ever-changing as a function of situational and cognitive experiences

>> static characteristic: a trait, state, or ability presumed to be relatively unchanging, such as intelligence. Repeated (e.g., hourly) assessments of the same person, such as a stockbroker, should yield consistent results on a static characteristic but may legitimately vary on a dynamic one

Restriction or inflation of range

>>restriction of range or restriction of variance (or, conversely, inflation of range or inflation of variance) is an important consideration in interpreting a correlation coefficient. If the variance of either variable in a correlational analysis is restricted by the sampling procedure used, then the resulting correlation coefficient tends to be lower.
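A small Python simulation can illustrate the point. It draws hypothetical predictor and criterion scores with a population correlation of about .60, then keeps only cases scoring more than 1 SD above the mean on the predictor, as if only top scorers had been selected. The correlation in the restricted group is noticeably lower. The function name and all parameters are illustrative assumptions.

```python
import math
import random


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def restriction_demo(rho=0.6, n=5000, cutoff=1.0, seed=42):
    """Return (r in the full sample, r among cases with predictor > cutoff)."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    # Build y so that corr(x, y) equals rho in the population.
    y = [rho * xi + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1) for xi in x]
    kept = [(xi, yi) for xi, yi in zip(x, y) if xi > cutoff]
    full = pearson_r(x, y)
    restricted = pearson_r([p[0] for p in kept], [p[1] for p in kept])
    return full, restricted


full, restricted = restriction_demo()
print(round(full, 2), round(restricted, 2))
```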

Speed tests versus power tests

>> power test: the time limit is long enough to allow testtakers to attempt all items, and some items are so difficult that no testtaker is able to obtain a perfect score

>> speed test: contains items of uniform level of difficulty (typically uniformly low) so that, when given generous time limits, all testtakers should be able to complete all the test items correctly; scores reflect performance speed

Criterion-referenced tests

>> criterion-referenced test: designed to provide an indication of where a testtaker stands with respect to some variable or criterion, such as an educational or a vocational objective.

>> Unlike norm-referenced tests, criterion-referenced tests tend to contain material that has been mastered in

hierarchical fashion


ALTERNATIVES TO THE TRUE SCORE MODEL

true score theory

>>seeks to estimate the portion of a test score that is attributable to error

domain sampling theory

>>seeks to estimate the extent to which specific sources of variation under defined conditions are contributing to the test score.

…In domain sampling theory, a test’s reliability is conceived of as an objective measure of how precisely the test score assesses the domain from which the test draws a sample (Thorndike, 1985). A domain of behavior, or the universe of items that could conceivably measure that behavior, can be thought of as a hypothetical construct: one that shares certain characteristics with (and is measured by) the sample of items that make up the test. In theory, the items in the domain are thought to have the same means and variances as those in the test that samples from the domain. Of the three types of estimates of reliability, measures of internal consistency are perhaps the most compatible with domain sampling theory.

Generalizability theory (Lee J. Cronbach)

>> may be viewed as an extension of true score theory wherein the concept of a universe score replaces that of a true score

…generalizability theory is based on the idea that a person’s test scores vary from testing to testing because of variables in the testing situation. Instead of conceiving of all variability in a person’s scores as error, Cronbach encouraged test developers and researchers to describe the details of the particular test situation or universe leading to a specific test score. This universe is described in terms of its facets (e.g., number of items in the test, amount of training the test scorers have had, etc.). The test score expected under a given set of facet conditions is the universe score.

…A generalizability study examines how generalizable scores from a particular test are if the test is administered in different situations; that is, it examines how much of an impact different facets of the universe have on the test score.

…coefficients of generalizability (similar to reliability coefficients in the true score model)

>>After the generalizability study, developers do a decision study, in which they examine the usefulness of test scores in helping the test user make decisions

Generalizability has not replaced the true score model. From the perspective of generalizability theory, a

test’s reliability is very much a function of the circumstances under which the test is developed,

administered, and interpreted.

Item response theory

>>Item response theory procedures provide a way to model the probability that a person with X ability will be able to perform at a level of Y (stated in terms of personality assessment: the probability that a person with X amount of a trait will exhibit Y amount of that trait on a test designed to measure it)

…Because the psychological or educational construct being measured is so often physically unobservable (stated another way, is latent) and because the construct being measured may be a trait (it could also be something else, such as an ability), a synonym for IRT in the academic literature is latent-trait theory

>>IRT refers to a family of theories and methods

1. “Difficulty” in this sense refers to the attribute of not being easily accomplished, solved, or comprehended (for example, a test item tapping basic addition ability will have a lower difficulty level than a test item tapping basic algebra skills)


2. discrimination: the degree to which an item differentiates among people with higher or lower levels of the variable (trait, ability) being measured

…Consider two more ADLQ items: item 4, My mood is generally good; and item 5, I am able to walk one block on flat ground. If you were developing this questionnaire within an IRT framework, you would probably assign differential weight to the value of these two items. Item 5 would be given more weight than item 4 for the purpose of estimating a person’s level of physical activity. By contrast, within the context of classical test theory, all items of the test might be given equal weight and scored, for example, 1 if indicative of the ability being measured and 0 if not indicative of that ability.

IRT models

A. dichotomous test items (test items or questions that can be answered with only one of two alternative responses, such as true–false, yes–no, or correct–incorrect questions)

B. polytomous test items (test items or questions with three or more alternative responses, where only one is scored correct or scored as being consistent with a targeted trait or other construct)

>>>Other IRT models exist to handle other types of data.

>>Rasch model: each item on the test is assumed to have an equivalent relationship with the construct being measured by the test

>>The probabilistic relationship between a testtaker’s response to a test item and that testtaker’s level on the latent construct being measured by the test is expressed in graphic form by what has been variously referred to as an item characteristic curve (ICC), an item response curve, or an item trace line

>>information function: provides insight into what items work best with testtakers at a particular theta (θ) level, that is, a particular level of the latent trait, as compared to other items on the test

>>An item information curve can be a very useful tool for test developers. It is used, for example, to reduce the total number of test items in a “long form” of a test and so create a new and effective “short form.”

>>>>An information curve can also be useful in terms of raising “red flags” regarding test items that are particularly low in information
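A common way to write an item characteristic curve is the logistic function P(θ) = 1 / (1 + e^(−a(θ − b))), where b is item difficulty and a is discrimination. Fixing a at the same value for every item gives a one-parameter, Rasch-type model, and under this two-parameter form the item information is a²·P·(1 − P). Some presentations also include a scaling constant (about 1.7), which is omitted here. The Python sketch below uses hypothetical item parameters and function names.

```python
import math


def icc(theta, a, b):
    """Two-parameter logistic ICC: probability of a correct (keyed) response."""
    return 1 / (1 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Item information for the two-parameter logistic model: a^2 * P * (1 - P)."""
    p = icc(theta, a, b)
    return a ** 2 * p * (1 - p)


# Hypothetical items: (discrimination a, difficulty b)
items = {"easy": (1.0, -1.0), "hard": (1.0, 1.5), "sharp": (2.0, 0.0)}
for name, (a, b) in items.items():
    curve = [round(icc(t, a, b), 2) for t in (-2, 0, 2)]
    print(name, curve, "information at theta=0:", round(item_information(0, a, b), 2))
```

Information peaks where θ equals the item's difficulty, and a more discriminating item ("sharp") carries more information near that point. This is why information curves help identify which items to keep in a short form and which items to flag.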

standard error of measurement

>>often abbreviated as SEM or SE_M, provides a measure of the precision of an observed test score (it provides an estimate of the amount of error inherent in an observed score or measurement)

>>the relationship between the SEM and the reliability of a test is inverse: the higher the reliability of a test (or individual subtest within a test), the lower the SEM

>>standard error of measurement is the tool used to estimate or infer the extent to

which an observed score deviates from a true score.

>>standard error of measurement is an index of the extent to which one individual’s scores vary over

tests presumed to be parallel

…Because the standard error of measurement functions like a standard deviation in this context, we can use it to predict what would happen if an individual took additional equivalent tests:

■ approximately 68% (actually, 68.26%) of the scores would be expected to occur within ±1 SEM of the true score;

■ approximately 95% (actually, 95.44%) of the scores would be expected to occur within ±2 SEM of the true score;

■ approximately 99% (actually, 99.74%) of the scores would be expected to occur within ±3 SEM of the true score.

>>confidence interval: a range or band of test scores that is likely to contain the true score
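The SEM is usually computed as SEM = σ√(1 − r_xx), where σ is the standard deviation of test scores and r_xx the reliability coefficient, and a confidence interval is the observed score ± z·SEM. The Python sketch below uses a hypothetical scale (SD 15, reliability .96) and hypothetical function names. Its last line computes the exact normal-curve percentages, which round to 68.27, 95.45, and 99.73. The slightly different figures quoted above (68.26, 95.44, 99.74) come from doubling normal-table areas that were already rounded.

```python
import math


def sem(sd, reliability):
    """Standard error of measurement: sd * sqrt(1 - reliability)."""
    return sd * math.sqrt(1 - reliability)


def confidence_interval(observed, sd, reliability, z=1.96):
    """Band of +/- z SEMs around an observed score (z = 1.96 for about 95%)."""
    margin = z * sem(sd, reliability)
    return observed - margin, observed + margin


def normal_coverage(k):
    """Proportion of a normal distribution lying within +/- k standard deviations."""
    return math.erf(k / math.sqrt(2))


print(round(sem(15, 0.96), 2))                                   # 3.0
low, high = confidence_interval(106, 15, 0.96)
print(round(low, 2), round(high, 2))                             # 100.12 111.88
print([round(100 * normal_coverage(k), 2) for k in (1, 2, 3)])  # [68.27, 95.45, 99.73]
```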

The Standard Error of the Difference between Two Scores

Standard error of the difference: a statistical measure that can aid a test user in determining how large a difference should be before it is considered statistically significant
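When both scores are on the same scale (same standard deviation σ), the standard error of the difference is commonly computed as σ_diff = √(SEM₁² + SEM₂²) = σ√(2 − r₁ − r₂), where r₁ and r₂ are the two reliability coefficients. Below is a Python sketch; the subtest scores and reliabilities are hypothetical.

```python
import math


def se_difference(sd, r1, r2):
    """Standard error of the difference between two scores sharing the same SD."""
    return sd * math.sqrt(2 - r1 - r2)


# Hypothetical: two subtests on a scale with SD 10, reliabilities .90 and .80.
sed = se_difference(10, 0.90, 0.80)
difference = 58 - 50
# At the .05 level, a difference needs to exceed about 1.96 standard errors.
print(round(sed, 2), difference > 1.96 * sed)  # 5.48 False
```

An 8-point gap looks large, but with these reliabilities it falls short of the roughly 10.7 points needed for significance at the .05 level.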

CHAPTER 6

Validity

>> validity: a term used in conjunction with the meaningfulness of a test score, that is, what the test score truly means

>> Validity, applied to a test, is a judgment or estimate of how well a test measures what it purports to measure in a particular context

>> it is a judgment based on evidence about the appropriateness of inferences drawn from test scores.

>> An inference is a logical result or deduction.

>> (validation studies) It is the test developer’s responsibility to supply validity evidence in the test manual. It may sometimes be appropriate for test users to conduct their own validation studies with their own groups of testtakers

>> Local validation studies are absolutely necessary when the test user plans to alter in some way the format, instructions, language, or content of the test

TRINITARIAN VIEW OF VALIDITY

1. content validity

2. criterion-related validity

3. construct validity (sometimes visualized as “umbrella validity,” because every other variety of validity falls under it)

>> Face validity relates more to what a test appears to measure to the person being tested than to what the test actually measures

…. A test’s lack of face validity could contribute to a lack of confidence in the perceived effectiveness of the test, with a consequential decrease in the testtaker’s cooperation or motivation to do his or her best

Content Validity

>>describes a judgment of how adequately a test samples behavior representative of the universe of behavior that the test was designed to sample (for example, the universe of behavior referred to as assertive is very wide-ranging)

… test blueprint: a plan regarding the types of information to be covered by the items, the number of items tapping each area of coverage, the organization of the items in the test, and so forth (it represents the culmination of efforts to adequately sample the universe of content areas that could conceivably be sampled in the test)

… For an employment test to be content-valid, its content must be a representative sample of the job-related skills required for employment

… Behavioral observation is one technique frequently used in blueprinting the content areas to be covered in certain types of employment tests

>>> content validity ratio (CVR)

1. Negative CVR: fewer than half the panelists indicate “essential” (e.g., 4 of 10)

2. Zero CVR: exactly half the panelists indicate “essential” (5 of 10)

3. Positive CVR: more than half but not all the panelists indicate “essential” (e.g., 9 of 10)

.. Lawshe recommended that if the amount of agreement observed is more than 5% likely to occur by chance, then the item should be eliminated.
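Lawshe's content validity ratio is CVR = (n_e − N/2) / (N/2), where n_e is the number of panelists rating the item “essential” and N is the panel size. The Python sketch below reproduces the three cases listed above for a 10-member panel; the function name is hypothetical.

```python
def content_validity_ratio(n_essential, n_panelists):
    """Lawshe's CVR: ranges from -1 (no one says essential) to +1 (everyone does)."""
    half = n_panelists / 2
    return (n_essential - half) / half


for n_e in (4, 5, 9, 10):
    print(n_e, "of 10 ->", content_validity_ratio(n_e, 10))
# 4 -> -0.2 (negative), 5 -> 0.0 (zero), 9 -> 0.8 (positive), 10 -> 1.0
```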


Criterion-related validity

>>is a judgment of how adequately a test score can be used to infer an individual’s most probable standing on some measure of interest (the criterion)

…For example, if a test purports to measure the trait of athleticism, we might expect to employ “membership in a health club” or any generally accepted measure of physical fitness as a criterion in evaluating whether the athleticism test truly measures athleticism

1. Concurrent validity is an index of the degree to which a test score is related to some criterion measure obtained at the same time

2. Predictive validity is an index of the degree to which a test score predicts some criterion measure (e.g., measures of the relationship between college admissions tests and freshman grade point averages)

>>Criterion contamination is the term applied to a criterion measure that has been based, at least in part, on predictor measures. (E.g., researchers use the MMPI-2-RF as the predictor and the psychiatric diagnosis in each patient’s record as the criterion. Then they learn that the diagnosis for every patient in the Minnesota state hospital system was determined, at least in part, by an MMPI-2-RF test score. Should they still proceed with their analysis? No. Because the predictor measure has contaminated the criterion measure, it would be of little value to find, in essence, that the predictor can indeed predict itself.)

…Judgments of criterion-related validity, whether concurrent or predictive, are based on two types of statistical evidence: the validity coefficient and expectancy data.

>>The validity coefficient is a correlation coefficient that provides a measure of the relationship between test scores and scores on the criterion measure

>>Incremental validity (multiple predictors): defined here as the degree to which an additional predictor explains something about the criterion measure that is not explained by predictors already in use
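With two predictors, incremental validity can be expressed as the gain in R² when the second predictor is added: ΔR² = R²(X1, X2) − r²(Y, X1), where R² for two predictors is (r_y1² + r_y2² − 2·r_y1·r_y2·r_12) / (1 − r_12²). Below is a Python sketch with hypothetical correlations and function names.

```python
def r_squared_two_predictors(r_y1, r_y2, r_12):
    """Squared multiple correlation for predicting Y from X1 and X2."""
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


def incremental_validity(r_y1, r_y2, r_12):
    """Extra criterion variance explained by adding X2 to a model that already has X1."""
    return r_squared_two_predictors(r_y1, r_y2, r_12) - r_y1 ** 2


print(round(incremental_validity(0.5, 0.4, 0.0), 3))  # 0.16: X2 is fully non-redundant
print(round(incremental_validity(0.5, 0.4, 0.3), 3))  # 0.069: overlap with X1 shrinks the gain
```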

>>Expectancy data provide information that can be used in evaluating the criterion-related validity of a test. Using a score obtained on some test(s) or measure(s), expectancy tables illustrate the likelihood that the testtaker will score within some interval of scores on a criterion measure, an interval that may be seen as “passing,” “acceptable,” and so on

…Taylor-Russell tables provide an estimate of the extent to which inclusion of a particular test in the selection system will actually improve selection. More specifically, the tables provide an estimate of the percentage of employees hired by the use of a particular test who will be successful at their jobs, given different combinations of three variables: the test’s validity, the selection ratio used, and the base rate.

One limitation of the Taylor-Russell tables is that the relationship between the predictor (the test) and the criterion (rating of performance on the job) must be linear

…selection ratio: a numerical value that reflects the relationship between the number of people to be hired and the number of people available to be hired (e.g., 50 positions and 100 applicants yield a selection ratio of .50)

…base rate refers to the percentage of people hired under the existing system for a particular position who are successful at the job

…Use of the Naylor-Shine tables entails obtaining the difference between the means of the selected and unselected groups to derive an index of what the test adds to already established procedures (an indication of the difference in average criterion scores for the selected group as compared with the original group)

decision theory in action

1. base rate: the extent to which a particular trait, behavior, characteristic, or attribute exists in the population (expressed as a proportion)

2. hit rate: the proportion of people a test accurately identifies as possessing or exhibiting a particular trait, behavior, characteristic, or attribute (e.g., hit rate could refer to the proportion of people accurately predicted to be able to perform work at the graduate school level or to the proportion of neurological patients accurately identified as having a brain tumor)

3. miss rate: the proportion of people the test fails to identify as having, or not having, a particular characteristic or attribute (a miss amounts to an inaccurate prediction)

A. false positive: a miss wherein the test predicted that the testtaker did possess the particular characteristic or attribute being measured when in fact the testtaker did not

B. false negative: a miss wherein the test predicted that the testtaker did not possess the particular characteristic or attribute being measured when the testtaker actually did
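These rates can be tallied from a list of test decisions and actual outcomes. The Python sketch below counts both correct positive and correct negative classifications as hits, matching the miss-rate definition, which covers failing to identify people as either having or not having the attribute. The function name and data are hypothetical.

```python
def decision_summary(predicted, actual):
    """predicted, actual: equal-length lists of booleans (True = has the attribute)."""
    pairs = list(zip(predicted, actual))
    n = len(pairs)
    hits = sum(1 for p, a in pairs if p == a)            # correct classifications
    false_pos = sum(1 for p, a in pairs if p and not a)  # predicted yes, actually no
    false_neg = sum(1 for p, a in pairs if not p and a)  # predicted no, actually yes
    return {
        "base_rate": sum(actual) / n,
        "hit_rate": hits / n,
        "miss_rate": (false_pos + false_neg) / n,
        "false_positives": false_pos,
        "false_negatives": false_neg,
    }


# Hypothetical screening of 10 people.
predicted = [True, True, False, False, True, False, False, False, True, False]
actual = [True, False, False, True, True, False, False, False, True, False]
print(decision_summary(predicted, actual))
```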

Construct Validity


>>is a judgment about the appropriateness of inferences drawn from test scores regarding individual standings on a variable called a construct. A construct is an informed, scientific idea developed or hypothesized to describe or explain behavior

…Constructs are unobservable, presupposed (underlying) traits that a test developer may invoke to describe test behavior or criterion performance.

>>method of contrasted groups: one way of providing evidence for the validity of a test is to demonstrate that scores on the test vary in a predictable way as a function of membership in some group.

>>convergent evidence: scores on the test correlate highly, in the predicted direction, with scores on other tests or measures designed to assess the same (or a similar) construct

EVIDENCE OF CONSTRUCT VALIDITY

>>Evidence of homogeneity: homogeneity refers to how uniform a test is in measuring a single concept

…A test developer can increase test homogeneity in several ways:

. The Pearson r could be used to correlate average subtest scores with the average total test score. (Subtests that in the test developer’s judgment do not correlate very well with the test as a whole might have to be reconstructed (or eliminated) lest the test not measure the construct, e.g., academic achievement.)

. For tests scored dichotomously, by eliminating items that do not show significant correlation coefficients with total test scores.

. The homogeneity of a test in which items are scored on a multipoint scale can also be improved. (For example, some attitude and opinion questionnaires require respondents to indicate level of agreement with specific statements by responding strongly agree, agree, disagree, or strongly disagree. Each response is assigned a numerical score, and items that do not show significant Spearman rank-order correlation coefficients are eliminated.)

. Coefficient alpha may also be used in estimating the homogeneity of a test composed of multiple-choice items
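The item-elimination approach for dichotomous items can be sketched as a screen on corrected item-total correlations, where each item is correlated with the total of the remaining items so that it does not inflate its own correlation. The cutoff `min_r = 0.2`, the function names, and the response matrix are hypothetical; an actual analysis would test the coefficients for statistical significance, as the text describes.

```python
import math


def pearson_r(x, y):
    """Pearson r; returns 0.0 if either variable has no variance (r is undefined)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def flag_weak_items(item_matrix, min_r=0.2):
    """Indexes of items whose corrected item-total correlation falls below min_r."""
    flagged = []
    for j in range(len(item_matrix[0])):
        item = [row[j] for row in item_matrix]
        rest = [sum(row) - row[j] for row in item_matrix]  # total score without item j
        if pearson_r(item, rest) < min_r:
            flagged.append(j)
    return flagged


responses = [  # hypothetical 0/1 responses: 4 testtakers x 4 items
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
print(flag_weak_items(responses))  # [1, 3]: candidates for revision or removal
```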

>>Evidence of changes with age: some constructs are expected to change over time (e.g., reading rate)

>>Evidence of pretest–posttest changes: evidence that test scores change as a result of some experience between a pretest and a posttest can be evidence of construct validity.

>>Discriminant evidence: a validity coefficient showing little relationship between test scores and other variables with which scores on the test should not theoretically be correlated

>>Factor analysis: both convergent and discriminant evidence of construct validity can be obtained by the use of factor analysis.

…Factor analysis is a shorthand term for a class of mathematical procedures designed to identify factors or specific variables that are typically attributes, characteristics, or dimensions on which people may differ.

A. Exploratory factor analysis typically entails “estimating, or extracting factors; deciding how many factors to retain; and rotating factors to an interpretable orientation”

B. Confirmatory factor analysis: “a factor structure is explicitly hypothesized and is tested for its fit with the observed covariance structure of the measured variables”

>>Factor loading in a test conveys information about the extent to which the factor determines the test score or scores

VALIDITY, BIAS, AND FAIRNESS

>>For psychometricians, bias is a factor inherent in a test that systematically prevents accurate, impartial measurement

.. Bias implies systematic variation

.. Intercept bias: a test systematically underpredicts or overpredicts the performance of members of a particular group (such as people with green eyes) with respect to a criterion (such as supervisory rating). The term is derived from the point where the regression line intersects the Y-axis

.. slope bias: a test systematically yields significantly different validity coefficients for members of different groups (the groups’ regression lines differ in slope)

.. DAS (Differential Ability Scales) is designed to measure school-related ability and achievement in children and adolescents.

Rating error is a judgment resulting from the intentional or unintentional misuse of a rating scale.


1. leniency error (also known as a generosity error): an error in rating that arises from the tendency on the part of the rater to be lenient in scoring, marking, and/or grading.

2. severity error: the opposite tendency, in which the rater is overly critical and systematically gives low ratings.

3. central tendency error: the rater, for whatever reason, exhibits a general and systematic reluctance to giving ratings at either the positive or the negative extreme. Consequently, all of this rater’s ratings would tend to cluster in the middle of the rating continuum.

..One way to overcome what might be termed restriction-of-range rating errors (central tendency, leniency, severity) is to use rankings, a procedure that requires the rater to measure individuals against one another instead of against an absolute scale

By using rankings instead of ratings, the rater (now the “ranker”) is forced to select first, second, third choices, and so forth.

Halo effect describes the fact that, for some raters, some ratees can do no wrong. It is defined as a tendency to give a particular ratee a higher rating than he or she objectively deserves because of the rater’s failure to discriminate among conceptually distinct and potentially independent aspects of a ratee’s behavior.

Test Fairness

>>The extent to which a test is used in an impartial, just, and equitable way.

CHAPTER 7

Utility

>> Utility refers to how useful a test is.

>>We may define utility in the context of testing and assessment as the usefulness or practical value of testing to improve efficiency.

■ What is the comparative utility of this test? That is, how useful is this test as compared to another test?

■ What is the clinical utility of this test? That is, how useful is it for purposes of diagnostic assessment or treatment?

■ What is the diagnostic utility of this neurological test? That is, how useful is it for classification purposes?

FACTORS THAT AFFECT A TEST’S UTILITY

Psychometric soundness refers to the reliability and validity of a test.

.. An index of reliability can tell us something about how consistently a test measures what it measures.

.. An index of validity can tell us something about whether a test measures what it purports to measure.

.. An index of utility can tell us something about the practical value of the information derived from scores on the test.

Judgments regarding the utility of a test may take into account whether the benefits of testing justify the costs of administering, scoring, and interpreting the test.

Common sense must be ever-present in the process. A psychometrically sound test of practical value is worth paying for, even when the dollar cost is high, if the potential benefits of its use are also high or if the potential costs of not using it are high.

UTILITY ANALYSIS

>>A family of techniques that entail a cost-benefit analysis designed to yield information relevant to a decision about the usefulness and/or practical value of a tool of assessment.

How Is a Utility Analysis Conducted?

Expectancy data can provide an indication of the likelihood that a testtaker will score within some interval of scores on a criterion measure, an interval that may be categorized as "passing," "acceptable," or "failing." For example, the higher a worker's score is on this new test, the greater the probability that the worker will be judged successful.
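Expectancy data of the kind described above are often summarized as an expectancy table: for each band of test scores, the proportion of past testtakers who later succeeded on the criterion. This is a minimal Python sketch under stated assumptions. The score bands, the outcome records, and the function name `expectancy_table` are all hypothetical.

```python
# Minimal sketch of an expectancy table: for each interval of test scores,
# the proportion of past testtakers later judged successful on the criterion.
# Score bands and outcome records are hypothetical.

def expectancy_table(records, bands):
    """records: list of (test_score, succeeded) pairs.
    bands: list of (low, high) score intervals, inclusive at both ends.
    Returns {(low, high): proportion successful, or None if the band is empty}."""
    table = {}
    for low, high in bands:
        outcomes = [ok for score, ok in records if low <= score <= high]
        table[(low, high)] = sum(outcomes) / len(outcomes) if outcomes else None
    return table

records = [(42, False), (48, False), (55, True), (58, False),
           (63, True), (67, True), (71, True), (79, True)]
bands = [(40, 49), (50, 59), (60, 69), (70, 79)]

# Higher score bands show a higher proportion of successful workers.
for band, proportion in expectancy_table(records, bands).items():
    print(band, proportion)
```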

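The Brogden-Cronbach-Gleser (BCG) formula (Hubert E. Brogden) is used to calculate the dollar amount of a utility gain resulting from the use of a particular selection instrument under specified conditions.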

>>Utility gain refers to an estimate of the benefit (monetary or otherwise) of using a particular test or selection method.

>>Top-down selection is a process of awarding available positions to applicants whereby the highest scorer is awarded the first position, the next highest scorer the next position, and so forth until all positions are filled.
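As the BCG formula is usually presented, utility gain = (N)(T)(r_xy)(SD_y)(Z_m) - (N)(C). Here N in the first term is the number of applicants selected per year, T is the average tenure in the position, r_xy is the validity coefficient, SD_y is the standard deviation of job performance in dollars, Z_m is the mean standardized test score of those selected, and the second term is the cost of testing: the number of applicants tested times the cost per applicant (C). The following is a minimal Python sketch of that arithmetic, assuming hypothetical input values. The two N values are kept as separate parameters because the number selected and the number tested usually differ.

```python
# Minimal sketch of the Brogden-Cronbach-Gleser (BCG) utility-gain estimate:
#   utility gain = N_selected * T * r_xy * SD_y * Z_m  -  N_tested * C
# All input values in the example are hypothetical.

def bcg_utility_gain(n_selected, tenure_years, validity, sd_y,
                     mean_z_selected, n_tested, cost_per_applicant):
    """Estimated dollar benefit of using the test minus the cost of testing."""
    benefit = n_selected * tenure_years * validity * sd_y * mean_z_selected
    cost = n_tested * cost_per_applicant
    return benefit - cost

gain = bcg_utility_gain(n_selected=10, tenure_years=2, validity=0.40,
                        sd_y=10_000, mean_z_selected=1.0,
                        n_tested=100, cost_per_applicant=50)
print(f"Estimated utility gain: ${gain:,.0f}")
```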
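Top-down selection, as defined above, amounts to sorting applicants by score and filling positions in that order. This is a minimal Python sketch assuming hypothetical applicant names and scores. Ties are broken by input order here, which is an arbitrary choice and not part of the definition.

```python
# Minimal sketch of top-down selection: rank applicants by score and award
# positions in order until all are filled. Names and scores are hypothetical;
# ties are broken by input order, an arbitrary choice.

def top_down_select(scores, n_positions):
    """Return the names of the applicants awarded positions, best first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:n_positions]]

applicants = {"Lee": 88, "Mora": 95, "Ng": 72, "Ortiz": 91, "Park": 80}
print(top_down_select(applicants, 3))  # ['Mora', 'Ortiz', 'Lee']
```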


| Term | General Definition | What It Means in This Study | Implication |
|---|---|---|---|
| Hit | A correct classification | A passing score on the FERT is associated with satisfactory performance on the OTJSR, and a failing score on the FERT is associated with unsatisfactory performance on the OTJSR. | The predictor test has successfully predicted performance on the criterion; it has successfully predicted the on-the-job outcome. A qualified driver is hired; an unqualified driver is not hired. |
| Miss | An incorrect classification; a mistake | A passing score on the FERT is associated with unsatisfactory performance on the OTJSR, and a failing score on the FERT is associated with satisfactory performance on the OTJSR. | The predictor test has not predicted performance on the criterion; it has failed to predict the on-the-job outcome. A qualified driver is not hired; an unqualified driver is hired. |
| Hit rate | The proportion of people that an assessment tool accurately identifies as possessing or exhibiting a particular trait, ability, behavior, or attribute | The proportion of FE drivers with a passing FERT score who perform satisfactorily after three months based on OTJSRs. Also, the proportion of FE drivers with a failing FERT score who do not perform satisfactorily after three months based on OTJSRs. | The proportion of qualified drivers with a passing FERT score who actually gain permanent employee status after three months on the job. Also, the proportion of unqualified drivers with a failing FERT score who are let go after three months. |
| Miss rate | The proportion of people that an assessment tool inaccurately identifies as possessing or exhibiting a particular trait, ability, behavior, or attribute | The proportion of FE drivers with a passing FERT score who perform unsatisfactorily after three months based on OTJSRs. Also, the proportion of FE drivers with a failing FERT score who perform satisfactorily after three months based on OTJSRs. | The proportion of drivers whom the FERT inaccurately predicted to be qualified. Also, the proportion of drivers whom the FERT inaccurately predicted to be unqualified. |
| False positive | A specific type of miss whereby an assessment tool falsely indicates that the testtaker possesses or exhibits a particular trait, ability, behavior, or attribute | The FERT indicates that the new hire will perform successfully on the job but, in fact, the new driver does not. | A driver who is hired is not qualified. |
| False negative | A specific type of miss whereby an assessment tool falsely indicates that the testtaker does not possess or exhibit a particular trait, ability, behavior, or attribute | The FERT indicates that the new hire will not perform successfully on the job but, in fact, the new driver would have performed successfully. | The FERT says not to hire, but the driver would have been rated as qualified. |
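The hit and miss categories in the table above can be tallied by comparing each person's predictor decision (pass/fail on the test) with the criterion outcome. This is a minimal Python sketch, assuming hypothetical data and assuming the criterion outcome is known for everyone (as in a validation study where all candidates were observed on the job). For simplicity, hit rate and miss rate are computed here over everyone assessed.

```python
# Minimal sketch: classify each decision as a hit or a miss (false positive or
# false negative) by comparing the predictor decision with the criterion
# outcome. Data are hypothetical; outcomes are assumed known for everyone.

def classification_summary(predicted_pass, performed_well):
    """Both arguments are equal-length lists of booleans, one entry per person."""
    counts = {"true_pos": 0, "true_neg": 0, "false_pos": 0, "false_neg": 0}
    for passed, good in zip(predicted_pass, performed_well):
        if passed and good:
            counts["true_pos"] += 1    # hit: passed and performed well
        elif not passed and not good:
            counts["true_neg"] += 1    # hit: failed and performed poorly
        elif passed and not good:
            counts["false_pos"] += 1   # miss: passed but performed poorly
        else:
            counts["false_neg"] += 1   # miss: failed but would have done well
    total = len(predicted_pass)
    hits = counts["true_pos"] + counts["true_neg"]
    counts["hit_rate"] = hits / total
    counts["miss_rate"] = (total - hits) / total
    return counts

passed = [True, True, True, False, False, True, False, False]
good   = [True, True, False, False, True, True, False, False]
print(classification_summary(passed, good))
```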

>> A relative cut score may be defined as a reference point, in a distribution of test scores used to divide a set of data into two or more classifications, that is set based on norm-related considerations rather than on the relationship of test scores to a criterion.

>> A fixed cut score (also called an absolute cut score) may be defined as a reference point, in a distribution of test scores used to divide a set of data into two or more classifications, that is typically set with reference to a judgment concerning a minimum level of proficiency required to be included in a particular classification.
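The contrast between the two kinds of cut score above can be sketched in a few lines: a relative cut depends on how the group scored, while a fixed cut depends only on a preset proficiency level. This is a minimal Python sketch, assuming hypothetical scores, a hypothetical "admit the top 30%" rule, and a hypothetical minimum-proficiency score of 70.

```python
# Minimal sketch contrasting a relative (norm-related) cut score with a fixed
# (absolute) cut score. The scores, the 30% selection rule, and the
# minimum-proficiency score of 70 are all hypothetical.
import math

def relative_cut(scores, top_fraction):
    """Cut score that admits roughly the top `top_fraction` of this group."""
    ranked = sorted(scores, reverse=True)
    k = max(1, math.ceil(top_fraction * len(ranked)))  # how many to admit
    return ranked[k - 1]                                # lowest admitted score

def passes_fixed_cut(score, minimum_proficiency):
    """Pass/fail against a criterion set independently of the group."""
    return score >= minimum_proficiency

scores = [55, 62, 68, 71, 74, 77, 80, 83, 88, 93]
print(relative_cut(scores, 0.30))                       # moves if the group changes
print([s for s in scores if passes_fixed_cut(s, 70)])   # tied only to the 70 standard
```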

>> Multiple cut scores refers to the use of two or more cut scores with reference to one predictor for the purpose of categorizing testtakers. (In selection contexts, several predictors may each have their own cut score, in which case applicants must meet the requisite cut score on every predictor to be considered for the position.)

>> Multiple hurdles may be thought of as one collective element of a multistage decision-making process in which the achievement of a particular cut score on one test is necessary in order to advance to the next stage of evaluation in the selection process. (For example, a selection process may begin with a written application stage in which individuals who turn in incomplete applications are eliminated from further consideration. This is followed by what might be termed an additional materials stage in which individuals with low test scores, low GPAs, or poor letters of recommendation are eliminated.)

… Multiple hurdle selection methods assume that an individual must possess a certain minimum amount of knowledge, skill, or ability for each attribute measured by a predictor to be successful in the desired position.
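The multiple-hurdle logic above can be sketched as a sequence of stages, each with its own cut score, where failing any stage ends the applicant's progress. This is a minimal Python sketch. The stage names, cut scores, and applicants are hypothetical.

```python
# Minimal sketch of a multiple-hurdle (multistage) selection process: an
# applicant advances only by meeting the cut score at every stage, in order.
# Stage names, cut scores, and applicant data are hypothetical.

STAGES = [
    ("application_complete", 1),   # 1 = complete, 0 = incomplete
    ("test_score", 60),
    ("interview_rating", 3),
]

def multiple_hurdle(applicant):
    """Return (advanced_all, stage where the applicant was eliminated or None)."""
    for stage, cut in STAGES:
        if applicant[stage] < cut:
            return False, stage    # eliminated; later stages are never reached
    return True, None

applicants = {
    "A": {"application_complete": 1, "test_score": 75, "interview_rating": 4},
    "B": {"application_complete": 1, "test_score": 55, "interview_rating": 5},
    "C": {"application_complete": 0, "test_score": 90, "interview_rating": 5},
}
for name, data in applicants.items():
    print(name, multiple_hurdle(data))
```

Applicant B's excellent interview rating cannot make up for the low test score. This is exactly the assumption that the compensatory model relaxes.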

>> In a compensatory model of selection, an assumption is made that high scores on one attribute can, in fact, "balance out" or compensate for low scores on another attribute. According to this model, a person strong in some areas and weak in others can perform as successfully in a position as a person with moderate abilities in all areas relevant to the position in question.
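One common way to implement the compensatory assumption above is a weighted composite of predictor scores compared against a single cut score. This is a minimal Python sketch under stated assumptions: the predictors are already on a common scale, and the weights, scores, and composite cut of 60 are hypothetical.

```python
# Minimal sketch of a compensatory model: predictor scores (assumed to be on a
# common scale) are combined into one weighted composite, so a high score on
# one attribute can offset a low score on another. Weights, scores, and the
# composite cut score of 60 are hypothetical.

WEIGHTS = {"knowledge": 0.5, "skill": 0.3, "interview": 0.2}
COMPOSITE_CUT = 60

def composite(scores):
    """Weighted sum of predictor scores."""
    return sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)

strong_and_weak = {"knowledge": 90, "skill": 40, "interview": 50}
moderate_all = {"knowledge": 62, "skill": 62, "interview": 62}

for label, s in [("strong/weak", strong_and_weak), ("moderate", moderate_all)]:
    c = composite(s)
    print(label, round(c, 1), c >= COMPOSITE_CUT)
```

Both profiles clear the composite cut. Under a multiple-hurdle rule with a cut of 60 on each predictor, the strong/weak applicant would have been eliminated.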

METHODS FOR SETTING CUT SCORES

1. The Angoff method (William Angoff; the key is the expert panel): an expert panel makes judgments concerning the determination of whether or not testtakers possess a particular trait.

2. The method of contrasting groups (known groups method) entails collection of data on the predictor of interest from groups known to possess, and not to possess, a trait, attribute, or ability of interest.

3. IRT-based methods (item response theory): cut scores are typically set based on testtakers' performance across all the items on the test.

… The item-mapping method (used in setting cut scores for licensing examinations) entails the arrangement of items in a histogram, with each column in the histogram containing items deemed to be of equivalent value.

… The bookmark method (typically used in academic applications): use of this method begins with the training of experts with regard to the minimal knowledge, skills, and/or abilities that testtakers should possess in order to "pass." Items are then arranged in ascending order of difficulty, and each expert places a "bookmark" between the two items deemed to separate testtakers who have acquired that minimal knowledge, skills, and/or abilities from those who have not.

4. Other methods: discriminant analysis, a family of statistical techniques typically used to shed light on the relationship between certain variables and two naturally occurring groups.
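Two of the cut-score methods listed above lend themselves to a short computational sketch. For Angoff, a common version has each expert estimate, for every item, the probability that a minimally competent testtaker would answer correctly. These estimates are averaged across experts and summed across items to give a raw cut score. For contrasting groups, one simple rule (an assumption here, not the only option) is to choose the score that misclassifies the fewest members of the two known groups. This is a minimal Python sketch. All ratings and scores are hypothetical.

```python
# Minimal sketches of two cut-score methods. All ratings and scores are
# hypothetical.

def angoff_cut(judge_ratings):
    """judge_ratings[j][i] = judge j's estimate of the probability that a
    minimally competent testtaker answers item i correctly.
    Average across judges for each item, then sum across items."""
    n_judges = len(judge_ratings)
    n_items = len(judge_ratings[0])
    item_means = [sum(judge[i] for judge in judge_ratings) / n_judges
                  for i in range(n_items)]
    return sum(item_means)

def contrasting_groups_cut(has_trait, lacks_trait):
    """Try each observed score as a cut (score >= cut means 'has trait') and
    keep the one that misclassifies the fewest members of the known groups."""
    best_cut, best_errors = None, None
    for cut in sorted(set(has_trait) | set(lacks_trait)):
        errors = (sum(s < cut for s in has_trait) +
                  sum(s >= cut for s in lacks_trait))
        if best_errors is None or errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut, best_errors

ratings = [[0.9, 0.6, 0.4, 0.7],   # judge 1
           [0.8, 0.5, 0.5, 0.6],   # judge 2
           [0.7, 0.7, 0.3, 0.8]]   # judge 3
print(round(angoff_cut(ratings), 2))

masters = [14, 16, 17, 18, 19, 20]
non_masters = [8, 10, 11, 12, 15, 13]
print(contrasting_groups_cut(masters, non_masters))
```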

FYI: Edward L. Thorndike is perhaps best known for his Law of Effect. His contribution in the area of personnel psychology came in the form of a book entitled Personnel Selection (1949). In that book he described a method of predictive yield, a technique for identifying cut scores based on the number of positions to be filled. The method takes into account projections regarding the likelihood of offer acceptance, the number of position openings, and the distribution of applicant scores.

L. L. Thurstone: “law of comparative judgment” (achievements in the area of scaling)
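The three ingredients of predictive yield named above (openings, likelihood of offer acceptance, and the score distribution) can be combined in a simplified way. First estimate how many offers are needed, then set the cut at the score of the lowest-ranked applicant who would receive an offer. This is a minimal Python sketch of that simplified reading. It is an assumption for illustration, not Thorndike's exact procedure, and all numbers are hypothetical.

```python
# Minimal sketch in the spirit of predictive yield (a simplified reading, not
# Thorndike's exact procedure): estimate how many offers are needed to fill
# the openings given an assumed acceptance rate, then set the cut score at the
# score of the lowest-ranked applicant who would receive an offer.
# All numbers are hypothetical.
import math

def predictive_yield_cut(scores, n_openings, acceptance_rate):
    """Return (offers to make, cut score)."""
    offers_needed = math.ceil(n_openings / acceptance_rate)
    ranked = sorted(scores, reverse=True)
    offers_needed = min(offers_needed, len(ranked))  # cannot exceed the pool
    return offers_needed, ranked[offers_needed - 1]

scores = [91, 88, 85, 83, 80, 78, 75, 72, 70, 65, 60, 58]
# 4 openings, and only about half of offers are expected to be accepted
print(predictive_yield_cut(scores, n_openings=4, acceptance_rate=0.5))
```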

CHAPTER 8

Test Development

The process of developing a test occurs in five stages:

1. test conceptualization

2. test construction

3. test tryout

4. item analysis

5. test revision

>> Statistical procedures, referred to collectively as item analysis, are employed to assist in making judgments about which items are good as they are, which items need to be revised, and which items should be discarded.
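Two statistics commonly used in item analysis are the item-difficulty index p (the proportion of all testtakers who answer an item correctly, so a higher p means an easier item) and the item-discrimination index d = (U - L) / n. Here U and L are the numbers answering correctly in the upper- and lower-scoring groups, each of size n. This is a minimal Python sketch, assuming hypothetical response data. It also assumes the frequently used convention of comparing the top and bottom 27% of testtakers by total score.

```python
# Minimal sketch of two common item-analysis statistics for a right/wrong item:
#   p = item-difficulty index (proportion of all testtakers answering correctly)
#   d = item-discrimination index = (U - L) / n, where U and L are the numbers
#       answering correctly in the upper and lower groups, each of size n.
# Response data and the 27% grouping convention are assumptions.

def item_difficulty(responses):
    """responses: list of 0/1 scores on one item."""
    return sum(responses) / len(responses)

def item_discrimination(total_scores, item_responses, group_fraction=0.27):
    """Compare the top and bottom `group_fraction` of testtakers by total score."""
    n = max(1, round(group_fraction * len(total_scores)))
    order = sorted(range(len(total_scores)), key=lambda i: total_scores[i])
    lower, upper = order[:n], order[-n:]
    u = sum(item_responses[i] for i in upper)
    l = sum(item_responses[i] for i in lower)
    return (u - l) / n

totals = [12, 18, 25, 9, 30, 22, 15, 27, 11, 20]   # total test scores
item = [1, 1, 1, 0, 1, 1, 0, 1, 0, 0]             # 1 = item answered correctly

print(item_difficulty(item))
print(round(item_discrimination(totals, item), 2))
```

A positive d suggests that high scorers on the whole test tend to get the item right more often than low scorers. An item with d near zero or below would be a candidate for revision or removal.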

TEST CONCEPTUALIZATION

Criterion-referenced testing (determining whether a testtaker has met certain criteria, e.g., as a physician, engineer, or piano student) is commonly employed in licensing contexts. It derives from a conceptualization of the knowledge or skills to be mastered.

>> Pilot test: a scientific investigation of a new test's reliability and validity for its specific purpose.

>> Pilot studies are used to evaluate whether test items should be included in the final form of the instrument.

>> For example, in developing a measure of introversion/extraversion, pilot research may involve open-ended interviews with research subjects believed for some reason to be introverted or extraverted.

>> In pilot work, the test developer typically attempts to determine how best to measure a targeted construct (this may entail the creation, revision, and deletion of many test items).
