Image Processing QB

Uploaded by subramanyam62

Originaltitel

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

Image Processing QB

Hochgeladen von

subramanyam62Copyright:

Verfügbare Formate

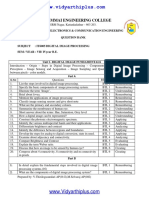

Dr.N.G.P. Institute of Technology / Department of Electronics and Communication Engineering

IT6005 DIGITAL IMAGE PROCESSING (L T P C: 3 0 0 3)

UNIT I DIGITAL IMAGE FUNDAMENTALS 8

Introduction – Origin – Steps in Digital Image Processing – Components – Elements of Visual

Perception – Image Sensing and Acquisition – Image Sampling and Quantization – Relationships

between pixels – color models.

UNIT II IMAGE ENHANCEMENT 10

Spatial Domain: Gray level transformations – Histogram processing – Basics of Spatial Filtering–

Smoothing and Sharpening Spatial Filtering – Frequency Domain: Introduction to Fourier

Transform – Smoothing and Sharpening frequency domain filters – Ideal, Butterworth and

Gaussian filters.

UNIT III IMAGE RESTORATION AND SEGMENTATION 9

Noise models – Mean Filters – Order Statistics – Adaptive filters – Band reject Filters – Band

pass Filters – Notch Filters – Optimum Notch Filtering – Inverse Filtering – Wiener filtering –

Segmentation: Detection of Discontinuities–Edge Linking and Boundary detection – Region

based segmentation- Morphological processing- erosion and dilation.

UNIT IV WAVELETS AND IMAGE COMPRESSION 9

Wavelets – Subband coding – Multiresolution expansions – Compression: Fundamentals – Image

Compression models – Error Free Compression – Variable Length Coding – Bit-Plane Coding –

Lossless Predictive Coding – Lossy Compression – Lossy Predictive Coding – Compression

Standards.

UNIT V IMAGE REPRESENTATION AND RECOGNITION 9

Boundary representation – Chain Code – Polygonal approximation, signature, boundary segments

– Boundary description – Shape number – Fourier Descriptor, moments- Regional Descriptors –

Topological feature, Texture – Patterns and Pattern classes – Recognition based on matching.

TOTAL: 45 PERIODS

TEXT BOOK:

1. Rafael C. Gonzalez, Richard E. Woods, “Digital Image Processing”, Third Edition, Pearson

Education, 2010.

REFERENCES:

1. Rafael C. Gonzalez, Richard E. Woods, Steven L. Eddins, “Digital Image Processing Using

MATLAB”, Third Edition Tata McGraw Hill Pvt. Ltd., 2011.

Question Bank- Two Marks With Answer IT6005/Digital Image Processing

Dr.N.G.P. Institute of Technology /Department of Electronics and Communication Engineering

2. Anil Jain K. “Fundamentals of Digital Image Processing”, PHI Learning Pvt. Ltd., 2011.

3. William K. Pratt, “Digital Image Processing”, John Wiley, 2002.

4. Malay K. Pakhira, “Digital Image Processing and Pattern Recognition”, First Edition, PHI

Learning Pvt. Ltd., 2011.

5. http://eeweb.poly.edu/~onur/lectures/lectures.html.

6. http://www.caen.uiowa.edu/~dip/LECTURE/lecture.html


UNIT I: DIGITAL IMAGE FUNDAMENTALS

1. Define Image?

An image may be defined as a two-dimensional light intensity function f(x, y), where x and y denote spatial coordinates and the amplitude or value of f at any point (x, y) is called the intensity, gray level or brightness of the image at that point.

2. What is Dynamic Range?

The range of values spanned by the gray scale is called dynamic range of an image. Image will

have high contrast, if the dynamic range is high and image will have dull washed out gray look if the

dynamic range is low.

3. Define Brightness? AU NOV/DEC 2012

Brightness of an object is its perceived luminance, which depends on the luminance of the surround. Two objects with different surroundings could have identical luminance but different brightness.

4. Define Tapered Quantization

If gray levels in a certain range occur frequently while others occur rarely, the quantization levels are finely spaced in this range and coarsely spaced outside of it. This method is sometimes called tapered quantization.

5. What do you mean by Gray level?

Gray level refers to a scalar measure of intensity that ranges from black to grays and finally to

white.

6. What do you mean by Color model?

A Color model is a specification of 3D-coordinates system and a subspace within that system

where each color is represented by a single point.

7. List the hardware oriented color models?

1. RGB model

2. CMY model

3. YIQ model

4. HSI model

8. Define Hue and saturation? AU May /June 2013,Nov/Dec 2016

Hue is a color attribute that describes a pure color, whereas saturation gives a measure of the degree to which a pure color is diluted by white light.

9. List the applications of color models?

1. RGB model--- used for color monitor & color video camera

2. CMY model---used for color printing

3. HSI model----used for color image processing

4. YIQ model---used for color picture transmission


10. What is Chromatic Adaptation?

The hue of a perceived color depends on the adaptation of the viewer. For example, the American flag will not immediately appear red, white, and blue if the viewer has been subjected to high intensity red light before viewing the flag. The color of the flag will appear to shift in hue toward the complement of red, cyan.

11. Define Resolution?

Resolution is defined as the smallest discernible detail in an image. Spatial resolution is the smallest discernible detail in an image, and gray level resolution refers to the smallest discernible change in gray level.

12. What is meant by pixel? AU MAY/JUNE 2009

A digital image is composed of a finite number of elements, each of which has a particular location and value. These elements are referred to as pixels, image elements, picture elements or pels.

13. Define Digital image?

When x, y and the amplitude values of f are all finite, discrete quantities, we call the image a digital image.

14. What are the steps involved in DIP?

1. Image Acquisition 2. Preprocessing 3. Segmentation 4. Representation and

Description 5. Recognition and Interpretation

15. What is recognition and Interpretation?

Recognition is a process that assigns a label to an object based on the information provided by its descriptors. Interpretation means assigning meaning to a recognized object.

16. Specify the elements of DIP system?

1. Image Acquisition 2. Storage 3. Processing 4. Display 5.Computer

17. Explain the categories of digital storage?

1. Short term storage for use during processing.

2. Online storage for relatively fast recall.

3. Archival storage for infrequent access.

18. What are the applications of digital image processing systems ? AU NOV/DEC 2007

1. Medical Image applications 2. Satellite Imagery 3. Remote sensing

4. Automotive 5. Communications

19. Distinguish between photopic and scotopic vision? (Nov/Dec 2017)

Photopic vision:

1. It is mediated by the cones. The human eye can resolve fine details with the cones because each one is connected to its own nerve end.

2. It is also known as bright-light vision.

Scotopic vision:

1. It is mediated by the rods. Several rods are connected to one nerve end, so it gives only the overall picture of the image.

2. It is also known as dim-light vision.


20. How cones and rods are distributed in retina? AU APRIL/MAY 2011

In each eye, the number of cones is in the range 6-7 million and the number of rods is in the range 75-150 million.

21. Define subjective brightness and brightness adaptation?

Subjective brightness means intensity as perceived by the human visual system. Brightness adaptation means that, although the human visual system can operate over the enormous range from the scotopic threshold to the glare limit, it cannot operate over this whole range simultaneously. It accomplishes this large variation by changes in its overall sensitivity.

22. Define weber ratio AU MAY /JUNE 2009

The ratio of the increment of illumination to the background illumination is called the Weber ratio, i.e. ΔI/I. If the ratio ΔI/I is small, only a small percentage change in intensity is needed to be discriminable, i.e. brightness discrimination is good. If the ratio ΔI/I is large, a large percentage change in intensity is needed, i.e. brightness discrimination is poor.

23. What is meant by machband effect? Au April/May 2011,2013,2015 Nov/Dec 2016

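The Mach band effect arises in an image made of stripes of constant intensity: although the intensity of each stripe is constant, the visual system perceives a brightness pattern that overshoots and undershoots near the boundaries between stripes. These apparently scalloped bands are called Mach bands.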

24. What is simultaneous contrast?

A region's perceived brightness depends not only on its intensity but also on its background. For example, when all the centre squares have the same intensity, they still appear to the eye to become darker as the background becomes lighter.

25. What is meant by illumination and reflectance?

Illumination is the amount of source light incident on the scene. It is represented as i(x, y).

Reflectance is the amount of light reflected by the object in the scene. It is represented by r(x, y).

26. Define sampling and quantization? AU APRIL/MAY 2007,2011,(Nov/Dec 2017)

Sampling means digitizing the coordinate values (x, y). Quantization means digitizing the amplitude value.

27. Find the number of bits required to store a 256 X 256 image with 32 gray levels?

32 gray levels = 2^5, so k = 5 bits per pixel. 256 × 256 × 5 = 327680 bits.

28. Write the expression to find the number of bits to store a digital image?

The number of bits required to store a digital image of size M × N with k bits per pixel is b = M × N × k. When M = N, this equation becomes b = N^2 k.
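The storage formula b = M × N × k can be checked with a few lines of Python. This is a minimal sketch: the function name `bits_to_store` is made up for illustration, and it assumes k is the smallest number of bits that can represent the given number of gray levels.

```python
def bits_to_store(rows: int, cols: int, gray_levels: int) -> int:
    """Return b = M * N * k for an M x N image with the given number of gray levels."""
    # k = number of bits needed to index gray_levels distinct values
    k = (gray_levels - 1).bit_length()
    return rows * cols * k


# The worked example from question 27: 256 x 256 pixels, 32 gray levels
print(bits_to_store(256, 256, 32))  # 327680
```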

30. What do you mean by Zooming of digital images?

Zooming may be viewed as over sampling. It involves the creation of new pixel locations and the

assignment of gray levels to those new locations.

31. What do you mean by shrinking of digital images?

Shrinking may be viewed as undersampling. To shrink an image by one half, we delete every other row and column. To reduce possible aliasing effects, it is a good idea to blur an image slightly before shrinking it.

32. Write short notes on neighbors of a pixel. AU NOV/DEC 2009

The pixel p at coordinates (x, y) has 4 neighbors, i.e. 2 horizontal and 2 vertical neighbors, whose coordinates are given by (x+1, y), (x-1, y), (x, y-1), (x, y+1). These are called the direct neighbors of p. This set is denoted N4(p).

33. Explain the types of connectivity.

1. 4 connectivity

2. 8 connectivity

3. M connectivity (mixed connectivity)

34. What is meant by path?

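A path from pixel p with coordinates (x, y) to pixel q with coordinates (s, t) is a sequence of distinct pixels with coordinates (x0, y0), (x1, y1), ..., (xn, yn), where (x0, y0) = (x, y), (xn, yn) = (s, t), and consecutive pixels in the sequence are adjacent.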

35. Give the formula for calculating D4 and D8 distance.

D4 distance (city block distance) is defined by D4(p, q) = |x-s| + |y-t|.

D8 distance (chessboard distance) is defined by D8(p, q) = max(|x-s|, |y-t|).
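The two distance measures translate directly into code. The sketch below assumes pixels are given as (row, column) tuples; the function names `d4` and `d8` are illustrative.

```python
def d4(p, q):
    """City block distance between pixels p = (x, y) and q = (s, t)."""
    (x, y), (s, t) = p, q
    return abs(x - s) + abs(y - t)


def d8(p, q):
    """Chessboard distance between pixels p = (x, y) and q = (s, t)."""
    (x, y), (s, t) = p, q
    return max(abs(x - s), abs(y - t))


print(d4((0, 0), (3, 4)))  # 7
print(d8((0, 0), (3, 4)))  # 4
```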

36. What is geometric transformation?

Transformation is used to alter the co-ordinate description of image.

The basic geometric transformations are

1. Image translation

2. Scaling

3. Image rotation

37. What is image translation and scaling?

Image translation means repositioning the image from one coordinate location to another along a straight-line path.

Scaling is used to alter the size of the object or image, i.e. the coordinate system is scaled by a factor.

38. Distinguish between Monochrome and gray scale image. (May/Jun 2016)

In a monochrome image, each pixel is stored as a single bit which is either 0 or 1.

In a gray scale image, each pixel is stored as a byte with a value between 0 and 255.

39.Compare RGB and HSI color image models.(Nov/Dec 2014)

RGB color image models

In the RGB color model, the three primary colors red, green and blue form the axes of the color cube. This is good for setting the electron guns of a CRT.

It is device dependent and not perceptually uniform. This implies that the RGB model

will not in general reproduce the same colour from one display to another.

HSI model

H stands for Hue which is associated with the dominant colour as perceived by the

observer

S for saturation represents the purity of the colour

I for Intensity reflects the brightness

It is based on human colour perception


40. When is fine sampling and coarse sampling used? (Apr/May 2017)

Fine sampling is required in the neighborhood of sharp gray level transitions while coarse sampling is

required in relatively smooth regions.

41. What is the function of image sensor? (Apr/May 2017)

An image sensor produces an electrical output proportional to the light intensity falling on it.


UNIT II- IMAGE ENHANCEMENT

1. Specify the objective of image enhancement technique. AU APR/MAY 2011

The objective of enhancement technique is to process an image so that the result is more suitable

than the original image for a particular application.

2. Explain the 2 categories of image enhancement. AU NOV/DEC 2012

i) Spatial domain refers to the image plane itself, and approaches in this category are based on direct manipulation of the pixels in an image.

ii) Frequency domain methods are based on modifying the Fourier transform of the image.

3. What is contrast stretching? AU MAY/JUNE 2009

Contrast stretching produces an image of higher contrast than the original by darkening the levels below m and brightening the levels above m in the image.

4. What is grey level slicing?

Highlighting a specific range of grey levels in an image often is desired. Applications include

enhancing features such as masses of water in satellite imagery and enhancing flaws in x-ray images.

5. Define image subtraction.

The difference between 2 images f(x,y) and h(x,y) expressed as, g(x,y)=f(x,y)-h(x,y) is obtained

by computing the difference between all pairs of corresponding pixels from f and h.

6. What is the purpose of image averaging? AU NOV/DEC 2007 , APR /MAY 2011

Image averaging reduces noise by averaging a set of noisy images of the same scene. An important application of image averaging is in the field of astronomy, where imaging with very low light levels is routine, causing sensor noise frequently to render single images virtually useless for analysis.

7. What is meant by masking?

A mask is a small 2-D array in which the values of the mask coefficients determine the nature of the process. The enhancement technique based on this type of approach is referred to as mask processing.

8. Give the formula for negative and log transformation.

Negative: s = L - 1 - r

Log: s = c * log(1 + r), where c is a constant, r is the input gray level value and s is the output gray level value.
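Both transformations are simple point operations on a single gray level. The sketch below is illustrative only: the source just says c is a constant, so choosing c such that the brightest input L-1 maps to the brightest output L-1 is an assumption made here, as are the function names.

```python
import math


def negative(r, L=256):
    """Negative transformation s = L - 1 - r."""
    return L - 1 - r


def log_transform(r, L=256):
    """Log transformation s = c * log(1 + r).

    Assumption: c is chosen so that r = L - 1 maps to s = L - 1.
    """
    c = (L - 1) / math.log(1 + (L - 1))
    return c * math.log(1 + r)


print(negative(0), negative(255))   # 255 0
print(round(log_transform(10)))     # dark inputs are pushed toward brighter outputs
```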

9. What is meant by bit plane slicing? AU NOV/DEC 2012

Instead of highlighting gray level ranges, highlighting the contribution made to total image

appearance by specific bits might be desired. Suppose that each pixel in an image is represented by 8 bits.

Imagine that the image is composed of eight 1-bit planes, ranging from bit plane 0 for LSB to bit plane-7

for MSB.


10. Define histogram AU NOV/DEC 2009

The histogram of a digital image with gray levels in the range [0, L-1] shows us the distribution of

grey levels in the image.

11. What is meant by histogram equalization?

Spreading out the frequencies in an image (or equalising the image) is a simple way to improve dark or washed out images. This transformation is called histogram equalization.
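One common textbook way to spread out the gray level frequencies is to map each level through the scaled cumulative histogram, s_k = round((L-1) · cdf(k) / n). The sketch below assumes that formulation and represents the image as a list of rows of integers; the function name `equalize` is illustrative.

```python
def equalize(image, levels=256):
    """Histogram-equalize a grayscale image given as a list of rows of ints."""
    pixels = [p for row in image for p in row]
    n = len(pixels)

    # Histogram: number of occurrences of each gray level
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative histogram
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    # Map each level through the scaled cumulative histogram
    mapping = [round((levels - 1) * c / n) for c in cdf]
    return [[mapping[p] for p in row] for row in image]


dark = [[0, 1], [1, 2]]           # a dark image using only levels 0..2 of 0..7
print(equalize(dark, levels=8))   # [[2, 5], [5, 7]]
```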

14. What is meant by laplacian filter?

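The Laplacian for a function f(x, y) of 2 variables is defined as

$$\nabla^2 f = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2}$$

and its digital approximation is

$$\nabla^2 f = [f(x+1, y) + f(x-1, y) + f(x, y+1) + f(x, y-1)] - 4 f(x, y)$$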
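The discrete Laplacian, the sum of the four direct neighbors minus four times the centre pixel, can be evaluated at an interior pixel as follows. This is a minimal sketch on a list-of-rows image; border handling is deliberately left out.

```python
def laplacian(f, x, y):
    """Discrete Laplacian of image f at interior pixel (x, y)."""
    return (f[x + 1][y] + f[x - 1][y] + f[x][y + 1] + f[x][y - 1]
            - 4 * f[x][y])


spot = [[1, 1, 1],
        [1, 5, 1],
        [1, 1, 1]]
print(laplacian(spot, 1, 1))  # -16: strong response at an isolated bright point
```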

15. Write the steps involved in frequency domain filtering.

To filter an image in the frequency domain:

1. Compute F(u,v) the DFT of the image

2. Multiply F(u,v) by a filter function H(u,v)

3. Compute the inverse DFT of the result
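The three steps above can be sketched in pure Python for very small images. The naive DFT below is written for clarity rather than speed, and it leaves the spectrum uncentered (the zero-frequency term sits at index (0, 0)); both choices, and the function names, are assumptions of this example.

```python
import cmath


def dft2(f, inverse=False):
    """Naive 2-D DFT (or inverse DFT) of a small list-of-rows array."""
    M, N = len(f), len(f[0])
    sign = 1 if inverse else -1
    out = [[0j] * N for _ in range(M)]
    for u in range(M):
        for v in range(N):
            total = 0j
            for x in range(M):
                for y in range(N):
                    angle = sign * 2 * cmath.pi * (u * x / M + v * y / N)
                    total += f[x][y] * cmath.exp(1j * angle)
            out[u][v] = total / (M * N) if inverse else total
    return out


def filter_frequency_domain(f, H):
    F = dft2(f)                                        # 1. compute F(u,v)
    G = [[F[u][v] * H[u][v] for v in range(len(F[0]))]
         for u in range(len(F))]                       # 2. multiply by H(u,v)
    g = dft2(G, inverse=True)                          # 3. inverse DFT
    return [[value.real for value in row] for row in g]


image = [[1, 2], [3, 4]]
keep_dc_only = [[1, 0], [0, 0]]   # passes only the zero-frequency term
print(filter_frequency_domain(image, keep_dc_only))  # every pixel ~ 2.5, the mean
```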


16. Give transfer function of a Butterworth low pass filter.

The transfer function of a Butterworth low pass filter of order n and with cutoff frequency at a distance D0 from the origin is

$$H(u, v) = \frac{1}{1 + [D(u, v) / D_0]^{2n}}$$

where

$$D(u, v) = [(u - M/2)^2 + (v - N/2)^2]^{1/2}$$
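As a sketch, the standard Butterworth low pass form H(u, v) = 1 / (1 + [D(u, v)/D0]^(2n)) can be tabulated over an M × N frequency grid centred at (M/2, N/2). The function name and the list-of-rows layout are assumptions of this example.

```python
import math


def butterworth_lowpass(M, N, d0, n):
    """Return H(u, v) = 1 / (1 + (D(u, v) / d0) ** (2 * n)) as a list of rows."""
    H = []
    for u in range(M):
        row = []
        for v in range(N):
            d = math.hypot(u - M / 2, v - N / 2)  # distance from the centre
            row.append(1.0 / (1.0 + (d / d0) ** (2 * n)))
        H.append(row)
    return H


H = butterworth_lowpass(8, 8, d0=2, n=2)
print(H[4][4])  # 1.0 at the centre of the frequency rectangle
print(H[4][6])  # 0.5 at distance D0 from the centre
```

Unlike an ideal filter, the response falls off smoothly, and it is exactly 0.5 at D(u, v) = D0 regardless of the order n.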

17. What do you mean by Point processing?

Image enhancement in which the result at any point in an image depends only on the gray level at that point is often referred to as point processing. Point processing operations take the form s = T(r), where s refers to the processed image pixel value and r refers to the original image pixel value.

18. What is Image Negatives?

The negative of an image with gray levels in the range [0, L-1] is obtained by using the negative

transformation, which is given by the expression. s = L-1-r Where s is output pixel r is input pixel

19. Define Derivative filter?

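For a function f(x, y), the gradient ∇f at coordinates (x, y) is defined as the vector

$$\nabla f = \begin{bmatrix} \partial f / \partial x \\ \partial f / \partial y \end{bmatrix}$$

where the derivatives are approximated by

$$\frac{\partial f}{\partial x} = f(x+1) - f(x), \qquad \frac{\partial f}{\partial y} = f(y+1) - f(y)$$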

20. Explain spatial filtering?

Spatial filtering is the process of moving the filter mask from point to point in an image. For

linear spatial filter, the response is given by a sum of products of the filter coefficients, and the

corresponding image pixels in the area spanned by the filter mask.


21. What is a Median filter?

The median filter replaces the value of a pixel by the median of the gray levels in the neighborhood

of that pixel.

22. What is maximum filter and minimum filter?

Minimum filter: Set the pixel value to the minimum in the neighbourhood of pixels. The minimum filter is used for finding the darkest points in an image.

Maximum filter: Set the pixel value to the maximum in the neighbourhood of pixels. The maximum filter is used for finding the brightest points in an image.

23. Write the application of sharpening filters?

1. Applications ranging from electronic printing and medical imaging to industrial inspection

2. Autonomous target detection in smart weapons.

24. Name the different types of derivative filters? AU NOV/DEC 2009 ,2008

1. Prewitt operators

2. Roberts cross gradient operators

3. Sobel operators

25. Give transfer function and frequency response of a Gaussian lowpass filter.

The transfer function of a Gaussian lowpass filter with cutoff frequency at a distance D0 from the origin is

$$H(u, v) = e^{-D^2(u, v) / 2 D_0^2}$$

where

$$D(u, v) = [(u - M/2)^2 + (v - N/2)^2]^{1/2}$$

26. Give transfer function and frequency response of a high pass filter.

The transfer function of an ideal high pass filter with cutoff frequency at a distance D0 from the origin is

$$H(u, v) = \begin{cases} 0 & \text{if } D(u, v) \le D_0 \\ 1 & \text{if } D(u, v) > D_0 \end{cases}$$

where

$$D(u, v) = [(u - M/2)^2 + (v - N/2)^2]^{1/2}$$


27. Give transfer function and frequency response of a Gaussian high pass filter.

The transfer function of a Gaussian high pass filter with cutoff frequency at a distance D0 from the origin is

$$H(u, v) = 1 - e^{-D^2(u, v) / 2 D_0^2}$$

where

$$D(u, v) = [(u - M/2)^2 + (v - N/2)^2]^{1/2}$$

28. Explain the power law transformation

Power law transformations have the following form:

s = c * r^γ

where c and γ are positive constants. They map a narrow range of dark input values into a wider range of output values, or vice versa. Varying γ gives a whole family of curves.
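A short sketch shows how the exponent changes the mapping. It assumes gray levels normalized to the range [0, 1]; the function name `power_law` is illustrative.

```python
def power_law(r, gamma, c=1.0):
    """Power law (gamma) transformation s = c * r ** gamma for r in [0, 1]."""
    return c * r ** gamma


print(power_law(0.25, 0.5))  # 0.5: gamma < 1 expands dark values
print(power_law(0.5, 2))     # 0.25: gamma > 1 compresses dark values
```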


29. If all the pixels in an image are shuffled, will there be any change in the histogram? Justify your

answer. (Apr/May 2017)

No, The histogram of a digital image with gray levels in the range [0, L-1] shows us the number of

occurrence of the gray levels and it is not concerned with the location of pixels.
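The claim is easy to verify experimentally: shuffle the pixels and compare gray level counts. The helper names below are illustrative, and the fixed random seed is only there to make the run repeatable.

```python
import random
from collections import Counter


def histogram(image):
    """Count occurrences of each gray level, ignoring pixel positions."""
    return Counter(p for row in image for p in row)


def shuffle_pixels(image, seed=0):
    """Return a copy of the image with its pixels randomly rearranged."""
    rows, cols = len(image), len(image[0])
    flat = [p for row in image for p in row]
    random.Random(seed).shuffle(flat)
    return [flat[i * cols:(i + 1) * cols] for i in range(rows)]


image = [[0, 0, 1], [2, 2, 2]]
shuffled = shuffle_pixels(image)
print(histogram(shuffled) == histogram(image))  # True
```

The same experiment illustrates why two different images can share one histogram.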

30. Whether two different images can have same histogram? Justify your answer (Nov/Dec 2017)

Yes. The histogram of a digital image with gray levels in the range [0, L-1] shows us the number of occurrences of each gray level. Two different images can have the same histogram if the number of occurrences of each gray level value is the same in both images. For example, an eight-bit image has 256 gray levels with values ranging from 0 to 255; the number of occurrences of each gray level value from 0 to 255 must be the same in both images.

31. For an eight bit image, write the expression for obtaining the negative of the input image.

(Nov/Dec 2017)

The negative of an image with gray levels in the range [0, 255] is obtained by using the negative transformation, which is given by the expression s = 256 - 1 - r = 255 - r, where s is the output pixel value and r is the input pixel value.


UNIT III IMAGE RESTORATION AND SEGMENTATION

1. What is meant by Image Restoration?

Restoration attempts to reconstruct or recover an image that has been degraded by using prior knowledge of the degrading phenomenon.

2. What are the two properties in Linear Operator?

Additivity

Homogeneity

3. Explain additivity property in Linear Operator?

H[f1(x,y)+f2(x,y)]=H[f1(x,y)]+H[f2(x,y)]

The additivity property says that if H is a linear operator, the response to a sum of two inputs is equal to the sum of the two responses.

4. How a degradation process is modeled? AU MAY/JUNE 2013

The degradation process is modeled as a system operator H which, together with an additive white noise term η(x, y), operates on an input image f(x, y) to produce a degraded image g(x, y), i.e. g(x, y) = H[f(x, y)] + η(x, y).

5. Explain homogeneity property in Linear Operator?

H[k1 f1(x,y)] = k1 H[f1(x,y)]

The homogeneity property says that the response to a constant multiple of any input is equal to the response to that input multiplied by the same constant.

8. Define circulant matrix?

A square matrix, in which each row is a circular shift of the preceding row and the first row is a

circular shift of the last row, is called circulant matrix.
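A circulant matrix is fully determined by its first row, which the following sketch makes concrete. The function name is illustrative, and it assumes each row is the previous row shifted one position to the right.

```python
def circulant(first_row):
    """Build a circulant matrix: row i is first_row circularly shifted right by i."""
    n = len(first_row)
    return [[first_row[(j - i) % n] for j in range(n)] for i in range(n)]


for row in circulant([1, 2, 3]):
    print(row)
# [1, 2, 3]
# [3, 1, 2]
# [2, 3, 1]
```

Shifting the last row [2, 3, 1] once more gives back the first row [1, 2, 3], as the definition requires.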

9. What is the concept of the algebraic approach?

The concept of the algebraic approach is to estimate the original image by minimizing a predefined criterion of performance.

10. What are the two methods of algebraic approach?

o Unconstrained restoration approach

o Constrained restoration approach

11. Define Gray-level interpolation?

Gray-level interpolation deals with the assignment of gray levels to pixels in the spatially

transformed image

12. What is meant by Noise probability density function?

The spatial noise descriptor is the statistical behavior of gray level values in the noise component

of the model.


13. Why the restoration is called as unconstrained restoration?

In the absence of any knowledge about the noise ‘n’, a meaningful criterion function is to seek an f^ such that H f^ approximates g in a least-squares sense, by assuming the noise term is as small as possible. Here H = system operator, f^ = estimated input image, g = degraded image.

14. Which is the most frequent method to overcome the difficulty to formulate the spatial relocation

of pixels?

Tiepoints are the most frequently used method; they are subsets of pixels whose locations in the input (distorted) and output (corrected) images are known precisely.

15. What are the three methods of estimating the degradation function?

1. Observation

2. Experimentation

3. Mathematical modeling.

16. What are the types of noise models?

Gaussian noise

Rayleigh noise

Erlang (gamma) noise

Exponential noise

Uniform noise

Impulse noise

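17. Give the relation for Gaussian noise? AU MAY /JUNE 2013

Gaussian noise: The PDF of a Gaussian random variable z is given by

$$p(z) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-(z - \mu)^2 / 2\sigma^2}$$

where z represents gray level, μ is the mean of z and σ is its standard deviation.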

18. Give the relation for rayleigh noise?

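Rayleigh noise: The PDF is

$$p(z) = \begin{cases} \dfrac{2}{b}(z - a)\, e^{-(z - a)^2 / b} & \text{for } z \ge a \\ 0 & \text{for } z < a \end{cases}$$

Mean: $\mu = a + \sqrt{\pi b / 4}$

Variance: $\sigma^2 = \dfrac{b(4 - \pi)}{4}$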


19. Give the relation for Gamma noise?

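Gamma noise: The PDF, where a > 0 and b is a positive integer, is

$$p(z) = \begin{cases} \dfrac{a^b z^{b-1}}{(b-1)!}\, e^{-az} & \text{for } z \ge 0 \\ 0 & \text{for } z < 0 \end{cases}$$

Mean: $\mu = \dfrac{b}{a}$

Variance: $\sigma^2 = \dfrac{b}{a^2}$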

20. Give the relation for Exponential noise?

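Exponential noise: The PDF, for a > 0, is

$$p(z) = \begin{cases} a\, e^{-az} & \text{for } z \ge 0 \\ 0 & \text{for } z < 0 \end{cases}$$

Mean: $\mu = \dfrac{1}{a}$

Variance: $\sigma^2 = \dfrac{1}{a^2}$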

21. Give the relation for Uniform noise?

Uniform noise: The PDF is

$$p(z) = \begin{cases} \dfrac{1}{b - a} & \text{if } a \le z \le b \\ 0 & \text{otherwise} \end{cases}$$

Mean: $\mu = \dfrac{a + b}{2}$

Variance: $\sigma^2 = \dfrac{(b - a)^2}{12}$

22. Give the relation for Impulse noise?

Impulse noise: The PDF is

$$p(z) = \begin{cases} P_a & \text{for } z = a \\ P_b & \text{for } z = b \\ 0 & \text{otherwise} \end{cases}$$

23. What is inverse filtering?

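The simplest approach to restoration is direct inverse filtering. Given the degradation model

$$G(u, v) = F(u, v) H(u, v) + N(u, v)$$

the estimate of the original image is obtained by dividing by the degradation function:

$$\hat{F}(u, v) = \frac{G(u, v)}{H(u, v)} = F(u, v) + \frac{N(u, v)}{H(u, v)}$$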

24. What is pseudo inverse filter? AU NOV/DEC 2007

It is the stabilized version of the inverse filter. For a linear shift invariant system with frequency response H(u, v), the pseudo inverse filter is defined as

$$H^{-}(u, v) = \begin{cases} \dfrac{1}{H(u, v)} & \text{if } H(u, v) \ne 0 \\ 0 & \text{if } H(u, v) = 0 \end{cases}$$
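The pseudo inverse filter can be sketched element by element on frequency-domain samples. In the code below, the tolerance `eps` that decides when H(u, v) counts as zero is an assumption added for numerical safety (the definition itself tests for exact zero), and the function names are illustrative.

```python
def pseudo_inverse(H, eps=1e-6):
    """Return 1/H(u, v) where |H(u, v)| > eps and 0 elsewhere."""
    return [[1 / h if abs(h) > eps else 0 for h in row] for row in H]


def restore(G, H, eps=1e-6):
    """Estimate F(u, v) by multiplying G(u, v) with the pseudo inverse of H(u, v)."""
    H_inv = pseudo_inverse(H, eps)
    return [[g * hi for g, hi in zip(g_row, h_row)]
            for g_row, h_row in zip(G, H_inv)]


H = [[2, 0], [0.5, 1]]   # one frequency sample is completely blocked
G = [[4, 7], [1, 3]]
print(restore(G, H))     # [[2.0, 0], [2.0, 3.0]]
```

Where H(u, v) is zero the estimate is simply set to zero instead of dividing by zero, which is what keeps this filter stable.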

25. What is meant by least mean square filter?

The limitation of the inverse and pseudo inverse filters is that they are very sensitive to noise. Wiener filtering is a method of restoring images in the presence of blur as well as noise.

26. Give the equation for singular value decomposition of an image?

Any matrix A can be written as the product of two orthogonal square matrices, U and V, and a matrix Σ containing the sorted singular values on its main diagonal:

$$A = U \Sigma V^T$$

This equation is called the singular value decomposition of an image.

27. Write the properties of Singular value Decomposition(SVD)?

The SVD transform varies drastically from image to image.

The SVD transform gives best energy packing efficiency for any given image.

The SVD transform is useful in the design of filters, in finding the least-squares, minimum-norm solution of linear equations, and in finding the rank of large matrices.

28. What is meant by blind image restoration? AU MAY /JUNE 2009

Information about the degradation must be extracted from the observed image either explicitly or implicitly. This task is called blind image restoration.

29. What are the two approaches for blind image restoration?

(i) Direct measurement (ii)Indirect estimation

30. What is meant by Direct measurement?

In direct measurement, the blur impulse response and noise levels are first estimated from an observed image, and these parameters are then used in the restoration.

31. What is blur impulse response and noise levels?

Blur impulse response: This parameter is measured by isolating an image of a suspected object within a picture.

Noise levels: The noise of an observed image can be estimated by measuring the image covariance over a region of constant background luminance.

32. What is meant by indirect estimation?

Indirect estimation methods employ temporal or spatial averaging either to obtain a restoration or to obtain key elements of an image restoration algorithm.

33. Give the difference between Enhancement and Restoration? Apr/May 2017

S.No | Image Enhancement | Image Restoration

1. | It gives a better visual representation. | It removes the effects of the sensing environment.

2. | No model is required. | A mathematical model of the degradation is required.

3. | It is a subjective process. | It is an objective process.

4. | Enhancement techniques are based primarily on the pleasing aspects they might present to the viewer. For example: contrast stretching. | Removal of image blur by applying a deblurring function is considered a restoration technique.

34. What is segmentation? AU MAY/JUNE 2012

Segmentation subdivides an image into its constituent regions or objects. The level to which the subdivision is carried depends on the problem being solved. That is, segmentation should stop when the objects of interest in an application have been isolated.

35. Write the applications of segmentation.

* Detection of isolated points.

* Detection of lines and edges in an image.

36. What are the three types of discontinuity in digital image?

Points, lines and edges.

37. How the derivatives are obtained in edge detection during formulation?

The first derivative at any point in an image is obtained by using the magnitude of the gradient at

that point. Similarly the second derivatives are obtained by using the laplacian.

38. Write about linking edge points.

The approach for linking edge points is to analyze the characteristics of pixels in a small

neighborhood (3x3 or 5x5) about every point (x,y)in an image that has undergone edge detection.

All points that are similar are linked, forming a boundary of pixels that share some common

properties.

39. What are the two properties used for establishing similarity of edge pixels?

(1) The strength of the response of the gradient operator used to produce the edge pixel.

(2) The direction of the gradient.

40. What is edge? MAY /APR 2011

An edge is a set of connected pixels that lie on the boundary between two regions. In practice, edges are more closely modeled as having a ramp-like profile. The slope of the ramp is inversely proportional to the degree of blurring in the edge.

41. Give the properties of the second derivative around an edge? MAY JUNE 2013

1. The sign of the second derivative can be used to determine whether an edge pixel lies on the

dark or light side of an edge.

2. It produces two values for every edge in an image.

3. An imaginary straight line joining the extreme positive and negative values of the second

derivative would cross zero near the midpoint of the edge.

42. What is meant by object point and background point?

One way to extract the objects from the background is to select a threshold T that separates the two modes of the histogram. Then any point (x, y) for which f(x, y) > T is called an object point. Otherwise the point is called a background point.
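The object/background rule maps directly onto a one-line comparison per pixel. In this minimal sketch, object points are marked 1 and background points 0; the function name and the sample values are illustrative.

```python
def threshold(image, T):
    """Label each pixel 1 (object point) if f(x, y) > T, else 0 (background point)."""
    return [[1 if p > T else 0 for p in row] for row in image]


image = [[12, 200, 15],
         [180, 190, 20]]
print(threshold(image, T=100))  # [[0, 1, 0], [1, 1, 0]]
```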

43. What is global, Local and dynamic or adaptive threshold?

When the threshold T depends only on f(x, y), the threshold is called global. If T depends on both f(x, y) and p(x, y), it is called local. If, in addition, T depends on the spatial coordinates x and y, the threshold is called dynamic or adaptive. Here f(x, y) is the original image and p(x, y) denotes some local property of the point (x, y).


44. Define region growing?

Region growing is a procedure that groups pixels or subregions into larger regions based on predefined criteria. The basic approach is to start with a set of seed points and from there grow regions by appending to each seed those neighbouring pixels that have properties similar to the seed.
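The procedure can be sketched in Python as below. This is a minimal sketch under stated assumptions: a single seed, 4-connectivity, and a similarity criterion of "gray level within `tol` of the seed value"; the names `region_grow` and `tol` are illustrative.

```python
from collections import deque


def region_grow(image, seed, tol):
    """Grow a region from `seed` (row, col), adding 4-connected neighbours
    whose gray level differs from the seed's gray level by at most `tol`."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= tol):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


# Hypothetical image: a dark 2x2 block in the top-left corner.
image = [
    [10, 11, 50],
    [12, 10, 52],
    [51, 53, 11],
]
region = region_grow(image, seed=(0, 0), tol=5)
```

Note that the pixel at (2, 2) has a similar gray level but is not connected to the seed through similar pixels, so it is not appended.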

45. Specify the steps involved in splitting and merging? MAY JUNE 2013

1. Split into 4 disjoint quadrants any region Ri for which P(Ri) = FALSE.

2. Merge any adjacent regions Rj and Rk for which P(Rj ∪ Rk) = TRUE.

3. Stop when no further merging or splitting is possible.
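The splitting step alone can be sketched in Python as follows; the merge step is omitted for brevity. The predicate P(R) is assumed here to be "max gray level minus min gray level is at most `tol`", and the image is assumed to be square with a power-of-two side. Both are illustrative choices.

```python
def split(image, r0, c0, size, tol, out):
    """Recursively split a square region into 4 quadrants while
    P(R) = (max - min <= tol) is FALSE; homogeneous blocks are collected
    in `out` as (row, col, size) tuples."""
    values = [image[r][c]
              for r in range(r0, r0 + size)
              for c in range(c0, c0 + size)]
    if size == 1 or max(values) - min(values) <= tol:
        out.append((r0, c0, size))
        return
    half = size // 2
    for dr in (0, half):
        for dc in (0, half):
            split(image, r0 + dr, c0 + dc, half, tol, out)


# Hypothetical 4x4 image with a bright region in the upper right.
image = [
    [1, 1, 9, 9],
    [1, 1, 9, 9],
    [1, 1, 1, 9],
    [1, 1, 1, 1],
]
blocks = []
split(image, 0, 0, 4, tol=0, out=blocks)
```

A full split-and-merge implementation would then merge adjacent blocks whose union still satisfies P.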

46. What is meant by markers?

An approach used to control oversegmentation is based on markers. A marker is a connected component belonging to an image. There are internal markers, associated with objects of interest, and external markers, associated with the background.

47. Mention two drawbacks of inverse filter.(Nov/Dec 2017)

The disadvantages of the inverse filter are:

· It is not defined at frequencies where H(u,v) is 0. Where H(u,v) is 0 or very small at certain frequency pairs (u,v), the term N(u,v)/H(u,v) becomes large. Note that H(u,v) is typically a low pass filter, whereas N(u,v) is an all pass function. Therefore, the term N(u,v)/H(u,v) can be huge, and inverse filtering fails in that case. Here H(u,v) is the degradation function and N(u,v) is the noise spectrum.

· The inverse filter is very sensitive to the presence of noise.

48. Which filter will be effective in minimizing the impact of salt and pepper noise in an

image?(Nov/Dec 2017)

Median filtering is a nonlinear operation used in image processing to reduce "salt and pepper" noise. The

median is calculated by first sorting all the pixel values from the surrounding neighborhood into

numerical order and then replacing the pixel being considered with the middle pixel value. (If the neighborhood under consideration contains an even number of pixels, the average of the two middle pixel values is used.)
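A minimal Python sketch of median filtering is given below. The function name `median_filter` and the border handling (using only the neighbours that fall inside the image) are assumptions made for illustration; other border strategies are possible.

```python
from statistics import median


def median_filter(image, size=3):
    """Replace each pixel by the median of its size x size neighbourhood.

    Border pixels use only the neighbours that fall inside the image, so a
    window can hold an even number of pixels; statistics.median then
    averages the two middle values.
    """
    rows, cols = len(image), len(image[0])
    k = size // 2
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [image[i][j]
                      for i in range(max(0, r - k), min(rows, r + k + 1))
                      for j in range(max(0, c - k), min(cols, c + k + 1))]
            out[r][c] = median(window)
    return out


# Hypothetical image with one "salt" pixel (255) and one "pepper" pixel (0).
image = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 0],
]
filtered = median_filter(image)
```

Both impulse values are replaced by the surrounding gray level, which is why the median filter suits salt and pepper noise.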

UNIT IV WAVELETS AND IMAGE COMPRESSION

1. What is image compression?

Image compression refers to the process of reducing the amount of data required to represent a given quantity of information in a digital image. The basis of the reduction process is the removal of redundant data.

2. What is Data Compression?

Data compression requires the identification and extraction of source redundancy. In other words,

data compression seeks to reduce the number of bits used to store or transmit information.

3. What are two main types of Data compression? AU MAY/JUNE 2012

(i) Lossless compression can recover the exact original data after compression. It is used mainly

for compressing database records, spreadsheets or word processing files, where exact replication of the

original is essential.

(ii) Lossy compression will result in a certain loss of accuracy in exchange for a substantial

increase in compression. Lossy compression is more effective when used to compress graphic images and

digitised voice where losses outside visual or aural perception can be tolerated.

4. What is the need for Compression? AU APRIL/MAY 2011

In terms of storage, the capacity of a storage device can be effectively increased with methods

that compress a body of data on its way to a storage device and decompress it when it is retrieved.

In terms of communications, the bandwidth of a digital communication link can be effectively increased by compressing data at the sending end and decompressing it at the receiving end.

At any given time, the ability of the Internet to transfer data is fixed. Thus, if data can effectively

be compressed wherever possible, significant improvements of data throughput can be achieved.

Many files can be combined into one compressed document making sending easier.

5. What are different Compression Methods?

Run Length Encoding (RLE), arithmetic coding, Huffman coding and transform coding.

6. Define coding redundancy.

If the gray levels of an image are coded in a way that uses more code symbols than absolutely necessary to represent each gray level, the resulting image is said to contain coding redundancy.

7. Define interpixel redundancy?

The value of any given pixel can be reasonably predicted from the values of its neighbors, so the information carried by an individual pixel is relatively small. Much of the visual contribution of a single pixel to an image is therefore redundant. This is also called spatial redundancy, geometric redundancy, or interframe redundancy.

8. What is run length coding?

Run-length Encoding, or RLE is a technique used to reduce the size of a repeating string of

characters. This repeating string is called a run; typically RLE encodes a run of symbols into two

bytes, a count and a symbol. RLE can compress any type of data regardless of its information

content, but the content of data to be compressed affects the compression ratio. Compression is

normally measured with the compression ratio.

9. Define compression ratio. AU NOV/DEC 2009

Compression ratio = original size / compressed size, usually expressed as a ratio to 1 (for example, 4:1).
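As a small worked example in Python, the ratio can be computed as follows. The function name and the byte counts are illustrative assumptions.

```python
def compression_ratio(original_size, compressed_size):
    """Return the compression ratio n, to be read as n:1."""
    return original_size / compressed_size


# Hypothetical sizes: a 256x256 8-bit image (65536 bytes) compressed to 16384 bytes.
ratio = compression_ratio(original_size=65536, compressed_size=16384)
```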

10. Define psycho visual redundancy?

In normal visual processing, certain information has less relative importance than other information. Such information is said to be psychovisually redundant.

11. Define encoder

An encoder has two components: A) a source encoder and B) a channel encoder. The source encoder is responsible for removing the coding, interpixel and psychovisual redundancy.

12. Define source encoder

Source encoder performs three operations

1) Mapper: transforms the input data into a (usually non-visual) format designed to reduce the interpixel redundancy.

2) Quantizer: reduces the psychovisual redundancy of the input image. This step is omitted if the system is error free.

3) Symbol encoder: reduces the coding redundancy. This is the final stage of the encoding process.

13. Define channel encoder

The channel encoder reduces the impact of channel noise by inserting redundant bits into the source encoded data. Eg: Hamming code.

14. What are the types of decoder?

Source decoder: has two components

a) Symbol decoder: performs the inverse operation of the symbol encoder.

b) Inverse mapper: performs the inverse operation of the mapper.

Channel decoder: this is omitted if the system is error free.

15. What are the operations performed by error free compression? AU MAY /JUNE 2013

1) Devising an alternative representation of the image in which its interpixel redundancies are reduced.

2) Coding the representation to eliminate coding redundancy.

16. What is Variable Length Coding?

Variable Length Coding is the simplest approach to error free compression. It reduces only the

coding redundancy. It assigns the shortest possible codeword to the most probable gray levels.

17. Define Huffman coding AU NOV/DEC 2013

Huffman coding is a popular technique for removing coding redundancy.

When coding the symbols of an information source individually, the Huffman code yields the smallest possible number of code symbols per source symbol.
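A minimal Python sketch of Huffman code construction is shown below. The symbol names and probabilities are illustrative assumptions, and the exact bit patterns depend on how ties are broken; only the code word lengths are determined by the probabilities here.

```python
import heapq


def huffman_code(probabilities):
    """Build a binary Huffman code from a {symbol: probability} mapping."""
    # Heap entries: (probability, tie_breaker, {symbol: partial_code}).
    # The unique tie_breaker keeps the dicts from ever being compared.
    heap = [(p, i, {s: ""}) for i, (s, p) in enumerate(probabilities.items())]
    heapq.heapify(heap)
    counter = len(heap)
    while len(heap) > 1:
        # Merge the two least probable nodes, prefixing their codes with 0 and 1.
        p1, _, codes1 = heapq.heappop(heap)
        p2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (p1 + p2, counter, merged))
        counter += 1
    return heap[0][2]


# Hypothetical four-symbol source.
probabilities = {"a1": 0.5, "a2": 0.25, "a3": 0.125, "a4": 0.125}
codes = huffman_code(probabilities)
average_length = sum(probabilities[s] * len(codes[s]) for s in probabilities)
```

For this source the average code length is 1.75 bits per symbol, compared with 2 bits for a fixed-length code.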

18. Define Block code

Each source symbol is mapped into a fixed sequence of code symbols (a code word), so the code is called a block code.

19. Define instantaneous code

A code in which no code word is a prefix of any other code word is called an instantaneous or prefix code.

20. Define uniquely decodable code

A code is said to be uniquely decodable if any string of code symbols can be decoded in only one way, i.e., no code word can be mistaken for a combination of other code words.

21. Define B2 code

Each code word is made up of continuation bits c and information bits, which are binary numbers. This is called a B code. It is called a B2 code when two information bits are used per continuation bit.

22. Define the procedure for Huffman shift

List all the source symbols along with their probabilities in descending order. Divide the total number of symbols into blocks of equal size. Sum the probabilities of all the source symbols outside the reference block.

Now apply the Huffman procedure to the reference block, including the prefix source symbol. The code words for the remaining symbols can be constructed by means of one or more prefix codes followed by the reference block code, as in the case of the binary shift code.

23. Define arithmetic coding

In arithmetic coding, a one-to-one correspondence between source symbols and code words does not exist. Instead, a single arithmetic code word is assigned to an entire sequence of source symbols. The code word defines an interval of real numbers between 0 and 1.
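The interval narrowing used in arithmetic coding, where each symbol shrinks a working interval inside [0, 1) in proportion to its probability, can be sketched in Python as below. The function name, the four-symbol source and the message are illustrative assumptions; exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction


def arithmetic_interval(message, probabilities):
    """Return the final [low, high) interval that encodes `message`."""
    # Assign each symbol a sub-range of [0, 1) proportional to its probability.
    ranges, start = {}, Fraction(0)
    for symbol, p in probabilities.items():
        ranges[symbol] = (start, start + p)
        start += p
    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        width = high - low
        s_low, s_high = ranges[symbol]
        # Narrow the current interval to the sub-range of this symbol.
        low, high = low + width * s_low, low + width * s_high
    return low, high


# Hypothetical source and message.
probabilities = {
    "a1": Fraction(1, 5),
    "a2": Fraction(1, 5),
    "a3": Fraction(2, 5),
    "a4": Fraction(1, 5),
}
low, high = arithmetic_interval(["a1", "a2", "a3", "a3", "a4"], probabilities)
```

Any number inside the final interval, here [0.06752, 0.0688), can serve as the code word for the whole sequence.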

24. What is bit plane Decomposition?

An effective technique for reducing an image’s interpixel redundancies is to process the image’s

bit planes individually. This technique is based on the concept of decomposing multilevel images into a

series of binary images and compressing each binary image via one of several well-known binary

compression methods.
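Bit-plane decomposition can be sketched in Python as below, assuming 8-bit gray levels and plain binary (not Gray code) representation. The function name `bit_planes` is illustrative.

```python
def bit_planes(image, bits=8):
    """Return a list of binary images; planes[k] holds bit k of every pixel
    (k = 0 is the least significant bit)."""
    return [[[(value >> k) & 1 for value in row] for row in image]
            for k in range(bits)]


# Hypothetical 2x2 8-bit image.
image = [[0, 127], [128, 255]]
planes = bit_planes(image)

# The original image is recovered by weighting each plane by 2**k.
restored = [[sum(planes[k][r][c] << k for k in range(8))
             for c in range(2)] for r in range(2)]
```

Each of the eight binary images can then be compressed separately with a binary compression method.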

25. What are three categories of constant area coding?

The three categories of constant area coding are

All white

All black

Mixed intensity.

The most probable (most frequently occurring) category is assigned the 1-bit code '0'; the other two categories are assigned the 2-bit codes '10' and '11'.

26. List the image compression standards

Binary image compression standards

Continuous tone still image compression standards

Video compression standards

27. How effectiveness of quantization can be improved?

Introducing an enlarged quantization interval around zero, called a dead zone.

Adapting the size of the quantization intervals from scale to scale. In either case, the selected

quantization intervals must be transmitted to the decoder with the encoded image bit stream.

28. What are the coding systems in JPEG? AU APRIL/MAY 2011

1. A lossy baseline coding system, which is based on the DCT and is adequate for most compression

applications.

2. An extended coding system for greater compression, higher precision or progressive

reconstruction applications.

3. A lossless independent coding system for reversible compression.

29. What is JPEG?

The acronym expands to "Joint Photographic Experts Group". JPEG became an international standard in 1992. It works well with color and grayscale images and is used in many applications, e.g., satellite and medical imaging.

30. What are the basic steps in JPEG? AU NOV/DEC 2008

The Major Steps in JPEG Coding involve:

1. DCT (Discrete Cosine Transformation)

2. Quantization

3. Zigzag scan

4. DPCM on the DC component

5. RLE on the AC components

6. Entropy coding
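The zigzag scan step can be sketched in Python as below. The function name `zigzag_order` is an assumed name; the traversal follows the usual JPEG pattern, starting at the DC coefficient (0, 0) and moving along anti-diagonals toward the highest frequency.

```python
def zigzag_order(n=8):
    """Return the (row, col) visiting order of a zigzag scan over an n x n block."""
    order = []
    for s in range(2 * n - 1):
        # All positions on the anti-diagonal row + col == s, row increasing.
        diagonal = [(r, s - r) for r in range(max(0, s - n + 1), min(s, n - 1) + 1)]
        # Even diagonals are traversed from bottom-left to top-right.
        if s % 2 == 0:
            diagonal.reverse()
        order.extend(diagonal)
    return order


def zigzag_scan(block):
    """Flatten a square block of quantized coefficients in zigzag order."""
    return [block[r][c] for r, c in zigzag_order(len(block))]


# Hypothetical 3x3 block, used only to keep the example small.
scanned = zigzag_scan([[1, 2, 6], [3, 5, 7], [4, 8, 9]])
```

The scan places low-frequency coefficients first, so the zeros produced by quantization tend to form long runs for the RLE step.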

31. Differentiate JPEG and JPEG2000 standard. AU APRIL/MAY 2011

JPEG is a DCT-based compression standard. JPEG 2000 is a wavelet-based compression standard.

32. When is a code said to be a prefix code? Mention one advantage of prefix code. (Nov/Dec 2017)

1. A prefix code is a variable-length source coding scheme in which no code word is the prefix of any other code word.

2. A prefix code is uniquely decodable: given a complete and accurate sequence, a receiver can identify each word without requiring a special marker between words.

3. The converse is not true, i.e., not all uniquely decodable codes are prefix codes.
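The prefix property and its decoding advantage can be illustrated with a short Python sketch. The function names and the example code words are assumptions chosen for illustration.

```python
def is_prefix_code(codewords):
    """Return True if no code word is a prefix of another code word."""
    words = sorted(codewords)
    # After sorting, a word that is a prefix of another word is always
    # immediately followed by a word that starts with it.
    return not any(b.startswith(a) for a, b in zip(words, words[1:]))


def decode(bits, code_to_symbol):
    """Decode a bit string symbol by symbol, with no markers between words."""
    symbols, current = [], ""
    for bit in bits:
        current += bit
        if current in code_to_symbol:
            symbols.append(code_to_symbol[current])
            current = ""
    return symbols


# Hypothetical prefix code and message.
table = {"0": "a", "10": "b", "110": "c", "111": "d"}
decoded = decode("010110", table)
```

Because no code word is a prefix of another, the decoder can emit a symbol as soon as its last bit arrives.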

33. What is run length coding? (Apr/May 2017)

Run-length encoding (RLE) is one of the simplest data compression methods. The basic principle is that a run of identical characters is replaced with the count of those characters and a single instance of the character. An example helps to illustrate this.

Ex.: Consider a text source: R T A A A A S D E E E E E .

The RLE representation is: R T *4A S D *5E
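The example above can be reproduced with a short Python sketch. The `*` flag follows the notation of the example; the function name and the choice to flag only runs of at least `min_run` = 4 symbols are assumptions, since the example does not state a threshold.

```python
from itertools import groupby


def rle_encode(symbols, min_run=4, flag="*"):
    """Encode runs of at least `min_run` identical symbols as flag+count+symbol;
    shorter runs are copied unchanged."""
    tokens = []
    for symbol, group in groupby(symbols):
        count = len(list(group))
        if count >= min_run:
            tokens.append(f"{flag}{count}{symbol}")
        else:
            tokens.extend([symbol] * count)
    return " ".join(tokens)


encoded = rle_encode("RTAAAASDEEEEE")
```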

34. What are the operations performed by error free compression? (Apr/May 2017)

1. Devising an alternative representation of the image in which its interpixel redundancies are reduced.

2. Coding the representation to reduce coding redundancy.

UNIT V IMAGE REPRESENTATION AND RECOGNITION

1. What are the two principal steps involved in marker selection?

The two steps are

1. Preprocessing

2. Definition of a set of criteria that markers must satisfy.

2. Define chain codes? AU APRIL/MAY 2011

Chain codes are used to represent a boundary by a connected sequence of straight line segments of specified length and direction. Typically this representation is based on the 4- or 8-connectivity of the segments. The direction of each segment is coded by using a numbering scheme.
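A minimal Python sketch of a 4-directional chain code follows. The numbering scheme (0 = right, 1 = up, 2 = left, 3 = down, with y increasing upward) and the function name are assumptions; the boundary is assumed to be closed and given as an ordered list of (x, y) points one unit apart.

```python
# Assumed numbering scheme: 0 = right, 1 = up, 2 = left, 3 = down.
DIRECTIONS_4 = {(1, 0): 0, (0, 1): 1, (-1, 0): 2, (0, -1): 3}


def chain_code(points):
    """Return the 4-directional chain code of a closed boundary given as an
    ordered list of (x, y) points spaced one unit apart."""
    code = []
    # Pair each point with its successor, wrapping back to the start.
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        code.append(DIRECTIONS_4[(x1 - x0, y1 - y0)])
    return code


# Hypothetical boundary: a unit square traversed counter-clockwise.
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
code = chain_code(square)
```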

3. What are the demerits of chain code?

* The resulting chain code tends to be quite long.

* Any small disturbance along the boundary due to noise causes changes in the code that may not be related to the shape of the boundary.

4. What is thinning or skeletonizing algorithm?(Nov/Dec 2016)

An important approach to representing the structural shape of a plane region is to reduce it to a graph. This reduction may be accomplished by obtaining the skeleton of the region via a thinning (also called skeletonizing) algorithm. Thinning algorithms play a central role in a broad range of problems in image processing, ranging from automated inspection of printed circuit boards to counting of asbestos fibres in air filters.

5. Specify the various image representation approaches

Chain codes

Polygonal approximation

Boundary segments

6. What is polygonal approximation method ?

Polygonal approximation is an image representation approach in which a digital boundary can be approximated with arbitrary accuracy by a polygon. For a closed curve, the approximation is exact when the number of segments in the polygon is equal to the number of points in the boundary, so that each pair of adjacent points defines a segment in the polygon.

7. Specify the various polygonal approximation methods

Minimum perimeter polygons

Merging techniques

Splitting techniques

8. Name few boundary descriptors

Simple descriptors

Shape numbers

Fourier descriptors

9. List the detection methods for boundary detection

1. Searching near an approximation

2. Least Squares Fitting

3. Hough Transform

4. Graph Searching

5. Dynamic Programming

6. Contour Following

10. List the classification of representation techniques

11. Define texture.

An image texture is a set of metrics calculated in image processing designed to quantify the

perceived texture of an image. Image texture gives us information about the spatial arrangement

of color or intensities in an image or selected region of an image.

12. Does the use of chain code compress the description information of an object

contour?(Apr/May 2017)

Chain codes are the most size-efficient representations of rasterised binary shapes and contours.

A chain code is a lossless compression algorithm for monochrome images. The basic principle of

chain codes is to separately encode each connected component, or "blob", in the image. For each

such region, a point on the boundary is selected and its coordinates are transmitted. The encoder

then moves along the boundary of the region and, at each step, transmits a symbol representing

the direction of this movement. This continues until the encoder returns to the starting position, at

which point the blob has been completely described, and encoding continues with the next blob in

the image. This encoding method is particularly effective for images consisting of a reasonably

small number of large connected components.

13. Define length of a boundary.

The length of a boundary: the number of pixels along a boundary gives a rough approximation of

its length.

14. Define shape numbers

The shape number of a boundary is defined as the first difference of smallest magnitude.

The order n of a shape number is defined as the number of digits in its representation
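The definition can be sketched in Python as below, assuming a 4-directional chain code treated as a circular sequence. The function names and the sample chain code are illustrative.

```python
def first_difference(code, directions=4):
    """Circular first difference: the number of counter-clockwise direction
    changes between consecutive elements (the first is compared with the last)."""
    return [(code[i] - code[i - 1]) % directions for i in range(len(code))]


def shape_number(code, directions=4):
    """Return the rotation of the first difference that forms the smallest integer."""
    diff = first_difference(code, directions)
    rotations = [diff[i:] + diff[:i] for i in range(len(diff))]
    # All rotations have equal length, so list comparison orders them as integers.
    return min(rotations)


# Hypothetical 4-directional chain code of a closed boundary.
code = [0, 0, 3, 2, 2, 1]
difference = first_difference(code)
number = shape_number(code)
order = len(number)
```

For this chain code the first difference is 303303, the shape number is 033033, and the order is 6.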

15. Name few measures used as simple descriptors in region descriptors.

Some simple descriptors

1. The area of a region: the number of pixels in the region

2. The perimeter of a region: the length of its boundary

3. The compactness of a region: (perimeter)^2 / area

4. The mean and median of the gray levels

5. The minimum and maximum gray-level values

6. The number of pixels with values above and below the mean

16. Define compactness.

The compactness of a region: (perimeter)^2 / area
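As a small worked example in Python, compactness can be compared for two ideal shapes. The function name and the shape sizes are illustrative assumptions.

```python
import math


def compactness(perimeter, area):
    """Compactness of a region: perimeter squared divided by area."""
    return perimeter ** 2 / area


# Hypothetical shapes: a 10x10 square and a disc of radius 5.
square = compactness(perimeter=4 * 10, area=10 * 10)
disc = compactness(perimeter=2 * math.pi * 5, area=math.pi * 5 ** 2)
```

The measure is dimensionless and is smallest for a disc-shaped region, where it equals 4π.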

17. Define the term Euler number

The Euler number is defined as the number of connected components minus the number of holes

18. What is meant by pattern classes? (Apr/May 2017)

A pattern class is a family of patterns sharing some common properties. Pattern classes are denoted by ω1, ω2, …, ωW, where W is the number of classes.

19. Define pattern and pattern classes. (Nov/Dec 2017)

A pattern is essentially an arrangement. It is characterized by the order of the elements of which it is made, rather than by the intrinsic nature of these elements. In image recognition, a pattern is an arrangement of descriptors (or features).

A pattern class is a family of patterns sharing some common properties. Pattern classes are denoted by ω1, ω2, …, ωW, where W is the number of classes.

20. Obtain the 4 directional chain code for the shape shown in the figure. The dot represents the

starting point. (Nov/Dec 2017)

Chain code: 1,0,0,0,0,03,3,3,2,2,1,2,2,2
