Java - lang.OutOfMemoryError - JAVA HEAP SPACE

Uploaded by Ddesperado

java.lang.OutOfMemoryError: Java heap space

Java applications are only allowed to use a limited amount of memory. This limit is specified

during application startup. To make things more complex, Java memory is separated into two

different regions. These regions are called Heap space and Permgen (for Permanent

Generation). Since Java 8, Metaspace takes the place of Permgen; see the end of this document.

I have taken the following settings from my installed NWDS:

…\NWDS\NWCEIDE07P_8-80002366\eclipse\eclipse.ini

Sensitivity: Internal & Restricted

The size of those regions is set during the Java Virtual Machine (JVM) launch and

can be customized by specifying JVM parameters -Xmx and -XX:MaxPermSize.

If you do not explicitly set the sizes, platform-specific defaults will be used.

The java.lang.OutOfMemoryError: Java heap space error will be triggered when the

application attempts to add more data into the heap space area, but there is not enough

room for it.

Note that there might be plenty of physical memory available, but

the java.lang.OutOfMemoryError: Java heap space error is thrown whenever the JVM reaches

the heap size limit.
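To see the limit a running JVM is actually working with, you can query the standard java.lang.Runtime API. The following is a minimal sketch; the class name HeapLimit and the helper maxHeapMb are made up for illustration and are not part of the original text.

```java
class HeapLimit {

    /** Returns the maximum heap size the JVM will attempt to use, in megabytes. */
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        long mb = 1024 * 1024;
        // Upper bound for the heap (controlled by -Xmx or the platform default)
        System.out.println("Max heap (MB): " + rt.maxMemory() / mb);
        // Heap memory currently reserved by the JVM
        System.out.println("Committed heap (MB): " + rt.totalMemory() / mb);
        // Unused part of the currently reserved heap
        System.out.println("Free in committed heap (MB): " + rt.freeMemory() / mb);
    }
}
```

Launched with, for example, java -Xmx64m HeapLimit, the first value should be close to 64 regardless of how much physical memory the machine has; the exact number can differ slightly between garbage collectors.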

What is causing it?

The most common reason for the java.lang.OutOfMemoryError: Java heap space error is

simple – you try to fit an XXL application into an S-sized Java heap space. That is – the

application just requires more Java heap space than available to it to operate normally. Other

causes for this OutOfMemoryError message are more complex and are caused by a

programming error:

• Spikes in usage/data volume. The application was designed to handle a certain number of

users or a certain amount of data. When the number of users or the volume of data

suddenly spikes and crosses that expected threshold, the operation which functioned

normally before the spike ceases to operate and triggers the java.lang.OutOfMemoryError:

Java heap space error.

• Memory leaks. A particular type of programming error will lead your application to

constantly consume more memory. Every time the leaking functionality of the application is

used, it leaves some objects behind in the Java heap space. Over time the leaked objects

consume all of the available Java heap space and trigger the already

familiar java.lang.OutOfMemoryError: Java heap space error.

Give me an example

Trivial example

The first example is truly simple – the following Java code tries to allocate an array of 2M

integers. When you compile it and launch with 12MB of Java heap space (java -Xmx12m OOM),

it fails with the java.lang.OutOfMemoryError: Java heap space message. With 13MB Java heap

space the program runs just fine. The exact threshold depends on the JVM version and garbage collector in use.

    class OOM {
        // 2M ints at 4 bytes each: roughly 8 MB for the array alone
        static final int SIZE = 2 * 1024 * 1024;

        public static void main(String[] a) {
            int[] i = new int[SIZE];
        }
    }

Memory leak example:

The second, more realistic example is a memory leak. In Java, when developers create

and use new objects e.g. new Integer(5), they don’t have to allocate memory themselves – this

is being taken care of by the Java Virtual Machine (JVM). During the life of the application the

JVM periodically checks which objects in memory are still being used and which are not.

Unused objects can be discarded and the memory reclaimed and reused again. This process is

called Garbage Collection. The corresponding module in JVM taking care of the collection is

called the Garbage Collector (GC).

Java’s automatic memory management relies on GC to periodically look for unused objects and

remove them. Simplifying a bit we can say that a memory leak in Java is a situation where

some objects are no longer used by the application but Garbage Collection fails to recognize

it. As a result these unused objects remain in Java heap space indefinitely. This pileup will

eventually trigger the java.lang.OutOfMemoryError: Java heap space error.

It is fairly easy to construct a Java program that satisfies the definition of a memory leak:

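    import java.util.HashMap;
    import java.util.Map;

    class KeylessEntry {

        static class Key {
            Integer id;

            Key(Integer id) {
                this.id = id;
            }

            // hashCode() is overridden but equals() is not, so two Key
            // objects with the same id are never considered equal.
            @Override
            public int hashCode() {
                return id.hashCode();
            }
        }

        public static void main(String[] args) {
            Map<Key, String> m = new HashMap<>();
            // Intended as a cache of 10,000 entries; in practice it keeps growing.
            while (true)
                for (int i = 0; i < 10000; i++)
                    if (!m.containsKey(new Key(i)))
                        m.put(new Key(i), "Number:" + i);
        }
    }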

When you execute the code above, you might expect it to run forever without any

problems, assuming that the naive caching solution only expands the underlying Map to 10,000

elements, as beyond that all the keys will already be present in the HashMap. However, in

reality the elements will keep being added as the Key class does not contain a

proper equals() implementation next to its hashCode().

As a result, over time, with the leaking code constantly used, the “cached” results end up

consuming a lot of Java heap space. And when the leaked memory fills all of the available

memory in the heap region and Garbage Collection is not able to clean it,

the java.lang.OutOfMemoryError: Java heap space error is thrown.

The solution would be easy – add the implementation for the equals() method similar to the

one below and you will be good to go. But before you manage to find the cause, you will

definitely have lost some precious brain cells.

    @Override
    public boolean equals(Object o) {
        boolean response = false;
        // Two keys are equal when the other object is a Key with the same id
        if (o instanceof Key) {
            response = (((Key)o).id).equals(this.id);
        }
        return response;
    }
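To check the effect of the fix, the sketch below puts hashCode() and equals() together in one Key class and runs the same caching loop for a bounded number of passes instead of forever. The class name FixedKeyDemo and the method fillCache are hypothetical names chosen for this illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

class FixedKeyDemo {

    static final class Key {
        final Integer id;

        Key(Integer id) {
            this.id = id;
        }

        @Override
        public int hashCode() {
            return Objects.hashCode(id);
        }

        // With equals() in place, keys with the same id are treated as the same key
        @Override
        public boolean equals(Object o) {
            return o instanceof Key && Objects.equals(((Key) o).id, id);
        }
    }

    /** Runs the caching loop for the given number of passes and returns the map size. */
    static int fillCache(int passes) {
        Map<Key, String> m = new HashMap<>();
        for (int pass = 0; pass < passes; pass++) {
            for (int i = 0; i < 10000; i++) {
                if (!m.containsKey(new Key(i))) {
                    m.put(new Key(i), "Number:" + i);
                }
            }
        }
        return m.size();
    }

    public static void main(String[] args) {
        System.out.println(fillCache(5)); // prints 10000
    }
}
```

However many passes are made, the map stops growing at 10,000 entries, because every later lookup finds the existing key.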

What is the solution?

In some cases, the amount of heap you have allocated to your JVM is just not enough to

accommodate the needs of your applications running on that JVM. In that case, you should just

allocate more heap – see the end of this chapter for how to achieve that.

In many cases however, providing more Java heap space will not solve the problem. For

example, if your application contains a memory leak, adding more heap will just postpone

the java.lang.OutOfMemoryError: Java heap space error. Additionally, increasing the amount of

Java heap space also tends to increase the length of GC pauses affecting your

application’s throughput or latency.

If you wish to solve the underlying problem with the Java heap space instead of masking the

symptoms, you need to figure out which part of your code is responsible for allocating the most

memory. In other words, you need to answer these questions:

1. Which objects occupy large portions of heap?

2. Where are these objects being allocated in the source code?

At this point, make sure to clear a couple of days in your calendar (or – see an automated way

below the bullet list). Here is a rough process outline that will help you answer the above

questions:

• Get security clearance in order to perform a heap dump from your JVM. “Dumps” are

basically snapshots of heap contents that you can analyze. These snapshots can thus contain

confidential information, such as passwords, credit card numbers etc., so acquiring such a

dump might not even be possible for security reasons.

• Get the dump at the right moment. Be prepared to get a few dumps, as when taken at a

wrong time, heap dumps contain a significant amount of noise and can be practically

useless. On the other hand, every heap dump “freezes” the JVM entirely, so don’t take too

many of them or your end users will start facing performance issues.

• Find a machine that can load the dump. When your JVM-to-troubleshoot uses for example

8GB of heap, you need a machine with more than 8GB to be able to analyze heap contents.

Fire up dump analysis software (we recommend Eclipse MAT, but there are also equally

good alternatives available).

• Detect the paths to GC roots of the biggest consumers of heap. This activity is covered in

a separate post. It is especially tough for beginners, but practice will

make you understand the structure and navigation mechanics.

• Next, you need to figure out where in your source code the potentially hazardous large

number of objects is being allocated. If you have good knowledge of your application’s

source code you’ll be able to do this in a couple of searches.
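As an illustration of the "get the dump" step in the outline above, a heap dump can also be triggered from inside the application. The sketch below assumes a HotSpot-based JVM, where the com.sun.management.HotSpotDiagnosticMXBean interface is available; other JVMs may not provide it. The class name HeapDumper and the temporary file location are assumptions made for this example.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

class HeapDumper {

    /**
     * Writes a heap dump to the given file, which must not exist yet.
     * With liveOnly = true, only reachable objects are included.
     */
    static void dump(Path file, boolean liveOnly) throws IOException {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        bean.dumpHeap(file.toString(), liveOnly);
    }

    public static void main(String[] args) throws IOException {
        // A fresh temporary directory guarantees that the target file does not exist
        Path dir = Files.createTempDirectory("heapdump");
        Path file = dir.resolve("heap.hprof");
        dump(file, true);
        System.out.println("Wrote " + Files.size(file) + " bytes to " + file);
    }
}
```

The resulting .hprof file can be opened in a dump analysis tool such as Eclipse MAT. The same caveats apply as for any heap dump: the file may contain confidential data, and the JVM is paused while it is written.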

Alternatively, we suggest Plumbr, the only Java monitoring solution with automatic root cause

detection. Among other performance problems it catches all java.lang.OutOfMemoryErrors and

automatically hands you the information about the most memory-hungry data structures.

Plumbr takes care of gathering the necessary data behind the scenes – this includes the

relevant data about heap usage (only the object layout graph, no actual data), and also some

data that you can’t even find in a heap dump. It also does the necessary data processing for you

– on the fly, as soon as the JVM encounters a java.lang.OutOfMemoryError. Here is an

example java.lang.OutOfMemoryError incident alert from Plumbr:

Without any additional tooling or analysis you can see:

• Which objects are consuming the most memory

(271 com.example.map.impl.PartitionContainer instances consume 173MB out of 248MB

total heap)

• Where these objects were allocated (most of them allocated in

the MetricManagerImpl class, line 304)

• What is currently referencing these objects (the full reference chain up to GC root)

Equipped with this information you can zoom in to the underlying root cause and make sure the

data structures are trimmed down to the levels where they would fit nicely into your memory

pools.

However, when your conclusion from memory analysis or from reading the Plumbr report is

that memory use is legitimate and there is nothing to change in the source code, you need to allow

your JVM more Java heap space to run properly. In this case, alter your JVM launch

configuration and add (or increase the value if present) the following:

-Xmx1024m

The above configuration would give the application 1024MB of Java heap space. You can use g

or G for GB, m or M for MB, k or K for KB. For example all of the following are equivalent to

saying that the maximum Java heap space is 1GB:

java -Xmx1073741824 com.mycompany.MyClass

java -Xmx1048576k com.mycompany.MyClass

java -Xmx1024m com.mycompany.MyClass

java -Xmx1g com.mycompany.MyClass
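The equivalence of the four values is plain arithmetic with factors of 1024. The sketch below spells it out; HeapSize.toBytes is a hypothetical helper written for this illustration and is not the JVM's own option parser.

```java
class HeapSize {

    /** Converts a size such as "1024m" or "1g" to bytes (illustrative helper). */
    static long toBytes(String size) {
        String s = size.trim().toLowerCase();
        char unit = s.charAt(s.length() - 1);
        long factor;
        switch (unit) {
            case 'k': factor = 1024L; break;
            case 'm': factor = 1024L * 1024; break;
            case 'g': factor = 1024L * 1024 * 1024; break;
            default:  return Long.parseLong(s); // no suffix: plain bytes
        }
        return Long.parseLong(s.substring(0, s.length() - 1)) * factor;
    }

    public static void main(String[] args) {
        // Each of the four spellings resolves to 1073741824 bytes
        for (String v : new String[] {"1073741824", "1048576k", "1024m", "1g"}) {
            System.out.println(v + " = " + toBytes(v) + " bytes");
        }
    }
}
```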

What is the difference between PermGen and Metaspace?

The main difference from a user perspective: Metaspace by default auto-increases its size (up

to what the underlying OS provides), while PermGen always has a fixed maximum size. You can

set a fixed maximum for Metaspace with JVM parameters, but you cannot make PermGen

auto-increase.

To a large degree it is just a change of name. Back when PermGen was introduced, there was

no Java EE or dynamic class (un)loading, so once a class was loaded it was stuck in memory until

the JVM shut down - thus Permanent Generation. Nowadays classes may be loaded and

unloaded during the lifespan of the JVM, so Metaspace makes more sense for the area where

the metadata is kept.

Both of them hold class metadata and both of them suffer from ClassLoader

leaks. The only difference is that with Metaspace default settings, it takes longer until you notice

the symptoms (since it auto-increases as much as it can), i.e. you just push the problem further

away without solving it. On the other hand, I imagine the effect of running out of OS memory can be more

severe than just running out of JVM PermGen, so I'm not sure it is much of an improvement.
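To find out which of these regions a given JVM actually has, you can list its memory pools through the standard java.lang.management API. This is a minimal sketch; the class name MemoryPools is made up for the example, and pool names are implementation-specific, so the exact labels (for instance a pool named Metaspace on recent HotSpot JVMs) are an assumption rather than a guarantee.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

class MemoryPools {

    /** Returns one line per memory pool: name, type, used bytes and maximum. */
    static List<String> describePools() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (usage == null) {
                continue; // the pool is no longer valid
            }
            long max = usage.getMax(); // -1 means no maximum is defined
            lines.add(pool.getName() + " [" + pool.getType() + "] used=" + usage.getUsed()
                    + " max=" + (max < 0 ? "undefined" : String.valueOf(max)));
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : describePools()) {
            System.out.println(line);
        }
    }
}
```

A pool that reports an undefined maximum is one that can keep growing until the operating system refuses more memory, which matches the default Metaspace behavior described above.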

Sensitivity: Internal & Restricted

Das könnte Ihnen auch gefallen

- Structural Engineering Formulas Second EditionDokument224 SeitenStructural Engineering Formulas Second Editionahmed_60709595194% (33)

- Statutory Declaration Sample Format As Required by ACS 30.09.2016Dokument2 SeitenStatutory Declaration Sample Format As Required by ACS 30.09.2016Ddesperado50% (2)

- UntitledDokument59 SeitenUntitledSylvin GopayNoch keine Bewertungen

- Linux Kernel PDFDokument274 SeitenLinux Kernel PDFakumar_277Noch keine Bewertungen

- Lesson Plan Ordinal NumbersDokument5 SeitenLesson Plan Ordinal Numbersapi-329663096Noch keine Bewertungen

- TheBigBookOfTeamCulture PDFDokument231 SeitenTheBigBookOfTeamCulture PDFavarus100% (1)

- Ninja 5e v1 5Dokument8 SeitenNinja 5e v1 5Jeferson Moreira100% (2)

- M14 PManualDokument382 SeitenM14 PManualnz104100% (2)

- Thread DumpDokument27 SeitenThread DumpSreenivasulu Reddy SanamNoch keine Bewertungen

- Java Memory AllocationDokument12 SeitenJava Memory AllocationErick Jhorman RomeroNoch keine Bewertungen

- Thread DumpDokument7 SeitenThread DumpChandu ChowdaryNoch keine Bewertungen

- Thread and Heap DumpDokument2 SeitenThread and Heap DumpLalith MallakuntaNoch keine Bewertungen

- DB Auto RestartDokument2 SeitenDB Auto Restartk10000Noch keine Bewertungen

- Starting JIRA Automatically On Linux - Atlassian DocumentationDokument3 SeitenStarting JIRA Automatically On Linux - Atlassian DocumentationJuanNoch keine Bewertungen

- Shell Scripting by SantoshDokument87 SeitenShell Scripting by SantoshSantosh VenkataswamyNoch keine Bewertungen

- Generating Heap Dumps and Thread DumpsDokument7 SeitenGenerating Heap Dumps and Thread DumpsAravind PottiNoch keine Bewertungen

- WA5912G08 OutOfMemoryDokument74 SeitenWA5912G08 OutOfMemorypmdariusNoch keine Bewertungen

- SSO Tomcat HowtoDokument3 SeitenSSO Tomcat Howtopepin1971Noch keine Bewertungen

- Packages: Core JavaDokument13 SeitenPackages: Core Javaalok singhNoch keine Bewertungen

- PDFDokument130 SeitenPDFAbdul AzeezNoch keine Bewertungen

- Thread Dump AnalysisDokument23 SeitenThread Dump AnalysisrockineverNoch keine Bewertungen

- Weblogic Thread Dump AnalysisDokument9 SeitenWeblogic Thread Dump Analysisthemission2013Noch keine Bewertungen

- Groovy Lang SpecificationDokument643 SeitenGroovy Lang SpecificationguidgenNoch keine Bewertungen

- How To Fix Memory Leaks in Java - JavalobbyDokument16 SeitenHow To Fix Memory Leaks in Java - JavalobbyPriyank PaliwalNoch keine Bewertungen

- Cl1 HowTo Fix Memory LeaksDokument14 SeitenCl1 HowTo Fix Memory Leakselamodeljuego100% (1)

- Unix and Shell Programming Practical FileDokument6 SeitenUnix and Shell Programming Practical Filelorence_lalit19860% (1)

- Apache TomcatDokument19 SeitenApache Tomcatapi-284453517Noch keine Bewertungen

- DW ScriptDokument7 SeitenDW ScriptsaikrishnatadiboyinaNoch keine Bewertungen

- The Basic Syntax of AWKDokument18 SeitenThe Basic Syntax of AWKDudukuru JaniNoch keine Bewertungen

- Unix ScriptDokument124 SeitenUnix ScriptNaveen SinghNoch keine Bewertungen

- Log4j Users GuideDokument118 SeitenLog4j Users GuidengcenaNoch keine Bewertungen

- Splunk Developer Study Notes-2Dokument55 SeitenSplunk Developer Study Notes-2aceNoch keine Bewertungen

- Linux Boot ProcessDokument59 SeitenLinux Boot ProcessViraj BhosaleNoch keine Bewertungen

- Au086inst PDFDokument510 SeitenAu086inst PDFNguyễn CươngNoch keine Bewertungen

- Heap Dump Analysis - Holiday Readiness-2020: Xiaobai WangDokument13 SeitenHeap Dump Analysis - Holiday Readiness-2020: Xiaobai WangShashankSomaNoch keine Bewertungen

- The Java VM Architecture & ApisDokument44 SeitenThe Java VM Architecture & ApisJigar ShahNoch keine Bewertungen

- Jfrog by VijayDokument16 SeitenJfrog by VijaySachin KuchhalNoch keine Bewertungen

- Awk One-Liners Explained (Preview Copy)Dokument12 SeitenAwk One-Liners Explained (Preview Copy)Peteris KruminsNoch keine Bewertungen

- Shell Scripting PresentationDokument91 SeitenShell Scripting PresentationNiranjan DasNoch keine Bewertungen

- Introduction To Java For OO Developers - Student Exercises JA311Dokument254 SeitenIntroduction To Java For OO Developers - Student Exercises JA311Rodrigo ArenasNoch keine Bewertungen

- Log4j 2 Users GuideDokument161 SeitenLog4j 2 Users GuideIvan KurajNoch keine Bewertungen

- Javascript NotesDokument54 SeitenJavascript NotesCharles OtwomaNoch keine Bewertungen

- HP 3PAR HP-UX Implementation Guide PDFDokument51 SeitenHP 3PAR HP-UX Implementation Guide PDFSladur BgNoch keine Bewertungen

- Servlets MaterialDokument98 SeitenServlets MaterialSayeeKumar MadheshNoch keine Bewertungen

- PratyangiraDokument1 SeitePratyangiraSai Ranganath BNoch keine Bewertungen

- Load RunnerDokument3 SeitenLoad RunnerGautam GhoseNoch keine Bewertungen

- Linux Tutorial NetworkingDokument22 SeitenLinux Tutorial Networkinggplai100% (1)

- IIBC, Brigade Road, Mobile: 95350 95999 Loadrunner Interview Questions and AnswersDokument5 SeitenIIBC, Brigade Road, Mobile: 95350 95999 Loadrunner Interview Questions and Answerskan_kishoreNoch keine Bewertungen

- JVM Memory Management & DiagnosticsDokument24 SeitenJVM Memory Management & DiagnosticsKshirabdhi TanayaNoch keine Bewertungen

- Java Plugin Creation Using Atlassian SDKDokument5 SeitenJava Plugin Creation Using Atlassian SDKPriya ArunNoch keine Bewertungen

- InfoQ - Java Performance PDFDokument27 SeitenInfoQ - Java Performance PDFpbecicNoch keine Bewertungen

- Advanced Bash Scripting GuideDokument459 SeitenAdvanced Bash Scripting GuidelosrmanNoch keine Bewertungen

- Soap - WSDL - Uddi: - ContentsDokument30 SeitenSoap - WSDL - Uddi: - ContentsSai KrishnaNoch keine Bewertungen

- Sanskrit Alhad PDFDokument132 SeitenSanskrit Alhad PDFshrikantNoch keine Bewertungen

- Apache Ambari Tutorial-FiqriDokument26 SeitenApache Ambari Tutorial-FiqriYassin RhNoch keine Bewertungen

- Foundation 1 - Lesson 1Dokument54 SeitenFoundation 1 - Lesson 1Alamelu VenkatesanNoch keine Bewertungen

- Fun Mentals of Java in Telugu LanguageDokument22 SeitenFun Mentals of Java in Telugu LanguageRajesh KannaNoch keine Bewertungen

- CoreJava1 NirajDokument308 SeitenCoreJava1 NirajNiraj Koirala100% (1)

- Java API For Kannel SMS - WAP Gateway V 1.0Dokument4 SeitenJava API For Kannel SMS - WAP Gateway V 1.0zembecisseNoch keine Bewertungen

- Linux For AIX Specialists: Similarities and DifferencesDokument66 SeitenLinux For AIX Specialists: Similarities and DifferencesHuynh Sy NguyenNoch keine Bewertungen

- Python Durga NotesDokument369 SeitenPython Durga NotesParshuramPatil100% (1)

- Shell Scripting Interview QuestionsDokument2 SeitenShell Scripting Interview QuestionsSandeep Kumar G AbbagouniNoch keine Bewertungen

- INCEPTEZ TECHNOLOGIES Installation Guide: 1. Install SparkDokument2 SeitenINCEPTEZ TECHNOLOGIES Installation Guide: 1. Install SparkGiridharselvaNoch keine Bewertungen

- Oracle SOA BPEL Process Manager 11gR1 A Hands-on TutorialVon EverandOracle SOA BPEL Process Manager 11gR1 A Hands-on TutorialBewertung: 5 von 5 Sternen5/5 (1)

- SAP Integration Advisor User Guide: Working With Message Implementation GuidelinesDokument8 SeitenSAP Integration Advisor User Guide: Working With Message Implementation GuidelinesDdesperadoNoch keine Bewertungen

- What S New in Sap Process Orchestration 75 Sp00Dokument13 SeitenWhat S New in Sap Process Orchestration 75 Sp00DdesperadoNoch keine Bewertungen

- SAP PI ContextDokument11 SeitenSAP PI ContextDdesperadoNoch keine Bewertungen

- How Do You Activate ABAP Proxies?: 1. Following Inputs Are Required For Technical SettingDokument4 SeitenHow Do You Activate ABAP Proxies?: 1. Following Inputs Are Required For Technical SettingDdesperadoNoch keine Bewertungen

- When To Use Party in PI Configuration?Dokument3 SeitenWhen To Use Party in PI Configuration?DdesperadoNoch keine Bewertungen

- Decs vs. San DiegoDokument7 SeitenDecs vs. San Diegochini17100% (2)

- SAMPLE Forklift Safety ProgramDokument5 SeitenSAMPLE Forklift Safety ProgramSudiatmoko SupangkatNoch keine Bewertungen

- Dell Inspiron 5547 15Dokument7 SeitenDell Inspiron 5547 15Kiti HowaitoNoch keine Bewertungen

- Questionnaire OriginalDokument6 SeitenQuestionnaire OriginalJAGATHESANNoch keine Bewertungen

- Practical Search Techniques in Path Planning For Autonomous DrivingDokument6 SeitenPractical Search Techniques in Path Planning For Autonomous DrivingGergely HornyakNoch keine Bewertungen

- Bo Sanchez-Turtle Always Wins Bo SanchezDokument31 SeitenBo Sanchez-Turtle Always Wins Bo SanchezCristy Louela Pagapular88% (8)

- Root End Filling MaterialsDokument9 SeitenRoot End Filling MaterialsRuchi ShahNoch keine Bewertungen

- Device InfoDokument3 SeitenDevice InfoGrig TeoNoch keine Bewertungen

- Anansi and His Six Sons An African MythDokument3 SeitenAnansi and His Six Sons An African MythShar Nur JeanNoch keine Bewertungen

- Finite Element Modeling Analysis of Nano Composite Airfoil StructureDokument11 SeitenFinite Element Modeling Analysis of Nano Composite Airfoil StructureSuraj GautamNoch keine Bewertungen

- Science: BiologyDokument22 SeitenScience: BiologyMike RollideNoch keine Bewertungen

- Cruiziat Et Al. 2002Dokument30 SeitenCruiziat Et Al. 2002Juan David TurriagoNoch keine Bewertungen

- My LH Cover LetterDokument3 SeitenMy LH Cover LetterAkinde FisayoNoch keine Bewertungen

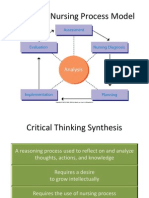

- NUR 104 Nursing Process MY NOTESDokument77 SeitenNUR 104 Nursing Process MY NOTESmeanne073100% (1)

- Lecture Planner - Chemistry - MANZIL For JEE 2024Dokument1 SeiteLecture Planner - Chemistry - MANZIL For JEE 2024Rishi NairNoch keine Bewertungen

- LEARNING ACTIVITY SHEET in Oral CommDokument4 SeitenLEARNING ACTIVITY SHEET in Oral CommTinTin100% (1)

- Datasheet TBJ SBW13009-KDokument5 SeitenDatasheet TBJ SBW13009-KMarquinhosCostaNoch keine Bewertungen

- DesignDokument402 SeitenDesignEduard BoleaNoch keine Bewertungen

- Grade 7 Math Lesson 22: Addition and Subtraction of Polynomials Learning GuideDokument4 SeitenGrade 7 Math Lesson 22: Addition and Subtraction of Polynomials Learning GuideKez MaxNoch keine Bewertungen

- Yazaki BrochureDokument4 SeitenYazaki Brochureguzman_10Noch keine Bewertungen

- High School Department PAASCU Accredited Academic Year 2017 - 2018Dokument6 SeitenHigh School Department PAASCU Accredited Academic Year 2017 - 2018Kevin T. OnaroNoch keine Bewertungen

- A B&C - List of Residents - VKRWA 12Dokument10 SeitenA B&C - List of Residents - VKRWA 12blr.visheshNoch keine Bewertungen

- 5024Dokument2 Seiten5024Luis JesusNoch keine Bewertungen

- Federal Public Service CommissionDokument2 SeitenFederal Public Service CommissionNasir LatifNoch keine Bewertungen

- HLA HART Concept of LawDokument19 SeitenHLA HART Concept of LawHarneet KaurNoch keine Bewertungen