Tdds

Uploaded by Mayur Mandrekar

Process states

Main article: Process state

The various process states, displayed in a state diagram, with arrows indicating possible transitions between states.

An operating system kernel that allows multitasking needs processes to have certain states. Names for these states are not standardised, but they have similar functionality.[1]

First, the process is "created" by being loaded from a secondary storage device (hard disk drive, CD-ROM, etc.) into main memory. After that, the process scheduler assigns it the "waiting" state.

While the process is "waiting", it waits for the scheduler to perform a context switch and load the process into the processor. The process state then becomes "running", and the processor executes the process's instructions.

If a process needs to wait for a resource (for example, user input or a file to open), it is assigned the "blocked" state. When the resource becomes available and the process no longer needs to wait, its state is changed back to "waiting".

Once the process finishes execution, or is terminated by the operating system, it is no longer needed. The process is either removed instantly or moved to the "terminated" state. In the "terminated" state, it just waits to be removed from main memory.[1][3]
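The transitions described above can be sketched as a small state machine. This is only an illustration: the names `State`, `TRANSITIONS`, and `Process` are hypothetical, and the table contains only the transitions the text mentions (a real scheduler would typically also allow a running process to be preempted back to "waiting").

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    WAITING = "waiting"
    RUNNING = "running"
    BLOCKED = "blocked"
    TERMINATED = "terminated"


# Allowed transitions, limited to the ones described in the text.
TRANSITIONS = {
    State.CREATED: {State.WAITING},                    # scheduler admits the process
    State.WAITING: {State.RUNNING},                    # context switch onto the processor
    State.RUNNING: {State.BLOCKED, State.TERMINATED},  # waits for a resource, or finishes
    State.BLOCKED: {State.WAITING},                    # resource became available
    State.TERMINATED: set(),                           # only removal from memory remains
}


class Process:
    """Hypothetical process record that tracks nothing but its state."""

    def __init__(self, name):
        self.name = name
        self.state = State.CREATED

    def move_to(self, new_state):
        # Reject any transition that the table does not list.
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.name}: cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state


if __name__ == "__main__":
    p = Process("demo")
    for step in (State.WAITING, State.RUNNING, State.BLOCKED,
                 State.WAITING, State.RUNNING, State.TERMINATED):
        p.move_to(step)
        print(p.name, "->", p.state.value)
```

Keeping the transitions in a single table makes it easy to see which moves are legal; anything not listed, such as going from "blocked" straight to "running", raises an error.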

Inter-process communication

Main article: Inter-process communication

When processes communicate with each other, it is called "inter-process communication" (IPC). Processes frequently need to communicate: for instance, in a shell pipeline, the output of the first process needs to pass to the second one, and so on down the pipeline. This is preferably done in a well-structured way, without using interrupts.
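As a rough illustration of the pipeline case, the sketch below connects two child processes with a pipe using Python's standard `subprocess` module. The function name `run_pipeline` and the two one-line child programs are assumptions made for this example; the first child writes some text and the second reads it and upper-cases it.

```python
import subprocess
import sys


def run_pipeline(text):
    """Pass `text` from one child process to another through a pipe."""
    # First process: writes its argument to standard output.
    producer = subprocess.Popen(
        [sys.executable, "-c", "import sys; sys.stdout.write(sys.argv[1])", text],
        stdout=subprocess.PIPE,
    )
    # Second process: reads the first process's output and upper-cases it.
    consumer = subprocess.Popen(
        [sys.executable, "-c",
         "import sys; sys.stdout.write(sys.stdin.read().upper())"],
        stdin=producer.stdout,
        stdout=subprocess.PIPE,
    )
    # Close the parent's copy of the read end so only the consumer holds it.
    producer.stdout.close()
    output, _ = consumer.communicate()
    producer.wait()
    return output.decode()


if __name__ == "__main__":
    print(run_pipeline("hello from the first process"))
```

The two children never signal each other directly; the operating system's pipe carries the data and makes the reader wait until data is available.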

It is even possible for the two processes to be running on different machines. The operating system (OS) may differ from one process to the other, so some mediators (called protocols) are needed.

History

See also: History of operating systems

By the early 1960s, computer control software had evolved from monitor control software, for example IBSYS, to executive control software. Over time, computers got faster while computer time was still neither cheap nor fully utilized; such an environment made multiprogramming possible and necessary. Multiprogramming means that several programs run concurrently. At first, more than one program ran on a single processor, as a result of the underlying uniprocessor computer architecture, and they shared scarce and limited hardware resources; consequently, the concurrency was of a serial nature. On later systems with multiple processors, multiple programs may run concurrently in parallel.

Programs consist of sequences of instructions for processors. A single processor can run only one instruction at a time, so it cannot run more than one program at the same instant. A program might need some resource, such as an input device, which has a large delay, or a program might start some slow operation, such as sending output to a printer. This would leave the processor "idle" (unused). To keep the processor busy at all times, the execution of such a program is halted and the operating system switches the processor to run another program. To the user, it will appear that the programs run at the same time (hence the term "parallel").
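The switching described above can be imitated with a toy scheduler. This is a simplified sketch, not how any particular operating system works: the names `program` and `run_multiprogrammed` are hypothetical, each program is a Python generator that yields whenever it would start a slow operation, and the slow operation is assumed to have finished by the time the program is scheduled again.

```python
from collections import deque


def program(name, steps, log):
    """Hypothetical program: does one unit of work, then starts a slow operation."""
    for i in range(steps):
        log.append(f"{name}:{i}")  # one burst of processor work
        yield                      # would now wait on a slow device


def run_multiprogrammed(programs):
    """Run each program until it would wait, then switch to the next one."""
    ready = deque(programs)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # let the program use the processor
            ready.append(current)  # it yielded: put it at the back of the queue
        except StopIteration:
            pass                   # the program finished and is dropped


if __name__ == "__main__":
    log = []
    run_multiprogrammed([program("A", 2, log), program("B", 2, log)])
    print(log)  # ['A:0', 'B:0', 'A:1', 'B:1']
```

Only one program runs at any instant, yet the log interleaves their work, which is why the programs appear to the user to run at the same time.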

Shortly thereafter, the notion of a "program" was expanded to the notion of an "executing program and its context". The concept of a process was born, which also became necessary with the invention of re-entrant code. Threads came somewhat later. However, with the advent of concepts such as time-sharing, computer networks, and multiple-CPU shared-memory computers, the old "multiprogramming" gave way to true multitasking, multiprocessing and, later, multithreading.

Das könnte Ihnen auch gefallen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- Spin DumpDokument591 SeitenSpin DumpshabazzelallahNoch keine Bewertungen

- 3.1. Schematic Explorer View Schematic Explorer: 3 Working With The Diagrams ApplicationDokument4 Seiten3.1. Schematic Explorer View Schematic Explorer: 3 Working With The Diagrams ApplicationMayur MandrekarNoch keine Bewertungen

- New SCGROUP: Schematic Group Rename Apply Dismiss: All Button Can Be UsedDokument3 SeitenNew SCGROUP: Schematic Group Rename Apply Dismiss: All Button Can Be UsedMayur MandrekarNoch keine Bewertungen

- Savework / Getwork SaveworkDokument4 SeitenSavework / Getwork SaveworkMayur MandrekarNoch keine Bewertungen

- GWWDDokument3 SeitenGWWDMayur MandrekarNoch keine Bewertungen

- XsxefcrDokument5 SeitenXsxefcrMayur MandrekarNoch keine Bewertungen

- Weights Are All Non-Negative and For Any Value of The Parameter, They Sum To UnityDokument2 SeitenWeights Are All Non-Negative and For Any Value of The Parameter, They Sum To UnityMayur MandrekarNoch keine Bewertungen

- Y FDN GD Kfy GD DN: K FD DDokument5 SeitenY FDN GD Kfy GD DN: K FD DMayur MandrekarNoch keine Bewertungen

- Gate Solved Paper - Me: X Aq Q y A Q Dy Q Q Q QDokument51 SeitenGate Solved Paper - Me: X Aq Q y A Q Dy Q Q Q QMayur MandrekarNoch keine Bewertungen

- British Steel Universal Beams PFC Datasheet PDFDokument2 SeitenBritish Steel Universal Beams PFC Datasheet PDFMayur MandrekarNoch keine Bewertungen

- 2.6 Computer Aided Assembly of Rigid Bodies: P P Q Q P P QDokument4 Seiten2.6 Computer Aided Assembly of Rigid Bodies: P P Q Q P P QMayur MandrekarNoch keine Bewertungen

- New Doc 2017-07-27 - 1Dokument1 SeiteNew Doc 2017-07-27 - 1Mayur MandrekarNoch keine Bewertungen

- Check Opc Types: V-111S Process VesselDokument1 SeiteCheck Opc Types: V-111S Process VesselMayur MandrekarNoch keine Bewertungen

- 00 PDFDokument2 Seiten00 PDFMayur MandrekarNoch keine Bewertungen

- 01 PDFDokument2 Seiten01 PDFMayur MandrekarNoch keine Bewertungen

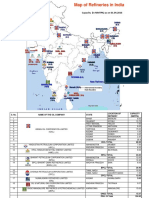

- RefineriesMap PDFDokument2 SeitenRefineriesMap PDFMayur MandrekarNoch keine Bewertungen

- Multi Programming Tasking Processing Threading Time Sharing & RTOSDokument5 SeitenMulti Programming Tasking Processing Threading Time Sharing & RTOSHussain MujtabaNoch keine Bewertungen

- Transaction Management and Concurrency ControlDokument9 SeitenTransaction Management and Concurrency ControlakurathikotaiahNoch keine Bewertungen

- POSIX Threads ProgrammingDokument15 SeitenPOSIX Threads ProgrammingMaria Luisa Nuñez MendozaNoch keine Bewertungen

- OS LAbDokument5 SeitenOS LAbUday KumarNoch keine Bewertungen

- Research Papers On Deadlock in Distributed SystemDokument7 SeitenResearch Papers On Deadlock in Distributed Systemgw219k4yNoch keine Bewertungen

- Platform ScriptDokument2 SeitenPlatform Scriptandres tabaldoNoch keine Bewertungen

- GPU Computing With CUDA Lecture 3 - Efficient Shared Memory UseDokument52 SeitenGPU Computing With CUDA Lecture 3 - Efficient Shared Memory UseSandeep SrikumarNoch keine Bewertungen

- MTX TutorialDokument5 SeitenMTX TutorialTuomo Vendu VenäläinenNoch keine Bewertungen

- 09 MultitaskingDokument68 Seiten09 Multitasking21139019Noch keine Bewertungen

- Operating Systems Unit-1: Tribhuvan Singh (GLA120252) Assistant ProfessorDokument61 SeitenOperating Systems Unit-1: Tribhuvan Singh (GLA120252) Assistant ProfessorJATIN glaNoch keine Bewertungen

- Akka ScalaDokument771 SeitenAkka ScalaSHOGUN131Noch keine Bewertungen

- School of Computing Science and Engineering, VIT University, Vellore-632014, Tamilnadu, India +91-8056469636Dokument8 SeitenSchool of Computing Science and Engineering, VIT University, Vellore-632014, Tamilnadu, India +91-8056469636Gopichand VuyyuruNoch keine Bewertungen

- 04 Quiz 1 - ARGDokument2 Seiten04 Quiz 1 - ARGRobert BasanNoch keine Bewertungen

- 300+ TOP Operating System LAB VIVA Questions and AnswersDokument25 Seiten300+ TOP Operating System LAB VIVA Questions and AnswersRohit AnandNoch keine Bewertungen

- Unit-7: Transaction ProcessingDokument81 SeitenUnit-7: Transaction ProcessingBhavya JoshiNoch keine Bewertungen

- Transaction: Controlling Concurrent BehaviorDokument63 SeitenTransaction: Controlling Concurrent BehaviorPhan Chi BaoNoch keine Bewertungen

- Case Study IPC in LinuxDokument2 SeitenCase Study IPC in LinuxveguruprasadNoch keine Bewertungen

- By Asst. Professor Dept. of Computer Application ST - Joseph's College (Arts & Science) Kovur, ChennaiDokument29 SeitenBy Asst. Professor Dept. of Computer Application ST - Joseph's College (Arts & Science) Kovur, ChennaiCheril Renjit D RNoch keine Bewertungen

- Types of Parallel ComputingDokument11 SeitenTypes of Parallel Computingprakashvivek990Noch keine Bewertungen

- Concurrency in OOPSDokument62 SeitenConcurrency in OOPSMeenakshi TripathiNoch keine Bewertungen

- Inter-Process CommunicationDokument39 SeitenInter-Process CommunicationRajesh PandaNoch keine Bewertungen

- Operating Systems Question BankDokument10 SeitenOperating Systems Question BankAman AliNoch keine Bewertungen

- Locking in SQL ServerDokument20 SeitenLocking in SQL ServerPrashant KumarNoch keine Bewertungen

- Module 4 - Synchronization ToolsDokument28 SeitenModule 4 - Synchronization ToolsKailashNoch keine Bewertungen

- Minggu 7 RTOS 2Dokument11 SeitenMinggu 7 RTOS 2Pahala SamosirNoch keine Bewertungen

- Thread in JavaDokument26 SeitenThread in JavaVinayKumarSinghNoch keine Bewertungen

- Operating System Questions and AnswersDokument13 SeitenOperating System Questions and Answersnavinsehgal100% (1)

- Processes: Process Concept Process Scheduling Operation On Processes Cooperating Processes Interprocess CommunicationDokument27 SeitenProcesses: Process Concept Process Scheduling Operation On Processes Cooperating Processes Interprocess CommunicationMaha IbrahimNoch keine Bewertungen