Torch: a tool for building topic hierarchies from growing text collections∗

Ricardo M. Marcacini
Mathematical and Computer Sciences Institute - ICMC
University of São Paulo - USP
Sao Carlos, SP, Brazil
rmm@icmc.usp.br

Solange O. Rezende
Mathematical and Computer Sciences Institute - ICMC
University of São Paulo - USP
Sao Carlos, SP, Brazil
solange@icmc.usp.br

∗This work was sponsored by FAPESP and CNPq.

ABSTRACT

Topic hierarchies are very useful for managing, searching and browsing large repositories of text documents. Several studies have investigated the use of hierarchical clustering methods to support the construction of topic hierarchies in an unsupervised way. In this paper we present Torch (Topic Hierarchies), a software tool that implements the main features of the IHTC (Incremental Hierarchical Term Clustering) method, aiming to build topic hierarchies from growing text collections. A simple experiment is described to illustrate the use of Torch to support the construction of digital libraries.

Categories and Subject Descriptors

H.4 [Information Systems Applications]: Miscellaneous

General Terms

Algorithms, Experimentation

Keywords

Topic Hierarchies, Incremental Clustering, Digital Libraries

1. INTRODUCTION

Online platforms for publishing and storing digital content have contributed significantly to the growth of several text repositories. In this scenario, large numbers of new documents are generated every day, resulting in so-called growing text collections. Due to the need to extract useful knowledge from these repositories, methods for automatic and intelligent organization of text collections have received great attention in the literature [1]. The use of topic hierarchies is one of the most popular approaches for this organization because they allow users to explore the collection interactively through topics that indicate the contents of the available documents.

Hierarchical clustering methods have been used to support the construction of topic hierarchies in a wide variety of applications such as digital libraries, web directories and document engineering [3, 2, 5]. The main motivation for the use of hierarchical clustering is the ability to organize the textual collection at different levels of granularity. Thus, the most generic knowledge is represented by clusters at higher hierarchy levels, while the details, or more specific knowledge, are represented by clusters at lower levels. This result is similar to the concept of topic hierarchies, which facilitates the process of building these hierarchies. However, despite recent advances, there are still some challenges related to building topic hierarchies, as presented below.

• Incremental clustering: most algorithms require that all documents be available before starting the clustering process. Furthermore, when new documents are inserted, the cluster tree needs to be rebuilt, which is computationally expensive when dealing with growing text collections. Thus, it is necessary to update the clusters dynamically as new documents are inserted, i.e., to perform clustering in an incremental way.

• Understandable cluster labels: the interpretation of the results of clustering is a difficult task for users. Therefore, for building topic hierarchies it is important that clusters have simple descriptions, i.e., terms (words) that indicate the contents of the documents in the clusters.

• Topics overlapping: a particular feature of textual collections is that documents can belong to more than one topic. Thus, topic overlap is a desirable effect, since it preserves the multi-topic property of the texts.

Since these challenges are requirements in many real scenarios, we proposed and evaluated in [4] a method called IHTC (Incremental Hierarchical Term Clustering). The IHTC method provides algorithms to incrementally perform preprocessing and hierarchical clustering of textual data, therefore allowing the construction of topic hierarchies that meet the requirements identified above.

In view of this, in this paper we present Torch (Topic Hierarchies), a software tool that implements the main features of the IHTC method, aiming to build topic hierarchies from growing text collections. The Torch tool helps users to organize and manage text collections and can be used in various applications.

The outline of this paper is as follows. In Section 2, we present the architecture and the main functionalities of Torch. A simple experiment is described in Section 3 to illustrate the use of the tool to support the construction of digital libraries. Finally, Section 4 summarizes the paper and outlines some directions for future work.

2. TORCH - TOPIC HIERARCHIES

The Torch tool (TOpic HieraRCHies) was developed to help users to "see hidden topics" in unstructured textual collections. The tool was implemented in the Java language and its architecture is organized into three main modules, as illustrated in Figure 1: (1) Text Preprocessing, (2) Hierarchical Term Clustering and (3) Discovering Topic Hierarchies.

An overview of the tool can be summarized as follows. First, a text data source is monitored to identify when new documents are added. Each new document is preprocessed (Text Preprocessing module) and the most significant terms are selected for the construction of a co-occurrence network. Then the clusters of terms (candidate topics) are identified in the Hierarchical Term Clustering module through a hierarchical clustering algorithm that is applied to the co-occurrence network. Finally, the Discovering Topic Hierarchies module selects the best clusters of terms for building the final topic hierarchy.

[Figure 1: Torch architecture. Growing Text Collections are processed by three modules, (1) Text Preprocessing, (2) Hierarchical Term Clustering and (3) Discovering Topic Hierarchies, and the result is made available to Applications / Users.]

The modules of the tool are implemented according to the IHTC method [4]. In the next sections we describe in more detail the functionalities of each module and their relationships.

2.1 Text Preprocessing

Textual collections can easily contain thousands of terms, many of them redundant and unnecessary, which slow the process of building topic hierarchies and decrease the quality of the results. Moreover, in this work we consider that the textual data is completely unstructured, requiring specific tasks to obtain a structured representation of the texts. Thus, the goals of the Text Preprocessing module are to maintain a subset of representative terms and to organize them in a co-occurrence network. The co-occurrence network is a structured representation where terms are organized in a graph and two terms are connected if there is a significant co-occurrence value between them.

Each new document inserted into the text collection is preprocessed individually. To this end, the Text Preprocessing module has the following functionalities.

• Stopwords removal: non-significant words are removed from the text, such as articles, prepositions and conjunctions. The tool provides stopword lists for English and Portuguese texts. However, the user can add new stopwords as needed.

• Stemming: performs the process of reducing words to their stems, i.e., the portion of a word that is left after removing its prefixes and suffixes. The tool has options to stem Portuguese and English texts using the Porter algorithm (http://tartarus.org/~martin/PorterStemmer/).

• Term Selection: selects a small subset of terms for each document, providing a concise representation with little loss of information. This functionality is based on Zipf's law to identify the most important terms of the texts.

• Co-occurrence Network Construction: builds a co-occurrence network from terms extracted from each document. The network is built incrementally using an algorithm that dynamically estimates the pairs of frequent terms [4]. Thus, if two terms co-occur with high frequency during the text preprocessing, a new edge is added to connect the pair of terms in the co-occurrence network.

Through the functionalities described above, the Text Preprocessing module builds a co-occurrence network incrementally as new documents are inserted. The co-occurrence network is used as input for the Hierarchical Term Clustering module, as described in the next section.

2.2 Hierarchical Term Clustering

The aim of this module is to organize the co-occurrence network into clusters of terms, where terms from the same cluster are more similar to each other than to terms from different clusters. Torch has two options to compute the similarity between terms.

• Jaccard: the similarity is computed as the ratio between the number of documents in which the two terms occur together (intersection) and the number of different documents in which at least one of them occurs (union).

• SNN (Shared Nearest Neighbors): uses only the topology of the co-occurrence network and defines the similarity between a pair of terms according to their shared nearest neighbors.

After choosing a similarity measure, a hierarchical clustering algorithm is executed to construct a dendrogram with clusters of terms. The dendrogram is a binary tree that organizes the terms into clusters and subclusters. This process is illustrated in Figure 2, where the co-occurrence network (a) is processed, resulting in the dendrogram (b).

[Figure 2: Example of Hierarchical Term Clustering. (a) Co-occurrence network and (b) Dendrogram over the terms retrieval, systems, information, machine, learning, text, data, visual, mining, knowledge and discovery.]

The hierarchical clustering algorithm available in the Torch tool is an algorithm for online clustering of terms [4]. This algorithm allows a fast reconstruction of the dendrogram as the co-occurrence network changes and, therefore, the update process can be performed in an incremental way.
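As a rough illustration of the two term-similarity options of Section 2.2, the sketch below computes a Jaccard similarity from the sets of documents in which each term occurs, and a shared-neighbor count from the adjacency of a co-occurrence network. It is not taken from the Torch source code: the function names `jaccard` and `shared_neighbors`, the dictionaries `docs_by_term` and `network`, and the toy data are all hypothetical, and using a plain count of common neighbors for SNN is an assumption about how "shared nearest neighbors" might be scored.

```python
def jaccard(term_a, term_b, docs_by_term):
    """Jaccard similarity between two terms.

    docs_by_term (hypothetical structure) maps each term to the set of
    ids of the documents in which the term occurs.
    """
    docs_a = docs_by_term.get(term_a, set())
    docs_b = docs_by_term.get(term_b, set())
    union = docs_a | docs_b
    if not union:
        return 0.0  # neither term occurs in any document
    return len(docs_a & docs_b) / len(union)


def shared_neighbors(term_a, term_b, network):
    """Number of neighbors that two terms have in common.

    network (hypothetical structure) maps each term to the set of terms
    it is connected to in the co-occurrence network. Only the topology
    is used; document frequencies play no role here. Scoring SNN as a
    raw count is an assumption made for this sketch.
    """
    return len(network.get(term_a, set()) & network.get(term_b, set()))


# Toy data, invented for illustration only.
docs_by_term = {
    "data": {1, 2, 3},
    "mining": {2, 3, 4},
    "text": {3, 4},
    "retrieval": {5},
}

network = {
    "data": {"mining", "text"},
    "mining": {"data", "text", "knowledge"},
    "text": {"data", "mining"},
    "knowledge": {"mining", "discovery"},
    "discovery": {"knowledge"},
}

# "data" and "mining" share documents 2 and 3 out of 4 distinct documents.
print(jaccard("data", "mining", docs_by_term))      # 0.5
# "data" and "mining" are both connected to "text".
print(shared_neighbors("data", "mining", network))  # 1
```

Either function could serve as the pairwise similarity fed to a hierarchical clustering routine. The Jaccard variant needs the term-to-document index, whereas the shared-neighbor variant works from the network alone.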

2.3 Discovering Topic Hierarchies

The dendrogram obtained from the previous module is used as the basis for building a topic hierarchy. This process involves the execution of two steps.

• Documents mapping: the clusters of terms of the dendrogram should be associated with sets of documents. This mapping is performed by retrieving all documents that contain the terms of a given cluster. Thus, it is possible to obtain sets of documents with terms (labels) identifying the topics of the textual collection.

• Topics overlapping: since a document may be associated with more than one cluster of terms during "Documents mapping", the multi-topic property is obtained naturally. However, some topics may be redundant because they can cover the same set of documents. Thus, the final topic hierarchy is constructed by combining the topics of the same level of the hierarchy according to the equation $|T_i \cap T_j| / |T_i \cup T_j| \leq \alpha$, where $|T_i|$ is the number of documents in the topic $T_i$ and $\alpha$ is a value that defines the level of overlap.

After performing these steps, a topic hierarchy is available for users and applications. Torch uses an XML format for its output, since it is easy to understand and can be used in various applications.

It is important to note that the requirements discussed above have been met. Thus, the building of the topic hierarchies is performed incrementally and, moreover, documents can belong to more than one topic.

3. EXPERIMENT: BUILDING TOPIC HIERARCHIES FOR DIGITAL LIBRARIES

In order to illustrate the main functionalities of the Torch tool, we carried out a simple controlled experiment with the aim of supporting the construction of digital libraries. In our experiments we used eight different real text collections obtained from ACM's digital library. Each text collection contains about 500 articles about computer science and is organized into 40 predefined topics catalogued according to the ACM library. The aim of the experiment is to obtain a topic hierarchy from the Torch tool and compare it with the predefined topics. During this process, we inserted one article at a time to assess the incremental effect and to simulate a scenario involving growing text collections. The results were evaluated by the FScore index, which compares the topic hierarchy and the predefined topics using precision and recall measures.

[Figure 3: FScore values obtained in the experiment. Vertical axis: FScore Index (0.00 to 0.90). Horizontal axis: Text Collections (ACM1 to ACM8).]

Figure 3 shows the graph with the FScore values obtained from each text collection. The highest FScore value obtained is 0.79 while the lowest FScore is 0.63, indicating a good relationship between the topic hierarchy and predefined topics. Note that a perfect fit would have produced an FScore index of 1.0.

Some of the results are presented in Figure 4. In this figure, the left column is the predetermined topic according to ACM and the right column is the topic obtained using Torch for the same set of documents.

ACM Topic                Topics obtained using the Torch
VirtualReality           virtual, environ, realiti, augment
SoftwareReusability      softwar, reus, compon
MobileMultimedia         wireless, network, node
SoftwareEngine           test, case, studi, program, execut, suit
DistributedSimulation    simul, distribut, parallel
WebIntelligence          web, page, semant, ontolog, concept, relat, document

Figure 4: Examples of topics obtained using the Torch

The results indicate that Torch can effectively help users to build topic hierarchies from growing text collections. For example, the topic hierarchy obtained can be used as a first structure to be improved by the user or can also be used as metadata in information retrieval systems.

4. CONCLUDING REMARKS

In this paper we presented an overview of the Torch tool, developed with the goal of building topic hierarchies from growing text collections. The architecture of the tool and its main functionalities were described, and we discussed how the tool meets the requirements: incremental clustering, understandable cluster labels, and topics overlapping.

A simple controlled experiment was carried out to illustrate the functionalities of the tool in the construction of digital libraries. In this experiment, we identified and evaluated the main topics of eight text collections.

The Torch tool and text collections are available online at: http://labic.icmc.usp.br/softwares-and-application-tools/.

In the future, we plan to improve the Torch tool by including new similarity measures between terms. Furthermore, we intend to investigate visualization techniques for topic hierarchies to facilitate analysis of results.

5. REFERENCES

[1] M. J. A. Berry and J. Kogan. Text Mining: Applications and Theory. Wiley, West Sussex, 2010.

[2] B. C. M. Fung, K. Wang, and M. Ester. Encyclopedia of data warehousing and mining, volume 1, chapter Hierarchical Document Clustering, pages 555–560. Information Science Reference - IGI Publishing, 2008.

[3] R. M. Marcacini, M. F. Moura, and S. O. Rezende. IFM's Digital Library: an application for organizing information using hierarchical clustering (In Portuguese). In III Workshop on Digital Libraries (WDL), XIII Webmedia, pages 1–8, 2007.

[4] R. M. Marcacini and S. O. Rezende. Incremental construction of topic hierarchies using hierarchical term clustering. In Proceedings of the 22nd International Conference on Software Engineering and Knowledge Engineering (SEKE), pages 553–558. KSI - Knowledge Systems Institute, 2010.

[5] M. F. Moura, R. M. Marcacini, B. M. Nogueira, M. S. Conrado, and S. O. Rezende. A proposal for building domain topic taxonomies. In Proceedings of 1st International Workshop on Web and Text Intelligence (WTI), XIX SBIA, pages 83–84, 2008.

Das könnte Ihnen auch gefallen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- CS772 Lec7Dokument13 SeitenCS772 Lec7juggernautjhaNoch keine Bewertungen

- Mech Vibration Intro - RahulDokument36 SeitenMech Vibration Intro - Rahulrs100788Noch keine Bewertungen

- Specifications: FANUC Series 16/18-MB/TB/MC/TC FANUC Series 16Dokument15 SeitenSpecifications: FANUC Series 16/18-MB/TB/MC/TC FANUC Series 16avalente112Noch keine Bewertungen

- Research Methods I FinalDokument9 SeitenResearch Methods I Finalapi-284318339Noch keine Bewertungen

- Paper 14Dokument19 SeitenPaper 14RakeshconclaveNoch keine Bewertungen

- Inverse Functions Code PuzzleDokument3 SeitenInverse Functions Code PuzzleDarrenPurtillWrightNoch keine Bewertungen

- CIV 413 IntroductionDokument61 SeitenCIV 413 IntroductionحمدةالنهديةNoch keine Bewertungen

- State Estimation Using Kalman FilterDokument32 SeitenState Estimation Using Kalman FilterViraj ChaudharyNoch keine Bewertungen

- 4pm1 02r Que 20230121Dokument36 Seiten4pm1 02r Que 20230121M.A. HassanNoch keine Bewertungen

- Quantitative - Methods Course TextDokument608 SeitenQuantitative - Methods Course TextJermaine RNoch keine Bewertungen

- 5th MathsDokument6 Seiten5th MathsMaricruz CuevasNoch keine Bewertungen

- Projectiles 2021Dokument25 SeitenProjectiles 2021Praveen KiskuNoch keine Bewertungen

- Geo Experiments Final VersionDokument8 SeitenGeo Experiments Final VersionmanuelomanueloNoch keine Bewertungen

- Planning Plant ProductionDokument10 SeitenPlanning Plant ProductionjokoNoch keine Bewertungen

- Equilibrium II: Physics 106 Lecture 8Dokument20 SeitenEquilibrium II: Physics 106 Lecture 8riyantrin_552787272Noch keine Bewertungen

- TIPERs Sensemaking Tasks For Introductory Physics 1st Edition Hieggelke Solutions Manual DownloadDokument167 SeitenTIPERs Sensemaking Tasks For Introductory Physics 1st Edition Hieggelke Solutions Manual DownloadWilliams Stjohn100% (24)

- (Biomedical Engineering) : BM: Biosciences and BioengineeringDokument22 Seiten(Biomedical Engineering) : BM: Biosciences and BioengineeringDevasyaNoch keine Bewertungen

- 5950 2019 Assignment 7 Spearman RhoDokument1 Seite5950 2019 Assignment 7 Spearman RhoNafis SolehNoch keine Bewertungen

- Bahan Reading Hydraulic PneumaticDokument6 SeitenBahan Reading Hydraulic PneumaticAhmad HaritsNoch keine Bewertungen

- Cryptool LabDokument11 SeitenCryptool Labimbo9Noch keine Bewertungen

- Soil Mechanics Laboratory Report Lab #2 - Atterberg LimitsDokument4 SeitenSoil Mechanics Laboratory Report Lab #2 - Atterberg LimitsAndrew ChapmanNoch keine Bewertungen

- 1 Density and Relative DensityDokument32 Seiten1 Density and Relative DensityOral NeedNoch keine Bewertungen

- Multiple Choice - Circle The Correct ResponseDokument7 SeitenMultiple Choice - Circle The Correct ResponsesharethefilesNoch keine Bewertungen

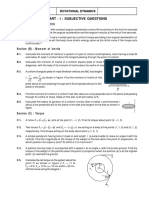

- CPP1 RotationaldynamicsDokument14 SeitenCPP1 RotationaldynamicsBNoch keine Bewertungen

- Contrast Weighting Glascher Gitelman 2008Dokument12 SeitenContrast Weighting Glascher Gitelman 2008danxalreadyNoch keine Bewertungen

- 2.2.2.5 Lab - Basic Data AnalyticsDokument7 Seiten2.2.2.5 Lab - Basic Data Analyticsabdulaziz doroNoch keine Bewertungen

- My Courses: Home UGRD-GE6114-2113T Week 6-7: Linear Programming & Problem Solving Strategies Midterm Quiz 1Dokument3 SeitenMy Courses: Home UGRD-GE6114-2113T Week 6-7: Linear Programming & Problem Solving Strategies Midterm Quiz 1Miguel Angelo GarciaNoch keine Bewertungen

- MiniZinc - Tutorial PDFDokument82 SeitenMiniZinc - Tutorial PDFDavidNoch keine Bewertungen

- Chapter 3: Continuous Random Variables: Nguyễn Thị Thu ThủyDokument69 SeitenChapter 3: Continuous Random Variables: Nguyễn Thị Thu ThủyThanh NgânNoch keine Bewertungen

- Math 2701 - Week 1 - Simple and Compound InterestDokument28 SeitenMath 2701 - Week 1 - Simple and Compound InterestjasonNoch keine Bewertungen