
Project2 NN Digit Classification Brief Updated PDF

Uploaded by John Prashanth


Project 2:

The Real Problem

Recognizing multi-digit numbers in photographs captured at street level is an important component of modern-day map making. A classic example of a corpus of such street-level photographs is Google’s Street View imagery, which comprises hundreds of millions of geo-located 360-degree panoramic images. The ability to automatically transcribe an address number from a geo-located patch of pixels and associate the transcribed number with a known street address helps pinpoint, with a high degree of accuracy, the location of the building it represents.

More broadly, recognizing numbers in photographs is a problem of interest to the optical character recognition (OCR) community. While OCR on constrained domains like document processing is well studied, arbitrary multi-character text recognition in photographs is still highly challenging. The difficulty arises from the wide variability in the visual appearance of text in the wild: a large range of fonts, colors, styles, orientations, and character arrangements. The recognition problem is further complicated by environmental factors such as lighting, shadows, specularities, and occlusions, as well as by image acquisition factors such as resolution, motion blur, and focus blur.

In this project, we will use a version of the dataset in which each image is centered on a single digit (many of the images also contain some distractors at the sides). Although this sample of the data is simpler than the full multi-digit problem, it is more complex than MNIST because of the distractors.

The Street View House Numbers (SVHN) Dataset

SVHN is a real-world image dataset for developing machine learning and object recognition algorithms with minimal requirements on data formatting. It comes from a significantly harder, unsolved, real-world problem: recognizing digits and numbers in natural scene images. SVHN is obtained from house numbers in Google Street View images.

Link to the dataset:

https://drive.google.com/file/d/1L2-WXzguhUsCArrFUc8EEkXcj33pahoS/view?usp=sharing

Code to load the dataset:
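The loading code itself is not reproduced in this brief, so the following is only a minimal sketch under stated assumptions. It assumes the linked file is an HDF5 archive readable with the h5py package, and that the arrays are stored under whatever key names you pass in; the key names, the file format, the 0 to 255 pixel range, and the helper names `load_h5_arrays` and `prepare` are all hypothetical and should be checked against the actual download.

```python
import numpy as np


def load_h5_arrays(path, keys):
    """Read the named arrays from an HDF5 file into memory.

    Assumption: the dataset file is HDF5 and `keys` matches the names
    stored inside it (both are guesses, not stated in the brief).
    """
    import h5py  # imported lazily so the rest of the sketch needs only NumPy

    with h5py.File(path, "r") as f:
        return {k: f[k][:] for k in keys}


def prepare(X, y):
    """Flatten each image to a vector and scale pixels to [0, 1].

    Assumption: pixel values lie in 0..255 and labels are integers 0..9.
    """
    X_flat = X.reshape(len(X), -1).astype(np.float32) / 255.0
    y_int = np.asarray(y).astype(np.int64)
    return X_flat, y_int
```

With the real file in hand, one would call `load_h5_arrays` with the keys found in the file and pass each split through `prepare` separately, so that the test split never influences any preprocessing decision.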

Acknowledgment for the dataset:

Yuval Netzer, Tao Wang, Adam Coates, Alessandro Bissacco, Bo Wu, Andrew Y. Ng. Reading Digits in Natural Images with Unsupervised Feature Learning. NIPS Workshop on Deep Learning and Unsupervised Feature Learning, 2011.

Dataset site: http://ufldl.stanford.edu/housenumbers

The objective of the project is to learn how to implement a simple image classification pipeline

based on a deep neural network. The goals of this project are as follows:

● Understand the basic image classification pipeline and the data-driven approach (train/predict stages)

● Fetch the data and understand the train/test splits (5 points)

● Implement and apply a deep neural network classifier including (15 points)

● Implement batch normalization for training the neural network (5 points)

● Print the classification accuracy metrics (10 points)
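The goals above can be sketched end to end in plain NumPy. This is an illustrative sketch, not the reference solution: the class name `BatchNormMLP`, the single hidden layer, the learning rate, and the helper functions are all assumptions chosen for brevity, and a real submission would likely stack more layers or use a deep learning framework. It shows a forward pass with batch normalization (batch statistics during training, running statistics at prediction time), the matching backward pass, and the accuracy metrics.

```python
import numpy as np


class BatchNormMLP:
    """Linear -> BatchNorm -> ReLU -> Linear -> softmax, trained with plain SGD."""

    def __init__(self, n_in, n_hidden, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        # He initialization; no bias before batch norm because beta plays that role.
        self.W1 = rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_hidden))
        self.gamma, self.beta = np.ones(n_hidden), np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, np.sqrt(2.0 / n_hidden), (n_hidden, n_classes))
        self.b2 = np.zeros(n_classes)
        # Running statistics replace batch statistics at prediction time.
        self.run_mean, self.run_var = np.zeros(n_hidden), np.ones(n_hidden)
        self.eps, self.momentum = 1e-5, 0.9

    def forward(self, X, train):
        z = X @ self.W1
        if train:
            mu, var = z.mean(axis=0), z.var(axis=0)
            m = self.momentum
            self.run_mean = m * self.run_mean + (1 - m) * mu
            self.run_var = m * self.run_var + (1 - m) * var
        else:
            mu, var = self.run_mean, self.run_var
        inv_std = 1.0 / np.sqrt(var + self.eps)
        z_hat = (z - mu) * inv_std                      # normalized activations
        a = np.maximum(0.0, self.gamma * z_hat + self.beta)
        logits = a @ self.W2 + self.b2
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        self.cache = (z_hat, inv_std, a)
        return probs

    def train_step(self, X, y, lr=0.1):
        """One SGD step on a batch; y holds integer class labels."""
        n = len(X)
        probs = self.forward(X, train=True)
        z_hat, inv_std, a = self.cache
        loss = -np.log(probs[np.arange(n), y] + 1e-12).mean()
        # Gradient of mean cross-entropy with respect to the logits.
        d_logits = probs.copy()
        d_logits[np.arange(n), y] -= 1.0
        d_logits /= n
        dW2, db2 = a.T @ d_logits, d_logits.sum(axis=0)
        d_bn = (d_logits @ self.W2.T) * (a > 0)         # back through ReLU
        dgamma, dbeta = (d_bn * z_hat).sum(axis=0), d_bn.sum(axis=0)
        # Back through the normalization (batch mean and variance depend on z).
        d_zhat = d_bn * self.gamma
        dz = inv_std / n * (n * d_zhat - d_zhat.sum(axis=0)
                            - z_hat * (d_zhat * z_hat).sum(axis=0))
        dW1 = X.T @ dz
        for p, g in ((self.W1, dW1), (self.gamma, dgamma), (self.beta, dbeta),
                     (self.W2, dW2), (self.b2, db2)):
            p -= lr * g                                 # in-place parameter update
        return loss

    def predict(self, X):
        return self.forward(X, train=False).argmax(axis=1)


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return float((np.asarray(y_true) == np.asarray(y_pred)).mean())


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm
```

In use, one would create `BatchNormMLP(n_in, n_hidden, 10)` for the ten digit classes, call `train_step` repeatedly on mini-batches of the training split, and then report `accuracy` and `confusion_matrix` on the predictions for the held-out test split only.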

Happy Learning!

Das könnte Ihnen auch gefallen

- More Terrain For The Ogre KingdomsDokument13 SeitenMore Terrain For The Ogre KingdomsAndy Kirkwood100% (2)

- Wedding WorkbookDokument14 SeitenWedding WorkbookRideforlifeNoch keine Bewertungen

- Kanu Behl's Titli - ScriptDokument94 SeitenKanu Behl's Titli - Scriptmoifightclub69% (13)

- Real Time Object Detection Using SSD and MobileNetDokument6 SeitenReal Time Object Detection Using SSD and MobileNetIJRASETPublicationsNoch keine Bewertungen

- 20 Photoshop Retouching TipsDokument196 Seiten20 Photoshop Retouching TipsAyhan Benlioğlu100% (1)

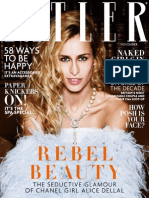

- Tatler - November 2014 UKDokument270 SeitenTatler - November 2014 UKIrina Nechita100% (1)

- Deep Collaborative Learning Approach Over Object RecognitionDokument5 SeitenDeep Collaborative Learning Approach Over Object RecognitionIjsrnet EditorialNoch keine Bewertungen

- NN Project2 Brief PDFDokument5 SeitenNN Project2 Brief PDFbharathi0% (1)

- Neural Network Exercise PDFDokument5 SeitenNeural Network Exercise PDFbharathiNoch keine Bewertungen

- SR22804211151Dokument8 SeitenSR22804211151Paréto BessanhNoch keine Bewertungen

- Multi-Digit Number Recognition From Street View Imagery Using Deep Convolutional Neural NetworksDokument12 SeitenMulti-Digit Number Recognition From Street View Imagery Using Deep Convolutional Neural NetworksSalvation TivaNoch keine Bewertungen

- Content Based Image RetrievalDokument18 SeitenContent Based Image RetrievalDeepaDunderNoch keine Bewertungen

- Two-Stage Generative Adversarial Networks For Docu PDFDokument30 SeitenTwo-Stage Generative Adversarial Networks For Docu PDFbroNoch keine Bewertungen

- Satellite Image Processing Research PapersDokument6 SeitenSatellite Image Processing Research Papersgz49w5xr100% (1)

- Robio 2012 6491076Dokument6 SeitenRobio 2012 6491076rohit mittalNoch keine Bewertungen

- Synopsis of Real Time Security System: Submitted in Partial Fulfillment of The Requirements For The Award ofDokument6 SeitenSynopsis of Real Time Security System: Submitted in Partial Fulfillment of The Requirements For The Award ofShanu Naval SinghNoch keine Bewertungen

- Graphics Ass GnmentDokument5 SeitenGraphics Ass GnmenttinoNoch keine Bewertungen

- Jiang 2021Dokument11 SeitenJiang 2021Aadith Thillai Arasu SNoch keine Bewertungen

- Image-Based Indoor Localization Using Smartphone CameraDokument9 SeitenImage-Based Indoor Localization Using Smartphone Cameratoufikenfissi1999Noch keine Bewertungen

- Project Computer GraphicsDokument18 SeitenProject Computer Graphicsvaku0% (2)

- Automated Student Attendance Using Facial Recognition Based On DRLBP &DRLDPDokument4 SeitenAutomated Student Attendance Using Facial Recognition Based On DRLBP &DRLDPDarshan PatilNoch keine Bewertungen

- Combining Semantic and Geometric Features For Object Class Segmentation of IndoorDokument5 SeitenCombining Semantic and Geometric Features For Object Class Segmentation of Indoorcoolguy0007Noch keine Bewertungen

- Digital Image Processing Full ReportDokument4 SeitenDigital Image Processing Full ReportLovepreet VirkNoch keine Bewertungen

- Information Extraction From Remotely Sensed ImagesDokument39 SeitenInformation Extraction From Remotely Sensed ImagesMAnohar KumarNoch keine Bewertungen

- Final Doc Cbir (Content Based Image Retrieval) in Matlab Using Gabor WaveletDokument69 SeitenFinal Doc Cbir (Content Based Image Retrieval) in Matlab Using Gabor WaveletSatyam Yadav100% (1)

- Handwritten Digit Recognition Using Machine Learning: Mahaboob Basha - SK, Vineetha.J, Sudheer.DDokument9 SeitenHandwritten Digit Recognition Using Machine Learning: Mahaboob Basha - SK, Vineetha.J, Sudheer.DSudheer DuggineniNoch keine Bewertungen

- Ref 1Dokument5 SeitenRef 1Arthi SNoch keine Bewertungen

- Fast Multiresolution Image Querying: Charles E. Jacobs Adam Finkelstein David H. SalesinDokument10 SeitenFast Multiresolution Image Querying: Charles E. Jacobs Adam Finkelstein David H. Salesinsexy katriNoch keine Bewertungen

- Artificial Intelligence Based Mobile RobotDokument19 SeitenArtificial Intelligence Based Mobile RobotIJRASETPublicationsNoch keine Bewertungen

- Human Action Recognition On Raw Depth MapsDokument13 SeitenHuman Action Recognition On Raw Depth Mapsjacekt89Noch keine Bewertungen

- Digital Image ProcessingDokument10 SeitenDigital Image ProcessingAkash SuryanNoch keine Bewertungen

- Duplicate-Search-Based Image Annotation Using Web-Scale DataDokument18 SeitenDuplicate-Search-Based Image Annotation Using Web-Scale Dataprayag pradhanNoch keine Bewertungen

- Free Research Papers On Digital Image ProcessingDokument5 SeitenFree Research Papers On Digital Image Processingkcjzgcsif100% (1)

- Thesis Based On Digital Image ProcessingDokument7 SeitenThesis Based On Digital Image Processingkristenwilsonpeoria100% (1)

- SC-YOLO A Object Detection Model For Small Traffic SignsDokument11 SeitenSC-YOLO A Object Detection Model For Small Traffic SignsthewildmusicworldNoch keine Bewertungen

- 3 D Point Cloud ClassificationDokument7 Seiten3 D Point Cloud ClassificationICRDET 2019Noch keine Bewertungen

- Duplicate Image Detection and Comparison Using Single Core, Multiprocessing, and MultithreadingDokument8 SeitenDuplicate Image Detection and Comparison Using Single Core, Multiprocessing, and MultithreadingInternational Journal of Innovative Science and Research TechnologyNoch keine Bewertungen

- Currency Recognition On Mobile Phones Proposed System ModulesDokument26 SeitenCurrency Recognition On Mobile Phones Proposed System Moduleshab_dsNoch keine Bewertungen

- Real-Time Semantic Slam With DCNN-based Feature Point Detection, Matching and Dense Point Cloud AggregationDokument6 SeitenReal-Time Semantic Slam With DCNN-based Feature Point Detection, Matching and Dense Point Cloud AggregationJUAN CAMILO TORRES MUNOZNoch keine Bewertungen

- Semantic Segmentation Algorithms For Aerial Image Analysis and ClassificationDokument2 SeitenSemantic Segmentation Algorithms For Aerial Image Analysis and ClassificationMarius_2010Noch keine Bewertungen

- Vanity Plate IdentificationDokument7 SeitenVanity Plate Identificationswathi8903422971Noch keine Bewertungen

- Satellite Image Processing Research PaperDokument4 SeitenSatellite Image Processing Research Paperaflbrpwan100% (3)

- Term Paper On Digital Image ProcessingDokument5 SeitenTerm Paper On Digital Image Processingafdtygyhk100% (1)

- A Voluminous Survey On Content Based Image Retrieval: AbstractDokument8 SeitenA Voluminous Survey On Content Based Image Retrieval: AbstractInternational Journal of Engineering and TechniquesNoch keine Bewertungen

- Image ProcessingDokument9 SeitenImage Processingnishu chaudharyNoch keine Bewertungen

- Remotesensing 11 01499Dokument29 SeitenRemotesensing 11 01499ibtissam.boumarafNoch keine Bewertungen

- Thesis On Image RetrievalDokument6 SeitenThesis On Image Retrievallanawetschsiouxfalls100% (2)

- Research Paper Topics Digital Image ProcessingDokument5 SeitenResearch Paper Topics Digital Image Processingefjr9yx3100% (1)

- Heritage Identification of MonumentsDokument8 SeitenHeritage Identification of MonumentsIJRASETPublicationsNoch keine Bewertungen

- 19BCS2675 AbhinavSinhaDokument67 Seiten19BCS2675 AbhinavSinhaLakshay ChoudharyNoch keine Bewertungen

- Thesis On Content Based Image RetrievalDokument7 SeitenThesis On Content Based Image Retrievalamberrodrigueznewhaven100% (2)

- International Journal of Engineering Research and Development (IJERD)Dokument8 SeitenInternational Journal of Engineering Research and Development (IJERD)IJERDNoch keine Bewertungen

- Edited IPDokument10 SeitenEdited IPdamarajuvarunNoch keine Bewertungen

- Reverse Image QueryingDokument8 SeitenReverse Image QueryingIJRASETPublicationsNoch keine Bewertungen

- Photo Model - 01Dokument10 SeitenPhoto Model - 01HulaimiNoch keine Bewertungen

- Deep Learning Based Object Recognition Using Physically-Realistic Synthetic Depth ScenesDokument21 SeitenDeep Learning Based Object Recognition Using Physically-Realistic Synthetic Depth ScenesAn HeNoch keine Bewertungen

- Using Grayscale Images For Object Recognition With Convolutional-Recursive Neural NetworkDokument5 SeitenUsing Grayscale Images For Object Recognition With Convolutional-Recursive Neural NetworkNugraha BintangNoch keine Bewertungen

- B.M.S College of Engineering: (Autonomous Institution Under VTU) Bangalore-560 019Dokument25 SeitenB.M.S College of Engineering: (Autonomous Institution Under VTU) Bangalore-560 019Aishwarya NaiduNoch keine Bewertungen

- NW ReportDokument76 SeitenNW ReportAshaNoch keine Bewertungen

- Research Paper On Image RetrievalDokument7 SeitenResearch Paper On Image Retrievalafmbvvkxy100% (1)

- Route Planning For Emergency Evacuation Using Graph Traversal AlgorithmsDokument18 SeitenRoute Planning For Emergency Evacuation Using Graph Traversal AlgorithmsJose BarbacoaNoch keine Bewertungen

- Semantic SegmentationDokument22 SeitenSemantic Segmentationnagwa AboEleneinNoch keine Bewertungen

- Image Segmentation Using Obia in Ecognition, Grass and OpticksDokument5 SeitenImage Segmentation Using Obia in Ecognition, Grass and OpticksDikky RestianNoch keine Bewertungen

- Real Time Object Detection Using Deep Learning Andmachine Learning ProjectDokument56 SeitenReal Time Object Detection Using Deep Learning Andmachine Learning ProjectShashwat srivastavaNoch keine Bewertungen

- Videoinformacionnaya Sistema VISERA ELITE IIDokument8 SeitenVideoinformacionnaya Sistema VISERA ELITE II郭小龙Noch keine Bewertungen

- Agisoft Metashape Change Log: Version 1.6.0 Build 9397 (21 October 2019, Preview Release)Dokument41 SeitenAgisoft Metashape Change Log: Version 1.6.0 Build 9397 (21 October 2019, Preview Release)haroladriano21Noch keine Bewertungen

- Radiology X-Rayfilm ScreensDokument39 SeitenRadiology X-Rayfilm ScreensFourthMolar.comNoch keine Bewertungen

- SureColor SC P800 DatasheetDokument2 SeitenSureColor SC P800 Datasheetlorez22Noch keine Bewertungen

- Better Homes and Other Fictions by Connie MaraanDokument13 SeitenBetter Homes and Other Fictions by Connie MaraanUST Publishing HouseNoch keine Bewertungen

- New Framework Level 2Dokument129 SeitenNew Framework Level 2eletrodavid86% (7)

- Garcia Salgado - Vermeer's The Music Lesson in Modular Perspective - Bridges2006-663Dokument2 SeitenGarcia Salgado - Vermeer's The Music Lesson in Modular Perspective - Bridges2006-663alfonso557Noch keine Bewertungen

- Pornography EssayDokument8 SeitenPornography EssayddianwNoch keine Bewertungen

- Manual Colorview IiiDokument82 SeitenManual Colorview IiiemadhsobhyNoch keine Bewertungen

- Altra20 enDokument40 SeitenAltra20 enemadhsobhyNoch keine Bewertungen

- DNA User Manual en PDFDokument158 SeitenDNA User Manual en PDFWilman Godofredo Avilés RomeroNoch keine Bewertungen

- CLS Aipmt 18 19 XII Che Study Package 5 SET 1 Chapter 5Dokument10 SeitenCLS Aipmt 18 19 XII Che Study Package 5 SET 1 Chapter 5Ûdây RäjpütNoch keine Bewertungen

- Autofocus CameraDokument54 SeitenAutofocus Cameraharisrauf1995Noch keine Bewertungen

- Teoria de SwitchgearDokument108 SeitenTeoria de SwitchgearMarcos GoianoNoch keine Bewertungen

- TKH Security Siqura bc822v2h3 AsDokument6 SeitenTKH Security Siqura bc822v2h3 AsEhsan RohaniNoch keine Bewertungen

- Kelcy Christy ResumeDokument1 SeiteKelcy Christy ResumekelcychristyNoch keine Bewertungen

- Short Film Analysis - SightDokument3 SeitenShort Film Analysis - Sightashleyhamilton970% (1)

- Project Memento Getting Started PDFDokument41 SeitenProject Memento Getting Started PDFManuelHernándezNoch keine Bewertungen

- Video Camera Recorder: CCD-TRV14E/TRV24E HDokument88 SeitenVideo Camera Recorder: CCD-TRV14E/TRV24E HIrfan Andi SuhadaNoch keine Bewertungen

- The University Murders-Richard MacAndrewDokument43 SeitenThe University Murders-Richard MacAndrewDavid Burgos E Irene del PozoNoch keine Bewertungen

- A Photograph (Shirley Toulson)Dokument15 SeitenA Photograph (Shirley Toulson)Tia LathNoch keine Bewertungen

- Why The Book Isn't Dead: An Essay On Ebooks and The Future of The Publishing IndustryDokument6 SeitenWhy The Book Isn't Dead: An Essay On Ebooks and The Future of The Publishing Industrytangiblepublications100% (2)

- 5222 SSDokument2 Seiten5222 SSWilliamCondeNoch keine Bewertungen

- Semi-Automatic Classification Plugin - TutorialDokument20 SeitenSemi-Automatic Classification Plugin - TutorialtoarunaNoch keine Bewertungen

- GearsjohannesDokument7 SeitenGearsjohannesapi-238276665Noch keine Bewertungen