TIBCO Spotfire® TERR Tutorial

A STEP-BY-STEP GUIDE

By Daniel Smith, Director of Data Science and Innovation

Syntelli Solutions Inc: TERR® Tutorial eBook 1

info@syntelli.com

Table of Contents

Note From The Author

Ch. 1 – Introduction to TIBCO Spotfire® TERR

Ch. 2 – How to Use R-Scripts in TIBCO Spotfire® TERR

Ch. 3 – An Example of R in TERR (Package “RinR”) Using the Census REST API

Ch. 4 – Passing Data From TERR into R in Spotfire®

Ch. 5 – Using TERR in RStudio


Note from the author

By Daniel Smith

I’ll admit that when I first started doing analytics, I didn’t know how to code a thing. I leaned heavily on tools like SPSS and JMP, which let me see all my data like a spreadsheet and then point and click to compare columns or add columns and factors to a model.

My idea of an “advanced” model was something I put together in a spreadsheet or pieced together with a calculator.

I knew there were more powerful analytical tools out there, but in the early 2000s the concept of a data scientist was immature at best and unknown at worst. The only community of programming statisticians with a low barrier to entry was SAS, and I had neither the financial means nor the inclination to become a SAS developer. So I turned to the only option for a broke analyst to become a not-so-broke statistician (as they were called at the time): the R language.

After lots of books and wading through obtuse forum posts, I managed to obtain a working knowledge of the language. My analytics ability, and my job opportunities, skyrocketed.

Now, lucky for us all, R is much more accessible: point-and-click tools like Rattle and R Commander extend the platform; quick-start guides are available online for free; and RStudio has become a mature IDE (no more command line!).

But many organizations are still reluctant to adopt R. It is open source, meaning it is developed by a community rather than by a single organization: there is no centralized documentation and no enterprise quality assurance. This leaves businesses to either A) invest in high-cost platforms like SAS or B) hold back on increasing their analytics maturity at the cost of competitive advantage.


Enter TERR, TIBCO® Enterprise Runtime for R.

TERR provides a well-documented R scripting environment designed by TIBCO®'s data science team. It also comes pre-installed in TIBCO’s Spotfire® Business Intelligence Platform, which lets raw R code integrate into Spotfire and lets you generate scripts procedurally through a point-and-click interface, if that’s your cup of tea.

I am no longer a big fan of point and click; I rarely find myself doing anything linear enough for a GUI to save time. However, if it hadn’t been for point-and-click tools, I never would have made the leap from junior analyst hack to R programming hack.

Although brief, this eBook follows a similar journey. We will begin by pointing out the built-in TERR features of Spotfire, discuss how to use custom TERR scripts within Spotfire, and then walk through some use cases demonstrating that TERR and R are not only for calculating statistics, but also for extracting and transforming data.

If you have any questions about this content, feel free to reach out at Daniel.Smith@Syntelli.com.


Chapter 1

INTRODUCTION TO TIBCO® SPOTFIRE® TERR

TIBCO® ENTERPRISE RUNTIME FOR R (“TERR”)

As mentioned previously, TIBCO Enterprise Runtime for R (TERR) is an enterprise-level statistical engine built upon the extremely powerful open source R language. It offers a best-of-both-worlds compromise between the enterprise need for process validation and documentation and the flexibility and affordability of open source.

Out of the box, Spotfire Analyst contains TERR. The only requirements are a connection to some data to analyze and the appropriate permissions.

Let’s start exploring TERR with the basics: a point-and-click linear regression. Every Spotfire installation should include a set of demo data in your library. I’ll be using the Baseball data set in this example. If you don’t have it, don’t worry; you can still follow along. It’s really simple!

I’m curious which other performance metrics are related to home runs, so I want to regress a few metrics, such as walks and hits, against home runs per player per season. But any good analyst knows to explore the data before messing around with a model.

Since we are pulling this data into Spotfire to start, exploring our data set is super simple. In a matter of seconds I can check out my data relationships. For this example I’m going to use hits and errors as predictors of home runs, so I want to see how each relates to the dependent variable and whether they share a relationship with each other.


As one would expect, hits are somewhat correlated with home runs, whereas errors are not really correlated with hits or HRs. This makes sense: errors occur on defense, while hits and home runs occur on offense.

Note:

There is a slight correlation between hits and errors, but not enough to derail this example. It is likely an artifact of the number of games played; we could probably control for it by dividing all our values by the number of games played. However, this example is about using TERR, not modeling best practices.
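If you want to reproduce that correlation check in a script rather than a scatter plot matrix, a minimal sketch with base R’s cor() is shown below. The numbers are made-up stand-ins, not the Spotfire Baseball demo data; only the column names HOME_RUNS, HITS, and ERRORS come from the example, and GAMES is a hypothetical column used to illustrate the per-game adjustment mentioned in the note.

```r
# Made-up stand-in data; NOT the Spotfire Baseball demo data set
baseball <- data.frame(
  HOME_RUNS = c(2, 15, 31, 8, 0, 22, 12, 5),
  HITS      = c(40, 120, 165, 90, 12, 150, 110, 70),
  ERRORS    = c(3, 9, 7, 12, 1, 5, 14, 6),
  GAMES     = c(30, 140, 155, 120, 15, 150, 145, 100)  # hypothetical column
)

vars <- c("HOME_RUNS", "HITS", "ERRORS")

# Pairwise Pearson correlations between the response and both predictors
raw_cor <- cor(baseball[, vars])
print(round(raw_cor, 2))

# Dividing every column by games played removes the shared
# "more games, more of everything" effect discussed in the note
per_game <- baseball[, vars] / baseball$GAMES
per_game_cor <- cor(per_game)
print(round(per_game_cor, 2))
```

Comparing the two matrices shows whether the hits/errors correlation shrinks once playing time is accounted for.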


I want to fit a linear model, so I also need to check the distributions. A quick-and-dirty histogram shows a likely exponential distribution for home runs and errors, but a fairly normal distribution for hits. Nevertheless, I’m going to add the values to my model “as is” for explanatory purposes.


For a linear regression, go to Tools > Regression Modeling…

Here you also see all the other out-of-the-box statistical capabilities Spotfire offers. All of these use TERR to provide insights.

In our regression model dialog box, we see a few options:


Feel free to explore other model options, but here we are concerned with making a linear model. Spotfire uses the terms Response (i.e., dependent or outcome) and Predictor (i.e., independent) variables for model creation.

Notice that an equation is constructed at the bottom of the window. This equation is the generated TERR code! It is fully editable in the dialog box, which is great for transformations and custom models.

Let’s run our simple model. Select HOME_RUNS as the Response, then highlight “HITS” and click “Add”, and highlight “ERRORS” and click “Add”.

Then click OK and we have our first linear regression!

HOME_RUNS ~ HITS + ERRORS
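Outside the dialog box, the same formula can be fitted with base R’s lm(), which TERR also provides. A minimal sketch on made-up stand-in data (not the Baseball demo data set), including the Residuals vs. Fitted plot discussed next:

```r
# Made-up stand-in data; NOT the Spotfire Baseball demo data set
baseball <- data.frame(
  HOME_RUNS = c(2, 15, 31, 8, 0, 22, 12, 5),
  HITS      = c(40, 120, 165, 90, 12, 150, 110, 70),
  ERRORS    = c(3, 9, 7, 12, 1, 5, 14, 6)
)

# Same model formula Spotfire builds in the dialog box
fit <- lm(HOME_RUNS ~ HITS + ERRORS, data = baseball)

# Coefficients, standard errors, R-squared, and p-values
print(summary(fit))

# which = 1 draws the Residuals vs. Fitted diagnostic plot
plot(fit, which = 1)
```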


Now Spotfire and TERR have done a lot of our model validation for us. There are even a number of diagnostic visualizations already created, but by and large I use the Residuals vs. Fitted plot exclusively at this stage.

In this case, we see a classic residual pattern of exponential error distribution, which suggests a natural log transformation of our dependent variable. We also noticed that ERRORS follows a similar distribution, so we’ll go ahead and transform it too.

Edit your model by clicking the little calculator (or is it a table?) icon next to “Model Summary”.

If you don’t know the syntax, Spotfire makes it easy to do the most common transformations. You only need to select “Log: log(x)” from the drop-down below your Predictor column before you add it to the model.


Just keep in mind that “Log” in R and TERR means the natural log, while “Log 10” is the base-10 log you would see as “Log” on a calculator. This gives us the following equation, and Spotfire produces the associated model output:

log(HOME_RUNS) ~ HITS + log(ERRORS)
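One practical wrinkle: log(0) is -Inf, and lm() stops with an error when the response contains infinite values, so players with zero home runs (or zero errors) break this model. Below is a minimal sketch of two common workarounds, on made-up data: dropping the zero rows, or using log1p(), which computes log(1 + x). Which one is appropriate is a modeling decision; neither is something Spotfire picks for you.

```r
# Made-up stand-in data; NOT the Spotfire Baseball demo data set
baseball <- data.frame(
  HOME_RUNS = c(2, 15, 31, 8, 0, 22, 12, 5),
  HITS      = c(40, 120, 165, 90, 12, 150, 110, 70),
  ERRORS    = c(3, 9, 7, 12, 1, 5, 14, 6)
)

# log(0) is -Inf, which lm() rejects in the response
print(log(0))

# Option 1: keep only rows where both logged columns are positive
positive <- subset(baseball, HOME_RUNS > 0 & ERRORS > 0)
fit_log  <- lm(log(HOME_RUNS) ~ HITS + log(ERRORS), data = positive)

# Option 2: log1p(x) = log(1 + x) is finite at zero, so no rows are dropped
fit_log1p <- lm(log1p(HOME_RUNS) ~ HITS + log1p(ERRORS), data = baseball)

print(coef(fit_log))
print(coef(fit_log1p))
```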

Finally, if we want to explore the interaction between our two predictors, we only need to change the “plus” to an asterisk in our model equation:

log(HOME_RUNS) ~ HITS * log(ERRORS)

In TERR, as in R, this expands to HITS + log(ERRORS) + HITS:log(ERRORS).
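You can confirm that expansion with base R’s terms(), which works on a formula alone, without any data or model fitting:

```r
f <- log(HOME_RUNS) ~ HITS * log(ERRORS)

# "term.labels" lists the right-hand-side terms after the * is expanded
labels <- attr(terms(f), "term.labels")
print(labels)
```

The colon term is the interaction itself; the asterisk is shorthand for both main effects plus that interaction.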


Although the point-and-click functionality is nice, to really use the power of TERR in Spotfire you’ll need to start making your own data functions. In this example I’m going to make a very simple normalization function: I take an individual value in a column, subtract the column mean from it, and then divide the result by the column standard deviation.

To make a custom function, go to “Tools” > “Register Data Functions…”

We will name our script “Normalization”, select the Type as “R script – TIBCO® Enterprise Runtime for R”, and enter the following for our script:

output <- (column1 - mean(column1)) / sd(column1)
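Before registering the script, you can check it in any R session. This sketch wraps the same line in a hypothetical helper, normalize(), and adds an optional na.rm switch. That switch is an assumption, not part of the original script: without it, mean() and sd() return NA as soon as the column contains a single missing value. The result is compared with base R’s scale(), which performs the same centering and scaling.

```r
# Hypothetical helper wrapping the data function's one-line script
normalize <- function(column1, na.rm = FALSE) {
  # Subtract the column mean, then divide by the column standard deviation
  (column1 - mean(column1, na.rm = na.rm)) / sd(column1, na.rm = na.rm)
}

x <- c(2, 15, 31, 8, 0, 22, 12, 5)
output <- normalize(x)

# scale() returns a one-column matrix, so drop its attributes before comparing
same_as_scale <- all.equal(output, as.vector(scale(x)))
print(same_as_scale)

# With a missing value, na.rm = TRUE keeps the other values usable
print(normalize(c(1, NA, 3), na.rm = TRUE))
```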


Now go to the “Input Parameters” tab to tell Spotfire what we want to pass into the script. Select “Add…” to add an input parameter.

The two most important items here are the “Input parameter name” field and the “Type” drop-down. The input parameter name tells Spotfire which variable in our script should receive our data. In this example it is column1, the variable used in the script:

output <- (column1 - mean(column1)) / sd(column1)

“Type” tells Spotfire whether this parameter is a single value, a column from a table, or an entire table. For those familiar with R, these correspond to values, vectors, and data frames (or matrices), respectively.
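A small sketch of what those three types look like from inside a script. The parameter names digits and table1 are hypothetical (only column1 comes from the example), and applying the normalization column by column is one reasonable way to handle a Table input, not something Spotfire requires.

```r
# Hypothetical inputs mirroring the three Spotfire parameter types
digits  <- 2                                           # Value  -> length-1 vector
column1 <- c(10, 12, 9, 15)                            # Column -> vector
table1  <- data.frame(a = c(1, 2, 3), b = c(4, 6, 8))  # Table  -> data frame

normalize <- function(x) (x - mean(x)) / sd(x)

# A Column input can be normalized directly
col_out <- round(normalize(column1), digits)

# A Table input needs the function applied to each column separately
table_out <- as.data.frame(lapply(table1, function(x) round(normalize(x), digits)))

print(col_out)
print(table_out)
```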

The output parameter tells Spotfire which value the function will return to Spotfire.


Just like our input parameter, the important parts are the “Result parameter name” and “Type”. The result parameter name corresponds to the data we want to extract from the function. Here it is output, the variable assigned in the script:

output <- (column1 - mean(column1)) / sd(column1)

Now that the script is defined and our input and output parameters are linked to it, it is time to run the function!

Save the function if you like (you’ll have to be connected to a Spotfire server, as data functions are saved in the library) and click “Run”.

Before the function completely executes, you will need to complete the handshake between the script parameters and the Spotfire application: tell the function which Spotfire data to pass into the input parameter and where to put the data from the output parameter.


On the Input tab, I specify via the input handler that I want to use a column; that column comes from the data table “Baseball Information Link”, and the column name is HOME_RUNS.

Next, I do the same on the Output tab, specifying via the output handler that I want to add a column to the data table “Baseball Information Link”.

Click OK and you should see a new column in your data table!


Next Steps

This is just scratching the surface of TERR capabilities. TIBCO® has copious documentation on what you can do. Here are some resources to get you started:

Parallel Processing: https://docs.tibco.com/pub/enterprise-runtime-for-R/2.5.0/doc/html/parallel/parallel-package.html

Library of Statistical Functions: https://docs.tibco.com/pub/enterprise-runtime-for-R/2.5.0/doc/html/stats/00Index.html

Run SQL directly in TERR with an installed JDBC driver: https://docs.tibco.com/pub/enterprise-runtime-for-R/2.5.0/doc/html/sjdbc/executeJDBC.html

The next chapters focus on functionality that may not be obvious in TERR, such as pulling from an API, using R inside TERR, and developing your TERR scripts inside the RStudio IDE. All of them build upon the concepts of getting data into and out of Spotfire. If you are not completely confident in the Spotfire TERR data flow, there is a brief review in the next chapter.


Chapter 2

HOW TO USE R-SCRIPTS IN TIBCO SPOTFIRE® TERR

AN EXAMPLE OF R IN TERR (PACKAGE “RINR”) USING THE CENSUS REST API

In this chapter we will walk through an example of acquiring data from the US Census RESTful web service API (Application Programming Interface) via URL parameters. In simple terms, a RESTful URL lets you pass parameters to an online data source, which returns the data those parameters specify. Using a RESTful source gives you access to data without having to store it locally in a database or flat file.

Please note, I will refer to the Census web service as the “Census API” for much of this chapter; however, at times I may say “Census URL”, particularly when speaking of the parameters required in the URL. For clarity, the Census URL is the “Interface” portion of the Census Application Programming Interface.

Click here to learn more about REST

The full Census API to Spotfire process consists of four parts:

1) Installing R alongside TERR on your local machine
2) Adding new packages to your R instance
3) Manipulating the Census Data API via R
4) Learning how to access R within TERR using the TERR package “RinR”

This chapter will go over parts one and two.

But first, a warning: this solution works only on a local machine running TERR and R. It does not address using the Statistics Service or configuring analyses for online consumers.

With that out of the way, let’s install R!


INSTALLING R ON YOUR LOCAL MACHINE

Installing R starts with downloading the installer from CRAN, the Comprehensive R Archive Network, through which R is made available. This is arguably the most confusing step in installing R: finding what you need to download! To make things easy, here’s the download location for Windows users. Other users can navigate to the download here.

While CRAN does provide installation instructions, you’ll find these few short steps most helpful:

1. Download and run the R installer
2. Select the installation directory; the default is C:\Program Files\R\<version number>
3. Select whether you want both the 32- and 64-bit installs or just one (R will figure out which one you need in most cases)
4. Select start-up options (this just customizes how the default R GUI displays; it does not impact performance)
5. Select your preferred Start menu and desktop options
6. Install

You now have R installed!


There Are Several Ways to Access R

1. Original console / command line / terminal interface (R.exe)

R was initially entered line by line, and that option remains today. Assuming you installed R in the default directory, you can find the R Console here: C:\Program Files\R\R-3.0.2\bin. Simply double-click R.exe to open the Console. It looks just like a command-line interface in Windows or a Terminal in UNIX.

2. R GUI (Rgui.exe)

R also comes packaged with a basic GUI application. There are 32- and 64-bit versions, found in the i386 and x64 folders inside the same “bin” folder that contains R.exe, and both are named Rgui.exe. The R GUI is also the application that opens when you select R from your Start menu or desktop, if you chose to have those shortcuts created when installing R.

3. Third-party applications

There are many different applications that use R (like Spotfire!); however, creating complex scripts in Spotfire’s interface can be difficult, almost as difficult as using R’s command-line interface. Several third-party applications therefore exist to make your R coding experience much easier. We will not go into these here, but Chapter 5 goes in depth on setting up RStudio to program in both R and TERR.

Now you’ve installed R and you can open it, so let’s use it!


Let’s open our R GUI application. You should be able to find it in your Start menu. If it’s not there, check your R “bin” folder, C:\Program Files\R\R-3.0.2\bin\<x64 or i386>, for the file called Rgui.exe.

See the image below for some help if this is your first time using R:

All you get initially is a fancy version of the R Console. The red cursor at the bottom is where you enter commands, so let’s enter one.

First, to verify R is working, let’s try something simple. Type 2+2 and hit Enter. It should return 4 (R prints it as [1] 4).

Click here for an excellent tutorial and explanation on R functionality

and commands.


Now that we have confirmed R is working, it is time to extend its functionality. To get the most out of R, we need to install packages. In this example, we are installing the jsonlite package (R is case sensitive) to extract and transform JSON data from the Census API.

Installing packages is a relatively painless process. It is as simple as executing install.packages("<packagename>") if you know the exact name and case of your package. You can also select Packages > Install package(s)… from your R GUI for a list of available packages.

In this example we will install jsonlite by typing install.packages("jsonlite") in the console and hitting Enter.

What. Is. Happening?

The first time you install a package, R GUI offers an easy way to select your CRAN mirror, an online copy of the CRAN package repository. Typically, highlighting the location closest to you geographically and then clicking “OK” will work fine.


Also notice this line in the Console output:

Installing package into ‘C:/Users/<user>/Documents/R/win-library/3.0’ (as ‘lib’ is unspecified)

This is your default library location, where packages are installed. In TERR this location will be different; therefore, if you run into issues combining TERR and R, the first step is to specify the library location explicitly when loading libraries in your scripts. There will be additional info on library locations in the next chapter.

Once you select a CRAN mirror, jsonlite will begin to install. R may also install other packages that jsonlite depends on if they are not already present, so don’t panic if it starts “Installing dependencies.”

Finally, confirm jsonlite has been installed successfully by loading it from the package library via library(jsonlite) or require(jsonlite). library(<package name>) is how you tell R to use a particular package in your script. Remember, a library is the location a package is stored in, but in most cases you can use the terms “library” and “package” interchangeably when talking about these things.

If there are no error messages when calling the library, you have successfully installed jsonlite.
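Inside a script you may prefer a check that does not stop with an error. A minimal sketch using base R’s requireNamespace(), which returns TRUE or FALSE instead of failing, together with .libPaths(), which lists the library folders the current session searches. That list is handy later when comparing R’s library location with TERR’s; library() also accepts a lib.loc argument if you need to point at a specific folder.

```r
# Library folders this R session searches, in order
print(.libPaths())

# TRUE if jsonlite can be loaded, FALSE otherwise (no error is raised)
has_jsonlite <- requireNamespace("jsonlite", quietly = TRUE)

if (has_jsonlite) {
  library(jsonlite)
  message("jsonlite ", as.character(packageVersion("jsonlite")), " is installed")
} else {
  message("jsonlite is not installed; run install.packages(\"jsonlite\")")
}
```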

With R and jsonlite successfully installed, our next step is to access data, then manipulate it with Spotfire. The next chapter shows how to use the jsonlite package to access data via the Census REST URL, then use the R jsonlite code within a TERR script to run everything within Spotfire.


Chapter 3

AN EXAMPLE OF R IN TERR (PACKAGE “RINR”) USING THE CENSUS REST API

In the previous chapter we installed R and downloaded the jsonlite package. Now we are going to use R and jsonlite to access US Census data directly within R, then use the TERR package RinR to bring the Census data into TIBCO Spotfire®.

Our first step is figuring out how to use the Census API within R.

The Census API is extremely easy to use:

1) Click here to learn about the API
2) Request a key
3) Determine the dataset and parameters you wish to use
4) Paste your parameterized link, including the key acquired in step two, into your browser’s address bar to see if it works

The following link returns the total population by state from the SF1 2010 Census:

Example: http://api.census.gov/data/2010/sf1?key=[KEY]&get=P0010001,NAME&for=state:*

(Insert your own key at [KEY], but do not include the brackets!)
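Rather than editing the URL by hand each time, you can assemble it from its parts. This sketch uses a hypothetical helper, census_url(); the base address and the key, get, and for parameters are taken from the example URL above, and nothing is sent over the network.

```r
# Hypothetical helper; defaults reproduce the example URL above
census_url <- function(key, vars = c("P0010001", "NAME"), geo = "state:*",
                       base = "http://api.census.gov/data/2010/sf1") {
  # Requested variables are comma-separated in the "get" parameter
  paste0(base,
         "?key=", key,
         "&get=", paste(vars, collapse = ","),
         "&for=", geo)
}

# Replace "[KEY]" with your own key (no brackets) before using the URL
url <- census_url(key = "[KEY]")
print(url)
```

Keeping the pieces as separate arguments is what later makes it possible to drive the query from Spotfire input parameters instead of a hard-coded string.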


The result will look like this: a JSON array of rows, the first of which holds the column names (P0010001, NAME, state). I changed the key after entering the URL, but the screenshot shows what the final product looks like.

With the connection confirmed, we can use R to read the data provided by the URL and turn it into a data table for analysis. This is why we downloaded the jsonlite package.

jsonlite uses another R package, RCurl, to retrieve the content returned by a URL. Originally, this package was for searching online text data like emails and forum posts, as well as automatically completing online forms to manipulate sites through scripting; however, with the rise of RESTful data, RCurl has become a great way to access data.

jsonlite makes the complicated functions of RCurl much easier to use. To create a data frame (R’s version of a flat file) from the Census API, all that’s needed is the fromJSON() function and a Census API URL.


To use the Census API URL we have just created in R, we need to create a script.

Open the R GUI if it isn’t already open, then go to File > New script.

A new window will open. It lets you type multiple lines of R code that can be run as a batch. Copy and paste the following, making sure to use your API key instead of [KEY]:

library(jsonlite)

# Replace [KEY] with your own Census API key (no brackets)
data <- data.frame(
    fromJSON("http://api.census.gov/data/2010/sf1?key=[KEY]&get=P0010001,NAME&for=state:*"),
    stringsAsFactors = FALSE)

# Print the result in the console
data

Highlight everything, then press F5 or Ctrl + R to run the script. The output should look like this:


A few quick notes on what that script is doing:

data.frame(): This tells R the data should be read as a data frame, which we can think of as a flat file or spreadsheet.

stringsAsFactors = FALSE: This argument of data.frame() tells R to keep strings as plain character columns instead of converting them to factors (categorical variables carrying extra level metadata). Factors are helpful in statistical analysis, but they become a nuisance in Spotfire.

data <- : “<-” is the assignment operator in R. Here we tell R to put the data frame created by the operation into a variable called “data.” At the end of the script we call data so it is displayed in the console / terminal.
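A quick way to see what stringsAsFactors changes is to build the same tiny table both ways. The matrix below only mimics the all-text shape of a Census response; its values are placeholders. Note that R 4.0.0 changed the default to stringsAsFactors = FALSE, whereas older versions such as the R 3.0.2 used in this tutorial defaulted to TRUE, which is why the script sets it explicitly.

```r
# A small character matrix shaped like part of a Census response (placeholder values)
m <- matrix(c("100", "Alpha", "01",
              "200", "Beta",  "02"),
            ncol = 3, byrow = TRUE)

as_factors <- data.frame(m, stringsAsFactors = TRUE)
as_strings <- data.frame(m, stringsAsFactors = FALSE)

str(as_factors)  # every column is a factor with levels
str(as_strings)  # every column stays plain character
```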

We are pulling in data with the Census API, fantastic! Now we just copy and paste this into the Register Data Functions window and call it a day, right? Nope… it turns out jsonlite is one of those packages that doesn’t work in TERR.

But all is not lost: TERR includes a pre-installed package, “RinR”, and “RinR” includes a function, “REvaluate”, that allows the use of a local R instance within TERR.

This deserves repeating: REvaluate allows the use of a local R instance within TERR. Why is this so important? It allows essentially any CRAN package installed in your local R to be used locally in Spotfire! Every clustering, forecasting, and graphing function that doesn’t require external devices (like R Commander) can now be used locally in Spotfire.

But first things first, let’s use jsonlite in TERR.

Note:

This will not work in R GUI, so keep reading for the chapter describing how to create a TERR and R development environment in RStudio.


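Here’s the final script, which we will run in Spotfire:

data_pre <- REvaluate({
    library(jsonlite)
    # Replace [KEY] with your own Census API key (no brackets)
    data_R <- data.frame(
        fromJSON("http://api.census.gov/data/2010/sf1?key=[KEY]&get=P0010001,NAME&for=state:*"),
        stringsAsFactors = FALSE)
    data_R
})

# The first row of the Census response holds the column names
colnames(data_pre) <- as.character(data_pre[1, , drop = TRUE])

# Drop the header row; "data" is the output parameter for Spotfire
data <- data_pre[-1, ]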

Well, that looks a little different from what we had before.

Read on for a breakdown of the changes.


The changes:

REvaluate({}): The function contains the entire previous script we executed in R; the data frame it returns is assigned to the variable data_pre. The original “data” variable has been renamed “data_R” to reduce conflicts.

data_pre: We use an intermediate variable to clean our data frame before the final assignment. This eliminates any conflicts when assigning an output variable in Spotfire.

colnames(data_pre)…: Unfortunately, fromJSON doesn’t read the first line of the Census data as column names. The operations in this line convert the first row of data_pre (data_pre[1, ]) into characters (as.character) and then assign those characters as the column names of data_pre (colnames(data_pre)).

data <- data_pre[-1, ]: Finally, we no longer need the first row, so we drop it (data_pre[-1, ]) and assign the result to our final data variable, “data.”
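The header fix can be tested without a network connection by mimicking the shape of the Census response: a character table whose first row holds the column names. The values below are placeholders, not real Census figures. The rownames reset and the as.numeric() conversion are optional extras (assumptions, not part of the original script), since every column arrives as text.

```r
# Placeholder rows shaped like the Census response (header row first); not real data
data_pre <- data.frame(
  matrix(c("P0010001", "NAME",  "state",
           "100",      "Alpha", "01",
           "200",      "Beta",  "02"),
         ncol = 3, byrow = TRUE),
  stringsAsFactors = FALSE)

# Promote the first row to column names, then drop it
colnames(data_pre) <- as.character(data_pre[1, , drop = TRUE])
data <- data_pre[-1, ]

# Optional: renumber rows 1..n after dropping the header row
rownames(data) <- NULL

# Optional: population arrives as text, so convert it to numbers
data$P0010001 <- as.numeric(data$P0010001)

str(data)
```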


Now for the easy part! As long as you are using your local TERR engine in Spotfire, this is a piece of cake.

1) Open Spotfire and go to Tools > Register Data Functions
2) Select the script type “R script – TIBCO® Enterprise Runtime for R”
3) Enter “RinR” as the only package you need to use
4) Insert the TERR script above into the “Script” window
5) Assign the “data” variable to an output parameter with the data type Table
   a. The script pulls data from the Census API only; therefore, no input parameter is required in this example
6) Tell Spotfire to create or refresh a table with the output parameter via an “Output Handler”

Final Script:


Output parameter

Now select “Run” at the top to try it out. If everything goes according to plan, you will get a prompt asking where your data needs to go in Spotfire (and, if you had inputs, which Spotfire tables you are retrieving data from).

As we only have data going to Spotfire, we only need to set an output. Let Spotfire know via the Output Handler that the data parameter will produce a table, then select that you want a new table.


Click “OK” and you have now connected Spotfire to the Census API!

Now that we have the connection, there is a lot more we can do with the Census API. In subsequent chapters we will explore how to pass different values into the API URL via input parameters, creating a way to dynamically change the data pulled from the Census API without ever having to store files locally.

We will also explore how to properly set up a development environment for TERR and R. RStudio allows us to create R scripts referencing different R engines. This is very helpful for debugging the complex operations of which R and TERR are capable.


Chapter 4

PASSING DATA FROM TERR INTO R IN SPOTFIRE

In one of our blog posts, our interns demonstrated how to perform a simple sentiment analysis on Tweets during a World Cup match.

Recently, we had an interesting request from a client to analyze Tweets in a similar manner. The difference here is that the Tweets to analyze are determined by Spotfire end users.

The request was to query Twitter from Spotfire and then score the returned Tweets. We welcomed this challenge as another formidable task that could not be ignored. In other words, we couldn’t turn down a chance to just go for it.

An additional challenge for this particular request was that it needed to be contained within the standard Spotfire tools, as opposed to the Spotfire Statistics Service. To accomplish this, we had to perform the API call in TIBCO Enterprise Runtime for R (TERR), IronPython, or C#.

We decided to go with TERR.

The basis for making the solution work in TERR was twofold:

• R has a robust community developing Twitter analytics tools.
• Our existing code base for Twitter sentiment analysis was already in R.

As you may recall from the previous chapter, TERR does not play nice with RCurl. This meant we had to use REvaluate() to access Twitter. However, using REvaluate() presented a challenge of its own: we knew how to get data out of REvaluate() and into Spotfire, but we did not know how to get data into REvaluate().

Due to a non-disclosure agreement, I cannot provide code specifics. But I can share a brief tutorial on how to get data from Spotfire into TERR, then into R, and finally right back out.


Step 1:

1. Create a document property in Spotfire for your search criteria.
2. Go to a text area (or make a new one), then click the “Insert Property Control” button.
3. Choose “Input Field.”
4. Make a new document property with data type “String”, then click “OK.”
5. Now enter the first value you would like to search for in Twitter (e.g., #cats).

Tip:

Don’t ever forget to include a value in your document property!


Step 2:

1. To insert your script, go to Tools > Register Data Functions.
2. Select “R script – TIBCO® Enterprise Runtime for R” as your data function type.
3. In the Packages field, enter only RinR (without quotes).
4. Your script needs an input parameter for Spotfire to pass in your document property, as well as an output value from REvaluate(). See the image below for details.


Tips:

For our input parameter, we are using "prop_tweetSearch".

For our output parameter, we are using "data".

For more information on parameters, see "How to use TERR in

Spotfire part 1 and part 2."

For our syntax, we are using REvaluate():

REvaluate({ R script }, REvaluator, data = stuff)

Do not forget to include REvaluator and data. The data argument is what carries the input value you just set into your regular R instance.

For more information on RinR, see the TIBCO documentation:

https://docs.tibco.com/pub/enterprise-runtime-for-R/2.5.0/doc/html/RinR/RinR-package.html
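RinR only runs inside TERR, so the snippet below is not RinR code. It is a plain-R analogy of what the data argument does: the named objects you hand over become variables that the R script can use by name, and nothing else from the calling session is visible. Here eval() with a throwaway environment stands in for the separate R session; prop_tweetSearch is the input parameter from the tips above, and the data = list(...) form in the comment is an assumption to verify against the RinR documentation for your TERR version. The empty-value check reflects the earlier tip about always filling in the document property, and URL-encoding the search term (turning #cats into %23cats) is an illustrative assumption about what a search request would need.

```r
# Value Spotfire would pass in from the document property (input parameter)
prop_tweetSearch <- "#cats"

# The "R script" part: it refers to prop_tweetSearch by name only
r_script <- quote({
  if (!nzchar(prop_tweetSearch)) stop("The search document property is empty")
  # An unencoded "#" would cut off the rest of a URL
  utils::URLencode(prop_tweetSearch, reserved = TRUE)
})

# Analogy for REvaluate(r_script, data = list(prop_tweetSearch = ...)):
# the script sees only what is handed over (baseenv() hides the global workspace)
session <- list2env(list(prop_tweetSearch = prop_tweetSearch), parent = baseenv())
data <- eval(r_script, envir = session)
print(data)
```

If the variable is not handed over, the "session" cannot find it, which mirrors forgetting the data argument.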

Step 3:

1. Your input parameter receives the document property; inside the script, REvaluate() then carries that value on to R.
2. Tell REvaluate() what you want to pass into your R script through its data argument.
3. Select a document property to use as the Input Handler.
4. Set the property to the document property created in Step 1.
5. Assign your output parameter to your desired output and you are good to go!


The image below captures some of these directions, namely numbers 3 and 4:

Tip:

If you want the data to refresh every time someone types a new value into the property control, select “Refresh function automatically.”


Chapter 5

USING TERR IN RSTUDIO

In a previous chapter I mentioned using RStudio as a development environment for Spotfire TERR (TIBCO® Enterprise Runtime for R).

For those of you using Spotfire but not TERR, it's an incredibly powerful feature you really need to add to your toolbox. It gives you access to the vast range of analytical functions and data manipulation techniques available in R. From latent factor clustering to scripted ETL, it pretty much does it all. However, developing code inside the Spotfire TERR console can be a bit of a pain. Why? Because you have to click through three menus to execute the code, and if there is an error, you have to dig through both Spotfire's and TERR's error messages to find the cause.

Therefore, it makes sense to develop in an IDE (such as RStudio) and then copy and paste your code into Spotfire.

"But RStudio uses R, not TERR, so you can't do that!" Actually, you can! It just requires a few not-so-obvious steps. Let me show you how:


If you don't have it already, RStudio is, in my view, the best development environment for R, so you should get it even if you're not using TERR. (Why are you reading this article, by the way?) Plus, the open-source version is completely free, so you have no excuse.

Get it here: http://www.RStudio.com/products/RStudio/#Desk

There are a whole bunch of little configuration tricks we could discuss, but that's for another article.

By default, Spotfire hides the folder containing the TERR engine, so to find it you have to make Windows stop hiding it. To accomplish that, turn on "Show Hidden Folders."

Here's how you do it on Windows 7:

http://windows.microsoft.com/en-us/windows/show-hidden-files#show-hidden-files=windows-7

Here's how to do it on Windows 8:

http://www.bleepingcomputer.com/tutorials/show-hidden-files-in-windows-8/


Now, assuming you have installed RStudio and have Spotfire installed on your local machine (again, why are you reading this if you don't have Spotfire?), you can locate the application Spotfire uses to process R code: the TIBCO® Enterprise Runtime for R engine.

It is typically located in:

C:\Program Files (x86)\TIBCO\Spotfire\[SPOTFIRE VERSION]\Modules\TIBCO Enterprise Runtime for R_[TERR VERSION]\engine\bin

where [SPOTFIRE VERSION] is the version of Spotfire installed on your computer and [TERR VERSION] is the TERR version you are currently running.
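If you'd rather not hunt through Explorer, you can run a small base-R helper in your current open-source R session to search for the engine folder. This is a sketch under assumptions. `find_terr_bin` is a hypothetical name, and the default root is the typical location given above. The Spotfire and TERR version folders are matched with wildcards instead of being typed in. R's file functions should list folders regardless of Explorer's "hidden" setting, but confirm that on your own machine.

```r
# Hypothetical helper (not part of TERR or Spotfire): list every TERR engine\bin
# folder under a Spotfire install root, following the typical path pattern.
find_terr_bin <- function(spotfire_root = "C:/Program Files (x86)/TIBCO/Spotfire") {
  # "*" stands in for [SPOTFIRE VERSION]; "R_*" stands in for R_[TERR VERSION].
  pattern <- file.path(spotfire_root, "*", "Modules",
                       "TIBCO Enterprise Runtime for R_*", "engine", "bin")
  hits <- Sys.glob(pattern)
  # Keep only real directories; Sys.glob can also match plain files.
  hits[dir.exists(hits)]
}

terr_bin <- find_terr_bin()
if (length(terr_bin) == 0) {
  message("No TERR engine found under the default Spotfire path.")
} else {
  print(terr_bin)
}
```

If several Spotfire or TERR versions are installed, the helper returns one path per engine, which makes it easy to see which [TERR VERSION] folder to pick later.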

If you can find the directory …\TIBCO\Spotfire\[SPOTFIRE VERSION]\ but you can't find "Modules", that directory is still hidden. Stop skipping steps, go back to step number two, and try again.

RStudio defaults to a local instance of R, which you probably installed when you installed RStudio. But we need to point RStudio to TERR, not R.


We verified the location of TERR in step three, so now we need to tell RStudio where to find TERR.

1. In RStudio, go to Tools > Global Options…

2. In the General menu, click Change… to change your R version.


3. TERR typically does not show up by default, so we'll need to click "Browse…".

4. Navigate to the "TIBCO Enterprise Runtime for R_[TERR VERSION]\engine" folder on the path to the TERR engine.


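5. Take your pick of either the 32-bit or the 64-bit TERR engine. (There isn't much difference for everyday work, though a 64-bit engine can address more memory when data sets get large.)

6. Make sure TERR is selected in the "Choose R Installation" window, click "OK", and you are ready to go! (After you restart RStudio for the changes to take effect, that is.)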


When RStudio restarts, take a look at the console. It will tell you which version of R, or in this case TERR, is running. If you see "TIBCO® Enterprise Runtime for R…", then you are successfully running TERR in RStudio! Yay!
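Besides reading the console banner, you can check the engine from code, which is handy in scripts you plan to paste into Spotfire. This is a minimal sketch under one key assumption: TERR reports "TERR" in `R.version$language`, whereas open-source R reports "R". If that value differs in your TERR version, fall back on the banner. `engine_info` and `is_terr` are hypothetical helper names. The bitness value also shows whether you picked the 32-bit or the 64-bit engine in step 5.

```r
# Hypothetical helpers: report which engine the current session runs on.
engine_info <- function() {
  list(
    language = R.version$language,           # "R" in open-source R; assumed "TERR" in TERR
    version  = R.version.string,             # the version text shown in the console
    bits     = 8L * .Machine$sizeof.pointer  # 64 for a 64-bit engine, 32 for 32-bit
  )
}

is_terr <- function() identical(R.version$language, "TERR")

info <- engine_info()
cat("Engine:", info$version, "\n")
cat("Running TERR:", is_terr(), "| bits:", info$bits, "\n")
```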


About Syntelli Solutions Inc.

Syntelli Solutions is focused on leveraging the ‘Power of Data.’ Everything we do is analytics: shaping and transforming data, forming the right hypothesis, building the right models, and visualizing the data so that analytics and predictive capabilities come to bear pervasively within your organization. Our legacy is in building financial analytics solutions using Essbase and Hyperion and applying ‘probabilistic decision’ techniques. Our expertise is in bringing business context to data and providing enhanced decision-making capabilities, not just the technical aspect of implementing a data warehouse or the analytical product. The passion for answering the ‘what-if,’ ‘what else,’ and ‘so what’ questions is shared by all Syntellians, and we are sure to transfer that passion to your team as well.

Over the last few years, we have gained significant expertise in TIBCO® Spotfire, which is our solution of choice for deeper analytics and integration with R. We have done over 100 TIBCO® Spotfire projects and employ some of the most experienced consultants in the industry. The verticals in which we have worked include sports/entertainment, life sciences/pharmaceuticals, oil & gas, retail, manufacturing, professional services, hospitality, telecom, financials, and healthcare. The clients we’ve worked with have revenues from $100M to $100BN. The horizontals in which we have expertise include sales analytics, marketing analytics, campaign analytics, and supply chain and distribution analytics.

Interested in learning how Syntelli can help your organization tackle data science and analytics problems?

Contact us at 1-877-SYNTELLI or info@Syntelli.com

44

Das könnte Ihnen auch gefallen

- TIBCO Spotfire A Comprehensive Primer by Andrew Berridge Michael Phillips PDFDokument566 SeitenTIBCO Spotfire A Comprehensive Primer by Andrew Berridge Michael Phillips PDFgarg.achin100% (1)

- Self-Service AI with Power BI Desktop: Machine Learning Insights for BusinessVon EverandSelf-Service AI with Power BI Desktop: Machine Learning Insights for BusinessNoch keine Bewertungen

- TIBCO Spotfire Guided AnalyticsDokument12 SeitenTIBCO Spotfire Guided AnalyticsRajendra PrasadNoch keine Bewertungen

- DAX in Power BIDokument4 SeitenDAX in Power BIBHARAT GOYAL0% (1)

- Assistant Workers' Compensation Examiner: Passbooks Study GuideVon EverandAssistant Workers' Compensation Examiner: Passbooks Study GuideNoch keine Bewertungen

- TIBCO Spotfire - A Comprehensive Primer - Sample ChapterDokument30 SeitenTIBCO Spotfire - A Comprehensive Primer - Sample ChapterPackt Publishing100% (1)

- Applying SOA Principles in Informatica - Keshav VadrevuDokument91 SeitenApplying SOA Principles in Informatica - Keshav VadrevuZakir ChowdhuryNoch keine Bewertungen

- SQL Server Functions and tutorials 50 examplesVon EverandSQL Server Functions and tutorials 50 examplesBewertung: 1 von 5 Sternen1/5 (1)

- Proc ReportDokument32 SeitenProc ReportgeethathanumurthyNoch keine Bewertungen

- Pro Microsoft Power BI Administration: Creating a Consistent, Compliant, and Secure Corporate Platform for Business IntelligenceVon EverandPro Microsoft Power BI Administration: Creating a Consistent, Compliant, and Secure Corporate Platform for Business IntelligenceNoch keine Bewertungen

- Concept Based Practice Questions for Tableau Desktop Specialist Certification Latest Edition 2023Von EverandConcept Based Practice Questions for Tableau Desktop Specialist Certification Latest Edition 2023Noch keine Bewertungen

- SQLDokument98 SeitenSQLRohit GhaiNoch keine Bewertungen

- Time Series Analysis A Complete Guide - 2020 EditionVon EverandTime Series Analysis A Complete Guide - 2020 EditionNoch keine Bewertungen

- Expert T-SQL Window Functions in SQL Server 2019: The Hidden Secret to Fast Analytic and Reporting QueriesVon EverandExpert T-SQL Window Functions in SQL Server 2019: The Hidden Secret to Fast Analytic and Reporting QueriesNoch keine Bewertungen

- MDX TutorialDokument5 SeitenMDX Tutoriallearnwithvideotutorials100% (1)

- Questions - TableauDokument1 SeiteQuestions - TableauHsnMustafaNoch keine Bewertungen

- Big Data Architecture A Complete Guide - 2019 EditionVon EverandBig Data Architecture A Complete Guide - 2019 EditionNoch keine Bewertungen

- SQL TutorialDokument207 SeitenSQL TutorialMd FasiuddinNoch keine Bewertungen

- Tableau Syllabus PDFDokument19 SeitenTableau Syllabus PDFyrakesh782413100% (1)

- Week 2 Teradata Practice Exercises GuideDokument7 SeitenWeek 2 Teradata Practice Exercises GuideFelicia Cristina Gune50% (2)

- Getting Started with Greenplum for Big Data AnalyticsVon EverandGetting Started with Greenplum for Big Data AnalyticsNoch keine Bewertungen

- Joinpoint Help 4.5.0.1Dokument147 SeitenJoinpoint Help 4.5.0.1Camila Scarlette LettelierNoch keine Bewertungen

- Basic Teradata Query Optimization TipsDokument12 SeitenBasic Teradata Query Optimization TipsHarsha VardhanNoch keine Bewertungen

- Tableau Desktop Windows 9.2Dokument1.329 SeitenTableau Desktop Windows 9.2vikramraju100% (2)

- Custom Logging in SQL Server Integration Services SSISDokument3 SeitenCustom Logging in SQL Server Integration Services SSISjokoarieNoch keine Bewertungen

- Week 3 Teradata Exercise Guide: Managing Big Data With Mysql Dr. Jana Schaich Borg, Duke UniversityDokument6 SeitenWeek 3 Teradata Exercise Guide: Managing Big Data With Mysql Dr. Jana Schaich Borg, Duke UniversityNutsNoch keine Bewertungen

- The Essential PROC SQLDokument10 SeitenThe Essential PROC SQLdevidasckulkarniNoch keine Bewertungen

- Microsoft Data Mining: Integrated Business Intelligence for e-Commerce and Knowledge ManagementVon EverandMicrosoft Data Mining: Integrated Business Intelligence for e-Commerce and Knowledge ManagementNoch keine Bewertungen

- Top 50 Tableau Real-Time Interview Questions and Answers PDFDokument11 SeitenTop 50 Tableau Real-Time Interview Questions and Answers PDFPandian NadaarNoch keine Bewertungen

- Base SAS Interview QuestionsDokument14 SeitenBase SAS Interview QuestionsDev NathNoch keine Bewertungen

- SAS Programming Guidelines Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesVon EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesNoch keine Bewertungen

- PowerBI Developer - Business Analyst Resume - Hire IT People - We Get IT DoneDokument5 SeitenPowerBI Developer - Business Analyst Resume - Hire IT People - We Get IT Donesparjinder8Noch keine Bewertungen

- TIBCO - SpotfireDokument15 SeitenTIBCO - SpotfireRamki Jagadishan100% (1)

- Pro Power BI Theme Creation: JSON Stylesheets for Automated Dashboard FormattingVon EverandPro Power BI Theme Creation: JSON Stylesheets for Automated Dashboard FormattingNoch keine Bewertungen

- Smart Data Discovery Using SAS Viya: Powerful Techniques for Deeper InsightsVon EverandSmart Data Discovery Using SAS Viya: Powerful Techniques for Deeper InsightsNoch keine Bewertungen

- My SQL For BeginnersDokument3 SeitenMy SQL For BeginnersPallavi SinghNoch keine Bewertungen

- OrdinalexampleR PDFDokument9 SeitenOrdinalexampleR PDFDamon CopelandNoch keine Bewertungen

- Tableau Interview Q& ADokument198 SeitenTableau Interview Q& ANaresh NaiduNoch keine Bewertungen

- Getting Started With Tableau PrepDokument3 SeitenGetting Started With Tableau PrepmajujmathewNoch keine Bewertungen

- Tableau Desktop Introduction & FundamentalsDokument56 SeitenTableau Desktop Introduction & FundamentalsVarun GuptaNoch keine Bewertungen

- 67 Tableau Interview Questions For Experienced Professionals Updated in 2020Dokument17 Seiten67 Tableau Interview Questions For Experienced Professionals Updated in 2020Pandian NadaarNoch keine Bewertungen

- Exam 70-778 OD ChangesDokument3 SeitenExam 70-778 OD ChangesJagadeesh KumarNoch keine Bewertungen

- SpotfireDokument144 SeitenSpotfireVenu Babu KolasaniNoch keine Bewertungen

- Data Governance and Data Management: Contextualizing Data Governance Drivers, Technologies, and ToolsVon EverandData Governance and Data Management: Contextualizing Data Governance Drivers, Technologies, and ToolsNoch keine Bewertungen

- OMNICOM Quick Start GuideDokument10 SeitenOMNICOM Quick Start GuideLuis Alonso Osorio MolinaNoch keine Bewertungen

- ADA - Architecture Blueprint For Solution or Technology Template v1.101Dokument72 SeitenADA - Architecture Blueprint For Solution or Technology Template v1.101ishrat shaikhNoch keine Bewertungen

- Assembler/Linker/Librarian User's Manual: Literature Number 620896-003 November 1996Dokument224 SeitenAssembler/Linker/Librarian User's Manual: Literature Number 620896-003 November 1996Sergey SalnikovNoch keine Bewertungen

- Faculty Time TableDokument1.097 SeitenFaculty Time TablesdfwexdNoch keine Bewertungen

- Report Builder PDFDokument141 SeitenReport Builder PDFalrighting619Noch keine Bewertungen

- Memory and StorageDokument2 SeitenMemory and Storagefahmi rizaldiNoch keine Bewertungen

- Clear Quest User GuideDokument60 SeitenClear Quest User GuiderajatkatariaNoch keine Bewertungen

- Java VM Options You Should Always Use in Production - Software Engineer SandboxDokument7 SeitenJava VM Options You Should Always Use in Production - Software Engineer SandboxBrenda Yasmín Conlazo ZavalíaNoch keine Bewertungen

- Palo Alto Sample WorkbookDokument12 SeitenPalo Alto Sample WorkbookRavi ChaurasiaNoch keine Bewertungen

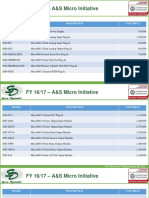

- FY 16/17 - A&S Micro Initiative: Model Description Unit PriceDokument6 SeitenFY 16/17 - A&S Micro Initiative: Model Description Unit PriceVu SonNoch keine Bewertungen

- Shark Bay: ECS ConfidentialDokument43 SeitenShark Bay: ECS ConfidentialyencoNoch keine Bewertungen

- WCDMA Idle Mode Behaviour HuaweiDokument32 SeitenWCDMA Idle Mode Behaviour Huaweihuseyin100% (2)

- Importing Core Configuration Files Into Nagios XI PDFDokument5 SeitenImporting Core Configuration Files Into Nagios XI PDFarun_sakreNoch keine Bewertungen

- Batching RS Logix5000Dokument18 SeitenBatching RS Logix5000amritpati14100% (1)

- Edt Testkompress by Kiran K S: Embedded Deterministic TestDokument51 SeitenEdt Testkompress by Kiran K S: Embedded Deterministic Testsenthilkumar100% (8)

- Computer Architecture and OrganizationDokument13 SeitenComputer Architecture and Organizationdhruba717Noch keine Bewertungen

- 95386-Hia XNMR DSDokument2 Seiten95386-Hia XNMR DSPalika MedinaNoch keine Bewertungen

- CS-2000i/CS-2100i ASTM Host Interface Specification For SiemensDokument39 SeitenCS-2000i/CS-2100i ASTM Host Interface Specification For SiemensJavier Andres leon Higuera100% (1)

- MemoQ Machine Translation SDKDokument17 SeitenMemoQ Machine Translation SDKLeonNoch keine Bewertungen

- What Is Metadata?Dokument3 SeitenWhat Is Metadata?durai muruganNoch keine Bewertungen

- Sony KdsDokument39 SeitenSony KdsChava RaviNoch keine Bewertungen

- PLC ExamDokument5 SeitenPLC ExamS. Magidi100% (8)

- Symantec DLP 15.0 Oracle 11g Installation GuideDokument54 SeitenSymantec DLP 15.0 Oracle 11g Installation Guideevodata5217Noch keine Bewertungen

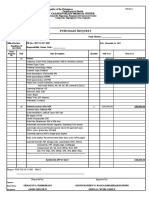

- Purchase Request: Cagayan Valley Medical Center Cagayan Valley Medical CenterDokument7 SeitenPurchase Request: Cagayan Valley Medical Center Cagayan Valley Medical CenterJZik SibalNoch keine Bewertungen

- Case StudyDokument4 SeitenCase StudyWild CatNoch keine Bewertungen

- VL7002 Question BankDokument34 SeitenVL7002 Question BankDarwinNoch keine Bewertungen

- 04 Professional Resume Template in WordDokument1 Seite04 Professional Resume Template in WordDevarajanNoch keine Bewertungen

- Bank Po TestDokument47 SeitenBank Po TestVishalNoch keine Bewertungen

- AWR AnalysisDokument20 SeitenAWR AnalysisPriyadarsini RoutNoch keine Bewertungen

- Chapter 05 x86 AssemblyDokument82 SeitenChapter 05 x86 AssemblyArun Kumar MeenaNoch keine Bewertungen