Beruflich Dokumente

Kultur Dokumente

Model AI Gov Framework Primer

Hochgeladen von

Clement ChanCopyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

Model AI Gov Framework Primer

Hochgeladen von

Clement ChanCopyright:

Verfügbare Formate

RESPONSIBLE AI MADE EASY

FOR ORGANISATIONS

Using Artificial Intelligence (AI) in your company?

Help your customers understand and build their confidence in your AI solutions.

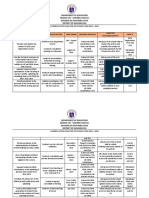

PRINCIPLES FOR RESPONSIBLE AI

DECISIONS MADE BY AI SHOULD BE AI SYSTEMS, ROBOTS AND DECISIONS

EXPLAINABLE, SHOULD BE

TRANSPARENT AND FAIR HUMAN-CENTRIC

FACTORS TO CONSIDER

INTERNAL RISK CUSTOMER

GOVERNANCE MANAGEMENT OPERATIONS

STRUCTURES & RELATIONSHIP

IN AI DECISION- MANAGEMENT

MEASURES MANAGEMENT

MAKING

• Clear roles and • Appropriate • Minimise bias in • Make AI policies

responsibilities in degree of human data and model known to users

your organisation involvement

• Regular reviews • Allow users to

• SOPs to monitor • Minimise the of data and provide feedback,

and manage risks risk of harm to model if possible

individuals

• Staff training • Use simple

language

FIND OUT MORE ABOUT THE PDPC’S MODEL AI GOVERNANCE FRAMEWORK AT

WWW.PDPC.GOV.SG/MODEL-AI-GOV.

An initiative by: In support of:

DEGREE OF HUMAN

INVOLVEMENT

Determine the degree of human involvement in your AI solution

that will minimise the risk of adverse impact on individuals.

SEVERITY OF HARM

LOW HIGH

Human-out-of-the-loop Human-over-the-loop Human-in-the-loop

AI makes the final decision AI decides but the user User makes the final

without human involvement, can override the choice, decision with recommendations

e.g. recommendation e.g. GPS map navigations. or input from AI, e.g. medical

engines. diagnosis solutions.

HUMAN INVOLVEMENT: HOW MUCH IS JUST RIGHT?

An online retail store wishes to use AI to fully automate the HIGHLY

RECOMMEND!

recommendation of food products to individuals based on

their browsing behaviours and purchase history.

What should be assessed?

What is the harm?

One possible harm could be recommending products that

the customer does not need or want.

Is it a serious problem?

Wrong product recommendations would not be a serious

problem since the customer can still decide whether or not

to accept the recommendations.

Recommendation:

Given the low severity of harm, the human-out-of-the loop

model could be considered for adoption.

Das könnte Ihnen auch gefallen

- Artificial Intelligence: Presented By: Ms. Aashita Jhamb & Ms. Jaismin KaurDokument20 SeitenArtificial Intelligence: Presented By: Ms. Aashita Jhamb & Ms. Jaismin KaurNisha SinghNoch keine Bewertungen

- A320 TakeoffDokument17 SeitenA320 Takeoffpp100% (1)

- Your Guide To Iso 27701Dokument14 SeitenYour Guide To Iso 27701Clement Chan0% (1)

- Information Security Management System (Isms) and Handling of Personal DataDokument40 SeitenInformation Security Management System (Isms) and Handling of Personal DataClement ChanNoch keine Bewertungen

- Designing A Captivating Customer Experience: Aditya Chaudhary 180501003Dokument21 SeitenDesigning A Captivating Customer Experience: Aditya Chaudhary 180501003Dimple GuptaNoch keine Bewertungen

- AI-900 SlidesDokument193 SeitenAI-900 SlidesSenan Mehdiyev100% (1)

- Artificial IntelligenceDokument35 SeitenArtificial IntelligenceChandanSingh100% (1)

- AI Playbook For The Enterprise - Quantiphi - FINALDokument14 SeitenAI Playbook For The Enterprise - Quantiphi - FINALlikhitaNoch keine Bewertungen

- PLRD1.1.10 - SOP For Planning Bomb Threat ExerciseDokument5 SeitenPLRD1.1.10 - SOP For Planning Bomb Threat ExerciseClement ChanNoch keine Bewertungen

- Forrester Predictions 2022 - AutomationDokument4 SeitenForrester Predictions 2022 - Automationryan shecklerNoch keine Bewertungen

- Lockbox Br100 v1.22Dokument36 SeitenLockbox Br100 v1.22Manoj BhogaleNoch keine Bewertungen

- Derisking Ai by Design Build Risk Management Into Ai DevDokument11 SeitenDerisking Ai by Design Build Risk Management Into Ai DevHassan SheikhNoch keine Bewertungen

- On CatiaDokument42 SeitenOn Catiahimanshuvermac3053100% (1)

- ACIS - Auditing Computer Information SystemDokument10 SeitenACIS - Auditing Computer Information SystemErwin Labayog MedinaNoch keine Bewertungen

- Viceversa Tarot PDF 5Dokument1 SeiteViceversa Tarot PDF 5Kimberly Hill100% (1)

- Is Deep Learning Is A Game Changer For Marketing AnalyticsDokument23 SeitenIs Deep Learning Is A Game Changer For Marketing AnalyticsT BINDHU BHARGAVINoch keine Bewertungen

- Ethics On Artificial IntelligenceDokument16 SeitenEthics On Artificial IntelligenceRizky AbdillahNoch keine Bewertungen

- OSG Capabilities January 2022Dokument33 SeitenOSG Capabilities January 2022mohit bhallaNoch keine Bewertungen

- BrochureDokument4 SeitenBrochureSeifeddine Zammel100% (1)

- Three Questions Marketing Leaders Should Ask Before Approving An Ai PlanDokument8 SeitenThree Questions Marketing Leaders Should Ask Before Approving An Ai PlanCarmenNoch keine Bewertungen

- Be The One - Season 3 - Tech Geeks - MDIDokument6 SeitenBe The One - Season 3 - Tech Geeks - MDIYASH KOCHARNoch keine Bewertungen

- SOLUS Is An Autonomous System That Enables Hyper-Personalized Engagement With Individual Customers at ScaleDokument3 SeitenSOLUS Is An Autonomous System That Enables Hyper-Personalized Engagement With Individual Customers at ScaleShikhaNoch keine Bewertungen

- DTM ProjectDokument30 SeitenDTM ProjectAyush kumarNoch keine Bewertungen

- Responsible Artificial Intelligence Principles at RELXDokument10 SeitenResponsible Artificial Intelligence Principles at RELXcvpn55Noch keine Bewertungen

- Report 2 Current Trends Clinical PsychDokument16 SeitenReport 2 Current Trends Clinical PsychJoyce MercadoNoch keine Bewertungen

- Incedo TM Lighthouse For Operational Decision AutomationDokument16 SeitenIncedo TM Lighthouse For Operational Decision AutomationWilliam HenryNoch keine Bewertungen

- Sea To Sea 2022 - Round1 - Capitalist CrewDokument20 SeitenSea To Sea 2022 - Round1 - Capitalist Crew34-12C2A- Huỳnh Thị Thu UyênNoch keine Bewertungen

- Brochure - Predictive Analytics Summit 2020 PDFDokument10 SeitenBrochure - Predictive Analytics Summit 2020 PDFKaustubh SinghNoch keine Bewertungen

- Management Information System: 2nd Semester, School Year 2016 - 2017 Instructor: Mr. Ronald Gomez Subject Code: MGT 114Dokument4 SeitenManagement Information System: 2nd Semester, School Year 2016 - 2017 Instructor: Mr. Ronald Gomez Subject Code: MGT 114anon_461820450Noch keine Bewertungen

- Ujet AiDokument2 SeitenUjet AiSudip GhoshNoch keine Bewertungen

- AR Case StudyDokument15 SeitenAR Case StudyRajesh RamakrishnanNoch keine Bewertungen

- AI Machine LearningDokument18 SeitenAI Machine LearningAbdelhkim KechNoch keine Bewertungen

- Artificial Intelligence: Decoding AI For Internal Audit Pankaj Kumar Ca, Cfa, IsaDokument9 SeitenArtificial Intelligence: Decoding AI For Internal Audit Pankaj Kumar Ca, Cfa, Isabooks oneNoch keine Bewertungen

- Artificial Intelligence DemoDokument15 SeitenArtificial Intelligence DemoRahul RajNoch keine Bewertungen

- Unit I Introduction To Lean ManufacturingDokument14 SeitenUnit I Introduction To Lean Manufacturingtamilselvan nNoch keine Bewertungen

- Transforming Healthcare Through Artificial Intelligence SystemsDokument19 SeitenTransforming Healthcare Through Artificial Intelligence Systemspharm.ajbashirNoch keine Bewertungen

- PWC - Sizing The PrizeDokument32 SeitenPWC - Sizing The PrizeAlpArıbalNoch keine Bewertungen

- A Pac CX Summit 1591760220138Dokument58 SeitenA Pac CX Summit 1591760220138A.V.RamyaNoch keine Bewertungen

- PDF ProposalDokument20 SeitenPDF ProposalrearcowNoch keine Bewertungen

- Lecture 2 - Customer BehaviourDokument58 SeitenLecture 2 - Customer BehaviourLIN XIAOLINoch keine Bewertungen

- Engagemen T On Doctorrx: Shubham AgarwalDokument11 SeitenEngagemen T On Doctorrx: Shubham AgarwalSanika ChhabraNoch keine Bewertungen

- AI in Leadership: Navigating the FutureVon EverandAI in Leadership: Navigating the FutureNoch keine Bewertungen

- Group 17 BRM ProjectDokument24 SeitenGroup 17 BRM ProjectRishab MaheshwariNoch keine Bewertungen

- The Cognitive Enterprise For HCM in Retail Powered by Ibm and Oracle - 46027146USENDokument29 SeitenThe Cognitive Enterprise For HCM in Retail Powered by Ibm and Oracle - 46027146USENByte MeNoch keine Bewertungen

- ECH5514 Chap3 1032017 StudentDokument56 SeitenECH5514 Chap3 1032017 Studentmohamad azrilNoch keine Bewertungen

- Ibm SecurityDokument12 SeitenIbm SecurityEmanuil RednicNoch keine Bewertungen

- Workbook Week 7Dokument12 SeitenWorkbook Week 7manolosailesNoch keine Bewertungen

- Notes On Cognitive IntelligenceDokument4 SeitenNotes On Cognitive IntelligenceshamNoch keine Bewertungen

- Product DevelopmentDokument12 SeitenProduct DevelopmentNina Nursita RamadhanNoch keine Bewertungen

- Ai PresentationDokument10 SeitenAi Presentationds1048Noch keine Bewertungen

- Collaborative IntelligenceDokument37 SeitenCollaborative Intelligencearun singhNoch keine Bewertungen

- HP ArcSight User BehaviorDokument2 SeitenHP ArcSight User BehaviorIcursoCLNoch keine Bewertungen

- Product Thinking EthicsDokument28 SeitenProduct Thinking Ethics朱宸烨Noch keine Bewertungen

- Executive Summary Customer Experience DesignDokument32 SeitenExecutive Summary Customer Experience DesignAdrian NewNoch keine Bewertungen

- Konica Minolta ICW Personae BrochureDokument22 SeitenKonica Minolta ICW Personae BrochureahmedNoch keine Bewertungen

- Anthropic - Constitutional AIDokument2 SeitenAnthropic - Constitutional AImsmmcpbtergjmalueuNoch keine Bewertungen

- Shostack - Designing Services That DeliverDokument8 SeitenShostack - Designing Services That Delivermother materialNoch keine Bewertungen

- HBR Report The Great Data ExchangeDokument16 SeitenHBR Report The Great Data Exchangeapurva0jainNoch keine Bewertungen

- Deloitte Belgium - AI BrochureDokument4 SeitenDeloitte Belgium - AI BrochureMorad FaragNoch keine Bewertungen

- The 7 Most Popular Prioritization Frameworks: Comparison: Moscow Value Vs Effort RiceDokument2 SeitenThe 7 Most Popular Prioritization Frameworks: Comparison: Moscow Value Vs Effort Ricesreehitha bharadwajNoch keine Bewertungen

- Nextgen Risk Management: How Do Machines Make Decisions?Dokument18 SeitenNextgen Risk Management: How Do Machines Make Decisions?Piyush KulkarniNoch keine Bewertungen

- Winner Tech Sol.Dokument10 SeitenWinner Tech Sol.primeforenjoyNoch keine Bewertungen

- OpenSAP Aie1 Unit 3 PILLAR PresentationDokument10 SeitenOpenSAP Aie1 Unit 3 PILLAR Presentationessiekamau13Noch keine Bewertungen

- By Team MinddevDokument22 SeitenBy Team Minddevna feNoch keine Bewertungen

- Qualitative ResearchDokument18 SeitenQualitative ResearchLily HânNoch keine Bewertungen

- Detail - Security SolutionsDokument2 SeitenDetail - Security SolutionsCarlos RiveraNoch keine Bewertungen

- MosDokument20 SeitenMosgagananeja11Noch keine Bewertungen

- A Reimagined Future of PossibilitiesDokument11 SeitenA Reimagined Future of PossibilitiesAbha SinghNoch keine Bewertungen

- PLRD1.1.14 - SOP For Planning Suspicious Person, Vehicle or Activities ExerciseDokument7 SeitenPLRD1.1.14 - SOP For Planning Suspicious Person, Vehicle or Activities ExerciseClement Chan100% (2)

- PLRD1.1.16 - SOP For Planning A Red Teaming ExerciseDokument8 SeitenPLRD1.1.16 - SOP For Planning A Red Teaming ExerciseClement ChanNoch keine Bewertungen

- Standards For Privacy-By-Design: Antonio Kung, CTO 25 Rue Du Général Foy, 75008 ParisDokument39 SeitenStandards For Privacy-By-Design: Antonio Kung, CTO 25 Rue Du Général Foy, 75008 ParisClement ChanNoch keine Bewertungen

- Nymity Terry Mcquay Teresa Troester-Falk 002Dokument19 SeitenNymity Terry Mcquay Teresa Troester-Falk 002Clement ChanNoch keine Bewertungen

- Step by Step Guidebook On Best SourcingDokument98 SeitenStep by Step Guidebook On Best SourcingClement ChanNoch keine Bewertungen

- Advisory Guidelines On Key Concepts in The Pdpa (Revised 9 October 2019)Dokument7 SeitenAdvisory Guidelines On Key Concepts in The Pdpa (Revised 9 October 2019)Clement ChanNoch keine Bewertungen

- Advisory Guidelines On Key Concepts in The Pdpa (Revised 9 October 2019)Dokument4 SeitenAdvisory Guidelines On Key Concepts in The Pdpa (Revised 9 October 2019)Clement ChanNoch keine Bewertungen

- Chapter 13 9 Oct 2019Dokument2 SeitenChapter 13 9 Oct 2019Clement ChanNoch keine Bewertungen

- Chapter 17 9 Oct 2019Dokument3 SeitenChapter 17 9 Oct 2019Clement ChanNoch keine Bewertungen

- The Much Anticipated: ISO/IEC 27701:2019Dokument6 SeitenThe Much Anticipated: ISO/IEC 27701:2019Clement ChanNoch keine Bewertungen

- Chapter 15 9 Oct 2019Dokument19 SeitenChapter 15 9 Oct 2019Clement ChanNoch keine Bewertungen

- Data Protection Management Programme: SGD (Incl. 7% GST) (Incl. 7% GST) SGD Self-Funded Company-SponsoredDokument1 SeiteData Protection Management Programme: SGD (Incl. 7% GST) (Incl. 7% GST) SGD Self-Funded Company-SponsoredClement ChanNoch keine Bewertungen

- Chapter 11 9 Oct 2019Dokument2 SeitenChapter 11 9 Oct 2019Clement ChanNoch keine Bewertungen

- Chapter 10 9 Oct 2019Dokument2 SeitenChapter 10 9 Oct 2019Clement ChanNoch keine Bewertungen

- Chapter 3to9 9 Oct 2019Dokument23 SeitenChapter 3to9 9 Oct 2019Clement ChanNoch keine Bewertungen

- Guide To Developing A Data Protection Management Programme 22 May 2019Dokument29 SeitenGuide To Developing A Data Protection Management Programme 22 May 2019Clement ChanNoch keine Bewertungen

- Chapter 1to2 9 Oct 2019Dokument3 SeitenChapter 1to2 9 Oct 2019Clement ChanNoch keine Bewertungen

- Advisory Guidelines For Management Corporations - 110319Dokument18 SeitenAdvisory Guidelines For Management Corporations - 110319Clement ChanNoch keine Bewertungen

- A Guide To Notification 11 SEPTEMBER 2014Dokument37 SeitenA Guide To Notification 11 SEPTEMBER 2014Clement ChanNoch keine Bewertungen

- Guide For Printing Processes For Organisations Published 3 May 2018Dokument18 SeitenGuide For Printing Processes For Organisations Published 3 May 2018Clement ChanNoch keine Bewertungen

- Ag PDPC Updates Guide To Managing Data Breaches 20 and Issues New Guide On Active EnforcementDokument4 SeitenAg PDPC Updates Guide To Managing Data Breaches 20 and Issues New Guide On Active EnforcementClement ChanNoch keine Bewertungen

- Advisory Guidelines On In-Vehicle Recordings - Updated-22 May 2018Dokument19 SeitenAdvisory Guidelines On In-Vehicle Recordings - Updated-22 May 2018Clement ChanNoch keine Bewertungen

- Advisory Guidelines For The Real Estate Agency Sector 16 MAY 2014Dokument21 SeitenAdvisory Guidelines For The Real Estate Agency Sector 16 MAY 2014Clement ChanNoch keine Bewertungen

- Guide To Managing Data Breaches 2-0Dokument39 SeitenGuide To Managing Data Breaches 2-0Longyin WangNoch keine Bewertungen

- Advisory Guidelines For The Healthcare SectorDokument28 SeitenAdvisory Guidelines For The Healthcare SectorClement ChanNoch keine Bewertungen

- Guide To Handling Access Requests 09 JUNE 2016Dokument14 SeitenGuide To Handling Access Requests 09 JUNE 2016Clement ChanNoch keine Bewertungen

- Escario Vs NLRCDokument10 SeitenEscario Vs NLRCnat_wmsu2010Noch keine Bewertungen

- Micron Interview Questions Summary # Question 1 Parsing The HTML WebpagesDokument2 SeitenMicron Interview Questions Summary # Question 1 Parsing The HTML WebpagesKartik SharmaNoch keine Bewertungen

- KSU OGE 23-24 AffidavitDokument1 SeiteKSU OGE 23-24 Affidavitsourav rorNoch keine Bewertungen

- Chapter 5Dokument3 SeitenChapter 5Showki WaniNoch keine Bewertungen

- CoDokument80 SeitenCogdayanand4uNoch keine Bewertungen

- Underwater Wellhead Casing Patch: Instruction Manual 6480Dokument8 SeitenUnderwater Wellhead Casing Patch: Instruction Manual 6480Ragui StephanosNoch keine Bewertungen

- P 1 0000 06 (2000) - EngDokument34 SeitenP 1 0000 06 (2000) - EngTomas CruzNoch keine Bewertungen

- Internship ReportDokument46 SeitenInternship ReportBilal Ahmad100% (1)

- Online EarningsDokument3 SeitenOnline EarningsafzalalibahttiNoch keine Bewertungen

- Instructions For Microsoft Teams Live Events: Plan and Schedule A Live Event in TeamsDokument9 SeitenInstructions For Microsoft Teams Live Events: Plan and Schedule A Live Event in TeamsAnders LaursenNoch keine Bewertungen

- Using Boss Tone Studio For Me-25Dokument4 SeitenUsing Boss Tone Studio For Me-25Oskar WojciechowskiNoch keine Bewertungen

- Sustainable Urban Mobility Final ReportDokument141 SeitenSustainable Urban Mobility Final ReportMaria ClapaNoch keine Bewertungen

- BMA Recital Hall Booking FormDokument2 SeitenBMA Recital Hall Booking FormPaul Michael BakerNoch keine Bewertungen

- COOKERY10 Q2W4 10p LATOJA SPTVEDokument10 SeitenCOOKERY10 Q2W4 10p LATOJA SPTVECritt GogolinNoch keine Bewertungen

- BluetoothDokument28 SeitenBluetoothMilind GoratelaNoch keine Bewertungen

- PCDokument4 SeitenPCHrithik AryaNoch keine Bewertungen

- Difference Between Mountain Bike and BMXDokument3 SeitenDifference Between Mountain Bike and BMXShakirNoch keine Bewertungen

- Mid Term Exam 1Dokument2 SeitenMid Term Exam 1Anh0% (1)

- 18PGHR11 - MDI - Aditya JainDokument4 Seiten18PGHR11 - MDI - Aditya JainSamanway BhowmikNoch keine Bewertungen

- BASUG School Fees For Indigene1Dokument3 SeitenBASUG School Fees For Indigene1Ibrahim Aliyu GumelNoch keine Bewertungen

- Ajp Project (1) MergedDokument22 SeitenAjp Project (1) MergedRohit GhoshtekarNoch keine Bewertungen

- Hayashi Q Econometica 82Dokument16 SeitenHayashi Q Econometica 82Franco VenesiaNoch keine Bewertungen

- Action Plan Lis 2021-2022Dokument3 SeitenAction Plan Lis 2021-2022Vervie BingalogNoch keine Bewertungen

- Amare Yalew: Work Authorization: Green Card HolderDokument3 SeitenAmare Yalew: Work Authorization: Green Card HolderrecruiterkkNoch keine Bewertungen

- WEEK6 BAU COOP DM NextGen CRMDokument29 SeitenWEEK6 BAU COOP DM NextGen CRMOnur MutluayNoch keine Bewertungen