[ evidence in practice ]

STEVEN J. KAMPER, PhD1

Reliability and Validity: Linking Evidence to Practice

J Orthop Sports Phys Ther 2019;49(4):286-287. doi:10.2519/jospt.2019.0702

1 School of Public Health, University of Sydney, Camperdown, Australia; Centre for Pain, Health and Lifestyle, Australia. Copyright ©2019 Journal of Orthopaedic & Sports Physical Therapy®

Downloaded from www.jospt.org by Springfield College on 04/01/19. For personal use only.

The previous Evidence in Practice article introduced the idea of the “construct,” or what you are interested in measuring, for example, pain, disability, or strength. As there are often numerous measures available for any given construct, how do you choose which to use? Of the number of considerations that go into this, none are more important than reliability and validity.

It is no overstatement to say that if a measure is not both sufficiently reliable and valid, then it is not fit for purpose.

Reliability

Formally, reliability is the extent to which a measurement is free from error. In practice, a reliable measure is one that gives you the same answer when you measure the same construct several times. Consider the example of measuring height (the construct) with a tape measure (the measure). You might measure a person’s height in millimeters 3 times with the tape measure; the extent to which the number of millimeters is the same on each occasion is the reliability of the measure.

The implications of unreliable measures are serious. If an unreliable diagnostic test (measure) was applied to a patient several times, then the same patient might be diagnosed as both having and not having the condition on different occasions or by different people. If an unreliable measure of symptom severity was collected from a patient before and after an intervention, then it would be impossible to tell whether that symptom improved, stayed the same, or got worse. Essentially, data collected from unreliable measures do not provide useful information; a measure that is not reliable cannot be valid.

There are several different types of reliability, each uniquely relevant to situations in which the measures might be used. Intrarater reliability refers to the situation where the same rater takes the measure on one patient on several occasions, and reliability is the extent to which the scores from the successive measurements are the same. Interrater reliability is relevant when multiple raters use the same measure on a single person, and reliability is the extent to which scores from the different raters are the same.

Validity

Validity is the extent to which the score on a measure truly reflects the construct it is supposed to measure. This is relatively straightforward when it comes to things like height or strength, but waters quickly become murky when we consider unobservable or “latent” constructs such as pain, quality of life, or disability. For these sorts of constructs, we collect indirect measures, such as self-reported experiences and behaviors, or recall of beliefs and emotions, and assume that these reflect the construct. For example, we might ask a patient to answer the 24 questions of the Roland-Morris Disability Questionnaire (the measure) and score his or her level of back pain–related disability (construct) by adding up the number of “yes” responses. The patient’s score out of 24 is valid to the extent that the questions really reflect the construct of disability and to the extent that having difficulty with more of the items reflects greater disability.

There are several different types of validity relevant to clinical measures, the most commonly assessed being construct validity. When researchers assess the construct validity of a measure, they are ideally able to compare their measure to a “gold standard.” For example, arthroscopic visualization of the anterior cruciate ligament is considered a gold standard of anterior cruciate ligament rupture, so a study might compare results from Lachman’s test to the findings from arthroscopy to assess the validity of Lachman’s test.

Unfortunately, there are no gold standards for many constructs in which we are interested (eg, latent constructs such as disability and pain). In these cases, construct validity is tested against a “reference standard,” which is a sort of imperfect gold standard. When there is no gold standard, the best way to test validity is via hypothesis testing. This involves setting out a series of hypotheses before collecting the data. These hypotheses are theorized relationships between a score on the measure and other characteristics, for example, that the score will be strongly correlated with scores on another measure of the same construct and less strongly correlated with scores on a different but related construct. The extent to which the data are consistent with the predetermined hypotheses will be evidence supporting validity of the measure.

Statistics

Testing reliability and validity generally involves assessing agreement between 2 scores, either scores on the same measure collected twice (reliability) or scores on different measures (validity). The statistics used to describe agreement depend on whether the measures are dichotomous (eg, kappa, sensitivity/specificity) or continuous (eg, intraclass correlation coefficients, correlations, limits of agreement, R²).

Conclusion

There are a couple of important general points to note about reliability and validity. First, both are on a spectrum, so measures are not “unreliable” or “reliable,” but more or less reliable and more or less valid. Of course, this makes choosing measures more difficult; we need to make a subjective judgment as to whether a measure is “reliable enough” and “valid enough” in a particular situation. General guidelines exist to help interpret reliability and validity statistics, but these guidelines do not and should not replace clinical judgment. The second point is that no measure sits on the very end of the spectrum; there is no perfectly reliable and perfectly valid measure. Even in the case of measuring height with a tape measure, successive measures are likely to differ by a few millimeters here and there. Finally, there are practical concerns when it comes to choosing a measure, including how long it takes to administer, whether the patient can comprehend text or instructions, and how data will be stored and used.

Measurement is an entire field of research by itself. Although the general concepts are quite straightforward, you do not have to scratch too far below the surface before things become complicated. When reading research, you should look for information that reassures you that the measures used are sufficiently reliable and valid. The take-home message: be very cautious about using, or trying to interpret, information from a measure if you have no information about its reliability and validity.
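To make the hypothesis-testing approach to construct validity more concrete, the sketch below fixes two hypotheses in advance and then checks whether the correlations are consistent with them. Everything in it is an assumption made for illustration: the function names, the 0.7 cutoff for "strongly correlated," and the scores themselves, which are invented and do not come from any study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / math.sqrt(ss_x * ss_y)


def check_validity_hypotheses(new, same_construct, related_construct, strong=0.7):
    """Compare observed correlations with two hypotheses stated in advance.

    H1: the new measure correlates strongly with another measure of the
        same construct (the `strong` cutoff is an assumed value).
    H2: it correlates less strongly with a different but related construct.
    """
    r_same = pearson_r(new, same_construct)
    r_related = pearson_r(new, related_construct)
    return {
        "r_same": r_same,
        "r_related": r_related,
        "h1_strong_with_same_construct": abs(r_same) >= strong,
        "h2_weaker_with_related_construct": abs(r_related) < abs(r_same),
    }


# Invented scores for six patients (illustration only, not real data).
new_measure = [4, 9, 12, 15, 18, 21]
same_construct = [10, 22, 30, 35, 47, 50]
related_construct = [3, 8, 2, 9, 5, 7]

result = check_validity_hypotheses(new_measure, same_construct, related_construct)
for name, value in result.items():
    print(name, value)
```

The more of the predetermined hypotheses the data satisfy, the stronger the evidence for validity; a single supported hypothesis on a small sample like this one would not be convincing on its own.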

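For dichotomous measures, the article names kappa and sensitivity/specificity as agreement statistics. The following is a minimal sketch of both, assuming ratings coded as 1 (positive) and 0 (negative). The function names and all ratings are hypothetical; the Lachman and arthroscopy values are invented to echo the article's example and are not study data.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of patients on whom the two raters gave the same rating.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


def sensitivity_specificity(index_test, reference):
    """Sensitivity and specificity of an index test against a reference standard."""
    pairs = list(zip(index_test, reference))
    tp = sum(1 for t, r in pairs if t == 1 and r == 1)
    fn = sum(1 for t, r in pairs if t == 0 and r == 1)
    tn = sum(1 for t, r in pairs if t == 0 and r == 0)
    fp = sum(1 for t, r in pairs if t == 1 and r == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference standard must contain both positives and negatives")
    return tp / (tp + fn), tn / (tn + fp)


# Interrater reliability: two raters apply the same test to ten patients
# (invented ratings, illustration only).
rater_1 = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
rater_2 = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
print("kappa:", round(cohen_kappa(rater_1, rater_2), 2))

# Validity: Lachman's test compared with arthroscopy as the gold standard
# (invented results, illustration only).
arthroscopy = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
lachman = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(lachman, arthroscopy)
print("sensitivity:", sens, "specificity:", spec)
```

Kappa is used here rather than raw percent agreement because two raters will agree on some patients by chance alone; kappa reports how far the observed agreement exceeds that chance level.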

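For continuous measures, limits of agreement are one of the statistics the article lists. As a rough sketch, the code below computes the mean difference and 95% limits of agreement (mean difference plus or minus 1.96 standard deviations of the differences) for the article's example of measuring height twice with a tape measure. The function name and the heights are hypothetical and invented for illustration; the 1.96 multiplier assumes the differences are approximately normally distributed.

```python
import statistics


def limits_of_agreement(first, second, z=1.96):
    """Mean difference and limits of agreement between two sets of measurements.

    Returns (bias, lower, upper), where bias is the mean of the paired
    differences and the limits are bias -/+ z * SD of the differences.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    diffs = [a - b for a, b in zip(first, second)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return bias, bias - z * sd, bias + z * sd


# Heights of five people in millimeters, measured on two occasions
# (invented values, illustration only).
first_mm = [1702, 1655, 1810, 1768, 1593]
second_mm = [1704, 1654, 1807, 1770, 1595]

bias, lower, upper = limits_of_agreement(first_mm, second_mm)
print(f"mean difference: {bias:.1f} mm")
print(f"95% limits of agreement: {lower:.1f} to {upper:.1f} mm")
```

Whether limits of this width count as "reliable enough" is the subjective judgment the article describes: a few millimeters may be irrelevant for one clinical purpose and too much for another.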

Das könnte Ihnen auch gefallen

- Evaluating a Psychometric Test as an Aid to SelectionVon EverandEvaluating a Psychometric Test as an Aid to SelectionBewertung: 5 von 5 Sternen5/5 (1)

- Confidence Intervals: Linking Evidence To PracticeDokument2 SeitenConfidence Intervals: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- Validity and ReliabilityDokument5 SeitenValidity and Reliabilityrawan.taha2000.rtmNoch keine Bewertungen

- Validity and Reliability in Quantitative Studies: Roberta Heale, Alison TwycrossDokument2 SeitenValidity and Reliability in Quantitative Studies: Roberta Heale, Alison TwycrossAnita DuwalNoch keine Bewertungen

- Reliability What Type Please PDFDokument4 SeitenReliability What Type Please PDFckNoch keine Bewertungen

- 66 FullDokument3 Seiten66 FullJepe LlorenteNoch keine Bewertungen

- Validity and Reliability in Quantitative Studies: Roberta Heale, Alison TwycrossDokument1 SeiteValidity and Reliability in Quantitative Studies: Roberta Heale, Alison TwycrossJemarie FernandezNoch keine Bewertungen

- Unit 2 Reliability and Validity (External and Internal) : StructureDokument19 SeitenUnit 2 Reliability and Validity (External and Internal) : StructureVeenaVidhyaSri VijayakumarNoch keine Bewertungen

- Classics in The History of Psychology: Psyc 115: Test ConstructionDokument3 SeitenClassics in The History of Psychology: Psyc 115: Test Constructionjulie_colegadoNoch keine Bewertungen

- Unit 9 - Validity and Reliability of A Research InstrumentDokument17 SeitenUnit 9 - Validity and Reliability of A Research InstrumentazharmalurNoch keine Bewertungen

- Testing The Reliability and Validity of The Self-Effica.. PDFDokument13 SeitenTesting The Reliability and Validity of The Self-Effica.. PDFnurona azizahNoch keine Bewertungen

- Summary Chapter Vi To X (Io Psych Aamodt)Dokument16 SeitenSummary Chapter Vi To X (Io Psych Aamodt)Joseanne Marielle100% (2)

- Validity and ReliabilityDokument3 SeitenValidity and ReliabilitybarbaragodoycensiNoch keine Bewertungen

- Concept of Reliability and ValidityDokument6 SeitenConcept of Reliability and ValidityAnjaliNoch keine Bewertungen

- Reliability and Validity of Research Instruments: Correspondence ToDokument19 SeitenReliability and Validity of Research Instruments: Correspondence TonurwadiaNoch keine Bewertungen

- Measures of Reliability in Sports Medicine and Science: Will G. HopkinsDokument15 SeitenMeasures of Reliability in Sports Medicine and Science: Will G. HopkinsAlexandre FerreiraNoch keine Bewertungen

- Evaluating An Assessment Scale of Irrational Beliefs For People With Mental Health ProblemsDokument15 SeitenEvaluating An Assessment Scale of Irrational Beliefs For People With Mental Health Problemsirina97mihaNoch keine Bewertungen

- Valadity and ReliabilityDokument12 SeitenValadity and ReliabilitySuraj ThakuriNoch keine Bewertungen

- Strong Theory Testing Using The Prior Predictive and The Data PriorDokument10 SeitenStrong Theory Testing Using The Prior Predictive and The Data PriorwlfgngvnpmlNoch keine Bewertungen

- AM Last Page: Reliability and Validity in Educational MeasurementDokument2 SeitenAM Last Page: Reliability and Validity in Educational MeasurementKhurram FawadNoch keine Bewertungen

- Validity and ReliabilityDokument15 SeitenValidity and ReliabilityMelii SafiraNoch keine Bewertungen

- G-8 ReportDokument14 SeitenG-8 ReportBlesse PateñoNoch keine Bewertungen

- Lesson 15 Validity of Measurement and Reliability PDFDokument3 SeitenLesson 15 Validity of Measurement and Reliability PDFMarkChristianRobleAlmazanNoch keine Bewertungen

- Validity and Reliability in Quantitative Research: Evidence-Based Nursing January 2015Dokument4 SeitenValidity and Reliability in Quantitative Research: Evidence-Based Nursing January 2015PascalinaNoch keine Bewertungen

- Introduction To Validity and ReliabilityDokument6 SeitenIntroduction To Validity and ReliabilityWinifridaNoch keine Bewertungen

- Submitted To: Dr. Ghias Ul Haq. Submitted By: Noorulhadi Qureshi (PHD Scholar)Dokument27 SeitenSubmitted To: Dr. Ghias Ul Haq. Submitted By: Noorulhadi Qureshi (PHD Scholar)iqraNoch keine Bewertungen

- Instrument Reliability and ValidityDokument2 SeitenInstrument Reliability and ValidityNathoNoch keine Bewertungen

- 8602 2nd AssignmentDokument48 Seiten8602 2nd AssignmentWaqas AhmadNoch keine Bewertungen

- 4 Ways of Knowing (Reference Balita C. Et Al)Dokument5 Seiten4 Ways of Knowing (Reference Balita C. Et Al)JEREMY MAKALINTALNoch keine Bewertungen

- Reliability Vs Validity in ResearchDokument12 SeitenReliability Vs Validity in ResearchnurwadiaNoch keine Bewertungen

- STAR CriteriaDokument5 SeitenSTAR CriteriaragahkbsNoch keine Bewertungen

- Unit 2 Reliability and Validity (External and Internal) : StructureDokument15 SeitenUnit 2 Reliability and Validity (External and Internal) : StructureBikash Kumar MallickNoch keine Bewertungen

- Week 9 SlidesDokument64 SeitenWeek 9 Slidesnokhochi55Noch keine Bewertungen

- Validity and Reliability in Quantitative Research: Evidence-Based Nursing January 2015Dokument4 SeitenValidity and Reliability in Quantitative Research: Evidence-Based Nursing January 2015Rohan SawantNoch keine Bewertungen

- Kamper2020 EvidenceinPract 14-RiskofBias JOSPTDokument3 SeitenKamper2020 EvidenceinPract 14-RiskofBias JOSPTRoger AndreyNoch keine Bewertungen

- OnlundDokument12 SeitenOnlundapi-3764755100% (2)

- Reliability by Vartika VermaDokument17 SeitenReliability by Vartika VermaVartika VermaNoch keine Bewertungen

- Psychology of Sport and Exercise: Stuart Beattie, Lew Hardy, Jennifer Savage, Tim Woodman, Nichola CallowDokument8 SeitenPsychology of Sport and Exercise: Stuart Beattie, Lew Hardy, Jennifer Savage, Tim Woodman, Nichola CallowMuhammad SaifulahNoch keine Bewertungen

- ValidityDokument4 SeitenValidityRochelle Alava CercadoNoch keine Bewertungen

- What Is Validity?: The Graphic Below Portrays The Same IdeaDokument5 SeitenWhat Is Validity?: The Graphic Below Portrays The Same IdeaBharti KumariNoch keine Bewertungen

- Reliability Vs Validity in Research - Differences, Types and ExamplesDokument10 SeitenReliability Vs Validity in Research - Differences, Types and ExamplesHisham ElhadidiNoch keine Bewertungen

- CHAPTER 11: Establishing The Validity and Reliability of A Research InstrumentDokument2 SeitenCHAPTER 11: Establishing The Validity and Reliability of A Research Instrumentalia.delareine100% (3)

- Thursday Validity, Reliability and GeneralizabilityDokument16 SeitenThursday Validity, Reliability and GeneralizabilityThomas Adam Johnson100% (1)

- 07 Fundamentals of MeasurementDokument2 Seiten07 Fundamentals of MeasurementTomáš KrajíčekNoch keine Bewertungen

- 8602 Assignment No 2Dokument24 Seiten8602 Assignment No 2Fatima YousafNoch keine Bewertungen

- 2 - Validitas Dalam Penelitian EksperimenDokument44 Seiten2 - Validitas Dalam Penelitian Eksperimensilmi sofyanNoch keine Bewertungen

- Validity and Reliability of A Research InstrumentDokument11 SeitenValidity and Reliability of A Research InstrumentJavaria EhsanNoch keine Bewertungen

- Medidas de Stress en Ambientes Virtuales MehaanDokument8 SeitenMedidas de Stress en Ambientes Virtuales MehaanAleVásquezNoch keine Bewertungen

- Uncertainty Measure in Evidence Theory With Its ApplicationsDokument17 SeitenUncertainty Measure in Evidence Theory With Its Applications弘瑞 蒋Noch keine Bewertungen

- The Reliability Factor Estimating Individual Reliability With Multiple Items On A Single Occasion V2Dokument19 SeitenThe Reliability Factor Estimating Individual Reliability With Multiple Items On A Single Occasion V2kurniawan saputraNoch keine Bewertungen

- Unit 2Dokument26 SeitenUnit 2MmaNoch keine Bewertungen

- Types of ValidityDokument5 SeitenTypes of ValidityMelody BautistaNoch keine Bewertungen

- Expe Chap 7 G3Dokument11 SeitenExpe Chap 7 G3Nadine Chanelle QuitorianoNoch keine Bewertungen

- ValidityDokument2 SeitenValidityYvette OmNoch keine Bewertungen

- 23 March 2021 Characteristics of A Good Test (Reliability)Dokument6 Seiten23 March 2021 Characteristics of A Good Test (Reliability)Bunga RefiraNoch keine Bewertungen

- 5 1 1 Reliability and ValidityDokument2 Seiten5 1 1 Reliability and ValiditySonia MiminNoch keine Bewertungen

- TD Chapter3Dokument44 SeitenTD Chapter3Juan MayorgaNoch keine Bewertungen

- Getting Serious About Test-Retest Reliability: A Critique of Retest Research and Some RecommendationsDokument8 SeitenGetting Serious About Test-Retest Reliability: A Critique of Retest Research and Some Recommendationslengers poworNoch keine Bewertungen

- Presentation OutlineDokument6 SeitenPresentation OutlineMKWD NRWMNoch keine Bewertungen

- ValidityDokument26 SeitenValidityRathiNoch keine Bewertungen

- PE067 Handout Shoulder Special Tests and The Rotator Cuff With DR Chris LittlewoodDokument5 SeitenPE067 Handout Shoulder Special Tests and The Rotator Cuff With DR Chris LittlewoodTomáš KrajíčekNoch keine Bewertungen

- PE064 Calf Pain in Runners HandoutDokument3 SeitenPE064 Calf Pain in Runners HandoutTomáš KrajíčekNoch keine Bewertungen

- McKenzie Mechanical Syndromes Coincide With Biopsychosocial InfluencesDokument14 SeitenMcKenzie Mechanical Syndromes Coincide With Biopsychosocial InfluencesTomáš KrajíčekNoch keine Bewertungen

- Tendinopathy Rehabilitation - PhysiopediaDokument11 SeitenTendinopathy Rehabilitation - PhysiopediaTomáš KrajíčekNoch keine Bewertungen

- PE066 Treatment of Calf Pain in Runners HandoutDokument3 SeitenPE066 Treatment of Calf Pain in Runners HandoutTomáš KrajíčekNoch keine Bewertungen

- PE 065 InfographicDokument1 SeitePE 065 InfographicTomáš KrajíčekNoch keine Bewertungen

- 1 PE 067 InfographicDokument1 Seite1 PE 067 InfographicTomáš KrajíčekNoch keine Bewertungen

- The Ef Cacy of Systematic Active Conservative Treatment For Patients With Severe SciaticaDokument12 SeitenThe Ef Cacy of Systematic Active Conservative Treatment For Patients With Severe SciaticaTomáš KrajíčekNoch keine Bewertungen

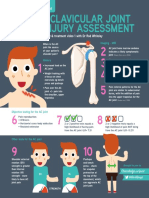

- AC Joint InfographicDokument1 SeiteAC Joint InfographicTomáš KrajíčekNoch keine Bewertungen

- Construct Validity of Lumbar Extension Measures in Mckenzie'S Derangement SyndromeDokument7 SeitenConstruct Validity of Lumbar Extension Measures in Mckenzie'S Derangement SyndromeTomáš KrajíčekNoch keine Bewertungen

- Randomization: Linking Evidence To PracticeDokument2 SeitenRandomization: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- Generalizability: Linking Evidence To PracticeDokument2 SeitenGeneralizability: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- 07 Fundamentals of MeasurementDokument2 Seiten07 Fundamentals of MeasurementTomáš KrajíčekNoch keine Bewertungen

- Control Groups: Linking Evidence To PracticeDokument2 SeitenControl Groups: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- Interpreting Outcomes 3 - Clinical Meaningfulness: Linking Evidence To PracticeDokument2 SeitenInterpreting Outcomes 3 - Clinical Meaningfulness: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- Blinding: Linking Evidence To PracticeDokument2 SeitenBlinding: Linking Evidence To PracticeTomáš KrajíčekNoch keine Bewertungen

- Engaging With Research: Linking Evidence With PracticeDokument2 SeitenEngaging With Research: Linking Evidence With PracticeTomáš KrajíčekNoch keine Bewertungen

- Bias: Linking Evidence With PracticeDokument2 SeitenBias: Linking Evidence With PracticeTomáš KrajíčekNoch keine Bewertungen

- Gufoni Maneuver: Horizontal Canal BPPVDokument19 SeitenGufoni Maneuver: Horizontal Canal BPPVTomáš KrajíčekNoch keine Bewertungen

- Cholera & DysenteryDokument28 SeitenCholera & DysenterySherbaz Sheikh100% (1)

- Blood Transfusion Guidelines 2014Dokument10 SeitenBlood Transfusion Guidelines 2014Trang HuynhNoch keine Bewertungen

- Running Head: VICTIM ADVOCACYDokument5 SeitenRunning Head: VICTIM ADVOCACYCatherineNoch keine Bewertungen

- Automated Drain CleanerDokument43 SeitenAutomated Drain Cleanervrinda hebbarNoch keine Bewertungen

- Cancer AwarenessDokument20 SeitenCancer AwarenessArul Nambi Ramanujam100% (3)

- Love, Lust, and Attachment: Lesson 5 - 1 - 2Dokument12 SeitenLove, Lust, and Attachment: Lesson 5 - 1 - 2Mary PaladanNoch keine Bewertungen

- Social Pragmatic Deficits Checklist Sample For Preschool ChildrenDokument5 SeitenSocial Pragmatic Deficits Checklist Sample For Preschool ChildrenBianca GonzalesNoch keine Bewertungen

- Tetralogy Hypercyanotic SpellDokument3 SeitenTetralogy Hypercyanotic SpellJunior PratasikNoch keine Bewertungen

- Drug Study For HELLP SyndromeDokument19 SeitenDrug Study For HELLP SyndromeRosemarie CarpioNoch keine Bewertungen

- Lecture Notes Module 1Dokument60 SeitenLecture Notes Module 1Bommineni Lohitha Chowdary 22213957101Noch keine Bewertungen

- Bladder Diverticulum and SepsisDokument4 SeitenBladder Diverticulum and SepsisInternational Medical PublisherNoch keine Bewertungen

- The Preoperative EvaluationDokument25 SeitenThe Preoperative Evaluationnormie littlemonsterNoch keine Bewertungen

- Schistosomes Parasite in HumanDokument27 SeitenSchistosomes Parasite in HumanAnonymous HXLczq3Noch keine Bewertungen

- Mood DisordersDokument1 SeiteMood DisordersTeresa MartinsNoch keine Bewertungen

- Laporan Master Tarif Pelayanan Rawat Jalan: Kode Nama TindakanDokument9 SeitenLaporan Master Tarif Pelayanan Rawat Jalan: Kode Nama TindakanRetna Wahyu WulandariNoch keine Bewertungen

- B.SC - Nursing 1st Year PDFDokument9 SeitenB.SC - Nursing 1st Year PDFSunita RaniNoch keine Bewertungen

- What Is Septic ShockDokument6 SeitenWhat Is Septic Shocksalome carpioNoch keine Bewertungen

- Land of NodDokument7 SeitenLand of NodMaltesers1976Noch keine Bewertungen

- Neal Sal Z Testimonials OnDokument6 SeitenNeal Sal Z Testimonials OnCarlos LiraNoch keine Bewertungen

- Sample Size PilotDokument5 SeitenSample Size PilotmsriramcaNoch keine Bewertungen

- New Prescription For Drug Makers - Update The Plants - WSJDokument5 SeitenNew Prescription For Drug Makers - Update The Plants - WSJStacy Kelly100% (1)

- SC Writ of Continuing Mandamus Case MMDA Et Al. vs. Concerned Residents of Manila Bay Et Al. GR Nos. 171947 48 2 15 2011Dokument25 SeitenSC Writ of Continuing Mandamus Case MMDA Et Al. vs. Concerned Residents of Manila Bay Et Al. GR Nos. 171947 48 2 15 2011Mary Grace Dionisio-RodriguezNoch keine Bewertungen

- Current Evidence For The Management of Schizoaffective DisorderDokument12 SeitenCurrent Evidence For The Management of Schizoaffective DisorderabdulNoch keine Bewertungen

- Compresion Isquemica PDFDokument6 SeitenCompresion Isquemica PDFIvanRomanManjarrezNoch keine Bewertungen

- The Magic of Ilizarov PDFDokument310 SeitenThe Magic of Ilizarov PDFOrto Mesp83% (6)

- Lymphoma HandoutDokument5 SeitenLymphoma Handoutapi-244850728Noch keine Bewertungen

- Cerebral EdemaDokument15 SeitenCerebral EdemaMae FlagerNoch keine Bewertungen

- Ankle SprainsDokument18 SeitenAnkle Sprainsnelson1313Noch keine Bewertungen

- NCP PcapDokument1 SeiteNCP PcapLhexie CooperNoch keine Bewertungen