
CHAPTER 6

STANDARDIZED MULTIPLE REGRESSION ANALYSIS

6.1 Introduction

A standardized multiple linear regression model is:

• Employed to control roundoff errors in normal equations calculations, and

• Used to permit comparisons of the estimated regression coefficients in common units.

The least squares method is very sensitive to rounding errors in the intermediate stages of calculation.

Roundoff errors in Normal Equations Calculations:

• Roundoff errors tend to enter normal equations calculations primarily when the inverse of $X'X$ is taken.

• The danger of serious roundoff errors in $(X'X)^{-1}$ is greatest when:

   − $X'X$ has a determinant that is close to zero, or

   − the elements of $X'X$ differ substantially in order of magnitude.

• A solution for this condition is to transform the variables and thereby reparameterize the regression model into the standardized regression model.

• The transformation used to obtain the standardized regression model is called the correlation transformation.

6.2 Correlation Transformation

Objective: To help with controlling roundoff errors.

The correlation transformation is a simple modification of the usual standardization of a variable. The usual standardizations of the response variable $Y$ and the predictor variables $X_1, X_2, \dots, X_{p-1}$ are as follows:

$$\frac{Y_i - \bar{Y}}{s_Y} \tag{6.1}$$

$$\frac{X_{ik} - \bar{X}_k}{s_k} \qquad (k = 1, \dots, p-1) \tag{6.2}$$

Regression Analysis | Chapter 6 | MTH5404| Mustafa, M. S (UPM) 1

where

$$s_Y = \sqrt{\frac{\sum_i (Y_i - \bar{Y})^2}{n-1}}, \qquad s_k = \sqrt{\frac{\sum_i (X_{ik} - \bar{X}_k)^2}{n-1}}$$

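The correlation transformation is a simple function of the standardized variables in equations (6.1) and (6.2):

$$Y_i^* = \frac{1}{\sqrt{n-1}} \left( \frac{Y_i - \bar{Y}}{s_Y} \right) \tag{6.3}$$

$$X_{ik}^* = \frac{1}{\sqrt{n-1}} \left( \frac{X_{ik} - \bar{X}_k}{s_k} \right) \qquad (k = 1, \dots, p-1) \tag{6.4}$$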
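The transformation in (6.3) and (6.4) can be sketched in a few lines of Python. This is a minimal illustration rather than part of the original notes: the function name `correlation_transform` and the sample values are assumptions. A correctly transformed column should have mean zero and a sum of squares equal to one.

```python
import math
from statistics import mean, stdev


def correlation_transform(values):
    """Apply (6.3)/(6.4): centre, divide by the sample SD, then by sqrt(n - 1)."""
    n = len(values)
    centre = mean(values)
    scale = stdev(values)  # sample standard deviation (divisor n - 1)
    return [(v - centre) / (scale * math.sqrt(n - 1)) for v in values]


# Hypothetical predictor values, used only to exercise the function.
x = [2.0, 4.0, 5.0, 6.0, 8.0, 10.0, 11.0]
x_star = correlation_transform(x)

sum_star = sum(x_star)                    # should be (numerically) zero
sum_sq_star = sum(v * v for v in x_star)  # should be (numerically) one
print(sum_star, sum_sq_star)
```

Because every transformed column has unit length, all entries of the cross-product matrix of transformed columns lie between -1 and 1, which is what keeps the magnitudes comparable.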

6.3 Standardized Regression Model

Consider the multiple linear regression model

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_{p-1} X_{i,p-1} + \varepsilon_i$$

The regression model with the transformed variables $Y^*$ and $X_k^*$ is called a standardized regression model:

$$Y_i^* = \beta_1^* X_{i1}^* + \cdots + \beta_{p-1}^* X_{i,p-1}^* + \varepsilon_i^* \tag{6.5}$$

Why is there no intercept parameter? The least squares or maximum likelihood calculations would always lead to an estimated intercept term of zero if an intercept parameter were present in the model.

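The parameters of the original regression model are recovered from those of the standardized regression model as follows:

$$\beta_k = \left( \frac{s_Y}{s_k} \right) \beta_k^* \qquad (k = 1, \dots, p-1) \tag{6.6a}$$

$$\beta_0 = \bar{Y} - \beta_1 \bar{X}_1 - \cdots - \beta_{p-1} \bar{X}_{p-1} \tag{6.6b}$$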
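The back-transformation in (6.6) can be checked numerically. The sketch below is an illustration under stated assumptions: it uses a single predictor, made-up data, and hypothetical helper names. It fits the standardized model, confirms that an intercept on the transformed scale would be zero, and recovers the same slope and intercept as a direct least squares fit on the original scale.

```python
import math
from statistics import mean, stdev


def correlation_transform(values):
    """Correlation transformation of one column, as in (6.3) and (6.4)."""
    n = len(values)
    centre, scale = mean(values), stdev(values)
    return [(v - centre) / (scale * math.sqrt(n - 1)) for v in values]


# Illustrative data (an assumption for this sketch).
x = [2.0, 4.0, 5.0, 6.0, 8.0, 10.0, 11.0]
y = [1.0, 5.0, 3.0, 8.0, 5.0, 3.0, 10.0]

x_star = correlation_transform(x)
y_star = correlation_transform(y)

# Standardized coefficient: least squares through the origin on the starred data.
b1_star = sum(a * b for a, b in zip(x_star, y_star)) / sum(a * a for a in x_star)

# The intercept an ordinary fit would estimate on the transformed scale.
intercept_star = mean(y_star) - b1_star * mean(x_star)

# Back-transform to the original scale, following (6.6).
b1 = (stdev(y) / stdev(x)) * b1_star
b0 = mean(y) - b1 * mean(x)

# Direct least squares on the original data, for comparison.
mx, my = mean(x), mean(y)
b1_direct = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
b0_direct = my - b1_direct * mx

print(intercept_star, (b0, b1), (b0_direct, b1_direct))
```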

6.4 X′X Matrix for Transformed Variables

When the variables have been transformed by the correlation transformation, studying the inverse matrix and the least squares normal equations requires decomposing the correlation matrix, which contains all pairwise correlation coefficients among the response and predictor variables, into two matrices:

1. The correlation matrix of the X variables. This matrix is defined as follows:

$$r_{XX} = \begin{bmatrix} 1 & r_{12} & \cdots & r_{1,p-1} \\ r_{21} & 1 & \cdots & r_{2,p-1} \\ \vdots & \vdots & \ddots & \vdots \\ r_{p-1,1} & r_{p-1,2} & \cdots & 1 \end{bmatrix} \tag{6.7}$$

2. The vector containing the coefficients of simple correlation between the response variable Y and each of the X variables:

$$r_{YX} = \begin{bmatrix} r_{Y1} \\ r_{Y2} \\ \vdots \\ r_{Y,p-1} \end{bmatrix} \tag{6.8}$$

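The X matrix of the transformed variables is:

$$X = \begin{bmatrix} X_{11}^* & \cdots & X_{1,p-1}^* \\ X_{21}^* & \cdots & X_{2,p-1}^* \\ \vdots & \ddots & \vdots \\ X_{n1}^* & \cdots & X_{n,p-1}^* \end{bmatrix} \tag{6.9}$$

For this matrix, $X'X$ is simply the correlation matrix of the X variables:

$$X'X = r_{XX} \tag{6.10}$$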

Estimated Standardized Regression Coefficients

The least squares normal equations are $X'Xb = X'Y$.

The least squares estimators are $b = (X'X)^{-1} X'Y$.

For the transformed variables, $X'Y$ becomes $X'Y = r_{YX}$.

Thus, the least squares normal equations and the estimators of the regression coefficients of the standardized regression model are as follows:

$$r_{XX}\, b = r_{YX} \tag{6.11}$$

$$b = r_{XX}^{-1}\, r_{YX} \tag{6.12}$$

where

$$b = \begin{bmatrix} b_1^* \\ b_2^* \\ \vdots \\ b_{p-1}^* \end{bmatrix}$$

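For example, when there are two independent variables:

$$X'Y = \begin{bmatrix} X_{11}^* & X_{21}^* & \cdots & X_{n1}^* \\ X_{12}^* & X_{22}^* & \cdots & X_{n2}^* \end{bmatrix} \begin{bmatrix} Y_1^* \\ Y_2^* \\ \vdots \\ Y_n^* \end{bmatrix} = \begin{bmatrix} r_{Y1} \\ r_{Y2} \end{bmatrix}$$

$$X'X = \begin{bmatrix} 1 & r_{12} \\ r_{21} & 1 \end{bmatrix} = r_{XX}$$

Hence,

$$b = \frac{1}{1 - r_{12}^2} \begin{bmatrix} 1 & -r_{12} \\ -r_{21} & 1 \end{bmatrix} \begin{bmatrix} r_{Y1} \\ r_{Y2} \end{bmatrix}$$

Thus,

$$b_1^* = \frac{r_{Y1} - r_{12}\, r_{Y2}}{1 - r_{12}^2} \qquad \text{and} \qquad b_2^* = \frac{r_{Y2} - r_{21}\, r_{Y1}}{1 - r_{12}^2}$$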
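The two-predictor case can be verified numerically. The sketch below is illustrative only: the data are hypothetical and the helper names are assumptions. It forms the cross products of the correlation-transformed columns, which play the role of the entries of $X'X$ and $X'Y$, applies the closed-form solution, and checks that the result satisfies the normal equations (6.11).

```python
import math
from statistics import mean, stdev


def correlation_transform(values):
    """Correlation transformation of one column, as in (6.3) and (6.4)."""
    n = len(values)
    centre, scale = mean(values), stdev(values)
    return [(v - centre) / (scale * math.sqrt(n - 1)) for v in values]


def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical data with two predictors (illustrative values only).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0]
y = [3.1, 3.9, 7.2, 7.8, 11.1, 11.9]

x1s, x2s, ys = (correlation_transform(v) for v in (x1, x2, y))

# Entries of X'X and X'Y for the transformed variables are correlations.
r12 = dot(x1s, x2s)
ry1 = dot(x1s, ys)
ry2 = dot(x2s, ys)

# Closed-form solution of r_XX b = r_YX for two predictors.
den = 1.0 - r12 ** 2
b1_star = (ry1 - r12 * ry2) / den
b2_star = (ry2 - r12 * ry1) / den

print(r12, ry1, ry2, b1_star, b2_star)
```

With more than two predictors the same system would normally be solved with a linear algebra routine rather than a hand-written inverse.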

Example 1: Recall the earlier dataset for Dwaine Studios, Inc., which operates portrait studios in 21 cities of medium size. These studios specialize in portraits of children.

We illustrate the calculation of the transformed data for the first case using the means and standard deviations.

6.5 Multicollinearity

One basic assumption of the multiple regression model is that there is no exact linear relationship among the independent variables in the model. If such an exact linear relationship does exist, the independent variables are said to be perfectly collinear.

Multicollinearity arises when two or more variables (or combinations of variables) are highly correlated with each other.

When the predictor variables are correlated among themselves, intercorrelation or multicollinearity among them is said to exist (when the correlation among variables is very high).

Sources of multicollinearity:

1. Method of data collection

It is expected that the data is collected over the whole cross-section of variables. It may

happen that the data is collected over a subspace of the explanatory variables where the

variables are linearly dependent. For example, sampling is done only over a limited range

of explanatory variables in the population.

2. Model and population constraints

There may exist some constraints on the model or on the population from which the sample is drawn. The sample may be generated from a part of the population in which the variables are linearly related.

3. Existence of identities or definitional relationships:

There may exist some relationships among the variables which may be due to the definition

of variables or any identity relation among them. For example, if data is collected on the

variables like income, saving and expenditure, then income = saving + expenditure. Such

relationship will not change even when the sample size increases.

4. Imprecise formulation of model

The formulation of the model may be unnecessarily complicated. For example, quadratic (or polynomial) terms or cross-product terms may appear as explanatory variables.

5. An over-determined model

Sometimes, due to over-enthusiasm, a large number of variables is included in the model to make it more realistic, and consequently the number of observations, n, becomes smaller than the number of explanatory variables, k.

NOTE

• Be careful not to apply t tests mechanically without checking for multicollinearity.

• Multicollinearity is a data problem, not a misspecification problem.

Effects of Multicollinearity

• Wrong interpretation of the regression coefficients.

• Large variance and covariance for the OLS estimators of the regression parameters.

• Unduly large (in absolute value) estimates of the regression parameters.


Example 1: Wrong sign problem

Consider the following data taken from Montgomery et al. (2006).

| Y | X1 | X2 |
|---|----|----|
| 1 | 2 | 1 |
| 5 | 4 | 2 |
| 3 | 5 | 2 |
| 8 | 6 | 4 |
| 5 | 8 | 4 |
| 3 | 10 | 4 |
| 10 | 11 | 6 |

When we fit $Y$ on $X_1$ only, we obtain the least squares line:

$$\hat{Y} = 1.3901 + 0.5493 X_1$$

When $X_2$ is added to the model, this line becomes

$$\hat{Y} = 1.014 - 1.215 X_1 + 3.643 X_2$$

The two fits clearly show that the sign of the coefficient of $X_1$ changes, which is known as the wrong sign problem.

For a possible explanation, we plot the two explanatory variables against one another. The plot shows a linear relationship with a correlation coefficient of 0.945 and a p-value of 0.000. This finding supports our suspicion that multicollinearity causes the wrong sign problem.
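The sign change can be reproduced from the seven observations tabulated in this example. The sketch below is a minimal illustration using only the Python standard library. The helper name `centered_cross` is an assumption, and the two-predictor fit is obtained by solving the 2 × 2 centred normal equations directly.

```python
from statistics import mean

# Data from the wrong sign example.
y = [1.0, 5.0, 3.0, 8.0, 5.0, 3.0, 10.0]
x1 = [2.0, 4.0, 5.0, 6.0, 8.0, 10.0, 11.0]
x2 = [1.0, 2.0, 2.0, 4.0, 4.0, 4.0, 6.0]


def centered_cross(u, v):
    """Sum of cross products of deviations from the means."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


s11, s22, s12 = centered_cross(x1, x1), centered_cross(x2, x2), centered_cross(x1, x2)
s1y, s2y = centered_cross(x1, y), centered_cross(x2, y)

# Simple regression of Y on X1 alone.
slope_simple = s1y / s11
intercept_simple = mean(y) - slope_simple * mean(x1)

# Regression of Y on X1 and X2: solve the 2x2 centred normal equations.
det = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / det
b2 = (s11 * s2y - s12 * s1y) / det
b0 = mean(y) - b1 * mean(x1) - b2 * mean(x2)

print(f"Y on X1:     {intercept_simple:.4f} + {slope_simple:.4f} X1")
print(f"Y on X1, X2: {b0:.3f} + ({b1:.3f}) X1 + {b2:.3f} X2")
```

The coefficient of $X_1$ is positive in the first fit and negative in the second, even though the data are unchanged.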


Example 2: Consider a dataset from Kutner et al. (2008).

Important Conclusion

• When predictor variables are correlated, the regression coefficient of any one variable depends on which other predictor variables are included in the model and which ones are left out.

• A regression coefficient does not reflect the effect of an individual predictor variable on the response, but only a marginal or partial effect, given whatever other correlated predictor variables are included in the model.

Another important conclusion relates to the extra sums of squares (ESS). In general, when two or more predictor variables are uncorrelated, the marginal contribution of one predictor variable in reducing the error sum of squares when the other predictor variables are in the model is exactly the same as when this predictor variable is in the model alone:

$$SSR(X_1 \mid X_2) = SSR(X_1) \tag{6.13}$$

From the table,

$$SSR(X_1 \mid X_2) = SSE(X_2) - SSE(X_1, X_2) = 248.750 - 17.625 = 231.125$$

$$SSR(X_1) = 231.125$$

Otherwise, when predictor variables are correlated, the marginal contribution of any one predictor variable in reducing the error sum of squares varies, depending on which other variables are already in the regression model, just as for regression coefficients:

$$SSR(X_1 \mid X_2) \neq SSR(X_1) \tag{6.14}$$

From the previous Body Fat example, the ESS for $X_1$ are:

$$SSR(X_1) = 352.27$$

$$SSR(X_1 \mid X_2) = 3.47$$

• $SSR(X_1 \mid X_2)$ is so small compared with $SSR(X_1)$ because $X_1$ and $X_2$ are highly correlated with each other and with the response variable.

Thus, when $X_2$ is already in the model, the marginal contribution of $X_1$ in reducing SSE is comparatively small because $X_2$ contains much of the same information as $X_1$.
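The contrast between (6.13) and (6.14) can be sketched with two small datasets. Note the assumptions. The table for the uncorrelated example is not reproduced in these notes, so the eight observations below are recalled from the work crew productivity illustration in Kutner et al. and should be checked against the textbook. They are used here only because they reproduce the sums of squares quoted above. The correlated case reuses the seven observations of the wrong sign example. All function names are hypothetical.

```python
from statistics import mean


def centered_cross(u, v):
    """Sum of cross products of deviations from the means."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def ssr_simple(x, y):
    """SSR(X): regression sum of squares with x as the only predictor."""
    return centered_cross(x, y) ** 2 / centered_cross(x, x)


def sse_simple(x, y):
    """SSE(X): error sum of squares with x as the only predictor."""
    return centered_cross(y, y) - ssr_simple(x, y)


def sse_two(x1, x2, y):
    """SSE(X1, X2): error sum of squares with both predictors in the model."""
    s11, s22, s12 = centered_cross(x1, x1), centered_cross(x2, x2), centered_cross(x1, x2)
    s1y, s2y = centered_cross(x1, y), centered_cross(x2, y)
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return centered_cross(y, y) - (b1 * s1y + b2 * s2y)


def extra_ss(x1, x2, y):
    """SSR(X1 | X2) = SSE(X2) - SSE(X1, X2)."""
    return sse_simple(x2, y) - sse_two(x1, x2, y)


# Uncorrelated predictors (assumed values, see the note above).
crew = [4, 4, 4, 4, 6, 6, 6, 6]
bonus = [2, 2, 3, 3, 2, 2, 3, 3]
output = [42, 39, 48, 51, 49, 53, 61, 60]

# Correlated predictors: the wrong sign data.
x1 = [2, 4, 5, 6, 8, 10, 11]
x2 = [1, 2, 2, 4, 4, 4, 6]
y = [1, 5, 3, 8, 5, 3, 10]

print("uncorrelated:", extra_ss(crew, bonus, output), ssr_simple(crew, output))
print("correlated:  ", extra_ss(x1, x2, y), ssr_simple(x1, y))
```

In the first dataset the two quantities coincide. In the second they differ, as (6.14) states.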

6.6 Indications of Multicollinearity

High Correlation Values

Relatively high simple correlation between one or more pairs of explanatory variables.

Calculate the correlation coefficients between all pairs of explanatory variables and test the maximum (in absolute value) correlation coefficient using the statistic

$$t = \frac{r_{ij} \sqrt{n-2}}{\sqrt{1 - r_{ij}^2}} \sim t_{n-2} \tag{6.15}$$

There is evidence of multicollinearity at the 5% level of significance if

$$|t| > t_{0.975,\, n-2}$$
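As a sketch of the test in (6.15), the snippet below computes the statistic for the two predictors of the wrong sign example. The function names are assumptions, and the critical value $t_{0.975,5} = 2.571$ is hard-coded from a standard t table because the standard library has no t quantile function.

```python
import math
from statistics import mean


def pearson_r(u, v):
    """Sample correlation coefficient between two sequences."""
    mu, mv = mean(u), mean(v)
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)


def correlation_t_statistic(r, n):
    """Test statistic (6.15), referred to a t distribution with n - 2 df."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r ** 2)


x1 = [2, 4, 5, 6, 8, 10, 11]
x2 = [1, 2, 2, 4, 4, 4, 6]
n = len(x1)

r12 = pearson_r(x1, x2)
t_stat = correlation_t_statistic(r12, n)

# Two-sided 5% critical value t(0.975; n - 2 = 5), taken from a t table.
T_CRIT_5_DF = 2.571
evidence_of_multicollinearity = abs(t_stat) > T_CRIT_5_DF

print(r12, t_stat, evidence_of_multicollinearity)
```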


Variance Inflation Factors (VIF)

The VIF is a commonly used diagnostic for identifying multicollinearity. It is a ratio of variances that measures how much the variance of an estimated regression coefficient increases when the predictors are correlated (multicollinear).

The largest VIF value among all variables is used as an indicator of multicollinearity. The rule of thumb is:

| VIF | Interpretation |
|-----|----------------|
| VIF < 5 | No multicollinearity |
| 5 ≤ VIF ≤ 10 | Moderate multicollinearity |
| VIF > 10 | Severe multicollinearity |

The VIF for the j-th predictor is given by:

$$VIF_j = \frac{1}{1 - R_j^2} \tag{6.16}$$

where $R_j^2$ denotes the coefficient of determination obtained when $X_j$ is regressed on the other $p - 2$ predictor variables in the model.

One or more large VIFs indicate the presence of multicollinearity in the data.
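The sketch below applies (6.16) and the rule of thumb to the two predictors of the wrong sign example. With only two predictors, $R_j^2$ is the squared simple correlation $r_{12}^2$, so both predictors share the same VIF. With more predictors, each $R_j^2$ would come from an auxiliary regression. The function names are illustrative assumptions.

```python
import math
from statistics import mean


def pearson_r(u, v):
    """Sample correlation coefficient between two sequences."""
    mu, mv = mean(u), mean(v)
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)


def vif_from_r_squared(r_squared):
    """Variance inflation factor (6.16) for a given auxiliary R-squared."""
    return 1.0 / (1.0 - r_squared)


def classify_vif(vif):
    """Rule-of-thumb label from the table above."""
    if vif < 5:
        return "no multicollinearity"
    if vif <= 10:
        return "moderate multicollinearity"
    return "severe multicollinearity"


x1 = [2, 4, 5, 6, 8, 10, 11]
x2 = [1, 2, 2, 4, 4, 4, 6]

# Two predictors: the auxiliary R-squared equals the squared correlation.
vif = vif_from_r_squared(pearson_r(x1, x2) ** 2)
label = classify_vif(vif)
print(vif, label)
```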

6.7 Corrections for Multicollinearity

As remedial measures for the multicollinearity problem, we can take the following steps:

• Collection of more data

Additional data may help in reducing the sampling variance of the estimates. The data need

to be collected such that it helps in breaking up the multicollinearity in the data.

• Dropping unimportant variables

If possible, identify the variables which seem to be causing multicollinearity. The process of omitting variables may be carried out on the basis of some ordering of the explanatory variables, e.g., the variables with the smallest t-ratios can be deleted first. In another example, suppose the experimenter is not interested in all the parameters.

• Use Ridge Regression

• Use Principal Component Regression


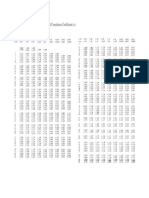

Example 3: In the blood pressure data, researchers observed the following data on 20 individuals with high blood pressure:

The Blood Pressure Dataset

Result:


6.8 Autocorrelated Errors

Recall that one of the basic assumptions of the linear regression model is that the random error components or disturbances are independently and identically distributed. So, in the model $y = X\beta + \varepsilon$, it is assumed that

$$\varepsilon_i \sim N(0, \sigma^2), \quad \text{and} \quad \sigma(\varepsilon_i, \varepsilon_j) = 0, \quad i \neq j, \quad i, j = 1, 2, \dots, n$$

That is, the errors are assumed to be uncorrelated. In practice, the independence assumption is often violated, and errors are frequently positively correlated over time. When autocorrelation is present, some or all of the off-diagonal elements of $E(\varepsilon\varepsilon')$ are nonzero.

Error terms correlated over time are said to be autocorrelated or serially correlated. The assumption of independence is not always true when data are obtained in a time sequence, where each error term is correlated with the previous error.

Sometimes the study and explanatory variables have a natural sequence order over time, i.e., the data are collected with respect to time. Such data are termed time series data. The disturbance terms in time series data are often serially correlated.

A major cause of positively autocorrelated error terms in business and economic applications involving time series data is the omission of one or more variables that have an effect on y but were not included in the model.

Example 4:

Suppose that every morning you watch a weather report featuring your favourite meteorologist on the local morning news. You use his forecasts to decide what clothes to wear.

− You notice that in the summer months, once you get outside, you are always cold. After a while, you realize that the temperature predicted by the meteorologist is always higher than the actual temperature.

− Then, during the rainy season, you realize that the temperature he predicts is always lower than the actual temperature. Something is wrong with the meteorologist’s method.

− Since his summer forecasts are always too high, why doesn’t he start lowering his predictions? When the rainy season rolls around, his predictions are always too low. Why doesn’t he realize this and raise his rainy-season forecasts?

This is the essence of autocorrelation: The errors follow a pattern, showing that something is

wrong with the regression model.

Example 5:

Suppose that we wish to regress annual sales of a soft drink concentrate against the annual

advertising expenditures for that product. Now, the growth in population over the period of


time used in the study will also influence the product sales. If population size is not included

in the model, this may cause the errors in the model to be autocorrelated because population

size is correlated with product sales.

When error terms are autocorrelated, some issues arise when using ordinary least squares.

These problems are:

1. The OLS estimated regression coefficients are still unbiased, but they no longer have the minimum variance property and may be quite inefficient.

2. The MSE may seriously underestimate the variance of the error terms.

3. The standard errors of the regression coefficients may seriously underestimate the true standard deviations of the estimated regression coefficients. This can lead to the conclusion that an estimate is precise when it actually is not.

4. Statistical intervals and inference procedures are no longer strictly applicable.

Detecting the presence of autocorrelation

Since autocorrelation is a serious problem, it is important to detect it. There are two general approaches to detecting autocorrelation:

1. Plot the residuals against time.

2. Apply a formal statistical test, the Durbin-Watson test.

This test is based on the assumption that the errors in the regression model are generated by a first-order autoregressive error model.

The generalized simple linear regression model for one predictor variable, when the random error terms follow a first-order autoregressive process, is:

$$Y_t = \beta_0 + \beta_1 X_t + \varepsilon_t \tag{6.17}$$

$$\varepsilon_t = \rho\, \varepsilon_{t-1} + u_t$$

where $\rho$ is a parameter such that $|\rho| < 1$, and the $u_t$ are independent $N(0, \sigma^2)$.

Each error term in model (6.17) consists of a fraction of the previous error term (when $\rho > 0$) plus a new disturbance term $u_t$. The parameter $\rho$ is called the autocorrelation parameter.

Suppose we transform the response variable so that $Y_t' = Y_t - \rho Y_{t-1}$. Substituting the regression model (6.17) for $Y_t$ and $Y_{t-1}$ in this expression gives:

$$Y_t' = (\beta_0 + \beta_1 X_t + \varepsilon_t) - \rho(\beta_0 + \beta_1 X_{t-1} + \varepsilon_{t-1})$$

$$= \beta_0 (1 - \rho) + \beta_1 (X_t - \rho X_{t-1}) + \varepsilon_t - \rho\, \varepsilon_{t-1}$$

$$Y_t' = \beta_0 (1 - \rho) + \beta_1 (X_t - \rho X_{t-1}) + u_t \tag{6.18}$$

where $u_t = \varepsilon_t - \rho\, \varepsilon_{t-1}$ is an independent disturbance term.

Writing $\beta_0' = \beta_0 (1 - \rho)$, $\beta_1' = \beta_1$ and $X_t' = X_t - \rho X_{t-1}$, model (6.18) becomes:

$$Y_t' = \beta_0' + \beta_1' X_t' + u_t \tag{6.19}$$

The reparameterized model (6.19) cannot be used directly because the new regressor and response variables $X_t'$ and $Y_t'$ are functions of the unknown parameter $\rho$.

The autoregressive error process can be viewed as a regression through the origin:

$$\varepsilon_t = \rho\, \varepsilon_{t-1} + u_t \tag{6.20}$$

Properties of Error Terms

The error terms $\varepsilon_t$ still have mean 0 and constant variance:

$$E(\varepsilon_t) = 0 \tag{6.21a}$$

$$\sigma^2(\varepsilon_t) = \frac{\sigma^2}{1 - \rho^2} \tag{6.21b}$$

The covariance (a measure of the relationship between two variables) between adjacent error terms is:

$$\sigma(\varepsilon_t, \varepsilon_{t-1}) = \rho \left( \frac{\sigma^2}{1 - \rho^2} \right) \tag{6.22}$$

which implies that the coefficient of correlation between adjacent error terms is:

$$\rho(\varepsilon_t, \varepsilon_{t-1}) = \frac{\rho \left( \dfrac{\sigma^2}{1 - \rho^2} \right)}{\sqrt{\dfrac{\sigma^2}{1 - \rho^2}} \sqrt{\dfrac{\sigma^2}{1 - \rho^2}}} = \rho \tag{6.23}$$

which is the autocorrelation parameter we introduced above. Thus, the autocorrelation parameter ρ is the coefficient of correlation between adjacent error terms.
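These properties can be illustrated by simulation. The sketch below generates first-order autoregressive errors and compares the sample variance and the lag-one correlation with the theoretical values $\sigma^2/(1-\rho^2)$ and $\rho$. The parameter values, the seed, the burn-in length, and the function name are all assumptions chosen for illustration. The agreement is only approximate because the quantities are estimated from a finite sample.

```python
import random
from statistics import mean


def simulate_ar1_errors(rho, sigma, n, seed=0, burn_in=500):
    """Generate n errors following eps_t = rho * eps_{t-1} + u_t, u_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    eps = 0.0
    errors = []
    for t in range(n + burn_in):
        eps = rho * eps + rng.gauss(0.0, sigma)
        if t >= burn_in:  # discard early values that depend on the start at zero
            errors.append(eps)
    return errors


rho, sigma = 0.6, 1.0
errors = simulate_ar1_errors(rho, sigma, n=50_000)

centre = mean(errors)
dev = [e - centre for e in errors]
sample_var = sum(d * d for d in dev) / (len(dev) - 1)
lag1_corr = sum(a * b for a, b in zip(dev[1:], dev[:-1])) / sum(d * d for d in dev)

theory_var = sigma ** 2 / (1.0 - rho ** 2)
print(sample_var, theory_var, lag1_corr, rho)
```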

6.9 Durbin-Watson Test for Autocorrelation

We shall now consider a test of the null hypothesis that no serial correlation is present ($\rho = 0$). By far the most popular test for autocorrelation (serial correlation) is the Durbin-Watson test.

This test involves the calculation of a test statistic based on the OLS residuals. The statistic is defined as

$$DW = \frac{\sum_{t=2}^{n} (e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2} \tag{6.24}$$

When successive values of $e_t$ are close to each other, the DW statistic will be low, indicating the presence of positive serial correlation. The decision rule of the Durbin-Watson test is summarized below:

| Value of DW | Result |
|-------------|--------|
| $0 < DW < d_L$ | Reject null hypothesis; positive serial correlation present |
| $d_L < DW < d_U$ | Indeterminate |
| $d_U < DW < 2$ | Do not reject null hypothesis |
| $2 < DW < 4 - d_U$ | Do not reject null hypothesis |
| $4 - d_U < DW < 4 - d_L$ | Indeterminate |
| $4 - d_L < DW < 4$ | Reject null hypothesis; negative serial correlation present |

Values of $d_L$ and $d_U$ are available from the Durbin-Watson table.
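The decision rule can be written as a small function. This is a sketch, not a library routine: the function name and the returned labels are assumptions, and the bounds $d_L$ and $d_U$ must still be read from a Durbin-Watson table. Since the table uses strict inequalities, values falling exactly on a boundary are assigned here to the indeterminate region, which is a choice made for this illustration.

```python
def durbin_watson_decision(dw, d_lower, d_upper):
    """Classify a Durbin-Watson statistic using tabulated bounds d_L and d_U."""
    if not 0.0 <= dw <= 4.0:
        raise ValueError("the Durbin-Watson statistic must lie between 0 and 4")
    if dw < d_lower:
        return "reject H0: positive serial correlation"
    if dw <= d_upper:
        return "indeterminate"
    if dw < 4.0 - d_upper:
        return "do not reject H0"
    if dw <= 4.0 - d_lower:
        return "indeterminate"
    return "reject H0: negative serial correlation"


# Bounds quoted in this chapter for n = 20 at the 5% level.
print(durbin_watson_decision(0.328, d_lower=1.20, d_upper=1.41))
print(durbin_watson_decision(2.05, d_lower=1.20, d_upper=1.41))
```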


Example 6: Consumer Expenditure data given by Chatterjee and Hadi (2006).

| Consumer Expenditure | Money Stock | Consumer Expenditure | Money Stock |
|----------------------|-------------|----------------------|-------------|
| 214.6 | 159.3 | 238.7 | 173.9 |
| 217.7 | 161.2 | 243.2 | 176.1 |
| 219.6 | 162.8 | 249.4 | 178.0 |
| 227.2 | 164.6 | 254.3 | 179.1 |
| 230.9 | 165.9 | 260.9 | 180.2 |
| 233.3 | 167.9 | 263.3 | 181.2 |
| 234.1 | 168.3 | 265.6 | 181.6 |
| 232.3 | 169.7 | 268.2 | 182.5 |
| 233.7 | 170.5 | 270.4 | 183.3 |
| 236.5 | 171.6 | 275.6 | 184.3 |

The time series plot of the residuals indicates that positive autocorrelation is present in the data. Regressing consumer expenditure on money stock and applying (6.24) to the OLS residuals, we obtain:

$$DW = \frac{93.7}{285.5} = 0.328$$

At the 5% level of significance, the critical values corresponding to $n = 20$ are $d_L = 1.20$ and $d_U = 1.41$. Since the observed value of DW is less than $d_L$, we reject the null hypothesis and conclude that positive autocorrelation is present in our data.
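The statistic for this example can be recomputed from the tabulated data. The sketch below makes two assumptions: consumer expenditure is regressed on money stock, and the observations are in time order reading down the left pair of columns and then down the right pair. The helper names are illustrative.

```python
from statistics import mean

# Consumer expenditure (response) and money stock (predictor), in assumed time order.
expenditure = [214.6, 217.7, 219.6, 227.2, 230.9, 233.3, 234.1, 232.3, 233.7, 236.5,
               238.7, 243.2, 249.4, 254.3, 260.9, 263.3, 265.6, 268.2, 270.4, 275.6]
money_stock = [159.3, 161.2, 162.8, 164.6, 165.9, 167.9, 168.3, 169.7, 170.5, 171.6,
               173.9, 176.1, 178.0, 179.1, 180.2, 181.2, 181.6, 182.5, 183.3, 184.3]


def ols_residuals(x, y):
    """Residuals from the least squares line of y on x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    intercept = my - slope * mx
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


def durbin_watson(residuals):
    """Durbin-Watson statistic (6.24) for residuals in time order."""
    numerator = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


residuals = ols_residuals(money_stock, expenditure)
dw = durbin_watson(residuals)

D_LOWER = 1.20  # tabulated lower bound for n = 20 at the 5% level
positive_autocorrelation = dw < D_LOWER
print(round(dw, 3), positive_autocorrelation)
```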


6.10 Corrections for Autocorrelation

When autocorrelated error terms are found to be present, then one of the first remedial measures

should be to investigate the omission of a key predictor variable. If such a predictor does not

aid in reducing/eliminating autocorrelation of the error terms, then certain transformations on

the variables can be performed.

Cochrane-Orcutt Procedure (Cochrane and Orcutt, 1949)

This procedure involves a series of iterations, each of which produces a better estimate of ρ than does the previous one.

Step 1: The OLS method is used to estimate the regression parameters, and the correlation coefficient is estimated by

$$\hat{\rho} = \frac{\sum_{t=2}^{T} \hat{\varepsilon}_t\, \hat{\varepsilon}_{t-1}}{\sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_t^2}\, \sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_{t-1}^2}} \tag{6.25}$$

Step 2: The estimated value of ρ is used to form the generalized differences

$$Y_t^* = Y_t - \hat{\rho}\, Y_{t-1}, \quad X_{1t}^* = X_{1t} - \hat{\rho}\, X_{1,t-1}, \quad \dots, \quad X_{kt}^* = X_{kt} - \hat{\rho}\, X_{k,t-1}$$

We estimate the regression parameters from the transformed model:

$$Y_t^* = \beta_0 (1 - \hat{\rho}) + \beta_1 X_{1t}^* + \beta_2 X_{2t}^* + \cdots + \beta_k X_{kt}^* + u_t$$

and calculate a new set of residuals on the original scale as

$$\hat{\varepsilon}_t = Y_t - \hat{\beta}_0 - \hat{\beta}_1 X_{1t} - \hat{\beta}_2 X_{2t} - \cdots - \hat{\beta}_k X_{kt}$$

Step 3: Recalculate the correlation coefficient

$$\hat{\rho} = \frac{\sum_{t=2}^{T} \hat{\varepsilon}_t\, \hat{\varepsilon}_{t-1}}{\sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_t^2}\, \sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_{t-1}^2}} \tag{6.26}$$

and so on.

The iterative procedure can be carried on for as many steps as desired. Standard procedure is to stop the iterations when the new estimate of ρ differs from the previous one by less than 0.01 or 0.005.
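The three steps can be sketched for a single predictor as follows. This is a minimal illustration under stated assumptions, not a reference implementation. It uses the Consumer Expenditure data with the observations taken in time order down the left pair of columns and then the right pair. It estimates ρ with the correlation form in (6.25), and it stops when successive estimates differ by less than 0.005 or after a hypothetical cap of 25 iterations. Other estimators of ρ, for example with $\sum_{t=1}^{T} \hat{\varepsilon}_t^2$ in the denominator, give somewhat different values. All function names are illustrative.

```python
import math
from statistics import mean

expenditure = [214.6, 217.7, 219.6, 227.2, 230.9, 233.3, 234.1, 232.3, 233.7, 236.5,
               238.7, 243.2, 249.4, 254.3, 260.9, 263.3, 265.6, 268.2, 270.4, 275.6]
money_stock = [159.3, 161.2, 162.8, 164.6, 165.9, 167.9, 168.3, 169.7, 170.5, 171.6,
               173.9, 176.1, 178.0, 179.1, 180.2, 181.2, 181.6, 182.5, 183.3, 184.3]


def fit_line(x, y):
    """Ordinary least squares with one predictor; returns (intercept, slope)."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def estimate_rho(res):
    """Lag-one correlation of the residuals, following (6.25)."""
    num = sum(res[t] * res[t - 1] for t in range(1, len(res)))
    den = math.sqrt(sum(e * e for e in res[1:])) * math.sqrt(sum(e * e for e in res[:-1]))
    return num / den


def generalized_difference(series, rho):
    """Return z_t - rho * z_{t-1} for t = 2, ..., T (one observation is lost)."""
    return [series[t] - rho * series[t - 1] for t in range(1, len(series))]


def durbin_watson(res):
    """Durbin-Watson statistic for residuals in time order."""
    return sum((res[t] - res[t - 1]) ** 2 for t in range(1, len(res))) / sum(e * e for e in res)


def cochrane_orcutt(x, y, tol=0.005, max_iter=25):
    b0, b1 = fit_line(x, y)                          # Step 1: OLS and first rho
    rho = estimate_rho([yi - b0 - b1 * xi for xi, yi in zip(x, y)])
    for _ in range(max_iter):
        x_star = generalized_difference(x, rho)      # Step 2: transformed fit
        y_star = generalized_difference(y, rho)
        a_star, b1 = fit_line(x_star, y_star)
        b0 = a_star / (1.0 - rho)                    # transformed intercept is b0 * (1 - rho)
        residuals = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
        new_rho = estimate_rho(residuals)            # Step 3: update rho
        converged = abs(new_rho - rho) < tol
        rho = new_rho
        if converged:
            break
    return b0, b1, rho


b0, b1, rho_hat = cochrane_orcutt(money_stock, expenditure)

# Durbin-Watson statistic before and after the transformation.
a_ols, s_ols = fit_line(money_stock, expenditure)
dw_ols = durbin_watson([y - a_ols - s_ols * x for x, y in zip(money_stock, expenditure)])
x_star = generalized_difference(money_stock, rho_hat)
y_star = generalized_difference(expenditure, rho_hat)
a_star, s_star = fit_line(x_star, y_star)
dw_transformed = durbin_watson([y - a_star - s_star * x for x, y in zip(x_star, y_star)])
print(rho_hat, dw_ols, dw_transformed)
```

The Durbin-Watson statistic of the transformed fit should be compared with table bounds for the reduced sample size, since one observation is lost in the differencing.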


Example 7: For the Consumer Expenditure data, we have:

$$\hat{\rho} = \frac{\sum_{t=2}^{T} \hat{\varepsilon}_t\, \hat{\varepsilon}_{t-1}}{\sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_t^2}\, \sqrt{\sum_{t=2}^{T} \hat{\varepsilon}_{t-1}^2}} = 0.751$$

Let us define $Y_t^* = Y_t - \hat{\rho}\, Y_{t-1}$ and $X_t^* = X_t - \hat{\rho}\, X_{t-1}$.

Fitting $Y_t^*$ on $X_t^*$ gives the residuals $\hat{\varepsilon}_t = Y_t^* - \hat{Y}_t^*$.

For these revised residuals, the Durbin-Watson statistic is 1.43.

From the Durbin-Watson table ($n = 19$, $p = 1$), we obtain $d_L = 1.18$ and $d_U = 1.40$.

Since the D-W statistic lies between $d_U$ and 2, we do not reject the null hypothesis and conclude that there is no evidence of autocorrelation in these data.


- 9-13 What Was The Problem at Celcom That Was Described This Case? What People, Organization, and Technology Factors Contributed To This Problem?Dokument5 Seiten9-13 What Was The Problem at Celcom That Was Described This Case? What People, Organization, and Technology Factors Contributed To This Problem?Mon LuffyNoch keine Bewertungen

- Be Final PDFDokument15 SeitenBe Final PDFMon LuffyNoch keine Bewertungen

- Marketing Mix: MKT420 (Principles and Practice or Marketing) Group MHR 2Dokument53 SeitenMarketing Mix: MKT420 (Principles and Practice or Marketing) Group MHR 2Mon LuffyNoch keine Bewertungen

- Case Study CelcomDokument7 SeitenCase Study CelcomMon LuffyNoch keine Bewertungen

- Toward Better Statistical Validation of Machine Learning-Based Multimedia Quality EstimatorsDokument15 SeitenToward Better Statistical Validation of Machine Learning-Based Multimedia Quality EstimatorsKrishna KumarNoch keine Bewertungen

- UntitledDokument14 SeitenUntitledKattisNoch keine Bewertungen

- CEE 6505: Transportation Planning: Week 03: Trip Generation (Fundamentals)Dokument66 SeitenCEE 6505: Transportation Planning: Week 03: Trip Generation (Fundamentals)Rifat HasanNoch keine Bewertungen

- 1.linear Regression PSPDokument92 Seiten1.linear Regression PSPsharadNoch keine Bewertungen

- BPT-Probability-binomia Distribution, Poisson Distribution, Normal Distribution and Chi Square TestDokument41 SeitenBPT-Probability-binomia Distribution, Poisson Distribution, Normal Distribution and Chi Square TestAjju NagarNoch keine Bewertungen

- STATISTICS SiegelDokument8 SeitenSTATISTICS SiegelgichuyiaNoch keine Bewertungen

- Exploratory Data AnalysisDokument7 SeitenExploratory Data Analysismattew657Noch keine Bewertungen

- TABEL RANK SPEARMAN-spearman Ranked Correlation TableDokument1 SeiteTABEL RANK SPEARMAN-spearman Ranked Correlation TableHakim Tanjung PrayogaNoch keine Bewertungen

- HousepricesDokument29 SeitenHousepricesAnshika YadavNoch keine Bewertungen

- Hasil AnalisisDokument3 SeitenHasil AnalisisRaras Sekti PudyasariNoch keine Bewertungen

- Assignment 2Dokument3 SeitenAssignment 2Cw ZhiweiNoch keine Bewertungen

- Inferential StatisticsDokument10 SeitenInferential StatisticsSapana SonawaneNoch keine Bewertungen

- Midterm Sample Paper 2Dokument18 SeitenMidterm Sample Paper 2ryann gohNoch keine Bewertungen

- Factorial ExperimentsDokument24 SeitenFactorial Experiments7abib77Noch keine Bewertungen

- Studi Deskriptif Effect Size PenelitianDokument17 SeitenStudi Deskriptif Effect Size PenelitianRatiih YuniiartyNoch keine Bewertungen

- Accuracy and Error MeasuresDokument46 SeitenAccuracy and Error Measuresbiswanath dehuriNoch keine Bewertungen

- Dist Normal FullDokument2 SeitenDist Normal FullSalsha Anggia PutriNoch keine Bewertungen

- F TestDokument7 SeitenF TestShamik MisraNoch keine Bewertungen

- Lec17 and 17-Testing of HypothesisDokument16 SeitenLec17 and 17-Testing of HypothesisSaad Nadeem 090Noch keine Bewertungen

- Statistician ResultsDokument5 SeitenStatistician ResultsZejkeara ImperialNoch keine Bewertungen

- Big IplDokument13 SeitenBig IplNishant KumarNoch keine Bewertungen

- Stock Watson 3U ExerciseSolutions Chapter12 StudentsDokument6 SeitenStock Watson 3U ExerciseSolutions Chapter12 Studentsgfdsa123tryaggNoch keine Bewertungen

- Data Analysis Final RequierementsDokument11 SeitenData Analysis Final RequierementsJane MahidlawonNoch keine Bewertungen

- Tutorial 2 SSF1093 Descriptive Statistics Numerical WayDokument2 SeitenTutorial 2 SSF1093 Descriptive Statistics Numerical WayJeandisle MaripaNoch keine Bewertungen

- Statistic TestDokument4 SeitenStatistic Testfarrahnajihah100% (1)

- Naive Bayes Classifier From WikipediaDokument13 SeitenNaive Bayes Classifier From WikipediaalfianafitriNoch keine Bewertungen

- Financial Time Series ModelsDokument11 SeitenFinancial Time Series ModelsAbdi HiirNoch keine Bewertungen

- Determinants of Economic Growth: Empirical Evidence From Russian RegionsDokument32 SeitenDeterminants of Economic Growth: Empirical Evidence From Russian RegionsChristopher ImanuelNoch keine Bewertungen

- Homework 05 AnswersDokument3 SeitenHomework 05 AnswersChristopher WilliamsNoch keine Bewertungen

- Final Term Fall-2019 BSCS (Statistics) MNS-UETDokument3 SeitenFinal Term Fall-2019 BSCS (Statistics) MNS-UETMohsinNoch keine Bewertungen