Promising Practices

In 4-H Science

May 2011

Evaluation of 4-H Science Programming

Katherine E. Heck & Martin H. Smith

University of California, Division of Agriculture and Natural Resources

4-H Youth Development Program

Why evaluate?

4-H Science programs provide opportunities for young people to learn science content, improve their science process skills, and develop positive attitudes toward science. This is accomplished within a positive youth development program structure that allows youth to form positive relationships and to grow as individuals. Evaluations can provide important information about 4-H Science programming: whether, and to what degree, outcomes have been achieved; which areas need improvement; and evidence that demonstrates to funders the value of a particular program.

Evaluations may include formative or summative elements, or both. Formative evaluations are primarily concerned with process and how well a program is working, and the information gained is typically fed back into program improvements. Summative evaluations focus on the outcomes of the program; for example, they might attempt to determine what skills the young people learned.

© 2011 National 4-H Council. The 4-H name and emblem are protected under 18 USC 707.

4-H science education programs help increase youth scientific literacy in nonformal educational settings to improve attitudes, content knowledge, and science process skills.

Formative evaluations: Evaluation of process

Many evaluations of out-of-school time programming have been published. Research has demonstrated that formative evaluations can improve the quality of programming (Brown & Kiernan, 2001). Often conducted in the early stages of program implementation, formative evaluations typically use observational data, surveys, and/or interviews to determine whether the program is being implemented in accordance with its stated goals, and whether it adheres to a positive youth development framework. Participant satisfaction is one element commonly addressed. Formative evaluations can identify challenges that, once addressed, may help the program to improve. Such challenges might involve structural issues (such as problems with attendance), staff development needs, issues of collaboration, or other concerns (Scott-Little, Hamann, & Jurs, 2002). Additionally, formative evaluation may be used in 4-H Science programs to see how closely they adhere to the recommended 4-H Science checklist, which states that programs should use inquiry strategies and an experiential learning approach, and should be delivered by trained and caring adults who include youth as partners.
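
As a rough illustration of how observation records could be summarized against the checklist criteria named above, the sketch below computes, for each observed session, the share of criteria that an observer marked as present. The item names, the record layout, and the function `adherence_rate` are hypothetical and are not taken from the 4-H Science checklist itself; the observation data are made up.

```python
# Hypothetical checklist items, paraphrased from the criteria named in the text.
CHECKLIST_ITEMS = (
    "uses_inquiry_strategies",
    "uses_experiential_learning",
    "delivered_by_trained_caring_adults",
    "includes_youth_as_partners",
)


def adherence_rate(observations):
    """Return the share of checklist items observed in each session.

    `observations` maps a session label to a dict of item -> bool.
    Items missing from a session's record count as not observed.
    """
    rates = {}
    for session, items in observations.items():
        observed = sum(1 for item in CHECKLIST_ITEMS if items.get(item, False))
        rates[session] = observed / len(CHECKLIST_ITEMS)
    return rates


# Made-up observation records for two sessions (illustration only).
example = {
    "session_1": {
        "uses_inquiry_strategies": True,
        "uses_experiential_learning": True,
        "delivered_by_trained_caring_adults": True,
        "includes_youth_as_partners": False,
    },
    "session_2": {
        "uses_inquiry_strategies": True,
        "uses_experiential_learning": False,
    },
}

rates = adherence_rate(example)
print(rates)
```

A summary like this only flags where delivery departs from the checklist; interpreting why it does still depends on the observers' notes and interviews.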

Summative evaluations: Evaluation of outcomes

A summative evaluation seeks to determine whether, or to what degree, youth who participate in a particular program achieve targeted outcomes. Summative evaluations are typically conducted on established programs, rather than in the first few months of program implementation. They seek to identify the impacts programs have on their participants.

The 4-H Science Initiative promotes the acquisition of a specific set of science process skills within a framework of positive youth development and based on the National Science Education Standards. Youth who participate in 4-H Science programming are expected to improve their “Science Abilities,” a group of science process skills. These 30 abilities, which include skills such as hypothesizing, researching a problem, collecting data, interpreting information, and developing solutions, are delineated on the 4-H Science Checklist, available at https://sites.google.com/site/4hsetonline/liaison-documents/4-HSET-Checklist2009.pdf. Youth in a 4-H Science-Ready program are also expected to have the opportunity to develop mastery and independence, to be able to contribute and feel generosity, and to feel a sense of belonging within the group. The acquisition of science content, the development of science process skills, and youth development outcomes are all possibilities for summative evaluation.
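
One way to quantify "to what degree" an outcome was achieved is to compare matched scores collected before and after the program. The article does not prescribe this design; the pre/post comparison, the function `paired_change`, and the scores below are assumptions made for illustration.

```python
from statistics import mean, stdev


def paired_change(pre, post):
    """Summarize change between matched pre- and post-program scores.

    `pre` and `post` hold one score per participant, in the same order.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per participant")
    if len(pre) < 2:
        raise ValueError("need at least two participants")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample standard deviation of the changes
    # Standardized mean change; undefined if every participant changed equally.
    effect = mean_diff / sd_diff if sd_diff > 0 else float("nan")
    return {
        "n": len(diffs),
        "mean_change": mean_diff,
        "sd_change": sd_diff,
        "effect_size": effect,
    }


# Made-up scores on a hypothetical science process skills scale.
pre_scores = [12, 15, 11, 14, 13]
post_scores = [15, 16, 14, 18, 14]
summary = paired_change(pre_scores, post_scores)
print(summary)
```

Without a comparison group, a gain like this describes change among participants but cannot by itself show that the program caused it.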

Evaluation methods

Evaluation of science programs can take a variety of forms, such as observation of the program, surveys of participants, interviews with program staff or volunteers, or focus groups with participants. Each method has advantages and disadvantages, and selection of the appropriate method will depend on the goals of the evaluation.

• An initial interview with the program director is important in determining the goals of the program, which is the first step in identifying the most appropriate methods for evaluation. If the program has a logic model, or can develop one, that can help clarify the goals and outcomes to be evaluated. The evaluation plan needs to be specific and appropriate to the program that is being evaluated.

• Individual interviews or focus group interviews with staff, volunteers, or participants may provide insights into process issues that could be useful in formative or summative evaluations.

• Observation of a program can provide useful information for a formative evaluation in determining how well the program delivery is working. Observers need to be prepared in advance for what to look for and how to note or code the interactions or other behaviors. One potential resource for observational data collection focusing on quality of programming is the Out-of-School Time (OST) Evaluation Instrument developed by Policy Studies Associates, available from http://www.policystudies.com/studies/?id=30.

• Surveys of program participants are one of the most common methods for evaluation. Surveys, particularly those involving quantitative data, have the advantage of typically being quicker, simpler, and often less costly to complete than qualitative methods. Traditionally, survey data were collected with paper and pencil surveys, but newer forms include online surveys and group surveys done with computer assistance.

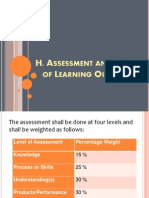

• Authentic assessment is another method of evaluation. In authentic assessment, learners are asked to demonstrate their knowledge and skills through real-world tasks (Palm, 2008). Authentic assessments can be utilized for formative or summative purposes and can be used independently or in conjunction with more traditional assessment strategies such as surveys, observations, or interviews. With respect to 4-H Science, authentic assessment strategies such as learners’ responses to open-ended questions, written data presented in a science notebook, developing, conducting, and explaining the results of an experiment, or designing and building a model bridge can be used to assess youths’ understanding of specific science content and the development of Science Abilities. The activities or results are then coded independently by the evaluators.
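
When two evaluators code the same set of authentic assessment products independently, their agreement can be checked before the codes are used. The sketch below computes Cohen's kappa, a common chance-corrected agreement statistic for two coders; the article does not name a specific statistic, so this choice, the function `cohens_kappa`, and the example codes are assumptions.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    n = len(coder_a)
    # Proportion of items on which the two coders assigned the same code.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    # Agreement expected by chance, from each coder's marginal proportions.
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1:
        # Both coders used one identical code throughout; kappa is undefined,
        # and returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up codes for eight science notebooks (illustration only).
coder_a = ["met", "met", "not", "met", "not", "not", "met", "not"]
coder_b = ["met", "met", "not", "not", "not", "not", "met", "met"]
kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 3))
```

Low agreement usually signals that the coding guide needs clearer definitions or that coders need more training before the results are interpreted.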

Best practices in science program evaluation

Evaluation tools need to be piloted prior to implementation to ensure that they can be used and understood, and to identify and correct any problems. Ideally, the items on a survey or interview should be validated and the reliability of the instrument ascertained. The reliability of an instrument is the extent to which it achieves consistent results, which may include consistency among the participants or among the individuals who are coding or rating the responses. Validity is a determination of whether the instrument measures what it is intended to measure, that is, whether the questions accurately and fully capture the concepts or constructs intended.
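
For a multi-item survey scale, one widely used reliability statistic is Cronbach's alpha, which reflects how consistently the items vary together across respondents. The article does not specify a statistic, so using alpha here is an assumption, as are the function `cronbach_alpha` and the pilot responses.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    `responses` holds one row per respondent; each row lists that
    respondent's score on every item of the scale, in the same order.
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer every item")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of respondents' total scores.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up pilot data: five respondents, three items on a 1-5 scale.
pilot = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
    [1, 2, 2],
]
alpha = cronbach_alpha(pilot)
print(round(alpha, 3))
```

Alpha speaks only to consistency among items; it says nothing about validity, which has to be established separately.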

There are several considerations to take into account when identifying a particular evaluation method and an appropriate tool to use. Some of these might include:

• The age or abilities of the potential respondents. For example, paper and pencil surveys or surveys with complex questions are not appropriate for very young children.

• The number of respondents. Large numbers of respondents are often better suited to quantitative methods: research involving data that are typically measured numerically, with objective questions that often use multiple choice or other fixed response categories. Small numbers of participants may make qualitative methods more appropriate and feasible. Qualitative research involves a text-based method of developing themes, theories, and ideas from observations, short or essay answers, focus groups, or interviews.

• The amount of time available for completing the evaluation. Some methods are quicker to complete than others.

• Whether the evaluation should be longitudinal and follow participants over time, or whether a point-in-time, cross-sectional evaluation is appropriate.

• How large a burden the evaluation will place on participants in terms of the time they will need to spend responding to a survey or participating in an interview or focus group.

• What specific outcomes or processes the evaluation will be assessing.

When developing new instruments or questions, it is important to try to prevent bias in the survey that may result from sampling issues or from the wording of particular questions. Bias results when the survey or sampling design causes systematic error in measurement. Participants in evaluation surveys should ideally be either all program participants or a representative sample of them. Questions should be worded as objectively as possible and written so that respondents are able to answer accurately. (For example, surveys asking about past behavior may be answered inaccurately if the respondent cannot recall behavior from a long time ago.)
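
When surveying every participant is not feasible, a simple random sample drawn from the full roster is one basic way to avoid hand-picking respondents. The sketch below is a minimal example under that assumption; the roster, the sample size, and the function `draw_sample` are hypothetical, and other designs (for example, stratifying by club or age group) may suit a given program better.

```python
import random


def draw_sample(participant_ids, sample_size, seed=None):
    """Draw a simple random sample of participants without replacement."""
    ids = list(participant_ids)
    if len(set(ids)) != len(ids):
        raise ValueError("participant IDs must be unique")
    if not 0 <= sample_size <= len(ids):
        raise ValueError("sample size must be between 0 and the roster size")
    # A seeded generator makes the draw reproducible for documentation purposes.
    rng = random.Random(seed)
    return sorted(rng.sample(ids, sample_size))


# Made-up roster of 200 participant IDs (illustration only).
roster = [f"youth_{i:03d}" for i in range(1, 201)]
surveyed = draw_sample(roster, 40, seed=2011)
print(len(surveyed), surveyed[:3])
```

Random selection addresses who is invited; if many of the selected youth do not respond, the completed surveys may still be unrepresentative.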

Some states, such as Tennessee and Louisiana, have begun routinely collecting and summarizing evaluation data from program participants. Tennessee has developed a program evaluation network that includes a limited set of evaluation items that are completed by all youth participating in 4-H programming in the state. The specific questions that youth respond to vary depending on the projects in which they are engaged. The questions in the survey bank were drawn from previously validated tools, such as, for science programming, the Science Process Skills Inventory (available at http://www.pearweb.org/atis/tools/18).

Resources for science program evaluators

There are many pre-existing validated, reliable surveys focusing on science content and science process skills. One useful resource for these tools is the Assessment Tools in Informal Science (ATIS) website, maintained by Harvard University’s Program in Education, Afterschool and Resiliency. This website is located at http://www.pearweb.org/atis. Previously validated instruments are available for a range of participant ages, from preschool through college, and focus on a range of science outcomes, from skills to interest to content knowledge. In addition to ATIS, there is a bank of instruments on science and other youth development outcomes available at the CYFERNET website, at https://cyfernetsearch.org/ilm_common_measures. These instruments are used in the evaluation of National Institute of Food and Agriculture (NIFA)-funded Children, Youth, and Families At Risk (CYFAR) projects, and were selected from pre-existing, validated instruments on a variety of topics, including science and technology but also demographics, youth program quality, leadership, citizenship, nutrition, parenting, workforce preparation, and others.

In addition to pre-existing validated tools, several journals publish articles that provide guidance on methodological issues around program evaluation. Among these are Practical Assessment, Research & Evaluation (http://pareonline.net/); the American Journal of Evaluation (http://aje.sagepub.com/); Evaluation Review (http://erx.sagepub.com/); and Evaluation (http://www.uk.sagepub.com/journals/Journal200757).

References

Brown, J.L., & Kiernan, N.E. (2001). Assessing the subsequent effect of a formative evaluation on a program. Evaluation and Program Planning, 24(2), 129-143.

Palm, T. (2008). Performance assessment and authentic assessment: A conceptual analysis of the literature. Practical Assessment, Research & Evaluation, 13(4). Available online: http://pareonline.net/getvn.asp?v=13&n=4

Scott-Little, C., Hamann, M.S., & Jurs, S.G. (2002). Evaluations of after-school programs: A meta-evaluation of methodologies and narrative synthesis of findings. American Journal of Evaluation, 23(4), 387-419.

National 4-H Science Professional Development: Janet Golden, jgolden@fourhcouncil.edu, 301-961-2982

Graphic Design and Layout: Marc Larsen-Hallock, UC Davis, State 4-H Office
