COMPUTER CLIMATE MODELS

Computer climate models are the heart of the problem of global

warming predictions.

By Dr. Timothy Ball

Abstract

Entire global energy and climate policies are based on the Reports of

the Intergovernmental Panel on Climate Change (IPCC). Their

conclusions are based on climate models that don’t and cannot work.

This article explains how the situation developed and why the models

are failures.

Introduction

What do the IPCC reports actually say about global warming? What

is the basis for their position? All predictions of global warming are

based on computer climate models. The major models in question

are the ones used by the Intergovernmental Panel on Climate

Change (IPCC) to produce their Reports. The most recent, the Fourth

Assessment Report (AR4), uses and averages output from 18

computer models. These Reports are the source for policy on climate

change used by world governments. The Reports are released in two

parts. The first release and the one used for policy by governments

was the Summary for Policymakers (SPM) released in April 2007.

The Technical Report (“The Physical Science Basis”) produced by

Working Group I was released in November 2007. It is essential to

read because it contains more, but not all, of the severe limitations in

climate research including the data, the mechanisms and the

computer models.

Definition of climate change

The definition of climate change is the first serious limitation on the

IPCC work and models. They use the definition set out by the United

Nations Environment Program in article 1 of the United Nations

Framework Convention on Climate Change (UNFCCC). Climate

Change was defined as “a change of climate which is attributed

directly or indirectly to human activity that alters the composition of

the global atmosphere and which is in addition to natural climate

variability observed over considerable time periods”. Human impact is

the primary purpose of the research. However, you cannot determine

the human portion unless you know the amount and cause of natural

climate change. As Professor Roy Spencer said in his testimony

before the US Senate EPW Committee, “And given that virtually no

research into possible natural explanations for global warming has

been performed, it is time for scientific objectivity and integrity to be

restored to the field of global warming research.”

IPCC models are the sole source of predictions about future climates,

except they don’t call them predictions. They become predictions

through the media and in the public mind. IPCC reports have advised

about their definition from the start. The First Assessment Report

(Climate Change 1992) states, "Scenarios are not predictions of the future

and should not be used as such." The Special Report on

Emissions Scenarios says, "Scenarios are images of the future or

alternative futures. They are neither predictions nor forecasts."

Climate-Change 2001 continues the warnings; "The possibility that

any single emissions path will occur as described in this scenario is

highly uncertain." In the same Report they say, "No judgment is

offered in this report as to the preference for any of the scenarios and

they are not assigned probabilities of occurrence, neither must they

be interpreted as policy recommendations." This is a reference to the

range of scenarios they produce using different future possible

economic conditions.

Climate Change 2001 substitutes the word projection for prediction.

Projection is defined as follows: “A projection is a potential future

evolution of a quantity or set of quantities, often computed with the

help of a model. Projections are distinguished from predictions in

order to emphasise that projections involve assumptions concerning

e.g. future socio-economic and technological developments that may

or may not be realised and are therefore subject to substantial

uncertainty.”

There is an inherent contradiction between these statements and the

production of a Summary for Policymakers, which is the document

used as the basis for policy by governments worldwide. The

Summary for Policymakers (SPM) released by the IPCC in April

2007 says, “Most of the observed increase in globally averaged

temperatures since the mid-20th century is very likely due to the

observed increase in anthropogenic greenhouse gas concentrations.”

They define “very likely” as greater than a 90% probability. (Table 4

“Likelihood Scale”) Here are Professor Roy Spencer’s comments

about probabilities in this context. “Any statements of probability are

meaningless and misleading. I think the IPCC made a big mistake.

They're pandering to the public not understanding probabilities. When

they say 90 percent they make it sound like they've come up with

some kind of objective, independent, quantitative way of estimating

probabilities related to this stuff. It isn't. All it is is a statement of faith.”

The Models

This and similar statements are based on the unproven hypothesis

that human-produced CO2 is causing warming and/or climate

change. The evidence is based solely on the output of the 18

computer climate models selected by the IPCC. There are a multitude

of problems with the computer models including the fact that every

time they are run they produce different results. The final result is an

average of all these runs. The IPCC then take the average results of

the 18 models and average them for the results in their Reports.
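The two-stage averaging described above can be sketched as follows. This is a minimal illustration, not the IPCC's actual procedure: the function names `run_mean` and `multi_model_mean`, the model labels, and every number are hypothetical placeholders, and real multi-model averages may weight or select runs differently.

```python
from statistics import mean


def run_mean(runs):
    """Average several runs of one model, time step by time step."""
    return [mean(values) for values in zip(*runs)]


def multi_model_mean(models):
    """Average the per-model run means across all models."""
    per_model = [run_mean(runs) for runs in models.values()]
    return [mean(values) for values in zip(*per_model)]


# Hypothetical placeholder values, not real model output.
models = {
    "model_a": [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]],  # two runs
    "model_b": [[0.0, 1.0, 2.0]],                   # one run
}

ensemble = multi_model_mean(models)
print(ensemble)
```

Averaging runs within each model first gives every model equal weight in the final figure, however many times it was run.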

Tim Palmer, a leading climate modeler at the European Centre for

Medium-Range Weather Forecasts, said “I don’t want to undermine

the IPCC, but the forecasts, especially for regional climate change,

are immensely uncertain.” This comment is partly explained by the

scale of the General Circulation Models (GCM). The models are

mathematical constructs that divide the world into rectangles. The size of

the rectangles is critical to the abilities of the models as the IPCC

AR4 acknowledges. “Computational constraints restrict the resolution

that is possible in the discretized equations, and some representation

of the large-scale impacts of unresolved processes is required (the

parametrization problem).” (AR4, Chapter 8, p. 596.)
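A rough sketch of why rectangle size matters computationally. The grid spacings below are illustrative assumptions, not the resolutions of any IPCC model, and the helper names `cell_count` and `cell_width_km` are hypothetical. The Earth radius is the standard mean value of about 6,371 km.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (approximate)


def cell_count(deg):
    """Number of latitude-longitude rectangles at a spacing of `deg` degrees."""
    return round(180 / deg) * round(360 / deg)


def cell_width_km(deg, latitude_deg=0.0):
    """East-west width of one rectangle at a given latitude, in kilometres."""
    km_per_degree = 2 * math.pi * EARTH_RADIUS_KM / 360
    return deg * km_per_degree * math.cos(math.radians(latitude_deg))


# Illustrative spacings only.
for deg in (5.0, 2.5, 1.0):
    print(deg, cell_count(deg), round(cell_width_km(deg)))
```

Halving the spacing quadruples the number of surface rectangles before any vertical layers or shorter time steps are counted, which is the computational constraint the AR4 passage refers to. Any process smaller than one rectangle has to be parametrized rather than resolved.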

The IPCC uses surface weather data, which means there is

inadequate data for most of the world to create an accurate model.

The amount of data is limited in space and time. An illustration of the

problem is the IPCC comment on the difficulty of

modeling Arctic climates.

“Despite advances since the TAR, substantial uncertainty remains in

the magnitude of cryospheric feedbacks within AOGCMs. This

contributes to a spread of modelled climate response, particularly at

high latitudes. At the global scale, the surface albedo feedback is

positive in all the models, and varies between models much less than

cloud feedbacks. Understanding and evaluating sea ice feedbacks is

complicated by the strong coupling to polar cloud processes and

ocean heat and freshwater transport. Scarcity of observations in polar

regions also hampers evaluation.” (AR4, Chapter 8, p. 593.) Most of

the information for the Arctic came from the Arctic Climate Impact

Assessment (ACIA) and a diagram from that report illustrates the

problem.

The very large area labeled “No Data” covers most of the Arctic

Basin, an area of approximately 14,250,000 sq. km (5,500,000 square

miles).

In the Southern Hemisphere the IPCC identifies this problem over a

vast area of the Earth’s surface. “Systematic biases have been found

in most models’ simulation of the Southern Ocean. Since the

Southern Ocean is important for ocean heat uptake, this results in

some uncertainty in transient climate response.” (AR4, Chapter 8, p.

591.)

Limitations of the surface data are more than matched by the paucity

of information above the surface. This diagram physically illustrates

the structure of the mathematical model. It shows the three

dimensional nature of the model and the artificial but necessary box

system.

Claims that the models are improved because the number of layers has

increased are meaningless, because more layers do not alter the lack of

data at any level.

The atmosphere and the oceans are fluids and as such are governed

by non-linear rather than linear equations. These equations exhibit

an unpredictability that resembles randomness and is known as chaos. These

problems are well known outside of climate science and were

specifically acknowledged in the IPCC Third Assessment Report

(TAR), “In climate research and modeling, we should recognize that

we are dealing with a coupled non-linear chaotic system, and

therefore that the long-term prediction of future climate states is not

possible.” (TAR, p.774.)
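The sensitivity that makes non-linear systems hard to predict can be seen in a toy example. The sketch below uses the logistic map, a standard textbook illustration of chaos. It is not an equation from any climate model, and the function name `logistic_trajectory`, the starting values, and the step count are assumptions chosen only for illustration.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return all states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_trajectory(0.2, 60)
b = logistic_trajectory(0.2 + 1e-10, 60)

# Gap between the two trajectories at every step.
gaps = [abs(x - y) for x, y in zip(a, b)]
print(gaps[0], max(gaps))
```

The initial gap is about 1e-10, yet within a few dozen iterations the two trajectories no longer resemble each other. A tiny error in the starting state is enough to destroy the long-range forecast of an individual trajectory.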

Validation is essential for any model before it is used for predictions. A

normal procedure is to require evidence that the model can make

predictions to a satisfactory level of accuracy. The IPCC use

the term evaluation instead of validation. They do not evaluate the

entire model. They say that doing so reveals problems but not their

source. Instead they evaluate at the component level. This

means they don’t evaluate the important interactions between the

components at any level, which is critical to the effectiveness of

duplicating natural processes.

A recent study illustrates the extent of the problem with regard to

components.

“The climate models employed in the IPCC's Fourth Assessment are

clearly deficient in their ability to correctly simulate soil moisture

trends, even when applied to the past and when driven by observed

climate forcings. In other words, they fail the most basic type of test

imaginable; and in the words of Li et al., this finding suggests that

"global climate models should better integrate the biological,

chemical, and physical components of the earth system."”

Li, H., Robock, A. and Wild, M. 2007. “Evaluation of Intergovernmental

Panel on Climate Change Fourth Assessment soil moisture

simulations for the second half of the twentieth century.” Journal of

Geophysical Research 112

IPCC Report AR4 makes a remarkable statement not repeated in the

Summary for Policymakers. It speaks to the lack of validation, which

explains the failure of their projections.

“What does the accuracy of a climate model’s simulation of past or

contemporary climate say about the accuracy of its projections of

climate change? This question is just beginning to be addressed,

exploiting the newly available ensembles of models.” (AR4, Chapter

8, p. 594.)

Predictions?

A simple definition of science is the ability to predict. It is

rejected by the IPCC yet they present their work as scientific. Media

and the public generally believe the IPCC is making predictions and

that is clearly the assumption for government policies. Members of

the IPCC do nothing to dissuade the public from that view. All

previous projections have been incorrect. The most recent example is

the period from 2000 to 2008. This diagram shows temperature data

points from various sources and their trend (purple line) compared

with the IPCC projections (orange line) for the period.

Beyond the major problems identified above there is the fundamental

emphasis on CO2, and especially the human portion, as the

primary cause. A basic assumption of the Anthropogenic Global

Warming (AGW) hypothesis is that an increase in atmospheric CO2

will cause an increase in atmospheric temperature. This is not found

in any record of any duration for any time period. In every case,

temperature increases before CO2. Despite this, the models are

designed so that an increase in CO2 causes an increase in

temperature.

The IPCC AR4 Report provides a good argument against the use of

IPCC model projections as the basis for any climate policy let alone

those currently being pursued.

“Models continue to have significant limitations, such as in their

representation of clouds, which lead to uncertainties in the magnitude

and timing, as well as regional details, of predicted climate change.”

(AR4, Chapter 8, p. 600.)

12 / 2008

Das könnte Ihnen auch gefallen

- Poisonous Plants List (Compiled by E. Paul 20/04/07) : Botanical Name Common Name Form CommentsDokument45 SeitenPoisonous Plants List (Compiled by E. Paul 20/04/07) : Botanical Name Common Name Form CommentsdustysuloNoch keine Bewertungen

- The Economics of Avoiding Dangerous Climate ChangeDokument29 SeitenThe Economics of Avoiding Dangerous Climate ChangeTyndall Centre for Climate Change Research100% (2)

- 2011 NIPCC Interim ReportDokument432 Seiten2011 NIPCC Interim ReportMattParke100% (2)

- Climate Change Forecasting Using Machine Learning AlgorithmsDokument10 SeitenClimate Change Forecasting Using Machine Learning AlgorithmsIJRASETPublicationsNoch keine Bewertungen

- CBSE Class 5 Science Worksheets (16) - The Environment-PollutionDokument2 SeitenCBSE Class 5 Science Worksheets (16) - The Environment-PollutionRakesh Agarwal57% (7)

- NIPCC vs. IPCC: Addressing the Disparity between Climate Models and Observations: Testing the Hypothesis of Anthropogenic Global WarmingVon EverandNIPCC vs. IPCC: Addressing the Disparity between Climate Models and Observations: Testing the Hypothesis of Anthropogenic Global WarmingBewertung: 3 von 5 Sternen3/5 (2)

- Adbi wp1051Dokument31 SeitenAdbi wp1051royal01818Noch keine Bewertungen

- Uncertainty and Climate Change: 1. OverviewDokument37 SeitenUncertainty and Climate Change: 1. OverviewCaroNoch keine Bewertungen

- Rodhe2000 Article AvoidingCircularLogicInClimateDokument4 SeitenRodhe2000 Article AvoidingCircularLogicInClimateJuan GomezNoch keine Bewertungen

- Global WarmingDokument10 SeitenGlobal WarmingroseNoch keine Bewertungen

- Handling Uncertainties in The UKCIP02 Scenarios of Climate ChangeDokument15 SeitenHandling Uncertainties in The UKCIP02 Scenarios of Climate ChangePier Paolo Dal MonteNoch keine Bewertungen

- David Wasdell - Feedback Dynamics, Sensitivity and Runaway Conditions in The Global Climate System - July 2012Dokument25 SeitenDavid Wasdell - Feedback Dynamics, Sensitivity and Runaway Conditions in The Global Climate System - July 2012Ander SanderNoch keine Bewertungen

- A Multi-Model Framework For Climate Change Impact AssessmentDokument16 SeitenA Multi-Model Framework For Climate Change Impact AssessmentRODRIGUEZ RODRIGUEZ MICHAEL STIVENNoch keine Bewertungen

- Estimation and Extrapolation of Climate Normals and Climatic TrendsDokument25 SeitenEstimation and Extrapolation of Climate Normals and Climatic TrendsIvan AvramovNoch keine Bewertungen

- Zhai2018 Article AReviewOfClimateChangeAttributDokument22 SeitenZhai2018 Article AReviewOfClimateChangeAttributfaewfefaNoch keine Bewertungen

- 8 Data Gaps, Uncertainties and Future Needs: Need To Address UncertaintyDokument15 Seiten8 Data Gaps, Uncertainties and Future Needs: Need To Address UncertaintyCIO-CIONoch keine Bewertungen

- Climate Holes Nature 2010 PDFDokument4 SeitenClimate Holes Nature 2010 PDFJoseph BaileyNoch keine Bewertungen

- Ar4 wg1 Full Report-1 PDFDokument1.007 SeitenAr4 wg1 Full Report-1 PDFHasbNoch keine Bewertungen

- Climate Change 815020641Dokument10 SeitenClimate Change 815020641shahnaroNoch keine Bewertungen

- RVI111Roson enDokument13 SeitenRVI111Roson enyelida blancoNoch keine Bewertungen

- Climate Science Summary ActuariesDokument61 SeitenClimate Science Summary ActuariesRaymond HuangNoch keine Bewertungen

- Pinpointing Climate ChangeDokument8 SeitenPinpointing Climate ChangeDavid MerinoNoch keine Bewertungen

- Climate Change: State of Science and Implications For PolicyDokument10 SeitenClimate Change: State of Science and Implications For PolicyMarcelo Varejão CasarinNoch keine Bewertungen

- WMD Final ProjectDokument6 SeitenWMD Final Projectapi-642869165Noch keine Bewertungen

- Climate Change Analysis and Prediction (Literature Review) CompleteDokument4 SeitenClimate Change Analysis and Prediction (Literature Review) CompleteSudeep JasonNoch keine Bewertungen

- Research Paper of Climate ChangeDokument10 SeitenResearch Paper of Climate Changeefj02jba100% (1)

- Acs20120400002 59142760Dokument15 SeitenAcs20120400002 59142760LEONG YUNG KIENoch keine Bewertungen

- Possible or ProbableDokument1 SeitePossible or ProbableDany FajardoNoch keine Bewertungen

- What Are Climate Models?Dokument4 SeitenWhat Are Climate Models?Enoch AgbagblajaNoch keine Bewertungen

- Representative Concentration Pathways: The Beginner's Guide ToDokument25 SeitenRepresentative Concentration Pathways: The Beginner's Guide ToDebanandNoch keine Bewertungen

- Climate ModelsDokument25 SeitenClimate ModelsJulian QuirozNoch keine Bewertungen

- Climate ChangeDokument7 SeitenClimate ChangeLaura StevensonNoch keine Bewertungen

- 4 Degree - Chapter 3, 4, 5Dokument24 Seiten4 Degree - Chapter 3, 4, 5Mihai EnescuNoch keine Bewertungen

- Nordhaus Dec 2016 PaperDokument44 SeitenNordhaus Dec 2016 PaperclimatehomescribdNoch keine Bewertungen

- DSGE For Climate Change AbatementDokument52 SeitenDSGE For Climate Change AbatementWalaa MahrousNoch keine Bewertungen

- Solar RadiationDokument24 SeitenSolar RadiationDanutz MaximNoch keine Bewertungen

- Literature Review Climate Change AdaptationDokument8 SeitenLiterature Review Climate Change Adaptationlsfxofrif100% (1)

- Carbontax Capntrade StandardDokument17 SeitenCarbontax Capntrade Standardolcar12Noch keine Bewertungen

- PDF DatastreamDokument53 SeitenPDF DatastreamANGELCLYDE GERON MANUELNoch keine Bewertungen

- Research ArticleDokument16 SeitenResearch ArticleTatjana KomlenNoch keine Bewertungen

- The Frozen Climate Views of the IPCC: An Analysis of AR6Von EverandThe Frozen Climate Views of the IPCC: An Analysis of AR6Noch keine Bewertungen

- Stern The Economics of Climate Change PDFDokument64 SeitenStern The Economics of Climate Change PDFJeremyNoch keine Bewertungen

- Calentamiento GlobalDokument22 SeitenCalentamiento GlobalAnonymous 2DvIUvkCNoch keine Bewertungen

- Course SummaryDokument6 SeitenCourse SummarySana WaqarNoch keine Bewertungen

- Review PaperDokument16 SeitenReview PaperParul AgarwalNoch keine Bewertungen

- Hilaire2019 Article NegativeEmissionsAndInternatioDokument31 SeitenHilaire2019 Article NegativeEmissionsAndInternatioviginimary1990Noch keine Bewertungen

- PRECIS HandbookDokument44 SeitenPRECIS HandbookSatrio AninditoNoch keine Bewertungen

- A Review of The Stern Review: Richard S. J. Tol & Gary W. YoheDokument18 SeitenA Review of The Stern Review: Richard S. J. Tol & Gary W. YoheAnonymous WNGkVPai1Noch keine Bewertungen

- Copenhagen Consensus 2008 YoheDokument52 SeitenCopenhagen Consensus 2008 YoheANDI Agencia de Noticias do Direito da Infancia100% (1)

- Quantifying Economic Damages From Climate Change: Maximilian AuffhammerDokument20 SeitenQuantifying Economic Damages From Climate Change: Maximilian AuffhammerRajesh MKNoch keine Bewertungen

- Research Paper About Climate Change in The PhilippinesDokument6 SeitenResearch Paper About Climate Change in The PhilippinesudmwfrundNoch keine Bewertungen

- Climate Change Thesis TopicsDokument9 SeitenClimate Change Thesis TopicsDarian Pruitt100% (2)

- Untitled DocumentDokument3 SeitenUntitled DocumentGeraldine SoledadNoch keine Bewertungen

- Intl Journal of Climatology - 2006 - McGregorDokument5 SeitenIntl Journal of Climatology - 2006 - McGregorShreyans MishraNoch keine Bewertungen

- Weather and Climate Extremes: Michael Wehner, Peter Gleckler, Jiwoo LeeDokument15 SeitenWeather and Climate Extremes: Michael Wehner, Peter Gleckler, Jiwoo LeeJoey WongNoch keine Bewertungen

- Example of Research Paper About Climate ChangeDokument5 SeitenExample of Research Paper About Climate Changenekynek1buw3Noch keine Bewertungen

- climatechangechap3WHO Intnl ConcesusDokument18 Seitenclimatechangechap3WHO Intnl ConcesusrevaNoch keine Bewertungen

- Integrated Assessment Models For Climate Change ControlDokument35 SeitenIntegrated Assessment Models For Climate Change ControlRonal Cervantes ZavalaNoch keine Bewertungen

- Critic NIPCCreport Climate Change Reconsidered 2007Dokument9 SeitenCritic NIPCCreport Climate Change Reconsidered 2007Yogesh NadgoudaNoch keine Bewertungen

- Ipcc Special Report On Renewable EnergyDokument2 SeitenIpcc Special Report On Renewable EnergyIzaiah MulengaNoch keine Bewertungen

- Climate Risks and Carbon Prices: Revising The Social Cost of CarbonDokument27 SeitenClimate Risks and Carbon Prices: Revising The Social Cost of CarbonworouNoch keine Bewertungen

- What's That About?: Cloud SeedingDokument5 SeitenWhat's That About?: Cloud Seedingapi-71984717Noch keine Bewertungen

- The Origin of The: Fundamental or Environmental Research?: Earth's Magnetic FieldDokument4 SeitenThe Origin of The: Fundamental or Environmental Research?: Earth's Magnetic Fieldapi-71984717Noch keine Bewertungen

- Cremation Costs To Rise As Tooth Fillings Poison The Living: Add Your ViewDokument2 SeitenCremation Costs To Rise As Tooth Fillings Poison The Living: Add Your Viewapi-71984717Noch keine Bewertungen

- Solar Maximum: Space Environment Center 325 Broadway, Boulder, CO 80303-3326Dokument4 SeitenSolar Maximum: Space Environment Center 325 Broadway, Boulder, CO 80303-3326api-71984717Noch keine Bewertungen

- Cannot Continue - Reversing Over 150 Years of Unsustainable Economic Development Will RequireDokument2 SeitenCannot Continue - Reversing Over 150 Years of Unsustainable Economic Development Will Requireapi-71984717Noch keine Bewertungen

- UntitledDokument216 SeitenUntitledapi-71984717Noch keine Bewertungen

- Slowing Down Atomic Diffusion in Subdwarf B Stars: Mass Loss or Turbulence?Dokument12 SeitenSlowing Down Atomic Diffusion in Subdwarf B Stars: Mass Loss or Turbulence?api-71984717Noch keine Bewertungen

- UntitledDokument216 SeitenUntitledapi-71984717Noch keine Bewertungen

- Social Studies HomeworkDokument2 SeitenSocial Studies HomeworkHadizah JulaihiNoch keine Bewertungen

- L6 - Climate ChangeDokument4 SeitenL6 - Climate ChangeIwaNoch keine Bewertungen

- Resource Conservation Earth Science Mind Map in Green Orange Lined StyleDokument3 SeitenResource Conservation Earth Science Mind Map in Green Orange Lined Stylexim.bloqueadaNoch keine Bewertungen

- Performance Task in ScienceDokument6 SeitenPerformance Task in ScienceibnsanguilaNoch keine Bewertungen

- Climate Feedback: Global Warming (BIOL002) Dr. Safa Baydoun Faculty of Sciences Spring 2018-2019 Beirut CampusDokument15 SeitenClimate Feedback: Global Warming (BIOL002) Dr. Safa Baydoun Faculty of Sciences Spring 2018-2019 Beirut Campusabd jafNoch keine Bewertungen

- o20LevelsEnglish20 (1123) 20221123 s22 in 22 PDFDokument4 Seiteno20LevelsEnglish20 (1123) 20221123 s22 in 22 PDFsaheelwazirNoch keine Bewertungen

- Biology ProjectDokument4 SeitenBiology ProjectAnthony FalconNoch keine Bewertungen

- Modern Science Biomes PresentationDokument18 SeitenModern Science Biomes PresentationAvegail BumatayNoch keine Bewertungen

- Vintage Essay of The Importance of TreesDokument2 SeitenVintage Essay of The Importance of TreesCatmaster JackNoch keine Bewertungen

- Kiem Tra 15 Unit 6Dokument1 SeiteKiem Tra 15 Unit 6Văn Nguyễn Thị HồngNoch keine Bewertungen

- Study 196 - Executive - SummaryDokument4 SeitenStudy 196 - Executive - SummaryhamidrahmanyfardNoch keine Bewertungen

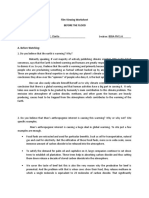

- Film Viewing Worksheet Before The FloodDokument4 SeitenFilm Viewing Worksheet Before The FloodHirouiNoch keine Bewertungen

- Environmental Science Ppt-1Dokument10 SeitenEnvironmental Science Ppt-1munna bhaiyaNoch keine Bewertungen

- Rapid Global Warming andDokument6 SeitenRapid Global Warming andshekki6Noch keine Bewertungen

- Activity DLLDokument7 SeitenActivity DLLMICAH NORADANoch keine Bewertungen

- The Greenhouse HamburgerDokument3 SeitenThe Greenhouse Hamburgerapi-235658421Noch keine Bewertungen

- Trends Summative TestDokument1 SeiteTrends Summative TestReiven TolentinoNoch keine Bewertungen

- Development ProjectDokument3 SeitenDevelopment Project23004245Noch keine Bewertungen

- REDD+ or OFLP Orientation GetachewDokument31 SeitenREDD+ or OFLP Orientation GetachewKecha TayeNoch keine Bewertungen

- SYS100 SMP3-Module1Dokument9 SeitenSYS100 SMP3-Module1ALDRINNoch keine Bewertungen

- List of Topic For Performance Task 3Dokument2 SeitenList of Topic For Performance Task 3Cha Cha CañeteNoch keine Bewertungen

- Carbon Inventory Methods Handbook For Greenhouse Gas Inventory, Carbon Mitigation and Roundwood Production ProjectsDokument327 SeitenCarbon Inventory Methods Handbook For Greenhouse Gas Inventory, Carbon Mitigation and Roundwood Production ProjectsAditi GargeNoch keine Bewertungen

- Dzexams 1as Anglais TCST - d2 20190 68060 PDFDokument2 SeitenDzexams 1as Anglais TCST - d2 20190 68060 PDFĞsb MôkhtarNoch keine Bewertungen

- Green Is Great Part 1Dokument2 SeitenGreen Is Great Part 1Nicolás AnriquezNoch keine Bewertungen

- 1BAC - UNIT 4 Intro, VocabDokument3 Seiten1BAC - UNIT 4 Intro, VocabHamo Smoyez100% (1)

- How Green Was My ValleyDokument3 SeitenHow Green Was My Valleykranthi633Noch keine Bewertungen

- Industrial Safety Engineering KtuDokument34 SeitenIndustrial Safety Engineering Ktudilja aravindanNoch keine Bewertungen

- Effects of Climate ChangeDokument10 SeitenEffects of Climate ChangeJan100% (1)

- Land Sector and Removals Guidance Pilot Testing and Review Draft Part 1Dokument274 SeitenLand Sector and Removals Guidance Pilot Testing and Review Draft Part 1Alejandro Correa AmayaNoch keine Bewertungen