Beruflich Dokumente

Kultur Dokumente

Stat Defn Booklet

Hochgeladen von

Vishal GattaniOriginalbeschreibung:

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

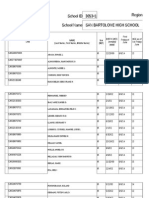

Stat Defn Booklet

Hochgeladen von

Vishal GattaniCopyright:

Verfügbare Formate

ELEMENTARY STATISTICS: BRIEF NOTES AND DEFINITIONS

Department of Statistics, University of Oxford, October 2006

ANALYSIS OF VARIANCE- The sum of squared deviations from the mean of a whole sample is split into parts representing the contributions from the major sources of variation. The procedure uses these parts to test hypotheses concerning the equality of mean effects of one or more factors and interactions between these factors. A special use of the procedure enables the testing of the significance of regression, although in simple linear regression this test may be calculated and presented in the form of a t-test.

ASSOCIATION- A relationship between two or more variables.

BAYES RULE or BAYES THEOREM- Consider two types of event. The first type has three mutually exclusive and exhaustive outcomes, A, B and C. The second type has an outcome X that may happen with either A, B or C with probabilities that are known: $P(X|A)$, $P(X|B)$ and $P(X|C)$, respectively. The probability that X happens is then

$$P(X) = P(A)\,P(X|A) + P(B)\,P(X|B) + P(C)\,P(X|C)$$

and Bayes Theorem gives the probability that A happens given X has happened as

$$P(A|X) = \frac{P(A)\,P(X|A)}{P(X)}$$
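As a rough numerical illustration of the two formulas above, the sketch below computes $P(X)$ and then $P(A|X)$. The prior and conditional probabilities are invented for the example and the function name `posterior` is hypothetical; neither comes from the notes.

```python
def posterior(priors, likelihoods, outcome):
    """Return P(outcome | X) using Bayes Theorem.

    priors:      P(A), P(B), P(C), which must sum to 1
    likelihoods: P(X|A), P(X|B), P(X|C)
    """
    # Total probability of X: sum of P(k) * P(X|k) over all outcomes k
    p_x = sum(priors[k] * likelihoods[k] for k in priors)
    return priors[outcome] * likelihoods[outcome] / p_x


# Made-up probabilities, for illustration only
priors = {"A": 0.5, "B": 0.3, "C": 0.2}
likelihoods = {"A": 0.1, "B": 0.4, "C": 0.5}

for name in priors:
    print(name, round(posterior(priors, likelihoods, name), 4))
```

With these numbers $P(X) = 0.05 + 0.12 + 0.10 = 0.27$, so although A is the most probable outcome beforehand, it becomes the least probable once X has been observed.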

Bayes Rule uses this probability to determine the best action in such situations.

BIAS- An estimate of a parameter is biased if its expected value does not equal the population value of the parameter.

BINOMIAL PROBABILITY DISTRIBUTION- In an experiment with n independent trials, where each trial results in one of two mutually exclusive outcomes that happen with constant probabilities, p and q respectively, this distribution specifies the probabilities that r = 0, 1, 2, ..., n outcomes of the first type occur. The distribution is discrete; it is positively skewed if p < 0.5 and negatively skewed if p > 0.5. For n not small and p near to 0.5, the distribution may be approximated by the normal distribution. For n large and p small, the distribution may be approximated by the Poisson distribution. In many experiments, due to the heterogeneity of subjects, the Binomial fitted to the mean using $\bar{x} = np$ does not fit well as the sample variance exceeds the theoretical variance (npq). The Binomial generalizes to the Multinomial when trials have more than two outcomes.

BOX PLOT or BOX-AND-WHISKER PLOT- Graph representing the sample distribution by a rectangular box covering the interquartile range and whiskers at each end of the box indicating the dispersion beyond the quartiles. Outliers are shown as separate points in the tails.

CATEGORICAL DATA- Observations that indicate categories to which individuals belong rather than measurements or values of variables. Often such data consist of a summary that shows the numbers or frequencies of individuals in each category. This form of data is known as a frequency table or, if there are two or more categorised features, as a contingency table.

CENTRAL LIMIT THEOREM- If independent samples of size n are taken from a population, the distribution of the sample means (known as the sampling distribution of the mean) will be approximately normal for large n. The mean of the sampling distribution is the population mean and its variance is the population variance divided by n. Note that there is no need for the distribution in the population sampled to be normal.
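The statement about the mean and variance of the sampling distribution can be checked by simulation. The sketch below assumes an exponential population with mean 1 and variance 1 (a deliberately non-normal choice made for this example, not one from the notes) and draws repeated samples of size n = 50; the function name `sample_means` is hypothetical.

```python
import random
import statistics


def sample_means(n, repeats, seed=0):
    """Draw `repeats` samples of size n and return the list of their means."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    means = []
    for _ in range(repeats):
        sample = [rng.expovariate(1.0) for _ in range(n)]  # skewed population
        means.append(statistics.fmean(sample))
    return means


n = 50
means = sample_means(n=n, repeats=4000)

# Expected: mean of the sample means near 1.0, variance near 1.0 / n = 0.02
print("mean of sample means:    ", statistics.fmean(means))
print("variance of sample means:", statistics.variance(means))
```

A histogram of `means` would look roughly bell-shaped even though the population itself is strongly skewed.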

The theorem is important because it allows statements to be made about population parameters by comparing the results of a single experiment with those that would be expected according to some null hypothesis.

CHI-SQUARE- $\chi^2$. The name of the square of a standard normal variable (one that has zero mean and unit variance), but more often used for the sum of v independent squared standard normal variables. The mean of this quantity is v, and this is termed the degrees of freedom. Common uses include:

1. to test a sample variance against a known value;
2. to test the homogeneity of a set of sample means where the population variance is known;
3. to test the homogeneity of a set of proportions;
4. to test the goodness-of-fit of a theoretical distribution to an observed frequency distribution;
5. to test for association between categorised variables in a contingency table;
6. to construct a confidence interval for a population variance.

COEFFICIENT OF VARIATION- A standardised measure of variation represented by 100 × the standard deviation divided by the mean.

COMBINATION- A selection of items from a group in which the order of selection does not matter.

CONDITIONAL PROBABILITY- Where two or more outcomes are observed together, the probability that one specified outcome is observed given that one or more other specified outcomes are observed.

CONFIDENCE INTERVAL- The interval between the two values of a parameter known as CONFIDENCE LIMITS.

CONFIDENCE LIMITS- Two values between which the true value of a population parameter is thought to lie with some given probability. This probability represents the proportion of occasions when such limits calculated from repeated samples actually include the true value of the parameter. An essential feature of the interval is that the distance between the limits depends on the size of the sample, being smaller for a larger sample.

CONTINGENCY TABLE- A table in which individuals are categorized according to two or more characteristics or variables. Each cell of the table contains the number of individuals with a particular combination of characteristics or values.

CORRELATION COEFFICIENT- A measure of interdependence between two variables expressed as a value between -1 and +1. A value of zero indicates no correlation and the limits represent perfect negative and perfect positive correlation respectively. A sample product moment correlation coefficient is calculated from pairs of values whereas rank correlation measures such as Spearman's rho, which has population value $\rho_S$, are calculated after ranking the variables.

COVARIANCE- A measure of the extent to which two variables vary together. When divided by the product of the standard deviations of the two variables, this measure becomes the Pearson (or product-moment) correlation coefficient.
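The relationship between covariance and the Pearson correlation coefficient described above can be sketched in a few lines. The sample divisor n - 1 is an assumption of this example (the notes do not fix a divisor), the data are invented, and the function names are hypothetical.

```python
import math


def covariance(xs, ys):
    """Sample covariance of paired values, using the n - 1 divisor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


def pearson_r(xs, ys):
    """Covariance divided by the product of the two standard deviations."""
    sd_x = math.sqrt(covariance(xs, xs))  # the covariance of x with itself is its variance
    sd_y = math.sqrt(covariance(ys, ys))
    return covariance(xs, ys) / (sd_x * sd_y)


# Invented paired observations that rise together almost linearly
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

print("covariance: ", covariance(xs, ys))
print("correlation:", pearson_r(xs, ys))
```

Because the divisor cancels in the ratio, the correlation is the same whether n or n - 1 is used, provided the same divisor is used throughout.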

CRITICAL REGION- The set of values of a test statistic for which the Null Hypothesis is rejected. Often the region is disjoint, comprising two tails of the sampling distribution of the test statistic, but in a one-tail test the region is simply the one tail of that distribution.

CRITICAL VALUES- The values of a TEST-STATISTIC that determine the CRITICAL REGION of a test.

DESCRIPTIVE STATISTICS- Quantities that are calculated from a sample in order to portray its main features, often with a population distribution in mind.

DISPERSION- The spread of values of a variable in a distribution. Common measures of dispersion include the variance, standard deviation, range, mean deviation and semi-interquartile range.

EFFECT SIZE- That amount which it is desired to determine as significant when a test is used for the magnitude of a factor effect. If two groups with different means and the same standard deviation are to be compared then the effect size is the difference of the means divided by the standard deviation.

EXPECTATION- The mean value over repeated samples. The expected value of a function of a variable is the mean value of the function over repeated samples.

EXPLORATORY DATA ANALYSIS (EDA)- The use of techniques to examine the main features of data, particularly to confirm that assumptions necessary for the application of common techniques may be made.

FACTOR- In experimental design, this term is used to denote a condition or variable that affects the main variable of interest. Factors may be quantitative (e.g. amount of training, dosage level) or qualitative (e.g. type of treatment, sex, occupation). In multivariate analysis, the term is used to describe a composite group of variables that serves as a meaningful entity for further analysis.

F-TEST- The test statistic is the ratio of two mean sums of squares representing estimates of variances that are expected to be equal on the null hypothesis. In general, F may be used to compare the variances of two independent samples. In an important special case, it is calculated from estimates based upon between and within samples variation. This is the single factor analysis of variance. In more complex designs this idea is extended. In these cases, the F-test is actually testing hypotheses concerning equality of means of groups that are subject to the action of one or more factors.

FREQUENCY TABLE- This table shows, for an ungrouped discrete variable, the values that a variable can take together with the counts of each value in the sample. For a grouped variable, whether discrete or continuous, the counts of sample values that fall within contiguous intervals are shown.

FRIEDMAN TEST- Test for the difference between the medians of groups when matched samples have been taken. It may be seen as the nonparametric counterpart of a two or more factor analysis of variance for a balanced design, but a separate analysis is completed for each factor and no tests for interaction effects are available.

GOODNESS-OF-FIT TEST- Occurrences of values of a variable in a sample are compared to the numbers of such values that would be expected under some assumed probability distribution. These occurrences are often in the form of a frequency table and the commonest tests used are $\chi^2$-tests and Kolmogorov-Smirnov tests.

GROUPED DATA- Where data are represented by counts of values of a variable falling into intervals, the data are referred to as grouped.

HOMOGENEITY OF VARIANCE- The property required by parametric tests on groups (populations) that may differ in their means: for the tests to be valid, the groups need to have the same variance.

HYPOTHESIS TEST- A procedure for deciding between two hypotheses, the NULL and the ALTERNATIVE HYPOTHESIS, using a test-statistic calculated from a sample.

INDEPENDENCE- Where two events occur together and the happening of one does not affect the happening of the other, the events are said to be independent. Note that "event" here may mean the taking of a value by a random variable. If two random variables are independent then they have zero covariance, but zero covariance does not imply independence.

INDEX- A summary measure that allows comparison of observations made at different points in time or in different populations. Commonly the value is standardised to a base value of 100 so that changes, e.g. over time, can be seen in percentage form. More complex indices combine several variables to represent some composite phenomenon.

INTERACTION- In an experiment, factors may act independently so that the effect of one factor is the same at all levels of another factor, or may interact so that the effect of one factor depends on the levels of another factor. The term is also used for the relation between characteristics of classification in a contingency table, but this phenomenon is usually called association.

KOLMOGOROV-SMIRNOV TEST- This test compares two sample distributions or one sample distribution with a theoretical distribution.

KRUSKAL-WALLIS TEST- This tests for the difference between the medians of several populations. The total sample values are ranked and the rank sums for the separate samples are used to calculate a $\chi^2$ statistic.

LEVEL OF SIGNIFICANCE- The probability that a test-statistic falls into the critical region for a test when the null hypothesis is true. It is usually expressed as a percentage and termed the significance level.

LOCATION PARAMETER- Measure indicating the central position of a distribution, e.g., mean, mode, median or mid-range.

MANN-WHITNEY TEST- Test for the difference between the medians of two independent samples that uses the rank sums calculated for the two samples from the ranks in the combined sample.

MATCHED PAIRS TEST- When pairs of units from a population are observed in an experiment to investigate the effect of a single factor, a matched pairs test is used. These pairs are identical in other respects but may differ for the factor of interest. Differences between matched pairs have smaller variance than those between unmatched units and this leads to a more efficient design. Sometimes, subjects are used as their own controls in a before-after experiment and the values from the same subject are a better basis for testing the effect of a factor than values from different subjects.
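A before-after experiment of the kind described in the MATCHED PAIRS TEST entry is often analysed by applying a t statistic to the within-pair differences. That choice is an assumption of this sketch, since the notes do not name a specific statistic; the measurements are invented and the function name `paired_t` is hypothetical.

```python
import math
import statistics


def paired_t(before, after):
    """Return (t, degrees of freedom) for the mean within-pair difference."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)        # sample SD of the differences
    std_error = sd_diff / math.sqrt(n)       # standard error of the mean difference
    return mean_diff / std_error, n - 1


# Invented measurements on six subjects acting as their own controls
before = [72, 68, 75, 80, 66, 71]
after = [75, 70, 74, 85, 69, 74]

t, df = paired_t(before, after)
print(f"t = {t:.3f} on {df} degrees of freedom")
```

The value of t would then be compared with critical values of the t-distribution on `df` degrees of freedom. Only the six differences enter the calculation, which is why variation between subjects does not inflate the standard error.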

MEAN- The arithmetic mean of a sample of values is the sum of these values divided by the sample size. The population mean is the expected value of a variable considering its probability distribution.

MEDIAN- The value which divides an ordered sample into two equal halves. In a population, the value that is exceeded and not exceeded with equal probability.

MODE- The most frequent value in a sample. The population value that has greatest probability. There may be several modes in a distribution if separated values have high frequency (or probability) in relation to their neighbours. This is common for heterogeneous populations where the distribution comprises a mixture of simpler component distributions.

MUTUALLY EXCLUSIVE EVENTS- Events that cannot occur together in a sample.

NON-PARAMETRIC TEST- A test that does not depend on the underlying form of the distribution of the observations. Many such tests use ranks instead of the raw data, others use signs.

NORMAL PROBABILITY DISTRIBUTION- This symmetric continuous distribution arises in many natural situations, hence the name Normal (although it is sometimes known as Gaussian). It is unimodal and bell shaped. Within one standard deviation of the mean lies approximately 68% of the distribution, and within two standard deviations, approximately 95%. The Normal is used as an approximating distribution to many other distributions, both discrete and continuous, including the Binomial and the Poisson in circumstances when these distributions are symmetrical and the means are not small. But the major importance of the Normal is due to the CENTRAL LIMIT THEOREM and the fact that many sampling distributions are approximately normal so that statistical inferences can be made over a wide range of circumstances.

NULL HYPOTHESIS- An assertion or statement concerning the values of one or more parameters in one or more populations. Usually referred to as $H_0$. Often asserts equality of e.g. means of groups, there being no difference between them. The term ALTERNATIVE HYPOTHESIS is used for the statement that is true when the Null Hypothesis is untrue. Often known as $H_a$. Sometimes called the Research Hypothesis.

ONE-TAIL TEST- When the CRITICAL REGION for a test is concentrated in one tail of the distribution of a test-statistic. In particular, if the Alternative Hypothesis under test states that a parameter value exceeds some specified value then the Null Hypothesis is tested against this one-sided alternative.

OUTLIER- An improbable value that does not resemble other values in a sample.

PARAMETER- A measure used in specifying a particular probability distribution in a population, e.g. mean, variance.

PERMUTATION- An ordering of the members of a group.

POINT ESTIMATE AND INTERVAL ESTIMATE- A single value calculated from a sample to represent a population parameter is called a point estimate. Where two values specify an interval within which the population value for a parameter is thought to lie, these two values constitute an interval estimate.
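To make the distinction between a point estimate and an interval estimate concrete, the sketch below estimates a population mean both ways. It assumes a normal approximation for the sampling distribution of the mean (for a sample this small a t-based interval would be somewhat wider); the data are invented and the function name `mean_with_interval` is hypothetical.

```python
import math
import statistics


def mean_with_interval(sample, confidence=0.95):
    """Return the sample mean and approximate confidence limits around it."""
    n = len(sample)
    point = statistics.fmean(sample)                     # point estimate
    std_error = statistics.stdev(sample) / math.sqrt(n)  # standard error of the mean
    # Two-sided normal quantile, about 1.96 for 95% confidence
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return point, (point - z * std_error, point + z * std_error)


# Invented measurements
sample = [4.9, 5.1, 5.0, 5.3, 4.7, 5.2, 4.8, 5.0, 5.1, 4.9]

point, (lower, upper) = mean_with_interval(sample)
print(f"point estimate:    {point:.3f}")
print(f"interval estimate: ({lower:.3f}, {upper:.3f})")
```

Since the standard error shrinks as the sample size grows, the limits draw closer together for larger samples, as the CONFIDENCE LIMITS entry notes.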

POISSON DISTRIBUTION- The counted number of events in equal intervals of time or of points in equal units in space follows a Poisson distribution if the events or points are random and have constant probability over time or space and are independent. This distribution is defined for all non-negative integers and has the special property that its mean equals its variance. Because of this, departures from randomness may be detected: clustering leads to a variance that is larger than the mean whereas systematic evenness or regularity leads to a variance that is smaller than the mean.

POPULATION- As used in statistics, the aggregate of all units upon which observations could ever be made in repeated sampling. Sometimes it is appropriate to think of it as all the values of a variable that could be observed.

POWER OF A TEST- The probability that a statistical test correctly accepts the alternative hypothesis when the null hypothesis is not true. The power of a test is $1 - \beta$, where $\beta$ is the probability of a Type II error.

PROBABILITY- An expressed degree of belief or a limiting relative frequency of an event over an infinite sample.

PROBABILITY DISTRIBUTION- The set of values taken by a random variable together with their probabilities. Multivariate distributions define the combinations of values taken by several variables and the probabilities with which they occur.

QUARTILES- The values below which occur 25%, 50% and 75% of the values in a distribution. The median is the second quartile.

RANDOM SAMPLE- A sample in which every member of the population has the same chance of appearing and such that the members of the sample are chosen independently.

RANDOM VARIABLE- A variable that follows a probability distribution.

RANGE- The difference between the largest and smallest value in a sample, or for the population range, in the population.

REPLICATION- The taking of several measurements under the same conditions or combination of factors. This device is used to increase the precision of estimates of effects, by increasing the degrees of freedom for the residual or error sum of squares.

REGRESSION- The dependence of a variable upon one or more variables expressed in mathematical form. Simple linear regression refers to a straight line equation: $y = \alpha + \beta x$, in which the parameter $\alpha$ is the intercept and $\beta$ the slope of the line.

REPEATED MEASURES- Where each subject is measured under each of a specified set of different conditions or at specified times.

RISK- The probability that an individual has a characteristic or that a particular event will take place. Used in risk analysis of systems to assess the probabilities of important events, e.g. disasters, or in medicine to consider the outcomes from treatment or surgery, or the characteristics of individuals that predispose them to certain diseases.

SAMPLE- A subset of the whole population, usually taken to provide insights concerning the whole population.

SAMPLING DISTRIBUTION- The distribution of some specified sample quantity, most commonly the sample mean, over repeated samples of fixed size.
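Returning to the REGRESSION entry above, the intercept and slope of a straight line are commonly estimated by least squares. The notes do not name an estimation method, so least squares is an assumption of this sketch; the data are invented and `fit_line` is a hypothetical helper.

```python
def fit_line(xs, ys):
    """Least-squares estimates (alpha, beta) for the line y = alpha + beta * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: sum of cross-products of deviations over sum of squared x deviations
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    beta = s_xy / s_xx
    alpha = mean_y - beta * mean_x  # the fitted line passes through (mean_x, mean_y)
    return alpha, beta


# Invented observations lying close to a straight line
xs = [1, 2, 3, 4, 5]
ys = [2.2, 4.1, 6.0, 8.1, 9.9]

alpha, beta = fit_line(xs, ys)
print(f"intercept = {alpha:.3f}, slope = {beta:.3f}")
```

The slope is the covariance of x and y divided by the variance of x, which ties this calculation to the COVARIANCE entry.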

SCALE- The type of measurement or observation may be qualitative or quantitative: usually separated into nominal (labels), ordinal (ordered categories), interval (equal quantities represented by intervals of the same width) or ratio (true zero and meaningful division of values). Importance: may affect the choice of techniques available for analysis.

SEMI-INTERQUARTILE RANGE- Half the difference between the first and third quartiles.

SIGN TEST- A test that uses the signs of observed values (or more often of differences between pairs of observed values) rather than their magnitude to calculate a test-statistic. Typically, + and − signs are equiprobable on the null hypothesis and a test-statistic is based on the number of + signs, as this follows a binomial distribution with p = 0.5.

SIGNIFICANCE TEST- A null hypothesis describes some feature of one or more populations. This hypothesis is assumed to be true but may be rejected after calculation of a test-statistic from sample values. This happens when the statistic has a value that is unlikely to arise if the null hypothesis is true; this will usually be when the statistic falls into the critical region for the test.

SIGNIFICANCE LEVEL- The probability, usually expressed as a percentage, that the null hypothesis is rejected by a significance test when it is in fact true.

SKEWNESS- A unimodal distribution is skew if the probabilities of exceeding and of not exceeding the mode are not equal.

STANDARD DEVIATION- The square root of the variance.

STANDARD ERROR- The standard deviation of the sampling distribution of an estimate of a population parameter.

STANDARD or STANDARDIZED NORMAL VARIABLE- A normally distributed variable that has mean zero and variance 1.

STATISTIC- A measure calculated from a sample, often used to estimate a population parameter. Note that STATISTICS may refer to several such measures or to the whole subject: the scientific collection and interpretation of observations.

STEM-AND-LEAF PLOT- A form of histogram that displays the actual values in intervals as well as their frequency. Useful EDA technique for showing bias in recording or for comparing two distributions.

STRATIFIED RANDOM SAMPLE- A sample that comprises random samples taken from the strata of a population.

TEST STATISTIC- A quantity calculated from a sample in order to conduct a SIGNIFICANCE TEST. The test statistic has a sampling distribution from which tail values are taken as critical values for use in the test.

t-TEST- A test using a statistic that is calculated using sample standard deviations in the absence of knowledge of the population values. Most often used to test the difference between two means or two regression line gradients.

TRANSFORMATION- The process of replacing each observation by a mathematical function of it (e.g. log or square root) so that the data may conform to a normal distribution or have some desired property such as homogeneity of variance.
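The SIGN TEST entry above says the number of + signs follows a binomial distribution with p = 0.5 under the null hypothesis, and that tail probability can be computed directly. In this sketch, dropping zero differences and doubling the smaller tail for a two-tail test are assumptions of the example; the differences are invented and `sign_test_p_value` is a hypothetical name.

```python
from math import comb


def sign_test_p_value(diffs):
    """Two-tail p-value for the sign test on a list of paired differences."""
    signs = [d for d in diffs if d != 0]  # zero differences carry no sign
    n = len(signs)
    plus = sum(1 for d in signs if d > 0)
    k = min(plus, n - plus)               # count in the less frequent direction
    # P(at most k of the rarer sign) under Binomial(n, 0.5)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)             # doubled for a two-tail test, capped at 1


# Invented paired differences: seven positive, one negative
diffs = [3, 2, -1, 5, 3, 3, 4, 1]
print(f"two-tail p-value = {sign_test_p_value(diffs):.4f}")
```

Only the directions of the differences are used, so replacing the 5 by 500 would leave the p-value unchanged.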

TWO-TAIL TEST- Uses two values to determine the critical region. The null hypothesis is rejected if the test statistic does not lie between these values.

TYPE I AND TYPE II ERRORS- When the null hypothesis is true but it is rejected by a statistical test, an error of Type I has occurred. When the alternative hypothesis is true but it is rejected by the test, an error of Type II has occurred. These errors are expressed as probabilities and are also referred to as $\alpha$ and $\beta$, respectively.

UNBIASED ESTIMATOR- One whose sampling distribution has for its mean the true value of the population parameter being estimated.

UNIMODAL DISTRIBUTION- A distribution that has just one mode.

VARIANCE- The mean of the squared deviations from the mean of a sample. For a population, the expected value of the squared deviation of a value from the mean.

WILCOXON MATCHED-PAIRS TEST- Uses the ranks of differences between pairs to test the hypothesis that the median difference is zero (two-tail test) or that it is greater (or less) than zero (one-tail test).

z-TEST- When the quantity (e.g. the difference between two means) calculated to test a particular hypothesis is believed to be normally distributed and its standard error can be determined from a known standard deviation, the statistic obtained by dividing the quantity by its standard error often follows a z-distribution and so a z-test is used.
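As a small worked case of the z-TEST entry, the sketch below tests the difference between two sample means when both groups share a known population standard deviation. The group means, standard deviation and sample sizes are invented, and `two_sample_z` is a hypothetical helper, not something defined in the notes.

```python
import math
from statistics import NormalDist


def two_sample_z(mean1, mean2, sigma, n1, n2):
    """Return (z, two-tail p-value) for a difference between two means.

    sigma is the known population standard deviation common to both groups.
    """
    std_error = sigma * math.sqrt(1 / n1 + 1 / n2)  # standard error of the difference
    z = (mean1 - mean2) / std_error
    p_two_tail = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_two_tail


# Invented summary figures: two groups of 32 with known sigma = 4
z, p = two_sample_z(mean1=52.0, mean2=50.0, sigma=4.0, n1=32, n2=32)
print(f"z = {z:.2f}, two-tail p = {p:.4f}")
```

Here the standard error is 4 × sqrt(2/32) = 1, so z = 2; at the 5% level in a two-tail test this lies just inside the critical region, whose boundaries are approximately ±1.96.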
