Fibre Channel SAN Configuration Guide

ESX Server 3.5, ESX Server 3i version 3.5, VirtualCenter 2.5

This document supports the version of each product listed and supports all subsequent versions until the document is replaced by a new edition. To check for more recent editions of this document, see http://www.vmware.com/support/pubs.

You can find the most up-to-date technical documentation on the VMware Web site at: http://www.vmware.com/support/

The VMware Web site also provides the latest product updates. If you have comments about this documentation, submit your feedback to: docfeedback@vmware.com

Copyright © 2006-2010 VMware, Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. VMware products are covered by one or more patents listed at http://www.vmware.com/go/patents. VMware is a registered trademark or trademark of VMware, Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies.

VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com

Contents

About This Book

1 Overview of VMware ESX Server
    Introduction to ESX Server
    System Components
    Software and Hardware Compatibility
    Understanding Virtualization
    CPU, Memory, and Network Virtualization
    Virtual SCSI
    Disk Configuration Options
    Virtual Machine File System
    Raw Device Mapping
    Virtual SCSI Host Bus Adapters
    Interacting with ESX Server Systems
    VMware VirtualCenter
    ESX Server 3 Service Console
    Virtualization at a Glance

2 Using ESX Server with Fibre Channel SAN
    Storage Area Network Concepts
    Overview of Using ESX Server with SAN
    Benefits of Using ESX Server with SAN
    ESX Server and SAN Use Cases
    Finding Further Information
    Specifics of Using SAN Arrays with ESX Server
    Sharing a VMFS Across ESX Servers
    Metadata Updates
    LUN Display and Rescan
    Host Type
    Levels of Indirection
    Data Access: VMFS or RDM
    Third-Party Management Applications
    Zoning and ESX Server
    Access Control (LUN Masking) and ESX Server
    Understanding VMFS and SAN Storage Choices
    Choosing Larger or Smaller LUNs
    Making LUN Decisions
    Predictive Scheme
    Adaptive Scheme
    Tips for Making LUN Decisions
    Understanding Data Access
    Path Management and Failover
    Choosing Virtual Machine Locations
    Designing for Server Failure
    Using VMware HA
    Using Cluster Services
    Server Failover and Storage Considerations
    Optimizing Resource Use
    Using VMotion to Migrate Virtual Machines
    Using VMware DRS to Migrate Virtual Machines

3 Requirements and Installation
    General ESX Server SAN Requirements
    Restrictions for ESX Server with a SAN
    Setting LUN Allocations
    Setting Fibre Channel HBA
    Recommendations
    ESX Server Boot from SAN Requirements
    Installation and Setup Steps

4 Setting Up SAN Storage Devices with ESX Server
    Setup Overview
    Testing
    Supported Devices
    General Setup Considerations
    EMC CLARiiON Storage Systems
    EMC CLARiiON AX100 and RDM
    AX100 Display Problems with Inactive Connections
    Pushing Host Configuration Changes to the Array
    EMC Symmetrix Storage Systems
    IBM TotalStorage DS4000 Storage Systems
    Configuring the Hardware for SAN Failover with DS4000 Storage Servers
    Verifying the Storage Processor Port Configuration
    Disabling Auto Volume Transfer
    Configuring Storage Processor Sense Data
    IBM TotalStorage DS4000 and Path Thrashing
    IBM TotalStorage 8000
    HP StorageWorks Storage Systems
    HP StorageWorks MSA
    Setting the Profile Name to Linux
    Hub Controller Issues
    HP StorageWorks EVA
    HP StorageWorks XP
    Hitachi Data Systems Storage
    Network Appliance Storage

5 Using Boot from SAN with ESX Server Systems
    Boot from SAN Overview
    How Boot from a SAN Works
    Benefits of Boot from SAN
    Getting Ready for Boot from SAN
    Before You Begin
    LUN Masking in Boot from SAN Mode
    Preparing the SAN
    Minimizing the Number of Initiators
    Setting Up the FC HBA for Boot from SAN
    Setting Up the QLogic FC HBA for Boot from SAN
    Enabling the QLogic HBA BIOS
    Enabling the Selectable Boot
    Selecting the Boot LUN
    Setting Up Your System to Boot from CD-ROM First
    Setting Up the Emulex FC HBA for Boot from SAN

6 Managing ESX Server Systems That Use SAN Storage
    Issues and Solutions
    Guidelines for Avoiding Problems
    Getting Information
    Viewing HBA Information
    Viewing Datastore Information
    Resolving Display Issues
    Understanding LUN Naming in the Display
    Resolving Issues with LUNs That Are Not Visible
    Using Rescan
    Removing Datastores
    Advanced LUN Display Configuration
    Changing the Number of LUNs Scanned Using Disk.MaxLUN
    Masking LUNs Using Disk.MaskLUNs
    Changing Sparse LUN Support Using Disk.SupportSparseLUN
    N-Port ID Virtualization
    How NPIV-Based LUN Access Works
    Requirements for Using NPIV
    Assigning WWNs to Virtual Machines
    Multipathing
    Viewing the Current Multipathing State
    Setting a LUN Multipathing Policy
    Disabling and Enabling Paths
    Setting the Preferred Path for Fixed Path Policy
    Path Management and Manual Load Balancing
    Failover
    Setting the HBA Timeout for Failover
    Setting Device Driver Options for SCSI Controllers
    Setting Operating System Timeout
    VMkernel Configuration
    Sharing Diagnostic Partitions
    Avoiding and Resolving Problems
    Optimizing SAN Storage Performance
    Storage Array Performance
    Server Performance
    Resolving Performance Issues
    Monitoring Performance
    Resolving Path Thrashing
    Understanding Path Thrashing
    Equalizing Disk Access Between Virtual Machines
    Removing VMFS-2 Drivers
    Removing NFS Drivers
    Reducing SCSI Reservations
    Setting Maximum Queue Depth for HBAs
    Adjusting Queue Depth for a QLogic HBA
    Adjusting Queue Depth for an Emulex HBA
    SAN Storage Backup Considerations
    Snapshot Software
    Using a Third-Party Backup Package
    Choosing Your Backup Solution
    Layered Applications
    Array-Based (Third-Party) Solution
    File-Based (VMFS) Solution
    VMFS Volume Resignaturing
    Mounting Original, Snapshot, or Replica VMFS Volumes
    Understanding Resignaturing Options
    State 1 - EnableResignature=0, DisallowSnapshotLUN=1 (default)
    State 2 - EnableResignature=1 (DisallowSnapshotLUN is not relevant)
    State 3 - EnableResignature=0, DisallowSnapshotLUN=0

A Multipathing Checklist

B Utilities
    esxtop and resxtop Utilities
    storageMonitor Utility
    Options
    Examples

Index

About This Book

This manual, the Fibre Channel SAN Configuration Guide, explains how to use a VMware ESX Server system with a storage area network (SAN). The manual discusses conceptual background, installation requirements, and management information in the following main topics:

- Understanding ESX Server: Introduces ESX Server systems for SAN administrators.
- Using ESX Server with a SAN: Discusses requirements, noticeable differences in SAN setup if ESX Server is used, and how to manage and troubleshoot the two systems together.
- Enabling your ESX Server system to boot from a LUN on a SAN: Discusses requirements, limitations, and management of boot from SAN.

NOTE This manual's focus is SAN over Fibre Channel (FC). It does not discuss iSCSI or NFS storage devices. For information about iSCSI storage, see the iSCSI SAN Configuration Guide. For information about other types of storage, see the ESX Server 3i Configuration Guide and ESX Server 3 Configuration Guide.

The Fibre Channel SAN Configuration Guide covers both ESX Server 3.5 and ESX Server 3i version 3.5. For ease of discussion, this book uses the following product naming conventions:

- For topics specific to ESX Server 3.5, this book uses the term "ESX Server 3."
- For topics specific to ESX Server 3i version 3.5, this book uses the term "ESX Server 3i."
- For topics common to both products, this book uses the term "ESX Server."
- When the identification of a specific release is important to a discussion, this book refers to the product by its full, versioned name.
- When a discussion applies to all versions of ESX Server for VMware Infrastructure 3, this book uses the term "ESX Server 3.x."

Intended Audience

The information presented in this manual is written for experienced Windows or Linux system administrators who are familiar with virtual machine technology and datacenter operations.

Document Feedback

VMware welcomes your suggestions for improving our documentation. If you have comments, send your feedback to: docfeedback@vmware.com

VMware Infrastructure Documentation

The VMware Infrastructure documentation consists of the combined VMware VirtualCenter and ESX Server documentation set.

Abbreviations Used in Figures

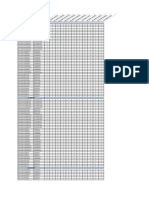

The figures in this manual use the abbreviations listed in Table 1.

Table 1. Abbreviations

Abbreviation   Description
database       VirtualCenter database
datastore      Storage for the managed host
dsk#           Storage disk for the managed host
hostn          VirtualCenter managed hosts
SAN            Storage area network type datastore shared between managed hosts
tmplt          Template
user#          User with access permissions
VC             VirtualCenter
VM#            Virtual machines on a managed host

Technical Support and Education Resources

The following sections describe the technical support resources available to you. You can access the most current versions of this manual and other books by going to: http://www.vmware.com/support/pubs

Online and Telephone Support

Use online support to submit technical support requests, view your product and contract information, and register your products. Go to http://www.vmware.com/support.

Customers with appropriate support contracts should use telephone support for the fastest response on priority 1 issues. Go to http://www.vmware.com/support/phone_support.html.

Support Offerings

Find out how VMware support offerings can help meet your business needs. Go to http://www.vmware.com/support/services.

VMware Education Services

VMware courses offer extensive hands-on labs, case study examples, and course materials designed to be used as on-the-job reference tools. For more information about VMware Education Services, go to http://mylearn1.vmware.com/mgrreg/index.cfm.

Overview of VMware ESX Server

You can use ESX Server in conjunction with a Fibre Channel storage area network (SAN), a specialized high-speed network that uses Fibre Channel (FC) protocol to transmit data between your computer systems and high-performance storage subsystems. Using ESX Server together with a SAN provides extra storage for consolidation, improves reliability, and helps with disaster recovery.

To use ESX Server effectively with a SAN, you must have a working knowledge of ESX Server systems and SAN concepts. This chapter presents an overview of ESX Server concepts. It is meant for SAN administrators not familiar with ESX Server systems and consists of the following sections:

- Introduction to ESX Server
- Understanding Virtualization
- Interacting with ESX Server Systems
- Virtualization at a Glance

For in-depth information on VMware ESX Server, including documentation, hardware compatibility lists, white papers, and more, go to the VMware Web site at http://www.vmware.com.

Introduction to ESX Server

The ESX Server architecture allows administrators to allocate hardware resources to multiple workloads in fully isolated environments called virtual machines.

System Components

An ESX Server system has the following key components:

- Virtualization layer: This layer provides the idealized hardware environment and virtualization of underlying physical resources to the virtual machines. It includes the virtual machine monitor (VMM), which is responsible for virtualization, and VMkernel.

  The virtualization layer schedules the virtual machine operating systems and, if you are running an ESX Server 3 host, the service console. The virtualization layer manages how the operating systems access physical resources. The VMkernel needs its own drivers to provide access to the physical devices. VMkernel drivers are modified Linux drivers, even though the VMkernel is not a Linux variant.

- Hardware interface components: The virtual machine communicates with hardware such as CPU or disk by using hardware interface components. These components include device drivers, which enable hardware-specific service delivery while hiding hardware differences from other parts of the system.

- User interface: Administrators can view and manage ESX Server hosts and virtual machines in several ways:

  - A VMware Infrastructure Client (VI Client) can connect directly to the ESX Server host. This is appropriate if your environment has only one host.
  - A VI Client can also connect to a VirtualCenter Server and interact with all ESX Server hosts that VirtualCenter Server manages.
  - The VI Web Access Client allows you to perform many management tasks by using a browser-based interface.
  - On rare occasions, when you need to have command-line access, you can use the following options:
    - With ESX Server 3, the service console command-line interface. See Appendix A in the ESX Server 3 Configuration Guide.
    - With ESX Server 3i, the remote command-line interfaces (RCLIs). See Appendix A in the ESX Server 3i Configuration Guide.

Figure 1-1 shows how the components interact. The ESX Server host has four virtual machines configured. Each virtual machine runs its own guest operating system and applications. Administrators monitor the host and the virtual machines in the following ways:

- Using a VI Client to connect to an ESX Server host directly.
- Using a VI Client to connect to a VirtualCenter Management Server. The VirtualCenter Server can manage a number of ESX Server hosts.

Figure 1-1. Virtual Infrastructure Environment

[Figure: VI Web Access and VI Clients connect to the ESX Server host directly or through a VirtualCenter Server. The ESX Server host runs four virtual machines, each with its own applications and guest operating system, on the VMware virtualization layer. Physical resources shown: memory, CPUs, storage array, disk, network.]

Software and Hardware Compatibility

In the VMware ESX Server architecture, the operating system of the virtual machine (the guest operating system) interacts only with the standard, x86-compatible virtual hardware that the virtualization layer presents. This architecture allows VMware products to support any x86-compatible operating system.

In practice, VMware products support a large subset of x86-compatible operating systems that are tested throughout the product development cycle. VMware documents the installation and operation of these guest operating systems and trains its technical personnel in supporting them.

Most applications interact only with the guest operating system, not with the underlying hardware. As a result, you can run applications on the hardware of your choice as long as you install a virtual machine with the operating system that the application requires.

Understanding Virtualization

The VMware virtualization layer is common across VMware desktop products (such as VMware Workstation) and server products (such as VMware ESX Server). This layer provides a consistent platform for development, testing, delivery, and support of application workloads and is organized as follows:

- Each virtual machine runs its own operating system (the guest operating system) and applications.
- The virtualization layer provides the virtual devices that map to shares of specific physical devices. These devices include virtualized CPU, memory, I/O buses, network interfaces, storage adapters and devices, human interface devices, and BIOS.

CPU, Memory, and Network Virtualization

A VMware virtual machine offers complete hardware virtualization. The guest operating system and applications running on a virtual machine can never determine directly which physical resources they are accessing (such as which physical CPU they are running on in a multiprocessor system, or which physical memory is mapped to their pages). The following virtualization processes occur:

- CPU virtualization: Each virtual machine appears to run on its own CPU (or a set of CPUs), fully isolated from other virtual machines. Registers, the translation lookaside buffer, and other control structures are maintained separately for each virtual machine.

  Most instructions are executed directly on the physical CPU, allowing resource-intensive workloads to run at near-native speed. The virtualization layer safely performs privileged instructions.

  See the Resource Management Guide.

- Memory virtualization: A contiguous memory space is visible to each virtual machine. However, the allocated physical memory might not be contiguous. Instead, noncontiguous physical pages are remapped and presented to each virtual machine. With unusually memory-intensive loads, server memory becomes overcommitted. In that case, some of the physical memory of a virtual machine might be mapped to shared pages or to pages that are unmapped or swapped out.

  ESX Server performs this virtual memory management without the information that the guest operating system has and without interfering with the guest operating system's memory management subsystem.

  See the Resource Management Guide.

- Network virtualization: The virtualization layer guarantees that each virtual machine is isolated from other virtual machines. Virtual machines can talk to each other only through networking mechanisms similar to those used to connect separate physical machines.

  The isolation allows administrators to build internal firewalls or other network isolation environments, allowing some virtual machines to connect to the outside, while others are connected only through virtual networks to other virtual machines.

  See the ESX Server 3 Configuration Guide or ESX Server 3i Configuration Guide.

Virtual SCSI

In an ESX Server environment, each virtual machine includes from one to four virtual SCSI host bus adapters (HBAs). These virtual adapters appear as either Buslogic or LSI Logic SCSI controllers. They are the only types of SCSI controllers that a virtual machine can access.

Each virtual disk that a virtual machine can access through one of the virtual SCSI adapters resides in the VMFS or is a raw disk.

Figure 1-2 gives an overview of storage virtualization. It illustrates storage using VMFS and storage using raw device mapping (RDM).

Figure 1-2. SAN Storage Virtualization

[Figure: On an ESX Server host, virtual machine 1 has a SCSI controller (Buslogic or LSI Logic) with virtual disk 1 and virtual disk 2. Through the VMware virtualization layer and the HBA, one virtual disk is a .vmdk file on a VMFS volume (LUN1, LUN2) and the other is an RDM that maps to LUN5.]

Disk Configuration Options

You can configure virtual machines with multiple virtual SCSI drives. For a list of supported drivers, see the Storage/SAN Compatibility Guide at www.vmware.com/support/pubs/vi_pubs.html. The guest operating system can place limitations on the total number of SCSI drives.

Although all SCSI devices are presented as SCSI targets, the following physical implementation alternatives exist:

- Virtual machine .vmdk file stored on a VMFS volume. See "Virtual Machine File System."
- Device mapping to a SAN LUN (logical unit number). See "Raw Device Mapping."
- Local SCSI device passed through directly to the virtual machine (for example, a local tape drive).

From the standpoint of the virtual machine, each virtual disk appears as if it were a SCSI drive connected to a SCSI adapter. Whether the actual physical disk device is being accessed through SCSI, iSCSI, RAID, NFS, or Fibre Channel controllers is transparent to the guest operating system and to applications running on the virtual machine.

Virtual Machine File System

In a simple configuration, the virtual machine's disks are stored as files within a Virtual Machine File System (VMFS). When guest operating systems issue SCSI commands to their virtual disks, the virtualization layer translates these commands to VMFS file operations.

ESX Server systems use VMFS to store virtual machine files. To minimize disk I/O overhead, VMFS is optimized to run multiple virtual machines as one workload. VMFS also provides distributed locking for your virtual machine files, so that your virtual machines can operate safely in a SAN environment where multiple ESX Server hosts share a set of LUNs.

VMFS is first configured as part of the ESX Server installation. When you create a new VMFS-3 volume, it must be 1200MB or larger. See the Installation Guide. It can then be customized, as discussed in the ESX Server 3 Configuration Guide or ESX Server 3i Configuration Guide.

A VMFS volume can be extended over 32 physical storage extents of the same storage type. This ability allows pooling of storage and flexibility in creating the storage volume necessary for your virtual machine. You can extend a volume while virtual machines are running on the volume, adding new space to your VMFS volumes as your virtual machine needs it.
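The two sizing rules in this section (a new VMFS-3 volume must be at least 1200MB, and a volume can span up to 32 extents) can be illustrated with a small model. The following Python sketch is not VMware code: the names `VmfsVolume`, `create_volume`, and `add_extent` are hypothetical, and only the two limits come from this guide.

```python
from dataclasses import dataclass, field

MIN_VMFS3_VOLUME_MB = 1200  # minimum size of a new VMFS-3 volume (from this guide)
MAX_EXTENTS = 32            # maximum storage extents per volume (from this guide)


@dataclass
class VmfsVolume:
    """Hypothetical model of a VMFS volume built from storage extents."""
    name: str
    extents_mb: list = field(default_factory=list)

    @property
    def capacity_mb(self) -> int:
        # Pooled capacity is the sum of all extents.
        return sum(self.extents_mb)

    def add_extent(self, size_mb: int) -> None:
        """Extend the volume; refuse once the extent limit is reached."""
        if len(self.extents_mb) >= MAX_EXTENTS:
            raise ValueError(f"{self.name}: already spans {MAX_EXTENTS} extents")
        self.extents_mb.append(size_mb)


def create_volume(name: str, first_extent_mb: int) -> VmfsVolume:
    """Create a volume, enforcing the minimum size for a new volume."""
    if first_extent_mb < MIN_VMFS3_VOLUME_MB:
        raise ValueError(f"{name}: a new volume must be at least {MIN_VMFS3_VOLUME_MB}MB")
    volume = VmfsVolume(name)
    volume.add_extent(first_extent_mb)
    return volume


volume = create_volume("datastore1", 2048)
volume.add_extent(4096)  # grow the pool as virtual machines need more space
```

In this model, extending the volume only appends to a list, which mirrors the point that space can be added while virtual machines keep running on the volume.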

Raw Device Mapping

A raw device mapping (RDM) is a special file in a VMFS volume that acts as a proxy for a raw device. The RDM provides some of the advantages of a virtual disk in the VMFS file system while keeping some advantages of direct access to physical devices.

RDM might be required if you use Microsoft Cluster Service (MSCS) or if you run SAN snapshot or other layered applications on the virtual machine. RDMs better enable systems to use the hardware features inherent to SAN arrays. For information on RDM, see "Raw Device Mapping" in the ESX Server 3 Configuration Guide or ESX Server 3i Configuration Guide, or Setup for Microsoft Cluster Service for information about MSCS.

Virtual SCSI Host Bus Adapters

Virtual SCSI host bus adapters (HBAs) allow virtual machines access to logical SCSI devices, just as a physical HBA allows access to physical storage devices. However, the virtual SCSI HBA does not allow storage administrators (such as SAN administrators) access to the physical machine. You can hide many virtual HBAs behind a single (or multiple) FC HBAs.

Interacting with ESX Server Systems

Administrators interact with ESX Server systems in one of the following ways:

- With a client (VI Client or VI Web Access). Clients can be connected directly to the ESX Server host, or you can manage multiple ESX Server hosts simultaneously by using the VirtualCenter Management Server.

- With ESX Server 3, use a service console. In ESX Server 3.x, use of the service console is not necessary and is discouraged because you can perform most administrative operations using a VI Client or VI Web Access. For scripted management, use the Virtual Infrastructure SDK.

  For more information on the service console, see "ESX Server 3 Service Console."

- With ESX Server 3i, use the remote command-line interfaces (RCLIs). Because ESX Server 3i does not include the service console, configuration of an ESX Server 3i host is usually done by using the VI Client. However, if you want to use the same configuration settings with multiple ESX Server 3i hosts, or if you need command-line access for other reasons, the RCLIs are available.

  See the ESX Server 3i Configuration Guide.

VMware VirtualCenter

You can access a VirtualCenter Server through a VI Client or VI Web Access.

- The VirtualCenter Server acts as a central administrator for ESX Server hosts connected on a network. The server directs actions upon the virtual machines and VMware ESX Server.
- The VI Client runs on Microsoft Windows. In a multihost environment, administrators use the VI Client to make requests to the VirtualCenter server, which in turn affects its virtual machines and hosts. In a single-server environment, the VI Client connects directly to an ESX Server host.
- VI Web Access allows you to connect to a VirtualCenter Server by using an HTML browser.

Figure 1-3 shows the Configuration tab of a VI Client display with Storage selected. The selected ESX Server host connects to SAN LUNs and to local hard disks. The difference in the display is visible only because of the names that were chosen during setup.

Figure 1-3. Storage Information Displayed in VI Client, Configuration Tab

ESX Server 3 Service Console

The service console is the ESX Server 3 command-line management interface. ESX Server 3i does not provide a service console. The service console supports ESX Server 3 system management functions and interfaces. These include HTTP, SNMP, and API interfaces, as well as other support functions such as authentication and low-performance device access.

Because VirtualCenter functionality is enhanced to allow almost all administrative operations, service console functionality is now limited. The service console is used only under special circumstances.

NOTE For scripted management, use the Virtual Infrastructure SDK.

The service console is implemented using a modified Linux distribution. However, the service console does not correspond directly to a Linux command prompt.

The following ESX Server 3 management processes and services run in the service console:

- Host daemon (hostd): Performs actions in the service console on behalf of the service console and the VI Client.
- Authentication daemon (vmauthd): Authenticates remote users of the VI Client and remote consoles by using the user name and password database. You can also use any other authentication store that you can access using the service console's Pluggable Authentication Module (PAM) capabilities. Having multiple password storage mechanisms permits the use of passwords from a Windows domain controller, LDAP or RADIUS server, or similar central authentication store in conjunction with VMware ESX Server for remote access.
- SNMP server (net-snmpd): Implements the SNMP traps and data structures that an administrator can use to integrate an ESX Server system into an SNMP-based system management tool.

In addition to these services, which are supplied by VMware, the service console can be used to run other system-wide or hardware-dependent management tools. These tools can include hardware-specific health monitors (such as IBM Director or HP Insight Manager), full-system backup and disaster recovery software, and clustering and high availability products.

NOTE The service console is not guaranteed to be available for general-purpose Linux hardware monitoring. It is not equivalent to a Linux shell.

Virtualization at a Glance

ESX Server virtualizes the resources of the physical system for the virtual machines to use.

Figure 1-4 illustrates how multiple virtual machines share physical devices. It shows two virtual machines, each configured with the following:

- One CPU
- An allocation of memory and a network adapter (NIC)
- Two virtual disks

Figure 1-4. Virtual Machines Sharing Physical Resources

[Figure: Virtual machine 1 and virtual machine 2 each have a CPU, memory, a network adapter, and two disks (disk 1 and disk 2). The virtual disks map to VMFS and to a raw disk on the storage array. Physical resources shown: storage array, network adapter, CPU, memory.]

The virtual machines each use one of the CPUs on the server and access noncontiguous pages of memory, with part of the memory of one virtual machine currently swapped to disk (not shown). The two virtual network adapters are connected to two physical network adapters.

The disks are mapped as follows:

- Disk 1 of virtual machine 1 is mapped directly to a raw disk. This configuration can be advantageous under certain circumstances.
- Disk 2 of virtual machine 1 and both disks of virtual machine 2 reside on the VMFS, which is located on a SAN storage array. VMFS makes sure that appropriate locking and security is in place at all times.

Using ESX Server with Fibre Channel SAN

When you set up ESX Server hosts to use FC SAN array storage, special considerations are necessary. This chapter provides introductory information about how to use ESX Server with a SAN array and discusses these topics:

- Storage Area Network Concepts
- Overview of Using ESX Server with SAN
- Specifics of Using SAN Arrays with ESX Server
- Understanding VMFS and SAN Storage Choices
- Understanding Data Access
- Path Management and Failover
- Choosing Virtual Machine Locations
- Designing for Server Failure
- Optimizing Resource Use

Storage Area Network Concepts

If you are an ESX Server administrator planning to set up ESX Server hosts to work with SANs, you must have a working knowledge of SAN concepts. You can find information about SAN in print and on the Internet. Two web-based resources are:

- www.searchstorage.com
- www.snia.org

Because this industry changes constantly, check these resources frequently.

If you are new to SAN technology, read this section to familiarize yourself with the basic terminology that this SAN Configuration Guide uses. To learn about basic SAN concepts, see the SAN Conceptual and Design Basics white paper at http://www.vmware.com/support/pubs.

NOTE SAN administrators can skip this section and continue with the rest of this chapter.

A storage area network (SAN) is a specialized high-speed network that connects computer systems, or host servers, to high-performance storage subsystems. The SAN components include host bus adapters (HBAs) in the host servers, switches that help route storage traffic, cables, storage processors (SPs), and storage disk arrays.

A SAN topology with at least one switch present on the network forms a SAN fabric.

To transfer traffic from host servers to shared storage, the SAN uses Fibre Channel (FC) protocol that packages SCSI commands into Fibre Channel frames.

In the context of this document, a port is the connection from a device to the SAN. Each node in the SAN (a host, storage device, or fabric component) has one or more ports that connect it to the SAN. Ports can be identified in a number of ways:

- WWPN (World Wide Port Name): A globally unique identifier for a port that allows certain applications to access the port. The FC switches discover the WWPN of a device or host and assign a port address to the device.

  To view the WWPN by using a VI Client, click the host's Configuration tab and choose Storage Adapters. You can then select the storage adapter that you want to see the WWPN for.

- Port_ID (or port address): In the SAN, each port has a unique port ID that serves as the FC address for the port. This enables routing of data through the SAN to that port. The FC switches assign the port ID when the device logs in to the fabric. The port ID is valid only while the device is logged on.
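The relationship between the two identifiers can be sketched in a few lines of Python. This is an illustrative model only, not switch firmware or a VMware API: the class `FabricSwitch`, its methods, the starting address, and the sample WWPNs are all assumptions. It shows a permanent WWPN being mapped to a port ID at fabric login, and the port ID ceasing to be valid at logout.

```python
from typing import Optional


class FabricSwitch:
    """Hypothetical FC switch that hands out port IDs at fabric login."""

    def __init__(self) -> None:
        self._next_port_id = 0x010000  # illustrative starting address (assumption)
        self._logged_in = {}           # WWPN -> currently assigned port ID

    def login(self, wwpn: str) -> int:
        """Assign a port ID to the WWPN, or return the one it already holds."""
        if wwpn not in self._logged_in:
            self._logged_in[wwpn] = self._next_port_id
            self._next_port_id += 1
        return self._logged_in[wwpn]

    def logout(self, wwpn: str) -> None:
        """Drop the mapping; the port ID is valid only while logged on."""
        self._logged_in.pop(wwpn, None)

    def port_id(self, wwpn: str) -> Optional[int]:
        """Look up the current port ID, or None if the port is not logged in."""
        return self._logged_in.get(wwpn)


switch = FabricSwitch()
host_port = switch.login("21:00:00:e0:8b:05:05:04")   # sample WWPN (made up)
array_port = switch.login("50:06:01:60:10:20:30:40")  # sample WWPN (made up)
```

The WWPN stays with the port for its lifetime, while the port ID is only a routing address that exists for the duration of the login.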

When N-Port ID Virtualization (NPIV) is used, a single FC HBA port (N-port) can register with the fabric by using several WWPNs. This allows an N-port to claim multiple fabric addresses, each of which appears as a unique entity. In the context of a SAN being used by ESX Server hosts, these multiple, unique identifiers allow the assignment of WWNs to individual virtual machines as part of their configuration. See "N-Port ID Virtualization."

When transferring data between the host server and storage, the SAN uses a multipathing technique. Multipathing allows you to have more than one physical path from the ESX Server host to a LUN on a storage array.

If a path or any component along the path (HBA, cable, switch port, or storage processor) fails, the server selects another of the available paths. The process of detecting a failed path and switching to another is called path failover.
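Path failover as described above can be sketched as follows. This Python model is a simplified illustration, not the ESX Server multipathing implementation: `Path`, `MultipathLun`, and the sample adapter and storage processor names are hypothetical, and a real host detects failures through the HBA driver rather than a `healthy` flag.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One physical path from host to LUN (all names are illustrative)."""
    hba: str
    switch_port: str
    storage_processor: str
    healthy: bool = True  # False if any component along the path has failed


class MultipathLun:
    """Hypothetical LUN reachable over several physical paths."""

    def __init__(self, paths) -> None:
        if not paths:
            raise ValueError("a LUN needs at least one path")
        self.paths = list(paths)
        self.active = self.paths[0]

    def failover(self) -> None:
        """Select another available path after the active one fails."""
        for path in self.paths:
            if path.healthy:
                self.active = path
                return
        raise RuntimeError("all paths to the LUN are down")

    def send_io(self) -> str:
        """Issue I/O on the active path, failing over first if it is down."""
        if not self.active.healthy:
            self.failover()
        return f"{self.active.hba} -> {self.active.storage_processor}"


paths = [Path("vmhba1", "switch1:3", "SPA"), Path("vmhba2", "switch2:3", "SPB")]
lun = MultipathLun(paths)
paths[0].healthy = False  # simulate a cable or switch port failure
route = lun.send_io()     # I/O now travels over the second path
```

Each path in the example uses a different HBA, switch, and storage processor, so no single component failure removes every path.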

Storage disk arrays can be of the following types:

- An active/active disk array, which allows access to the LUNs simultaneously through all the storage processors that are available without significant performance degradation. All the paths are active at all times (unless a path fails).
- An active/passive disk array, in which one storage processor (SP) is actively servicing a given LUN. The other SP acts as backup for the LUN and can be actively servicing other LUN I/O. I/O can be sent only to an active processor. If the primary SP fails, one of the secondary storage processors becomes active, either automatically or through administrator intervention.
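The active/passive behavior can be modeled in a few lines. The following Python sketch is an assumption-laden illustration rather than any array vendor's logic: `ActivePassiveLun` and the SP names are hypothetical, and promotion is triggered by an explicit method call where a real array would act automatically or on administrator request.

```python
class ActivePassiveLun:
    """Hypothetical LUN on an active/passive array: one servicing SP at a time."""

    def __init__(self, name: str, active_sp: str, passive_sps) -> None:
        self.name = name
        self.active_sp = active_sp
        self.passive_sps = list(passive_sps)

    def accepts_io(self, sp: str) -> bool:
        # I/O can be sent only to the SP that is actively servicing the LUN.
        return sp == self.active_sp

    def fail_active_sp(self) -> str:
        """Promote a secondary SP after the primary SP fails."""
        if not self.passive_sps:
            raise RuntimeError(f"{self.name}: no storage processor left")
        self.active_sp = self.passive_sps.pop(0)
        return self.active_sp


lun = ActivePassiveLun("LUN1", active_sp="SPA", passive_sps=["SPB"])
before = lun.accepts_io("SPB")  # False: SPB is only a backup for this LUN
lun.fail_active_sp()            # SPA fails and SPB takes over
after = lun.accepts_io("SPB")   # True: SPB now services the LUN
```

On an active/active array the `accepts_io` check would return true for every available SP, which is the essential difference between the two array types.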

To restrict server access to storage arrays not allocated to that server, the SAN uses zoning. Typically, zones are created for each group of servers that access a shared group of storage devices and LUNs. Zones define which HBAs can connect to which SPs. Devices outside a zone are not visible to the devices inside the zone.

Zoning is similar to LUN masking, which is commonly used for permission management. LUN masking is a process that makes a LUN available to some hosts and unavailable to other hosts. Usually, LUN masking is performed at the SP or server level.
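Zoning and LUN masking act as two successive filters, which the following Python sketch illustrates. It is a conceptual model only: `Zone`, `is_zoned`, `lun_visible`, and the sample HBA, SP, and LUN names are hypothetical, and the mask table is assumed to live at the SP level.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical fabric zone: which HBAs can connect to which SPs."""
    name: str
    hbas: frozenset
    sps: frozenset


def is_zoned(hba: str, sp: str, zones) -> bool:
    """An HBA sees an SP only if at least one zone contains both."""
    return any(hba in zone.hbas and sp in zone.sps for zone in zones)


def lun_visible(hba: str, sp: str, lun: int, zones, masks) -> bool:
    """A LUN is visible if the HBA is zoned to the SP and the mask allows it.

    masks maps (sp, lun) to the set of HBAs the LUN is presented to.
    """
    allowed = masks.get((sp, lun), frozenset())
    return is_zoned(hba, sp, zones) and hba in allowed


zones = [Zone("esx_zone", frozenset({"hba_esx1", "hba_esx2"}), frozenset({"SPA"}))]
masks = {("SPA", 5): frozenset({"hba_esx1"})}

lun_visible("hba_esx1", "SPA", 5, zones, masks)  # zoned and unmasked
lun_visible("hba_esx2", "SPA", 5, zones, masks)  # zoned, but the LUN is masked
```

In this model zoning decides whether an HBA can reach a storage processor at all, and masking then decides which LUNs behind that storage processor the HBA may see.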

Overview of Using ESX Server with SAN

Support for FC HBAs allows an ESX Server system to be connected to a SAN array. You can then use SAN array LUNs to store virtual machine configuration information and application data. Using ESX Server with a SAN improves flexibility, efficiency, and reliability. It also supports centralized management, as well as failover and load balancing technologies.

Benefits of Using ESX Server with SAN

Using a SAN with ESX Server allows you to improve your environment's failure resilience:

- You can store data redundantly and configure multiple FC fabrics, eliminating a single point of failure. Your enterprise is not crippled when one datacenter becomes unavailable.
- ESX Server systems provide multipathing by default and automatically support it for every virtual machine. See "Path Management and Failover."
- Using a SAN with ESX Server systems extends failure resistance to the server. When you use SAN storage, all applications can instantly be restarted after host failure. See "Designing for Server Failure."

Using ESX Server with a SAN makes high availability and automatic load balancing affordable for more applications than if dedicated hardware is used to provide standby services:

- Because shared central storage is available, building virtual machine clusters that use MSCS becomes possible. See "Server Failover and Storage Considerations."
- If virtual machines are used as standby systems for existing physical servers, shared storage is essential and a SAN is the best solution.
- Use the VMware VMotion capabilities to migrate virtual machines seamlessly from one host to another.
- Use VMware High Availability (HA) in conjunction with a SAN for a cold-standby solution that guarantees an immediate, automatic response.
- Use VMware Distributed Resource Scheduler (DRS) to migrate virtual machines from one host to another for load balancing. Because storage is on a SAN array, applications continue running seamlessly.
- If you use VMware DRS clusters, put an ESX Server host into maintenance mode to have the system migrate all running virtual machines to other ESX Server hosts. You can then perform upgrades or other maintenance operations.

The transportability and encapsulation of VMware virtual machines complements the shared nature of SAN storage. When virtual machines are located on SAN-based storage, you can shut down a virtual machine on one server and power it up on another server, or suspend it on one server and resume operation on another server on the same network, in a matter of minutes. This ability allows you to migrate computing resources while maintaining consistent shared access.

ESX Server and SAN Use Cases

Using ESX Server systems in conjunction with SAN is effective for the following tasks:

Maintenance with zero downtime: When performing maintenance, use VMware DRS or VMotion to migrate virtual machines to other servers. If shared storage is on the SAN, you can perform maintenance without interruptions to the user.

Load balancing: Use VMotion or VMware DRS to migrate virtual machines to other hosts for load balancing. If shared storage is on a SAN, you can perform load balancing without interruption to the user.


Storage consolidation and simplification of storage layout: If you are working with multiple hosts, and each host is running multiple virtual machines, the hosts' storage is no longer sufficient and external storage is needed. Choosing a SAN for external storage results in a simpler system architecture while giving you the other benefits listed in this section. Start by reserving a large LUN and then allocate portions to virtual machines as needed. LUN reservation and creation from the storage device needs to happen only once.

Disaster recovery: Having all data stored on a SAN can greatly facilitate remote storage of data backups. In addition, you can restart virtual machines on remote ESX Server hosts for recovery if one site is compromised.

Finding Further Information

In addition to this document, a number of other resources can help you configure your ESX Server system in conjunction with a SAN:

Use your storage array vendor's documentation for most setup questions. Your storage array vendor might also offer documentation on using the storage array in an ESX Server environment.

The VMware Documentation Web site at http://www.vmware.com/support/pubs/.

The iSCSI SAN Configuration Guide discusses the use of ESX Server with iSCSI storage area networks.

The VMware I/O Compatibility Guide lists the currently approved HBAs, HBA drivers, and driver versions.

The VMware Storage/SAN Compatibility Guide lists currently approved storage arrays.

The VMware Release Notes give information about known issues and workarounds.

The VMware Knowledge Bases have information on common issues and workarounds.


Specifics of Using SAN Arrays with ESX Server

Using a SAN in conjunction with an ESX Server host differs from traditional SAN usage in a variety of ways, discussed in this section.

Sharing a VMFS Across ESX Servers

ESX Server VMFS is designed for concurrent access from multiple physical machines and enforces the appropriate access controls on virtual machine files. For background information on VMFS, see "Virtual Machine File System" on page 19.

VMFS can:

Coordinate access to virtual disk files: ESX Server uses file-level locks, which the VMFS distributed lock manager manages.

Coordinate access to VMFS internal file system information (metadata): ESX Server uses short-lived SCSI reservations as part of its distributed locking protocol. SCSI reservations are not held during metadata updates to the VMFS volume.

Because virtual machines share a common VMFS, it might be difficult to characterize peak access periods or optimize performance. Plan virtual machine storage access for peak periods, but different applications might have different peak access periods. The more virtual machines share a VMFS, the greater is the potential for performance degradation because of I/O contention.

NOTE VMware recommends that you load balance virtual machines over servers, CPU, and storage. Run a mix of virtual machines on each given server and storage so that not all experience high demand in the same area at the same time.


Figure 2-1 shows several ESX Server systems sharing the same VMFS volume.

Figure 2-1. Accessing Virtual Disk Files (diagram labels: ESX Server A, ESX Server B, and ESX Server C; VM1, VM2, and VM3; one VMFS volume holding the virtual disk files disk1, disk2, and disk3)

Metadata Updates

A VMFS holds files, directories, symbolic links, RDMs, and so on, and corresponding metadata for these objects. Metadata is accessed each time the attributes of a file are accessed or modified. These operations include, but are not limited to, the following:

Creating, growing, or locking a file

Changing a file's attributes

Powering a virtual machine on or off

LUN Display and Rescan

A SAN is dynamic, and which LUNs are available to a certain host can change based on a number of factors, including the following:

New LUNs created on the SAN storage arrays

Changes to LUN masking

Changes in SAN connectivity or other aspects of the SAN


The VMkernel discovers LUNs when it boots, and those LUNs are then visible in the VI Client. If changes are made to the LUNs, you must rescan to see those changes.

CAUTION After you create a new VMFS datastore or extend an existing VMFS datastore, you must rescan the SAN storage from all ESX Server hosts that could see that particular datastore. If this is not done, the shared datastore might become invisible to some of those hosts.

Host Type

A LUN has a slightly different behavior depending on the type of host that is accessing it. Usually, the host type assignment deals with operating-system-specific features or issues. ESX Server arrays are typically configured with a host type of Linux or, if available, ESX or VMware host type.

See Chapter 6, "Managing ESX Server Systems That Use SAN Storage," on page 81 and the VMware knowledge bases.

Levels of Indirection

If you are used to working with traditional SANs, the levels of indirection can initially be confusing.

You cannot directly access the virtual machine operating system that uses the storage. With traditional tools, you can monitor only the VMware ESX Server operating system, but not the virtual machine operating system. You use the VI Client to monitor virtual machines.

Each virtual machine is, by default, configured with one virtual hard disk and one virtual SCSI controller during installation. You can modify the SCSI controller type and SCSI bus sharing characteristics by using the VI Client to edit the virtual machine settings, as shown in Figure 2-2. You can also add hard disks to your virtual machine. See the Basic System Administration.


Figure 2-2. Setting the SCSI Controller Type

The HBA visible to the SAN administration tools is part of the ESX Server system, not the virtual machine.

Your ESX Server system performs multipathing for you. Multipathing software, such as PowerPath, in the virtual machine is not supported and not required.

Data Access: VMFS or RDM

Typically, a virtual disk is placed on a VMFS datastore during virtual machine creation. When guest operating systems issue SCSI commands to their virtual disks, the virtualization layer translates these commands to VMFS file operations. See "Virtual Machine File System" on page 19.

An alternative to VMFS is using RDMs. RDMs are special files in a VMFS volume that act as a proxy for a raw device. The RDM gives some of the advantages of a virtual disk in the VMFS, while keeping some advantages of direct access to a physical device. See "Raw Device Mapping" on page 19.


Third-Party Management Applications

Most SAN hardware is packaged with SAN management software. This software typically runs on the storage array or on a single server, independent of the servers that use the SAN for storage. Use this third-party management software for a number of tasks:

Storage array management including LUN creation, array cache management, LUN mapping, and LUN security.

Setting up replication, checkpoints, snapshots, or mirroring.

When you decide to run the SAN management software on a virtual machine, you gain the benefits of running a virtual machine including failover using VMotion and VMware HA, and so on. Because of the additional level of indirection, however, the management software might not be able to see the SAN. This problem can be resolved by using an RDM. See "Layered Applications" on page 116.

NOTE Whether a virtual machine can run management software successfully depends on the storage array.

Zoning and ESX Server

Zoning provides access control in the SAN topology. Zoning defines which HBAs can connect to which SPs. When a SAN is configured by using zoning, the devices outside a zone are not visible to the devices inside the zone.

Zoning has the following effects:

Reduces the number of targets and LUNs presented to an ESX Server system.

Controls and isolates paths in a fabric.

Can prevent non-ESX Server systems from seeing a particular storage system, and from possibly destroying ESX Server VMFS data.

Can be used to separate different environments (for example, a test from a production environment).
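The visibility rule that zoning enforces can be illustrated with a small model. The following is a minimal sketch, not a switch configuration: the zone names, port names, and the helper functions `can_see` and `visible_targets` are hypothetical and exist only for this illustration. It assumes a device sees another device only when at least one zone contains both.

```python
from typing import Dict, Iterable, Set

# Hypothetical zone table: zone name -> member ports (HBAs and SPs).
ZONES: Dict[str, Set[str]] = {
    "zone_prod": {"esx1_hba1", "esx2_hba1", "sp_a"},
    "zone_test": {"esx3_hba1", "sp_b"},
}


def can_see(initiator: str, target: str, zones: Dict[str, Set[str]]) -> bool:
    """Return True if at least one zone contains both ports."""
    return any(
        initiator in members and target in members
        for members in zones.values()
    )


def visible_targets(
    initiator: str, targets: Iterable[str], zones: Dict[str, Set[str]]
) -> Set[str]:
    """Return the subset of targets that the initiator can reach."""
    return {t for t in targets if can_see(initiator, t, zones)}


if __name__ == "__main__":
    # The production host reaches only the SP in its own zone.
    print(visible_targets("esx1_hba1", ["sp_a", "sp_b"], ZONES))
```

In this model, the test host `esx3_hba1` never sees `sp_a`, which mirrors the use of zoning to separate a test environment from a production environment.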


When you use zoning, keep in mind the following items:

ESX Server hosts that use shared storage for virtual machine failover or load balancing must be in one zone.

If you have a very large deployment, you might need to create separate zones for different areas of functionality. For example, you can separate accounting from human resources.

It does not work well to create many small zones of, for example, two hosts with four virtual machines each.

NOTE Check with the storage array vendor for zoning best practices.

Access Control (LUN Masking) and ESX Server

Access control allows you to limit the number of ESX Server hosts (or other hosts) that can see a LUN. Access control can be useful to:

Reduce the number of LUNs presented to an ESX Server system.

Prevent non-ESX Server systems from seeing ESX Server LUNs and from possibly destroying VMFS volumes.

Understanding VMFS and SAN Storage Choices

This section discusses the available VMFS and SAN storage choices and gives advice on how to make them.

Choosing Larger or Smaller LUNs

When you set up storage for your ESX Server systems, choose one of these approaches:

Many LUNs with one VMFS volume on each LUN

Many LUNs with a single VMFS volume spanning all LUNs

You can have only one VMFS volume per LUN. You can, however, decide to use one large LUN or multiple small LUNs.

You might want fewer, larger LUNs for the following reasons:

More flexibility to create virtual machines without going back to the SAN administrator for more space.

More flexibility for resizing virtual disks, taking snapshots, and so on.

Fewer LUNs to identify and manage.


You might want more, smaller LUNs for the following reasons:

Different applications might need different RAID characteristics.

More flexibility (the multipathing policy and disk shares are set per LUN).

Use of Microsoft Cluster Service, which requires that each cluster disk resource is on its own LUN.

Making LUN Decisions

When the storage characterization for a virtual machine is not available, use one of the following approaches to decide on LUN size and number of LUNs to use:

Predictive scheme

Adaptive scheme

Predictive Scheme

In the predictive scheme, you:

Create several LUNs with different storage characteristics.

Build a VMFS volume on each LUN (label each volume according to its characteristics).

Locate each application in the appropriate RAID for its requirements.

Use disk shares to distinguish high-priority from low-priority virtual machines. Disk shares are relevant only within a given ESX Server host. The shares assigned to virtual machines on one ESX Server host have no effect on virtual machines on other ESX Server hosts.

Adaptive Scheme

In the adaptive scheme, you:

Create a large LUN (RAID 1+0 or RAID 5), with write caching enabled.

Build a VMFS on that LUN.

Place several disks on the VMFS.

Run the applications and determine whether disk performance is acceptable.

If performance is acceptable, you can place additional virtual disks on the VMFS. If performance is not acceptable, create a new, larger LUN, possibly with a different RAID level, and repeat the process. You can use cold migration so that you do not lose virtual machines when recreating the LUN.


Tips for Making LUN Decisions

When making your LUN decision, keep in mind the following:

Each LUN should have the correct RAID level and storage characteristic for applications in virtual machines that use it.

One LUN must contain only one single VMFS volume.

If multiple virtual machines access the same LUN, use disk shares to prioritize virtual machines.

To use disk shares to prioritize virtual machines

1 Start a VI Client and connect to a VirtualCenter Server.

2 Select the virtual machine from the inventory, right-click, and choose Edit Settings.

3 Click the Resources tab and click Disk.

4 Right-click the Shares column for the disk to modify, and select the required value from the drop-down menu.

Shares is a value that represents the relative metric for controlling disk bandwidth to all virtual machines. The values Low, Normal, High, and Custom are compared to the sum of all shares of all virtual machines on the server and, on an ESX Server 3 host, the service console. Share allocation symbolic values can be used to configure their conversion into numeric values.
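Because shares are relative, the effect of a setting depends on what every other virtual machine on the same host is assigned. The sketch below works through that arithmetic. It is an illustration only: the function name `bandwidth_fractions` is hypothetical, and the numeric values for Low, Normal, and High (500, 1000, 2000) are an assumption for the example rather than values stated in this guide. Check the Resource Management Guide for the values your release uses.

```python
from typing import Dict, Union

# Assumed numeric equivalents of the symbolic share levels (illustrative only).
SHARE_VALUES: Dict[str, int] = {"low": 500, "normal": 1000, "high": 2000}


def bandwidth_fractions(vm_shares: Dict[str, Union[str, int]]) -> Dict[str, float]:
    """Convert per-VM share settings on one host into relative fractions.

    A string is treated as a symbolic level; an integer is treated as a
    Custom value. Each fraction is the VM's shares divided by the sum of
    all shares on the host.
    """
    numeric = {
        vm: SHARE_VALUES[s.lower()] if isinstance(s, str) else int(s)
        for vm, s in vm_shares.items()
    }
    total = sum(numeric.values())
    if total <= 0:
        raise ValueError("total shares must be positive")
    return {vm: value / total for vm, value in numeric.items()}


if __name__ == "__main__":
    # One High VM competing with two Normal VMs on the same host.
    print(bandwidth_fractions({"vm_a": "High", "vm_b": "Normal", "vm_c": "Normal"}))
```

With one High and two Normal virtual machines, the High virtual machine is entitled to half of the contended disk bandwidth and each Normal virtual machine to a quarter. On an ESX Server 3 host, the service console would appear as one more entry in the input.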


Understanding Data Access

Virtual machines access data by using one of the following methods:

VMFS: In a simple configuration, the virtual machines' disks are stored as .vmdk files within an ESX Server VMFS datastore. When guest operating systems issue SCSI commands to their virtual disks, the virtualization layer translates these commands to VMFS file operations.

In a default setup, the virtual machine always goes through VMFS when it accesses a file, whether the file is on a SAN or a host's local hard drives. See "Virtual Machine File System" on page 19.

RDM: An RDM is a mapping file inside the VMFS that acts as a proxy for a raw device. The RDM gives the guest operating system access to the raw device.

RDM is recommended when a virtual machine must interact directly with a physical disk on the SAN. This is the case, for example, when you want to issue disk array snapshot creation commands from your guest operating system or, more rarely, if you have a large amount of data that you do not want to move onto a virtual disk. RDM is also required for Microsoft Cluster Service setup. See the VMware document Setup for Microsoft Cluster Service.


Figure 2-3 illustrates how virtual machines access data by using VMFS or RDM.

Figure 2-3. How Virtual Machines Access Data (diagram labels: ESX Server; virtual machine 1; SCSI controller (BusLogic or LSI Logic); virtual disk 1; virtual disk 2; VMware virtualization layer; HBA; VMFS; LUN1; .vmdk; LUN2; RDM; LUN5)

For more information about VMFS and RDMs, see the ESX Server 3 Configuration Guide or ESX Server 3i Configuration Guide.

When a virtual machine interacts with a SAN, the following process takes place:

1 When the guest operating system in a virtual machine needs to read or write to SCSI disk, it issues SCSI commands to the virtual disk.

2 Device drivers in the virtual machine's operating system communicate with the virtual SCSI controllers. VMware ESX Server supports two types of virtual SCSI controllers: BusLogic and LSILogic.

3 The virtual SCSI Controller forwards the command to the VMkernel.

4 The VMkernel:

Locates the file in the VMFS volume that corresponds to the guest virtual machine disk.

Maps the requests for the blocks on the virtual disk to blocks on the appropriate physical device.

Sends the modified I/O request from the device driver in the VMkernel to the physical HBA (host HBA).

5 The host HBA:

Converts the request from its binary data form to the optical form required for transmission on the fiber optic cable.

Packages the request according to the rules of the FC protocol.

Transmits the request to the SAN.

6 Depending on which port the HBA uses to connect to the fabric, one of the SAN switches receives the request and routes it to the storage device that the host wants to access.

From the host's perspective, this storage device appears to be a specific disk, but it might be a logical device that corresponds to a physical device on the SAN. The switch must determine which physical device is made available to the host for its targeted logical device.

Path Management and Failover

ESX Server supports multipathing to maintain a constant connection between the server machine and the storage device in case of the failure of an HBA, switch, SP, or FC cable. Multipathing support does not require specific failover drivers.

To support path switching, the server typically has two or more HBAs available from which the storage array can be reached by using one or more switches. Alternatively, the setup could include one HBA and two storage processors so that the HBA can use a different path to reach the disk array.

In Figure 2-4, multiple paths connect each server with the storage device. For example, if HBA1 or the link between HBA1 and the FC switch fails, HBA2 takes over and provides the connection between the server and the switch. The process of one HBA taking over for another is called HBA failover.


Figure 2-4. Multipathing and Failover (diagram labels: two ESX Server hosts; HBA1, HBA2, HBA3, and HBA4; two switches; a storage array with SP1 and SP2)

Similarly, if SP1 fails or the links between SP1 and the switches break, SP2 takes over and provides the connection between the switch and the storage device. This process is called SP failover. VMware ESX Server supports HBA and SP failover with its multipathing capability.

You can choose a multipathing policy for your system, either Fixed or Most Recently Used. If the policy is Fixed, you can specify a preferred path. Each LUN (disk) that is visible to the ESX Server host can have its own path policy. For information on how to view the current multipathing state and how to set the multipathing policy, see "Multipathing" on page 95.
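The difference between the two policies can be sketched as a path-selection function. This is a simplified model, not the VMkernel implementation: the function `select_path` and the path names are hypothetical. It assumes that Fixed returns to the preferred path whenever that path is available, and that Most Recently Used stays on the current path until that path fails and does not fail back.

```python
from typing import List, Optional, Set


def select_path(
    policy: str,
    paths: List[str],
    failed: Set[str],
    current: Optional[str],
    preferred: Optional[str] = None,
) -> Optional[str]:
    """Pick the path to use for one LUN under a simplified policy model.

    policy    -- "fixed" or "mru"
    paths     -- all configured paths, in discovery order
    failed    -- paths currently unavailable
    current   -- path in use before this decision
    preferred -- preferred path (meaningful only for "fixed")
    Returns None when no path is available.
    """
    alive = [p for p in paths if p not in failed]
    if not alive:
        return None
    if policy == "fixed":
        # Fixed: use the preferred path whenever it is available.
        if preferred in alive:
            return preferred
    elif policy != "mru":
        raise ValueError(f"unknown policy: {policy}")
    # Otherwise keep the current path if it still works, else fail over.
    return current if current in alive else alive[0]


if __name__ == "__main__":
    paths = ["hba1:sp1", "hba2:sp2"]
    # HBA failover: the preferred path is down, so the alternate is used.
    print(select_path("fixed", paths, {"hba1:sp1"}, "hba1:sp1", "hba1:sp1"))
```

Under this model, a Fixed LUN moves back to `hba1:sp1` as soon as it recovers, while a Most Recently Used LUN stays on `hba2:sp2`.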


Virtual machine I/O might be delayed for at most sixty seconds while failover takes place, particularly on an active/passive array. This delay is necessary to allow the SAN fabric to stabilize its configuration after topology changes or other fabric events. In the case of an active/passive array with path policy Fixed, path thrashing might be a problem. See "Resolving Path Thrashing" on page 108.

A virtual machine will fail in an unpredictable way if all paths to the storage device where you stored your virtual machine disks become unavailable.

Choosing Virtual Machine Locations

When you are working on optimizing performance for your virtual machines, storage location is an important factor. There is always a trade-off between expensive storage that offers high performance and high availability and storage with lower cost and lower performance. Storage can be divided into different tiers depending on a number of factors:

High tier: Offers high performance and high availability. Might offer built-in snapshots to facilitate backups and Point-in-Time (PiT) restorations. Supports replication, full SP redundancy, and fibre drives. Uses high-cost spindles.

Mid tier: Offers mid-range performance, lower availability, some SP redundancy, and SCSI drives. Might offer snapshots. Uses medium-cost spindles.

Lower tier: Offers low performance, little internal storage redundancy. Uses low-end SCSI drives or SATA (serial low-cost spindles).

Not all applications need to be on the highest performance, most available storage, at least not throughout their entire life cycle.

If you need some of the functionality of the high tier, such as snapshots, but do not want to pay for it, you might be able to achieve some of the high-performance characteristics in software. For example, you can create snapshots in software.

When you decide where to place a virtual machine, ask yourself these questions:

How critical is the virtual machine?

What are its performance and availability requirements?

What are its point-in-time (PiT) restoration requirements?

What are its backup requirements?

What are its replication requirements?


A virtual machine might change tiers throughout its life cycle because of changes in criticality or changes in technology that push higher-tier features to a lower tier. Criticality is relative, and might change for a variety of reasons, including changes in the organization, operational processes, regulatory requirements, disaster planning, and so on.

Designing for Server Failure

The RAID architecture of SAN storage inherently protects you from failure at the physical disk level. A dual fabric, with duplication of all fabric components, protects the SAN from most fabric failures. The final step in making your whole environment failure resistant is to protect against server failure. ESX Server systems' failover options are discussed in the following sections.

Using VMware HA

VMware HA allows you to organize virtual machines into failover groups. When a host fails, all its virtual machines are immediately started on different hosts. HA requires shared storage, such as a SAN.

When a virtual machine is restored on a different host, it loses its memory state but its disk state is exactly as it was when the host failed (crash-consistent failover). See the Resource Management Guide.

NOTE You must be licensed to use VMware HA.

Using Cluster Services

Server clustering is a method of tying two or more servers together by using a high-speed network connection so that the group of servers functions as a single, logical server. If one of the servers fails, the other servers in the cluster continue operating, picking up the operations that the failed server performs.

VMware tests Microsoft Cluster Service in conjunction with ESX Server systems, but other cluster solutions might also work. Different configuration options are available for achieving failover with clustering:

Cluster in a box: Two virtual machines on one host act as failover servers for each other. When one virtual machine fails, the other takes over. This configuration does not protect against host failures. It is most commonly done during testing of the clustered application.

Cluster across boxes: A virtual machine on an ESX Server host has a matching virtual machine on another ESX Server host.


Physical to virtual clustering (N+1 clustering): A virtual machine on an ESX Server host acts as a failover server for a physical server. Because virtual machines running on a single host can act as failover servers for numerous physical servers, this clustering method provides a cost-effective N+1 solution.

See Setup for Microsoft Cluster Service.

Figure 2-5 shows different configuration options available for achieving failover with clustering.

Figure 2-5. Clustering Using a Clustering Service (diagram panels: cluster in a box, cluster across boxes, and physical to virtual clustering, each built from virtual machines)

Server Failover and Storage Considerations

For each type of server failover, you must consider storage issues:

Approaches to server failover work only if each server has access to the same storage. Because multiple servers require a lot of disk space, and because failover for the storage array complements failover for the server, SANs are usually employed in conjunction with server failover.

When you design a SAN to work in conjunction with server failover, all LUNs that are used by the clustered virtual machines must be seen by all ESX Server hosts. This requirement is counterintuitive for SAN administrators, but is appropriate when using virtual machines.


Although a LUN is accessible to a host, all virtual machines on that host do not necessarily have access to all data on that LUN. A virtual machine can access only the virtual disks for which it was configured. In case of a configuration error, virtual disks are locked when the virtual machine boots so that no corruption occurs.

NOTE As a rule, when you are using boot from a SAN, only the ESX Server system that is booting from a LUN should see each boot LUN. An exception is when you are trying to recover from a failure by pointing a second ESX Server system to the same LUN. In this case, the SAN LUN in question is not really booting from SAN. No ESX Server system is booting from it because it is corrupted. The SAN LUN is a non-boot LUN that is made visible to an ESX Server system.

Optimizing Resource Use

VMware Infrastructure allows you to optimize resource allocation by migrating virtual machines from overused hosts to underused hosts. The following options exist:

Migrate virtual machines manually by using VMotion.

Migrate virtual machines automatically by using VMware DRS.

You can use VMotion or DRS only if the virtual disks are located on shared storage accessible to multiple servers. In most cases, SAN storage is used. For additional information on VMotion, see Basic System Administration. For additional information on DRS, see the Resource Management Guide.

Using VMotion to Migrate Virtual Machines

VMotion allows administrators to manually migrate virtual machines to different hosts. Administrators can migrate a running virtual machine to a different physical server connected to the same SAN without service interruption. VMotion makes it possible to:

Perform zero-downtime maintenance by moving virtual machines around so that the underlying hardware and storage can be serviced without disrupting user sessions.

Continuously balance workloads across the datacenter to most effectively use resources in response to changing business demands.


Figure 2-6 illustrates how you can use VMotion to migrate a virtual machine.

Figure 2-6. Migration with VMotion (diagram labels: two ESX Server hosts; VMotion technology; virtual machines, each with applications and a guest operating system)

Using VMware DRS to Migrate Virtual Machines

VMware DRS helps improve resource allocation across all hosts and resource pools. DRS collects resource usage information for all hosts and virtual machines in a VMware cluster and gives recommendations (or migrates virtual machines) in one of two situations:

Initial placement: When you first power on a virtual machine in the cluster, DRS either places the virtual machine or makes a recommendation.

Load balancing: DRS tries to improve resource use across the cluster by performing automatic migrations of virtual machines (VMotion) or by providing recommendations for virtual machine migrations.

See the Resource Management Guide.


Requirements and Installation

This chapter discusses hardware and system requirements for using ESX Server systems with SAN storage. The chapter consists of the following sections:

"General ESX Server SAN Requirements" on page 50

"ESX Server Boot from SAN Requirements" on page 53

"Installation and Setup Steps" on page 54

This chapter lists only the most basic requirements. For detailed information about setting up your system, read Chapter 4, "Setting Up SAN Storage Devices with ESX Server," on page 57.


General ESX Server SAN Requirements

In preparation for configuring your SAN and setting up your ESX Server system to use SAN storage, review the following requirements and recommendations:

Hardware and firmware. Only a limited number of SAN storage hardware and firmware combinations are supported in conjunction with ESX Server systems. For an up-to-date list, see the Storage/SAN Compatibility Guide.

One VMFS volume per LUN. Configure your system to have only one VMFS volume per LUN. With VMFS-3, you do not have to set accessibility.

Unless you are using diskless servers, do not set up the diagnostic partition on a SAN LUN. In the case of diskless servers that boot from a SAN, a shared diagnostic partition is appropriate. See "Sharing Diagnostic Partitions" on page 104.

VMware recommends that you use RDMs for access to any raw disk from an ESX Server 2.5 or later machine. For more information on RDMs, see the ESX Server 3 Configuration Guide or ESX Server 3i Configuration Guide.

Multipathing. For multipathing to work properly, each LUN must present the same LUN ID number to all ESX Server hosts.

Queue size. Make sure the BusLogic or LSILogic driver in the guest operating system specifies a large enough queue. You can set the queue depth for the physical HBA during system setup. For supported drivers, see the Storage/SAN Compatibility Guide.

SCSI timeout. On virtual machines running Microsoft Windows, consider increasing the value of the SCSI TimeoutValue parameter to allow Windows to better tolerate delayed I/O resulting from path failover. See "Setting Operating System Timeout" on page 104.
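The multipathing requirement above, that each LUN presents the same LUN ID number to all ESX Server hosts, lends itself to an automated check. The following is a minimal sketch under stated assumptions: the function `inconsistent_lun_ids`, the host names, and the volume identifiers are hypothetical, and the per-host inventory is assumed to have been collected by some other means, such as an export from the array management software.

```python
from collections import defaultdict
from typing import Dict, List


def inconsistent_lun_ids(
    host_views: Dict[str, Dict[str, int]]
) -> Dict[str, Dict[int, List[str]]]:
    """Find volumes that are presented with different LUN IDs.

    host_views maps host name -> {volume identifier: LUN ID seen by that host}.
    The result maps each offending volume to {LUN ID: [hosts that see it]}.
    """
    seen: Dict[str, Dict[int, List[str]]] = defaultdict(lambda: defaultdict(list))
    for host, volumes in host_views.items():
        for volume, lun_id in volumes.items():
            seen[volume][lun_id].append(host)
    return {
        volume: {lun_id: sorted(hosts) for lun_id, hosts in ids.items()}
        for volume, ids in seen.items()
        if len(ids) > 1  # more than one distinct LUN ID for the same volume
    }


if __name__ == "__main__":
    views = {
        "esx1": {"vol_a": 1, "vol_b": 2},
        "esx2": {"vol_a": 1, "vol_b": 3},  # vol_b is presented as LUN 3 here
    }
    print(inconsistent_lun_ids(views))
```

An empty result means that every volume in the inventory is presented with a single LUN ID across all listed hosts.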

Restrictions for ESX Server with a SAN

The following restrictions apply when you use ESX Server with a SAN:

ESX Server does not support FC-connected tape devices. The VMware Consolidated Backup proxy can manage these devices. See the Virtual Machine Backup Guide.

You cannot use virtual machine multipathing software to perform I/O load balancing to a single physical LUN.


You cannot use virtual machine logical volume manager software to mirror virtual disks. Dynamic disks on a Microsoft Windows virtual machine are an exception, but require special configuration.

Setting LUN Allocations

When you set LUN allocations, note the following points:

Storage provisioning. To ensure that the ESX Server system recognizes the LUNs at startup time, provision all LUNs to the appropriate HBAs before you connect the SAN to the ESX Server system. VMware recommends that you provision all LUNs to all ESX Server HBAs at the same time. HBA failover works only if all HBAs see the same LUNs.

VMotion and VMware DRS. When you use VirtualCenter and VMotion or DRS, make sure that the LUNs for the virtual machines are provisioned to all ESX Server hosts. This provides the greatest freedom in moving virtual machines.

Active/active compared to active/passive arrays. When you use VMotion or DRS with an active/passive SAN storage device, make sure that all ESX Server systems have consistent paths to all storage processors. Not doing so can cause path thrashing when a VMotion migration occurs. See "Resolving Path Thrashing" on page 108.

For active/passive storage arrays not listed in the Storage/SAN Compatibility Guide, VMware does not support storage port failover. In those cases, you must connect the server to the active port on the storage array. This configuration ensures that the LUNs are presented to the ESX Server host.
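The provisioning rule for VMotion and DRS can be expressed as a set comparison: a host is a possible migration target only if it sees every LUN that holds the virtual machine's disks. The sketch below illustrates that check. The function name `vmotion_candidates` and the host and LUN names are hypothetical, and the check covers storage visibility only, not the other VMotion requirements.

```python
from typing import Dict, List, Set


def vmotion_candidates(vm_luns: Set[str], host_luns: Dict[str, Set[str]]) -> List[str]:
    """Return the hosts that see every LUN backing the virtual machine.

    vm_luns   -- LUNs that hold the virtual machine's virtual disks
    host_luns -- host name -> LUNs provisioned to that host
    """
    return sorted(host for host, luns in host_luns.items() if vm_luns <= luns)


if __name__ == "__main__":
    hosts = {
        "esx1": {"lun1", "lun2"},
        "esx2": {"lun1", "lun2", "lun3"},
        "esx3": {"lun1"},  # lun2 was not provisioned to this host
    }
    # The virtual machine has disks on lun1 and lun2.
    print(vmotion_candidates({"lun1", "lun2"}, hosts))
```

In this example `esx3` is excluded because it was not given `lun2`. Provisioning all virtual machine LUNs to all hosts makes every host a candidate.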

Setting Fibre Channel HBA

During FC HBA setup, consider the following points:

HBA default settings. FC HBAs work correctly with the default configuration settings. Follow the configuration guidelines given by your storage array vendor.

NOTE For best results, use the same model of HBA in one server. Ensure that the firmware level on each HBA is the same in one server. Having Emulex and QLogic HBAs in the same server to the same target is not supported.

Static load balancing across HBAs. You can configure some ESX Server systems to load balance traffic across multiple HBAs to multiple LUNs with certain active/active arrays.


To do this, assign preferred paths to your LUNs so that your HBAs are being used evenly. For example, if you have two LUNs (A and B) and two HBAs (X and Y), you can set HBA X to be the preferred path for LUN A, and HBA Y as the preferred path for LUN B. This maximizes use of your HBAs. Path policy must be set to Fixed for this case. See "To set the multipathing policy using a VI Client" on page 98.

Setting the timeout for failover. Set the timeout value for detecting when a path fails in the HBA driver. VMware recommends that you set the timeout to 30 seconds to ensure optimal performance. To set the value, follow the instructions in "Setting the HBA Timeout for Failover" on page 103.

Dedicated adapter for tape drives. For best results, use a dedicated SCSI adapter for any tape drives that you are connecting to an ESX Server system. FC-connected tape drives are not supported. Use the Consolidated Backup proxy, as discussed in the Virtual Machine Backup Guide.

For additional information on boot from a SAN HBA setup, see Chapter 5, "Using Boot from SAN with ESX Server Systems," on page 71.
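The static load balancing example in this list (LUN A on HBA X, LUN B on HBA Y) generalizes to spreading preferred paths over the HBAs in rotation. The following is a planning sketch only: the function `assign_preferred_paths` is hypothetical and produces a table that an administrator would then apply by setting the preferred path per LUN with the Fixed policy. It does not talk to an ESX Server host.

```python
from typing import Dict, List


def assign_preferred_paths(luns: List[str], hbas: List[str]) -> Dict[str, str]:
    """Distribute LUNs over HBAs in rotation so each HBA gets an even share."""
    if not hbas:
        raise ValueError("at least one HBA is required")
    return {lun: hbas[i % len(hbas)] for i, lun in enumerate(luns)}


if __name__ == "__main__":
    # Two LUNs and two HBAs, as in the example in the text.
    print(assign_preferred_paths(["A", "B"], ["X", "Y"]))
```

Rotation balances the number of LUNs per HBA, not the I/O load. If some LUNs are much busier than others, weighting the assignment by observed load may give a more even result.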

Recommendations

Consider the following when setting up your environment with ESX Server hosts and a SAN:

Use RDM for a virtual disk of a virtual machine to use some of the hardware snapshotting functions of the disk array, or to access a disk from a virtual machine and a physical machine in a cold-standby host configuration for data LUNs.

Use RDM for the shared disks in a Microsoft Cluster Service setup. See the Setup for Microsoft Cluster Service.

Allocate a large LUN for multiple virtual machines to use and set it up as a VMFS. You can then create or delete virtual machines dynamically without having to request additional disk space each time you add a virtual machine.

To move a virtual machine to a different host using VMotion, the LUNs that hold the virtual disks of the virtual machines must be visible from all the hosts.

For additional recommendations and troubleshooting information, see Chapter 6, "Managing ESX Server Systems That Use SAN Storage," on page 81.


ESX Server Boot from SAN Requirements

When you have SAN storage configured with your ESX Server system, you can place the ESX Server boot image on one of the LUNs on the SAN. This configuration must meet specific criteria, discussed in this section. See "Using Boot from SAN with ESX Server Systems" on page 71.

To enable your ESX Server system to boot from a SAN, perform the following tasks:

Check that your environment meets the general requirements. See "General ESX Server SAN Requirements" on page 50.

Complete the tasks listed in Table 3-1.

Table 3-1. Boot from SAN Requirements

Requirement: ESX Server system requirements
Description: ESX Server 3.x is recommended. When you use the ESX Server 3.x system, RDMs are supported in conjunction with boot from SAN. For an ESX Server 2.5.x system, RDMs are not supported in conjunction with boot from SAN.

Requirement: HBA requirements
Description:
The HBA BIOS for your HBA FC card must be enabled and correctly configured to access the boot LUN. See "Setting Fibre Channel HBA" on page 51.
The HBA should be plugged into the lowest PCI bus and slot number. This allows the drivers to detect the HBA quickly because the drivers scan the HBAs in ascending PCI bus and slot numbers, regardless of the associated virtual machine HBA number.
For precise driver and version information, see the ESX Server I/O Compatibility Guide.

Requirement: Boot LUN considerations
Description:
When you boot from an active/passive storage array, the SP whose WWN is specified in the BIOS configuration of the HBA must be active. If that SP is passive, the HBA cannot support the boot process.
To facilitate BIOS configuration, mask each boot LUN so that only its own ESX Server system can see it. Each ESX Server system should see its own boot LUN, but not the boot LUN of any other ESX Server system.

Requirement: SAN considerations
Description:
SAN connections must be through a switch fabric topology. Boot from SAN does not support direct connect (that is, connection without switches) or FC arbitrated loop connections.
Redundant and nonredundant configurations are supported. In the redundant case, ESX Server collapses the redundant paths so that only a single path to a LUN is presented to the user.

Requirement: Hardware-specific considerations
Description:
If you are running an IBM eServer BladeCenter and use boot from SAN, you must disable IDE drives on the blades.
For additional hardware-specific considerations, see the VMware knowledge base articles and Chapter 4, "Setting Up SAN Storage Devices with ESX Server," on page 57.
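The boot LUN masking rule in Table 3-1 can be checked mechanically once you have an inventory of which LUNs each host sees. The following is a minimal sketch, not a VMware tool: the function name, the dictionary layout, and the LUN names are hypothetical, and the inventory itself must come from your own array or switch management tools.

```python
def boot_lun_masking_problems(boot_lun_of, visible_luns):
    """Report hosts that violate the boot LUN masking rule.

    boot_lun_of:  dict mapping host name -> name of that host's boot LUN
                  (hypothetical, array-wide unique LUN names).
    visible_luns: dict mapping host name -> set of LUN names the host sees.
    Returns a list of (host, problem description) tuples.
    """
    problems = []
    for host in sorted(visible_luns):
        seen = visible_luns[host]
        # Each host should see its own boot LUN.
        if boot_lun_of[host] not in seen:
            problems.append((host, "cannot see own boot LUN"))
        # No host should see the boot LUN of any other host.
        for other in sorted(boot_lun_of):
            if other != host and boot_lun_of[other] in seen:
                problems.append((host, "can see boot LUN of " + other))
    return problems


boot = {"esx1": "boot-esx1", "esx2": "boot-esx2"}
visible = {
    "esx1": {"boot-esx1", "data1"},
    "esx2": {"boot-esx1", "boot-esx2", "data1"},
}
print(boot_lun_masking_problems(boot, visible))
```

In this example, esx2 is reported because it can see the boot LUN that belongs to esx1. Shared data LUNs such as data1 are not flagged.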

Installation and Setup Steps

Table 3-2 gives an overview of the installation and setup steps, with pointers to relevant information.

Table 3-2. Installation and Setup Steps

Step 1
Description: Design your SAN if it's not already configured. Most existing SANs require only minor modification to work with ESX Server.
Reference: Chapter 2, "Using ESX Server with Fibre Channel SAN," on page 25.

Step 2
Description: Check that all SAN components meet requirements.
Reference: Chapter 3, "General ESX Server SAN Requirements," on page 50. Storage/SAN Compatibility Guide.

Step 3
Description: Set up the HBAs for the ESX Server hosts.
Reference: For special requirements that apply only to boot from SAN, see Chapter 3, "ESX Server Boot from SAN Requirements," on page 53. See also Chapter 5, "Using Boot from SAN with ESX Server Systems," on page 71.

Step 4
Description: Perform any necessary storage array modification.
Reference: For an overview, see Chapter 4, "Setting Up SAN Storage Devices with ESX Server," on page 57. Most vendors have vendor-specific documentation for setting up a SAN to work with VMware ESX Server.

Step 5
Description: Install ESX Server on the hosts you have connected to the SAN and for which you've set up the HBAs.
Reference: Installation Guide.

Step 6
Description: Create virtual machines.
Reference: Basic System Administration.

Step 7
Description: (Optional) Set up your system for VMware HA failover or for using Microsoft Clustering Services.
Reference: Resource Management Guide. Setup for Microsoft Cluster Service.

Step 8
Description: Upgrade or modify your environment as needed.
Reference: Chapter 6, "Managing ESX Server Systems That Use SAN Storage," on page 81 gives an introduction. Search the VMware knowledge base articles for machine-specific information and late-breaking news.


Setting Up SAN Storage Devices with ESX Server

This chapter discusses many of the storage devices supported in conjunction with VMware ESX Server. For each device, it lists the major known potential issues, points to vendor-specific information (if available), and includes information from VMware knowledge base articles.

NOTE Information in this document is updated only with each release. New information might already be available. Consult the most recent Storage/SAN Compatibility Guide, check with your storage array vendor, and explore the VMware knowledge base articles.

This chapter discusses the following topics:

"Setup Overview" on page 58
"General Setup Considerations" on page 59
"EMC CLARiiON Storage Systems" on page 60
"EMC Symmetrix Storage Systems" on page 61
"IBM TotalStorage DS4000 Storage Systems" on page 62
"IBM TotalStorage 8000" on page 66
"HP StorageWorks Storage Systems" on page 66
"Hitachi Data Systems Storage" on page 69
"Network Appliance Storage" on page 69


Setup Overview

VMware ESX Server supports a variety of SAN storage arrays in different configurations. Not all storage devices are certified for all features and capabilities of ESX Server, and vendors might have specific positions of support with regard to ESX Server. For the latest information regarding supported storage arrays, see the Storage/SAN Compatibility Guide.

Testing

VMware tests ESX Server with storage arrays in the following configurations:

Basic connectivity: Tests whether ESX Server can recognize and operate with the storage array. This configuration does not allow for multipathing or any type of failover.
HBA failover: The server is equipped with multiple HBAs connecting to one or more SAN switches. The server is robust to HBA and switch failure only.
Storage port failover: The server is attached to multiple storage ports and is robust to storage port failures and switch failures.
Boot from SAN: The ESX Server host boots from a LUN configured on the SAN rather than from the server itself.
Direct connect: The server connects to the array without using switches, using only an FC cable. For all other tests, a fabric connection is used. FC Arbitrated Loop (AL) is not supported.
Clustering: The system is tested with Microsoft Cluster Service running in the virtual machine. See the Setup for Microsoft Cluster Service document.


Chapter 4 Setting Up SAN Storage Devices with ESX Server

Supported Devices

Table 4-1 lists storage devices supported with ESX Server 3.x and points to where you can find more information about using them in conjunction with ESX Server.

Table 4-1. Supported SAN Arrays

Manufacturer: EMC
Device: CLARiiON Storage System. Also available from FSC. Also available from Dell, Inc. as the Dell/EMC FC RAID Array family of products.
Reference: "EMC CLARiiON Storage Systems" on page 60.

Manufacturer: EMC
Device: Symmetrix Storage System.
Reference: "EMC Symmetrix Storage Systems" on page 61.

Manufacturer: IBM
Device: IBM TotalStorage DS4000 systems (formerly FAStT Storage system). Also available from LSI Engenio and StorageTek.
Reference: "IBM TotalStorage DS4000 Storage Systems" on page 62.

Manufacturer: IBM
Device: IBM TotalStorage Enterprise Storage Systems (previously Shark Storage systems).
Reference: "IBM TotalStorage 8000" on page 66.

Manufacturer: Hewlett Packard
Device: HP StorageWorks (MSA, EVA, and XP).
Reference: "HP StorageWorks Storage Systems" on page 66.

Manufacturer: Hitachi
Device: Hitachi Data Systems Storage. Also available from Sun and as HP XP.
Reference: "Hitachi Data Systems Storage" on page 69.

Manufacturer: Network Appliance
Device: Network Appliance FC SAN Storage Solutions.
Reference: "Network Appliance Storage" on page 69.

General Setup Considerations

For all storage arrays, make sure that the following requirements are met:

LUNs must be presented to each HBA of each host with the same LUN ID number. If different numbers are used, the ESX Server hosts do not recognize different paths to the same LUN. Because instructions on how to configure identical SAN LUN IDs are vendor specific, consult your storage array documentation for more information.

Unless specified for individual storage arrays discussed in this chapter, set the host type for LUNs presented to ESX Server to Linux, Linux Cluster, or, if available, to vmware or esx.


If you are using VMotion, DRS, or HA, make sure that both source and target hosts for virtual machines can see the same LUNs with identical LUN IDs.

SAN administrators might find it counterintuitive to have multiple hosts see the same LUNs because they might be concerned about data corruption. However, VMFS prevents multiple virtual machines from writing to the same file at the same time, so provisioning the LUNs to all required ESX Server systems is appropriate.
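The requirement that every HBA of every host sees a LUN under the same LUN ID lends itself to a simple audit. The sketch below assumes you can export the presentations as (host, HBA, volume name, LUN ID) tuples. That tuple layout, the function name, and the sample values are illustrative assumptions, not output of any ESX Server or array command.

```python
from collections import defaultdict


def inconsistent_lun_ids(presentations):
    """Find volumes that are presented under more than one LUN ID.

    presentations: iterable of (host, hba, volume, lun_id) tuples, where
    'volume' is a hypothetical array-side name that identifies the LUN
    independently of the LUN ID it is presented with.
    Returns a dict mapping volume -> sorted list of the differing LUN IDs.
    """
    ids_by_volume = defaultdict(set)
    for _host, _hba, volume, lun_id in presentations:
        ids_by_volume[volume].add(lun_id)
    return {
        volume: sorted(ids)
        for volume, ids in ids_by_volume.items()
        if len(ids) > 1
    }


sample = [
    ("esx1", "vmhba1", "volA", 0),
    ("esx1", "vmhba2", "volA", 0),
    ("esx2", "vmhba1", "volA", 1),  # mismatch: volA appears as LUN 1 here
    ("esx1", "vmhba1", "volB", 5),
    ("esx2", "vmhba1", "volB", 5),
]
print(inconsistent_lun_ids(sample))
```

An empty result means every volume is presented with one LUN ID everywhere, which is the state that VMotion, DRS, and HA require.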

EMC CLARiiON Storage Systems

EMC CLARiiON storage systems work with ESX Server machines in SAN configurations. Basic configuration steps include:

1 Installing and configuring the storage device.
2 Configuring zoning at the switch level.
3 Creating RAID groups.
4 Creating and binding LUNs.
5 Registering the servers connected to the SAN.
6 Creating storage groups that contain the servers and LUNs.

Use the EMC software to perform configuration. See the EMC documentation.

This array is an active/passive disk array, so the following related issues apply.

To avoid the possibility of path thrashing, the default multipathing policy is Most Recently Used, not Fixed. The ESX Server system sets the default policy when it identifies the array. See "Resolving Path Thrashing" on page 108.
Automatic volume resignaturing is not supported for AX100 storage devices. See "VMFS Volume Resignaturing" on page 117.
To use boot from SAN, make sure that the active SP is chosen for the boot LUN's target in the HBA BIOS.
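The multipathing guidance above can be turned into a quick audit of recorded settings. This is a minimal sketch under stated assumptions: the tuple layout, function name, and LUN names are hypothetical, and the policy values must be collected by hand or with your own tooling.

```python
def path_thrashing_risks(luns):
    """Return names of LUNs on active/passive arrays that use the Fixed policy.

    luns: iterable of (lun_name, array_type, policy) tuples, where array_type
    is "active/passive" or "active/active" and policy is the multipathing
    policy recorded for that LUN. All three fields are illustrative.
    """
    return [
        name
        for name, array_type, policy in luns
        if array_type == "active/passive" and policy == "Fixed"
    ]


recorded = [
    ("clariion-lun0", "active/passive", "Most Recently Used"),
    ("clariion-lun1", "active/passive", "Fixed"),
    ("symm-lun0", "active/active", "Fixed"),
]
print(path_thrashing_risks(recorded))
```

Only clariion-lun1 is flagged, because it combines an active/passive array with a policy other than the Most Recently Used default.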

EMC CLARiiON AX100 and RDM

On EMC CLARiiON AX100 systems, RDMs are supported only if you use the Navisphere Management Suite for SAN administration. Navilight is not guaranteed to work properly.

To use RDMs successfully, a given LUN must be presented with the same LUN ID to every ESX Server host in the cluster. By default, the AX100 does not support this configuration.


AX100 Display Problems with Inactive Connections

When you use an AX100 FC storage device directly connected to an ESX Server system, you must verify that all connections are operational and unregister any connections that are no longer in use. If you don't, ESX Server cannot discover new LUNs or paths.

Consider the following scenario:

1 An ESX Server system is directly connected to an AX100 storage device. The ESX Server has two FC HBAs. One of the HBAs was previously registered with the storage array and its LUNs were configured, but the connections are now inactive.
2 When you connect the second HBA on the ESX Server host to the AX100 and register it, the ESX Server host correctly shows the array as having an active connection. However, none of the LUNs that were previously configured to the ESX Server host are visible, even after repeated rescans.

To resolve this issue, remove the inactive HBA, unregister the connection to the inactive HBA, or make all inactive connections active. This causes only active HBAs to be in the storage group. After this change, rescan to add the configured LUNs.
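As a rough illustration of the cleanup step, the sketch below lists the registered connections that are inactive and therefore need to be unregistered or reactivated before a rescan. The dictionary layout, function name, and WWPN values are assumptions made for the example. The AX100 management software does not expose this function.

```python
def inactive_connections(registered):
    """Return the WWPNs of registered HBA connections that are inactive.

    registered: dict mapping HBA WWPN (string) -> True if the connection is
    currently active, False otherwise. The data is assumed to be copied by
    hand from the array's connection list.
    """
    return sorted(wwpn for wwpn, active in registered.items() if not active)


connections = {
    "21:00:00:e0:8b:05:05:04": False,  # previously registered, now inactive
    "21:00:00:e0:8b:05:05:05": True,   # newly connected second HBA
}
print(inactive_connections(connections))
```

When the returned list is empty, only active HBAs remain in the storage group and a rescan should be able to add the configured LUNs.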

Pushing Host Configuration Changes to the Array

When you use an AX100 storage array, no host agent periodically checks the host configuration and pushes changes to the array. The axnaviserverutil cli utility is used to update the changes. This is a manual operation and should be performed as needed.

EMC Symmetrix Storage Systems

The following settings are required for ESX Server operations on the Symmetrix networked storage system:

Common serial number (C)
Auto negotiation (EAN) enabled
Fibrepath enabled on this port (VCM)
SCSI 3 (SC3) set (enabled)
Unique world wide name (UWN)
SPC-2 (Decal) (SPC2) SPC-2 flag is required

Use EMC software to configure the storage array. See your EMC documentation.
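The required settings can be treated as a checklist. The following sketch compares the flags you have recorded for a port against the abbreviations listed above. The function name and the idea of recording flags as a set of abbreviation strings are assumptions for illustration. The sketch does not query the array.

```python
# Abbreviations taken from the list of required Symmetrix settings.
REQUIRED_FLAGS = {"C", "EAN", "VCM", "SC3", "UWN", "SPC2"}


def missing_symmetrix_flags(enabled_flags):
    """Return the required flags that are absent from enabled_flags.

    enabled_flags: iterable of flag abbreviations recorded as enabled on a
    port (hypothetical input, for example transcribed from the EMC software).
    """
    return sorted(REQUIRED_FLAGS - set(enabled_flags))


print(missing_symmetrix_flags(["C", "EAN", "VCM", "SC3", "UWN"]))
```

In this example the SPC2 flag is reported as missing.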


The ESX Server host considers any LUNs from a Symmetrix storage array with a capacity of 50MB or less as management LUNs. These LUNs are also known as pseudo or gatekeeper LUNs. These LUNs appear in the EMC Symmetrix Management Interface and should not be used to hold data.
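The 50MB rule can be applied to a LUN inventory to separate management LUNs from LUNs that can hold data. This is a small sketch: the function name, the dictionary layout, and the sample LUN names are hypothetical, and capacities are assumed to be given in MB.

```python
GATEKEEPER_MAX_MB = 50  # threshold stated in the text above


def split_management_luns(capacity_mb):
    """Split LUNs into (management, data) lists by capacity.

    capacity_mb: dict mapping LUN name -> capacity in MB (illustrative input).
    LUNs of 50MB or less are classified as management (gatekeeper) LUNs.
    """
    management = sorted(
        name for name, mb in capacity_mb.items() if mb <= GATEKEEPER_MAX_MB
    )
    data = sorted(
        name for name, mb in capacity_mb.items() if mb > GATEKEEPER_MAX_MB
    )
    return management, data


print(split_management_luns({"gk0": 3, "gk1": 50, "vol1": 51200}))
```

Here gk0 and gk1 are classified as management LUNs and vol1 as a data LUN.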

IBM TotalStorage DS4000 Storage Systems

IBM TotalStorage DS4000 systems used to be called IBM FAStT. A number of storage array vendors (including LSI and StorageTek) make SAN storage arrays that are compatible with the DS4000.

See the IBM Redbook, Implementing VMware ESX Server with IBM TotalStorage FAStT at http://www.redbooks.ibm.com/redbooks/pdfs/sg246434.pdf. This section summarizes how to configure your IBM TotalStorage Storage System to use SAN and Microsoft Clustering Service. See Setup for Microsoft Cluster Service.

In addition to normal configuration steps for your IBM TotalStorage storage system, you need to perform specific tasks.

You must also make sure that multipathing policy is set to Most Recently Used. See "Viewing the Current Multipathing State" on page 95.

Configuring the Hardware for SAN Failover with DS4000 Storage Servers

To set up a highly available SAN failover configuration with DS4000 storage models equipped with two storage processors, you need the following hardware components:

Two FC HBAs, such as QLogic or Emulex, on each ESX Server machine.
Two FC switches connecting the HBAs to the SAN (for example, FC switch 1 and FC switch 2).
Two SPs (for example, SP1 and SP2). Each SP must have at least two ports connected to the SAN.

Use the following connection settings for the ESX Server host, as shown in Figure 4-1:

Connect each HBA on each ESX Server machine to a separate switch. For example, connect HBA1 to FC switch 1 and HBA2 to FC switch 2.
On FC switch 1, connect SP1 to a lower switch port number than SP2, to ensure that SP1 is listed first. For example, connect SP1 to FC switch 1 port 1 and SP2 to FC switch 1 port 2.


On FC switch 2, connect SP1 to a lower switch port number than SP2, to ensure that SP1 is listed first. For example, connect SP1 to port 1 on FC switch 2 and SP2 to port 2 on FC switch 2.

Figure 4-1. SAN Failover

[Figure: ESX Server 1 (HBA1, HBA2) and ESX Server 2 (HBA3, HBA4), FC switch 1, FC switch 2, and storage with SP1 and SP2]

This configuration provides two paths from each HBA, so that each element of the connection can fail over to a redundant path. The order of the paths in this configuration provides HBA and switch failover without the need to trigger SP failover. The storage processor that the preferred paths are connected to must own the LUNs. In the preceding example configuration, SP1 owns them.

NOTE The preceding example assumes that the switches are not connected through an Inter-Switch Link (ISL) in one fabric.
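The wiring rules and the resulting path order can be modeled as a rough sketch. The dictionaries, the function names, and the assumption that SPs are listed in ascending switch port order are illustrative and drawn only from the example above. They do not represent an ESX Server or DS4000 interface.

```python
def wiring_problems(hba_to_switch, sp_ports):
    """Check one host's wiring against the rules in the example.

    hba_to_switch: dict mapping HBA name -> switch name.
    sp_ports: dict mapping switch name -> {"SP1": port, "SP2": port}.
    Returns a list of problem descriptions (empty if the wiring is as described).
    """
    problems = []
    # Rule: each HBA on a host connects to a separate switch.
    if len(set(hba_to_switch.values())) < len(hba_to_switch):
        problems.append("two HBAs share a switch")
    # Rule: on every switch, SP1 uses a lower port number than SP2.
    for switch in sorted(sp_ports):
        ports = sp_ports[switch]
        if ports["SP1"] >= ports["SP2"]:
            problems.append(switch + ": SP1 is not on a lower port than SP2")
    return problems


def paths_in_listed_order(hba_to_switch, sp_ports):
    """Enumerate (hba, switch, sp) paths, SPs sorted by switch port number."""
    paths = []
    for hba in sorted(hba_to_switch):
        switch = hba_to_switch[hba]
        by_port = sorted(sp_ports[switch].items(), key=lambda item: item[1])
        for sp, _port in by_port:
            paths.append((hba, switch, sp))
    return paths


hbas = {"HBA1": "FC switch 1", "HBA2": "FC switch 2"}
ports = {
    "FC switch 1": {"SP1": 1, "SP2": 2},
    "FC switch 2": {"SP1": 1, "SP2": 2},
}
print(wiring_problems(hbas, ports))
print(paths_in_listed_order(hbas, ports))
```

With the example wiring, each HBA has two paths and the path to SP1 comes first on both switches, so a failed HBA or switch can be replaced by the other HBA's path to the same SP.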

Verifying the Storage Processor Port Configuration

You can verify the SP port configuration by comparing the VI Client information with the information in the DS4000 subsystem profile.

To verify storage processor port configuration

1 Connect to the ESX Server host by using the VI Client.
2 Select the host and choose the Configuration tab.
3 Click Storage Adapters in the Hardware panel.


4 Select each storage adapter to see its WWPN.
5 Select Storage to see the available datastores.
6 Compare the WWPN information to the information listed in the DS4000 storage subsystem profile.
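The final comparison can be done by eye, but for hosts with many adapters a small script reduces transcription errors. The sketch below assumes you have copied the WWPNs from the VI Client and from the DS4000 subsystem profile into two lists. The function names and the normalization of separators and letter case are assumptions made for the example.

```python
def normalize_wwpn(wwpn):
    """Strip common separators and lowercase a WWPN for comparison."""
    return wwpn.replace(":", "").replace("-", "").lower()


def compare_wwpns(client_wwpns, profile_wwpns):
    """Compare WWPNs seen in the VI Client with those in the array profile.

    Returns (only_in_client, only_in_profile), each a sorted list of
    normalized WWPNs that appear on one side but not the other.
    """
    client = {normalize_wwpn(w) for w in client_wwpns}
    profile = {normalize_wwpn(w) for w in profile_wwpns}
    return sorted(client - profile), sorted(profile - client)


from_client = ["21:00:00:E0:8B:05:05:04", "21:00:00:e0:8b:05:05:05"]
from_profile = ["210000e08b050504"]
print(compare_wwpns(from_client, from_profile))
```

Two empty lists mean that both sources agree. In the example, one adapter's WWPN is known to the host but missing from the subsystem profile.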

Disabling Auto Volume Transfer