ECE-451/ECE-566 - Introduction to Parallel and Distributed Programming

Lecture 1: Introduction

Department of Electrical & Computer Engineering Rutgers University

ECE 451-566, Fall 11

Course Information

Class: Mon/Wed 6:40-8pm, CoRE 538
Instructor: Manish Parashar, Ivan Rodero
Class website: http://nsfcac.rutgers.edu/people/parashar/classes/11-12/ece451-566/
Office hours: Mon/Wed 4:30-5:30pm, CoRE 626

Grading policy

Undergraduate (ECE 451): Programming Assignments: 30%, Midterm: 40%, Final Project (Groups of 2-3 Students): 30%, Quizzes/Class Participation: 10%

Graduate (ECE 566): Programming Assignments: 30%, Midterms: 40%, Final Project (Individual): 30%, Quizzes/Class Participation: 10%


Objectives

Introduction to parallel/distributed programming

Parallel/distributed programming approaches, paradigms, tools, etc.

Issues and approaches in parallel/distributed application development
Current state of parallel/distributed architectures and systems
Current and future trends


Course Organization

Introduction (2 lectures)

4 main units

Performance evaluation

What is performance? How to evaluate performance? Tools, etc.

Shared memory

Traditional (e.g., pthreads, OpenMP)
New paradigms (e.g., CUDA)

Message-passing (i.e., MPI)

Distributed computing

Traditional (CORBA, WS)
Autonomic/Grid/Cloud computing

Why do we need powerful computers?


Demand for Computational Speed

Continual demand for greater computational speed from a computer system than is currently possible
Areas requiring great computational speed include numerical modeling and simulation of scientific and engineering problems.
Computations must be completed within a reasonable time period.


Simulation: The Third Pillar of Science

Traditional scientific and engineering paradigm:

Do theory or paper design.
Perform experiments or build system.

Limitations:

Too difficult -- build large wind tunnels.
Too expensive -- build a throw-away passenger jet.
Too slow -- wait for climate or galactic evolution.
Too dangerous -- weapons, drug design, climate experimentation.

Computational science paradigm:

Use high performance computer systems to simulate the phenomenon

Based on known physical laws and efficient numerical methods.


Some Particularly Challenging Computations

Science

Global climate modeling
Astrophysical modeling
Biology: genome analysis; protein folding (drug design)

Engineering

Crash simulation
Semiconductor design
Earthquake and structural modeling

Business

Financial and economic modeling
Transaction processing, web services and search engines

Defense

Nuclear weapons -- test by simulations
Cryptography


Units of Measure in HPC

High Performance Computing (HPC) units are:

Flop/s: floating point operations per second
Bytes: size of data

Typical sizes are millions, billions, trillions!

Mega: Mflop/s = 10^6 flop/sec, Mbyte = 10^6 byte (also 2^20 = 1048576)
Giga: Gflop/s = 10^9 flop/sec, Gbyte = 10^9 byte (also 2^30 = 1073741824)
Tera: Tflop/s = 10^12 flop/sec, Tbyte = 10^12 byte (also 2^40 = 1099511627776)
Peta: Pflop/s = 10^15 flop/sec, Pbyte = 10^15 byte (also 2^50 = 1125899906842624)
Exa: Eflop/s = 10^18 flop/sec, Ebyte = 10^18 byte


Economic Impact of HPC

Airlines:

System-wide logistics optimization systems on parallel systems.
Savings: approx. $100 million per airline per year.

Automotive design:

Major automotive companies use large systems (500+ CPUs) for:

CAD-CAM, crash testing, structural integrity and aerodynamics.
One company has a 500+ CPU parallel system.

Savings: approx. $1 billion per company per year.

Semiconductor industry:

Semiconductor firms use large systems (500+ CPUs) for

device electronics simulation and logic validation

Savings: approx. $1 billion per company per year.

Securities industry:

Savings: approx. $15 billion per year for U.S. home mortgages.


Global Climate Modeling Problem

Problem is to compute:

f(latitude, longitude, elevation, time) -> temperature, pressure, humidity, wind velocity

Approach:

Discretize the domain, e.g., a measurement point every 1 km
Devise an algorithm to predict weather at time t+1 given t

Uses:

Predict major events, e.g., El Nino
Use in setting air emissions standards

Source: http://www.epm.ornl.gov/chammp/chammp.html


Global Climate Modeling Computation

One piece is modeling the fluid flow in the atmosphere

Solve the Navier-Stokes problem
Roughly 100 flops per grid point with a 1 minute timestep

Computational requirements:

To match real time, need 5 x 10^11 flops in 60 seconds = 8 Gflop/s
Weather prediction (7 days in 24 hours) -> 56 Gflop/s
Climate prediction (50 years in 30 days) -> 4.8 Tflop/s
To use in policy negotiations (50 years in 12 hours) -> 288 Tflop/s

To double the grid resolution, computation is at least 8x
Current models are coarser than this
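The requirement figures above can be re-derived with a few lines of arithmetic. The sketch below assumes a 365-day year and uses a hypothetical helper name, required_rate. Because it keeps the unrounded base rate (about 8.3 Gflop/s rather than 8), its results come out slightly above the slide's rounded numbers.

```python
# Rough re-derivation of the flop-rate estimates; inputs are taken from the slide.
FLOPS_PER_STEP = 5e11        # flops to advance the whole model by one timestep
FLOPS_PER_GRID_POINT = 100   # "roughly 100 flops per grid point"
TIMESTEP_S = 60.0            # one simulated minute per step

# Implied number of grid points (not stated on the slide, only derived here).
grid_points = FLOPS_PER_STEP / FLOPS_PER_GRID_POINT

def required_rate(simulated_seconds, wallclock_seconds):
    """Flop/s needed to simulate `simulated_seconds` within `wallclock_seconds`."""
    steps = simulated_seconds / TIMESTEP_S
    return steps * FLOPS_PER_STEP / wallclock_seconds

DAY = 24 * 3600.0
YEAR = 365 * DAY             # assumption: 365-day year

real_time = required_rate(60.0, 60.0)
weather = required_rate(7 * DAY, 1 * DAY)
climate = required_rate(50 * YEAR, 30 * DAY)
policy = required_rate(50 * YEAR, 0.5 * DAY)

for name, rate in [("real time", real_time), ("weather", weather),
                   ("climate", climate), ("policy", policy)]:
    print(f"{name}: {rate / 1e9:,.1f} Gflop/s")
```

Doubling the resolution in three spatial dimensions multiplies grid_points by 8, which is where the "at least 8x" figure comes from.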


Heart Simulation

Problem is to compute blood flow in the heart

Approach:

Modeled as an elastic structure in an incompressible fluid.

The immersed boundary method due to Peskin and McQueen.
20 years of development in model
Many applications other than the heart: blood clotting, inner ear, paper making, embryo growth, and others

Use a regularly spaced mesh (set of points) for evaluating the fluid

Uses

Current model can be used to design artificial heart valves
Can help in understanding the effects of disease (leaky valves)
Related projects look at the behavior of the heart during a heart attack
Ultimately: real-time clinical work


Heart Simulation Calculation

This involves solving the Navier-Stokes equations

64^3 was possible on the Cray YMP, but 128^3 is required for an accurate model (would have taken 3 years).
Done on a Cray C90 -- 100x faster and 100x more memory
Until recently, limited to vector machines

Needs more features:

Electrical model of the heart, and details of muscles (e.g., Chris Johnson, Andrew McCulloch)
Lungs, circulatory systems


Parallel Computing in Web Search

Functional parallelism: crawling, indexing, sorting
Parallelism between queries: multiple users
Finding information amidst junk
Preprocessing of the web data set to help find information

General themes of sifting through large, unstructured data sets:

when to put white socks on sale
what advertisements should you receive
finding medical problems in a community


Document Retrieval Computation

Approach:

Store the documents in a large (sparse) matrix
Use Latent Semantic Indexing (LSI), or related algorithms, to partition
Needs large sparse matrix-vector multiply

[Figure: sparse matrix with dimensions # documents ~= 10 M by # keywords ~= 100K]

Matrix is compressed
Random memory access
Scatter/gather vs. cache miss per 2 flops

Ten million documents in typical matrix.
Web storage increasing 2x every 5 months.
Similar ideas may apply to image retrieval.
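To make the sparse matrix-vector multiply concrete, here is a minimal sketch using the compressed sparse row (CSR) layout. CSR is one common compressed format; the slide does not say which format is meant, so that choice is an assumption. The function name csr_matvec and the tiny 3 x 4 matrix are hypothetical illustration data.

```python
def csr_matvec(values, col_idx, row_ptr, x):
    """Compute y = A @ x for a matrix A stored in compressed sparse row form.

    values  : nonzero entries, row by row
    col_idx : column index of each nonzero
    row_ptr : row_ptr[i]..row_ptr[i+1] delimits the nonzeros of row i
    """
    n_rows = len(row_ptr) - 1
    y = [0.0] * n_rows
    for row in range(n_rows):
        acc = 0.0
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # Two flops (multiply, add) per nonzero, plus an indirect,
            # effectively random read of x[col_idx[k]].
            acc += values[k] * x[col_idx[k]]
        y[row] = acc
    return y

# Toy document-by-keyword matrix (3 documents, 4 keywords):
# [[2, 0, 0, 1],
#  [0, 0, 4, 0],
#  [0, 1, 0, 0]]
values = [2.0, 1.0, 4.0, 1.0]
col_idx = [0, 3, 2, 1]
row_ptr = [0, 2, 3, 4]

query = [1.0, 0.0, 1.0, 1.0]   # keywords 0, 2 and 3 appear in the query
scores = csr_matvec(values, col_idx, row_ptr, query)
print(scores)
```

The indirect access through col_idx is the gather step: each pair of flops may trigger a cache miss, which is why this kernel tends to be limited by memory access rather than arithmetic.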


Transaction Processing

Parallelism is natural in relational operators: select, join, etc.
Many difficult issues: data partitioning, locking, threading.

Why are powerful (all?) computers parallel?


How to Run Applications Faster?

There are 3 ways to improve performance:

1. Work Harder
2. Work Smarter
3. Get Help

Computer Analogy

1. Use faster hardware: e.g., reduce the time per instruction (clock cycle).
2. Use optimized algorithms and techniques, customized hardware.
3. Use multiple computers to solve the problem: that is, increase the number of instructions executed per clock cycle.


Sequential Architecture Limitations

Sequential architectures are reaching physical limitations (speed of light, thermodynamics).
Hardware improvements like pipelining, superscalar execution, etc., are non-scalable and require sophisticated compiler technology.
Vector processing works well for certain kinds of problems.


What is Parallel Computing?

Parallel computing: using multiple processors in parallel to solve problems more quickly than with a single processor

Examples of parallel machines:

A cluster computer that contains multiple PCs combined together with a high speed network
A shared memory multiprocessor (SMP*) built by connecting multiple processors to a single memory system
A Chip Multi-Processor (CMP) that contains multiple processors (called cores) on a single chip

Concurrent execution comes from the desire for performance, unlike the inherent concurrency in a multi-user distributed system

* Technically, SMP stands for Symmetric Multi-Processor


Technology Trends: Microprocessor Capacity

Moore's Law

2X transistors/chip every 1.5 years

Microprocessors have become smaller, denser, and more powerful.

Gordon Moore (co-founder of Intel) predicted in 1965 that the transistor density of semiconductor chips would double roughly every 18 months.

Slide source: Jack Dongarra


Microprocessor Transistors and Clock Rate

[Figure, two panels. Left: growth in transistors per chip, 1,000 to 100,000,000 (log scale) versus year, 1970-2005, for the i4004, i8080, i8086, i80286, i80386, R2000, R3000, Pentium, and R10000. Right: increase in clock rate (MHz), 0.1 to 1000 (log scale) versus year, 1970-2000.]

Why bother with parallel programming? Just wait a year or two!


Limit #1: Power density

"Can soon put more transistors on a chip than can afford to turn on." -- Patterson '07

Scaling clock speed (business as usual) will not work

[Figure: power density (W/cm^2), 1 to 10000 (log scale) versus year, 1970-2010, for the 4004, 8008, 8080, 8085, 8086, 286, 386, 486, Pentium, and P6, with reference levels for a hot plate, a nuclear reactor, a rocket nozzle, and the Sun's surface.]

Source: Patrick Gelsinger, Intel

Grassroots Scalability - Motivations for Multicore

What are Scalable Systems?

Wikipedia Definition:

A scalable system is one that can easily be altered to accommodate changes in the number of users, resources and computing entities affected to it.

Scalability can be measured in three different dimensions:

Load scalability

A distributed system should make it easy for us to expand and contract its resource pool to accommodate heavier or lighter loads.

Geographic scalability

A geographically scalable system is one that maintains its usefulness and usability, regardless of how far apart its users or resources are.

Administrative scalability

No matter how many different organizations need to share a single distributed system, it should still be easy to use and manage.

Some loss of performance may occur in a system that allows itself to scale in one or more of these dimensions.


Dimensions of Scalability

Grassroots Scalability: Multicores, GPGPUs, etc.
HE HPC Systems
Enterprise Systems (Datacenters)
Wide-area Scalability


How fast can a serial computer be?

1 Tflop/s, 1 Tbyte sequential machine

[Figure: machine of radius r = 0.3 mm]

Consider the 1 Tflop/s sequential machine:

Data must travel some distance, r, to get from memory to CPU.
To get 1 data element per cycle, this means 10^12 times per second at the speed of light, c = 3 x 10^8 m/s. Thus r < c/10^12 = 0.3 mm.

Now put 1 Tbyte of storage in a 0.3 mm x 0.3 mm area:

Each word occupies about 3 square Angstroms, or the size of a small atom.
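The back-of-the-envelope bound above can be checked numerically. This sketch uses only the slide's constants; the variable names are illustrative. It assumes one storage element per byte, which gives about 9 square Angstroms per element, i.e., a square roughly 3 Angstroms on a side. That is the same atomic scale the slide refers to.

```python
# Speed-of-light bound for a 1 Tflop/s, 1 Tbyte sequential machine.
C = 3e8                 # speed of light in m/s (value used on the slide)
FETCHES_PER_S = 1e12    # one data element per cycle at 1 Tflop/s
STORAGE_BYTES = 1e12    # 1 Tbyte

# Farthest a signal can travel in one cycle: the memory must sit within r.
r_m = C / FETCHES_PER_S
r_mm = r_m * 1e3

# Pack all storage into an r x r square and see how much area each byte gets.
ANGSTROM_M = 1e-10
area_sq_angstrom = (r_m / ANGSTROM_M) ** 2
per_byte_sq_angstrom = area_sq_angstrom / STORAGE_BYTES
side_angstrom = per_byte_sq_angstrom ** 0.5

print(f"r = {r_mm:.2f} mm")
print(f"area per byte = {per_byte_sq_angstrom:.1f} square Angstroms")
print(f"side of that square = {side_angstrom:.1f} Angstroms")
```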


Impact of Device Shrinkage

What happens when the feature size shrinks by a factor of x?

Clock rate goes up by x (actually less than x, because of power consumption)
Transistors per unit area goes up by x^2
Die size also tends to increase (typically another factor of ~x)
Raw computing power of the chip goes up by ~x^4!
(of which x^3 is devoted either to parallelism or locality)
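As a small worked example of these scaling factors, the sketch below multiplies them out for a given shrink factor. The function name shrink_scaling is hypothetical, and the clock factor is the idealized upper bound x, not the smaller value seen in practice.

```python
def shrink_scaling(x):
    """Idealized scaling factors when feature size shrinks by a factor of x."""
    clock = x                            # upper bound; power limits it in practice
    density = x ** 2                     # transistors per unit area
    die_area = x                         # die size tends to grow by about x
    transistors = density * die_area     # x^3 more transistors per chip
    raw_power = clock * transistors      # ~x^4 overall
    return {"clock": clock, "transistors": transistors, "raw_power": raw_power}

factors = shrink_scaling(2)
print(factors)
```

Only the clock factor speeds up a single instruction stream automatically; the x^3 growth in transistor count helps only if it is spent on parallelism or on locality (for example, larger caches).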


Grassroots Scalability - Multicore is here to stay!

Grassroots Scalability - The Memory Bottleneck

Grassroots Scalability - Hiding Latency

HPC Performance Trends


Top of the Top500


HPC Hybrid Architectures


Architecture of a Typical HE HPC System


HPC Scalability Issues

Parallelism Model/Paradigm

SIMD, SPMD, MIMD, Vector
DAG/Event Driven
Hierarchical/Multi-level

Runtime Management

Load-balancing
Adaptation
Fault-Tolerance
Data management

Locality! Locality! Locality!

Memory, Cache
Communication


Principles of Parallel Computing

Parallelism and Amdahl's Law
Finding and exploiting granularity
Preserving data locality
Load balancing
Coordination and synchronization
Performance modeling

All of these things make parallel programming more difficult than sequential programming.
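Amdahl's Law, the first principle listed above, bounds the speedup of a program in which a fraction s of the work is inherently serial: speedup(p) = 1 / (s + (1 - s)/p). The sketch below evaluates that formula; the 10% serial fraction is an arbitrary illustrative value, not a figure from the lecture.

```python
def amdahl_speedup(serial_fraction, processors):
    """Upper bound on speedup when `serial_fraction` of the work cannot be parallelized."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / processors)

SERIAL_FRACTION = 0.10   # assumption for illustration only

for p in (1, 10, 100, 1000):
    print(f"{p:5d} processors -> speedup {amdahl_speedup(SERIAL_FRACTION, p):.2f}")

# No matter how many processors are added, speedup stays below 1 / serial_fraction.
limit = 1.0 / SERIAL_FRACTION
```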


Overhead of Parallelism

Given enough parallel work, this is the most significant barrier to getting desired speedup.

Parallelism overheads include:

cost of starting a thread or process
cost of communicating shared data
cost of synchronizing
extra (redundant) computation

Each of these can be in the range of milliseconds (= millions of flops) on some systems

Tradeoff: Algorithm needs sufficiently large units of work to run fast in parallel (i.e., large granularity), but not so large that there is not enough parallel work.
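The granularity tradeoff can be illustrated with a deliberately simple cost model. Everything in this sketch is an assumption made for illustration: equal-sized tasks, a fixed 1 ms overhead per task, 1 Gflop/s per processor, and tasks executed in rounds of one task per processor. It is not a model taken from the lecture.

```python
import math

def parallel_time(total_flops, n_tasks, processors,
                  flop_rate=1e9, overhead_s=1e-3):
    """Estimated run time when work is split into n_tasks equal tasks.

    Each task pays a fixed overhead (start-up, communication, synchronization).
    """
    rounds = math.ceil(n_tasks / processors)
    per_task_s = total_flops / n_tasks / flop_rate + overhead_s
    return rounds * per_task_s

TOTAL_FLOPS = 1e10   # 10 seconds of work on one 1 Gflop/s processor
P = 16

# A 1 ms overhead costs as much as a million flops at 1 Gflop/s.
flops_lost_per_overhead = 1e-3 * 1e9

too_coarse = parallel_time(TOTAL_FLOPS, 4, P)          # only 4 tasks: 12 processors idle
balanced = parallel_time(TOTAL_FLOPS, 16, P)           # one large task per processor
too_fine = parallel_time(TOTAL_FLOPS, 1_000_000, P)    # overhead dominates each tiny task

print(f"4 tasks: {too_coarse:.3f} s, 16 tasks: {balanced:.3f} s, "
      f"1e6 tasks: {too_fine:.3f} s")
```

Under these assumptions the finest decomposition is slower than running serially, because each task does only 10,000 flops of useful work but pays for the equivalent of a million.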


Locality and Parallelism

[Figure: conventional storage hierarchy, replicated per processor: Proc, Cache, L2 Cache, L3 Cache, Memory, with potential interconnects between the hierarchies]

Large memories are slow, fast memories are small.
Storage hierarchies are large and fast on average.
Parallel processors, collectively, have large, fast memories -- the slow accesses to remote data we call "communication".
Algorithm should do most work on local data.


Load Imbalance

Load imbalance is the time that some processors in the system are idle due to

insufficient parallelism (during that phase).
unequal size tasks.

Examples of the latter

adapting to "interesting parts of a domain".
tree-structured computations.
fundamentally unstructured problems.

Algorithm needs to balance load

but techniques to balance load often reduce locality
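One way to quantify load imbalance as defined above is to add up the time each processor sits idle while waiting for the slowest one. The sketch below does that for a hypothetical set of per-processor work times; the function name and the sample numbers are illustrative only.

```python
def load_imbalance(work_seconds):
    """Return (finish_time, total_idle_time, efficiency) for one parallel phase.

    work_seconds[i] is the busy time of processor i; the phase ends when
    the most heavily loaded processor finishes.
    """
    finish = max(work_seconds)
    idle = sum(finish - w for w in work_seconds)
    mean = sum(work_seconds) / len(work_seconds)
    return finish, idle, mean / finish

# Three processors get 10 s of work each, one gets 30 s (unequal size tasks).
finish, idle, efficiency = load_imbalance([10.0, 10.0, 10.0, 30.0])
print(f"phase time {finish} s, idle time {idle} s, efficiency {efficiency:.0%}")
```

With perfectly balanced work (15 s on each processor) the same total work would finish in half the time.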


Automatic Parallelism in Modern Machines

Bit level parallelism: within floating point operations, etc.
Instruction level parallelism (ILP): multiple instructions execute per clock cycle.
Memory system parallelism: overlap of memory operations with computation.
OS parallelism: multiple jobs run in parallel on commodity SMPs.

There are limitations to all of these!

Thus to achieve high performance, the programmer needs to identify, schedule and coordinate parallel tasks and data.


Enterprise Scalability - Datacenter is new "server"

"Program" == Web search, email, map/GIS, ...
"Computer" == 1000s of computers, storage, network
Warehouse-sized facilities and workloads
New datacenter ideas (2007-2008): truck container (Sun), floating (Google), datacenter-in-a-tent (Microsoft)
How to enable innovation in new services without first building and capitalizing a large company?

photos: Sun Microsystems & datacenterknowledge.com


Google Oregon Datacenter


Source: Harpers (Feb, 2008)


A 50-rack datacenter

x 64 blades per rack
x 2 sockets per blade
x 32 cores per socket
x 10 VMs per core
x 10 Java objects per VM

= 20,480,000 managed objects
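The total above is simply the product of the per-level counts, which a short check confirms. The dictionary keys are descriptive labels chosen for this sketch.

```python
from math import prod

# Per-level multipliers for the 50-rack datacenter, as listed on the slide.
levels = {
    "racks": 50,
    "blades per rack": 64,
    "sockets per blade": 2,
    "cores per socket": 32,
    "VMs per core": 10,
    "Java objects per VM": 10,
}

managed_objects = prod(levels.values())
print(f"{managed_objects:,} managed objects")
```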


Enterprise Application Scalability - Problem

How do you scale up applications?

Run jobs processing 100s of terabytes of data
Takes 11 days to read on 1 computer

Need lots of cheap computers

Fixes speed problem (15 minutes on 1000 computers), but...

Reliability problems

In large clusters, computers fail every day
Cluster size is not fixed

Need common infrastructure

Must be efficient and reliable
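The "11 days versus 15 minutes" figures follow from dividing the read evenly across machines. The sketch below checks that, and also works out the single-machine read rate implied if the data set is taken to be exactly 100 TB. That size is an assumption, since the slide only says "100s of terabytes".

```python
SECONDS_PER_DAY = 24 * 3600

single_machine_s = 11 * SECONDS_PER_DAY       # 11 days to read on 1 computer
machines = 1000

# Perfect speedup: each of the 1000 machines reads 1/1000 of the data.
parallel_minutes = single_machine_s / machines / 60

# Assumption for illustration: the data set is exactly 100 TB (1e14 bytes).
ASSUMED_DATA_BYTES = 100e12
implied_mb_per_s = ASSUMED_DATA_BYTES / single_machine_s / 1e6

print(f"{parallel_minutes:.1f} minutes on {machines} machines")
print(f"implied read rate: {implied_mb_per_s:.0f} MB/s per machine")
```

The estimate ignores coordination overhead and machine failures, which is exactly the gap that the common infrastructure has to fill.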


Enterprise Application Scalability - Solution

Open Source Apache Project

Hadoop Core includes:

Distributed File System - distributes data
Map/Reduce - distributes application

Written in Java

Runs on

Linux, Mac OS/X, Windows, and Solaris
Commodity hardware
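The Map/Reduce idea can be sketched in a few lines with the classic word-count example. This is a single-process illustration of the programming model only. It does not use the Hadoop API, and the function names (map_phase, shuffle, reduce_phase, word_count) are hypothetical.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)

def word_count(documents):
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())

counts = word_count(["the grid and the cloud", "the cloud"])
print(counts)
```

Because each map call looks at one record and each reduce call at one key, a framework is free to run them on different machines, close to where the distributed file system stores the data.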


Hadoop clusters

~20,000 machines running Hadoop @ Yahoo!
Several petabytes of user data (compressed, unreplicated)
Hundreds of thousands of jobs every month


Conclusion

All processors will be multi-core
All computers will be massively parallel
All programmers will be parallel programmers
All programs will be parallel programs

The key challenge is how to enable mainstream developers to scale their applications on machines!

Algorithms: scalable, asynchronous, fault-tolerant
Programming models and systems
Adaptive runtime management
Data management
System support


Wide-Area Scalability: The Grid Computing Paradigm

Grid Computing paradigm is an emerging way of thinking about a global infrastructure for:

Sharing data
Distributing computation
Coordinating problem solving processes
Accessing remote instrumentation

[Figure: a user connected through the Grid infrastructure to storage systems, instruments, and computational power]

Ack: F. Scibilia


Key Enablers of Grid Computing - Exponentials

Network vs. computer performance

Computer speed doubles every 18 months
Storage density doubles every 12 months
Network speed doubles every 9 months
Difference = order of magnitude per 5 years

1986 to 2000

Computers: x 500
Networks: x 340,000

2001 to 2010

Computers: x 60
Networks: x 4000

Scientific American (Jan-2001)

"When the network is as fast as the computer's internal links, the machine disintegrates across the net into a set of special purpose appliances" (George Gilder)

Ack: I. Foster
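The "order of magnitude per 5 years" claim follows directly from the doubling periods listed on this slide. A quantity that doubles every d months grows by 2^(60/d) over five years, as the sketch below shows (the function name growth is illustrative).

```python
def growth(doubling_months, years):
    """Growth factor after `years` for a quantity that doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)

YEARS = 5
computer = growth(18, YEARS)   # about 10x
storage = growth(12, YEARS)    # 32x
network = growth(9, YEARS)     # about 100x

# How much faster networks improve than computers over the same period.
gap = network / computer

print(f"computer x{computer:.1f}, storage x{storage:.0f}, "
      f"network x{network:.1f}, gap x{gap:.1f}")
```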


The Grid Concept

Resource sharing & coordinated computational problem solving in dynamic, multi-institutional virtual organizations

1. Enable integration of distributed resources
2. Using general-purpose protocols & infrastructure
3. To achieve better-than-best-effort service

Ack: I. Foster

A Cloudy Weather Forecast

A Cloudy Outlook

About 3.2% of U.S. small businesses, or about 230,000 businesses, use cloud services.
Another 3.6%, or 260,000, plan to add cloud services in the next 12 months.
Small-business spending on cloud services will increase by 36.2% in 2010 over a year ago, to $2.4 billion from $1.7 billion.

(Source: IDC, 2010)

Based on a slide by R. Wolski, UCSB


Defining Cloud Computing

Wikipedia: Cloud computing is Internet-based computing, whereby shared resources, software and information are provided to computers and other devices on-demand, like a public utility.

NIST: A cloud is a computing capability that provides an abstraction between the computing resource and its underlying technical architecture (e.g., servers, storage, networks), enabling convenient, on-demand network access to a shared pool of configurable computing resources that can be rapidly provisioned and released with minimal management effort or service provider interaction.

SLAs
Web Services
Virtualization


The Cloud Evolution

[Figure: reach versus time (1970-2020), showing the Internet (connectivity), the Web (information & e-commerce), and the Cloud (virtualized services)]


The Cloud Stack

Software as a Service (SaaS)
Platform as a Service (PaaS)
Infrastructure as a Service (IaaS)
Physical Resources

Private Cloud
Public Cloud
Hybrid Clouds


The Lure ...

A seductive abstraction: unlimited resources, always on, always accessible!
Economies of scale
Multiple entry points

*aaS: SaaS, PaaS, IaaS, HaaS

IT outsourcing

Transform IT from being a capital investment to a utility
TCO, capital costs, operation costs

Potential for on-demand scale-up, scale-down, scale-out
Pay as you go, for what you use!
...


The Cloud Today - Examples


Source: Wikipedia

and more ..


Everything Cloud!


Clouds @ the Peak of Inflated Expectations

33

Das könnte Ihnen auch gefallen

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5782)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (587)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (72)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (119)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- Assignment 4 Client ServerDokument5 SeitenAssignment 4 Client ServerClip-part CollectionsNoch keine Bewertungen

- Procedure-0103 Hse MeetingsDokument3 SeitenProcedure-0103 Hse MeetingsIsmail Hamzah Azmatkhan Al-husainiNoch keine Bewertungen

- Computer Network - CS610 Power Point Slides Lecture 19Dokument21 SeitenComputer Network - CS610 Power Point Slides Lecture 19Ibrahim ChoudaryNoch keine Bewertungen

- System Software Unit-IIDokument21 SeitenSystem Software Unit-IIpalanichelvam90% (10)

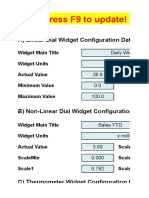

- Excel Dashboard WidgetsDokument47 SeitenExcel Dashboard WidgetskhincowNoch keine Bewertungen

- Clash DetectionDokument9 SeitenClash DetectionprinccharlesNoch keine Bewertungen

- E Business TaxDokument15 SeitenE Business Taxkforkota100% (2)

- BSC It - Sem IV - Core JavaDokument141 SeitenBSC It - Sem IV - Core JavaValia Centre of ExcellenceNoch keine Bewertungen

- Pub - Logic Timing Simulation and The Degradation Delay PDFDokument286 SeitenPub - Logic Timing Simulation and The Degradation Delay PDFcarlosgnNoch keine Bewertungen

- Roco 10772 Route CNT RLDokument2 SeitenRoco 10772 Route CNT RLMonica Monica MonicaNoch keine Bewertungen

- Everyday Math AlgorithmsDokument26 SeitenEveryday Math Algorithmsapi-28679754Noch keine Bewertungen

- Prototyping With BUILD 2Dokument66 SeitenPrototyping With BUILD 2Erickson CunzaNoch keine Bewertungen

- Compaq Armada M700 Maintenance GuideDokument137 SeitenCompaq Armada M700 Maintenance GuidetekayoNoch keine Bewertungen

- 2018-2019 - Recipe Ratio and Rate Project SheetDokument2 Seiten2018-2019 - Recipe Ratio and Rate Project SheetKarimaNoch keine Bewertungen

- Windows Embedded Standard 7 Technical OverviewDokument6 SeitenWindows Embedded Standard 7 Technical OverviewSum Ting WongNoch keine Bewertungen

- D78846GC10 TocDokument18 SeitenD78846GC10 TocRatneshKumar0% (1)

- v14 KpisDokument127 Seitenv14 KpisFrans RapetsoaNoch keine Bewertungen

- UPDATED GUIDELINES FOR BEEd STUDENTS IN RESEARCH 1Dokument2 SeitenUPDATED GUIDELINES FOR BEEd STUDENTS IN RESEARCH 1sheraldene eleccionNoch keine Bewertungen

- RT ToolBox2 Users ManualDokument229 SeitenRT ToolBox2 Users ManualJudas Ramirez AmavizcaNoch keine Bewertungen

- Kyd S Excel Shortcut KeysDokument15 SeitenKyd S Excel Shortcut KeysSantosh DasNoch keine Bewertungen

- Dtsu X9 37-2003Dokument156 SeitenDtsu X9 37-2003enigmathinking100% (1)

- IEEE CS BDC Summer Symposium 2023 Feature Selection Breast Cancer DetectionDokument1 SeiteIEEE CS BDC Summer Symposium 2023 Feature Selection Breast Cancer DetectionrumanaNoch keine Bewertungen

- PIANO 5.3: User GuideDokument19 SeitenPIANO 5.3: User GuideJesterbean100% (2)

- Open Science: Principles, Context, Tools and ExperiencesDokument55 SeitenOpen Science: Principles, Context, Tools and ExperiencesDavid ReinsteinNoch keine Bewertungen

- Raja.A: Mobile: +91 9965365941 EmailDokument3 SeitenRaja.A: Mobile: +91 9965365941 Emailprabha5080Noch keine Bewertungen

- Sri Kanda Guru KavachamDokument9 SeitenSri Kanda Guru KavachamAnonymous pqxAPhENoch keine Bewertungen

- MULTIPLE CHOICE. Choose The One Alternative That Best Completes The Statement or Answers The QuestionDokument12 SeitenMULTIPLE CHOICE. Choose The One Alternative That Best Completes The Statement or Answers The QuestionAndrew WegierskiNoch keine Bewertungen

- Perceptions of AMWLSAI Employees on E-Commerce Lending OperationsDokument17 SeitenPerceptions of AMWLSAI Employees on E-Commerce Lending OperationsgkzunigaNoch keine Bewertungen

- 04-Algorithms and FlowchartsDokument27 Seiten04-Algorithms and Flowchartsapi-23733597950% (2)

- Company Profile::: M-01, Falaknaz View, Main Shahrah-E-Faisal, Near Wireless Gate Karachi, PakistanDokument3 SeitenCompany Profile::: M-01, Falaknaz View, Main Shahrah-E-Faisal, Near Wireless Gate Karachi, PakistanZfNoch keine Bewertungen