New Microsoft Office Word Document

Uploaded by trai_phieulang0101

INTRODUCTION

The rapid evolution of data networks toward convergence with other services, such as voice and video, has significantly increased the amount of information they must carry. Likewise, business needs have encouraged the proliferation of virtual private networks and collaborative work environments with global reach. All of this information must be protected against leaks, while the computers that host or manage services must be protected against unauthorized access and misuse. Every individual computer also runs the risk of falling victim to an unexpected virus or worm, or to an intrusion aimed directly at its operating system.

In a world where information is power, it is vital to deploy highly available protection, detection and reaction systems that can meet outside threats as effectively as possible and with minimal intervention from a human administrator. Faced with this challenge, one can find a wide range of solutions on the market. They offer different features, but most lack one of the most desirable: adaptability. Many of the products work from rules that must be updated each time a new attack, or a variant of an existing one, appears. Some use statistical methods to determine how far a user's behavior at a given moment deviates from that user's usual behavior. The common shortcoming remains the heavy administrative burden caused by constant updates and by the false alarms these programs generate when they fail to adapt.

In response, answers have emerged from the field of Artificial Intelligence, which essentially proposes identifying not just an attack but the whole pattern of behavior associated with it. This is the case of Neural Networks, which do not require a conventional detection algorithm, apart from a filtering step that makes the information intelligible to the network inputs (also known as input encoding).

Despite being a technology with many years of work behind it, Artificial Intelligence has not significantly penetrated the computer security market, perhaps because the information at stake is too important to entrust to the judgment of a machine alone, even one that is constantly monitored by a human. Moreover, the learning ability of an intelligent system is very limited compared with that of humans, and generalizing from a set of particular cases is not simple when the problem is very complex.

For this work we decided to use Snort, a free tool released under the GPL. There are several reasons: it is currently one of the most widely used free IDSs in the world (Sourcefire, the company that manages the project, attracted an acquisition bid from Check Point, a leading vendor of security appliances and VPNs), it is open source, and it has a modular architecture well suited to developing plugin-like components without having to modify its core internals. Open source developer communities are currently gaining remarkable importance, both for the philosophy they uphold (the Ubuntu Linux distribution is one successful example) and for the new form of collaborative work they have fostered. Free Software is likewise an excellent option for developing countries, which need to implement suitable solutions at lower cost and to acquire new knowledge in order to develop new products and open markets.

This project does not present a radically innovative solution within the state of the art of Intrusion Detection Systems (IDS) that use Artificial Intelligence (AI), but it is the first attempt at the Universidad del Cauca to apply these technologies to the problem of information security in telecommunications, following the philosophy of Free Software.

It is not intended to be an exhaustive study of every AI technology, but to take one of them and present comparative results against an existing solution. Likewise, since there is a large number of attacks and variants, we decided to pick just one. This makes for a more robust solution: the aim is not to tackle many problems at once and probably solve none of them effectively, but to demonstrate with a low-complexity attack that acceptable reliability margins can be achieved.

This work approaches the problem of intrusion detection from the philosophy of Free Software and the use of Artificial Intelligence, with the aim of generating new knowledge in the area of Computer Security and enriching the body of experience of the development community, both in our region and globally.

THEORETICAL FRAMEWORK

ARTIFICIAL INTELLIGENCE

1.1.1 History

The science of AI (Artificial Intelligence) is about forty years old, dating from a conference held at Dartmouth College (New Hampshire, United States) in 1956. During these forty years, public perception of AI has not always matched reality. In the early years, the enthusiasm of scientists and of the popular press tended to overestimate the capabilities of AI systems. Early successes in game playing, mathematical theorem proving, common-sense reasoning and college-level mathematics seemed to promise rapid progress toward practical and useful machine intelligence. During this time, speech recognition, natural language understanding and optical character recognition began as specialties in AI research laboratories.

However, the early successes were followed by the slow realization that what was hard for people and easy for computers was more than offset by the things that were easy for people but almost impossible for computers. The early promises were never fully realized, and AI research and the term artificial intelligence came to be associated with failed technology and science fiction.

Throughout its history, AI has focused on problems that lay just beyond what state-of-the-art computers could do at the time [Rich & Knight 1991]. As computer science and computer systems have evolved to higher levels of functionality, the areas that fall within the domain of AI research have also changed.

After 40 years of work, three main phases in the development of AI research can be identified [Bigus 2001]. In the early years, much of the work dealt with formal problems that were structured and had well-defined boundaries. This included work on mathematics-related skills such as theorem proving, geometry and calculus, and on games such as chess. In this first phase, the emphasis was on creating general-purpose "thinking machines" able to solve different kinds of problems. These systems tended to include sophisticated reasoning and search techniques.

A second phase began with the recognition that the most successful AI projects focused on narrow problem domains and usually encoded a great deal of specific knowledge about the problem to be solved. This method of adding domain-specific knowledge to a more general reasoning system led to the first commercially successful AI: expert systems. Rule-based expert systems were developed for tasks that included chemical analysis, configuring computer systems and diagnosing medical conditions in patients. They drew on research in knowledge representation, knowledge engineering and advanced reasoning techniques, and proved that AI could provide real value in commercial applications.

We are now in a third phase of AI applications. Since the late 1980s, much of the AI community has been working on the difficult problems of machine vision and speech, natural language understanding and translation, common-sense reasoning, and robot control. A branch of AI known as connectionism regained popularity and expanded the range of commercial applications through the use of neural networks for data mining, modeling and adaptive control. Biologically inspired methods such as genetic algorithms and alternative logic systems such as fuzzy logic have combined to re-energize the field of AI.

1.1.2 What is meant by intelligence?

When talking about artificial intelligence, the question that often arises is: what is meant by intelligence? Does it mean that the program acts like a human, thinks like a human, or acts or thinks rationally? While there are as many answers as there are researchers working on AI, the position in [Bigus 2001] can be summarized as follows: an intelligent program acts rationally, doing the things we would do, but not necessarily in the same way we would. Such programs would not pass the Turing test, proposed by Alan Turing in 1950 as a test for judging computational intelligence. But they do useful things for us: they make us more productive, allow us to do more work in less time, and let us see more interesting data and less useless data.

1.1.3 Classification of AI

It could be said that every author who has written on this subject has proposed a different classification of AI. In addition, authors do not agree on which technologies should be considered artificial intelligence and which should not.

According to [Martin & Sanz 2001], AI can be divided into Classical Artificial Intelligence on the one hand, and Computational Intelligence and Soft Computing on the other. Classical AI corresponds to the first two phases of AI research described by [Bigus 2001]. It is carried out with conventional algorithms, and its greatest development is the expert systems mentioned above.

For their part, Computational Intelligence and Soft Computing technologies seek more adaptive solutions to problems by imitating natural systems that have emerged after years of evolution, that is, by imitating the behavior or structures of living beings endowed with intelligence. Among these technologies are Artificial Neural Networks (ANN), Fuzzy Logic Systems and Genetic Algorithms.

According to [Bigus 2001], two schools can be identified within AI: the symbol processing school and the neural network school. In symbol processing, intelligence is considered to be the ability to recognize situations or cases, and intelligent behavior is held to be achievable by manipulating symbols. This is one of the most important principles of AI techniques. Symbols are signs that represent real-world objects and ideas, and they can be represented inside a computer by strings or numbers. In this method, a problem must be represented by a collection of symbols, and a suitable algorithm must then be developed to process those symbols.

Using symbols, researchers have built intelligent systems for pattern recognition, reasoning, learning and planning [Russell & Norvig 1995]. History has shown that symbols may be appropriate for reasoning and planning, but that pattern recognition and learning may be better addressed by other techniques.

Symbol processing techniques represent a relatively high level of the cognitive process. From the perspective of cognitive science, symbol processing corresponds to conscious thought, where knowledge is represented explicitly and can itself be examined and manipulated.

In neural networks and connectionism, intelligence is reflected in the ability to learn from a few examples and then generalize and apply that knowledge to new situations. Neural networks have little to do with symbol processing, which is inspired by formal mathematical logic, and more to do with how human, or natural, intelligence arises. Humans have neural networks in their heads, consisting of roughly a hundred billion brain cells called neurons, connected by synapses that act as adaptive switching systems between neurons. Artificial neural networks are based on the massively parallel architecture found in the brain. They process information not by manipulating symbols but by processing large amounts of barely refined data in parallel.

Compared with symbol processing systems, neural networks perform relatively low-level cognitive functions. The knowledge they gain through the learning process is stored in the connection weights and is not readily available for inspection or manipulation. However, the ability of neural networks to learn from their environment and adapt to it plays a crucial role in intelligent software systems. From the perspective of cognitive science, neural networks are closer to the pattern recognition and sensory processing carried out by the unconscious levels of the human mind. Artificial neural networks are discussed in detail below.

Whereas AI research was once dominated by symbol processing techniques, there is now a more balanced view in which the strengths of neural networks are used to address the shortcomings of symbol processing, and both are considered necessary to create intelligent applications.

1.1.4 Technologies encompassed by AI

Neural networks are excluded from this section, as they are discussed in a separate section.

Search algorithms

The first major success in Artificial Intelligence research was solving problems with search techniques. In this branch of AI, problems are represented as states and then solved using either simple techniques such as brute-force search or more sophisticated heuristic search methods.

A problem can be represented by a method known as the state space. To generate the state space of a problem, one starts by defining an initial state, the point from which the search begins. Movement between states is carried out with a set of operators, and a goal test determines when the target state has been reached. The cost of the path followed in moving between states is given by the cost function, and a cost can be associated with each state.

Effective search algorithms must move systematically through the state space. Brute-force algorithms traverse the space blindly, while heuristic algorithms use information or feedback about the problem to direct the search.

Several terms are used to characterize search algorithms. An algorithm is optimal if it guarantees the best solution out of the set of possible solutions. It is complete if it always finds a solution when one exists. Time complexity describes how long the algorithm takes to find the solution, and space complexity describes how much memory the search requires.

The well-known brute-force search algorithms include breadth-first search, depth-first search and iterative-deepening search. The heuristic algorithms include best-first search, the A* algorithm, constraint satisfaction search and genetic algorithms.
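The state-space vocabulary above (initial state, operators, goal test) can be illustrated with a minimal sketch. The toy problem, the operator names `add1` and `double`, and the heuristic are assumptions made only for this example; they do not come from the original work. The sketch contrasts a brute-force method (breadth-first search) with a heuristic one (greedy best-first search).

```python
from collections import deque
import heapq

# Hypothetical toy problem: reach a goal number from an initial number
# using two operators. States are integers.
OPERATORS = {"add1": lambda s: s + 1, "double": lambda s: s * 2}


def successors(state, limit):
    """Apply every operator and yield (operator name, next state)."""
    for name, op in OPERATORS.items():
        nxt = op(state)
        if nxt <= limit:  # keep the state space finite
            yield name, nxt


def breadth_first_search(start, goal):
    """Brute-force search: explores states level by level, ignoring the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if state == goal:  # goal test
            return path
        for name, nxt in successors(state, limit=2 * goal):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, path + [name]))
    return None


def best_first_search(start, goal):
    """Heuristic search: always expands the state that looks closest to the goal."""
    def heuristic(state):
        return abs(goal - state)  # assumed heuristic: distance to the goal

    frontier = [(heuristic(start), start, [])]
    visited = {start}
    while frontier:
        _, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        for name, nxt in successors(state, limit=2 * goal):
            if nxt not in visited:
                visited.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt), nxt, path + [name]))
    return None


if __name__ == "__main__":
    print(breadth_first_search(1, 10))
    print(best_first_search(1, 10))
```

With unit step costs, breadth-first search returns a shortest operator sequence, whereas the greedy heuristic version may return a longer one while typically expanding fewer states.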

Genetic algorithms rely on a metaphor with a biological process. In this method, problem states are represented by binary strings called chromosomes, which are manipulated by genetic operators such as crossover and mutation. These algorithms carry out the search in parallel, with the population size representing the degree of parallelism.
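As an illustration of binary chromosomes, crossover and mutation, the following is a minimal sketch of a genetic algorithm. The fitness function (counting the 1 bits), the selection scheme and every parameter value are assumptions chosen for brevity, not the method used in this work.

```python
import random


def fitness(chromosome):
    """Assumed toy objective: the number of 1 bits in the chromosome."""
    return sum(chromosome)


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in chromosome]


def evolve(length=20, population_size=30, generations=40, rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: population_size // 2]  # truncation selection
        children = [population[0]]  # elitism: the best individual survives
        while len(children) < population_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rate, rng))
        population = children
    return max(population, key=fitness), history


if __name__ == "__main__":
    best, history = evolve()
    print(fitness(best), history[0], history[-1])
```

The whole population is evaluated in every generation, which is the sense in which the population size reflects the degree of parallelism of the search.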

Expert Systems

These are systems in which the knowledge of experts on a given subject is captured and stored in a knowledge base in order to solve problems by trying to simulate the human expert.

Expert systems can be classified into two main types according to the nature of the problems they are designed for: deterministic and stochastic. Expert systems that address deterministic problems are known as rule-based systems, because they draw their conclusions from a set of rules using a logical reasoning mechanism [Castillo et al. 1997].
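A rule-based system of this kind can be sketched with forward chaining, one common logical reasoning mechanism. The rules and fact names below are hypothetical and merely borrow the intrusion detection setting of this work. The rules are kept as data, separate from the function that processes them.

```python
def forward_chain(facts, rules):
    """Fire rules until no new conclusion can be derived.

    `rules` is a list of (premises, conclusion) pairs, where `premises`
    is a frozenset of facts that must all be known for the rule to fire.
    Returns the final set of known facts and the list of fired rules,
    which can serve as a simple explanation of the result.
    """
    known = set(facts)
    fired = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                fired.append((premises, conclusion))
                changed = True
    return known, fired


# Hypothetical knowledge base, for illustration only.
RULES = [
    (frozenset({"many_connection_attempts", "many_distinct_ports"}), "port_scan"),
    (frozenset({"port_scan", "source_is_external"}), "raise_alert"),
]

if __name__ == "__main__":
    observed = {"many_connection_attempts", "many_distinct_ports",
                "source_is_external"}
    known, fired = forward_chain(observed, RULES)
    print("raise_alert" in known)
    for premises, conclusion in fired:
        print(sorted(premises), "->", conclusion)
```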

Systems that address stochastic problems, that is, problems involving uncertain situations, need some means of measuring uncertainty. Certainty factors and probability are two such measures. Expert systems that use probability as the measure of uncertainty are known as probabilistic expert systems. They use a joint probability distribution over a group of variables to describe the dependency relationships between them, and draw conclusions using well-known formulas from probability theory. To define the joint probability distribution more efficiently, probabilistic network models are used, among them Markov networks and Bayesian networks.
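The following minimal sketch shows how a conclusion can be drawn from a joint probability distribution. It assumes a two-variable Bayesian network (attack, then alarm) whose probabilities are invented purely for illustration. The joint distribution is built from the network factorization, and the conclusion follows from Bayes' rule.

```python
# Hypothetical probabilities, chosen only for illustration.
P_ATTACK = 0.01                             # prior P(attack)
P_ALARM_GIVEN = {True: 0.90, False: 0.05}   # P(alarm | attack), P(alarm | no attack)


def joint(attack, alarm):
    """Joint probability P(attack, alarm) = P(attack) * P(alarm | attack)."""
    p_attack = P_ATTACK if attack else 1.0 - P_ATTACK
    p_alarm = P_ALARM_GIVEN[attack] if alarm else 1.0 - P_ALARM_GIVEN[attack]
    return p_attack * p_alarm


def posterior_attack(alarm):
    """P(attack | alarm), obtained by conditioning the joint distribution."""
    evidence = sum(joint(attack, alarm) for attack in (True, False))
    return joint(True, alarm) / evidence


if __name__ == "__main__":
    print(round(posterior_attack(True), 3))
```

With these assumed numbers, an alarm raises the probability of an attack from 0.01 to about 0.154, which shows why a low prior keeps the false alarm share high even with a sensitive detector.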

A key feature of expert systems is the separation between knowledge (rules, facts) and its processing. In addition, they have a user interface and an explanation component.

Below is a brief description of each component of an Expert System:

The Knowledge Base contains the facts and the experience of experts in a particular domain. The Inference Mechanism lets the expert system simulate the problem-solving strategy of an expert. The Explanation Component explains to the user the solution strategy that was found and the reasons for the decisions taken. The User Interface allows queries to be made in language as natural as possible. The Acquisition Component supports the structuring and entry of knowledge into the knowledge base.

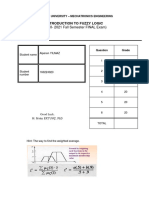

Fuzzy logic

Fuzzy logic has become widely known for the variety of its applications, which range from the control of complex industrial processes and the construction of household and entertainment electronics to diagnostic systems and the design of artificial devices for automatic deduction.

The concept of fuzzy logic is generally considered to have appeared in 1965 at the University of California, Berkeley, introduced by Lotfi A. Zadeh [Zadeh 1988].

Fuzzy logic is essentially a multivalued logic that extends classical logic. The latter assigns statements only the values true or false, whereas fuzzy logic successfully models much of "natural" reasoning. Human reasoning uses truth values that are not necessarily so "deterministic". For example, on stating that "a vehicle is moving fast", one is tempted to grade how "fast" the vehicle is actually moving. One could say that the vehicle is moving "moderately fast" or "very fast" [Morales 2002].

Solving problems with fuzzy logic requires the definition of fuzzy sets. Unlike conventional sets, in which an element simply "belongs" or "does not belong", the elements of a fuzzy set have a degree of membership: an element may belong only partially to a set, or belong to some extent to several sets. The degree of membership of an element in a fuzzy set is a number between 0 and 1, where 0 means that it does not belong to the set at all and 1 that it belongs entirely [Martin & Sanz 2001].
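The idea of a degree of membership can be sketched with trapezoidal membership functions over a speed variable. The set names and the breakpoints in km/h are assumptions made for this example and are not taken from the figure in the original work.

```python
def trapezoid(x, a, b, c, d):
    """Membership that rises on [a, b], equals 1 on [b, c] and falls on [c, d]."""
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


# Hypothetical fuzzy sets over speed in km/h.
FUZZY_SETS = {
    "slow": lambda v: trapezoid(v, -1.0, 0.0, 30.0, 60.0),
    "medium": lambda v: trapezoid(v, 30.0, 60.0, 60.0, 90.0),
    "fast": lambda v: trapezoid(v, 60.0, 90.0, 200.0, 201.0),
}


def memberships(speed):
    """Degree of membership of `speed` in every fuzzy set."""
    return {name: mu(speed) for name, mu in FUZZY_SETS.items()}


if __name__ == "__main__":
    print(memberships(45.0))
```

With these assumed breakpoints, a speed of 45 km/h belongs to "slow" and to "medium" with degree 0.5 each, and not at all to "fast".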

For example, for the vehicle, fuzzy sets could be defined over the speed variable with the membership functions shown in Figure 2.1.

Figure 2.1. Fuzzy sets.

With these sets defined, if-then control rules such as the following can be stated:

if "speed" is "slow", then increase "speed"

Fuzzy logic is an adaptive technology, since the membership functions of the different sets can be adjusted according to a given criterion, even by means of machine learning techniques.
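One possible way to evaluate such if-then rules is sketched below. It assumes three rules with constant consequents combined by a weighted average (a zero-order Sugeno-style scheme, chosen here for brevity). The membership breakpoints and the acceleration commands are hypothetical, and they are exactly the kind of parameters that could be adjusted to adapt the controller.

```python
def slow(speed):
    """1 at or below 30 km/h, falling linearly to 0 at 60 km/h (assumed)."""
    return min(1.0, max(0.0, (60.0 - speed) / 30.0))


def medium(speed):
    """Triangle peaking at 60 km/h and reaching 0 at 30 and 90 km/h (assumed)."""
    return max(0.0, 1.0 - abs(speed - 60.0) / 30.0)


def fast(speed):
    """0 at or below 60 km/h, rising linearly to 1 at 90 km/h (assumed)."""
    return min(1.0, max(0.0, (speed - 60.0) / 30.0))


# (antecedent membership function, consequent acceleration command)
RULES = [
    (slow, +1.0),    # if speed is slow, then increase speed
    (medium, 0.0),   # if speed is medium, then hold speed
    (fast, -1.0),    # if speed is fast, then decrease speed
]


def control(speed):
    """Weighted average of rule consequents, weighted by firing strength."""
    strengths = [(mu(speed), action) for mu, action in RULES]
    total = sum(weight for weight, _ in strengths)
    if total == 0.0:
        return 0.0
    return sum(weight * action for weight, action in strengths) / total


if __name__ == "__main__":
    for v in (20.0, 45.0, 60.0, 75.0):
        print(v, control(v))
```

The output varies smoothly between full acceleration and full braking as the speed crosses the overlapping sets, instead of switching abruptly at a fixed threshold.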

NEURAL NETWORKS

The exact workings of the human brain remain a mystery. In particular, its most basic element is a specific type of cell that, unlike the rest of the body's cells, does not regenerate. These cells are assumed to be what gives us the ability to remember, think and apply previous experience to each of our actions. These cells, of which there are approximately 100 billion, are known as neurons. Each neuron can connect to as many as 200,000 other neurons, although 1,000 to 10,000 connections is typical.

The power of the human mind comes from the little-understood operation of these basic components and from the multiple connections between them. It also comes from genetic programming and learning. Individual neurons are complicated. They have a variety of parts, subsystems and control mechanisms, and they convey information through electrochemical pathways. There are over 100 different classes of neurons, depending on the classification method used. Together, these neurons and their connections form a process that is not binary, not stable and not synchronous. In short, it is nothing like currently available electronic computers, or even Artificial Neural Networks (ANN).

These artificial neural networks, or connectionist systems, attempt to replicate only the most basic elements of this complicated, versatile and powerful organ, and they do so in a primitive way. But for the developer trying to solve a given problem, neural computing was never about replicating human brains. It is about machines and a new way of solving problems.

Artificial neural networks are essentially crude electronic models based on the neural structure of the brain. The brain basically learns from experience. These biologically inspired methods of computing are expected to be the next great advance in the computing industry. Even simple animal brains are capable of functions that are currently impossible for computers. Computers do rote things well, such as keeping data or performing complex math. But they have trouble recognizing even simple patterns, let alone generalizing from initial data.

Now, advances in biological research promise an initial understanding of the natural mechanism of thought. This research shows that brains store information as patterns. Some of these patterns are very complicated and allow us to recognize individual faces from many different angles. This process of storing information as patterns, using those patterns, and then solving problems encompasses a new field in computing. This field, as mentioned before, does not use traditional programming but involves building massively parallel networks and training them to solve specific problems. It also uses terms very different from those of traditional computing, such as behave, react, self-organize, learn, generalize and forget.

Figure 2.2. One type of biological neuron.

The fundamental processing element of a neural network is the neuron. This building block of human awareness has a few general capabilities. Basically, a biological neuron receives inputs from other sources, combines them in some way, performs a generally nonlinear operation on the result, and then outputs the final result. The dendrites receive the inputs, the soma processes them, the axon turns the processed inputs into outputs, and the synapses are the electrochemical contacts with other neurons [Torres 2003].

1.1.5 The artificial neuron

The artificial neuron, the basic unit of Artificial Neural Networks, simulates the four basic functions of the biological neuron. The operation of the artificial neuron, or unit, proposed by McCulloch and Pitts in 1943 (Figure 2.3) can be summarized as follows:

Figure 2.3. McCulloch-Pitts artificial neuron model.

The signals are presented at the input, represented by X1, X2, ..., XP. Each signal is multiplied by a number or weight that indicates its influence on the output of the neuron, represented by W1, W2, ..., WP. The weighted sum of the signals is computed, producing an activity level. If this activity level exceeds a certain limit (threshold), then through the activation function f(a) the unit produces a specific output response y. The model also includes an externally applied influence factor, or bias, represented by bk.
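The steps just described can be sketched as a short function. A hard step is assumed as the activation function, and the weights and bias values used for the logic gates are illustrative choices rather than values from the original work.

```python
def neuron(inputs, weights, bias, threshold=0.0):
    """Threshold unit: weighted sum of the inputs plus bias, then a step function.

    inputs    -- signals X1..XP
    weights   -- weights W1..WP, one per input
    bias      -- externally applied bias bk
    threshold -- activity level that must be exceeded for the unit to fire
    """
    activity = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if activity > threshold else 0


def logical_and(x1, x2):
    # Assumed parameters: both inputs must be active to exceed the threshold.
    return neuron((x1, x2), weights=(1.0, 1.0), bias=-1.5)


def logical_or(x1, x2):
    # Assumed parameters: a single active input is enough.
    return neuron((x1, x2), weights=(1.0, 1.0), bias=-0.5)


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, logical_and(x1, x2), logical_or(x1, x2))
```

Changing only the bias turns the same unit from an AND gate into an OR gate, which hints at how adjusting weights and biases changes what a unit computes.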

Benefits

Limitations

CONCLUSIONS AND RECOMMENDATIONS

GLOSSARY


- Artificial Intelligence Applied To Project Success: A Literature ReviewDokument6 SeitenArtificial Intelligence Applied To Project Success: A Literature Reviewhonin alshaeerNoch keine Bewertungen

- Air Fryer Using Fuzzy Logic: Abha Tewari Kiran Israni Monica TolaniDokument4 SeitenAir Fryer Using Fuzzy Logic: Abha Tewari Kiran Israni Monica TolaniSyahmi HakimNoch keine Bewertungen

- Irawan 2019 IOP Conf. Ser. Earth Environ. Sci. 303 012044Dokument11 SeitenIrawan 2019 IOP Conf. Ser. Earth Environ. Sci. 303 012044NaufalNoch keine Bewertungen

- Proposition Logic Unification ResolutionDokument56 SeitenProposition Logic Unification ResolutionPanimalar PandianNoch keine Bewertungen

- Sbit Journal of Sciences and Technology Issn 2277-8764 VOL-2, ISSUE 1, 2013. An Overview of Artificial IntelligenceDokument4 SeitenSbit Journal of Sciences and Technology Issn 2277-8764 VOL-2, ISSUE 1, 2013. An Overview of Artificial Intelligencesampath kumarNoch keine Bewertungen

- Golforoushan 3Dokument116 SeitenGolforoushan 3Elyass DaddaNoch keine Bewertungen

- Smoothing Wind Power Fluctuations by Particle Swarm Optimization Based Pitch Angle ControllerDokument17 SeitenSmoothing Wind Power Fluctuations by Particle Swarm Optimization Based Pitch Angle ControllerMouna Ben SmidaNoch keine Bewertungen

- Short Term Load Forecast Using Fuzzy LogicDokument9 SeitenShort Term Load Forecast Using Fuzzy LogicRakesh KumarNoch keine Bewertungen

- (2020-2021 Fall Semester FINAL Exam) : Introduction To Fuzzy LogicDokument21 Seiten(2020-2021 Fall Semester FINAL Exam) : Introduction To Fuzzy LogicAlperen YılmazNoch keine Bewertungen

- Grafos Conceptuales y Logica Difusa de Tru Hoang Cao PDFDokument207 SeitenGrafos Conceptuales y Logica Difusa de Tru Hoang Cao PDFMaquiventa MelquisedekNoch keine Bewertungen

- Healthcare Logistics Optimization Framework For EfDokument13 SeitenHealthcare Logistics Optimization Framework For EfBbb AaaNoch keine Bewertungen

- Uncertain Time Series Analysis With Imprecise Observations: Xiangfeng Yang Baoding LiuDokument16 SeitenUncertain Time Series Analysis With Imprecise Observations: Xiangfeng Yang Baoding LiuThanhnt NguyenNoch keine Bewertungen

- EC360 Important University Questions PDFDokument4 SeitenEC360 Important University Questions PDFvoxovNoch keine Bewertungen

- Fuzzy Logic Control of Three Phase Induction Motor: A ReviewDokument3 SeitenFuzzy Logic Control of Three Phase Induction Motor: A ReviewInternational Journal of Innovative Science and Research Technology100% (1)

- Fuzzy Logic For ElevatorsDokument5 SeitenFuzzy Logic For ElevatorsFERNSNoch keine Bewertungen

- Design and Development of A Colour Sensi PDFDokument113 SeitenDesign and Development of A Colour Sensi PDFVũ Mạnh CườngNoch keine Bewertungen

- Bidirectional Associative MemoryDokument20 SeitenBidirectional Associative MemoryKrishnaBihariShuklaNoch keine Bewertungen

- Position Control CylinderDokument13 SeitenPosition Control CylinderfraicheNoch keine Bewertungen

- Question Bank For Artificial Intelligence Regulation 2013Dokument7 SeitenQuestion Bank For Artificial Intelligence Regulation 2013PRIYA RAJINoch keine Bewertungen

- Toward Human-Level Machine Intelligence: Lotfi A. ZadehDokument49 SeitenToward Human-Level Machine Intelligence: Lotfi A. ZadehRohitRajNoch keine Bewertungen

- GRA Method of Multiple Attribute Decision Making With Single Valued Neutrosophic Hesitant Fuzzy Set InformationDokument9 SeitenGRA Method of Multiple Attribute Decision Making With Single Valued Neutrosophic Hesitant Fuzzy Set InformationMia AmaliaNoch keine Bewertungen

- The Impact of Digital Marketing Strategies On Customers Buying Behavior in Oline Shopping Using The Rough Set TheoryDokument16 SeitenThe Impact of Digital Marketing Strategies On Customers Buying Behavior in Oline Shopping Using The Rough Set TheoryMario Sancarranco CrisantoNoch keine Bewertungen

- Total Pages: 2: Answer All Questions, Each Carries 3 MarksDokument2 SeitenTotal Pages: 2: Answer All Questions, Each Carries 3 MarksshakirckNoch keine Bewertungen

- Journal of Computer Science Research - Vol.4, Iss.2 April 2022Dokument46 SeitenJournal of Computer Science Research - Vol.4, Iss.2 April 2022Bilingual PublishingNoch keine Bewertungen

- Department of Computer Science Vidyasagar University: Paschim Medinipur - 721102Dokument26 SeitenDepartment of Computer Science Vidyasagar University: Paschim Medinipur - 721102Venkat GurumurthyNoch keine Bewertungen

- An Improved MADM Based SWOT AnalysisDokument17 SeitenAn Improved MADM Based SWOT AnalysiselvisgonzalesarceNoch keine Bewertungen