
Course 003: Basic Econometrics, 2011-2012

Topic 2: Random Variables and Probability Distributions

Rohini Somanathan Course 003 ( Part 1), 2011-2012


Sample spaces and random variables

The outcomes of some experiments inherently take the form of real numbers:

- crop yields with the application of a new type of fertiliser
- students' scores on an exam
- miles per litre of an automobile

Other experiments have a sample space that is not inherently a subset of Euclidean space:

- outcomes from a series of coin tosses
- the character of a politician
- the modes of transport taken by a city's population
- the degree of satisfaction respondents report for a service provider: patients in a hospital may be asked whether they are very satisfied, satisfied or dissatisfied with the quality of treatment. Our sample space would consist of arrays of the form (VS, S, S, DS, ...)
- the caste composition of elected politicians
- the gender composition of children attending school

A random variable is a function that assigns a real number to each possible outcome $s \in S$.


Random variables

Definition: Let $(S, \mathcal{S}, P)$ be a probability space. If $X : S \to \mathbb{R}$ is a real-valued function having as its domain the elements of $S$, then $X$ is called a random variable.

- A random variable is therefore a real-valued function defined on the space $S$. Typically $x$ is used to denote this image value, i.e. $x = X(s)$.
- If the outcomes of an experiment are inherently real numbers, they are directly interpretable as values of a random variable, and we can think of $X$ as the identity function, so $X(s) = s$.
- Just as there are many ways of defining a sample space, depending on what we want to get out of the experiment, there are also many random variables that can be constructed from the same experiment.
- We choose random variables based on what we are interested in getting out of the experiment. For example, we may be interested in the number of students passing an exam, and not the identities of those who pass. A random variable would assign each element in the sample space a number corresponding to the number of passes associated with that outcome.
- We therefore begin with a probability space $(S, \mathcal{S}, P)$ and arrive at an induced probability space $(R(X), \mathcal{B}, P_X)$, where $R(X)$ is the range of $X$.
- How exactly do we arrive at the function $P_X(\cdot)$? As long as every set $A \subset R(X)$ is associated with an event in our original sample space $S$, $P_X(A)$ is just the probability assigned to that event by $P$.


Random variables..examples

1. Tossing a coin ten times. The sample space consists of the $2^{10}$ possible sequences of heads and tails. There are many different random variables that could be associated with this experiment: $X_1$ could be the number of heads, $X_2$ the longest run of heads divided by the longest run of tails, $X_3$ the number of times we get two heads immediately before a tail, etc. For $s = HTTHHHHTTH$, what are the values of these random variables?

2. Choosing a point in a plane. Each outcome in the sample space is a point of the form $s = (x, y)$. The random variable $X$ could be the x-coordinate of the point. Another random variable $Z$ would be the distance of the point from the origin, $Z(s) = \sqrt{x^2 + y^2}$.

3. Heights, weights, distances, temperature, scores, incomes... In these cases, we can have $X(s) = s$ since these are already expressed as real numbers.
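The coin-tossing question can be checked with a short sketch. The function names are illustrative and not from the slides, and $X_3$ is read here as the number of (possibly overlapping) occurrences of the pattern HHT, which is one interpretation of "two heads immediately before a tail".

```python
from itertools import groupby


def longest_run(seq: str, face: str) -> int:
    """Length of the longest unbroken run of `face` in `seq` (0 if absent)."""
    return max((len(list(group)) for key, group in groupby(seq) if key == face), default=0)


def x1(seq: str) -> int:
    """X1: number of heads in the sequence."""
    return seq.count("H")


def x2(seq: str) -> float:
    """X2: longest run of heads divided by longest run of tails.

    Undefined (ZeroDivisionError) for sequences with no tails.
    """
    return longest_run(seq, "H") / longest_run(seq, "T")


def x3(seq: str) -> int:
    """X3: number of positions where the pattern HHT starts (assumed reading)."""
    return sum(1 for i in range(len(seq) - 2) if seq[i:i + 3] == "HHT")


s = "HTTHHHHTTH"
print(x1(s), x2(s), x3(s))  # 6 2.0 1
```

Each function maps an outcome $s$ to a real number, which is exactly what makes it a random variable on this sample space.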


Induced probability spaces..examples

Let's look at some examples of how we arrive at our probability measure $P_X(A)$.

- A coin is tossed once and we're interested in the number of heads, $X$. The probability assigned to the set $A = \{1\}$ in our new space is just the probability associated with one head in our original space. So
$$\Pr(X = x) = \tfrac{1}{2}, \quad x \in \{0, 1\}.$$
- With two tosses, the probability attached to the set $A = \{1\}$ is the sum of the probabilities associated with the disjoint sets $\{(H, T)\}$ and $\{(T, H)\}$ whose union forms this event. In this case
$$\Pr(X = x) = \binom{2}{x} \left(\tfrac{1}{2}\right)^2, \quad x \in \{0, 1, 2\}.$$
- Now consider a sequence of flips of an unbiased coin, where our random variable $X$ is the number of flips needed for the first head. We now have
$$\Pr(X = x) = f(x) = \left(\tfrac{1}{2}\right)^{x-1} \left(\tfrac{1}{2}\right) = \left(\tfrac{1}{2}\right)^{x}, \quad x = 1, 2, 3, \ldots$$
Does the probability for the whole space in this case add up to 1?

Notice that the nature of the sample space in the first two coin-flipping examples is different from the third. In the first two cases it is finite, in the third it is countably infinite. In all these cases we have a discrete random variable.
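One way to explore the question about the total probability in the first-head example is to compute partial sums exactly. This is a minimal sketch with illustrative function names; it uses the geometric-series fact that $\sum_{x=1}^{n} (1/2)^x = 1 - 2^{-n}$, so the shortfall from 1 halves with every extra term.

```python
from fractions import Fraction


def first_head_pmf(x: int) -> Fraction:
    """f(x) = (1/2)^x: probability that the first head appears on flip x."""
    return Fraction(1, 2) ** x


def partial_sum(n: int) -> Fraction:
    """Sum of f(x) for x = 1, ..., n, computed exactly."""
    return sum(first_head_pmf(x) for x in range(1, n + 1))


for n in (1, 5, 10, 20):
    # The gap to 1 equals 2^(-n), so the partial sums converge to 1.
    print(n, partial_sum(n), 1 - partial_sum(n))
```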


The distribution function

Once we've assigned real numbers to all the subsets of our sample space $S$ that are of interest, we can restrict our attention to the probabilities associated with the occurrence of sets of real numbers.

Consider the set $A = (-\infty, x]$. Now $P(A) = \Pr(X \le x)$. $F(x)$ is used to denote the probability $\Pr(X \le x)$ and is called the distribution function of $X$.

Definition: The distribution function $F$ of a random variable $X$ is a function defined for each real number $x$ as follows:
$$F(x) = P(X \le x) \quad \text{for } -\infty < x < \infty$$

If there are a finite number of elements $w$ in $A$, this probability can be computed as
$$F(x) = \sum_{w \le x} f(w)$$

In this case, the distribution function will be a step function, jumping at all points $x$ in $R(X)$ which are assigned positive probability.


Discrete distributions

Definition: A random variable $X$ has a discrete distribution if $X$ can take only a finite number $k$ of different values $x_1, x_2, \ldots, x_k$ or an infinite sequence of different values $x_1, x_2, \ldots$

The function $f(x) = P(X = x)$ is the probability function of $X$. We define it to be $f(x)$ for all values $x$ in our sample space $R(X)$ and zero elsewhere.

If $X$ has a discrete distribution, the probability of any subset $A$ of the real line is given by
$$P(X \in A) = \sum_{x_i \in A} f(x_i).$$

In each of the 3 examples we considered above, we have a clean expression for this probability function. Sometimes we may want to use functions of this type because they closely approximate the probabilities defined by messier functions.

Examples:

1. The discrete uniform distribution: picking one of the first $k$ non-negative integers at random.
$$f(x) = \begin{cases} \frac{1}{k} & \text{for } x = 0, 1, 2, \ldots, k-1 \\ 0 & \text{otherwise} \end{cases}$$

2. The binomial distribution: the probability of $x$ successes in $n$ trials, where $p$ is the probability of success on each trial and $q = 1 - p$.
$$f(x) = \begin{cases} \binom{n}{x} p^x q^{n-x} & \text{for } x = 0, 1, 2, \ldots, n \\ 0 & \text{otherwise} \end{cases}$$
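The binomial probability function can be sketched in a few lines. The function name and the parameter values are illustrative assumptions; `q` is taken to be $1 - p$, the usual convention.

```python
from math import comb


def binomial_pmf(x: int, n: int, p: float) -> float:
    """f(x) = C(n, x) p^x q^(n-x) for x = 0, ..., n and zero otherwise."""
    if not 0 <= x <= n:
        return 0.0
    q = 1.0 - p
    return comb(n, x) * p ** x * q ** (n - x)


# With n = 2 and p = 1/2 this reproduces the two-toss coin example.
print([binomial_pmf(x, 2, 0.5) for x in range(3)])  # [0.25, 0.5, 0.25]
```

Summing `binomial_pmf(x, n, p)` over `x = 0, ..., n` returns 1 up to floating-point error, which is a quick check that the function is a valid probability function.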


Continuous distributions

The sample space associated with our random variable often has an infinite number of points.

Example: A point is randomly selected inside a circle of unit radius with origin $(0, 0)$, where the probability assigned to being in a set $A \subset S$ is $P(A) = \frac{\text{area of } A}{\pi}$ and $X$ is the distance of the selected point from the origin. In this case $F(x) = \Pr(X \le x) = \frac{\text{area of circle with radius } x}{\pi}$, so the distribution function of $X$ is given by
$$F(x) = \begin{cases} 0 & \text{for } x < 0 \\ x^2 & \text{for } 0 \le x < 1 \\ 1 & \text{for } 1 \le x \end{cases}$$

Definition: A random variable $X$ has a continuous distribution if there exists a nonnegative function $f$ defined on the real line, such that for any interval $A$,
$$P(X \in A) = \int_A f(x)\,dx$$

The function $f$ is called the probability density function or p.d.f. of $X$ and must satisfy the conditions below:

1. $f(x) \ge 0$
2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$

What is $f(x)$ for the above example? How can you use this to compute $P(\frac{1}{4} < X \le \frac{1}{2})$? How would you use $F(x)$ instead?
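A possible worked answer to these questions (a sketch, not taken from the slides): differentiating $F(x) = x^2$ on $(0, 1)$ gives the density, and integrating $f$ or differencing $F$ yields the same interval probability.

$$
\begin{aligned}
f(x) &= F'(x) = 2x \quad \text{for } 0 < x < 1, \text{ and } 0 \text{ otherwise} \\
P\left(\tfrac{1}{4} < X \le \tfrac{1}{2}\right) &= \int_{1/4}^{1/2} 2x\,dx = \tfrac{1}{4} - \tfrac{1}{16} = \tfrac{3}{16} \\
&= F\left(\tfrac{1}{2}\right) - F\left(\tfrac{1}{4}\right)
\end{aligned}
$$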


Continuous distributions..examples

1. The uniform distribution on an interval: Suppose $a$ and $b$ are two real numbers with $a < b$. A point $x$ is selected from the interval $S = \{x : a \le x \le b\}$ and the probability that it belongs to any subinterval of $S$ is proportional to the length of that subinterval. We say that a point is chosen at random from the interval $(a, b)$. It follows that the p.d.f. must be constant on $S$ and zero outside it:
$$f(x) = \begin{cases} \frac{1}{b-a} & \text{for } a \le x \le b \\ 0 & \text{otherwise} \end{cases}$$
Notice that the value of the p.d.f. is the reciprocal of the length of the interval, that these values can be greater than one, and that the assignment of probabilities does not depend on whether the distribution is defined over the closed interval $[a, b]$ or the open interval $(a, b)$.

2. Unbounded random variables: It is sometimes convenient to define a p.d.f. over unbounded sets, because such functions may be easier to work with and may approximate the actual distribution of a random variable quite well. An example is:
$$f(x) = \begin{cases} 0 & \text{for } x \le 0 \\ \frac{1}{(1+x)^2} & \text{for } x > 0 \end{cases}$$

3. Unbounded densities: The following function is unbounded around zero but still represents a valid density.
$$f(x) = \begin{cases} \frac{2}{3} x^{-1/3} & \text{for } 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$
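As a quick check (a sketch added for illustration, not part of the slides), both of the last two functions integrate to one, even though the first has unbounded support and the second is unbounded near zero:

$$
\begin{aligned}
\int_0^{\infty} \frac{1}{(1+x)^2}\,dx &= \left[-\frac{1}{1+x}\right]_0^{\infty} = 0 - (-1) = 1 \\
\int_0^{1} \frac{2}{3} x^{-1/3}\,dx &= \left[x^{2/3}\right]_0^{1} = 1
\end{aligned}
$$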


Mixed distributions

Often the process of collecting or recording data leads to censoring, and instead of obtaining a sample from a continuous distribution, we obtain one from a mixed distribution. Examples:

- The weight of an object is a continuous random variable, but our weighing scale only records weights up to a certain level.
- Households with very high incomes often underreport their income. For incomes above a certain level (say \$250,000), surveys often club all households together, so this variable is top-censored.

In each of these examples, we can derive the probability distribution for the new random variable, given the distribution for the continuous variable. In the example we've just considered,
$$f(x) = \begin{cases} 0 & \text{for } x \le 0 \\ \frac{1}{(1+x)^2} & \text{for } x > 0 \end{cases}$$
suppose we record $X = 3$ for all values of $X \ge 3$. The new random variable $Y$ has the same density as $X$ for values less than 3 and a point mass of $\frac{1}{4}$ at $Y = 3$.

Some variables, such as the number of hours worked per week, have a mixed distribution in the population, with mass points at 0 and 40.
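The point mass created by censoring can be computed directly from the distribution function. The sketch below assumes the density $1/(1+x)^2$ for $x > 0$, whose distribution function is $F(x) = 1 - 1/(1+x)$; the function names are illustrative.

```python
from fractions import Fraction


def cdf(x) -> Fraction:
    """F(x) = 1 - 1/(1 + x) for x > 0 (integral of 1/(1 + t)^2), else 0."""
    x = Fraction(x)
    if x <= 0:
        return Fraction(0)
    return 1 - 1 / (1 + x)


def censored_mass(cap) -> Fraction:
    """Point mass at Y = cap when every value X >= cap is recorded as cap."""
    return 1 - cdf(cap)


print(censored_mass(3))  # 1/4
```

Below the cap the censored variable keeps the original density, so the continuous part integrates to `cdf(cap)` and the two parts together still sum to one.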


Properties of the distribution function

Recall that the distribution function or cumulative distribution function (c.d.f.) for a random variable $X$ is defined as
$$F(x) = P(X \le x) \quad \text{for } -\infty < x < \infty.$$
It follows that for any random variable (discrete, continuous or mixed), the domain of $F$ is the real line and the values of $F(x)$ must lie in $[0, 1]$. We can also establish that all distribution functions have the following three properties:

1. $F(x)$ is a nondecreasing function of $x$, i.e. if $x_1 < x_2$ then $F(x_1) \le F(x_2)$.
   (The occurrence of the event $\{X \le x_1\}$ implies the occurrence of $\{X \le x_2\}$, so $P(X \le x_1) \le P(X \le x_2)$.)

2. $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$.
   ($\{x : x \le \infty\}$ is the entire sample space and $\{x : x \le -\infty\}$ is the null set.)

3. $F(x)$ is right-continuous, i.e. $F(x) = F(x^+)$ at every point $x$, where $F(x^+)$ is the right-hand limit of $F(x)$.
   (For discrete random variables, there will be a jump at values that are taken with positive probability.)


Computing probabilities using the distribution function

RESULT 1: For any given value of $x$, $P(X > x) = 1 - F(x)$.

RESULT 2: For any values $x_1$ and $x_2$ where $x_1 < x_2$, $P(x_1 < X \le x_2) = F(x_2) - F(x_1)$.

Proof: Let $A$ be the event $X \le x_1$ and $B$ be the event $X \le x_2$. $B$ can be written as the union of two disjoint events, $B = (A \cap B) \cup (A^c \cap B)$. Since $A \subset B$, $P(A \cap B) = P(A)$. The event we're interested in is $A^c \cap B$, whose probability is given by $P(B) - P(A)$, or $P(x_1 < X \le x_2) = P(X \le x_2) - P(X \le x_1)$. Now apply the definition of a d.f.

RESULT 3: For any given value $x$, $P(X < x) = F(x^-)$.

RESULT 4: For any given value $x$, $P(X = x) = F(x^+) - F(x^-)$.

The distribution function of a continuous random variable will be continuous, and since
$$F(x) = \int_{-\infty}^{x} f(t)\,dt, \qquad F'(x) = f(x).$$

For discrete and mixed discrete-continuous random variables, $F(x)$ will exhibit a countable number of discontinuities at jump points, reflecting the assignment of positive probabilities to a countable number of events.
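The four results can be illustrated on a simple step distribution function. The sketch below uses a fair six-sided die as an assumed example; `F_left` is an illustrative helper for the left-hand limit $F(x^-)$, and Result 4 uses $F(x^+) = F(x)$, which holds by right-continuity.

```python
from fractions import Fraction

FACES = range(1, 7)  # possible values of a fair die (illustrative choice)


def F(x) -> Fraction:
    """Distribution function P(X <= x) of the die."""
    return Fraction(sum(1 for k in FACES if k <= x), 6)


def F_left(x) -> Fraction:
    """Left-hand limit F(x^-) = P(X < x)."""
    return Fraction(sum(1 for k in FACES if k < x), 6)


p_greater = 1 - F(4)        # Result 1: P(X > 4)
p_interval = F(4) - F(2)    # Result 2: P(2 < X <= 4)
p_less = F_left(3)          # Result 3: P(X < 3)
p_point = F(3) - F_left(3)  # Result 4: P(X = 3), the jump of F at 3

print(p_greater, p_interval, p_less, p_point)  # 1/3 1/3 1/3 1/6
```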


Examples of distribution functions

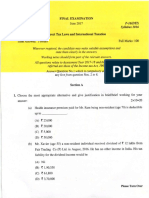

Consider the experiment of rolling a die or tossing a fair coin, with $X$ in the first case being the number of dots and in the second case the number of heads. Graph the distribution function of $X$ in each of these cases.

What about the experiment of picking a point in the unit interval $[0, 1]$ with $X$ as the distance from the origin?

What is the probability function that corresponds to the following distribution function?

[Figure: a distribution function $F(x)$ plotted against $x$, with the levels $z_0$, $z_1$, $z_2$, $z_3$ and 1 marked on the vertical axis and the points $x_1$, $x_2$, $x_3$, $x_4$ marked on the horizontal axis.]


The quantile function

The distribution function of $X$ gives us the probability that $X \le x$ for all real numbers $x$. Suppose we are given a probability $p$ and want to know the value of $x$ corresponding to this value of the distribution function. If $F$ is a one-to-one function, then it has an inverse and the value we are looking for is given by $F^{-1}(p)$.

Example: median income would be found by $F^{-1}(\frac{1}{2})$, where $F$ is the distribution function of income.

Definition: When the distribution function of a random variable $X$ is continuous and one-to-one over the whole set of possible values of $X$, we call the function $F^{-1}$ the quantile function of $X$. The value of $F^{-1}(p)$ is called the $p$ quantile of $X$ or the $100p$ percentile of $X$ for each $0 < p < 1$.

Example: If $X$ has a uniform distribution over the interval $[a, b]$, then $F(x) = \frac{x - a}{b - a}$ over this interval, $F(x) = 0$ for $x \le a$ and $F(x) = 1$ for $x > b$. Given a value $p$, we simply solve $p = \frac{x - a}{b - a}$ for the $p$th quantile: $x = pb + (1 - p)a$. Compute this for $p = .5, .25, .9, \ldots$
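The uniform quantile formula is easy to tabulate. In this sketch the interval endpoints `a = 2` and `b = 7` are arbitrary illustrative values, and the function names are not from the slides.

```python
def uniform_cdf(x: float, a: float, b: float) -> float:
    """F(x) = (x - a) / (b - a) on [a, b], 0 below a and 1 above b."""
    if x <= a:
        return 0.0
    if x > b:
        return 1.0
    return (x - a) / (b - a)


def uniform_quantile(p: float, a: float, b: float) -> float:
    """p-th quantile of the uniform distribution on [a, b]: x = p*b + (1 - p)*a."""
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    if not a < b:
        raise ValueError("need a < b")
    return p * b + (1 - p) * a


a, b = 2.0, 7.0  # hypothetical interval
for p in (0.5, 0.25, 0.9):
    x = uniform_quantile(p, a, b)
    # Applying F to the quantile should return p (up to rounding error).
    print(p, x, uniform_cdf(x, a, b))
```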


Example: computing quantiles

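1. The p.d.f. of a random variable $X$ is given by:
$$f(x) = \begin{cases} \frac{1}{8}x & \text{for } 0 \le x \le 4 \\ 0 & \text{otherwise} \end{cases}$$
Find the value of $t$ such that

(a) $P(X \le t) = \frac{1}{4}$

(b) $P(X \ge t) = \frac{1}{2}$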
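A possible worked solution, reading part (a) as $P(X \le t) = \frac{1}{4}$ and part (b) as $P(X \ge t) = \frac{1}{2}$ (the inequality directions are an assumption, since the symbols did not survive in the slide text):

$$
\begin{aligned}
F(t) &= \int_0^t \frac{x}{8}\,dx = \frac{t^2}{16} \quad \text{for } 0 \le t \le 4 \\
\text{(a)}\quad \frac{t^2}{16} &= \frac{1}{4} \;\Rightarrow\; t = 2 \\
\text{(b)}\quad 1 - \frac{t^2}{16} &= \frac{1}{2} \;\Rightarrow\; t^2 = 8 \;\Rightarrow\; t = 2\sqrt{2} \approx 2.83
\end{aligned}
$$

The answer to (b) is the median of $X$, i.e. $F^{-1}(\frac{1}{2})$.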


Bivariate distributions

Social scientists are typically interested in the manner in which multiple attributes of people and the societies they live in are related. The object of interest is a multivariate probability distribution (examples: education and earnings, days ill per month and age, sex-ratios and areas under rice cultivation).

This involves dealing with the joint distribution of two or more random variables. Bivariate distributions attach probabilities to events that are defined by values taken by two random variables (say $X$ and $Y$). Values taken by these random variables are now ordered pairs $(x_i, y_i)$, and an event $A$ is a set of such values.

If both $X$ and $Y$ are discrete random variables, the probability function is $f(x, y) = P(X = x \text{ and } Y = y)$ and
$$P((X, Y) \in A) = \sum_{(x_i, y_i) \in A} f(x_i, y_i)$$


Representing a discrete bivariate distribution

If $X$ and $Y$ each take only a finite number of values, the joint probability function takes only a finite number of non-zero values. If there are only a small number of these values, they can be usefully presented in a table. The table below could represent the probabilities of receiving different levels of education. $X$ is the highest level of education and $Y$ is gender:

| gender | none | primary | middle | high | senior secondary | graduate and above |
|--------|------|---------|--------|------|------------------|--------------------|
| male   | .05  | .25     | .15    | .1   | .03              | .02                |
| female | .2   | .1      | .04    | .03  | .02              | .01                |

What are some features of a table like this one? In particular, how would we obtain probabilities associated with the following events:

- receiving no education
- becoming a female graduate
- completing primary school

What else do you learn from the table about the population of interest?


Continuous bivariate distributions

We can extend our definition of a continuous univariate distribution to the bivariate case:

Definition: Two random variables $X$ and $Y$ have a continuous joint distribution if there exists a nonnegative function $f$ defined over the xy-plane such that for any subset $A$ of the plane
$$P[(X, Y) \in A] = \iint_A f(x, y)\,dx\,dy$$

$f$ is now called the joint probability density function and must satisfy

1. $f(x, y) \ge 0$ for $-\infty < x < \infty$ and $-\infty < y < \infty$
2. $\int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f(x, y)\,dx\,dy = 1$

Example: Given the following joint density function on $X$ and $Y$, we'll calculate $P(X \ge Y)$ (textbook section 3.4):
$$f(x, y) = \begin{cases} c x^2 y & \text{for } x^2 \le y \le 1 \\ 0 & \text{otherwise} \end{cases}$$
First find $c$ to make this a valid joint density (notice the limits of integration here). It will turn out to be $21/4$. Then integrate the density over $y \in (x^2, x)$ and $x \in (0, 1)$. You could alternatively integrate over $x \in (y, \sqrt{y})$ and $y \in (0, 1)$.
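The two integrations described in this example can be written out as follows. This is a sketch of the calculation, with the event taken to be $X \ge Y$ as implied by the stated limits $y \in (x^2, x)$:

$$
\begin{aligned}
1 &= \int_{-1}^{1} \int_{x^2}^{1} c\,x^2 y\,dy\,dx = \frac{c}{2} \int_{-1}^{1} x^2 (1 - x^4)\,dx = \frac{c}{2}\left(\frac{2}{3} - \frac{2}{7}\right) = \frac{4c}{21} \;\Rightarrow\; c = \frac{21}{4} \\
P(X \ge Y) &= \int_{0}^{1} \int_{x^2}^{x} \frac{21}{4} x^2 y\,dy\,dx = \frac{21}{8} \int_0^1 (x^4 - x^6)\,dx = \frac{21}{8}\left(\frac{1}{5} - \frac{1}{7}\right) = \frac{3}{20}
\end{aligned}
$$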


Bivariate distribution functions

Definition: The joint distribution function of two random variables $X$ and $Y$ is defined as the function $F$ such that for all values of $x$ and $y$ ($-\infty < x < \infty$ and $-\infty < y < \infty$)
$$F(x, y) = P(X \le x \text{ and } Y \le y)$$

Using this definition, the probability that $(X, Y)$ will lie in a specified rectangle in the xy-plane is given by
$$\Pr(a < X \le b \text{ and } c < Y \le d) = F(b, d) - F(a, d) - F(b, c) + F(a, c)$$
The distinction between weak and strict inequalities is important when points on the boundary of the rectangle occur with positive probability.

The distribution function of $X$ can be derived from the joint distribution function $F(x, y)$:
$$\Pr(X \le x) = F_1(x) = \lim_{y \to \infty} F(x, y)$$
Similarly,
$$\Pr(Y \le y) = F_2(y) = \lim_{x \to \infty} F(x, y)$$

If $F(x, y)$ is continuously differentiable in both its arguments, the joint density can be derived from it as
$$f(x, y) = \frac{\partial^2 F(x, y)}{\partial x\,\partial y}$$
and given the density, we can integrate w.r.t. $x$ and $y$ over the appropriate limits to get the distribution function.
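The rectangle formula can be sketched for any joint distribution function passed in as a callable. The joint c.d.f. used here, $F(x, y) = xy$ on the unit square, is a hypothetical example chosen only because its rectangle probabilities are easy to verify by hand.

```python
from fractions import Fraction


def clamp01(t) -> Fraction:
    """Restrict t to the interval [0, 1]."""
    return max(Fraction(0), min(Fraction(1), Fraction(t)))


def joint_cdf(x, y) -> Fraction:
    """Hypothetical joint c.d.f.: F(x, y) = x * y on the unit square."""
    return clamp01(x) * clamp01(y)


def rectangle_prob(F, a, b, c, d):
    """Pr(a < X <= b and c < Y <= d) = F(b,d) - F(a,d) - F(b,c) + F(a,c)."""
    return F(b, d) - F(a, d) - F(b, c) + F(a, c)


p = rectangle_prob(joint_cdf, Fraction(1, 5), Fraction(1, 2), Fraction(1, 10), Fraction(2, 5))
print(p)  # 9/100

# Letting y grow large recovers the marginal c.d.f. of X at x = 1/2.
print(joint_cdf(Fraction(1, 2), 10 ** 9))  # 1/2
```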


Marginal distributions

We've seen that given the joint distribution of two random variables $X$ and $Y$, we can derive the distribution of one of these random variables. A distribution of $X$ derived from the joint distribution of $X$ and $Y$ is known as the marginal distribution of $X$.

We can also derive marginal density or probability mass functions given the joint density. For discrete random variables:
$$f_1(x) = P(X = x) = \sum_y P(X = x \text{ and } Y = y) = \sum_y f(x, y)$$
and analogously
$$f_2(y) = P(Y = y) = \sum_x P(X = x \text{ and } Y = y) = \sum_x f(x, y)$$

For a continuous joint density $f(x, y)$, the marginal densities for $X$ and $Y$ are given by:
$$f_1(x) = \int_{-\infty}^{\infty} f(x, y)\,dy \quad \text{and} \quad f_2(y) = \int_{-\infty}^{\infty} f(x, y)\,dx$$

Go back to our table of the joint discrete distribution and see if you can find the marginal distribution of education. Can one construct the joint distribution from one of the marginal distributions?
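For the education-gender table, the marginal distributions are just the column and row sums. The sketch below enters the table values by hand; the variable and function names are illustrative.

```python
from fractions import Fraction

LEVELS = ["none", "primary", "middle", "high", "senior secondary", "graduate and above"]

# Joint probabilities f(education, gender), copied from the table.
JOINT = {
    "male": ["0.05", "0.25", "0.15", "0.1", "0.03", "0.02"],
    "female": ["0.2", "0.1", "0.04", "0.03", "0.02", "0.01"],
}
joint = {
    (gender, level): Fraction(p)
    for gender, row in JOINT.items()
    for level, p in zip(LEVELS, row)
}


def marginal_education() -> dict:
    """f1(x): sum the joint probabilities over gender for each education level."""
    return {lvl: sum(joint[(g, lvl)] for g in JOINT) for lvl in LEVELS}


def marginal_gender() -> dict:
    """f2(y): sum the joint probabilities over education levels for each gender."""
    return {g: sum(joint[(g, lvl)] for lvl in LEVELS) for g in JOINT}


for level, prob in marginal_education().items():
    print(f"{level:20s} {float(prob):.2f}")
print({g: float(p) for g, p in marginal_gender().items()})
```

The marginals alone do not determine the joint table: many different joint distributions share the same row and column sums.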


Independent random variables

Definition: The two random variables $X$ and $Y$ are independent if, for any two sets $A$ and $B$ of real numbers,
$$P(X \in A \text{ and } Y \in B) = P(X \in A)\,P(Y \in B)$$

In other words, if $A$ is an event whose occurrence depends only on values taken by $X$ and $B$'s occurrence depends only on values taken by $Y$, then the random variables $X$ and $Y$ are independent only if the events $A$ and $B$ are independent, for all such events $A$ and $B$.

The condition for independence can be alternatively stated in terms of the joint and marginal distribution functions of $X$ and $Y$ by letting the sets $A$ and $B$ be the intervals $(-\infty, x]$ and $(-\infty, y]$ respectively:
$$F(x, y) = F_1(x) F_2(y)$$

For discrete distributions, we simply define the sets $A$ and $B$ as the points $x$ and $y$ and require
$$f(x, y) = f_1(x) f_2(y)$$

In terms of the density functions, we say that $X$ and $Y$ are independent if it is possible to choose functions $f_1$ and $f_2$ such that the following factorization holds for $-\infty < x < \infty$ and $-\infty < y < \infty$:
$$f(x, y) = f_1(x) f_2(y)$$

Note that it is not sufficient that this factorization holds only for some values of $x$ and $y$.


Independent random variables..examples

(DeGroot, example 3): There are two independent measurements $X$ and $Y$ of rainfall at a certain location, each with the p.d.f.
$$g(x) = \begin{cases} 2x & \text{for } 0 \le x \le 1 \\ 0 & \text{otherwise} \end{cases}$$
Find the probability that $X + Y \le 1$. The joint density $4xy$ is obtained by multiplying the marginal densities because these variables are independent. The required probability of $\frac{1}{6}$ is then obtained by integrating over $y \in (0, 1 - x)$ and $x \in (0, 1)$.

How might we use a table of probabilities to determine whether two random variables are independent?

Given the following density, can we tell whether the variables $X$ and $Y$ are independent?
$$f(x, y) = \begin{cases} k e^{-(x + 2y)} & \text{for } x \ge 0 \text{ and } y \ge 0 \\ 0 & \text{otherwise} \end{cases}$$
Notice that we can factorize the joint density as the product of $g_1(x) = k e^{-x}$ and $g_2(y) = e^{-2y}$. To obtain the marginal densities of $X$ and $Y$, we multiply these functions by appropriate constants which make them integrate to unity. This gives us
$$f_1(x) = e^{-x} \text{ for } x \ge 0 \quad \text{and} \quad f_2(y) = 2e^{-2y} \text{ for } y \ge 0$$
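Both calculations in these examples can be written out explicitly. This is a sketch of the steps, with the rainfall event taken to be $X + Y \le 1$ as implied by the integration limits:

$$
\begin{aligned}
P(X + Y \le 1) &= \int_0^1 \int_0^{1-x} 4xy\,dy\,dx = \int_0^1 2x(1 - x)^2\,dx = 2\left(\frac{1}{2} - \frac{2}{3} + \frac{1}{4}\right) = \frac{1}{6} \\
1 &= \int_0^{\infty} \int_0^{\infty} k e^{-(x + 2y)}\,dy\,dx = k \cdot 1 \cdot \frac{1}{2} \;\Rightarrow\; k = 2 \\
f_1(x) f_2(y) &= e^{-x} \cdot 2e^{-2y} = 2e^{-(x + 2y)} = f(x, y)
\end{aligned}
$$

Since the product of the marginals reproduces the joint density everywhere, $X$ and $Y$ in the second example are independent.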


Dependent random variables..examples

Given the following densities, let's see why the variables $X$ and $Y$ are dependent:

1. 
$$f(x, y) = \begin{cases} x + y & \text{for } 0 < x < 1 \text{ and } 0 < y < 1 \\ 0 & \text{otherwise} \end{cases}$$
Notice that we cannot factorize the joint density as the product of a non-negative function of $x$ and another non-negative function of $y$. Computing the marginals gives us
$$f_1(x) = x + \frac{1}{2} \text{ for } 0 < x < 1 \quad \text{and} \quad f_2(y) = y + \frac{1}{2} \text{ for } 0 < y < 1$$
so the product of the marginals is not equal to the joint density.

2. Suppose we have
$$f(x, y) = \begin{cases} k x^2 y^2 & \text{for } x^2 + y^2 \le 1 \\ 0 & \text{otherwise} \end{cases}$$
In this case the possible values $X$ can take depend on $Y$ and therefore, even though the joint density can be factorized, the same factorization cannot work for all values of $(x, y)$. The probability that $X^2 \le 1$, for example, will be obtained by integrating over the entire $(-1, 1)$ interval if $Y = 0$, but if $Y = \frac{\sqrt{3}}{2}$, $X^2$ is constrained to be at most $\frac{1}{4}$.

More generally, whenever the space of positive probability density of $X$ and $Y$ is bounded by a curve that is neither a horizontal nor a vertical line, the two random variables are dependent.
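For the first density, the marginals and the failure of the factorization can be verified directly. This is a sketch; the evaluation point $x = y = \frac{1}{4}$ is an arbitrary choice used only to exhibit the inequality.

$$
\begin{aligned}
f_1(x) &= \int_0^1 (x + y)\,dy = x + \frac{1}{2}, \qquad f_2(y) = \int_0^1 (x + y)\,dx = y + \frac{1}{2} \\
f_1\left(\tfrac{1}{4}\right) f_2\left(\tfrac{1}{4}\right) &= \frac{3}{4} \cdot \frac{3}{4} = \frac{9}{16} \ne \frac{1}{2} = f\left(\tfrac{1}{4}, \tfrac{1}{4}\right)
\end{aligned}
$$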


Conditional distributions

Definition: Consider two discrete random variables $X$ and $Y$ with a joint probability function $f(x, y)$ and marginal probability functions $f_1(x)$ and $f_2(y)$. After the value $Y = y$ has been observed, we can write the probability that $X = x$ using our definition of conditional probability:
$$P(X = x \mid Y = y) = \frac{P(X = x \text{ and } Y = y)}{\Pr(Y = y)} = \frac{f(x, y)}{f_2(y)}$$

$g_1(x \mid y) = \frac{f(x, y)}{f_2(y)}$ is called the conditional probability function of $X$ given that $Y = y$. Notice that:

1. for each fixed value of $y$, $g_1(x \mid y)$ is a probability function over all possible values of $X$ because it is non-negative and
$$\sum_x g_1(x \mid y) = \frac{1}{f_2(y)} \sum_x f(x, y) = \frac{1}{f_2(y)} f_2(y) = 1$$

2. conditional probabilities are proportional to joint probabilities because they just divide these by a constant.

We cannot use the definition of conditional probability to derive the continuous conditional distributions because the probability that $Y$ takes any particular value $y$ is zero. For continuous random variables, we simply define the conditional probability density function of $X$ given $Y = y$ as
$$g_1(x \mid y) = \frac{f(x, y)}{f_2(y)} \quad \text{for } -\infty < x < \infty \text{ and } -\infty < y < \infty$$
and note that this is a valid p.d.f. because
$$\int_{-\infty}^{\infty} g_1(x \mid y)\,dx = 1$$


Deriving conditional distributions... the discrete case

For the education-gender example, we can find the distribution of educational achievement conditional on being male, the distribution of gender conditional on completing college, or any other conditional distribution we are interested in:

| gender | none | primary | middle | high | senior secondary | graduate and above | $f(\text{gender} \mid \text{graduate})$ |
|--------|------|---------|--------|------|------------------|--------------------|------|
| male   | .05  | .25     | .15    | .1   | .03              | .02                | .67  |
| female | .2   | .1      | .04    | .03  | .02              | .01                | .33  |
| $f(\text{education} \mid \text{gender} = \text{male})$ | .08 | .42 | .25 | .17 | .05 | .03 | |
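The conditional entries in this table come from dividing joint probabilities by the relevant marginal. A minimal sketch, with the table values entered by hand and illustrative function names:

```python
from fractions import Fraction

LEVELS = ["none", "primary", "middle", "high", "senior secondary", "graduate and above"]

# Joint probabilities f(education, gender), copied from the table.
JOINT = {
    "male": ["0.05", "0.25", "0.15", "0.1", "0.03", "0.02"],
    "female": ["0.2", "0.1", "0.04", "0.03", "0.02", "0.01"],
}


def conditional_education(gender: str) -> dict:
    """f(education | gender): one row of the table divided by its row total."""
    row = [Fraction(p) for p in JOINT[gender]]
    total = sum(row)
    return {lvl: p / total for lvl, p in zip(LEVELS, row)}


def conditional_gender(level: str) -> dict:
    """f(gender | education): one column of the table divided by its column total."""
    i = LEVELS.index(level)
    column = {g: Fraction(row[i]) for g, row in JOINT.items()}
    total = sum(column.values())
    return {g: p / total for g, p in column.items()}


print({lvl: round(float(p), 2) for lvl, p in conditional_education("male").items()})
print({g: round(float(p), 2) for g, p in conditional_gender("graduate and above").items()})
```

Rounded to two decimals, the output matches the last row and last column of the table.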


Deriving conditional distributions... the continuous case

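For the continuous joint distribution we've looked at before,
$$f(x, y) = \begin{cases} c x^2 y & \text{for } x^2 \le y \le 1 \\ 0 & \text{otherwise} \end{cases}$$
with $c = \frac{21}{4}$, the marginal distribution of $X$ is given by
$$f_1(x) = \int_{x^2}^{1} \frac{21}{4} x^2 y\,dy = \frac{21}{8} x^2 (1 - x^4)$$
and the conditional distribution $g_2(y \mid x) = \frac{f(x, y)}{f_1(x)}$ is
$$g_2(y \mid x) = \begin{cases} \frac{2y}{1 - x^4} & \text{for } x^2 \le y \le 1 \\ 0 & \text{otherwise} \end{cases}$$

If $X = \frac{1}{2}$, we can compute $P(Y \ge \frac{1}{4} \mid X = \frac{1}{2}) = 1$ and
$$P\left(Y \ge \tfrac{3}{4} \,\middle|\, X = \tfrac{1}{2}\right) = \int_{3/4}^{1} g_2\left(y \mid \tfrac{1}{2}\right) dy = \frac{7}{15}$$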
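The two conditional probabilities can be reproduced exactly by integrating the conditional density $g_2(y \mid x) = 2y / (1 - x^4)$ in closed form. The function name below is illustrative, and the sketch is restricted to $0 < |x| < 1$, where the marginal density of $X$ is positive.

```python
from fractions import Fraction


def cond_tail_prob(t, x) -> Fraction:
    """P(Y >= t | X = x) for g2(y|x) = 2y / (1 - x^4) on x^2 <= y <= 1."""
    t, x = Fraction(t), Fraction(x)
    if x == 0 or not -1 < x < 1:
        raise ValueError("conditional density is only defined for 0 < |x| < 1")
    lower = max(t, x ** 2)  # the density is zero below y = x^2
    if lower >= 1:
        return Fraction(0)
    # Integral of 2y / (1 - x^4) from `lower` to 1.
    return (1 - lower ** 2) / (1 - x ** 4)


half = Fraction(1, 2)
print(cond_tail_prob(Fraction(1, 4), half))  # 1
print(cond_tail_prob(Fraction(3, 4), half))  # 7/15
```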


Construction of the joint distribution

We can use conditional and marginal distributions to arrive at a joint distribution:
$$f(x, y) = g_1(x \mid y) f_2(y) = g_2(y \mid x) f_1(x) \qquad (1)$$

Notice that the conditional distribution is not defined for a value $y_0$ at which $f_2(y_0) = 0$, but this is irrelevant because at any such value $f(x, y_0) = 0$.

Example: $X$ is first chosen from a uniform distribution on $(0, 1)$ and then $Y$ is chosen from a uniform distribution on $(x, 1)$. The marginal distribution of $X$ is straightforward:
$$f_1(x) = \begin{cases} 1 & \text{for } 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$

Given a value of $X = x$, the conditional distribution is
$$g_2(y \mid x) = \begin{cases} \frac{1}{1 - x} & \text{for } x < y < 1 \\ 0 & \text{otherwise} \end{cases}$$

Using (1), the joint distribution is
$$f(x, y) = \begin{cases} \frac{1}{1 - x} & \text{for } 0 < x < y < 1 \\ 0 & \text{otherwise} \end{cases}$$
and the marginal distribution for $Y$ can now be derived as:
$$f_2(y) = \int_{-\infty}^{\infty} f(x, y)\,dx = \int_0^y \frac{1}{1 - x}\,dx = -\log(1 - y) \quad \text{for } 0 < y < 1$$
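The derived marginal of $Y$ can be checked by simulating the two-stage experiment. This is a rough Monte Carlo sketch: the seed, sample size and evaluation point $y = 0.5$ are arbitrary choices, and the closed form used for comparison is $\int_0^y -\log(1 - t)\,dt = y + (1 - y)\log(1 - y)$.

```python
import math
import random


def marginal_cdf_y(y: float) -> float:
    """P(Y <= y) implied by f2(y) = -log(1 - y), for 0 <= y < 1."""
    return y + (1.0 - y) * math.log(1.0 - y)


def simulate_prob_y_below(y: float, n: int = 200_000, seed: int = 0) -> float:
    """Draw X ~ U(0, 1), then Y ~ U(X, 1), and estimate P(Y <= y)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = rng.random()
        if rng.uniform(x, 1.0) <= y:
            hits += 1
    return hits / n


# The two numbers should agree to roughly two decimal places.
print(marginal_cdf_y(0.5), simulate_prob_y_below(0.5))
```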


Multivariate distributions

Our definitions of joint, conditional and marginal distributions can be easily extended to an arbitrary finite number of random variables. Such a distribution is now called a multivariate distribution.

The joint distribution function is defined as the function $F$ whose value at any point $(x_1, x_2, \ldots, x_n) \in \mathbb{R}^n$ is given by:
$$F(x_1, \ldots, x_n) = P(X_1 \le x_1, X_2 \le x_2, \ldots, X_n \le x_n)$$

For a discrete joint distribution, the probability function at any point $(x_1, x_2, \ldots, x_n) \in \mathbb{R}^n$ is given by:
$$f(x_1, \ldots, x_n) = P(X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n) \qquad (2)$$
and the random variables $X_1, \ldots, X_n$ have a continuous joint distribution if there is a nonnegative function $f$ defined on $\mathbb{R}^n$ such that for any subset $A \subset \mathbb{R}^n$,
$$P[(X_1, \ldots, X_n) \in A] = \int \cdots \int_A f(x_1, \ldots, x_n)\,dx_1 \ldots dx_n \qquad (3)$$

The marginal distribution of any single random variable $X_i$ can now be derived by integrating over the other variables:
$$f_1(x_1) = \int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty} f(x_1, \ldots, x_n)\,dx_2 \ldots dx_n \qquad (4)$$
and the conditional probability density function of $X_1$ given values of the other variables is:
$$g_1(x_1 \mid x_2, \ldots, x_n) = \frac{f(x_1, \ldots, x_n)}{f_0(x_2, \ldots, x_n)} \qquad (5)$$
where $f_0$ denotes the joint marginal density of $X_2, \ldots, X_n$.


Independence for the multivariate case

Independence: The $n$ random variables $X_1, \ldots, X_n$ are independent if, for any $n$ sets $A_1, A_2, \ldots, A_n$ of real numbers,
$$P(X_1 \in A_1, X_2 \in A_2, \ldots, X_n \in A_n) = P(X_1 \in A_1) P(X_2 \in A_2) \cdots P(X_n \in A_n)$$

If the joint distribution function of $X_1, \ldots, X_n$ is given by $F$ and the marginal d.f. for $X_i$ by $F_i$, it follows that $X_1, \ldots, X_n$ will be independent if and only if, for all points $(x_1, \ldots, x_n) \in \mathbb{R}^n$,
$$F(x_1, \ldots, x_n) = F_1(x_1) F_2(x_2) \cdots F_n(x_n)$$
and, if these random variables have a continuous joint distribution with joint density $f(x_1, \ldots, x_n)$:
$$f(x_1, \ldots, x_n) = f_1(x_1) f_2(x_2) \cdots f_n(x_n)$$
In the case of a discrete joint distribution the above equality holds for the probability function $f$.

Random samples: Consider a probability distribution on the real line that can be represented by either a probability function or a p.d.f. $f$. The $n$ random variables $X_1, \ldots, X_n$ form a random sample if these variables are independent and the marginal p.f. or p.d.f. of each of them is $f$. It follows that for all points $(x_1, \ldots, x_n)$, their joint p.f. or p.d.f. is given by
$$g(x_1, \ldots, x_n) = f(x_1) \cdots f(x_n)$$
The variables that form a random sample are said to be independent and identically distributed (i.i.d.) and $n$ is the sample size.


Multivariate distributions..example

Suppose we start with the following density function for a variable $X_1$:
$$f_1(x) = \begin{cases} e^{-x} & \text{for } x > 0 \\ 0 & \text{otherwise} \end{cases}$$
and are told that for any given value of $X_1 = x_1$, two other random variables $X_2$ and $X_3$ are independently and identically distributed with the following conditional p.d.f.:
$$g(t \mid x_1) = \begin{cases} x_1 e^{-x_1 t} & \text{for } t > 0 \\ 0 & \text{otherwise} \end{cases}$$
The joint conditional p.d.f. of $X_2$ and $X_3$ is now given by $g_{23}(x_2, x_3 \mid x_1) = x_1^2 e^{-x_1 (x_2 + x_3)}$ for non-negative values of $x_2, x_3$ (and zero otherwise), and the joint p.d.f. of the three random variables is given by:
$$f(x_1, x_2, x_3) = f_1(x_1)\, g_{23}(x_2, x_3 \mid x_1) = x_1^2 e^{-x_1 (1 + x_2 + x_3)}$$
for non-negative values of each of these variables. We can now obtain the marginal joint p.d.f. of $X_2$ and $X_3$ by integrating over $X_1$.
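The final integration step can be sketched as follows, using the standard integral $\int_0^{\infty} t^2 e^{-at}\,dt = \frac{2}{a^3}$ for $a > 0$ with $a = 1 + x_2 + x_3$. The symbol $f_{23}$ for the resulting marginal is notation introduced here for illustration:

$$
f_{23}(x_2, x_3) = \int_0^{\infty} x_1^2 e^{-x_1 (1 + x_2 + x_3)}\,dx_1 = \frac{2}{(1 + x_2 + x_3)^3} \quad \text{for } x_2, x_3 > 0
$$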


Distributions of functions of random variables

We'd like to derive the distribution of $Y = X^2$, knowing that $X$ has a uniform distribution on $(-1, 1)$.

- The density $f(x)$ of $X$ over this interval is $\frac{1}{2}$.
- We know further that $Y$ takes values in $[0, 1)$.
- The distribution function of $Y$ is therefore given, for $0 < y < 1$, by
$$G(y) = P(Y \le y) = P(X^2 \le y) = P(-\sqrt{y} \le X \le \sqrt{y}) = \int_{-\sqrt{y}}^{\sqrt{y}} f(x)\,dx = \sqrt{y}$$
- The density is obtained by differentiating this:
$$g(y) = \begin{cases} \frac{1}{2\sqrt{y}} & \text{for } 0 < y < 1 \\ 0 & \text{otherwise} \end{cases}$$

Note: Functions of continuous random variables need not be continuous. Consider, for example, $Y = r(X) = c$ for any continuous random variable $X$.
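A simulation offers a rough check on this derivation: if $X$ is uniform on $(-1, 1)$, the fraction of draws with $X^2 \le y$ should be close to $\sqrt{y}$. The seed, sample size and function names below are illustrative choices.

```python
import math
import random


def G(y: float) -> float:
    """Distribution function of Y = X^2 for X uniform on (-1, 1): sqrt(y) on (0, 1)."""
    if y <= 0:
        return 0.0
    return min(1.0, math.sqrt(y))


def empirical_cdf_of_square(y: float, n: int = 100_000, seed: int = 0) -> float:
    """Fraction of simulated values of X^2 that are <= y."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n) if rng.uniform(-1.0, 1.0) ** 2 <= y)
    return hits / n


for y in (0.04, 0.25, 0.81):
    # Theoretical value next to its Monte Carlo estimate.
    print(y, G(y), empirical_cdf_of_square(y))
```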


Directly deriving the density function of Y

RESULT: Let $X$ be a random variable with density $f(x)$ and $\Pr(a < X < b) = 1$. Let $Y = r(X)$ and suppose $r(x)$ is continuous and either strictly increasing or strictly decreasing over $(a, b)$. Suppose that $a < X < b$ if and only if $\alpha < Y < \beta$, and let $X = s(Y)$ be the inverse function for $\alpha < Y < \beta$. Then the p.d.f. of $Y$ is specified by the relation
$$g(y) = \begin{cases} f[s(y)] \left| \frac{ds(y)}{dy} \right| & \text{for } \alpha < y < \beta \\ 0 & \text{otherwise} \end{cases}$$

Examples:

1. Suppose $X$ represents the rate of growth of micro-organisms and we are interested in the distribution of their stock $Y$ at time $t$, where $Y = v e^{Xt}$. The distribution of $X$ is given by
$$f(x) = \begin{cases} 3(1 - x)^2 & \text{for } 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$
If $v = 10$ and $t = 5$, then as $x$ varies over the unit interval, $y$ varies over $(10, 10e^5)$ and the inverse function is $s(y) = \frac{\log(y/10)}{5}$. Therefore $\frac{ds(y)}{dy} = \frac{1}{5y}$ and using our above result we have
$$g(y) = \begin{cases} \frac{3}{5y} \left(1 - \frac{\log(y/10)}{5}\right)^2 & \text{for } 10 < y < 10e^5 \\ 0 & \text{otherwise} \end{cases}$$

2. Suppose the density function for $X$ is given by $f(x) = 3x^2$ for $x \in (0, 1)$ and $Y = 1 - X^2$. In this case $s(y) = \sqrt{1 - y}$, so $g(y) = \frac{3}{2}\sqrt{1 - y}$ for $0 < y < 1$.
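The change-of-variables result for the first example can be sanity-checked numerically: a valid density for $Y$ must integrate to one over $(10, 10e^5)$. The sketch below assumes the density of $X$ is $3(1 - x)^2$ on $(0, 1)$, which is a reading of the garbled slide text, and uses a simple midpoint rule whose step count is an arbitrary choice.

```python
import math


def g(y: float, v: float = 10.0, t: float = 5.0) -> float:
    """Density of Y = v * exp(X * t), assuming f(x) = 3(1 - x)^2 on (0, 1)."""
    if not v < y < v * math.exp(t):
        return 0.0
    s = math.log(y / v) / t  # inverse function s(y)
    ds_dy = 1.0 / (t * y)    # derivative of s(y)
    return 3.0 * (1.0 - s) ** 2 * abs(ds_dy)


def integrate_midpoint(func, a: float, b: float, n: int = 200_000) -> float:
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / n
    return h * sum(func(a + (i + 0.5) * h) for i in range(n))


total = integrate_midpoint(g, 10.0, 10.0 * math.exp(5.0))
print(total)  # should be very close to 1
```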


The Probability Integral Transformation

RESULT: Let $X$ be a continuous random variable with the distribution function $F$ and let $Y = F(X)$. Then $Y$ must be uniformly distributed on $[0, 1]$. The transformation from $X$ to $Y$ is called the probability integral transformation.

We know that the distribution function must take values between 0 and 1. If we pick any of these values, $y$, the $y$th quantile of the distribution of $X$ will be given by some number $x$ and
$$\Pr(Y \le y) = \Pr(X \le x) = F(x) = y$$
which is the distribution function of a uniform random variable.

This result helps us generate random numbers from various distributions, because it allows us to transform a sample from a uniform distribution into a sample from some other distribution, provided we can find $F^{-1}$.

Example: Suppose we want a sample from an exponential distribution. The density is $e^{-x}$, defined over all $x > 0$, and the distribution function is $1 - e^{-x}$. If we pick from a uniform between 0 and 1 and get (say) .3, we can invert the distribution function to get $x = -\log(0.7) \approx 0.357$ as an observation of an exponential random variable.
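The exponential example translates directly into an inverse-c.d.f. sampler. This is a minimal sketch for the unit-rate exponential only; the function names, seed and sample size are illustrative.

```python
import math
import random


def exponential_quantile(u: float) -> float:
    """Invert F(x) = 1 - exp(-x): solve u = F(x) for x."""
    if not 0 <= u < 1:
        raise ValueError("u must lie in [0, 1)")
    return -math.log(1.0 - u)


def sample_exponential(n: int, seed: int = 0) -> list:
    """Transform n uniform draws on [0, 1) into unit-rate exponential draws."""
    rng = random.Random(seed)
    return [exponential_quantile(rng.random()) for _ in range(n)]


print(exponential_quantile(0.3))  # equals -log(0.7), about 0.357
draws = sample_exponential(20_000)
print(sum(draws) / len(draws))    # sample mean, should be near 1
```

The same pattern works for any distribution whose quantile function $F^{-1}$ is available in closed form.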
