Converging Storage and Data Networks in the Data Center

A Dell Technical White Paper


THIS WHITE PAPER IS FOR INFORMATIONAL PURPOSES ONLY, AND MAY CONTAIN TYPOGRAPHICAL ERRORS AND TECHNICAL INACCURACIES. THE CONTENT IS PROVIDED AS IS, WITHOUT EXPRESS OR IMPLIED WARRANTIES OF ANY KIND. © 2011 Dell Inc. All rights reserved. Reproduction of this material in any manner whatsoever without the express written permission of Dell Inc. is strictly forbidden. For more information, contact Dell. Dell, the DELL logo, and the DELL badge, PowerConnect, and PowerVault are trademarks of Dell Inc. Symantec and the SYMANTEC logo are trademarks or registered trademarks of Symantec Corporation or its affiliates in the US and other countries. Microsoft, Windows, Windows Server, and Active Directory are either trademarks or registered trademarks of Microsoft Corporation in the United States and/or other countries. Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products. Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own.

September 2011


Contents

- Data Center Fabric Convergence
- Fibre Channel over Ethernet
- FCoE Switching
- FCoE CNAs
- FCoE and iSCSI
- Migration from FC to FCoE
- Conclusion

Figures

- Figure 1. Today's data center with multiple networks and with a consolidated network
- Figure 2. FCoE Ethernet Switch
- Figure 3. First phase in migrating to FCoE
- Figure 4. Second phase in migrating to FCoE
- Figure 5. Third and final phase in migrating to FCoE


Data Center Fabric Convergence

In typical large data centers, IT managers deploy separate networks for data and for storage. Today's data networks are mostly based on 1GbE, with 10GbE adoption expected to increase rapidly in 2011 and 40GbE/100GbE following soon after. Enterprise data centers primarily use Fibre Channel (FC) for storage interconnects because of its reliability, performance and lossless operation. The operational and capital costs of running multiple networks are significant: each network has its own switches, adapters, cables and management software. Servers often carry multiple 1GbE adapters and FC adapters for performance, management or reliability reasons. In addition, the power, space and cooling requirements of two networks are substantial, often exceeding the capital cost of the equipment itself. IT managers recognize that the continued cost and complexity of managing both a data network and a storage network is not a sustainable long-term solution, and they are looking for a way to consolidate data and storage traffic onto a single data center fabric. A consolidated fabric would dramatically simplify data center architectures and reduce cost. Its benefits include:

- Reduced management cost, as there would be only one fabric to manage.
- Reduced hardware cost, as there would be only one set of switches, adapters and cables to purchase.
- Simplified cabling infrastructure, as there would be only one set of cables to manage.
- Reduced space, power and cooling requirements, as far fewer switches and adapters would be needed.

Multiple data center networks exist because network and storage requirements have traditionally been different. With advances in technology and changing market dynamics, however, the needs of these two networks are converging, and standards bodies have been defining standards that support a converged fabric.

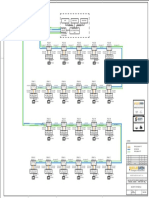

Figure 1. Today's data center with multiple networks and with a consolidated network


When the underlying requirements of storage and data networks are evaluated, Ethernet shows the most promise for meeting the requirements of a converged fabric. Ethernet is the predominant network choice for interconnecting resources in the data center: it is ubiquitous and well understood by network engineers and developers worldwide, it has withstood the test of time over more than twenty-five years of deployment, and its cost per port is low. With 10GbE being adopted rapidly and the 40GbE/100GbE standards approved, Ethernet can now meet the performance requirements of a converged fabric. New standards such as Data Center Bridging (DCB) and FCoE address packet loss, flow control and other issues, making it possible to use Ethernet as the basis for a converged fabric in the data center.

Fibre Channel over Ethernet

Fibre Channel over Ethernet (FCoE) was adopted as an ANSI standard in June 2009. FCoE was developed by the T11 committee of the International Committee for Information Technology Standards (INCITS). The FCoE specification maps Fibre Channel upper-layer protocols directly onto a bridged Ethernet network and, unlike iSCSI, does not require the Internet Protocol (IP) for forwarding. FCoE offers an evolutionary path for migrating FC SANs to an Ethernet switching fabric: it preserves Fibre Channel constructs and provides latency, security and traffic management attributes similar to those of FC, while protecting investments in FC tools, training and SAN devices (FC switches and FC-attached storage). The primary motivation for standardizing FCoE was to preserve existing investments in FC SANs while enabling data center I/O consolidation, using Ethernet as the unified switching fabric for both LAN and SAN applications.
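The statement that FCoE maps FC directly onto Ethernet, with no IP layer, is easiest to see in the frame itself. The following is a minimal Python sketch of FCoE encapsulation. It assumes the FC-BB-5 frame layout as we understand it: an Ethernet header with EtherType 0x8906, a 14-byte FCoE header (a 4-bit version, reserved bits, and a one-byte start-of-frame delimiter), the unmodified FC frame including its CRC, and then a one-byte end-of-frame delimiter followed by 3 reserved bytes. The function names, the MAC addresses, and the SOF/EOF code points used as defaults are illustrative assumptions and should be checked against the standard.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # EtherType value assigned to FCoE

# Assumed one-byte delimiter codes (class 3 "initiate" SOF and "terminate" EOF);
# verify these against FC-BB-5 before relying on them.
SOF_I3 = 0x2E
EOF_T = 0x42


def mac_bytes(mac: str) -> bytes:
    """Convert a MAC address such as '00:11:22:33:44:55' into 6 raw bytes."""
    raw = bytes(int(part, 16) for part in mac.split(":"))
    if len(raw) != 6:
        raise ValueError(f"bad MAC address: {mac}")
    return raw


def encapsulate_fc_frame(dst_mac: str, src_mac: str, fc_frame: bytes,
                         sof: int = SOF_I3, eof: int = EOF_T) -> bytes:
    """Wrap a complete FC frame (FC header + payload + FC CRC) in an FCoE frame.

    No IP or TCP header is added: the FC frame rides directly on Ethernet.
    The Ethernet FCS is omitted because NIC hardware normally appends it.
    """
    eth_header = mac_bytes(dst_mac) + mac_bytes(src_mac) + struct.pack("!H", FCOE_ETHERTYPE)
    # FCoE header: 4-bit version (0) plus reserved bits, all zero (13 bytes), then SOF.
    fcoe_header = bytes(13) + bytes([sof])
    # FCoE trailer: EOF delimiter followed by 3 reserved bytes.
    trailer = bytes([eof]) + bytes(3)
    return eth_header + fcoe_header + fc_frame + trailer


if __name__ == "__main__":
    # Placeholder FC frame: 24-byte header, small payload, 4-byte CRC (dummy bytes).
    fc_frame = bytes(24) + b"payload" + bytes(4)
    frame = encapsulate_fc_frame("0e:fc:00:01:02:03", "00:11:22:33:44:55", fc_frame)
    print(len(frame))  # → 67 (14 Ethernet + 14 FCoE header + 35 FC frame + 4 trailer)
```

For contrast, an iSCSI PDU would sit behind IP and TCP headers. Here, the only framing an FCoE switch needs to forward the frame is the Ethernet header.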
The need for I/O consolidation is especially pressing for enterprises making a major commitment to server virtualization as a strategy for server resource optimization and energy conservation. Large-scale server virtualization requires shared access to storage resources, such as that enabled by a SAN-based implementation of virtualized storage. A typical high-performance server configuration currently includes a pair of Fibre Channel host bus adapters (HBAs) and two or more GbE network interface cards (NICs), and vendors of virtual machine software often recommend six or more GbE NIC ports for high-performance servers. As the number of cores per CPU increases, the number of virtual machines per server will also increase, driving the need for significantly higher levels of both network and SAN I/O. Consolidating network and SAN I/O on 10 GbE, followed by 40 GbE and 100 GbE in the future, can greatly reduce the number of adapters required per server. For example, running FCoE over a pair of 10 Gigabit Ethernet adapter ports provides bandwidth equivalent to two 4 Gbps FC connections plus twelve GbE connections (2 × 10 Gbps = 2 × 4 Gbps + 12 × 1 Gbps = 20 Gbps). This consolidation shrinks the server's footprint, cutting the number of cables by 86% (from 14 to 2). It also reduces power and cooling requirements by eliminating the hundreds of watts consumed by 12 adapters and the corresponding switch ports. Another economic advantage of FCoE is its ability to leverage the traditionally low prices of Ethernet switch ports and adapters, driven by large manufacturing volumes and strong competition. FCoE also promises improved application management and control, with all application-related data flows tracked and analyzed using a single set of Ethernet management tools.

FCoE depends on parallel standards efforts to make Ethernet lossless, by eliminating packet drops due to buffer overflow, and to make other enhancements that equip Ethernet to serve as a unified data center switching fabric. The IEEE Data Center Bridging (DCB) and related standards efforts are still ongoing. The FCoE and DCB standards will both have to be implemented in server converged NICs and in data center switch ASICs before FCoE is ready to serve as a fully functional, standards-based extension of, and migration path for, Fibre Channel SANs in high-performance data centers.
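Why "lossless" matters can be illustrated with a toy model. The sketch below assumes a PFC-style mechanism (Priority-based Flow Control, IEEE 802.1Qbb, one of the DCB standards). When a receive buffer for the storage priority reaches an XOFF threshold, the receiver pauses that priority on the sender instead of dropping frames. It resumes the priority once the buffer drains to an XON threshold. The names `simulate` and `Result`, the one-tick signalling delay, and all numeric values are illustrative assumptions. The model ignores cable propagation delay and the other factors that real PFC headroom calculations must cover.

```python
from dataclasses import dataclass


@dataclass
class Result:
    delivered: int
    dropped: int
    max_occupancy: int


def simulate(ticks: int, arrival_rate: int, drain_rate: int, buffer_size: int,
             lossless: bool, xoff: int, xon: int) -> Result:
    """Toy model of one switch receive buffer for a single traffic priority.

    Each tick the sender offers `arrival_rate` frames (unless paused) and the
    buffer forwards up to `drain_rate` frames to a slower next hop.
    lossless=False: frames that do not fit are tail-dropped (classic Ethernet).
    lossless=True: PFC-style pause; the receiver pauses this priority once
    occupancy reaches `xoff` and un-pauses at or below `xon`. The pause only
    takes effect on the next tick, so `xoff + arrival_rate <= buffer_size`
    leaves enough headroom for the frames already on their way.
    """
    queue = 0
    paused = False
    delivered = dropped = max_occupancy = 0
    for _ in range(ticks):
        offered = 0 if (lossless and paused) else arrival_rate
        accepted = min(offered, buffer_size - queue)
        dropped += offered - accepted        # overflow is simply lost
        queue += accepted
        max_occupancy = max(max_occupancy, queue)
        sent = min(queue, drain_rate)
        queue -= sent
        delivered += sent
        if lossless:
            if queue >= xoff:
                paused = True                # ask the sender to stop this priority
            elif queue <= xon:
                paused = False               # buffer has drained; resume
    return Result(delivered, dropped, max_occupancy)


if __name__ == "__main__":
    params = dict(ticks=100, arrival_rate=10, drain_rate=6, buffer_size=40, xoff=24, xon=8)
    lossy = simulate(lossless=False, **params)
    lossless = simulate(lossless=True, **params)
    print(lossy.dropped, lossless.dropped)  # → 366 0
```

In this toy run, both configurations forward the same 600 frames, but only the paused one does so without discarding any. This is the property FCoE needs, because native FC relies on a lossless fabric (using buffer-to-buffer credits) rather than on TCP-style retransmission.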

FCoE Switching

Figure 2 shows a possible architecture for the data plane of an FCoE switch that could be built on existing Ethernet switch fabrics. The control plane (not shown in the figure) would also need to provide FCoE functionality, including support for Fabric Shortest Path First (FSPF) routing and the Name Server database. Servers would attach through DCB Ethernet ports or line cards, while connections to the FC SAN or FC disk arrays would be made through FC ports. The FC ports would include the FCoE gateway encapsulation function plus a DCB Ethernet interface to the Ethernet switch fabric. Both the DCB Ethernet ports and the FC/FCoE ports would have to be implemented with a new generation of Ethernet ASIC chipsets that support these technologies. Several IEEE study groups, the IETF and T11 are all involved in specifying standards that affect FCoE. As a result, switch vendors will likely want to see a high degree of standards maturity before committing to high-performance, DCB-capable ASICs. An architecture such as the one in Figure 2 has the potential to preserve prior investments in modular 10 GbE switches. It also offers a high degree of configuration flexibility: the same modular chassis can be configured as a traditional Ethernet switch, a DCB Ethernet switch, an FCoE switch, an FC switch, or with other combinations of port types.

Figure 2. FCoE Ethernet Switch
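On the FC-port side of this architecture, the gateway function must recover the native FC frame from each FCoE frame arriving from the Ethernet fabric. The following is a minimal Python sketch of that decapsulation step. It assumes EtherType 0x8906, an optional IEEE 802.1Q VLAN tag, a 14-byte FCoE header whose first nibble is the version and whose last byte is the SOF delimiter, a 4-byte trailer that starts with the EOF delimiter, and an Ethernet FCS already stripped by the MAC. The names `decapsulate_fcoe` and `DecapsulatedFrame` are hypothetical. A real gateway would also have to handle control traffic, check the FC CRC, and map the one-byte SOF/EOF codes back to native FC ordered sets.

```python
import struct
from typing import NamedTuple, Optional

FCOE_ETHERTYPE = 0x8906
VLAN_TPID = 0x8100        # IEEE 802.1Q tag protocol identifier
FCOE_HEADER_LEN = 14      # version + reserved bits (13 bytes) + SOF (1 byte)
FCOE_TRAILER_LEN = 4      # EOF (1 byte) + reserved (3 bytes)


class DecapsulatedFrame(NamedTuple):
    vlan_id: Optional[int]
    sof: int
    fc_frame: bytes       # FC header + payload + FC CRC, ready for the native FC side
    eof: int


def decapsulate_fcoe(eth_frame: bytes) -> DecapsulatedFrame:
    """Extract the FC frame from an FCoE Ethernet frame whose FCS has been removed."""
    if len(eth_frame) < 14:
        raise ValueError("truncated Ethernet header")
    offset = 12                                   # skip destination + source MACs
    (ethertype,) = struct.unpack_from("!H", eth_frame, offset)
    vlan_id = None
    if ethertype == VLAN_TPID and len(eth_frame) >= 18:
        (tci,) = struct.unpack_from("!H", eth_frame, offset + 2)
        vlan_id = tci & 0x0FFF                    # low 12 bits carry the VLAN ID
        offset += 4
        (ethertype,) = struct.unpack_from("!H", eth_frame, offset)
    if ethertype != FCOE_ETHERTYPE:
        raise ValueError(f"not an FCoE frame (EtherType 0x{ethertype:04x})")
    header_start = offset + 2
    if len(eth_frame) <= header_start + FCOE_HEADER_LEN + FCOE_TRAILER_LEN:
        raise ValueError("frame too short to carry an FC frame")
    version = eth_frame[header_start] >> 4        # top 4 bits of the FCoE header
    if version != 0:
        raise ValueError(f"unsupported FCoE version {version}")
    body_start = header_start + FCOE_HEADER_LEN
    body_end = len(eth_frame) - FCOE_TRAILER_LEN
    return DecapsulatedFrame(
        vlan_id=vlan_id,
        sof=eth_frame[body_start - 1],            # last byte of the FCoE header
        fc_frame=eth_frame[body_start:body_end],
        eof=eth_frame[body_end],                  # first byte of the trailer
    )
```

The VLAN handling reflects the fact that FCoE traffic is commonly carried on a dedicated, priority-tagged VLAN. The parser accepts both tagged and untagged frames so that it can sit behind either kind of port.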

FCoE CNAs

Servers connected to the FCoE switched network will need Converged Network Adapters (CNAs) that support both DCB Ethernet and the Fibre Channel upper-layer N_Port functionality. The common product implementation is a single- or dual-port 10 GbE intelligent NIC that includes hardware-based offload and acceleration for both TCP applications and FCoE. Protocol offload avoids per-packet processing in software, improving performance while reducing CPU utilization and latency. A number of vendors have already announced products in this category.


Initial products such as these will need to be based on pre-standard implementations of DCB Ethernet functionality, because those standards have not yet been ratified. As the standards are finalized, first-generation CNAs may require some modifications to comply with them.
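Because a CNA carries LAN and SAN traffic on the same port, its receive path must hand each frame to the correct protocol stack. The sketch below assumes that dispatch is based on the EtherType field. It sends 0x8906 (FCoE data frames) to the FC side, and 0x8914 (the FCoE Initialization Protocol, FIP, which is used for discovery and fabric login) to a control handler. Everything else, for example IPv4 at 0x0800, goes to the ordinary NIC and TCP/IP path. The class and handler names are hypothetical placeholders for work that a real CNA typically performs in hardware or firmware.

```python
import struct
from collections import Counter
from typing import Callable, Dict

ETHERTYPE_FCOE = 0x8906   # FCoE data frames
ETHERTYPE_FIP = 0x8914    # FCoE Initialization Protocol (discovery, fabric login)
ETHERTYPE_VLAN = 0x8100   # IEEE 802.1Q tag


def ethertype_of(frame: bytes) -> int:
    """Return the EtherType, looking past a single 802.1Q VLAN tag if present."""
    (etype,) = struct.unpack_from("!H", frame, 12)
    if etype == ETHERTYPE_VLAN:
        (etype,) = struct.unpack_from("!H", frame, 16)
    return etype


class ConvergedReceivePath:
    """Toy receive-side demultiplexer for a hypothetical CNA."""

    def __init__(self) -> None:
        self.counts = Counter()
        self.handlers: Dict[int, Callable[[bytes], str]] = {
            ETHERTYPE_FCOE: self._to_fc_stack,
            ETHERTYPE_FIP: self._to_fip,
        }

    def _to_fc_stack(self, frame: bytes) -> str:
        # Storage traffic: FC N_Port / SCSI processing, offloaded in CNA hardware.
        return "fc"

    def _to_fip(self, frame: bytes) -> str:
        # Control traffic used to discover and log in to the FCoE switch.
        return "fip"

    def _to_nic(self, frame: bytes) -> str:
        # Everything else: the ordinary Ethernet / TCP/IP path (TCP offload on a CNA).
        return "nic"

    def receive(self, frame: bytes) -> str:
        """Dispatch one received frame by EtherType and count where it went."""
        handler = self.handlers.get(ethertype_of(frame), self._to_nic)
        path = handler(frame)
        self.counts[path] += 1
        return path
```

Keeping the dispatch table separate from the handlers mirrors the converged design: a single port and a single classification step feed two independent protocol stacks.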

FCoE and iSCSI

iSCSI was ratified by the IETF as an Internet standard in 2003, providing a low-cost, IP-based protocol for connecting hosts to block-level storage over existing or dedicated IP networks. It was developed to address the need for a lower-cost alternative to Fibre Channel. iSCSI over Gigabit Ethernet has proven popular with small and medium-sized businesses (SMBs) but has not been able to displace FC because of several issues. These include the performance limits of 1 GbE, the lack of lossless Ethernet packet delivery, and the lack of administration and management tools equivalent in functionality to those of FC. iSCSI over 10 GbE, combined with intelligent NICs that offload TCP/IP and iSCSI processing from the host CPU, can solve the performance issue. However, this solution has not yet been widely adopted because of the relatively high cost of 10 GbE switch ports and 10 GbE intelligent iSCSI NICs. By the time FCoE is a mature technology, the economics of 10 GbE iSCSI are likely to be much more favorable. The emergence of DCB Ethernet for data center applications will benefit iSCSI as well as FCoE. Therefore, as 10 GbE iSCSI and FCoE continue to mature, iSCSI can be expected to become considerably more competitive with both FC and FCoE. Over the same time frame, virtually all enterprises with data centers are likely to adopt one of these three SAN technologies as server virtualization becomes ubiquitous. Data centers with considerable prior investments in FC will naturally gravitate toward FCoE. New adopters of SAN technology, by contrast, will want to weigh the relative merits of FCoE and iSCSI over 10 GbE at the time of their purchase decision.

Migration from FC to FCoE

The success of FCoE depends on separate standardization efforts in three standards bodies, as well as on an extensive ecosystem of vendors in the storage, adapter, switch and semiconductor markets. While the FCoE aspects of the standards were driven with an apparent sense of urgency, the Ethernet standards process is considerably slower. This is due to the open nature of IEEE standards requirements and the sheer size and diversity of the Ethernet vendor community. As a result, the FC aspects of FCoE were standardized well in advance of the full suite of DCB Ethernet enhancements described earlier.

Adoption strategies for FCoE vary with the situation. New data centers can start from scratch without having to replace existing infrastructure; in this case, customers should plan an end-to-end FCoE architecture, from the server to the storage device. For most data centers, however, FCoE can be adopted in phases. A phased approach minimizes the disruption of introducing new technology into the data center, reduces the likelihood of problems, and spreads the cost of implementation over a longer period.

The first phase is to implement FCoE between the servers and the top-of-rack (ToR) switch (see Figure 3). Customers replace the network cards and FC cards in servers with Converged Network Adapters that carry both IP traffic and storage traffic over Ethernet, and they replace the ToR network switches with FCoE ToR switches. An FCoE ToR switch has multiple Ethernet ports to connect to servers, 10GbE ports to connect to the core network, and FC ports to connect to the storage network. These changes eliminate FC switches, multiple I/O cards and the associated cabling, resulting in lower capital expenditure, lower operating cost and simpler management.


Figure 3. First phase in migrating to FCoE

The second phase replaces the FC switches at the aggregation layer with FCoE switches (see Figure 4). This is a key step in the migration process. If your architecture does not use a three-tier model, this phase can be skipped.

Figure 4. Second phase in migrating to FCoE


The final phase is to replace the core Ethernet data switch and the core FC switch with a unified FCoE switch that supports both FCoE and Ethernet connectivity (see Figure 5). This completes the end-to-end converged network. New storage devices with FCoE interfaces can be integrated as storage demands increase. This three-phase migration strategy minimizes disruption to data center operations, reduces implementation risk and spreads the cost of migration over a long period.

Figure 5. Third and final phase in migrating to FCoE
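The phased migration described above can be summarized as a small planning model. This is a hypothetical Python sketch, not a Dell tool or published procedure; the tier names, the `Phase` record and the `plan_migration` helper are illustrative assumptions. The model records which tiers each phase converts. It skips the aggregation-layer phase for networks that are not built on a three-tier model, and it reports which tiers still run separate LAN and SAN equipment after each applied phase. Storage arrays are not modeled, because the migration text lets existing FC storage remain in place while FCoE devices are added as demand grows.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Phase:
    name: str
    converts: Tuple[str, ...]        # tiers moved onto FCoE/DCB Ethernet in this phase
    needs_three_tier: bool = False   # phase only applies when an aggregation tier exists


# Hypothetical encoding of the three phases described in the text.
PHASES = (
    Phase("Phase 1: CNAs in servers, FCoE top-of-rack switches", ("server", "access")),
    Phase("Phase 2: FCoE switches at the aggregation layer", ("aggregation",), needs_three_tier=True),
    Phase("Phase 3: unified FCoE/Ethernet core switch", ("core",)),
)


def plan_migration(three_tier: bool) -> List[Tuple[str, List[str]]]:
    """Return (phase name, tiers still running separate LAN and SAN gear) for each applied phase."""
    separate = ["server", "access", "aggregation", "core"] if three_tier else ["server", "access", "core"]
    steps = []
    for phase in PHASES:
        if phase.needs_three_tier and not three_tier:
            continue                 # no aggregation layer, so phase 2 is skipped
        separate = [tier for tier in separate if tier not in phase.converts]
        steps.append((phase.name, separate))
    return steps


if __name__ == "__main__":
    for three_tier in (True, False):
        print("three-tier network" if three_tier else "two-tier network")
        for name, remaining in plan_migration(three_tier):
            print(f"  {name}: still separate = {remaining or 'none (fully converged)'}")
```

Modeling phases as data rather than as hard-coded steps makes the skip rule for two-tier networks explicit. It also makes it easy to see that the network is fully converged only after the core switch is replaced.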

Conclusion

Fibre Channel over Ethernet promises to play a major role in preserving prior investments in Fibre Channel technology and storage resources. At the same time, it drives I/O consolidation for servers and eventually sets the stage for the complete unification of data center switching fabrics. However, given the mission-critical nature of data center storage networking, FCoE adoption is expected to be a very gradual and orderly process. That process will be gated largely by the maturity of the relevant standards and by the availability of cost-effective products that fully implement them.
