Properties of Measurement Scales

Uploaded by Hema Sharist

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

Properties of Measurement Scales

Hochgeladen von

Hema SharistCopyright:

Verfügbare Formate

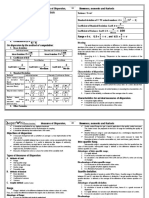

Each scale of measurement satisfies one or more of the following properties of measurement.

Identity. Each value on the measurement scale has a unique meaning.

Magnitude. Values on the measurement scale have an ordered relationship to one another: some values are larger and some are smaller.

Equal intervals. Units along the scale are equal to one another. For example, the difference between 1 and 2 is equal to the difference between 19 and 20.

Absolute zero. The scale has a true zero point, below which no values exist.

Nominal Scale of Measurement

The nominal scale of measurement satisfies only the identity property of measurement. Values assigned to variables represent a descriptive category but have no inherent numerical value with respect to magnitude. Gender is an example of a variable that is measured on a nominal scale. Individuals may be classified as "male" or "female", but neither value represents more or less "gender" than the other. Religion and political affiliation are other examples of variables that are normally measured on a nominal scale.

Ordinal Scale of Measurement

The ordinal scale has the properties of both identity and magnitude. Each value on the ordinal scale has a unique meaning, and it has an ordered relationship to every other value on the scale. An example of an ordinal scale in action would be the results of a horse race, reported as "win", "place", and "show". We know the rank order in which the horses finished the race: the horse that won finished ahead of the horse that placed, and the horse that placed finished ahead of the horse that showed. However, we cannot tell from this ordinal scale whether it was a close race or whether the winning horse won by a mile.

Interval Scale of Measurement

The interval scale of measurement has the properties of identity, magnitude, and equal intervals. A classic example of an interval scale is the Fahrenheit scale for measuring temperature. The scale is made up of equal temperature units, so the difference between 40 and 50 degrees Fahrenheit is equal to the difference between 50 and 60 degrees Fahrenheit. With an interval scale, you know not only whether different values are bigger or smaller, but also how much bigger or smaller they are. For example, suppose it is 60 degrees Fahrenheit on Monday and 70 degrees on Tuesday. You know not only that it was warmer on Tuesday, but also that it was 10 degrees warmer. However, the Fahrenheit scale has no absolute zero (0 degrees Fahrenheit does not mean an absence of temperature), so ratios are not meaningful: 80 degrees is not "twice as hot" as 40 degrees.
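Changing units is a quick way to see what an interval scale does and does not support. The minimal Python sketch below converts the Fahrenheit readings from the example to Celsius (the helper `f_to_c` is our own illustrative name): the two equal Fahrenheit intervals stay equal after conversion, but the ratio of two readings changes with the units, because the zero point of each temperature scale is arbitrary.

```python
def f_to_c(f: float) -> float:
    """Convert a Fahrenheit reading to Celsius (a linear change of units)."""
    return (f - 32) * 5 / 9

# Equal intervals: 40->50 F and 50->60 F are equal, and stay equal in Celsius.
gap_1 = f_to_c(50) - f_to_c(40)
gap_2 = f_to_c(60) - f_to_c(50)
print(f"40->50 F = {gap_1:.2f} C, 50->60 F = {gap_2:.2f} C")
# 40->50 F = 5.56 C, 50->60 F = 5.56 C

# No absolute zero: the ratio of two readings depends on the units chosen,
# so "70 F is 1.17 times as hot as 60 F" is not a meaningful statement.
ratio_f = 70 / 60
ratio_c = f_to_c(70) / f_to_c(60)
print(f"70/60 in F = {ratio_f:.3f}, same readings in C = {ratio_c:.3f}")
# 70/60 in F = 1.167, same readings in C = 1.357
```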

Ratio Scale of Measurement

The ratio scale of measurement satisfies all four of the properties of measurement: identity, magnitude, equal intervals, and absolute zero. The weight of an object is an example of a ratio scale. Each value on the weight scale has a unique meaning, weights can be rank ordered, units along the weight scale are equal to one another, and there is an absolute zero. Absolute zero is a property of the weight scale because a weight of zero means no weight at all, and no object can have a negative weight. Because of this true zero, ratios are meaningful: an object that weighs 20 kg is twice as heavy as one that weighs 10 kg.
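The properties satisfied by each scale, as described above, can be summarized as a small lookup table. This Python sketch is illustrative only: the `Scale` enum, the `PROPERTIES` mapping, and the `meaningful_comparisons` helper are hypothetical names, and the comparison labels are our paraphrase of what each property allows.

```python
from enum import Enum

class Scale(Enum):
    NOMINAL = "nominal"
    ORDINAL = "ordinal"
    INTERVAL = "interval"
    RATIO = "ratio"

# Properties each scale satisfies; each level adds one property to the last.
PROPERTIES = {
    Scale.NOMINAL: {"identity"},
    Scale.ORDINAL: {"identity", "magnitude"},
    Scale.INTERVAL: {"identity", "magnitude", "equal intervals"},
    Scale.RATIO: {"identity", "magnitude", "equal intervals", "absolute zero"},
}

def meaningful_comparisons(scale: Scale) -> list[str]:
    """List the comparisons between two values that the scale supports."""
    props = PROPERTIES[scale]
    comparisons = ["same or different"]  # identity
    if "magnitude" in props:
        comparisons.append("larger or smaller")
    if "equal intervals" in props:
        comparisons.append("how much larger (difference)")
    if "absolute zero" in props:
        comparisons.append("how many times larger (ratio)")
    return comparisons

# Example variables from the text
examples = {
    "religion": Scale.NOMINAL,
    "horse race finish": Scale.ORDINAL,
    "temperature (F)": Scale.INTERVAL,
    "weight": Scale.RATIO,
}
for variable, scale in examples.items():
    print(f"{variable:18} {scale.value:9} {meaningful_comparisons(scale)}")
```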

Group Statistics Box

Take a look at the first box in your output file, called Group Statistics. In the first column, under the variable name IVSUGAR, you will see the numbers 1 and 2. These are the numbers that we chose to represent our two IV conditions: sugar (1) and no sugar (2). Reading across each row gives you descriptive statistics for that condition, including the number of participants (N).

Example

In the Group Statistics box, the mean for condition 1 (sugar) is 4.20. The mean for condition 2 (no sugar) is 2.20. The standard deviation for condition 1 is 1.30 and for condition 2, 0.84. The number of participants in each condition (N) is 5.
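The Group Statistics values can be reproduced with Python's standard `statistics` module. The scores below are hypothetical, chosen only so that the summary values match the example (the original data are not given). Note that `statistics.stdev` uses the sample (n - 1) formula, which is the standard deviation SPSS reports.

```python
import statistics

# Hypothetical recall scores (words remembered); NOT the original study data.
scores = {
    1: [3, 3, 4, 5, 6],  # condition 1: sugar
    2: [1, 2, 2, 3, 3],  # condition 2: no sugar
}

for condition, values in scores.items():
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD, n - 1 in the denominator
    print(f"IVSUGAR={condition}: N={n}, Mean={mean:.2f}, Std. Deviation={sd:.2f}")
# IVSUGAR=1: N=5, Mean=4.20, Std. Deviation=1.30
# IVSUGAR=2: N=5, Mean=2.20, Std. Deviation=0.84
```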

Why look at Group Statistics

Some students wonder why we look at this box, since we are doing a t-test and this box does not give us the results of that test. We look at it because it gives us important and relevant information.

Example

We can see how many data points were entered for each condition. If we know that we had 5 participants per condition in our experiment, but this printout shows N = 4 for condition 1, that would indicate that we had not entered all of the participant data into our data file. The condition means are also very important. They show us the size of the difference between conditions and which group has the higher mean. For example, the mean for condition 1 (4.20) is almost twice that of condition 2 (2.20): participants in the sugar condition remembered nearly twice as many words as participants in the no sugar condition.
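Both checks in this paragraph are easy to automate. The sketch below defines a hypothetical helper, `check_group_sizes`, that flags any condition whose N differs from the number of participants the design called for, and then does the arithmetic behind "almost twice" (4.20 / 2.20). The data are made up to show the missing-participant case.

```python
def check_group_sizes(scores: dict[int, list[float]], expected_n: int) -> list[str]:
    """Return one warning per condition whose N differs from the design."""
    return [
        f"condition {cond}: N = {len(values)}, expected {expected_n}; check data entry"
        for cond, values in scores.items()
        if len(values) != expected_n
    ]

# Hypothetical data: one participant's score was never entered for condition 1
scores = {1: [3, 3, 4, 5], 2: [1, 2, 2, 3, 3]}
for warning in check_group_sizes(scores, expected_n=5):
    print(warning)  # condition 1: N = 4, expected 5; check data entry

# How much larger is the sugar mean than the no sugar mean?
ratio = 4.20 / 2.20
print(f"{ratio:.2f}")  # 1.91, i.e. almost twice
```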

Independent Samples Test Box

This is the next box you will look at. At first glance, it contains a lot of information, which can feel intimidating. But don't worry: you only have to read half of the information in this box, either the top row or the bottom row.

Levene's Test for Equality of Variances

To find out which row to read from, look at the large column labeled Levene's Test for Equality of Variances. This test determines whether the two conditions have about the same or different amounts of variability in their scores. You will see two smaller columns labeled F and Sig. Look in the Sig. column. It will have one value, and you will use this value to determine which row to read from. In this example, the value in the Sig. column is 0.26 (when rounded).
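To make "same or different amounts of variability" concrete, here is a minimal, stdlib-only Python sketch of the classic mean-centered Levene's test for two groups: take each score's absolute distance from its own condition mean, then run a one-way ANOVA on those distances. To our understanding this mean-based form is what SPSS reports in this box, but treat that as an assumption. The function names are our own, and `t_two_tailed_p` is a hypothetical helper that integrates the t density with Simpson's rule (with one numerator degree of freedom, F equals t squared, so it also gives the F p-value). The scores are hypothetical, chosen to match the Group Statistics example; with them, Sig. comes out at about 0.26.

```python
import math
import statistics

def t_two_tailed_p(t: float, df: float, steps: int = 10_000) -> float:
    """Two-tailed p-value for Student's t, via Simpson's rule on the t density."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x: float) -> float:
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(a)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3  # P(0 < T < |t|)
    return min(1.0, max(0.0, 1.0 - 2.0 * area))


def levene_two_groups(a: list[float], b: list[float]) -> tuple[float, float]:
    """Mean-centered Levene's test for two groups; returns (F, Sig.)."""
    # Step 1: absolute deviation of each score from its own group mean
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    za = [abs(x - mean_a) for x in a]
    zb = [abs(x - mean_b) for x in b]
    # Step 2: one-way ANOVA on the deviations (df1 = 1, df2 = N - 2)
    za_bar, zb_bar = statistics.mean(za), statistics.mean(zb)
    grand = statistics.mean(za + zb)
    ss_between = len(za) * (za_bar - grand) ** 2 + len(zb) * (zb_bar - grand) ** 2
    ss_within = (sum((z - za_bar) ** 2 for z in za)
                 + sum((z - zb_bar) ** 2 for z in zb))
    df2 = len(a) + len(b) - 2
    f = ss_between / (ss_within / df2)  # assumes some spread within the groups
    # With df1 = 1, P(F > f) equals the two-tailed t p-value at sqrt(f)
    return f, t_two_tailed_p(math.sqrt(f), df2)


# Hypothetical recall scores matching the Group Statistics example
sugar = [3, 3, 4, 5, 6]
no_sugar = [1, 2, 2, 3, 3]
f, sig = levene_two_groups(sugar, no_sugar)
print(f"F = {f:.2f}, Sig. = {sig:.2f}")  # F = 1.49, Sig. = 0.26
row = "top" if sig > 0.05 else "bottom"
print(f"read from the {row} row")
```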

If the Sig. Value is greater than .05

Read from the top row. A value greater than .05 means that the variability in your two conditions is about the same: the scores in one condition do not vary much more than the scores in the other condition. Put scientifically, the variances of the two conditions are not significantly different. This is the outcome you want, because the top row ("Equal variances assumed") uses the standard t-test, which assumes equal variances. In this example, the Sig. value is greater than .05, so we read from the top row.

If the Sig. Value is less than or equal to .05

Read from the bottom row. A value less than or equal to .05 means that the variability in your two conditions is not the same: the scores in one condition vary more than the scores in the other condition. Put scientifically, the variances of the two conditions are significantly different. This is a problem for the standard t-test, but SPSS takes it into account by giving you slightly different results in the bottom row ("Equal variances not assumed"), which uses a version of the t-test with adjusted degrees of freedom. If the Sig. value in this example had been less than or equal to .05, we would have read from the bottom row.
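The two rows differ only in how the standard error and degrees of freedom are computed. In the stdlib-only Python sketch below (function names are our own; the scores are hypothetical values matching the Group Statistics example), the top row is the pooled-variance Student's t-test and the bottom row is Welch's t-test with Welch-Satterthwaite degrees of freedom, which corresponds to what SPSS reports as "Equal variances not assumed". The helper `t_two_tailed_p` is a hypothetical numerical integration of the t density, not an SPSS function.

```python
import math
import statistics

def t_two_tailed_p(t: float, df: float, steps: int = 10_000) -> float:
    """Two-tailed p-value for Student's t, via Simpson's rule on the t density."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x: float) -> float:
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(a)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return min(1.0, max(0.0, 1.0 - 2.0 * total * h / 3))


def independent_t(a: list[float], b: list[float], equal_var: bool) -> tuple[float, float, float]:
    """One row of the Independent Samples Test box: (t, df, Sig. (2-tailed))."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances
    diff = statistics.mean(a) - statistics.mean(b)
    if equal_var:
        # Top row: pooled variance, df = na + nb - 2
        pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
        se = math.sqrt(pooled * (1 / na + 1 / nb))
        df = na + nb - 2
    else:
        # Bottom row: Welch's t-test, Welch-Satterthwaite df (usually not an integer)
        sa, sb = va / na, vb / nb
        se = math.sqrt(sa + sb)
        df = (sa + sb) ** 2 / (sa ** 2 / (na - 1) + sb ** 2 / (nb - 1))
    t = diff / se
    return t, df, t_two_tailed_p(t, df)


# Hypothetical scores matching the Group Statistics example
sugar = [3, 3, 4, 5, 6]
no_sugar = [1, 2, 2, 3, 3]
for label, equal_var in (("top (equal variances assumed)", True),
                         ("bottom (equal variances not assumed)", False)):
    t, df, sig = independent_t(sugar, no_sugar, equal_var)
    print(f"{label}: t = {t:.3f}, df = {df:.2f}, Sig. (2-tailed) = {sig:.3f}")
```

With these hypothetical scores the top row gives t of about 2.89 on 8 degrees of freedom and a Sig. (2-tailed) of about 0.02. The bottom row has the same t here (the groups are the same size) but fewer degrees of freedom, so its Sig. value is slightly larger.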

So we've got a row

Now that we have a row to read from, it is time to look at the results for our T-test. These results will tell us if the Means for the two groups were statistically different (significantly different) or if they were relatively the same.

Sig (2-Tailed) value

This value will tell you if the two condition Means are statistically different. Make sure to read from the appropriate row. In this example, the Sig (2-Tailed) value is 0.02. Recall that we have determined that it is best to read from the top row.

If the Sig (2-Tailed) value is greater than .05

You can conclude that there is no statistically significant difference between your two conditions. The difference between the condition Means could plausibly be due to chance, so you do not have evidence that it was caused by the IV manipulation.

If the Sig (2-Tailed) value is less than or equal to .05

You can conclude that there is a statistically significant difference between your two conditions. The difference between the condition Means is not likely to be due to chance and is probably due to the IV manipulation.
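The decision rule in the two paragraphs above can be written as a tiny function. This is a sketch: `interpret_sig` is a hypothetical name, and the .05 cutoff is the conventional significance level used throughout this walk-through. Note that a value of exactly .05 counts as significant, matching the "less than or equal to" wording.

```python
ALPHA = 0.05  # conventional significance level used in this walk-through

def interpret_sig(sig: float, alpha: float = ALPHA) -> str:
    """Turn a Sig. (2-tailed) value into the conclusion described above."""
    if sig <= alpha:
        return "statistically significant: difference probably due to the IV"
    return "not statistically significant: difference could be due to chance"

print(interpret_sig(0.02))  # the Sig. (2-tailed) value in this example
```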

Our Example

The Sig. (2-Tailed) value in our example is 0.02. This value is less than .05. Because of this, we can conclude that there is a statistically significant difference between the mean number of words recalled for the sugar and no sugar conditions. Since our Group Statistics box revealed that the Mean for the sugar condition was greater than the Mean for the no sugar condition, we can conclude that participants in the sugar condition were able to recall significantly more words than participants in the no sugar condition.

What it does: The Paired Samples T Test compares the means of two variables measured on the same cases. It computes the difference between the two variables for each case and tests whether the average difference is significantly different from zero.

Where to find it: Under the Analyze menu, choose Compare Means, then choose Paired Samples T Test. Click on both variables you wish to compare, then move the pair of selected variables into the Paired Variables box.

Assumption: The differences between the two variables should be approximately normally distributed. You can check this with a Q-Q plot of the differences.

Hypotheses:
Null: There is no difference between the population means of the two variables (the mean difference is zero).
Alternate: There is a difference between the population means of the two variables.

SPSS Output

Following is sample output of a paired samples T test. We compared the mean test scores before (pre-test) and after (post-test) the subjects completed a test preparation course. We want to see whether our test preparation course improved people's scores on the test. First, we see the descriptive statistics for both variables.

The post-test mean scores are higher. Next, we see the correlation between the two variables.

There is a strong positive correlation. People who did well on the pre-test also did well on the post-test. Finally, we see the results of the Paired Samples T Test. Remember, this test is based on the difference between the two variables. Under "Paired Differences" we see the descriptive statistics for the difference between the two variables.

To the right of the Paired Differences, we see the T, degrees of freedom, and significance.

The t value is -2.171, with 11 degrees of freedom, and the significance (2-tailed) is .053. The t value is negative because the differences were computed as pre-test minus post-test, and the post-test scores were higher. If the significance value is less than or equal to .05, there is a significant difference. If the significance value is greater than .05, there is no significant difference. Here, the significance value is close to .05, but the difference is not statistically significant. We cannot conclude that pre- and post-test scores differ, so we have no evidence that our test preparation course helped!
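The Paired Samples T Test reduces to a one-sample t-test on the per-case differences: t is the mean difference divided by (standard deviation of the differences / square root of n), with n - 1 degrees of freedom. The stdlib-only Python sketch below uses our own function names, a hypothetical numerical t-distribution helper (Simpson's rule on the t density), and a small hypothetical data set; the last line checks that the reported t = -2.171 with 11 degrees of freedom corresponds to a two-tailed significance of about .053.

```python
import math
import statistics

def t_two_tailed_p(t: float, df: float, steps: int = 10_000) -> float:
    """Two-tailed p-value for Student's t, via Simpson's rule on the t density."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x: float) -> float:
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(a)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return min(1.0, max(0.0, 1.0 - 2.0 * total * h / 3))


def paired_t(first: list[float], second: list[float]) -> tuple[float, int, float]:
    """Paired samples t-test on first - second; returns (t, df, Sig. (2-tailed))."""
    if len(first) != len(second):
        raise ValueError("every case needs a value on both variables")
    diffs = [x - y for x, y in zip(first, second)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / se
    return t, n - 1, t_two_tailed_p(t, n - 1)


# Hypothetical pre/post scores for four people (not the data from the example)
pre = [10, 12, 9, 11]
post = [12, 13, 11, 12]
t, df, sig = paired_t(pre, post)
print(f"t = {t:.3f}, df = {df}, Sig. (2-tailed) = {sig:.3f}")

# Check the reported result: t = -2.171 with 11 df
print(f"{t_two_tailed_p(-2.171, 11):.3f}")  # 0.053, just above .05
```

Because the differences are taken as first minus second, listing the pre-test first gives a negative t whenever post-test scores are higher, as in the example.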
