110

Uploaded by Eleunamme Zx

1.4 Describe the differences between symmetric and asymmetric multiprocessing. What are three advantages and one disadvantage of multiprocessor systems?

Answer: Symmetric multiprocessing treats all processors as equals, and I/O can be processed on any CPU. Asymmetric multiprocessing has one master CPU, and the remaining CPUs are slaves. The master distributes tasks among the slaves, and I/O is usually done by the master only. Multiprocessors can save money by not duplicating power supplies, housings, and peripherals. They can execute programs more quickly and can have increased reliability. They are also more complex in both hardware and software than uniprocessor systems.

1.5 Distinguish between the client-server and peer-to-peer models of distributed systems.

Answer: The client-server model firmly distinguishes the roles of the client and server. Under this model, the client requests services that are provided by the server. The peer-to-peer model doesn't have such strict roles. In fact, all nodes in the system are considered peers and thus may act as either clients or servers, or both. A node may request a service from another peer, or the node may in fact provide such a service to other peers in the system.

For example, let's consider a system of nodes that share cooking recipes. Under the client-server model, all recipes are stored with the server. If a client wishes to access a recipe, it must request the recipe from the specified server. Using the peer-to-peer model, a peer node could ask other peer nodes for the specified recipe. The node (or perhaps nodes) with the requested recipe could provide it to the requesting node. Notice how each peer may act as both a client (it may request recipes) and as a server (it may provide recipes).

1.6 Consider a computing cluster consisting of two nodes running a database. Describe two ways in which the cluster software can manage access to the data on the disk. Discuss the benefits and disadvantages of each.
Answer: Consider the following two alternatives: asymmetric clustering and parallel clustering. With asymmetric clustering, one host runs the database application while the other host simply monitors it. If the server fails, the monitoring host becomes the active server. This is appropriate for providing redundancy. However, it does not utilize the potential processing power of both hosts. With parallel clustering, the database application can run in parallel on both hosts. The difficulty in implementing parallel clusters is providing some form of distributed locking mechanism for files on the shared disk.

1.9 Give two reasons why caches are useful. What problems do they solve? What problems do they cause? If a cache can be made as large as the device for which it is caching (for instance, a cache as large as a disk), why not make it that large and eliminate the device?

Answer: Caches are useful when two or more components need to exchange data, and the components perform transfers at differing speeds. Caches solve the transfer problem by providing a buffer of intermediate speed between the components. If the fast device finds the data it needs in the cache, it need not wait for the slower device. The data in the cache must be kept consistent with the data in the components. If a component has a data value change, and the datum is also in the cache, the cache must also be updated. This is especially a problem on multiprocessor systems, where more than one process may be accessing a datum. A component may be eliminated by an equal-sized cache, but only if: (a) the cache and the component have equivalent state-saving capacity (that is, if the component retains its data when electricity is removed, the cache must retain data as well), and (b) the cache is affordable, because faster storage tends to be more expensive.
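The consistency requirement in the answer to 1.9 can be illustrated with a small C sketch. It models a slow component as an array and puts a direct-mapped, write-through cache in front of it. The names `CachedStore`, `cs_init`, `cs_read`, and `cs_write`, the sizes, and the write-through policy are all assumptions chosen for illustration; the exercise does not prescribe any of them.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define STORE_SIZE 64   /* size of the slow component (hypothetical) */
#define CACHE_LINES 8   /* the cache is deliberately smaller than the store */

typedef struct {
    int store[STORE_SIZE];           /* slow component, e.g. a disk */
    int cached_value[CACHE_LINES];   /* fast copies of some store entries */
    size_t cached_addr[CACHE_LINES]; /* which address each line holds */
    bool valid[CACHE_LINES];
    unsigned hits, misses;
} CachedStore;

void cs_init(CachedStore *cs) {
    for (size_t i = 0; i < STORE_SIZE; i++) cs->store[i] = 0;
    for (size_t i = 0; i < CACHE_LINES; i++) cs->valid[i] = false;
    cs->hits = cs->misses = 0;
}

/* Read through the cache: a hit avoids touching the slow component. */
int cs_read(CachedStore *cs, size_t addr) {
    assert(addr < STORE_SIZE);
    size_t line = addr % CACHE_LINES;
    if (cs->valid[line] && cs->cached_addr[line] == addr) {
        cs->hits++;
        return cs->cached_value[line];
    }
    cs->misses++;                       /* miss: fetch and remember the value */
    cs->cached_value[line] = cs->store[addr];
    cs->cached_addr[line] = addr;
    cs->valid[line] = true;
    return cs->cached_value[line];
}

/* Write-through: update the component, and the cached copy if one exists,
   so that the two never disagree. */
void cs_write(CachedStore *cs, size_t addr, int value) {
    assert(addr < STORE_SIZE);
    size_t line = addr % CACHE_LINES;
    cs->store[addr] = value;
    if (cs->valid[line] && cs->cached_addr[line] == addr)
        cs->cached_value[line] = value;
}
```

If `cs_write` skipped the cached copy, a later `cs_read` of the same address would return a stale value, which is the consistency problem the answer describes. With several processors each holding its own cache, every copy would need the same treatment.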

2.5 What is the purpose of the command interpreter? Why is it usually separate from the kernel? Would it be possible for the user to develop a new command interpreter using the system-call interface provided by the operating system?

Answer: It reads commands from the user or from a file of commands and executes them, usually by turning them into one or more system calls. It is usually not part of the kernel since the command interpreter is subject to changes. A user should be able to develop a new command interpreter using the system-call interface provided by the operating system. The command interpreter allows a user to create and manage processes and also determine ways by which they communicate (such as through pipes and files). As all of this functionality could be accessed by a user-level program using the system calls, it should be possible for the user to develop a new command-line interpreter.

2.9 In what ways is the modular kernel approach similar to the layered approach? In what ways does it differ from the layered approach?

Answer: The modular kernel approach requires subsystems to interact with each other through carefully constructed interfaces that are typically narrow (in terms of the functionality that is exposed to external modules). The layered kernel approach is similar in that respect. However, the layered kernel imposes a strict ordering of subsystems such that subsystems at the lower layers are not allowed to invoke operations corresponding to the upper-layer subsystems. There are no such restrictions in the modular-kernel approach, wherein modules are free to invoke each other without any constraints.

3.1 Describe the differences among short-term, medium-term, and long-term scheduling.

Answer: Short-term (CPU scheduler): selects from jobs in memory those jobs that are ready to execute and allocates the CPU to them. Medium-term: used especially with time-sharing systems as an intermediate scheduling level. A swapping scheme is implemented to remove partially run programs from memory and reinstate them later to continue where they left off. Long-term (job scheduler): determines which jobs are brought into memory for processing. The primary difference is in the frequency of their execution. The short-term scheduler must select a new process quite often. The long-term scheduler is used much less often, since it handles placing jobs in the system and may wait a while for a job to finish before it admits another one.

3.4 What are the benefits and detriments of each of the following? Consider both the systems and the programmers' levels.
a. Symmetric and asymmetric communication
b. Automatic and explicit buffering
c. Send by copy and send by reference
d. Fixed-sized and variable-sized messages

Answer:

a. Symmetric and asymmetric communication - A benefit of symmetric communication is that it allows a rendezvous between the sender and receiver. A disadvantage of a blocking send is that a rendezvous may not be required, and the message could instead be delivered asynchronously and received at a point of no interest to the sender. As a result, message-passing systems often provide both forms of synchronization.

b. Automatic and explicit buffering - Automatic buffering provides a queue with indefinite length, thus ensuring the sender will never have to block while waiting to copy a message. There are no specifications on how automatic buffering will be provided; one scheme may reserve sufficiently large memory where much of the memory is wasted. Explicit buffering specifies how large the buffer is. In this situation, the sender may be blocked while waiting for available space in the queue. However, it is less likely that memory will be wasted with explicit buffering.

c. Send by copy and send by reference - Send by copy does not allow the receiver to alter the state of the parameter; send by reference does allow it. A benefit of send by reference is that it allows the programmer to write a distributed version of a centralized application. Java's RMI provides both; however, passing a parameter by reference requires declaring the parameter as a remote object as well.

d. Fixed-sized and variable-sized messages - The implications of this are mostly related to buffering issues; with fixed-size messages, a buffer with a specific size can hold a known number of messages. The number of variable-sized messages that can be held by such a buffer is unknown. Consider how Windows 2000 handles this situation: with fixed-sized messages (anything < 256 bytes), the messages are copied from the address space of the sender to the address space of the receiving process. Larger messages (i.e., variable-sized messages) use shared memory to pass the message.
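Parts b through d of 3.4 can be made concrete with a small C sketch of explicit buffering: a mailbox with a fixed number of fixed-size slots, where messages are sent by copy. The type `Mailbox`, the functions `mailbox_init`, `mailbox_try_send`, and `mailbox_try_receive`, and the constants are hypothetical names chosen for illustration. A `false` return stands in for the point at which a blocking sender or receiver would have to wait.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MSG_SIZE 256   /* fixed message size in bytes (illustrative) */
#define QUEUE_CAP 4    /* explicit buffer: room for exactly four messages */

typedef struct {
    char slots[QUEUE_CAP][MSG_SIZE];
    size_t head;    /* index of the oldest message */
    size_t count;   /* number of messages currently queued */
} Mailbox;

void mailbox_init(Mailbox *mb) {
    mb->head = 0;
    mb->count = 0;
}

/* Send by copy. Returns false if the message is too large or the buffer is
   full; a blocking sender would wait here instead of returning. */
bool mailbox_try_send(Mailbox *mb, const char *msg, size_t len) {
    assert(msg != NULL);
    if (len > MSG_SIZE || mb->count == QUEUE_CAP)
        return false;
    size_t tail = (mb->head + mb->count) % QUEUE_CAP;
    memset(mb->slots[tail], 0, MSG_SIZE);   /* pad short messages with zeros */
    memcpy(mb->slots[tail], msg, len);      /* the receiver gets its own copy */
    mb->count++;
    return true;
}

/* Returns false if no message is available. */
bool mailbox_try_receive(Mailbox *mb, char out[MSG_SIZE]) {
    if (mb->count == 0)
        return false;
    memcpy(out, mb->slots[mb->head], MSG_SIZE);
    mb->head = (mb->head + 1) % QUEUE_CAP;
    mb->count--;
    return true;
}
```

Because every slot is `MSG_SIZE` bytes, the capacity is known in advance to be exactly `QUEUE_CAP` messages, which is the point made in part d. Because the payload is copied in, changes the sender makes to its own buffer after sending do not reach the receiver, which is the send-by-copy behavior from part c.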

4.4 Can a multithreaded solution using multiple user-level threads achieve better performance on a multiprocessor system than on a single-processor system?

Answer: A multithreaded system comprising multiple user-level threads cannot make use of the different processors in a multiprocessor system simultaneously. The operating system sees only a single process and will not schedule the different threads of the process on separate processors. Consequently, there is no performance benefit associated with executing multiple user-level threads on a multiprocessor system.

4.6 Consider a multiprocessor system and a multithreaded program written using the many-to-many threading model. Let the number of user-level threads in the program be more than the number of processors in the system. Discuss the performance implications of the following scenarios.
a. The number of kernel threads allocated to the program is less than the number of processors.
b. The number of kernel threads allocated to the program is equal to the number of processors.
c. The number of kernel threads allocated to the program is greater than the number of processors but less than the number of user-level threads.

Answer: When the number of kernel threads is less than the number of processors, some of the processors would remain idle, since the scheduler maps only kernel threads to processors and not user-level threads to processors. When the number of kernel threads is exactly equal to the number of processors, it is possible that all of the processors might be utilized simultaneously. However, when a kernel thread blocks inside the kernel (due to a page fault or while invoking system calls), the corresponding processor would remain idle. When there are more kernel threads than processors, a blocked kernel thread could be swapped out in favor of another kernel thread that is ready to execute, thereby increasing the utilization of the multiprocessor system.

4.8 The Fibonacci sequence

```c
/*
 * @author Gagne, Galvin, Silberschatz
 * Operating System Concepts - Seventh Edition
 * Copyright John Wiley & Sons - 2005.
 */
#include <stdio.h>
#include <stdlib.h>   /* atoi */
#include <windows.h>

/** we will only allow up to 256 Fibonacci numbers */
#define MAX_SIZE 256

/* shared with the worker thread; with a 32-bit int, entries past fibs[46] overflow */
int fibs[MAX_SIZE];

/* the thread runs in this separate function;
   despite its name, it fills fibs[] with the first `upper` Fibonacci numbers */
DWORD WINAPI Summation(PVOID Param)
{
    DWORD upper = *(DWORD *)Param;
    DWORD i;

    if (upper == 0)
        return 0;

    fibs[0] = 0;
    if (upper >= 2)
        fibs[1] = 1;
    for (i = 2; i < upper; i++)
        fibs[i] = fibs[i-1] + fibs[i-2];

    return 0;
}

int main(int argc, char *argv[])
{
    DWORD ThreadId;
    HANDLE ThreadHandle;
    DWORD Param;
    DWORD i;
    int requested;

    /* do some basic error checking */
    if (argc != 2) {
        fprintf(stderr, "An integer parameter is required\n");
        return -1;
    }

    requested = atoi(argv[1]);
    if (requested < 0 || requested > MAX_SIZE) {
        fprintf(stderr, "an integer >= 0 and <= %d is required\n", MAX_SIZE);
        return -1;
    }
    Param = (DWORD)requested;

    /* create the thread */
    ThreadHandle = CreateThread(NULL, 0, Summation, &Param, 0, &ThreadId);

    if (ThreadHandle != NULL) {
        /* wait for the worker to finish before reading fibs[] */
        WaitForSingleObject(ThreadHandle, INFINITE);
        CloseHandle(ThreadHandle);

        /** now output the Fibonacci numbers */
        for (i = 0; i < Param; i++)
            printf("%d\n", fibs[i]);
    }

    return 0;
}
```

5.1 Discuss how the following pairs of scheduling criteria conflict in certain settings.
a. CPU utilization and response time
b. Average turnaround time and maximum waiting time
c. I/O device utilization and CPU utilization

Answer:

CPU utilization and response time: CPU utilization is increased if the overheads associated with context switching are minimized. The context-switching overheads could be lowered by performing context switches infrequently. This could, however, result in increasing the response time for processes.

Average turnaround time and maximum waiting time: Average turnaround time is minimized by executing the shortest tasks first. Such a scheduling policy could, however, starve long-running tasks and thereby increase their waiting time.

I/O device utilization and CPU utilization: CPU utilization is maximized by running long-running CPU-bound tasks without performing context switches. I/O device utilization is maximized by scheduling I/O-bound jobs as soon as they become ready to run, thereby incurring the overheads of context switches.

5.2 Consider the following set of processes, with the length of the CPU-burst time given in milliseconds:

| Process | Burst Time | Priority |
|---------|------------|----------|
| P1      | 10         | 3        |
| P2      | 1          | 1        |
| P3      | 2          | 3        |
| P4      | 1          | 4        |
| P5      | 5          | 2        |

The processes are assumed to have arrived in the order P1, P2, P3, P4, P5, all at time 0.
a. Draw four Gantt charts illustrating the execution of these processes using FCFS, SJF, a nonpreemptive priority (a smaller priority number implies a higher priority), and RR (quantum = 1) scheduling.
b. What is the turnaround time of each process for each of the scheduling algorithms in part a?
c. What is the waiting time of each process for each of the scheduling algorithms in part a?
d. Which of the schedules in part a results in the minimal average waiting time (over all processes)?

Answer:

a. The four Gantt charts are not reproduced here.

b. Turnaround time

| Process | FCFS | RR | SJF | Priority |
|---------|------|----|-----|----------|
| P1      | 10   | 19 | 19  | 16       |
| P2      | 11   | 2  | 1   | 1        |
| P3      | 13   | 7  | 4   | 18       |
| P4      | 14   | 4  | 2   | 19       |
| P5      | 19   | 14 | 9   | 6        |

c. Waiting time (turnaround time minus burst time)

| Process | FCFS | RR | SJF | Priority |
|---------|------|----|-----|----------|
| P1      | 0    | 9  | 9   | 6        |
| P2      | 10   | 1  | 0   | 0        |
| P3      | 11   | 5  | 2   | 16       |
| P4      | 13   | 3  | 1   | 18       |
| P5      | 14   | 9  | 4   | 1        |

d. Shortest Job First
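The turnaround and waiting times in the answer to 5.2 can be checked mechanically. The following C sketch simulates the four policies under the exercise's assumption that every process arrives at time 0 and that ties are broken by arrival order. The function names (`fcfs`, `sjf`, `priority_np`, `round_robin`, `average_wait`) and the `MAX_PROCS` limit are hypothetical choices for illustration, not part of the textbook solution.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PROCS 16   /* illustrative upper bound on the number of processes */

/* Run the processes back to back from time 0 in the given order;
   turnaround[p] receives the completion time of process p. */
static void run_in_order(const int *burst, const size_t *order, size_t n,
                         int *turnaround) {
    int clock = 0;
    for (size_t k = 0; k < n; k++) {
        clock += burst[order[k]];
        turnaround[order[k]] = clock;
    }
}

/* Stable insertion sort of process indices by ascending key;
   equal keys keep their arrival order. */
static void order_by_key(const int *key, size_t n, size_t *order) {
    for (size_t i = 0; i < n; i++) {
        size_t j = i;
        while (j > 0 && key[order[j - 1]] > key[i]) {
            order[j] = order[j - 1];
            j--;
        }
        order[j] = i;
    }
}

void fcfs(const int *burst, size_t n, int *turnaround) {
    size_t order[MAX_PROCS];
    assert(n <= MAX_PROCS);
    for (size_t i = 0; i < n; i++) order[i] = i;
    run_in_order(burst, order, n, turnaround);
}

void sjf(const int *burst, size_t n, int *turnaround) {
    size_t order[MAX_PROCS];
    assert(n <= MAX_PROCS);
    order_by_key(burst, n, order);        /* shortest burst first */
    run_in_order(burst, order, n, turnaround);
}

/* Nonpreemptive priority: a smaller number means a higher priority. */
void priority_np(const int *burst, const int *priority, size_t n,
                 int *turnaround) {
    size_t order[MAX_PROCS];
    assert(n <= MAX_PROCS);
    order_by_key(priority, n, order);
    run_in_order(burst, order, n, turnaround);
}

/* Round robin. With all arrivals at time 0, cycling over the unfinished
   processes in index order matches the ready-queue order. */
void round_robin(const int *burst, size_t n, int quantum, int *turnaround) {
    int remaining[MAX_PROCS];
    size_t left = n;
    int clock = 0;
    assert(n <= MAX_PROCS && quantum > 0);
    for (size_t i = 0; i < n; i++) {
        assert(burst[i] > 0);
        remaining[i] = burst[i];
    }
    while (left > 0) {
        for (size_t i = 0; i < n; i++) {
            if (remaining[i] == 0) continue;
            int slice = remaining[i] < quantum ? remaining[i] : quantum;
            clock += slice;
            remaining[i] -= slice;
            if (remaining[i] == 0) {
                turnaround[i] = clock;
                left--;
            }
        }
    }
}

/* Waiting time is turnaround time minus burst time. */
double average_wait(const int *burst, const int *turnaround, size_t n) {
    int total = 0;
    for (size_t i = 0; i < n; i++) total += turnaround[i] - burst[i];
    return (double)total / (double)n;
}
```

Called with bursts `{10, 1, 2, 1, 5}` and priorities `{3, 1, 3, 4, 2}`, these functions reproduce the turnaround columns given in part b, and `average_wait` gives 9.6 ms for FCFS, 5.4 ms for RR, 3.2 ms for SJF, and 8.2 ms for priority scheduling, consistent with the answer to part d.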

Das könnte Ihnen auch gefallen

- Operating Systems Interview Questions You'll Most Likely Be AskedVon EverandOperating Systems Interview Questions You'll Most Likely Be AskedNoch keine Bewertungen

- SAS Programming Guidelines Interview Questions You'll Most Likely Be AskedVon EverandSAS Programming Guidelines Interview Questions You'll Most Likely Be AskedNoch keine Bewertungen

- Chapter 1 ExercisesDokument7 SeitenChapter 1 ExercisesWajid AliNoch keine Bewertungen

- Assignment 1Dokument4 SeitenAssignment 1WmshadyNoch keine Bewertungen

- Chapter 1 ExerciesesDokument5 SeitenChapter 1 ExerciesesSyedRahimAliShahNoch keine Bewertungen

- Silberschatz 7 Chapter 1 Questions and Answers: Jas::/conversion/tmp/scratch/389117979Dokument6 SeitenSilberschatz 7 Chapter 1 Questions and Answers: Jas::/conversion/tmp/scratch/389117979AbdelrahmanAbdelhamedNoch keine Bewertungen

- 1.1 A Network Computer Relies On A Centralized Computer For Most of Its SerDokument6 Seiten1.1 A Network Computer Relies On A Centralized Computer For Most of Its SerChinOrzNoch keine Bewertungen

- Tutorial 4 Solution - OSDokument6 SeitenTutorial 4 Solution - OSUnknown UnknownNoch keine Bewertungen

- Operating Systems ConceptsDokument4 SeitenOperating Systems ConceptsdrcsuNoch keine Bewertungen

- OsDokument9 SeitenOsAqeel H. YounusNoch keine Bewertungen

- HE163750 - Nguyen Quoc Huy - Part1Dokument15 SeitenHE163750 - Nguyen Quoc Huy - Part1Nguyen Quoc Huy (K16HL)Noch keine Bewertungen

- AssignmentDokument4 SeitenAssignmentMuqaddas PervezNoch keine Bewertungen

- COMP3231 80questionsDokument16 SeitenCOMP3231 80questionsGenc GashiNoch keine Bewertungen

- Excercise Solution 3-5Dokument5 SeitenExcercise Solution 3-5f21bscs008Noch keine Bewertungen

- OS Concepts Chapter 1 Solutions To Exercises Part 2Dokument2 SeitenOS Concepts Chapter 1 Solutions To Exercises Part 2Alfred Fred100% (2)

- UntitledDokument7 SeitenUntitledHazem MohamedNoch keine Bewertungen

- CH 4 (Threads)Dokument8 SeitenCH 4 (Threads)Muhammad ImranNoch keine Bewertungen

- OS - CHAP1 - Question AnswersDokument6 SeitenOS - CHAP1 - Question AnswersNoshin FaiyroozNoch keine Bewertungen

- Unit 1 - Exercise - SolutionDokument8 SeitenUnit 1 - Exercise - SolutionAnita Sofia KeyserNoch keine Bewertungen

- So2 - Tifi - Tusty Nadia Maghfira-Firda Priatmayanti-Fatthul Iman-Fedro Jordie PDFDokument9 SeitenSo2 - Tifi - Tusty Nadia Maghfira-Firda Priatmayanti-Fatthul Iman-Fedro Jordie PDFTusty Nadia MaghfiraNoch keine Bewertungen

- Questions To Practice For Cse323 Quiz 1Dokument4 SeitenQuestions To Practice For Cse323 Quiz 1errormaruf4Noch keine Bewertungen

- Assignment Os: Saqib Javed 11912Dokument8 SeitenAssignment Os: Saqib Javed 11912Saqib JavedNoch keine Bewertungen

- Distributed System Models and Issues - PGDokument14 SeitenDistributed System Models and Issues - PGMahamud elmogeNoch keine Bewertungen

- Practice Exercises: AnswerDokument4 SeitenPractice Exercises: AnswerMARYAMNoch keine Bewertungen

- RC2801B48 Home Work 1 CSE316 YogeshDokument5 SeitenRC2801B48 Home Work 1 CSE316 YogeshYogesh GandhiNoch keine Bewertungen

- Performance Analysis of N-Computing Device Under Various Load ConditionsDokument7 SeitenPerformance Analysis of N-Computing Device Under Various Load ConditionsInternational Organization of Scientific Research (IOSR)Noch keine Bewertungen

- CP4253 Map Unit IiDokument23 SeitenCP4253 Map Unit IiNivi VNoch keine Bewertungen

- Practice Exercises: AnswerDokument4 SeitenPractice Exercises: AnswerFiremandeheavenly InamenNoch keine Bewertungen

- OS Plate 1Dokument5 SeitenOS Plate 1Tara SearsNoch keine Bewertungen

- OsqnansDokument13 SeitenOsqnansHinamika JainNoch keine Bewertungen

- Nust College of Eme Nismah SaleemDokument4 SeitenNust College of Eme Nismah SaleemNismah MuneebNoch keine Bewertungen

- Advanced Operating System Department: IITA Student ID: BIA110004 Name: 哈瓦尼Dokument5 SeitenAdvanced Operating System Department: IITA Student ID: BIA110004 Name: 哈瓦尼Hawani KhairulNoch keine Bewertungen

- Operating System FundamentalsDokument2 SeitenOperating System FundamentalsBegench SuhanovNoch keine Bewertungen

- Unit-3 2 Multiprocessor SystemsDokument12 SeitenUnit-3 2 Multiprocessor SystemsmirdanishmajeedNoch keine Bewertungen

- اسيمنتاتDokument48 Seitenاسيمنتاتابراهيم اشرف ابراهيم متولي زايدNoch keine Bewertungen

- Chapter 3-Processes: Mulugeta ADokument34 SeitenChapter 3-Processes: Mulugeta Amerhatsidik melkeNoch keine Bewertungen

- Assignment - 4: Name: Kaiwalya A. Kulkarni Reg - No.: 2015BCS019 Roll No.: A-18Dokument6 SeitenAssignment - 4: Name: Kaiwalya A. Kulkarni Reg - No.: 2015BCS019 Roll No.: A-18somesh shatalwarNoch keine Bewertungen

- Assignment1-Rennie Ramlochan (31.10.13)Dokument7 SeitenAssignment1-Rennie Ramlochan (31.10.13)Rennie RamlochanNoch keine Bewertungen

- Chapter 3-ProcessesDokument43 SeitenChapter 3-ProcessesAidaNoch keine Bewertungen

- Section ADokument3 SeitenSection AKaro100% (1)

- Solution Manual For Operating System Concepts Essentials 2nd Edition by SilberschatzDokument6 SeitenSolution Manual For Operating System Concepts Essentials 2nd Edition by Silberschatztina tinaNoch keine Bewertungen

- Aca Unit5Dokument13 SeitenAca Unit5karunakarNoch keine Bewertungen

- Lec2 ParallelProgrammingPlatformsDokument26 SeitenLec2 ParallelProgrammingPlatformsSAINoch keine Bewertungen

- Chapter 3Dokument25 SeitenChapter 3yomiftamiru21Noch keine Bewertungen

- PCP ChapterDokument3 SeitenPCP ChapterAyush PateriaNoch keine Bewertungen

- Java ConcurrencyDokument24 SeitenJava ConcurrencyKarthik BaskaranNoch keine Bewertungen

- Answers Chapter 1 To 4Dokument4 SeitenAnswers Chapter 1 To 4parameshwar6Noch keine Bewertungen

- CS326 Parallel and Distributed Computing: SPRING 2021 National University of Computer and Emerging SciencesDokument33 SeitenCS326 Parallel and Distributed Computing: SPRING 2021 National University of Computer and Emerging SciencesNehaNoch keine Bewertungen

- Sardar Patel College of Engineering, Bakrol: Distributed Operating SystemDokument16 SeitenSardar Patel College of Engineering, Bakrol: Distributed Operating SystemNirmalNoch keine Bewertungen

- Using Replicated Data To Reduce Backup Cost in Distributed DatabasesDokument7 SeitenUsing Replicated Data To Reduce Backup Cost in Distributed DatabasesVijay SundarNoch keine Bewertungen

- Chapter 3-ProcessesDokument40 SeitenChapter 3-Processesezra berhanuNoch keine Bewertungen

- Distributed Systems: Practice ExercisesDokument4 SeitenDistributed Systems: Practice Exercisesijigar007Noch keine Bewertungen

- Z-Distributed Computing)Dokument16 SeitenZ-Distributed Computing)Surangma ParasharNoch keine Bewertungen

- Os Assignment 2Dokument6 SeitenOs Assignment 2humairanaz100% (1)

- Dos Mid PDFDokument16 SeitenDos Mid PDFNirmalNoch keine Bewertungen

- Data Replication TechniquesDokument3 SeitenData Replication Techniquesگل گلابNoch keine Bewertungen

- OS Assignments No.2 PDFDokument4 SeitenOS Assignments No.2 PDFsheraz7288Noch keine Bewertungen

- AmandaNoverdinaP TugasBAB4Dokument3 SeitenAmandaNoverdinaP TugasBAB4amanda noverdinaNoch keine Bewertungen

- It Is The ThreadDokument17 SeitenIt Is The Threadbhageshnarnawareg1Noch keine Bewertungen

- Multiprocessor Systems:: Advanced Operating SystemDokument60 SeitenMultiprocessor Systems:: Advanced Operating SystemshaiqNoch keine Bewertungen

- CPU FlowchartsDokument11 SeitenCPU FlowchartsVinoth KumarNoch keine Bewertungen

- Module 4 Answer KeyDokument4 SeitenModule 4 Answer KeyEleunamme Zx100% (2)

- Eye TrackingDokument2 SeitenEye TrackingEleunamme ZxNoch keine Bewertungen

- Opaque: Reproduction Scale Copying Original Replica MuseumDokument2 SeitenOpaque: Reproduction Scale Copying Original Replica MuseumEleunamme ZxNoch keine Bewertungen

- Dbms TutorialDokument91 SeitenDbms TutorialEleunamme ZxNoch keine Bewertungen

- Travel Itinerary: Lstm5TDokument3 SeitenTravel Itinerary: Lstm5TEleunamme ZxNoch keine Bewertungen

- Hist Ory of Inte Rnet Hist Ory of Inte Rnet: Wikipedia Was CreatedDokument1 SeiteHist Ory of Inte Rnet Hist Ory of Inte Rnet: Wikipedia Was CreatedEleunamme ZxNoch keine Bewertungen

- 104 INVENTORY FOR May 2015Dokument2 Seiten104 INVENTORY FOR May 2015Eleunamme ZxNoch keine Bewertungen

- Business PolicyDokument3 SeitenBusiness PolicyEleunamme ZxNoch keine Bewertungen

- Unit 3 Unit 4 Unit 1 Unit 2: Module 6: Permutations and CombinationsDokument1 SeiteUnit 3 Unit 4 Unit 1 Unit 2: Module 6: Permutations and CombinationsEleunamme ZxNoch keine Bewertungen

- Room 104 Inventory For April 2015Dokument2 SeitenRoom 104 Inventory For April 2015Eleunamme ZxNoch keine Bewertungen

- ReportDokument1 SeiteReportEleunamme ZxNoch keine Bewertungen

- AssessmentforCfE tcm4-565505Dokument9 SeitenAssessmentforCfE tcm4-565505Eleunamme ZxNoch keine Bewertungen

- EnnDokument1 SeiteEnnEleunamme ZxNoch keine Bewertungen

- CBLM CHS NC2 Terminating and Connect of Electrical Wiring and Electronic CircuitsDokument20 SeitenCBLM CHS NC2 Terminating and Connect of Electrical Wiring and Electronic CircuitsEleunamme Zx67% (9)

- A Journey Through Western Music and ArtsDokument39 SeitenA Journey Through Western Music and ArtsEleunamme Zx100% (4)

- A Journey Through Western Music and ArtsDokument35 SeitenA Journey Through Western Music and ArtsEleunamme ZxNoch keine Bewertungen

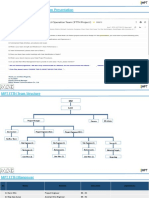

- CBT Process FlowDokument3 SeitenCBT Process FlowEleunamme ZxNoch keine Bewertungen

- Resume 1Dokument9 SeitenResume 1Eleunamme ZxNoch keine Bewertungen

- Heart Attack SignDokument1 SeiteHeart Attack SignEleunamme ZxNoch keine Bewertungen

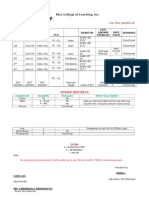

- MLG College of Learning, Inc: Honorees' Day and Christmas PartyDokument2 SeitenMLG College of Learning, Inc: Honorees' Day and Christmas PartyEleunamme ZxNoch keine Bewertungen

- Schedule 2013Dokument8 SeitenSchedule 2013Eleunamme ZxNoch keine Bewertungen

- Producing Better Results Through Better OrganizationDokument8 SeitenProducing Better Results Through Better OrganizationEleunamme ZxNoch keine Bewertungen

- Writing Software Requirements SpecificationsDokument14 SeitenWriting Software Requirements SpecificationsPriyank KeniNoch keine Bewertungen

- Weather Bulletin Number Two Tropical Cyclone Warning: Tropical Depression "Agaton" Issued at 5:00 PM, 17 January 2014Dokument2 SeitenWeather Bulletin Number Two Tropical Cyclone Warning: Tropical Depression "Agaton" Issued at 5:00 PM, 17 January 2014Eleunamme ZxNoch keine Bewertungen

- CH 01Dokument47 SeitenCH 01Eleunamme ZxNoch keine Bewertungen

- CH 01Dokument47 SeitenCH 01Eleunamme ZxNoch keine Bewertungen

- Networks Communicating SharingDokument33 SeitenNetworks Communicating SharingEleunamme ZxNoch keine Bewertungen

- Dot Net FolderDokument1 SeiteDot Net FolderEleunamme ZxNoch keine Bewertungen

- ScheduleDokument1 SeiteScheduleEleunamme ZxNoch keine Bewertungen

- Smar Tdate® 5: Our TechnologyDokument2 SeitenSmar Tdate® 5: Our TechnologyMatthew BaranNoch keine Bewertungen

- eCPPTv3 Labs-3 PDFDokument11 SeiteneCPPTv3 Labs-3 PDFRachid Moyse PolaniaNoch keine Bewertungen

- Operating Systems Session 12 Address TranslationDokument16 SeitenOperating Systems Session 12 Address TranslationmineNoch keine Bewertungen

- The Zero TrustDokument4 SeitenThe Zero TrustTim SongzNoch keine Bewertungen

- Task2-Identifying ComponentsDokument3 SeitenTask2-Identifying ComponentsStephanie DilloNoch keine Bewertungen

- Revised - Internship Summary ReportDokument10 SeitenRevised - Internship Summary ReportAKSHAT ANTALNoch keine Bewertungen

- Networking Essentials 6Th Edition Beasley Full ChapterDokument67 SeitenNetworking Essentials 6Th Edition Beasley Full Chapterheather.bekis553100% (7)

- User AgentDokument31 SeitenUser AgentgeorgeNoch keine Bewertungen

- Splunk-6 0 3-DistSearchDokument49 SeitenSplunk-6 0 3-DistSearchLexs TangNoch keine Bewertungen

- PowerMax+and+VMAX+Family+Configuration+and+Business+Continuity+Administration Lab+Guide Typo CorrectedDokument176 SeitenPowerMax+and+VMAX+Family+Configuration+and+Business+Continuity+Administration Lab+Guide Typo CorrectedSatyaNoch keine Bewertungen

- Week 01 Lab 01 RectangleDokument4 SeitenWeek 01 Lab 01 Rectangleulfany.guimelNoch keine Bewertungen

- Cosivis eDokument208 SeitenCosivis eZadiel MirelesNoch keine Bewertungen

- Unit 5Dokument85 SeitenUnit 5Vamsi KrishnaNoch keine Bewertungen

- Ball Thorsten Writing An Compiler in Go PDFDokument355 SeitenBall Thorsten Writing An Compiler in Go PDFKevin Sam100% (1)

- Getting Started With Scratch 3.0Dokument14 SeitenGetting Started With Scratch 3.0Francisco Javier Méndez Vázquez100% (1)

- Ise10 OverviewDokument4 SeitenIse10 OverviewsiamuntulouisNoch keine Bewertungen

- ASSIGNMENT9876Dokument11 SeitenASSIGNMENT9876Mian Talha NaveedNoch keine Bewertungen

- CA Switch - Ls-S2326tp-Ei-Ac-DatasheetDokument4 SeitenCA Switch - Ls-S2326tp-Ei-Ac-Datasheetanji201Noch keine Bewertungen

- Cyber Security Sales Play Cheat SheetDokument4 SeitenCyber Security Sales Play Cheat SheetAkshaya Bijuraj Nair100% (1)

- STM422 RS232 To RS485-RS422 Interface Converter User GuideDokument2 SeitenSTM422 RS232 To RS485-RS422 Interface Converter User GuideMojixNoch keine Bewertungen

- Lecture 06 - Algorithm Analysis PDFDokument6 SeitenLecture 06 - Algorithm Analysis PDFsonamNoch keine Bewertungen

- Laptop Clearance SpecialsDokument6 SeitenLaptop Clearance SpecialsMakhobane SibanyoniNoch keine Bewertungen

- HW 1Dokument4 SeitenHW 1Mayowa AdelekeNoch keine Bewertungen

- Q4 Activity 3Dokument1 SeiteQ4 Activity 3XEINoch keine Bewertungen

- Content: MPT FTTH Project Operation Team PresentationDokument44 SeitenContent: MPT FTTH Project Operation Team PresentationAung Thein OoNoch keine Bewertungen

- Anatomy of A CompilerDokument12 SeitenAnatomy of A CompilerJulian Cesar PerezNoch keine Bewertungen

- Te 18Dokument75 SeitenTe 18Ritika BakshiNoch keine Bewertungen

- CS8662 - Mobile Application Development Lab ManualDokument66 SeitenCS8662 - Mobile Application Development Lab ManualBharath kumar67% (3)

- Hpe Access Points: Your LogoDokument1 SeiteHpe Access Points: Your LogoNhan PhanNoch keine Bewertungen

- Lecture 1: Introduction To Java: Dr. Sapna Malik Assistant Professor, CSE Department, MSITDokument19 SeitenLecture 1: Introduction To Java: Dr. Sapna Malik Assistant Professor, CSE Department, MSITnavecNoch keine Bewertungen