JOURNAL OF COMPUTING, VOLUME 4, ISSUE 5, MAY 2012, ISSN 2151-9617, https://sites.google.com/site/journalofcomputing, WWW.JOURNALOFCOMPUTING.ORG

Designing Classifier Ensemble through Multiple-Pheromone Ant Colony based Feature Selection

Shunmugapriya Palanisamy and Kanmani S

Abstract: A Classifier Ensemble (CE) generalizes better than a single classifier. Feature Selection extracts the relevant features from a dataset by removing irrelevant and noisy data; this improves the accuracy of each classifier and, in turn, the overall ensemble accuracy. This paper proposes a novel hybrid algorithm, CE-MPACO, which combines a Multiple Pheromone based Ant Colony Optimization algorithm (MPACO) with a classifier ensemble (CE). The classifier ensemble, consisting of a Support Vector Machine (SVM), a Decision Tree and Naive Bayes, performs the classification, and MPACO is used as a feature selector to select the most important features and to increase the overall classification accuracy of the ensemble. Four UCI (University of California, Irvine) benchmark datasets have been used to evaluate the proposed method. Three ensembles, ACO-CE, ACO-Bagging and ACO-Boosting, have been constructed from the finally selected feature subsets. The experimental results show that these ensembles achieve up to 5% higher classification accuracy than the constituent classifiers and the standard ensembles Bagging and Boosting.

Index Terms: Classifier Ensemble, Multiple Pheromone, Ant Colony Optimization Algorithm, Feature Selection, SVM, Decision Tree, Naive Bayes.

1 INTRODUCTION

Classifier Ensemble (CE) is an important and rapidly growing research area of Machine Learning [3]. In pattern classification, when individual classifiers do not perform well for a particular application, their results are combined to form a Classifier Ensemble (CE), also called a Multiple Classifier System (MCS). That is, a classifier ensemble is a learning paradigm in which several classifiers are combined to generate a single classification result with improved accuracy [1]. The constituent classifiers of a CE may be of the same type (homogeneous CE) or of different types (heterogeneous CE). Each classifier in the CE is trained over the entire feature space. Feature selection extracts the relevant features from a dataset without affecting the original representation of the dataset [24]. The literature shows that feature selection has been widely used in the construction of classifier ensembles [6, 7, 20, 21, 22, 23, 25, 26]. Optimization techniques such as the Genetic Algorithm (GA) and the Ant Colony Optimization algorithm (ACO) have been successfully employed to implement feature selection in numerous applications [2, 8, 9, 10, 11, 12, 20, 22, 23, 26]. In order to enhance classification accuracy, different algorithms for pattern classification [1], different techniques for feature selection and a number of classifier ensemble methodologies have been proposed and implemented so far. The main limitation of these methods is that none of them gives consistent performance over all datasets [7]. In this regard, we propose a novel hybrid algorithm, CE-MPACO: the combination of a Multiple Pheromone based Ant Colony Optimization algorithm (MPACO), which performs feature selection, and a classifier ensemble (CE), which performs the classification. The experimental results are evaluated using four UCI benchmark datasets. While MPACO builds combinations of different feature subsets, the classifier ensemble validates these combinations using Mean Accuracy as the fitness measure.

This paper is organized in eight sections. Section 2 describes feature selection and its types. Section 3 gives a brief description of Ant Colony Systems. Section 4 outlines the proposed method, CE-MPACO. Section 5 discusses the Multiple Pheromone Ant Colony Optimization based feature selection, and Section 6 explains the classifier ensemble used. The computational experiments and results are discussed in Section 7, and Section 8 concludes the paper.

2 FEATURE SELECTION (FS)

Feature selection is viewed as an important preprocessing step for many data mining tasks, especially pattern classification. When the dimensionality of the feature space is very high, FS is used to extract the relevant and useful data. FS reduces the dimensionality of the feature space by removing noisy, redundant and irrelevant data, and thereby makes the feature set more suitable for classification without affecting the accuracy of prediction [4]. It has been reported in the literature that classification on the feature subsets produced by FS achieves higher prediction accuracy than classification carried out without FS [20]. A number of algorithms have been proposed to implement FS. Apart from the ordinary FS algorithms, there are two types of feature selection methods related to pattern classification: the Filter Approach and the Wrapper Approach.

2.1 Filter Approach

This method is independent of any learning algorithm and hence does not use a classifier. It depends on the general characteristics of the training data and uses measures such as distance, information, dependency and consistency to evaluate the selected feature subsets [20], [24].
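As an illustration of an information-based filter measure, the following minimal sketch scores discrete features by information gain and ranks them without training any classifier. Information gain is only one possible choice of measure and is an assumption here, as are the function names and the toy data; none of them come from the paper.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy reduction obtained by splitting the labels on one discrete feature."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Toy data (hypothetical, not taken from the UCI datasets used in the paper).
labels = ["sick", "sick", "healthy", "healthy"]
features = {
    "informative": ["a", "a", "b", "b"],  # separates the two classes perfectly
    "noisy": ["x", "y", "x", "y"],        # carries no class information
}

# A filter ranks the features by score and keeps the top-ranked ones.
ranking = sorted(features,
                 key=lambda name: information_gain(features[name], labels),
                 reverse=True)
```

Because the score depends only on the data, the same ranking is obtained whichever classifier is used afterwards; this is the property that distinguishes a filter from a wrapper.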

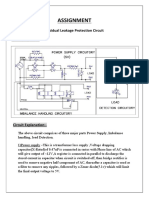

2.2 Wrapper Approach

This method involves the use of a classifier. The evaluation functions used for selecting the feature subsets are based on the classifier. The feature subset that results from a wrapper approach therefore depends on the classification method used, and two different classifiers can lead to two different feature subsets. Compared to the filter approach, the feature subsets obtained through a wrapper are generally more effective, but the process is time consuming [20], [24].

2.3 Evolutionary Approach

Independent of the filter and wrapper approaches, evolutionary algorithms are also used to search the entire feature space for the best subset of features [2, 6, 7, 8, 9, 10, 11, 12, 20, 22, 23, 26].

3 ANT COLONY SYSTEM

Ant Colony Optimization (ACO) was introduced in the early 1990s by M. Dorigo and his colleagues [5]. ACO is a nature-inspired metaheuristic for the solution of hard combinatorial optimization problems. Its main source of inspiration is the foraging behavior of real ants. Ants are social insects living in colonies, and they can find the shortest path between a food source and their nest. Initially, ants walk along random paths in search of food. While walking between the food source and the nest, ants deposit a chemical substance called pheromone on the ground, so that a pheromone trail is formed. This pheromone evaporates with time. Ants on a shorter path complete the trip sooner and reinforce it more often, so shorter paths hold more pheromone than longer paths. Ants can smell the pheromone and, when choosing their paths, tend to choose the paths with the stronger pheromone concentration. In this way, as more ants select a particular path, more and more pheromone is deposited on it. Eventually this path (the shortest path) is selected by all the ants.

4 THE PROPOSED MPACO-CLASSIFIER ENSEMBLE ALGORITHM (CE-MPACO)

The ACO-Ensemble algorithm proposed in this study is the combination of the Multiple Pheromone Ant Colony Optimization algorithm and a classifier ensemble consisting of Decision Tree, Naive Bayes and Support Vector Machine (SVM) classifiers. A multi-objective evolutionary algorithm [6] and a multi-objective genetic algorithm [7] have been employed in classifier ensemble construction. ACO has been used as a feature selector for the optimal selection of features [2], [8], [9], [10], [11], [12]. The main steps of the proposed method are as follows:

1) A set of binary bit strings (of length equal to the number of features) is created and assigned to the ants in the MP-ACO.
2) Feature subsets are selected by the ants based on the pheromone values of the features and the heuristic information. Pheromone values are updated according to the features selected by the ants.
3) The feature subsets selected by the ants are passed to the ensemble of classifiers, which evaluates their classification accuracy.
4) After the feature subsets selected by all the ants have been evaluated by the classifier ensemble, the best ant and the best solution of the iteration are selected based on the ensemble accuracy. The pheromone values of the features selected by the best ant are given more weight by a further update.
5) Steps 2 to 4 are repeated for a predefined number of iterations, or until there is no further increase in the ensemble accuracy, and the optimal subset of features is output.

The three-classifier ensemble (ACO-CE), ACO-Bagging and ACO-Boosting are then constructed on the selected feature subset.

5 THE MP-ACO BASED FEATURE SELECTION

The Ant Colony Optimization algorithm used for feature selection (FS) in this work is based on the ACO algorithm proposed by Nadia Abd-Alsabour et al. [2]. A binary bit string whose length is equal to the number of features in the given dataset is assigned to each ant to represent its selected features. When the nth bit is 1, feature number n of the dataset is selected; otherwise this feature is not selected. At the start of the algorithm, the bits are randomly initialized to zeros and ones. The pheromone values ($\tau_i$) are associated with the features. The initial pheromone value ($\tau_0$) and the two pheromone update coefficients are initialized; all these values are selected in the range [0, 1]. At the initial stage, instead of assigning a single pheromone value to all the $\tau_i$, multiple pheromone values are assigned to the $\tau_i$ of the different features. These values are assigned on the basis of a preprocessing step that estimates the relevance of each feature to the classification accuracy. Hence the method is named Multiple Pheromone ACO. At each construction step, each ant selects a feature depending on a probability estimate that is calculated according to equation (1):


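$$p_i = \tau_i \, \Delta\tau_i \tag{1}$$

where $\tau_i$ is the pheromone value associated with feature $i$ and $\Delta\tau_i$ is the proportion of ants that have selected this feature. After each construction step, on the basis of the estimated probability $p_i$, the local pheromone update is performed by all ants according to the following equation:

$$\tau_i = (1 - \varphi)\,\tau_i + \varphi\,\tau_0 \tag{2}$$

where $\varphi$ is the local pheromone decay coefficient and $\tau_0$ is the initial pheromone value.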
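The selection and update rules can be put together in a small, self-contained sketch of the whole feature-selection loop. It is an illustration under stated assumptions rather than the authors' implementation: the paper does not say how $p_i$ is turned into a yes/no decision, so the sketch rescales $p_i$ by its maximum and draws each bit at random; the guard against empty subsets, the `patience` stopping rule, the names `tau0`, `phi` and `rho` and their default values are likewise assumptions, and the three toy scoring functions merely stand in for SVM, Decision Tree and Naive Bayes. The mean accuracy and the global update used here correspond to equations (3) and (4), which are given later in this section.

```python
import random


def mean_accuracy(subset, classifiers):
    """Average accuracy of the classifiers on one feature subset (cf. equation (3))."""
    return sum(clf(subset) for clf in classifiers) / len(classifiers)


def mpaco_select(initial_tau, classifiers, tau0=0.5, phi=0.2, rho=0.3,
                 max_iterations=10, patience=3, seed=0):
    """Return (best feature indices, best mean accuracy) found by the sketch."""
    rng = random.Random(seed)
    n = len(initial_tau)
    tau = list(initial_tau)  # multiple pheromones: one starting value per feature
    # One ant per feature, each holding a random binary bit string.
    ants = [[rng.randint(0, 1) for _ in range(n)] for _ in range(n)]
    best_subset, best_score, stale = [], -1.0, 0

    for _ in range(max_iterations):
        # Proportion of ants that currently select each feature.
        share = [sum(ant[i] for ant in ants) / len(ants) for i in range(n)]
        new_ants = []
        for _ant in ants:
            p = [tau[i] * share[i] for i in range(n)]          # equation (1)
            p_max = max(p) or 1.0                              # assumption: rescale by the maximum
            bits = [1 if rng.random() < p[i] / p_max else 0 for i in range(n)]
            if not any(bits):                                  # assumption: no empty subsets
                bits[rng.randrange(n)] = 1
            for i in range(n):
                if bits[i]:
                    tau[i] = (1 - phi) * tau[i] + phi * tau0   # equation (2), local update
            new_ants.append(bits)
        ants = new_ants

        # Evaluate every ant's subset and pick the best ant of the iteration.
        scored = [(mean_accuracy(frozenset(i for i in range(n) if ant[i]),
                                 classifiers), ant) for ant in ants]
        score, best_ant = max(scored, key=lambda pair: pair[0])
        l_best = sum(best_ant)
        for i in range(n):
            if best_ant[i]:
                tau[i] = (1 - rho) * tau[i] + rho / l_best     # equation (4), global update

        if score > best_score:
            best_subset = [i for i in range(n) if best_ant[i]]
            best_score, stale = score, 0
        else:
            stale += 1
            if stale >= patience:                              # no further improvement
                break
    return best_subset, best_score


RELEVANT = frozenset({0, 2})  # hypothetical useful features of a toy problem


def toy_classifier(penalty):
    """Stand-in for a real classifier: rewards relevant features, penalizes extras."""
    def accuracy(subset):
        hits = len(subset & RELEVANT)
        extras = len(subset - RELEVANT)
        return max(0.0, 0.5 + 0.25 * hits - penalty * extras)
    return accuracy


classifiers = [toy_classifier(p) for p in (0.02, 0.05, 0.08)]
subset, score = mpaco_select([0.9, 0.3, 0.8, 0.2, 0.4], classifiers)
```

In a real run, each toy function would be replaced by training and testing one of the three classifiers on the columns named in `subset`, and `initial_tau` would come from the relevance-based preprocessing step described above.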

is formed by the majority vote of the three classifiers, Decision Tree, Nave Bayes and SVM. Bagging [1], [13] and [14] and Boosting are most famous classifier ensemble methods which have been used in numerous Pattern Classification domains [1], [13] and [15]. ACO-Bagging is constructed by the combination of C4.5 Bagging with ACO selected feature subset. ACO-Boosting is constructed by Boosting the C4.5 decision tree along with ACO selected feature subset.

(2)

After every iteration, the feature reducts obtained from each ant is passed to the classifier ensemble and the mean accuracy of the classifier ensemble on the feature subset given by each ant is recorded [7]. The ant having the highest accuracy is termed as the best ant and the solution (feature subset) given by the best ant is termed as the best solution (best features) of the iteration. The mean accuracy of the classifier ensemble is calculated using the following equation:

m

7 EXPERIMENTS AND DISCUSSION

The datasets and the results of the experiments conducted are discussed in this section.

c

MeanAccuracy =

j =1

(3)

In order to strengthen those features that form the best solution, the global pheromone update is done to the features selected by the best ant according to the following equation:

i = (1 ). i + ( .

Where, Lbest best ant and

1 ) Lbest

(4)

is the number of features selected by the is the evaporation rate.

The algorithm comes to an end, when there seems to be no improvement in the Mean Accuracy of the classifier ensemble. The feature subset, leading to highest accuracy during the iterations is taken as the output from the algorithm.

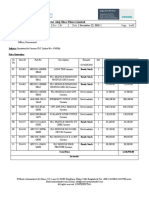

7.1 Datasets The proposed method has been evaluated by four benchmark datasets taken from UCI repository [16]. The details of the datasets are given in Table 1. The first dataset is Heart-C. Heart-C dataset has 303 instances and 14 features including class labels. It is a 2 class dataset. The second dataset is Dermatology dataset. Dermatology dataset has 366 instances and 34 features describing 6 types of skin disorders. Psoriasis class has 112 instances, Seboreic dermatitis class has 61 samples, Lichen planus class has 72 samples, Pityriasis rosea class has 49 samples, Cronic dermatitis class has 52 samples and the last class Pityriasis rubra pilaris has 20 samples. Hepatitis dataset has 155 instances and 20 features describing the survival or dying possibility of people after the attack of Hepatitis disease There are 32 samples belonging to Die class and 123 samples belonging to Live class. Lung cancer dataset has 32 instances and 57 features. Three types of disorders, after the attack of Lung Cancer have been described. The first class type has 9 samples, 13 samples in the second class type and 10 samples in the third class type.

TABLE 1 DATASETS DESCRIPTION

6 THE CLASSIFIER ENSEMBLE

The classifiers Decision Tree, Nave Bayes and SVM are put together to form the classifier ensemble in the proposed method. In the proposed study, during the process of feature selection by ants, the feature subsets selected by each of the ants is input to the classifier ensemble. The three classifiers consider the candidate feature subsets one at a time, trains itself with the combination of features and classifies the test set. When the classifiers pass the accuracies of the feature subsets, the ACO algorithm calculates the mean accuracy of the classifier ensemble using eqn. (3). This mean accuracy is used as the evaluation criterion for selecting the best feature subset combination. In the proposed method, the classifier ensembles ACOCE, ACO-Bagging and ACO-Boosting are constructed using the finally selected feature subset. ACO-CE

Datasets Heart Dermatology Hepatitis Lung Cancer

No.of Features 13 34 19 56

No.of Samples 303 366 155 32

No. of Classes 2 6 2 2

These 4 datasets have been chosen because they have been used in numerous experimental works related to classifier ensemble and feature selection. The datasets are chosen such that the number of features is in a varied range, so that the effect of feature selection by ACO is easily visible.
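The per-class counts quoted in the dataset description can be cross-checked against the instance totals with a few lines of arithmetic. The sketch below is only a consistency check on the figures given in the text; the dictionary layout and function name are illustrative, and Heart-C is left out because the text gives no per-class breakdown for it.

```python
# Per-class sample counts as quoted in the dataset description.
class_counts = {
    "Dermatology": {
        "Psoriasis": 112,
        "Seborrheic dermatitis": 61,
        "Lichen planus": 72,
        "Pityriasis rosea": 49,
        "Chronic dermatitis": 52,
        "Pityriasis rubra pilaris": 20,
    },
    "Hepatitis": {"Die": 32, "Live": 123},
    "Lung Cancer": {"Class 1": 9, "Class 2": 13, "Class 3": 10},
}

# Instance totals as quoted in the text and in Table 1.
instances = {"Dermatology": 366, "Hepatitis": 155, "Lung Cancer": 32}


def class_shares(counts):
    """Percentage of the samples that falls into each class."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


for dataset, counts in class_counts.items():
    # The class counts should add up to the stated number of instances.
    assert sum(counts.values()) == instances[dataset]
    print(dataset, len(counts), "classes", class_shares(counts))
```

The check passes for all three datasets, and it confirms that the Lung Cancer counts describe three classes.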

7.2 Implementation of CE-MPACO

Classification of the datasets is implemented using the WEKA 3.6.3 software from Waikato University [17], and feature selection using ACO has been implemented in the NetBeans IDE. The class predictions for the datasets by the three classifiers Decision Tree, SVM and Naive Bayes are shown in Table 4. Decision Tree is implemented using the J48 algorithm, SVM using the LIBSVM package and Naive Bayes using the Naive Bayes classification algorithm. The values of the ACO parameters are initialized as shown in Table 2. These values were selected after many trials because they gave the best performance of the algorithm. At the end of each iteration, the selected feature subsets are passed on to the classifier ensemble. The MPACO feature selector uses the mean accuracy given in equation (3) to evaluate the feature subsets selected by the ants. After 10 iterations, the optimal feature subset for each dataset is selected based on the maximum classification accuracy gain of the classifier ensemble. The number of features selected by CE-MPACO for each of the four datasets is shown in Table 3.

TABLE 2 EXPERIMENTAL VALUES OF ACO PARAMETERS

| ACO Parameters | Experimental Values |
|---|---|
| (five parameter symbols not legible in the source) | 0.2, 0.3, 0.6, 0.3, 0.8 |
| (symbol not legible in the source) | Multiple values varying with datasets |
| Ants | No. of features in the dataset |
| Iterations | 10 |

TABLE 5 CLASSIFICATION ACCURACY OF THE ENSEMBLES BY 10-FOLD CROSS VALIDATION

| Datasets | C4.5 Bagging | C4.5 Boosting | ACO-CE | ACO-Bagging | ACO-Boosting |
|---|---|---|---|---|---|
| Heart | 83.18 | 83.05 | 87.21 | 86.75 | 87.29 |
| Dermatology | 96.9 | 96.5 | 98.40 | 98.58 | 97.81 |
| Hepatitis | 72.82 | 75.13 | 78.09 | 77.65 | 76.25 |
| Lung Cancer | 86.98 | 85.31 | 91.79 | 91.5 | 91.01 |

Three classifier ensembles, ACO-CE, ACO-Bagging and ACO-Boosting, are then constructed using the optimal feature subset selected by the proposed CE-MPACO method. The classification accuracies achieved by these three ensembles are given in Table 5. 10-fold cross validation [1, 2, 7] has been used to evaluate the accuracy of the constructed ensembles. When the CE-MPACO method is applied to the datasets and the ensembles are constructed using the features output by CE-MPACO, the recognition rates for all four datasets improve, as shown in Fig. 1. From the data presented in Tables 4 and 5 and from Fig. 1, it can be seen that:

i. Feature selection has improved the accuracy of classification and also serves to speed up the classification process.
ii. For all four datasets, CE-MPACO (ACO-CE, ACO-Bagging and ACO-Boosting) has given higher recognition rates than the constituent classifiers.
iii. In the graphical representation, the curve rises on the ACO methods for all the datasets, which shows the improvement obtained by the proposed model.
iv. For the Heart dataset, the accuracy has increased by up to 4%.
v. There is a 2% increase in the recognition rate for the Dermatology dataset and a 3% increase for the Hepatitis dataset.
vi. In the case of Lung Cancer, only 8 of the 56 features are selected and the prediction rate is increased by 5%. This demonstrates the effectiveness of the proposed method.

TABLE 3 FEATURES SELECTED BY ACO

| Datasets | Total Features | Features Selected by CE-MPACO |
|---|---|---|
| Heart | 13 | 8 |
| Dermatology | 34 | 28 |
| Hepatitis | 19 | 13 |
| Lung Cancer | 56 | 8 |
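The size of the reduction reported in Table 3 is easier to compare across datasets as a percentage of features removed. The short sketch below computes it from the table values; the function and variable names are illustrative.

```python
# (total features, features selected by CE-MPACO), copied from Table 3.
table3 = {
    "Heart": (13, 8),
    "Dermatology": (34, 28),
    "Hepatitis": (19, 13),
    "Lung Cancer": (56, 8),
}


def reduction_percent(total, selected):
    """Share of the original features that feature selection removed, in percent."""
    return 100.0 * (total - selected) / total


reductions = {name: reduction_percent(total, selected)
              for name, (total, selected) in table3.items()}

for name, value in reductions.items():
    print(f"{name}: {value:.1f}% of the features removed")
```

The reduction is largest for Lung Cancer, where 48 of the 56 features are dropped, and smallest for Dermatology, where 6 of 34 are dropped.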

TABLE 4 CLASS PREDICTIONS FOR FOUR UCI DATASETS BY CLASSIFICATION ALGORITHMS

| Datasets | SVM | Naive Bayes | Decision Tree |
|---|---|---|---|
| Heart C | 81.23 | 81.87 | 77.56 |
| Dermatology | 94.81 | 92.26 | 95.35 |
| Hepatitis | 71.3 | 70.96 | 58.06 |
| Lung Cancer | 82.38 | 84.12 | 84.03 |
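The accuracy gains quoted in items iv to vi of the discussion can be recomputed from Tables 4 and 5. The sketch below takes, for each dataset, the best ACO-based ensemble and subtracts the best constituent classifier and the better of the two standard ensembles. Reading the quoted percentages as differences in percentage points against the standard ensembles is an interpretation, not something the paper states.

```python
# Table 4: SVM, Naive Bayes, Decision Tree.
constituents = {
    "Heart": [81.23, 81.87, 77.56],
    "Dermatology": [94.81, 92.26, 95.35],
    "Hepatitis": [71.3, 70.96, 58.06],
    "Lung Cancer": [82.38, 84.12, 84.03],
}
# Table 5: C4.5 Bagging, C4.5 Boosting.
standard = {
    "Heart": [83.18, 83.05],
    "Dermatology": [96.9, 96.5],
    "Hepatitis": [72.82, 75.13],
    "Lung Cancer": [86.98, 85.31],
}
# Table 5: ACO-CE, ACO-Bagging, ACO-Boosting.
aco = {
    "Heart": [87.21, 86.75, 87.29],
    "Dermatology": [98.40, 98.58, 97.81],
    "Hepatitis": [78.09, 77.65, 76.25],
    "Lung Cancer": [91.79, 91.5, 91.01],
}


def gains(dataset):
    """Gain of the best ACO ensemble over (best constituent, best standard ensemble)."""
    best_aco = max(aco[dataset])
    return (round(best_aco - max(constituents[dataset]), 2),
            round(best_aco - max(standard[dataset]), 2))


for dataset in aco:
    over_constituent, over_standard = gains(dataset)
    print(f"{dataset}: +{over_constituent} vs constituents, +{over_standard} vs Bagging/Boosting")
```

Against the better of C4.5 Bagging and C4.5 Boosting, the differences come out at roughly 4.1, 1.7, 3.0 and 4.8 points for Heart, Dermatology, Hepatitis and Lung Cancer, in line with the 4%, 2%, 3% and 5% quoted in the discussion. The differences against the best constituent classifier are larger in every case.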

8 CONCLUSION

In this paper, a new classifier ensemble method, CE-MPACO, has been proposed and implemented. CE-MPACO combines the Multiple Pheromone Ant Colony Optimization (MPACO) algorithm with a Classifier Ensemble (CE) and has been used to optimize the feature selection process. The method has resulted in an optimal selection of feature subsets, and its effectiveness can be seen from the results obtained. The ensembles ACO-CE, ACO-Bagging and ACO-Boosting, developed using the selected feature subset, have given classification accuracies up to 5% higher than the constituent classifiers and the ensembles Bagging and Boosting.

FIG. 1. GRAPH SHOWING THE COMPARISON OF PREDICTIONS FOR THE FOUR UCI DATASETS BY THE CONSTITUENT CLASSIFIERS, TRADITIONAL ENSEMBLES AND ENSEMBLES CONSTRUCTED USING CE-MPACO

REFERENCES

[1] L. I. Kuncheva, Combining Pattern Classifiers: Methods and Algorithms, Wiley Interscience, 2005.
[2] Nadia Abd-Alsabour and Marcus Randall, Feature Selection for Classification Using an Ant Colony System, Proc. Sixth IEEE International Conference on e-Science Workshops, pp. 86-91, 2010.
[3] R. E. Schapire, The strength of weak learnability, Machine Learning, 5, pp. 197-227, 1990.
[4] E. F. Ahmed, W. J. Yang and M. Y. Abdullah, Novel method of the combination of forecasts based on rough sets, J. Comput. Sci., 5: 440-444, 2009.
[5] M. Dorigo, G. Di Caro and L. M. Gambardella, Ant algorithms for discrete optimization, Artificial Life, 5: 137-172, 1999.
[6] A. Chandra and X. Yao, Ensemble learning using multiobjective evolutionary algorithm, Journal of Mathematical Modeling and Algorithms, vol. 5, no. 4, pp. 417-445, 2006.
[7] Zili Zhang and Pengyi Yang, An Ensemble of Classifiers with Genetic Algorithm Based Feature Selection, IEEE Intelligent Informatics Bulletin, vol. 9, no. 1, pp. 18-24, 2008.
[8] Rahul Karthik Sivagaminathan and Sreeram Ramakrishnan, A hybrid approach for feature subset selection using neural networks and ant colony optimization, Expert Systems with Applications, 33, pp. 49-60, 2007.
[9] Mehdi Hosseinzadeh Aghdam, Nasser Ghasem-Aghaee and Mohammad Ehsan Basiri, Text feature selection using ant colony optimization, Expert Systems with Applications, 36, pp. 6843-6853, 2009.
[10] Ahmed Al-Ani, Feature Subset Selection Using Ant Colony Optimization, International Journal of Computational Intelligence, 2(1), pp. 53-58, 2005.
[11] Ahmed Al-Ani, Ant Colony Optimization for Feature Subset Selection, Proc. World Academy of Science, Engineering and Technology 4, pp. 35-38, 2005.
[12] M. Sadeghzadeh and M. Teshnehlab, Correlation-based Feature Selection using Ant Colony Optimization, Proc. World Academy of Science, Engineering and Technology 64, pp. 497-502, 2010.
[13] R. Polikar, Ensemble based Systems in decision making, IEEE Circuits and Systems Mag., vol. 6, no. 3, pp. 21-45, 2006.
[14] L. Breiman, Bagging predictors, Machine Learning, vol. 24, no. 2, pp. 123-140, 1996.
[15] Y. Freund and R. E. Schapire, Experiments with a new boosting algorithm, Proc. Thirteenth International Conference on Machine Learning, pp. 148-156, 1996.
[16] A. Frank and A. Asuncion, UCI Machine Learning Repository. [Online]. Available: http://archive.ics.uci.edu/ml. Irvine, CA: University of California, School of Information and Computer Science, 2010.
[17] WEKA: A Java Machine Learning Package. [Online]. Available: http://www.cs.waikato.ac.nz/ml/weka/.
[18] Chih-Chung Lo and Guang-Feng Deng, Using Ant Colony Optimization Algorithm to Solve Airline Crew Scheduling Problems, Proc. IEEE Third International Conference on Natural Computation (ICNC), pp. 197-201, 2007.
[19] Inès Alaya, Ant Colony Optimization for Multi-objective Optimization Problems, Proc. IEEE International Conference on Tools with Artificial Intelligence, pp. 450-457, 2007.
[20] Laura E. A. Santana, Ligia Silva, Anne M. P. Canuto, Fernando Pintro and Karliane O. Vale, A Comparative Analysis of Genetic Algorithm and Ant Colony Optimization to Select Attributes for an Heterogeneous Ensemble of Classifiers, IEEE, pp. 465-472, 2010.
[21] M. Bacauskiene, A. Verikas, A. Gelzinis and D. Valincius, A feature selection technique for generation of classification committees and its application to categorization of laryngeal images, Pattern Recognition 42(5), pp. 645-654, 2009.
[22] Y. He, D. Chen and W. Zhao, Ensemble classifier system based on ant colony algorithm and its application in chemical pattern classification, Chemometrics and Intelligent Laboratory Systems, pp. 39-49, 2006.
[23] K. Robbins, W. Zhang and J. Bertrand, The ant colony algorithm for feature selection in high-dimension gene expression data for disease classification, Mathematical Medicine and Biology, pp. 413-426, Oxford University Press, 2007.
[24] ShiXin Yu, Feature Selection and Classifier Ensembles: A Study on Hyperspectral Remote Sensing Data, Ph.D. dissertation (Physics), University of Antwerp, 2003.
[25] M. Lee et al., A two-step approach for feature selection and classifier ensemble construction in computer-aided diagnosis, IEEE International Symposium on Computer-Based Medical Systems, pp. 548-553, Albuquerque: IEEE Computer Society, 2008.
[26] H. Kanan and K. Faez, An improved feature selection method based on ant colony optimization (ACO) evaluated on face recognition system, Applied Mathematics and Computation, pp. 716-725, 2008.

P. Shunmugapriya received her M.E. degree in 2006 from the Department of Computer Science and Engineering, FEAT, Annamalai University, Chidambaram. She has been working as a Senior Lecturer for the past 7 years in the Department of Computer Science and Engineering, Sri Manakula Vinayagar Engineering College, affiliated to Pondicherry University, Puducherry. She is currently working towards her Ph.D. degree on Optimal Design of Classifier Ensembles using Swarm Intelligent, Meta-Heuristic Search Algorithms in the Department of Computer Science and Engineering, Pondicherry Engineering College, Puducherry. Her areas of interest are Artificial Intelligence, Ontology based Software Engineering, Classifier Ensembles and Swarm Intelligence.

Dr. S. Kanmani received her B.E. and M.E. in Computer Science and Engineering from Bharathiyar University and her Ph.D. from Anna University, Chennai. She has been on the faculty of the Department of Computer Science and Engineering, Pondicherry Engineering College, since 1992. Presently she is a Professor in the Department of Information Technology, Pondicherry Engineering College, Puducherry. Her research interests are Software Engineering, Software Testing, Object Oriented Systems and Data Mining. She is a member of the Computer Society of India, ISTE and the Institute of Engineers, India. She has published about 50 papers in various international conferences and journals.

Das könnte Ihnen auch gefallen

- Hybrid Network Coding Peer-to-Peer Content DistributionDokument10 SeitenHybrid Network Coding Peer-to-Peer Content DistributionJournal of ComputingNoch keine Bewertungen

- Business Process: The Model and The RealityDokument4 SeitenBusiness Process: The Model and The RealityJournal of ComputingNoch keine Bewertungen

- Analytical Study of AHP and Fuzzy AHP TechniquesDokument4 SeitenAnalytical Study of AHP and Fuzzy AHP TechniquesJournal of ComputingNoch keine Bewertungen

- A Compact Priority Based Architecture Designed and Simulated For Data Sharing Based On Reconfigurable ComputingDokument4 SeitenA Compact Priority Based Architecture Designed and Simulated For Data Sharing Based On Reconfigurable ComputingJournal of ComputingNoch keine Bewertungen

- Complex Event Processing - A SurveyDokument7 SeitenComplex Event Processing - A SurveyJournal of ComputingNoch keine Bewertungen

- Applying A Natural Intelligence Pattern in Cognitive RobotsDokument6 SeitenApplying A Natural Intelligence Pattern in Cognitive RobotsJournal of Computing100% (1)

- Mobile Search Engine Optimization (Mobile SEO) : Optimizing Websites For Mobile DevicesDokument5 SeitenMobile Search Engine Optimization (Mobile SEO) : Optimizing Websites For Mobile DevicesJournal of ComputingNoch keine Bewertungen

- Using Case-Based Decision Support Systems For Accounting Choices (CBDSS) : An Experimental InvestigationDokument8 SeitenUsing Case-Based Decision Support Systems For Accounting Choices (CBDSS) : An Experimental InvestigationJournal of ComputingNoch keine Bewertungen

- Image Retrival of Domain Name System Space Adjustment TechniqueDokument5 SeitenImage Retrival of Domain Name System Space Adjustment TechniqueJournal of ComputingNoch keine Bewertungen

- Decision Support Model For Selection of Location Urban Green Public Open SpaceDokument6 SeitenDecision Support Model For Selection of Location Urban Green Public Open SpaceJournal of Computing100% (1)

- Product Lifecycle Management Advantages and ApproachDokument4 SeitenProduct Lifecycle Management Advantages and ApproachJournal of ComputingNoch keine Bewertungen

- Exploring Leadership Role in GSD: Potential Contribution To An Overall Knowledge Management StrategyDokument7 SeitenExploring Leadership Role in GSD: Potential Contribution To An Overall Knowledge Management StrategyJournal of ComputingNoch keine Bewertungen

- Divide and Conquer For Convex HullDokument8 SeitenDivide and Conquer For Convex HullJournal of Computing100% (1)

- Detection and Estimation of Multiple Far-Field Primary Users Using Sensor Array in Cognitive Radio NetworksDokument8 SeitenDetection and Estimation of Multiple Far-Field Primary Users Using Sensor Array in Cognitive Radio NetworksJournal of ComputingNoch keine Bewertungen

- Combining Shape Moments Features For Improving The Retrieval PerformanceDokument8 SeitenCombining Shape Moments Features For Improving The Retrieval PerformanceJournal of ComputingNoch keine Bewertungen

- QoS Aware Web Services Recommendations FrameworkDokument7 SeitenQoS Aware Web Services Recommendations FrameworkJournal of ComputingNoch keine Bewertungen

- Real-Time Markerless Square-ROI Recognition Based On Contour-Corner For Breast AugmentationDokument6 SeitenReal-Time Markerless Square-ROI Recognition Based On Contour-Corner For Breast AugmentationJournal of ComputingNoch keine Bewertungen

- Hiding Image in Image by Five Modulus Method For Image SteganographyDokument5 SeitenHiding Image in Image by Five Modulus Method For Image SteganographyJournal of Computing100% (1)

- Impact of Facebook Usage On The Academic Grades: A Case StudyDokument5 SeitenImpact of Facebook Usage On The Academic Grades: A Case StudyJournal of Computing100% (1)
