Beruflich Dokumente

Kultur Dokumente

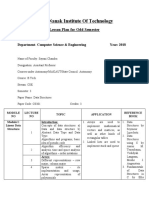

Generation of Computer Languages

Uploaded by Sayani Chandra

[Figure: Computer Language, divided into First Generation, Second Generation, Third Generation, Fourth Generation and Fifth Generation]

1. First Generation Language: The first-generation languages, or 1GLs, are low-level machine languages. Originally, no translator was used to compile or assemble a first-generation program; the instructions were entered through the front-panel switches of the computer system. The main benefit of programming in a first-generation language is that the code a user writes can run very fast and efficiently, since it is executed directly by the CPU. However, machine language is much more difficult to learn than higher-generation programming languages, and it is far more difficult to edit when errors occur. In addition, if instructions need to be added into memory at some location, then all the instructions after the insertion point must be moved down to make room for the new ones, which is very tedious to do on a front panel with switches. Furthermore, portability is significantly reduced: to transfer code to a different computer, it must be completely rewritten, since the machine language of one computer can differ significantly from that of another. Architectural differences also hinder portability; for example, the number of registers on one CPU architecture can differ from that of another.
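To make the insertion problem concrete, here is a minimal Python sketch that models a computer's program memory as a fixed-size list of machine words, with `None` marking free cells. The function name `insert_instructions` and the word values in the example are hypothetical. The sketch shows that every word after the insertion point has to be copied to a new position, one at a time. On an early machine, an operator did this by hand on the front-panel switches.

```python
from typing import List, Optional


def insert_instructions(memory: List[Optional[int]], address: int, new_words: List[int]) -> int:
    """Insert new_words at address by moving every later word down.

    memory is a fixed-size list of machine words; trailing None entries are
    free cells. Returns how many existing words had to be moved.
    """
    # Find how many cells are in use (everything before the trailing free cells).
    used = len(memory)
    while used > 0 and memory[used - 1] is None:
        used -= 1
    if not 0 <= address <= used:
        raise ValueError("insertion address is outside the program")
    if used + len(new_words) > len(memory):
        raise ValueError("not enough free memory for the insertion")
    # Move words down starting from the end, so nothing is overwritten
    # before it has been copied.
    moved = 0
    for i in range(used - 1, address - 1, -1):
        memory[i + len(new_words)] = memory[i]
        moved += 1
    # Write the new instructions into the freed cells.
    for offset, word in enumerate(new_words):
        memory[address + offset] = word
    return moved


memory = [1, 2, 3, 4, None, None]           # hypothetical machine words
print(insert_instructions(memory, 1, [9]))  # 3
print(memory)                               # [1, 9, 2, 3, 4, None]
```

Inserting a single word near the start of the program moves almost all of it. That is why editing machine code in place was so laborious.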

2. Second Generation Language: The second-generation languages, or 2GLs, are also low-level languages, generally assembly languages. The term was coined to distinguish them from higher-level third-generation programming languages (3GLs) such as COBOL, and from the earlier machine-code languages. Second-generation programming languages have the following properties:

The code can be read and written by a programmer. To run on a computer, it must be converted into a machine-readable form, a process called assembly.

The language is specific to a particular processor family and environment.

Second-generation languages are sometimes used in kernels and device drivers (though C is generally employed for this in modern kernels), but they more often find use in extremely intensive processing such as games, video editing, and graphics manipulation and rendering. One method for creating such code is to let a compiler generate a machine-optimized assembly-language version of a particular function. This code is then hand-tuned, combining the brute-force insight of the machine's optimizing algorithm with the intuition of the human optimizer.
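The assembly step can be illustrated with a toy assembler. The Python sketch below assumes a hypothetical processor whose 8-bit instructions store a 4-bit opcode in the high bits and a 4-bit operand in the low bits. The mnemonics `LOAD`, `ADD`, `STORE` and `HALT`, their opcode values, and the `;` comment syntax are all made up for illustration. Each mnemonic maps one-to-one onto a machine instruction. Because the `OPCODES` table describes one particular (imaginary) processor, another processor family would need a different table, and usually different source code.

```python
# Hypothetical instruction format: high 4 bits = opcode, low 4 bits = operand.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source: str) -> bytes:
    """Translate assembly mnemonics into machine code for the toy processor."""
    machine_code = bytearray()
    for line_no, raw in enumerate(source.splitlines(), start=1):
        line = raw.split(";", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue  # skip blank and comment-only lines
        parts = line.split()
        mnemonic = parts[0].upper()
        if mnemonic not in OPCODES:
            raise ValueError(f"line {line_no}: unknown mnemonic {parts[0]!r}")
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 0xF:
            raise ValueError(f"line {line_no}: operand {operand} does not fit in 4 bits")
        # Pack opcode and operand into a single byte.
        machine_code.append((OPCODES[mnemonic] << 4) | operand)
    return bytes(machine_code)


program = """
LOAD 5    ; hypothetical: load value 5
ADD 3
STORE 9
HALT
"""
print(assemble(program).hex())  # 152339f0
```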

3. Third Generation Language: The third-generation languages, or 3GLs, are high-level languages such as C. Most popular general-purpose languages today, such as C++, C#, Java, BASIC and Delphi, are also third-generation languages. Most 3GLs support structured programming.
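Structured programming means building a program from three control structures: sequence, selection (if/else) and iteration (loops). Each structure has a single entry and a single exit, so the program avoids arbitrary jumps. As a small illustration, Python is generally grouped with the high-level third-generation languages. In Python, Euclid's greatest-common-divisor algorithm can be written using only these three structures. The function name `gcd` is our own choice.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor by Euclid's algorithm, using only structured control flow."""
    # Sequence: statements execute one after another.
    a, b = abs(a), abs(b)
    # Selection: put the larger value first (not strictly required by the algorithm).
    if b > a:
        a, b = b, a
    # Iteration: a loop with one entry point and one exit condition.
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(48, 18))  # 6
```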

4. Fourth Generation Language: The fourth-generation languages, or 4GLs, consist of statements similar to statements in a human language. They are commonly used in database programming and scripting. A fourth-generation programming language (1970s to 1990) is a programming language or programming environment designed with a specific purpose in mind, such as the development of commercial business software. In the history of computer science, the 4GL followed the 3GL in an upward trend toward higher abstraction and statement power, and was in turn followed by efforts to define and use a 5GL.

The natural-language, block-structured mode of the third-generation programming languages improved the process of software development. However, 3GL development methods can be slow and error-prone. It became clear that some applications could be developed more rapidly by adding a higher-level programming language and methodology that would generate the equivalent of very complicated 3GL instructions with fewer errors. In some senses, software engineering arose to handle 3GL development, whereas 4GL and 5GL projects are more oriented toward problem solving and systems engineering.

All 4GLs are designed to reduce programming effort, development time, and the cost of software development. They are not always successful in this task, and sometimes result in inelegant and unmaintainable code. However, given the right problem, an appropriate 4GL can be spectacularly successful, as was seen with MARK-IV and MAPPER. In Santa Fe's real-time tracking of its freight cars, for example, the productivity gain over COBOL was estimated at a factor of eight. The usability improvements offered by some 4GLs (and their environments) also allowed better exploration of heuristic solutions than 3GLs did.

Capers Jones gave a quantitative definition of 4GL as part of his work on function point analysis. Jones defines the various generations of programming languages in terms of developer productivity, measured in function points per staff-month (FP/SM). A 4GL is defined as a language that supports 12 to 20 FP/SM, which correlates with about 16 to 27 lines of code per function point implemented in a 4GL. Fourth-generation languages have often been compared to domain-specific programming languages (DSLs), and some researchers state that 4GLs are a subset of DSLs. Assembly language still persists even in advanced development environments (MS Studio). One would therefore expect a system to be a mixture of all the generations, with only very limited use of the first.
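Database work is where the contrast with 3GLs is easiest to see. The Python sketch below computes sales totals per region in two ways. The first uses a 3GL style, spelling out the loop and the accumulation step by step. The second hands a declarative SQL query to the SQLite engine in Python's standard `sqlite3` module; SQL is often cited as an example of a 4GL. The table name `sales`, its columns, and the sample rows are hypothetical.

```python
import sqlite3
from typing import Dict, List, Tuple

Row = Tuple[str, int]


def total_by_region_3gl(rows: List[Row]) -> Dict[str, int]:
    """3GL style: describe step by step how to loop and accumulate."""
    totals: Dict[str, int] = {}
    for region, amount in rows:
        totals[region] = totals.get(region, 0) + amount
    return dict(sorted(totals.items()))


def total_by_region_4gl(rows: List[Row]) -> Dict[str, int]:
    """4GL style: state the wanted result in SQL and let the engine work out how."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
        conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
        query = "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
        return dict(conn.execute(query).fetchall())
    finally:
        conn.close()


sales = [("North", 120), ("South", 80), ("North", 30)]  # hypothetical sample data
print(total_by_region_3gl(sales))  # {'North': 150, 'South': 80}
print(total_by_region_4gl(sales))  # {'North': 150, 'South': 80}
```

The SQL version states only what result is wanted: a sum grouped by region. How to scan and group the rows is left to the database engine, which is the kind of effort reduction 4GLs aim for.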

5. Fifth Generation Language: The fifth-generation languages, or 5GLs, are sometimes described as programming languages that contain visual tools to help develop a program, and Visual Basic is sometimes cited as an example in that sense. More commonly, a fifth-generation programming language is defined as one based on solving problems using constraints given to the program, rather than using an algorithm written by a programmer. Most constraint-based and logic programming languages, and some declarative languages, are fifth-generation languages. While fourth-generation programming languages are designed to build specific programs, fifth-generation languages are designed to make the computer solve a given problem without the programmer specifying how. The programmer therefore only needs to worry about which problems need to be solved and which conditions need to be met, not about how to implement a routine or algorithm to solve them. Fifth-generation languages are used mainly in artificial intelligence research. Prolog, OPS5 and Mercury are examples of fifth-generation languages. Languages of this kind were also built on top of Lisp, many originating on the Lisp machine, such as ICAD, and there are many frame languages, such as KL-ONE.

In the 1990s, fifth-generation languages were considered to be the wave of the future, and some predicted that they would replace all other languages for system development, with the exception of low-level languages. Most notably, from 1982 to 1993 Japan put much research and money into its Fifth Generation Computer Systems project, hoping to design a massive computer network of machines using these tools. However, as larger programs were built, the flaws of the approach became more apparent. It turns out that deriving an efficient algorithm from a set of constraints that defines a problem is itself a very difficult problem. This crucial step cannot yet be automated and still requires the insight of a human programmer.
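The idea of stating constraints and leaving the search to the computer can be imitated in a few lines of Python. This is only an imitation of what a real logic language such as Prolog provides. The hypothetical `solve` function below takes a domain of candidate values for each variable and a list of constraint predicates. It returns every assignment that satisfies all of the constraints. The example is a classic puzzle: 35 heads and 94 legs belong to some chickens and rabbits. The puzzle is stated purely as conditions, and no procedure for finding the answer is written.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence

Assignment = Dict[str, int]
Constraint = Callable[[Assignment], bool]


def solve(domains: Dict[str, Sequence[int]], constraints: List[Constraint]) -> List[Assignment]:
    """Return every assignment of values to variables that satisfies all constraints.

    This is a naive generate-and-test search: it enumerates the full Cartesian
    product of the domains and keeps the assignments that pass every check.
    """
    names = list(domains)
    solutions: List[Assignment] = []
    for values in product(*(domains[name] for name in names)):
        assignment = dict(zip(names, values))
        if all(constraint(assignment) for constraint in constraints):
            solutions.append(assignment)
    return solutions


# The problem is described only by conditions, not by a procedure.
farm_constraints: List[Constraint] = [
    lambda a: a["chickens"] + a["rabbits"] == 35,          # 35 heads
    lambda a: 2 * a["chickens"] + 4 * a["rabbits"] == 94,  # 94 legs
]
domains = {"chickens": range(36), "rabbits": range(36)}

print(solve(domains, farm_constraints))  # [{'chickens': 23, 'rabbits': 12}]
```

The generic search tries every combination of values, so its cost grows exponentially with the number of variables. This is the weakness described above: turning a set of constraints into an efficient algorithm still takes human insight.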

Today, fifth-generation languages are back as a possible level of computer language. A number of software vendors currently claim that their software meets the visual "programming" requirements of the 5GL concept.

Compiler vs Interpreter: A compiler and an interpreter basically serve the same purpose: they convert a program from one level of language to another. A compiler converts high-level instructions into machine language, while an interpreter converts high-level instructions into some intermediate form, after which each instruction is executed.

Compiler

A compiler is a computer program that converts high-level instructions into a form that the computer can understand. A computer understands only binary numbers, so a compiler fills the gap; otherwise a human would have to read and write programs as 0s and 1s. Early compilers were simple programs that converted symbols into bits. The programs themselves were also very simple, consisting of a series of steps translated by hand into data. Because this was a very time-consuming process, parts of it were programmed, or automated, and this formed the first compiler. More sophisticated compilers were then created using the simpler ones. With every new version, more rules are added and a more natural language environment is created for the human programmer. Compilers evolve in this way, which improves their ease of use. There are specific compilers for certain languages or tasks. Compilers can work in multiple passes (stages): the first pass can convert the high-level language into a language that is closer to machine language, and further passes can then convert it into the final form for execution.

Interpreter

Programs written in high-level languages can be executed in two different ways: by using a compiler or by using an interpreter. An interpreter converts high-level instructions into an intermediate form. The advantage of using an interpreter is that the high-level program does not go through a separate compilation stage, which can be time-consuming; the program is executed directly. For this reason, some programmers use interpreters while developing small sections of code, as this saves time.
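The multi-pass idea can be sketched with a toy compiler for integer arithmetic. This Python sketch is an illustration only and does not reflect how any particular production compiler is organized. Pass 1 (`to_postfix`, using the shunting-yard algorithm) rewrites the infix expression into postfix order, which is closer to how a stack machine works. Pass 2 (`to_instructions`) turns the postfix tokens into instructions for a hypothetical stack machine with `PUSH`, `ADD`, `SUB` and `MUL`. Finally, `run` plays the role of the hardware executing the final code. All function and instruction names are made up, and unary minus is not supported.

```python
from typing import List, Optional, Tuple, Union

Token = Union[int, str]
Instruction = Tuple[str, Optional[int]]
PRECEDENCE = {"+": 1, "-": 1, "*": 2}


def tokenize(text: str) -> List[Token]:
    """Split source text into integer literals, operators and parentheses."""
    tokens: List[Token] = []
    i = 0
    while i < len(text):
        ch = text[i]
        if ch.isspace():
            i += 1
        elif ch.isdigit():
            j = i
            while j < len(text) and text[j].isdigit():
                j += 1
            tokens.append(int(text[i:j]))
            i = j
        elif ch in PRECEDENCE or ch in "()":
            tokens.append(ch)
            i += 1
        else:
            raise ValueError(f"unexpected character {ch!r}")
    return tokens


def to_postfix(tokens: List[Token]) -> List[Token]:
    """Pass 1: reorder infix tokens into postfix with the shunting-yard algorithm."""
    output: List[Token] = []
    operators: List[str] = []
    for tok in tokens:
        if isinstance(tok, int):
            output.append(tok)
        elif tok == "(":
            operators.append(tok)
        elif tok == ")":
            while operators and operators[-1] != "(":
                output.append(operators.pop())
            if not operators:
                raise ValueError("unbalanced parentheses")
            operators.pop()  # discard the matching "("
        else:
            # Pop operators of higher or equal precedence (left associativity).
            while operators and operators[-1] != "(" and PRECEDENCE[operators[-1]] >= PRECEDENCE[tok]:
                output.append(operators.pop())
            operators.append(tok)
    while operators:
        op = operators.pop()
        if op == "(":
            raise ValueError("unbalanced parentheses")
        output.append(op)
    return output


def to_instructions(postfix: List[Token]) -> List[Instruction]:
    """Pass 2: emit instructions for a hypothetical stack machine."""
    names = {"+": "ADD", "-": "SUB", "*": "MUL"}
    return [("PUSH", tok) if isinstance(tok, int) else (names[tok], None) for tok in postfix]


def run(code: List[Instruction]) -> int:
    """Execute the stack-machine code, standing in for the real hardware."""
    stack: List[int] = []
    for op, arg in code:
        if op == "PUSH":
            stack.append(arg)
        else:
            right = stack.pop()
            left = stack.pop()
            if op == "ADD":
                stack.append(left + right)
            elif op == "SUB":
                stack.append(left - right)
            else:
                stack.append(left * right)
    return stack.pop()


code = to_instructions(to_postfix(tokenize("2 + 3 * (4 - 1)")))
print(run(code))  # 11
```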

Many high-level programming languages have both compilers and interpreters, but some languages, such as LISP and BASIC, were traditionally designed so that their programs are executed by an interpreter.

Difference between compiler and interpreter

A compiler converts high-level instructions into machine language, while an interpreter converts high-level instructions into an intermediate form. A compiler translates the entire program before execution, whereas an interpreter translates the first line, executes it, then moves on to the next line, and so on. A compiler produces a list of errors after the compilation process, while an interpreter stops translating at the first error. A compiler creates an independent executable file, whereas an interpreted program needs the interpreter every time it runs.
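The practical differences above can be demonstrated with a toy line-oriented language. The first difference is whole-program translation versus line-by-line execution. The second is a full error list versus stopping at the first error. In this Python sketch, the language (with its `set N`, `add N` and `print` statements) and all function names are hypothetical. `compile_program` translates every line first and reports all errors before anything runs. `interpret` translates and executes one line at a time and stops at the first bad line.

```python
from typing import List, Optional, Tuple

Instruction = Tuple[str, int]


class ToySyntaxError(Exception):
    """Raised when a line of the toy language cannot be translated."""


def translate_line(line: str) -> Instruction:
    """Translate one source line into an (operation, argument) pair."""
    parts = line.split()
    if parts == ["print"]:
        return ("print", 0)
    if len(parts) == 2 and parts[0] in ("set", "add"):
        try:
            return (parts[0], int(parts[1]))
        except ValueError:
            pass  # fall through to the error below
    raise ToySyntaxError(f"cannot translate {line!r}")


def execute(instr: Instruction, acc: int, output: List[int]) -> int:
    """Run one instruction against the accumulator and return its new value."""
    op, arg = instr
    if op == "set":
        return arg
    if op == "add":
        return acc + arg
    output.append(acc)  # the "print" statement
    return acc


def compile_program(source: str) -> Tuple[List[Instruction], List[str]]:
    """Translate the whole program first, collecting every error."""
    code: List[Instruction] = []
    errors: List[str] = []
    for number, line in enumerate(source.splitlines(), start=1):
        try:
            code.append(translate_line(line))
        except ToySyntaxError as err:
            errors.append(f"line {number}: {err}")
    # Only an error-free program yields runnable code (our stand-in for an executable).
    return (code if not errors else []), errors


def run_compiled(code: List[Instruction]) -> List[int]:
    """Execute already-translated code; no translation happens here."""
    acc = 0
    output: List[int] = []
    for instr in code:
        acc = execute(instr, acc, output)
    return output


def interpret(source: str) -> Tuple[List[int], Optional[str]]:
    """Translate and execute one line at a time, stopping at the first error."""
    acc = 0
    output: List[int] = []
    for number, line in enumerate(source.splitlines(), start=1):
        try:
            instr = translate_line(line)
        except ToySyntaxError as err:
            return output, f"line {number}: {err}"
        acc = execute(instr, acc, output)
    return output, None


program = "set 2\nprint\nmul 3\nadd x\nprint"
code, errors = compile_program(program)
print(errors)              # ["line 3: cannot translate 'mul 3'", "line 4: cannot translate 'add x'"]
print(interpret(program))  # ([2], "line 3: cannot translate 'mul 3'")
```

The compiler rejects the program with both errors and runs nothing. The interpreter runs line 2 (printing 2) before it reaches the first bad line and stops there.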
