Lec 05 - Cache Memory

Uploaded by Mark MO

Concepts and Principles

A cache memory is a small but fast memory element in which the contents of the most commonly accessed locations are maintained. It is placed between the main memory and the CPU.

A cache can use faster electronics than main memory, at a greater expense in terms of money, size, and power requirements. A cache memory also has fewer locations than a main memory, which reduces the access time. The cache is placed both physically and logically closer to the CPU than the main memory, and this placement avoids communication delays over a shared bus.

The cache for this example consists of 2^14 slots into which main memory blocks are placed. There are more main memory blocks than there are cache slots, and any one of the 2^27 main memory blocks can be mapped into each cache slot (with only one block placed in a slot at a time).

Tag bits - keep track of which one of the 2^27 possible blocks is in each slot.

Valid bit - indicates whether or not the slot holds a block that belongs to the program being executed.

Dirty bit - keeps track of whether or not a block has been modified while it is in the cache. A slot that is modified must be written back to the main memory before the slot is reused for another block.
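The bookkeeping bits described above can be sketched as a small record per slot. This is only an illustrative model, not the lecture's own implementation: the names `Slot` and `load_block` are hypothetical, and main memory is assumed to be a dictionary keyed by block number (so the tag is simply the block number).

```python
from dataclasses import dataclass, field

@dataclass
class Slot:
    valid: bool = False   # does the slot hold a usable block?
    dirty: bool = False   # has the block been modified while cached?
    tag: int = 0          # which main memory block is in the slot (assumed: block number)
    data: list = field(default_factory=list)

def load_block(slot, new_tag, new_data, main_memory):
    """Place a new block in `slot`; write the old block back first if it is dirty.

    Returns True if a write-back to main memory was needed.
    """
    wrote_back = False
    if slot.valid and slot.dirty:
        main_memory[slot.tag] = list(slot.data)  # save the modified block
        wrote_back = True
    slot.valid, slot.dirty = True, False
    slot.tag, slot.data = new_tag, list(new_data)
    return wrote_back
```

A clean slot can be overwritten directly; only a slot that is both valid and dirty costs an extra transfer to main memory.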

This scheme is called direct mapping because each cache slot corresponds to an explicit set of main memory blocks. For a direct mapped cache, each main memory block can be mapped to only one slot, but each slot can receive more than one block.
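A minimal sketch of how a block number could be turned into a slot number and a tag under direct mapping. It assumes 2^14 slots and 2^27 blocks and the common modulo placement rule, which the text does not spell out; `direct_map` is a hypothetical helper name.

```python
NUM_SLOTS = 2 ** 14    # assumed number of cache slots
NUM_BLOCKS = 2 ** 27   # assumed number of main memory blocks

def direct_map(block_number):
    """Return (slot, tag) for a main memory block in a direct-mapped cache."""
    if not 0 <= block_number < NUM_BLOCKS:
        raise ValueError("block number out of range")
    slot = block_number % NUM_SLOTS    # the only slot this block may occupy
    tag = block_number // NUM_SLOTS    # tells apart the blocks that share the slot
    return slot, tag

# Number of main memory blocks competing for each slot.
blocks_per_slot = NUM_BLOCKS // NUM_SLOTS
```

Under these assumptions, blocks 0, 2^14, 2 * 2^14, and so on all land in slot 0 and differ only in their tag.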

The set associative mapping scheme combines the simplicity of direct mapping with the flexibility of associative mapping.
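One way to picture the combination: the slots are grouped into sets, a block is direct mapped to a set, and it may then occupy any slot within that set. The sketch below assumes modulo placement and a two-way organization by default; both are illustrative choices, and `set_associative_map` is a hypothetical name.

```python
def set_associative_map(block_number, num_slots=2 ** 14, ways=2):
    """Return (set_index, tag) for a block in a set associative cache.

    `ways` is the number of slots per set (an assumed parameter).
    """
    num_sets = num_slots // ways
    set_index = block_number % num_sets   # direct-mapped choice of set
    tag = block_number // num_sets        # compared against every slot in the set
    return set_index, tag
```

With `ways=1` this reduces to direct mapping, and with `ways=num_slots` there is a single set, which behaves like associative mapping.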

Replacement policies: least recently used (LRU), first-in first-out (FIFO), least frequently used (LFU), and random.

For the LRU policy, a time stamp is added to each slot, which is updated when any slot is accessed. When a slot must be freed for a new block, the contents of the least recently used slot, as identified by the age of the corresponding time stamp, are discarded and the new block is written to that slot.
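The LRU policy can be sketched with a simple counter standing in for the time stamp. This is a toy model under stated assumptions: the class name `LRUCache` is hypothetical, blocks are identified by number, and the cache is treated as fully associative.

```python
class LRUCache:
    """Toy model of LRU replacement using one time stamp per cached block."""

    def __init__(self, num_slots):
        self.num_slots = num_slots
        self.stamps = {}   # block number -> time stamp of its last access
        self.clock = 0     # logical clock, advanced on every access

    def access(self, block):
        """Access a block; return the evicted block number, or None."""
        self.clock += 1
        evicted = None
        if block not in self.stamps and len(self.stamps) == self.num_slots:
            # The oldest time stamp marks the least recently used block.
            evicted = min(self.stamps, key=self.stamps.get)
            del self.stamps[evicted]
        self.stamps[block] = self.clock
        return evicted
```

For example, with two slots and the access sequence 1, 2, 1, 3, block 2 is the one discarded, because block 1 was touched more recently.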

The LFU policy works similarly, except that only one slot is updated at a time by incrementing a frequency counter that is attached to each slot. When a slot is needed for a new block, the least frequently used slot is freed.
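A comparable sketch for LFU keeps a frequency counter per cached block instead of a time stamp. The name `LFUCache` is hypothetical, and the tie-breaking rule (the block cached earliest among equally infrequent ones is freed) is an assumption the text does not specify.

```python
class LFUCache:
    """Toy model of LFU replacement using one frequency counter per block."""

    def __init__(self, num_slots):
        self.num_slots = num_slots
        self.counts = {}   # block number -> number of accesses while cached

    def access(self, block):
        """Access a block; return the evicted block number, or None."""
        evicted = None
        if block not in self.counts:
            if len(self.counts) == self.num_slots:
                # Smallest counter marks the least frequently used block.
                evicted = min(self.counts, key=self.counts.get)
                del self.counts[evicted]
            self.counts[block] = 0
        self.counts[block] += 1   # only the accessed block's counter changes
        return evicted
```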

The FIFO policy replaces slots in round-robin fashion, one after the next in the order of their physical locations in the cache.
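The round-robin behaviour can be modelled with a single pointer that walks through the slots in physical order. This is a sketch only: `FIFOCache` is a hypothetical name, and it assumes the pointer advances on a miss and stays put on a hit.

```python
class FIFOCache:
    """Toy model of FIFO replacement: slots are reused in round-robin order."""

    def __init__(self, num_slots):
        self.slots = [None] * num_slots   # block number held by each slot
        self.next_slot = 0                # slot that will be replaced next

    def access(self, block):
        """Access a block; return the evicted block number, or None."""
        if block in self.slots:
            return None                   # hit: replacement order is unchanged
        evicted = self.slots[self.next_slot]
        self.slots[self.next_slot] = block
        self.next_slot = (self.next_slot + 1) % len(self.slots)
        return evicted
```

Unlike LRU, a hit on an old block does not protect it: it is still replaced when the pointer reaches its slot.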

The random replacement policy simply chooses a slot at random.
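Random replacement needs no per-slot bookkeeping at all, which a short sketch makes plain. The helper name `choose_victim` and the optional `rng` parameter are illustrative assumptions.

```python
import random

def choose_victim(num_slots, rng=random):
    """Pick the index of the slot to replace, uniformly at random."""
    return rng.randrange(num_slots)
```

Passing a seeded `random.Random` instance as `rng` makes a simulation repeatable.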

Write policies: write-through and write-back.
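The difference between the two write policies can be sketched as follows, using the usual definitions: write-through updates main memory on every write, while write-back updates only the cache and defers the memory update until the entry is evicted. The function names and the dictionary-based cache and memory are hypothetical simplifications.

```python
def write_word(cache, memory, dirty, address, value, policy):
    """Write `value` at `address` under the given write policy."""
    cache[address] = value
    if policy == "write-through":
        memory[address] = value   # main memory is updated immediately
    elif policy == "write-back":
        dirty.add(address)        # main memory is updated later, on eviction
    else:
        raise ValueError("unknown policy: " + policy)

def evict(cache, memory, dirty, address):
    """Remove an entry from the cache, writing it back if it is dirty."""
    value = cache.pop(address)
    if address in dirty:
        memory[address] = value
        dirty.discard(address)
```

Write-back saves memory traffic when the same location is written repeatedly, at the cost of main memory being temporarily out of date.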

Das könnte Ihnen auch gefallen

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- MCS-011 Solved Assignment 2015-16 IpDokument12 SeitenMCS-011 Solved Assignment 2015-16 IpJigar NanduNoch keine Bewertungen

- 01-19 Diagnostic Trouble Code Table PDFDokument40 Seiten01-19 Diagnostic Trouble Code Table PDFmefisto06cNoch keine Bewertungen

- OTM Reports FTI Training ManualDokument78 SeitenOTM Reports FTI Training ManualAquib Khan100% (2)

- Electric Power Station PDFDokument344 SeitenElectric Power Station PDFMukesh KumarNoch keine Bewertungen

- Battery Power Management For Portable Devices PDFDokument259 SeitenBattery Power Management For Portable Devices PDFsarikaNoch keine Bewertungen

- Tailless AircraftDokument17 SeitenTailless AircraftVikasVickyNoch keine Bewertungen

- Quality Risk ManagementDokument29 SeitenQuality Risk ManagementmmmmmNoch keine Bewertungen

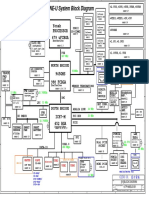

- Clevo M620ne-UDokument34 SeitenClevo M620ne-UHh woo't hoofNoch keine Bewertungen

- Astm F 30 - 96 R02 - RJMWDokument5 SeitenAstm F 30 - 96 R02 - RJMWphaindikaNoch keine Bewertungen

- Wastewater Treatment Options For Paper Mills Using Waste Paper/imported Pulps As Raw Materials: Bangladesh PerspectiveDokument4 SeitenWastewater Treatment Options For Paper Mills Using Waste Paper/imported Pulps As Raw Materials: Bangladesh PerspectiveKool LokeshNoch keine Bewertungen

- Brochure sp761lfDokument10 SeitenBrochure sp761lfkathy fernandezNoch keine Bewertungen

- Circuito PCB Control Pedal V3 TerminadoDokument1 SeiteCircuito PCB Control Pedal V3 TerminadoMarcelo PereiraNoch keine Bewertungen

- ZXComputingDokument100 SeitenZXComputingozzy75Noch keine Bewertungen

- Cinegy User ManualDokument253 SeitenCinegy User ManualNizamuddin KaziNoch keine Bewertungen

- RE 2017 EOT Brochure 4ADokument20 SeitenRE 2017 EOT Brochure 4AOrangutan SolutionsNoch keine Bewertungen

- METCALDokument28 SeitenMETCALa1006aNoch keine Bewertungen

- SunstarDokument189 SeitenSunstarSarvesh Chandra SaxenaNoch keine Bewertungen

- Standard Costing ExercisesDokument3 SeitenStandard Costing ExercisesNikki Garcia0% (2)

- Manual Construction Standards Completo CorregidozDokument240 SeitenManual Construction Standards Completo CorregidozJose DiazNoch keine Bewertungen

- Meitrack Gprs Protocol v1.6Dokument45 SeitenMeitrack Gprs Protocol v1.6monillo123Noch keine Bewertungen

- Design For X (DFX) Guidance Document: PurposeDokument3 SeitenDesign For X (DFX) Guidance Document: PurposeMani Rathinam RajamaniNoch keine Bewertungen

- CS 303e, Assignment #10: Practice Reading and Fixing Code Due: Sunday, April 14, 2019 Points: 20Dokument2 SeitenCS 303e, Assignment #10: Practice Reading and Fixing Code Due: Sunday, April 14, 2019 Points: 20Anonymous pZ2FXUycNoch keine Bewertungen

- 09T030 FinalDokument14 Seiten09T030 FinalKriengsak RuangdechNoch keine Bewertungen

- Anna University:: Chennai - 600025. Office of The Controller of Examinations Provisional Results of Nov. / Dec. Examination, 2020. Page 1/4Dokument4 SeitenAnna University:: Chennai - 600025. Office of The Controller of Examinations Provisional Results of Nov. / Dec. Examination, 2020. Page 1/4Muthu KumarNoch keine Bewertungen

- Technical Data For Elevator Buckets - Bucket ElevatorDokument1 SeiteTechnical Data For Elevator Buckets - Bucket ElevatorFitra VertikalNoch keine Bewertungen

- Universal USB Installer - Easy As 1 2 3 - USB Pen Drive LinuxDokument4 SeitenUniversal USB Installer - Easy As 1 2 3 - USB Pen Drive LinuxAishwarya GuptaNoch keine Bewertungen

- NanoDokument10 SeitenNanoRavi TejaNoch keine Bewertungen

- Seminar Report ON "Linux"Dokument17 SeitenSeminar Report ON "Linux"Ayush BhatNoch keine Bewertungen

- Stair Pressurization - ALLIED CONSULTANTDokument8 SeitenStair Pressurization - ALLIED CONSULTANTraifaisalNoch keine Bewertungen

- Review of C++ Programming: Sheng-Fang HuangDokument49 SeitenReview of C++ Programming: Sheng-Fang HuangIfat NixNoch keine Bewertungen