09-Chebyshev Estimator

Chebyshev Estimator

Presented by: Orr Srour

References

- Yonina Eldar, Amir Beck and Marc Teboulle, "A Minimax Chebyshev Estimator for Bounded Error Estimation," to appear in IEEE Trans. Signal Proc. (2007).
- Amir Beck and Yonina C. Eldar, "Regularization in Regression with Bounded Noise: A Chebyshev Center Approach," SIAM J. Matrix Anal. Appl. 29(2), 606-625 (2007).
- Jacob (Slava) Chernoi and Yonina C. Eldar, "Extending the Chebyshev Center Estimation Technique," TBA.

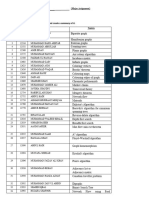

Chebyshev Center - Agenda

- Introduction
- CC - Basic Formulation
- CC - Geometric Interpretation
- CC - So why not...?
- Relaxed Chebyshev Center (RCC)
  - Formulation of the problem
  - Relation with the original CC
  - Feasibility of the original CC
  - Feasibility of the RCC
  - CLS as a CC relaxation
  - CLS vs. RCC
  - Constraints formulation
- Extended Chebyshev Center

Notations

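- $\mathbf{y}$ (boldface lowercase): vector
- $y_i$: $i$th component of the vector $\mathbf{y}$
- $\mathbf{A}$ (boldface uppercase): matrix
- $\hat{\mathbf{x}}$ (hat): the estimated vector of $\mathbf{x}$
- $\mathbf{A} \succ \mathbf{B}$, $\mathbf{A} \succeq \mathbf{B}$: $\mathbf{A} - \mathbf{B}$ is PD, PSD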

The Problem

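Estimate the deterministic parameter vector $\mathbf{x} \in \mathbb{R}^m$ from observations $\mathbf{y} \in \mathbb{R}^n$ with:

$$\mathbf{y} = \mathbf{A}\mathbf{x} + \mathbf{w}$$

- $\mathbf{A}$: $n \times m$ model matrix
- $\mathbf{w}$: perturbation vector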

LS Solution

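When nothing else is known, a common approach is to find the vector $\hat{\mathbf{x}}$ that minimizes the data error $\|\mathbf{A}\mathbf{x} - \mathbf{y}\|^2$.

Known as least squares, this solution can be written explicitly:

$$\hat{\mathbf{x}}_{\mathrm{LS}} = (\mathbf{A}^*\mathbf{A})^{-1}\mathbf{A}^*\mathbf{y}$$

(Assuming $\mathbf{A}$ has full column rank.)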
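As a quick numerical check of the closed form, the following minimal NumPy sketch compares $(\mathbf{A}^*\mathbf{A})^{-1}\mathbf{A}^*\mathbf{y}$ with NumPy's `np.linalg.lstsq`. The random $8 \times 3$ model matrix and the true vector are illustrative assumptions, and the data are noiseless, so both estimates should recover $\mathbf{x}$.

```python
import numpy as np

def ls_estimate(A, y):
    """Least-squares estimate x_LS = (A^* A)^{-1} A^* y (A assumed full column rank)."""
    AH = A.conj().T                       # A^* (conjugate transpose; equals A^T for real A)
    return np.linalg.solve(AH @ A, AH @ y)

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3))           # hypothetical n = 8, m = 3 model matrix
x_true = np.array([1.0, -2.0, 0.5])
y = A @ x_true                            # noiseless observations for the check
x_ls = ls_estimate(A, y)

# The normal-equations form agrees with NumPy's SVD-based least-squares solver
x_ref, *_ = np.linalg.lstsq(A, y, rcond=None)
```

In practice an SVD- or QR-based solver such as `lstsq` is usually preferred over forming $\mathbf{A}^*\mathbf{A}$, since the normal equations square the condition number of $\mathbf{A}$.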

But

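In practical situations $\mathbf{A}$ is often ill-conditioned, which leads to poor LS results: small perturbations of $\mathbf{y}$ are strongly amplified in $\hat{\mathbf{x}}_{\mathrm{LS}}$.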
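A small sketch of why ill-conditioning hurts: with the Hilbert matrix as a hypothetical ill-conditioned $\mathbf{A}$, a perturbation of $\mathbf{y}$ along the left singular vector of the smallest singular value $\sigma_{\min}$ changes the LS solution by exactly the factor $1/\sigma_{\min}$ (up to rounding).

```python
import numpy as np

# Hypothetical ill-conditioned model: the 8 x 8 Hilbert matrix H[i, j] = 1 / (i + j + 1)
n = 8
idx = np.arange(n)
A = 1.0 / (idx[:, None] + idx[None, :] + 1.0)

U, s, Vt = np.linalg.svd(A)
cond = s[0] / s[-1]                       # condition number sigma_max / sigma_min

# Perturb y along the left singular vector belonging to the smallest singular value
eps = 1e-6
dy = eps * U[:, -1]
dx = np.linalg.solve(A, dy)               # change in the LS solution (A is square and invertible)

# ||dx|| / ||dy|| = 1 / sigma_min: the perturbation is amplified enormously
amplification = np.linalg.norm(dx) / np.linalg.norm(dy)
```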

Regularized LS

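Assume we have some simple prior information regarding the parameter vector $\mathbf{x}$, such as a bound on $\|\mathbf{L}\mathbf{x}\|^2$.

Then we can use the regularized least squares (RLS):

$$\hat{\mathbf{x}}_{\mathrm{RLS}} = \arg\min_{\mathbf{x}} \left\{ \|\mathbf{A}\mathbf{x} - \mathbf{y}\|^2 : \|\mathbf{L}\mathbf{x}\|^2 \le \eta \right\}$$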
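One common way to compute the constrained RLS is through its Lagrangian (Tikhonov) form $\mathbf{x}(\lambda) = (\mathbf{A}^T\mathbf{A} + \lambda\mathbf{L}^T\mathbf{L})^{-1}\mathbf{A}^T\mathbf{y}$, choosing $\lambda \ge 0$ so that $\|\mathbf{L}\mathbf{x}(\lambda)\|^2 = \eta$ whenever the LS solution violates the bound. The sketch below is an illustration under stated assumptions: real data, $\mathbf{A}^T\mathbf{A} + \lambda\mathbf{L}^T\mathbf{L}$ invertible for all $\lambda \ge 0$, and the names `rls_estimate` and `eta` are ours.

```python
import numpy as np

def rls_estimate(A, y, L, eta, tol=1e-12, max_iter=200):
    """Sketch: argmin ||Ax - y||^2 s.t. ||Lx||^2 <= eta via bisection on the Lagrange multiplier."""
    AtA, Aty, LtL = A.T @ A, A.T @ y, L.T @ L

    def x_of(lam):
        return np.linalg.solve(AtA + lam * LtL, Aty)

    x = x_of(0.0)                          # unconstrained LS solution
    if np.sum((L @ x) ** 2) <= eta:        # constraint inactive: RLS coincides with LS
        return x
    lo, hi = 0.0, 1.0
    for _ in range(200):                   # grow hi until the bound is satisfied
        if np.sum((L @ x_of(hi)) ** 2) <= eta:
            break
        hi *= 2.0
    for _ in range(max_iter):              # ||L x(lam)||^2 is non-increasing in lam
        mid = 0.5 * (lo + hi)
        if np.sum((L @ x_of(mid)) ** 2) > eta:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return x_of(hi)                        # hi side always satisfies the bound

rng = np.random.default_rng(5)
A = rng.standard_normal((10, 4))           # hypothetical data
y = rng.standard_normal(10)
L = np.eye(4)                              # L = I: plain norm bound ||x||^2 <= eta
x_ls = np.linalg.lstsq(A, y, rcond=None)[0]
eta = 0.25 * float(x_ls @ x_ls)            # bound chosen so that the constraint is active
x_rls = rls_estimate(A, y, L, eta)
```

Bisection is valid here because $\|\mathbf{L}\mathbf{x}(\lambda)\|^2$ decreases monotonically as $\lambda$ grows.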

But

But what if we also have some prior information regarding the noise vector?

What if we have more complicated information regarding the parameter vector $\mathbf{x}$?

Assumptions

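From now on we assume that the noise is norm-bounded:

$$\|\mathbf{w}\|^2 \le \rho$$

and that $\mathbf{x}$ lies in a set defined by:

$$C = \left\{ \mathbf{x} \in \mathbb{R}^m : f_i(\mathbf{x}) = \mathbf{x}^T\mathbf{Q}_i\mathbf{x} + 2\mathbf{g}_i^T\mathbf{x} + d_i \le 0,\ 1 \le i \le k \right\}, \qquad \mathbf{Q}_i \succeq 0,\ \mathbf{g}_i \in \mathbb{R}^m,\ d_i \in \mathbb{R}$$

(hence $C$ is the intersection of $k$ ellipsoids)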

Assumptions

The feasible parameter set of $\mathbf{x}$ is then given by:

$$Q = \left\{ \mathbf{x} : \mathbf{x} \in C,\ \|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2 \le \rho \right\}$$

(hence $Q$ is compact)

$Q$ is assumed to have a non-empty interior.
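Membership in $Q$ is just a list of quadratic inequalities. A minimal sketch follows; the function name `in_Q`, the tolerance argument and the example data (unit-ball $C$, $\mathbf{A} = \mathbf{I}$, $\mathbf{y} = \mathbf{0}$, $\rho = 0.25$) are hypothetical.

```python
import numpy as np

def in_Q(x, A, y, rho, Qs, gs, ds, tol=0.0):
    """Check x in Q = {x in C : ||y - Ax||^2 <= rho}, C = {x : x^T Q_i x + 2 g_i^T x + d_i <= 0}."""
    if np.sum((y - A @ x) ** 2) > rho + tol:          # noise-bound (data) constraint
        return False
    return all(x @ Qi @ x + 2 * gi @ x + di <= tol     # the k quadratic constraints defining C
               for Qi, gi, di in zip(Qs, gs, ds))

# Hypothetical example: C is the unit ball (Q_1 = I, g_1 = 0, d_1 = -1)
A, y = np.eye(2), np.zeros(2)
Qs, gs, ds = [np.eye(2)], [np.zeros(2)], [-1.0]
```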

Constrained Least Squares (CLS)

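Given the prior knowledge $\mathbf{x} \in C$, a popular estimation strategy is:

$$\hat{\mathbf{x}}_{\mathrm{CLS}} = \arg\min_{\mathbf{x} \in C} \|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2$$

- Minimization of the data error over $C$
- But: the noise constraint is unused

More importantly, it doesn't necessarily lead to a small estimation error $\|\hat{\mathbf{x}} - \mathbf{x}\|^2$.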
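CLS is a convex problem, so simple first-order methods apply. A minimal sketch, assuming $C$ is a single Euclidean ball so that the projection onto $C$ has a closed form (for a general intersection of ellipsoids it does not), solved by projected gradient descent with step $1/(2\|\mathbf{A}\|_2^2)$. The name `cls_ball` and the example data are illustrative; note that, as the slide says, $\rho$ plays no role.

```python
import numpy as np

def cls_ball(A, y, radius, iters=500):
    """Sketch of CLS over C = {x : ||x|| <= radius} by projected gradient descent."""
    step = 1.0 / (2.0 * np.linalg.norm(A, 2) ** 2)    # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        grad = 2.0 * A.T @ (A @ x - y)                 # gradient of ||Ax - y||^2
        z = x - step * grad
        nz = np.linalg.norm(z)
        x = z if nz <= radius else z * (radius / nz)   # Euclidean projection onto the ball
    return x

# Hypothetical data: the unconstrained minimizer (3, 0) lies outside the unit ball
x_cls = cls_ball(np.diag([1.0, 2.0]), np.array([3.0, 0.0]), 1.0)
```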

Chebyshev Center

The goal: an estimator with small estimation error.

Suggested method: minimize the worst-case error over all feasible vectors:

$$\min_{\hat{\mathbf{x}}} \max_{\mathbf{x} \in Q} \|\hat{\mathbf{x}} - \mathbf{x}\|^2$$

Chebyshev Center

Geometric Interpretation

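Alternative representation:

$$\min_{\hat{\mathbf{x}}, r} \left\{ r : \|\hat{\mathbf{x}} - \mathbf{x}\|^2 \le r \ \text{for all}\ \mathbf{x} \in Q \right\}$$

-> find the smallest ball (hence its center $\hat{\mathbf{x}}$ and its radius $\sqrt{r}$) which encloses the set $Q$.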
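When $Q$ is replaced by a finite point cloud, the Chebyshev center is the center of the minimum enclosing ball. The sketch below uses the Bădoiu-Clarkson iteration, an approximation algorithm swapped in purely for illustration (it is not the method of the references); the square's corners are hypothetical data, and `r` follows the slide's convention of a squared radius.

```python
import numpy as np

def enclosing_ball_center(points, iters=10000):
    """Approximate minimum enclosing ball center of a finite point set (Badoiu-Clarkson)."""
    c = points[0].astype(float)
    for i in range(1, iters + 1):
        far = points[np.argmax(np.sum((points - c) ** 2, axis=1))]  # farthest point from c
        c = c + (far - c) / (i + 1)                                  # move toward it
    radius_sq = np.max(np.sum((points - c) ** 2, axis=1))            # worst-case squared error
    return c, radius_sq

# Hypothetical finite "Q": corners of the square [-1, 1]^2 (true center 0, r = 2)
corners = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
center, r = enclosing_ball_center(corners)
```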

[Figure: Chebyshev Center, geometric interpretation]

Chebyshev Center

This problem is more commonly known as finding the Chebyshev center.

Pafnuty Lvovich Chebyshev

16.5.1821 - 08.12.1894

Chebyshev Center

The problem

- The inner maximization is non-convex.
- Computing the CC is a hard optimization problem.
- It can be solved efficiently over the complex domain for the intersection of 2 ellipsoids.

Relaxed Chebyshev Center (RCC)

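Let us consider the inner maximization first:

$$\max_{\mathbf{x}} \left\{ \|\hat{\mathbf{x}} - \mathbf{x}\|^2 : f_i(\mathbf{x}) \le 0,\ 0 \le i \le k \right\}, \qquad f_i(\mathbf{x}) = \mathbf{x}^T\mathbf{Q}_i\mathbf{x} + 2\mathbf{g}_i^T\mathbf{x} + d_i$$

and:

$$\mathbf{Q}_0 = \mathbf{A}^T\mathbf{A}, \qquad \mathbf{g}_0 = -\mathbf{A}^T\mathbf{y}, \qquad d_0 = \|\mathbf{y}\|^2 - \rho$$

so that $f_0(\mathbf{x}) = \|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2 - \rho$ encodes the noise constraint.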
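The identity $f_0(\mathbf{x}) = \|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2 - \rho$ follows from expanding the square; a quick numerical check with hypothetical random data (the helper names are ours):

```python
import numpy as np

def data_constraint(A, y, rho):
    """Return (Q0, g0, d0) so that f0(x) = x^T Q0 x + 2 g0^T x + d0 = ||y - Ax||^2 - rho."""
    return A.T @ A, -A.T @ y, float(y @ y) - rho

def f_quad(x, Q, g, d):
    """Evaluate the quadratic f(x) = x^T Q x + 2 g^T x + d."""
    return x @ Q @ x + 2.0 * g @ x + d

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 3))     # hypothetical model matrix
y = rng.standard_normal(5)
rho = 0.7
x = rng.standard_normal(3)
Q0, g0, d0 = data_constraint(A, y, rho)
```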

Relaxed Chebyshev Center (RCC)

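Denoting $\boldsymbol{\Delta} = \mathbf{x}\mathbf{x}^T$, we can write the optimization problem as:

$$\max_{(\mathbf{x}, \boldsymbol{\Delta}) \in G} \left\{ \|\hat{\mathbf{x}}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$

with:

$$G = \left\{ (\mathbf{x}, \boldsymbol{\Delta}) : f_i(\mathbf{x}, \boldsymbol{\Delta}) \le 0,\ 0 \le i \le k,\ \boldsymbol{\Delta} = \mathbf{x}\mathbf{x}^T \right\}, \qquad f_i(\mathbf{x}, \boldsymbol{\Delta}) = \mathrm{Tr}(\mathbf{Q}_i\boldsymbol{\Delta}) + 2\mathbf{g}_i^T\mathbf{x} + d_i$$

The objective is concave (linear) in $(\mathbf{x}, \boldsymbol{\Delta})$, but $G$ is not convex.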

Relaxed Chebyshev Center (RCC)

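Let us replace $G$ with the convex set:

$$T = \left\{ (\mathbf{x}, \boldsymbol{\Delta}) : f_i(\mathbf{x}, \boldsymbol{\Delta}) \le 0,\ 0 \le i \le k,\ \boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T \right\}$$

and write the RCC as the solution of the convex problem:

$$\min_{\hat{\mathbf{x}}} \max_{(\mathbf{x}, \boldsymbol{\Delta}) \in T} \left\{ \|\hat{\mathbf{x}}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$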
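The lifting and the relaxation can be checked on small examples: for $\boldsymbol{\Delta} = \mathbf{x}\mathbf{x}^T$ the lifted constraint equals the original quadratic, while $T$ also admits points with $\boldsymbol{\Delta} \ne \mathbf{x}\mathbf{x}^T$. A sketch with hypothetical helper names and a single unit-ball constraint as data:

```python
import numpy as np

def f_lifted(x, Delta, Q, g, d):
    """Lifted constraint f(x, Delta) = Tr(Q Delta) + 2 g^T x + d (linear in (x, Delta))."""
    return np.trace(Q @ Delta) + 2.0 * g @ x + d

def in_T(x, Delta, Qs, gs, ds, tol=1e-12):
    """(x, Delta) in T: all lifted constraints hold and Delta - x x^T is PSD."""
    if any(f_lifted(x, Delta, Q, g, d) > tol for Q, g, d in zip(Qs, gs, ds)):
        return False
    return np.linalg.eigvalsh(Delta - np.outer(x, x)).min() >= -tol

# Hypothetical data: one constraint, the unit ball (Q = I, g = 0, d = -1)
Qs, gs, ds = [np.eye(2)], [np.zeros(2)], [-1.0]
x = np.array([0.6, 0.0])
```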

Relaxed Chebyshev Center (RCC)

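- $T$ is convex and bounded.
- The objective is concave (linear) in $(\mathbf{x}, \boldsymbol{\Delta})$.
- The objective is convex in $\hat{\mathbf{x}}$.

We can therefore replace the order, min-max to max-min:

$$\max_{(\mathbf{x}, \boldsymbol{\Delta}) \in T} \min_{\hat{\mathbf{x}}} \left\{ \|\hat{\mathbf{x}}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$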

Relaxed Chebyshev Center (RCC)

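The inner minimization is a simple quadratic problem, with solution $\hat{\mathbf{x}} = \mathbf{x}$.

Thus the RCC problem can be written as:

$$\max_{(\mathbf{x}, \boldsymbol{\Delta}) \in T} \left\{ -\|\mathbf{x}\|^2 + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$

Note: this is a convex optimization problem.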

RCC as an upper bound for CC

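The RCC is not generally equal to the CC (except for $k = 1$ over the complex domain).

Since $G \subseteq T$, we have:

$$\min_{\hat{\mathbf{x}}} \max_{\mathbf{x} \in Q} \|\hat{\mathbf{x}} - \mathbf{x}\|^2 = \min_{\hat{\mathbf{x}}} \max_{(\mathbf{x}, \boldsymbol{\Delta}) \in G} \left\{ \|\hat{\mathbf{x}}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} + \mathrm{Tr}(\boldsymbol{\Delta}) \right\} \le \min_{\hat{\mathbf{x}}} \max_{(\mathbf{x}, \boldsymbol{\Delta}) \in T} \left\{ \|\hat{\mathbf{x}}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$

The left-hand side is the original CC problem. Hence the RCC provides an upper bound on the optimal minimax value.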

RCC Solution

Theorem: The RCC estimator is given by:

$$\hat{\mathbf{x}}_{\mathrm{RCC}} = -\left( \sum_{i=0}^{k} \alpha_i \mathbf{Q}_i \right)^{-1} \left( \sum_{i=0}^{k} \alpha_i \mathbf{g}_i \right)$$

RCC Solution

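where $(\alpha_0, \ldots, \alpha_k)$ is an optimal solution of:

$$\min_{\alpha_0, \ldots, \alpha_k} \left\{ \left( \sum_{i=0}^{k} \alpha_i \mathbf{g}_i \right)^T \left( \sum_{i=0}^{k} \alpha_i \mathbf{Q}_i \right)^{-1} \left( \sum_{i=0}^{k} \alpha_i \mathbf{g}_i \right) - \sum_{i=0}^{k} \alpha_i d_i \right\}$$

subject to:

$$\sum_{i=0}^{k} \alpha_i \mathbf{Q}_i \succeq \mathbf{I}, \qquad \alpha_i \ge 0,\ 0 \le i \le k$$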

RCC Solution as SDP

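Or as a semidefinite program (SDP):

$$\min_{t, \alpha_0, \ldots, \alpha_k} \left\{ t - \sum_{i=0}^{k} \alpha_i d_i \right\}$$

s.t.:

$$\begin{pmatrix} \sum_{i=0}^{k} \alpha_i \mathbf{Q}_i & \sum_{i=0}^{k} \alpha_i \mathbf{g}_i \\ \left( \sum_{i=0}^{k} \alpha_i \mathbf{g}_i \right)^T & t \end{pmatrix} \succeq 0, \qquad \sum_{i=0}^{k} \alpha_i \mathbf{Q}_i \succeq \mathbf{I}, \qquad \alpha_i \ge 0,\ 0 \le i \le k$$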
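The RCC Solution theorem can be exercised without an SDP solver in one special case. Assume (hypothetically) that $C$ is a single Euclidean ball $\|\mathbf{x}\|^2 \le \eta$, so $\mathbf{Q}_1 = \mathbf{I}$, $\mathbf{g}_1 = \mathbf{0}$, $d_1 = -\eta$, and that $\mathbf{A}$ has full column rank. Our own reasoning, not from the slides: the dual objective is positively homogeneous in $\alpha$ and its optimal value is positive when $Q$ has a non-empty interior, so the constraint $\sum_i \alpha_i \mathbf{Q}_i \succeq \mathbf{I}$ is active at the optimum; here that means $\alpha_0 s_{\min} + \alpha_1 = 1$ with $s_{\min} = \lambda_{\min}(\mathbf{A}^T\mathbf{A})$, leaving a convex one-dimensional problem that ternary search can handle. The function name `rcc_ball` is illustrative.

```python
import numpy as np

def rcc_ball(A, y, rho, eta, iters=200):
    """Sketch of the RCC estimate for Q = {x : ||y - Ax||^2 <= rho, ||x||^2 <= eta}.

    Assumes A has full column rank. Data: Q0 = A^T A, g0 = -A^T y, d0 = ||y||^2 - rho;
    ball: Q1 = I, g1 = 0, d1 = -eta.
    """
    AtA, Aty = A.T @ A, A.T @ y
    s, V = np.linalg.eigh(AtA)               # eigenvalues of A^T A, ascending
    b = V.T @ Aty                             # A^T y expressed in the eigenbasis
    d0 = float(y @ y) - rho
    s_min = s[0]

    def phi(a0):
        # Dual objective g^T Q^{-1} g - sum(alpha_i d_i) on the segment where
        # lambda_min(a0 A^T A + a1 I) = 1, i.e. a1 = 1 - a0 * s_min (assumed active)
        a1 = 1.0 - a0 * s_min
        return a0 ** 2 * np.sum(b ** 2 / (a0 * s + a1)) - a0 * d0 + a1 * eta

    lo, hi = 0.0, 1.0 / s_min                 # keeps a0 >= 0 and a1 >= 0
    for _ in range(iters):                    # ternary search on a convex 1-D function
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if phi(m1) < phi(m2):
            hi = m2
        else:
            lo = m1
    a0 = 0.5 * (lo + hi)
    a1 = 1.0 - a0 * s_min
    # x_RCC = -(a0 Q0 + a1 Q1)^{-1} (a0 g0 + a1 g1) = (a0 A^T A + a1 I)^{-1} a0 A^T y
    x_hat = np.linalg.solve(a0 * AtA + a1 * np.eye(A.shape[1]), a0 * Aty)
    return x_hat, (a0, a1), phi(a0)

# Hypothetical example: two disks of radius 2 centered at (0, 0) and (2, 0)
x_rcc, alphas, value = rcc_ball(np.eye(2), np.array([2.0, 0.0]), rho=4.0, eta=4.0)
```

For this symmetric example the estimate is $(1, 0)$ and the optimal value 3 equals the squared radius of the smallest disk enclosing the lens-shaped intersection, so here the relaxation is tight. The closed form also shows the estimate as a Tikhonov-type solution $(\mathbf{A}^T\mathbf{A} + (\alpha_1/\alpha_0)\mathbf{I})^{-1}\mathbf{A}^T\mathbf{y}$.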

Feasibility of the CC

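Proposition: $\hat{\mathbf{x}}_{\mathrm{CC}}$ is feasible, i.e. $\hat{\mathbf{x}}_{\mathrm{CC}} \in Q$.

Proof:

Let us write the optimization problem as:

$$\min_{\hat{\mathbf{x}}} \left\{ \|\hat{\mathbf{x}}\|^2 + \varphi(\hat{\mathbf{x}}) \right\}$$

with:

$$\varphi(\hat{\mathbf{x}}) = \max_{\mathbf{x} \in Q} \left\{ \|\mathbf{x}\|^2 - 2\hat{\mathbf{x}}^T\mathbf{x} \right\}$$

1. $\varphi$ is convex in $\hat{\mathbf{x}}$ (a pointwise maximum of affine functions of $\hat{\mathbf{x}}$).
2. $\|\hat{\mathbf{x}}\|^2 + \varphi(\hat{\mathbf{x}})$ is strictly convex.
3. Hence the problem has a UNIQUE solution.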

Feasibility of the CC

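Let us assume that $\hat{\mathbf{x}}$ is infeasible, and denote by $\mathbf{y}$ its projection onto $Q$ (here $\mathbf{y}$ is the projection, not the observation vector).

By the projection theorem:

$$(\hat{\mathbf{x}} - \mathbf{y})^T(\mathbf{x} - \mathbf{y}) \le 0 \quad \text{for all } \mathbf{x} \in Q$$

and therefore:

$$\|\hat{\mathbf{x}} - \mathbf{x}\|^2 = \|\hat{\mathbf{x}} - \mathbf{y}\|^2 + \|\mathbf{y} - \mathbf{x}\|^2 + 2(\hat{\mathbf{x}} - \mathbf{y})^T(\mathbf{y} - \mathbf{x}) \ge \|\hat{\mathbf{x}} - \mathbf{y}\|^2 + \|\mathbf{y} - \mathbf{x}\|^2 > \|\mathbf{y} - \mathbf{x}\|^2$$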

Feasibility of the CC

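So:

$$\|\mathbf{y} - \mathbf{x}\|^2 < \|\hat{\mathbf{x}} - \mathbf{x}\|^2 \quad \text{for all } \mathbf{x} \in Q$$

which, using the compactness of $Q$ (both maxima are attained), implies:

$$\max_{\mathbf{x} \in Q} \|\mathbf{y} - \mathbf{x}\|^2 < \max_{\mathbf{x} \in Q} \|\hat{\mathbf{x}} - \mathbf{x}\|^2$$

But this contradicts the optimality of $\hat{\mathbf{x}}$.

Hence: $\hat{\mathbf{x}}_{\mathrm{CC}}$ is unique and feasible.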
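The key step of the proof can be checked numerically. In this sketch $Q$ is (hypothetically) the closed unit disk, $\hat{\mathbf{x}}$ lies outside it, and a deterministic grid of points stands in for $Q$: projecting $\hat{\mathbf{x}}$ onto $Q$ reduces its distance to every point of $Q$, and hence its worst-case error.

```python
import numpy as np

# Hypothetical convex Q: the closed unit disk; x_hat lies outside it
x_hat = np.array([2.0, 1.0])
y_proj = x_hat / np.linalg.norm(x_hat)         # Euclidean projection of x_hat onto the disk

# Deterministic grid of points in Q
g = np.linspace(-1.0, 1.0, 41)
pts = np.array([[a, b] for a in g for b in g if a * a + b * b <= 1.0])

d_hat = np.sum((pts - x_hat) ** 2, axis=1)     # ||x_hat - x||^2
d_proj = np.sum((pts - y_proj) ** 2, axis=1)   # ||y - x||^2
# Projection-theorem quantity (x_hat - y)^T (x - y), which should be <= 0 on Q
inner = (pts - y_proj) @ (x_hat - y_proj)
```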

Feasibility of the RCC

Proposition: $\hat{\mathbf{x}}_{\mathrm{RCC}}$ is feasible.

Proof:

Uniqueness follows from the approach used earlier.

Let us prove feasibility by showing that any solution of the RCC is also feasible for the CC, i.e. lies in $Q$.

Feasibility of the RCC

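Let $(\hat{\mathbf{x}}_{\mathrm{RCC}}, \boldsymbol{\Delta})$ be a solution of the RCC problem. Then $(\hat{\mathbf{x}}_{\mathrm{RCC}}, \boldsymbol{\Delta}) \in T$:

$$\mathrm{Tr}(\mathbf{Q}_i\boldsymbol{\Delta}) + 2\mathbf{g}_i^T\hat{\mathbf{x}}_{\mathrm{RCC}} + d_i \le 0, \quad 0 \le i \le k$$

Since:

$$\boldsymbol{\Delta} \succeq \hat{\mathbf{x}}_{\mathrm{RCC}}\hat{\mathbf{x}}_{\mathrm{RCC}}^T, \qquad \mathbf{Q}_i \succeq 0$$

we get:

$$f_i(\hat{\mathbf{x}}_{\mathrm{RCC}}) = \hat{\mathbf{x}}_{\mathrm{RCC}}^T\mathbf{Q}_i\hat{\mathbf{x}}_{\mathrm{RCC}} + 2\mathbf{g}_i^T\hat{\mathbf{x}}_{\mathrm{RCC}} + d_i \le \mathrm{Tr}(\mathbf{Q}_i\boldsymbol{\Delta}) + 2\mathbf{g}_i^T\hat{\mathbf{x}}_{\mathrm{RCC}} + d_i \le 0$$

so $\hat{\mathbf{x}}_{\mathrm{RCC}} \in Q$.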

CLS as CC relaxation

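We now show that CLS is also a (looser) relaxation of the Chebyshev center.

Reminder:

$$\hat{\mathbf{x}}_{\mathrm{CLS}} = \arg\min_{\mathbf{x} \in C} \|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2$$

$$C = \left\{ \mathbf{x} \in \mathbb{R}^m : f_i(\mathbf{x}) = \mathbf{x}^T\mathbf{Q}_i\mathbf{x} + 2\mathbf{g}_i^T\mathbf{x} + d_i \le 0,\ 1 \le i \le k \right\}, \qquad \mathbf{Q}_i \succeq 0,\ \mathbf{g}_i \in \mathbb{R}^m,\ d_i \in \mathbb{R}$$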

CLS as CC relaxation

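Note that $\mathbf{x} \in Q$ is equivalent to $\mathbf{x} \in C$, $\|\mathbf{y} - \mathbf{A}\mathbf{x}\|^2 \le \rho$.

Define the following CC relaxation, in which the constraint $\mathbf{x} \in C$ is left unharmed and only the data constraint is relaxed:

$$V = \left\{ (\mathbf{x}, \boldsymbol{\Delta}) : \mathbf{x} \in C,\ \mathrm{Tr}(\mathbf{A}^T\mathbf{A}\boldsymbol{\Delta}) - 2\mathbf{y}^T\mathbf{A}\mathbf{x} + \|\mathbf{y}\|^2 \le \rho,\ \boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T \right\}$$

$$\max_{(\mathbf{x}, \boldsymbol{\Delta}) \in V} \left\{ -\|\mathbf{x}\|^2 + \mathrm{Tr}(\boldsymbol{\Delta}) \right\}$$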

CLS as CC relaxation

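Theorem: The CLS estimate is the same as the relaxed CC over $V$ (here CCV).

Proof:

Let us assume $(\mathbf{x}_1, \boldsymbol{\Delta}_1)$ is the CCV solution and $\mathbf{x}_2 = \hat{\mathbf{x}}_{\mathrm{CLS}}$ is the CLS solution, with $\mathbf{x}_1 \ne \mathbf{x}_2$.

The CLS is a strictly convex problem (for $\mathbf{A}$ of full column rank), so its solution is unique; since $\mathbf{x}_1 \in C$:

$$r = \|\mathbf{y} - \mathbf{A}\mathbf{x}_1\|^2 - \|\mathbf{y} - \mathbf{A}\mathbf{x}_2\|^2 > 0$$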

CLS as CC relaxation

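Define:

$$\boldsymbol{\Delta}' = \boldsymbol{\Delta}_1 - \mathbf{x}_1\mathbf{x}_1^T + \mathbf{x}_2\mathbf{x}_2^T + \frac{r}{\mathrm{Tr}(\mathbf{A}^T\mathbf{A})}\mathbf{I}$$

It is easy to show that $(\mathbf{x}_2, \boldsymbol{\Delta}') \in V$ (hence it is a valid solution for the CCV): $\mathbf{x}_2 \in C$, $\boldsymbol{\Delta}' - \mathbf{x}_2\mathbf{x}_2^T = \boldsymbol{\Delta}_1 - \mathbf{x}_1\mathbf{x}_1^T + \frac{r}{\mathrm{Tr}(\mathbf{A}^T\mathbf{A})}\mathbf{I} \succeq 0$, and substituting $\boldsymbol{\Delta}'$ shows that the relaxed data constraint takes the same value at $(\mathbf{x}_2, \boldsymbol{\Delta}')$ as at $(\mathbf{x}_1, \boldsymbol{\Delta}_1)$.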

CLS as CC relaxation

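Denote by $P(\mathbf{x}, \boldsymbol{\Delta}) = -\|\mathbf{x}\|^2 + \mathrm{Tr}(\boldsymbol{\Delta})$ the objective of the CCV.

By definition:

$$P(\mathbf{x}_2, \boldsymbol{\Delta}') = -\|\mathbf{x}_1\|^2 + \mathrm{Tr}(\boldsymbol{\Delta}_1) + \frac{r\,m}{\mathrm{Tr}(\mathbf{A}^T\mathbf{A})} = P(\mathbf{x}_1, \boldsymbol{\Delta}_1) + \frac{r\,m}{\mathrm{Tr}(\mathbf{A}^T\mathbf{A})} > P(\mathbf{x}_1, \boldsymbol{\Delta}_1)$$

contradicting the optimality of $(\mathbf{x}_1, \boldsymbol{\Delta}_1)$. Hence $\mathbf{x}_1 = \mathbf{x}_2$.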
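The construction of $\boldsymbol{\Delta}'$ in this proof can be verified numerically. The sketch takes $C = \mathbb{R}^m$ (an assumption, so that CLS reduces to LS and any $\mathbf{x}_1$ lies in $C$) and random hypothetical data; it checks that the relaxed data constraint is unchanged, that $\boldsymbol{\Delta}' \succeq \mathbf{x}_2\mathbf{x}_2^T$, and that the objective grows by exactly $r\,m/\mathrm{Tr}(\mathbf{A}^T\mathbf{A})$.

```python
import numpy as np

rng = np.random.default_rng(2)
n, m = 6, 3
A = rng.standard_normal((n, m))              # hypothetical model matrix
y = rng.standard_normal(n)

x2 = np.linalg.lstsq(A, y, rcond=None)[0]    # stand-in for x_CLS (C = R^m assumed)
x1 = x2 + rng.standard_normal(m)             # any other candidate point
B = rng.standard_normal((m, m))
Delta1 = np.outer(x1, x1) + B @ B.T          # Delta1 - x1 x1^T is PSD

r = np.sum((y - A @ x1) ** 2) - np.sum((y - A @ x2) ** 2)   # > 0: x2 is the unique minimizer
AtA = A.T @ A
Delta_p = Delta1 - np.outer(x1, x1) + np.outer(x2, x2) + (r / np.trace(AtA)) * np.eye(m)

def relaxed_data(x, D):
    """Relaxed data constraint value Tr(A^T A D) - 2 y^T A x + ||y||^2."""
    return np.trace(AtA @ D) - 2.0 * y @ A @ x + y @ y

def P(x, D):
    """CCV objective -||x||^2 + Tr(D)."""
    return -x @ x + np.trace(D)
```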

CLS vs. RCC

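Now, as in the proof of the feasibility of the RCC, we know that for $(\mathbf{x}, \boldsymbol{\Delta}) \in T$ (using $\mathbf{Q}_i \succeq 0$ and $\boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T$):

$$f_i(\mathbf{x}) \le f_i(\mathbf{x}, \boldsymbol{\Delta}) \le 0, \quad 1 \le i \le k$$

so $\mathbf{x} \in C$, and the remaining constraints of $T$ are exactly those of $V$. And so:

$$T \subseteq V$$

This means that the CLS estimate is the solution of a looser relaxation than that of the RCC.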

Modeling Constraints

- The RCC optimization method is based upon a relaxation of the set $Q$.
- Different characterizations of $Q$ may lead to different relaxed sets.
- Indeed, the RCC depends on the specific chosen form of $Q$.

Linear Box Constraints

Suppose we want to append box constraints upon $\mathbf{x}$:

$$l \le \mathbf{a}^T\mathbf{x} \le u$$

These can also be written as:

$$(\mathbf{a}^T\mathbf{x} - l)(\mathbf{a}^T\mathbf{x} - u) \le 0$$

Which of the two is preferable?

Linear Box Constraints

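Define:

$$T_1 = \left\{ (\mathbf{x}, \boldsymbol{\Delta}) : \mathrm{Tr}(\mathbf{Q}_i\boldsymbol{\Delta}) + 2\mathbf{g}_i^T\mathbf{x} + d_i \le 0,\ l \le \mathbf{a}^T\mathbf{x} \le u,\ \boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T \right\}$$

$$T_2 = \left\{ (\mathbf{x}, \boldsymbol{\Delta}) : \mathrm{Tr}(\mathbf{Q}_i\boldsymbol{\Delta}) + 2\mathbf{g}_i^T\mathbf{x} + d_i \le 0,\ \mathrm{Tr}(\mathbf{a}\mathbf{a}^T\boldsymbol{\Delta}) - (u + l)\mathbf{a}^T\mathbf{x} + ul \le 0,\ \boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T \right\}$$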

Linear Box Constraints

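Suppose $(\mathbf{x}, \boldsymbol{\Delta}) \in T_2$, then:

$$\mathrm{Tr}(\mathbf{a}\mathbf{a}^T\boldsymbol{\Delta}) - (u + l)\mathbf{a}^T\mathbf{x} + ul \le 0$$

Since $\boldsymbol{\Delta} \succeq \mathbf{x}\mathbf{x}^T$, it follows that:

$$\mathbf{x}^T\mathbf{a}\mathbf{a}^T\mathbf{x} - (u + l)\mathbf{a}^T\mathbf{x} + ul \le 0$$

which can be written as:

$$(\mathbf{a}^T\mathbf{x} - l)(\mathbf{a}^T\mathbf{x} - u) \le 0$$

so $l \le \mathbf{a}^T\mathbf{x} \le u$ and $(\mathbf{x}, \boldsymbol{\Delta}) \in T_1$.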

Linear Box Constraints

Hence:

$$T_2 \subseteq T_1$$

$T_1$ is a looser relaxation, so $T_2$ is preferable.
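The inclusion is strict in general. A one-dimensional sketch (hypothetical values, and ignoring the ellipsoidal constraints, i.e. assuming there are none): with $a = 1$, $l = -1$, $u = 1$, $x = 0$ and $\Delta = 2$, the pair satisfies $\Delta \succeq xx^T$ and the linear form $l \le a^Tx \le u$, so it lies in $T_1$, but it violates the lifted quadratic form and is therefore excluded from $T_2$.

```python
import numpy as np

def box_linear_ok(x, a, l, u):
    """T1-style constraint: l <= a^T x <= u (does not involve Delta)."""
    return l <= a @ x <= u

def box_lifted_ok(x, Delta, a, l, u, tol=1e-12):
    """T2-style constraint: Tr(a a^T Delta) - (u + l) a^T x + u l <= 0."""
    return np.trace(np.outer(a, a) @ Delta) - (u + l) * (a @ x) + u * l <= tol

def psd_lift_ok(x, Delta, tol=1e-12):
    """Common constraint Delta >= x x^T."""
    return np.linalg.eigvalsh(Delta - np.outer(x, x)).min() >= -tol

# Hypothetical 1-D case: a = 1, l = -1, u = 1, x = 0, Delta = 2
a, l, u = np.array([1.0]), -1.0, 1.0
x, Delta = np.array([0.0]), np.array([[2.0]])
```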

Linear Box Constraints

An example in $\mathbb{R}^2$:

The constraints have been chosen as the intersection of:

- A randomly generated ellipsoid
- $[-1, 1] \times [-1, 1]$

[Figures: Linear Box Constraints, example in $\mathbb{R}^2$]

An example

Image Deblurring

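- $\mathbf{x}$ is a 16 × 16 image stacked into a vector of length 256.
- $\mathbf{A}$ is a 256 × 256 matrix representing atmospheric turbulence blur (half bandwidth (HBW) 4, standard deviation 0.8).
- $\mathbf{w}$ is a white Gaussian noise (WGN) vector with standard deviation 0.05.
- The observations are $\mathbf{A}\mathbf{x} + \mathbf{w}$.
- We want $\mathbf{x}$ back.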
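A hypothetical way to set up data of this shape: the blur matrix below is loosely modeled on the Gaussian point-spread-function construction used in P. C. Hansen's Regularization Tools `blur` test problem (a truncated Gaussian Toeplitz factor applied separably via a Kronecker product); the exact construction and scaling used in the slides are not stated, so treat them as assumptions. The random test image is also illustrative.

```python
import numpy as np

def turbulence_blur_matrix(N=16, band=4, sigma=0.8):
    """Hypothetical N^2 x N^2 Gaussian blur matrix with half bandwidth `band`."""
    i = np.arange(N)
    z = np.where(i < band, np.exp(-(i ** 2) / (2.0 * sigma ** 2)), 0.0)  # truncated 1-D PSF
    T = z[np.abs(i[:, None] - i[None, :])]                               # symmetric Toeplitz factor
    return np.kron(T, T) / (2.0 * np.pi * sigma ** 2)                   # separable 2-D blur

rng = np.random.default_rng(3)
A = turbulence_blur_matrix()
x = rng.uniform(0.0, 1.0, 256)                 # hypothetical 16 x 16 image as a vector
y = A @ x + 0.05 * rng.standard_normal(256)    # observations Ax + w, WGN with std 0.05
```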

An example

Image Deblurring

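- LS: $\hat{\mathbf{x}}_{\mathrm{LS}} = (\mathbf{A}^T\mathbf{A})^{-1}\mathbf{A}^T\mathbf{y}$
- RLS: with norm bound $1.1\|\mathbf{x}\|^2$
- CLS: $\min_{\mathbf{x}} \left\{ \|\mathbf{A}\mathbf{x} - \mathbf{y}\|^2 : 0 \le x_i \le 1,\ 1 \le i \le 256 \right\}$
- RCC: over $Q = \left\{ \mathbf{x} : \|\mathbf{A}\mathbf{x} - \mathbf{y}\|^2 \le \rho,\ 0 \le x_i \le 1,\ 1 \le i \le 256 \right\}$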


Questions?

Now is the time.
