RAC Operational Best Practices


RAC & ASM Best Practices

You Probably Need More than just RAC

Kirk McGowan

Technical Director RAC Pack

Oracle Server Technologies

Cluster and Parallel Storage Development

Agenda

Operational Best Practices (IT MGMT 101)

Background

Requirements

Why RAC Implementations Fail

Case Study

Criticality of IT Service Management (ITIL) process

Best Practices

People, Process, AND Technology

Why do people buy RAC?

Low cost scalability

Cost reduction, consolidation, infrastructure that can grow with the business

High Availability

Growing expectations for uninterrupted service.

Why do RAC Implementations fail?

RAC, scale-out clustering is new technology

Insufficient budget and effort are put towards filling the knowledge gap

HA is difficult to do, and cannot be done with technology alone

Operational processes and discipline are critical success factors, but are not addressed sufficiently

Case Study

Based on true stories. Any resemblance, in full or in part, to your own experiences is intentional and expected.

Names have been changed to protect the innocent.

Case Study

Background

8-12 months spent implementing 2 systems: somewhat different architectures, very different workloads, identical tech stacks

Oracle expertise (Development) engaged to help flatten the tech learning curve

Non-mission-critical systems, but important elements of a larger enterprise re-architecture effort

Many technology issues encountered across the stack, and resolved over the 8-12 month implementation

Hardware, OS, storage, network, RDBMS, cluster, and application

Case Study

Situation

New mission-critical deployment using the same technology stack

Distinct architecture, application development teams, and operations teams

Large staff turnover

Major escalation, post production

CIO: Oracle products do not meet our business requirements

RAC is unstable

DG (Data Guard) doesn't handle the workload

JDBC connections don't fail over

Case Study

Operational Issues

Requirements, aka SLOs, were not defined

e.g. claim of 20s failover time; application logic included 80s failover time; cluster failure detection time alone set to 120s

Inadequate test environments

Problems encountered first in production, including the fact that SLOs could not be met

Inadequate change control

Lessons learned in previous deployments were not applied to new deployment: rediscovery of same problems

Some changes implemented in test, but never rolled into production: recurring problems (outages) in production

No process for confirming a change actually fixes the problem prior to implementing in production

Case Study

More Operational Issues

Poor knowledge transfer between internal teams

Configuration recommendations, patches, fixes identified in previous deployments were not communicated.

Evictions are a symptom, not the problem.

Inadequate system monitoring

OS level statistics (CPU, IO, memory) were not being captured.

Impossible to perform RCA on many problems without the ability to correlate cluster / database symptoms with system-level activity.

Inadequate Support procedures

Inconsistent data capture

No on-site vendor support consistent with criticality of system

No operations manual

- Managing and responding to outages

- Responding and restoring service after outages

Overview of Operational Process Requirements

What are ITIL Guidelines?

ITIL (the IT Infrastructure Library) is the most widely accepted approach to IT service management in the world. ITIL provides a comprehensive and consistent set of best practices for IT service management, promoting a quality approach to achieving business effectiveness and efficiency in the use of information systems.

IT Service Management

IT Service Management = Service Delivery + Service Support

Service Delivery: partially concerned with setting up agreements and monitoring the targets within these agreements.

Service Support: processes can be viewed as delivering services as laid down in these agreements.

Provisioning of IT Service Mgmt

In all organizations, the provisioning of IT service management must be matched to current and rapidly changing business demands. The objective is to continually improve the quality of service, aligned to the business requirements, cost-effectively. To meet this objective, three areas need to be considered:

People with the right skills, appropriate training and the right service culture

Effective and efficient Service Management processes

Good IT Infrastructure in terms of tools and technology.

Unless People, Processes and Technology are considered and implemented appropriately within a steering framework, the objectives of Service Management will not be realized.

Service Delivery

Financial Management

Service Level Management

Severity/priority definitions

e.g. Sev1, Sev2, Sev3, Sev4

Response time guidelines

SLAs

Capacity Management

IT Service Continuity Management

Availability Management

Service Support

Incident Management

Incident documentation & reporting, incident handling, escalation procedures

Problem Management

RCAs, QA & Process improvement

Configuration Management

Standard configs, gold images, CEMLIs

Change Management

Risk assessment, backout, sw maintenance, decommission

Release Management

New deployments, upgrades, emergency release, component release

BP: Set & Manage Expectations

Why is this important?

Expectations with RAC are different at the outset

HA is as much (if not more so) about the processes and procedures as it is about the technology

No matter what technology stack you implement, on its own it is incapable of meeting stringent SLAs

Must communicate what the technology can AND can't do

Must be clear on what else needs to be in place to supplement the technology if HA business requirements are going to be met.

HA isn't cheap!

BP: Clearly define SLOs

Sufficiently granular

Cannot architect, design, OR manage a system without clearly understanding the SLOs

24x7 is NOT an SLO

Define HA/recovery time objectives, throughput, response time, data loss, etc.

Need to be established with an understanding of the cost of downtime for the system.

RTO and RPO are key availability metrics

Response time and throughput are key performance metrics

Must address different failure conditions

Planned vs unplanned

Localized vs site-wide

Must be linked to the business requirements

Response time and resolution time

Must be realistic
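The case study's mismatch (a claimed 20s failover against an 80s application failover setting and a 120s cluster failure detection setting) is the kind of gap a simple budget check can expose before production. The following is a minimal sketch, not a definitive method: the `TimeoutComponent` type and the `failover_budget_report` function are hypothetical names, and it assumes, as a worst case, that the component timeouts add up sequentially.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeoutComponent:
    """One configured timeout that contributes to end-to-end failover time."""
    name: str
    seconds: float


def failover_budget_report(slo_seconds, components):
    """Compare configured timeouts with a failover-time SLO.

    Assumption: worst-case failover time is the sum of all component
    timeouts (they are treated as sequential, not overlapping).
    """
    worst_case = sum(c.seconds for c in components)
    # Components that cannot meet the SLO even on their own.
    offenders = [c.name for c in components if c.seconds > slo_seconds]
    return {
        "slo_seconds": slo_seconds,
        "worst_case_seconds": worst_case,
        "meets_slo": worst_case <= slo_seconds,
        "offenders": offenders,
    }


# Figures taken from the case study slide; component names are illustrative.
components = [
    TimeoutComponent("cluster failure detection", 120),
    TimeoutComponent("application failover logic", 80),
]
report = failover_budget_report(20, components)
print(report)
```

Running a check like this whenever a timeout or an SLO changes makes an unrealistic objective visible at design time rather than during an escalation.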

Manage to the SLOs

Definitions of problem severity levels

Documented targets for both incident response time and resolution time, based on severity

Classification of applications w.r.t. business criticality

Establish SLA with business

Negotiated response and resolution times

Definition of metrics

E.g. Application Availability shall be measured using the following formula: (Total Minutes In A Calendar Month minus Unscheduled Outage Minutes minus Scheduled Outage Minutes in such month) divided by Total Minutes In A Calendar Month

Negotiated SLOs

Effectively documents expectations between IT and business

Incident log: date, time, description, duration, resolution
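As a rough illustration of how the availability formula and the incident log fit together, the sketch below computes monthly availability directly from logged incidents. The `Incident` fields mirror the log fields listed above; the `scheduled` flag, the function name, and the sample entries are assumptions made for the example. It also simplifies by counting an outage entirely in the month in which it started.

```python
import calendar
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Incident:
    """One incident log entry: date/time, description, duration, resolution."""
    start: datetime
    description: str
    duration_minutes: int
    resolution: str
    scheduled: bool = False  # assumption: the log marks planned outages


def monthly_availability(year: int, month: int, incidents: list[Incident]) -> float:
    """(total - unscheduled - scheduled outage minutes) / total minutes."""
    total_minutes = calendar.monthrange(year, month)[1] * 24 * 60
    in_month = [i for i in incidents
                if (i.start.year, i.start.month) == (year, month)]
    unscheduled = sum(i.duration_minutes for i in in_month if not i.scheduled)
    scheduled = sum(i.duration_minutes for i in in_month if i.scheduled)
    return (total_minutes - unscheduled - scheduled) / total_minutes


# Hypothetical log entries for illustration only.
log = [
    Incident(datetime(2024, 4, 3, 2, 15), "node eviction", 30,
             "restarted instance"),
    Incident(datetime(2024, 4, 20, 22, 0), "patch window", 186,
             "patch applied", scheduled=True),
]
availability = monthly_availability(2024, 4, log)
print(f"{availability:.3%}")
```

Keeping scheduled and unscheduled minutes as separate sums mirrors the formula's wording and makes it easy to report each figure to the business on its own.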

Example Resolution Time Matrix

| SR class | Resolution time |
|---|---|
| Severity 1, Priority 1 and 2 SRs | < 1 hour |
| Severity 1, Priority 3 SRs | < 13 hours |
| Severity 2, Priority 1 SRs | < 14 hours |
| Severity 2 SRs | < 132 hours |
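Managing to targets like these is easier when they are encoded once and checked mechanically. The sketch below is one possible encoding of the example resolution times above; the `(severity, priority)` keying, the fallback to a severity-wide target, and the function names are assumptions for illustration, not part of the original matrix.

```python
from datetime import timedelta

# Targets copied from the example matrix; a priority of None means
# "any priority not listed separately" for that severity.
RESOLUTION_TARGETS = {
    ("Sev1", "P1"): timedelta(hours=1),
    ("Sev1", "P2"): timedelta(hours=1),
    ("Sev1", "P3"): timedelta(hours=13),
    ("Sev2", "P1"): timedelta(hours=14),
    ("Sev2", None): timedelta(hours=132),
}


def resolution_target(severity, priority=None):
    """Look up the target, falling back to the severity-wide entry if any."""
    exact = RESOLUTION_TARGETS.get((severity, priority))
    if exact is not None:
        return exact
    return RESOLUTION_TARGETS.get((severity, None))


def is_breached(severity, priority, elapsed):
    """True once elapsed time reaches the target (targets are 'less than')."""
    target = resolution_target(severity, priority)
    if target is None:
        raise KeyError(f"no resolution target defined for {severity}/{priority}")
    return elapsed >= target


print(is_breached("Sev1", "P2", timedelta(minutes=59)))
print(is_breached("Sev2", "P3", timedelta(hours=133)))
```

Raising an error for an undefined combination, rather than silently passing, forces the gap in the matrix to be negotiated explicitly.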

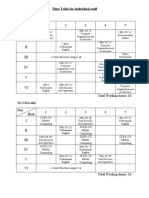

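Example Response Time Matrix

| Status | Sev1/P1 | Sev1/P2 | Sev2/P1 | Sev2 | Sev3/Sev4 |
|---|---|---|---|---|---|
| New, XFR | 15 | 30 | 15 | 30 | 60 |
| ASG | 15 | 60 | 15 | 30 | 60 |
| IRR, 2CB | 15 | 30 | 15 | 60 | 120 |
| RVW, 1CB | 15 | 60 | 15 | 60 | 120 |
| PCR, RDV | 60 | N/A | 60 | 120 | 3 hrs |
| WIP | 60 | 60 | 60 | 18 hrs | 4 days |
| INT | 60 | 2 | 60 | 120 min | 3 hrs |
| LMS, CUS | 4 | 4 | 4 | 2 days | 4 days |
| DEV | 4 | 4 | 4 | 3 days | 10 days |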

BP: TEST, TEST, TEST

Testing is a shared responsibility

Functional, destructive, and stress testing

Test environments must be representative of production

Both in terms of configuration, and capacity

Separate from Production

Building a test harness to mimic production workload is a necessary, but non-trivial effort

Ideally, problems would never be encountered first in production

If they are, the first question should be: Why didn't we catch the problem in test?

Exceeding some threshold

Unique timing or race condition

What can we do so we catch this type of problem in the future?

Build a test case that can be reused as part of pre-production testing.

BP: Define, document, and adhere to Change Control Processes

This amounts to self-discipline

Applies to all changes at all levels of the tech stack

Hardware changes, configuration changes, patches and patchsets, upgrades, and even significant changes in workload.

If no changes are introduced, system will reach a steady state, and function forever.

A well designed system will be able to tolerate some fluctuations, and faults.

A well managed system will meet service levels

If a problem (that was fixed) is encountered again elsewhere, it is a change management process problem, not a technology problem. I.e. rediscovery should not happen.

Ensure fixes are applied across all nodes in a cluster, and all environments to which the fix applies.
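One way to catch a fix that reached test but not production, or one node but not its peers, is to compare each node's installed fixes against the list of fixes that should be everywhere. This is a sketch under stated assumptions: the node names and fix identifiers are made up, and the inventory is a plain dictionary that would in practice be populated from whatever patch inventory tooling the site uses.

```python
def missing_fixes(required_fixes, installed_by_node):
    """Map each node to the sorted list of required fixes it lacks.

    Nodes that have every required fix are omitted from the result.
    """
    required = set(required_fixes)
    gaps = {}
    for node, installed in installed_by_node.items():
        missing = required - set(installed)
        if missing:
            gaps[node] = sorted(missing)
    return gaps


# Hypothetical inventory covering both production and test nodes.
inventory = {
    "prod-node1": {"FIX-A", "FIX-B"},
    "prod-node2": {"FIX-A"},
    "test-node1": {"FIX-A", "FIX-B"},
}
gaps = missing_fixes({"FIX-A", "FIX-B"}, inventory)
print(gaps)  # {'prod-node2': ['FIX-B']}
```

An empty result is the condition to require before a change is closed; a non-empty one points at exactly the nodes where a known problem can be rediscovered.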

BP: Plan for, and execute Knowledge Transfer

New technology has a learning curve.

10g, RAC, and ASM cross traditional job boundaries, so knowledge transfer must be executed across all affected groups

Architecture, development, and operations

Network admin, sysadmin, storage admin, DBA

Learn how to identify and diagnose problems

e.g. evictions are not a problem, they are a symptom

Learn how to use the various tools and interpret output

Hanganalyze, system state dumps, truss, etc.

Understand behaviour: distinguish between cause and symptom

Needs to occur pre-production

Operational Readiness

BP: Monitor your system

Define key metrics and monitor them actively

Establish a (performance) baseline

Learn how to use Oracle-provided tools

RDA (+ RACDDT)

AWR/ADDM

Active Session History

OSWatcher

Coordinate monitoring and collection of OS-level stats as well as db-level stats

Problems observed at one layer are often just symptoms of problems that exist at a different layer

Don't jump to conclusions
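Correlating a cluster or database symptom with system-level activity comes down to lining up timestamps across layers. The sketch below shows the idea with hypothetical data: given periodic OS samples and the time of an event such as a node eviction, it pulls the samples from the minutes leading up to the event. The sample format, field names, and five-minute window are assumptions, not the output format of any particular tool.

```python
from datetime import datetime, timedelta


def os_context(samples, event_time, window=timedelta(minutes=5)):
    """Return the OS samples taken in the window leading up to event_time."""
    start = event_time - window
    return [s for s in samples if start <= s["ts"] <= event_time]


# Hypothetical OS-level samples (e.g. collected every two minutes).
samples = [
    {"ts": datetime(2024, 4, 3, 10, 0), "cpu_pct": 35.0, "free_mem_mb": 4096},
    {"ts": datetime(2024, 4, 3, 10, 2), "cpu_pct": 60.0, "free_mem_mb": 1024},
    {"ts": datetime(2024, 4, 3, 10, 4), "cpu_pct": 97.0, "free_mem_mb": 128},
    {"ts": datetime(2024, 4, 3, 10, 6), "cpu_pct": 20.0, "free_mem_mb": 3900},
]

# Hypothetical time at which the cluster layer reported an eviction.
eviction_time = datetime(2024, 4, 3, 10, 5)

context = os_context(samples, eviction_time)
peak_cpu = max(s["cpu_pct"] for s in context)
min_free_mem = min(s["free_mem_mb"] for s in context)
print(len(context), peak_cpu, min_free_mem)
```

In this made-up data the eviction is preceded by CPU saturation and memory exhaustion, which would point the investigation at the OS layer rather than at the cluster software. Without the OS samples, that correlation cannot be made at all.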

BP: Define, Document, and communicate Support procedures

Define corrective procedures for outages

Routinely test corrective procedures

HA process:

Prevent -> Detect -> Capture -> Resume -> Analyze -> Fix

Classify high priority systems, and the steps that need to be taken in each phase

Keep an active log of every outage

If we don't provide sufficient tools to get to root cause, then shame on us.

If you don't implement the diagnostic capabilities that are provided to help get to root cause, then shame on you.

Serious outages should never happen more than once.

Summary

Deficiencies in operational processes and procedures are the root cause of the vast majority of escalations

Address these, and you dramatically increase your chances of a successful RAC deployment, and will save yourself a lot of future pain

Additional areas of challenge

Configuration Management: initial install and config, standardized gold image deployment

Incident Management: diagnosing cluster-related problems
