1 - Basics

Uploaded by Naanu Sharma

SOFTWARE TESTING

● Software: Software is any set of machine-readable instructions (most often in the form of a computer program) that directs a computer's processor to perform specific operations.

● Testing: Testing is the process of evaluating a system or its component(s) to determine whether it satisfies the specified requirements.

SOFTWARE TESTING

● Process of evaluating the attributes and capabilities

of a program and determining that it meets user

requirements.

● Done to find defects

● To determine whether the software works as required (Validation & Verification)

● Reliability & Usability

● To measure performance (Non-functional Attributes)

SOFTWARE TESTING

● Software testing is a process used to assess the correctness, completeness, and quality of developed computer software. It includes a set of activities conducted with the intent of finding errors in software so that they can be corrected before the product is released to the end users.

● In simple words, software testing is an activity to check whether the actual results match the expected results and to help ensure that the software system is as free of defects as possible.

● Testing the completeness and correctness of software.

Types of testing:

● Static & Dynamic

● Manual & Automation

● Functional & Non-Functional

OBJECTIVES OF SOFTWARE TESTING

● To ensure that the solution meets the business and user requirements

● To catch errors, bugs, and defects

● To determine user acceptability

● To ensure that a system is ready for use

● To gain confidence that it works

● To improve the quality of software

● To measure performance

● To evaluate the capabilities of a system and show that it performs as intended

● To verify that the software conforms to standards

● Validation & Verification

● Usability & Reliability

IMPORTANCE OF SOFTWARE TESTING

● To improve the quality, reliability & performance of software.

● Software bugs can cause monetary and human loss. History is full of such examples:

● A China Airlines Airbus A300 crashed on April 26, 1994, killing 264 people; the crash has been attributed to a software bug.

● In 1985, Canada's Therac-25 radiation therapy machine malfunctioned due to a software bug and delivered lethal radiation doses to patients, leaving 3 people dead and critically injuring 3 others.

● In April 1999, a software bug caused the failure of a $1.2 billion military satellite launch, often cited as one of the costliest launch accidents in history.

● In May 1996, a software bug caused the bank accounts of 823 customers of a major U.S. bank to be credited with 920 million US dollars.

CHARACTERISTICS OF SOFTWARE TESTERS

● Be skeptical and question everything

● Don’t compromise on quality

● Think from user perspective

● Prioritize tests

● Start testing early

● Listen to all suggestions

● Identify and manage risks

● Develop analytical skills

● Practice negative testing

● Stop blaming others

● Be cool when bugs are rejected

● Clearly explain bugs

● Be creative: act as a troubleshooter and explorer

RESPONSIBILITIES OF SOFTWARE TESTERS

● Analyze requirements

● Prepare test plans

● Create test cases/test data

● Analyze test cases of other team members

● Execution of test cases

● Defect logging and tracking

● Providing complete information while logging bugs

● Summary reports

● Use cases creation

● Suggestions to improve the quality

● Communication with test lead/managers, clients,

business teams etc.

TESTING TERMINOLOGY

● Error/Mistake

● Bug/Defect/Fault

● Failure

● Black Box Testing

● White Box Testing

● Grey Box Testing

● Functional Testing

● Non Functional Testing

● Manual Testing

● Automation Testing

● Error/Mistake: A human action that produces an incorrect result.

● Bug/Defect/Fault: A flaw in a component or system that can cause it to fail to perform its required function.

● Failure: Deviation of a component or system from its expected delivery, service, or result.
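
The three terms above can be told apart in a small sketch. The `average` and `run` functions below are hypothetical, not from the slides: the programmer's error was forgetting the empty-list case, the defect is the unguarded division, and the failure only appears when the defective code runs with an empty list.

```python
def average(values):
    # Defect: there is no guard for an empty list. The underlying
    # error/mistake was the programmer forgetting that case.
    return sum(values) / len(values)


def run(values):
    """Call average() and report whether a failure was observed."""
    try:
        return ("ok", average(values))
    except ZeroDivisionError:
        # Failure: the defect was executed and the behaviour deviated
        # from what the caller expected.
        return ("failure", None)


print(run([2, 4, 6]))  # the defect is present, but no failure for this input
print(run([]))         # the same defect now causes a failure
```

A defect can therefore sit in the code unnoticed until some input or condition triggers it.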

When can defects arise?

● Requirement phase

● Design Phase

● Build/Implementation phase

● Testing Phase

● After Release/maintenance phase

BLACK BOX TESTING

● Black box testing is the technique of testing without any knowledge of the interior workings of the application. The tester is unaware of the system architecture and does not have access to the source code. Typically, when performing a black box test, a tester interacts with the system's user interface by providing inputs and examining outputs, without knowing how and where the inputs are processed.
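
A minimal sketch of the idea, assuming a hypothetical `shipping_fee` function and an invented specification ("orders of 50 or more ship free, otherwise the fee is 4.99, negative totals are rejected"). The test cases are derived from that specification alone; the tester never reads the function body.

```python
def shipping_fee(order_total):
    """System under test. A black box tester would not see this body."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    return 0.0 if order_total >= 50 else 4.99


# Each case is (input, expected output), taken from the specification only.
cases = [(0, 4.99), (49.99, 4.99), (50, 0.0), (120, 0.0)]
results = [(total, shipping_fee(total) == expected) for total, expected in cases]


def rejects_negative_total():
    """Check the 'negative totals are rejected' rule from the outside."""
    try:
        shipping_fee(-1)
    except ValueError:
        return True
    return False


print(results)
print(rejects_negative_total())
```

Only inputs and observed outputs are compared, so the same cases would still apply if the implementation were rewritten.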

WHITE BOX TESTING

● White box testing is the detailed investigation of the internal logic and structure of the code. White box testing is also called glass box testing or open box testing. To perform white box testing on an application, the tester needs to know the internal workings of the code.

● The tester needs to have a look inside the source code and

find out which unit/chunk of the code is behaving

inappropriately.
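
As a rough sketch of white box test design, the inputs below are picked by reading the code so that every branch runs at least once. The `classify` function and the branch labels are assumptions made for illustration.

```python
def classify(age):
    """Hypothetical unit under test with three branches."""
    if age < 0:
        raise ValueError("age cannot be negative")  # branch A
    if age < 18:
        return "minor"                              # branch B
    return "adult"                                  # branch C


def exercise_all_branches():
    """Run one input per branch, including the boundary value 18."""
    outcomes = {}
    try:
        classify(-1)
    except ValueError:
        outcomes["A"] = "ValueError"
    outcomes["B"] = classify(17)
    outcomes["C"] = classify(18)  # 18 is where `age < 18` turns False
    return outcomes


print(exercise_all_branches())
```

Unlike the black box approach, the choice of -1, 17, and 18 depends on knowing where the conditions sit in the source.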

GREY BOX TESTING

● Grey box testing is a technique that combines black box and white box testing: the tester works with partial knowledge of the system's internals.

FUNCTIONAL TESTING

● Functional Testing: Testing based on an

analysis of the specification of the

functionality of a component or system

● The process of testing to determine the

functionality of a software product.

NON FUNCTIONAL TESTING

● Testing the attributes of a component or

system that do not relate to functionality, e.g.

reliability, efficiency, usability,

maintainability and portability.
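
One non-functional attribute, efficiency, can be checked with a simple timing measurement. This is only a sketch: `build_report`, `measure_seconds`, and the 2-second budget are assumptions, not values from the slides.

```python
import time


def build_report(n):
    """Hypothetical operation whose speed we care about."""
    return sorted(range(n, 0, -1))


def measure_seconds(func, *args):
    """Return (result, elapsed wall-clock seconds) for a single call."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


BUDGET_SECONDS = 2.0  # assumed performance budget
report, elapsed = measure_seconds(build_report, 100_000)
print(f"took {elapsed:.4f}s, within budget: {elapsed < BUDGET_SECONDS}")
```

The check says nothing about whether the report is correct; it only measures how the operation behaves, which is what separates it from a functional test.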

MANUAL TESTING

● Manual Testing: Testing the software by hand, that is, executing the test cases manually without using any automation tool.

AUTOMATION TESTING

● Automation Testing: Testing the software with an automation tool, that is, executing the test cases using automation tools.
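
A small sketch of automated execution, using Python's standard `unittest` module as the automation tool. The `add` function and the `AddTests` cases are hypothetical; the point is that the runner executes every case and reports the outcome without manual steps.

```python
import io
import unittest


def add(a, b):
    """Hypothetical function under test."""
    return a + b


class AddTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)


def run_suite():
    """Load and execute all AddTests cases, returning the result object."""
    suite = unittest.TestLoader().loadTestsFromTestCase(AddTests)
    # The report is written to an in-memory stream to keep the output short.
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite)


result = run_suite()
print(result.testsRun, result.wasSuccessful())
```

The same suite can be rerun unchanged after every code change, which is the main practical difference from manual execution.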

PRINCIPLES OF SOFTWARE TESTING

● Testing shows presence of defects

● Exhaustive testing is impossible

● Early testing

● Defect clustering

● Pesticide paradox

● Testing is context dependent

● Absence-of-errors fallacy

TESTING SHOWS PRESENCE OF DEFECTS

● Testing can show that defects are present, but cannot prove that there are no defects. Even after testing the application or product thoroughly, we cannot say that the product is 100% defect free.

EXHAUSTIVE TESTING IS IMPOSSIBLE

● Testing everything, including all combinations of inputs and preconditions, is not possible. So, instead of exhaustive testing, we can use risks and priorities to focus testing efforts. For example, if one screen of an application has 15 input fields, each with 5 possible values, then testing all the valid combinations would need 30 517 578 125 (5^15) tests.
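
The figure in this example can be checked with a few lines of arithmetic. The execution rate of 1,000 tests per second is an assumption added here only to give a sense of scale.

```python
fields = 15
values_per_field = 5

# Every field can independently take any of its values,
# so the number of combinations is 5 multiplied by itself 15 times.
combinations = values_per_field ** fields
print(combinations)  # 30517578125

# Assumed rate, not from the slides: 1,000 automated tests per second.
tests_per_second = 1000
days = combinations / tests_per_second / 86_400  # 86,400 seconds per day
print(round(days))  # 353
```

Even at that assumed rate, a single screen would take roughly a year to cover exhaustively, which is why risk and priority drive the selection of tests.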

DEFECT CLUSTERING

● A small number of modules contains most of

the defects discovered during pre-release

testing or shows the most operational failures.

PESTICIDE PARADOX

● If the same kinds of tests are repeated again and again, eventually the same set of test cases will no longer find any new bugs. To overcome this “Pesticide Paradox”, review the test cases regularly and write new and different tests that exercise different parts of the software or system to potentially find more defects.

TESTING IS CONTEXT DEPENDENT

● Testing is context dependent. Different kinds of software are tested differently. For example, safety-critical software is tested differently from an e-commerce site.

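ABSENCE-OF-ERRORS FALLACY

● Finding and fixing defects does not help if the system built is unusable or does not meet the users' needs. Conversely, a system that still has some defects can be highly usable.

● Example: Windows and Linux/Unix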

EARLY TESTING

● In the software development life cycle testing

activities should start as early as possible and

should be focused on defined objectives

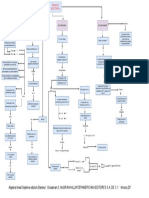

COST OF FIXING DEFECTS

RELATION BETWEEN TESTING AND QUALITY

● In general: Quality is how well things work and how closely they match the requirements.

● In terms of IT: Deliverables that work without errors after installation.

● Testing improves quality.

QUALITY is directly proportional to Testing.
