Solid State Storage Deep Dive

Uploaded by Jeyakumar Narasingam

And How It Affects SQL Server

• NAND Flash Structure

• MLC and SLC Compared

• NAND Flash Read Properties

• NAND Flash Write Properties

• Wear-Leveling

• Garbage Collection

• Write Amplification

• TRIM

• Error Detection and Correction

• Reliability

• Form Factor

• Performance Characteristics

• Determining What’s Right for You

• Not All SSDs Are Created Equal

• Two Main Flavors: NAND and NOR

• NOR

– Operates like RAM.

– NOR is parallel at the cell level.

– NOR reads slightly faster than NAND.

– Can execute code directly from NOR without copying it to RAM.

• NAND

– NAND operates like a block device, a.k.a. a hard disk.

– NAND is serial at the cell level.

– NAND writes significantly faster than NOR.

– NAND erases much faster than NOR: 4 ms vs. 5 s.

• Serial array of transistors.

– Each transistor holds 1 bit (or more).

• Arrays grouped into pages.

– 4096 bytes in size.

– Contains “spare” area for ECC and other ops.

• Pages grouped into Blocks

– 64 to 128 pages.

– Smallest erasable unit.

• Blocks grouped into chips

– As big as 16 Gigabytes.

• Chips grouped on to devices.

– Usually in a parallel arrangement.

NAND Flash structure: gates, cells, pages, and strings.
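
The hierarchy above implies some simple arithmetic. A minimal sketch using the figures from the slide (4096-byte pages, 64 to 128 pages per block, chips up to 16 GB); the function names are illustrative, and the spare area is ignored.

```python
PAGE_BYTES = 4096  # page size from the slide; spare area is ignored here


def block_bytes(pages_per_block: int) -> int:
    """Size of the smallest erasable unit, in bytes."""
    return PAGE_BYTES * pages_per_block


def blocks_per_chip(chip_bytes: int, pages_per_block: int) -> int:
    """Number of erase blocks on a chip of the given capacity."""
    return chip_bytes // block_bytes(pages_per_block)


for pages in (64, 128):
    print(pages, "pages per block ->", block_bytes(pages) // 1024, "KiB per block")
print(blocks_per_chip(16 * 1024**3, 128), "blocks on a 16 GiB chip")
```

Even with 4 KiB pages, the erase unit is hundreds of KiB, which is why small writes and erases interact so badly later in the deck.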

• MLC (Multi-Level Cell)

– Higher capacity (two bits per cell).

– Low P/E cycle count: roughly 3k to 10k.

– Cheaper per Gigabyte.

– High ECC needs.

• SLC (Single-Level Cell)

– Fast read speed

• 25ns vs. 50ns for MLC

– Fast write speed

• 220ns vs. 900ns for MLC

– High P/E cycle count: roughly 100k to 300k

– Tend to be conservative numbers.

– Minimal ECC requirements

• 1 bit per 512 bytes vs. roughly 12 bits per 512 bytes for MLC.

– Expensive

• Up to 5x the cost of MLC.

It isn’t RAM.

◦ Slower access times.

Roughly 1 ns vs. 50 ns.

No write in place.

It isn’t a hard disk.

◦ Much faster access times.

Nanoseconds vs. Milliseconds

◦ No moving parts.

Program/Erase Cycle

◦ In the erased state, all bits are 1.

◦ Programmed bits are 0.

◦ Programmed a page at a time.

One-pass programming.

◦ Erased a block at a time (128 pages).

Must erase the entire block to program a single page again.

◦ Finite life cycle: roughly 10k cycles for MLC, 100k for SLC.

A block that fails to erase may still be readable.

Data is written in pages and erased in blocks. Blocks are becoming larger as NAND Flash die sizes shrink.
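
The program/erase rules above can be modeled in a few lines. This is a toy sketch, not how any real controller is written: the `Block` class and its method names are hypothetical, and the 128-page block size is the figure from the slide.

```python
PAGES_PER_BLOCK = 128  # figure from the slide
PAGE_BYTES = 4096


class Block:
    """Toy model of one NAND erase block (hypothetical, for illustration)."""

    def __init__(self):
        self.erase_count = 0
        # None means the page is erased, i.e. every bit reads as 1.
        self.pages = [None] * PAGES_PER_BLOCK

    def program(self, page_no: int, data: bytes) -> None:
        """Program one page; allowed only once per erase cycle."""
        if self.pages[page_no] is not None:
            raise RuntimeError("page already programmed; erase the block first")
        if len(data) > PAGE_BYTES:
            raise ValueError("data larger than one page")
        # Unwritten bytes stay in the erased state (0xFF).
        self.pages[page_no] = data.ljust(PAGE_BYTES, b"\xff")

    def read(self, page_no: int) -> bytes:
        data = self.pages[page_no]
        return b"\xff" * PAGE_BYTES if data is None else data

    def erase(self) -> None:
        """Erase the whole block; this is what consumes a P/E cycle."""
        self.pages = [None] * PAGES_PER_BLOCK
        self.erase_count += 1
```

Updating a single page means either erasing all 128 pages or writing the new version somewhere else, which is what drives wear-leveling and garbage collection.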

• Wear-Leveling

– Spreads writes across blocks.

– Ideally, write to every block before erasing any.

– Data grouped into two patterns.

• Static: written once and read many times.

• Dynamic: written often, read infrequently.

– If you only wear-level data in motion, you wear out those pages quickly.

– If you wear-level static data, you incur extra I/O.
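
The trade-off between the two approaches can be sketched as two small decisions. This is a simplified illustration under assumed names (`pick_block_for_write`, `needs_static_move`, and the threshold are all hypothetical); real controllers use vendor-specific policies.

```python
def pick_block_for_write(erase_counts: dict, free_blocks: set) -> int:
    """Dynamic wear-leveling: send the next write to the least-worn free block."""
    return min(free_blocks, key=lambda block: erase_counts[block])


def needs_static_move(erase_counts: dict, threshold: int) -> bool:
    """Static wear-leveling trigger: the wear gap between blocks is too wide.

    When this returns True, a controller would relocate static data off the
    least-worn block so that block can take new writes (extra I/O).
    """
    return max(erase_counts.values()) - min(erase_counts.values()) > threshold


counts = {0: 5, 1: 2, 2: 9}           # block number -> erase count
print(pick_block_for_write(counts, {0, 2}))   # block 1 holds static data, so it is not free
print(needs_static_move(counts, threshold=5))
```

Block 1 has the least wear but never becomes free because its data never changes. That is the case static wear-leveling exists to handle.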

Background Garbage Collection

◦ Defers P/E cycle.

◦ Pages marked as dirty, erased later.

◦ Requires spare area.

◦ Incurs additional I/O.

◦ Can be put under pressure by frequent small writes.

Write Amplification

◦ Ripples in a pond.

◦ Device moves blocks around.

◦ Incoming I/O exceeds the free space the device has.

◦ Every write causes additional writes.

Small writes can be a real problem.

OLTP workloads are a good example.

TRIM can help.

Initial write of 4 pages to a single erasable block.

Four new pages and four replacement pages written. Original pages are now marked invalid.

Garbage collection comes along, moves all valid pages to a new block, and erases the other block.
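
The three steps above can be turned into a write amplification figure. A minimal sketch, assuming the usual definition (pages written to flash divided by pages the host asked to write); the function name is illustrative.

```python
def write_amplification(host_pages: int, gc_pages: int) -> float:
    """Pages physically written to flash per page written by the host."""
    if host_pages <= 0:
        raise ValueError("host_pages must be positive")
    return (host_pages + gc_pages) / host_pages


host_pages = 4 + 4 + 4   # initial 4 pages, then 4 new and 4 replacement pages
gc_pages = 8             # garbage collection copies the 8 still-valid pages
print(round(write_amplification(host_pages, gc_pages), 2))  # prints 1.67
```

The host wrote 12 pages, but the flash absorbed 20 page writes plus a block erase.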

• TRIM

– Supported out of the box on Windows 7 and Windows Server 2008 R2.

• Some manufacturers are shipping a TRIM service that works with their driver.

– Acts like spare area for garbage collection.

– OS and file system tell the drive a block is empty.

– Filling the file system defeats TRIM.

– File fragmentation can hurt TRIM.

• Grow your files manually!

• Don’t run disk defrag!

Many things cause errors on Flash!

• Write Disturb

– Data cells NOT being written to are corrupted.

• Fixed with normal erase.

• Read Disturb

– Repeated reads on the same page affect other pages in the block.

• Fixed with normal erase.

• Charge Loss/Gain

– Transistors may gain or lose charge over time.

• Affects flash devices at rest or rarely accessed data.

• Fixed with normal erase.

All of these issues are generally dealt with very well using standard ECC techniques.

As cells are programmed, other cells may experience voltage change.

As cells are read, other cells in the same block can suffer voltage change.

If flash is at rest or rarely read, cells can suffer charge loss.
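
To show what "fixed by ECC" means in practice, here is a minimal sketch of a Hamming(7,4) code, which corrects any single flipped bit in a 7-bit codeword. This is a stand-in chosen for brevity, not what the slides describe: flash controllers typically use stronger codes such as BCH or LDPC over much larger sectors.

```python
def hamming_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword (positions 1..7)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4   # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    bad_position = s1 + 2 * s2 + 4 * s3   # 1-based; 0 means no error detected
    if bad_position:
        c[bad_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


stored = hamming_encode([1, 0, 1, 1])
stored[5] ^= 1                      # simulate one bit disturbed by a nearby read
print(hamming_decode(stored))       # the original data bits come back
```

The correction costs extra stored bits and extra work on every read, which is the "IO is still spent" point made later in the deck.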

• Not all drives are benchmarked the same.

• Short-stroking

– Only using a small portion of the drive.

– Allows for lots of spare capacity via TRIM.

• Huge queue depths.

– Increases latency.

– Can be unrealistic.

• Odd block transfer sizes.

– Random IO testing.

• Some use 512 byte while others use 4k.

– Sequential IO testing.

• Most use 128k.

• Some use 64k to better fit into large buffers.

• Some use 1 MB and high queue depths.
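
The link between queue depth and latency follows from Little's Law: outstanding I/Os equal IOPS multiplied by average latency. A minimal sketch; the IOPS figures below are made-up examples, not measurements from any drive.

```python
def mean_latency_ms(queue_depth: int, iops: float) -> float:
    """Little's Law rearranged: latency = outstanding I/Os / IOPS."""
    return queue_depth / iops * 1000.0


# Hypothetical benchmark results for the same drive at two queue depths.
print(mean_latency_ms(queue_depth=4, iops=60_000))     # low queue depth
print(mean_latency_ms(queue_depth=256, iops=120_000))  # very high queue depth
```

In this example, doubling the headline IOPS by raising the queue depth from 4 to 256 pushes average latency from well under a tenth of a millisecond to over two milliseconds.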

Read the numbers carefully.

◦ Random IO bench usually 4k.

SQL Server works on 8k.

◦ Sequential IO bench usually 128k.

SQL Server works on 64k to 128mb

◦ Queue depths set high.

SQL Server is usually configured for a low queue depth.
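
When a spec sheet quotes IOPS at 4k and your workload is 8k, it helps to convert both to throughput. A minimal sketch with hypothetical numbers; it assumes the drive is bandwidth-limited, which gives an upper bound rather than a prediction.

```python
def throughput_mb_s(iops: float, io_bytes: int) -> float:
    """Throughput in MB/s (decimal megabytes) for a given IOPS and I/O size."""
    return iops * io_bytes / 1_000_000


def max_iops_at_size(mb_per_s: float, io_bytes: int) -> float:
    """Upper bound on IOPS at another I/O size, assuming the same throughput."""
    return mb_per_s * 1_000_000 / io_bytes


quoted = throughput_mb_s(iops=40_000, io_bytes=4096)   # hypothetical 4k spec
print(quoted)                                          # MB/s behind the 4k number
print(max_iops_at_size(quoted, io_bytes=8192))         # ceiling for 8k SQL Server pages
```

The real 8k figure can land above or below a naive guess, so treat this as a sanity check and benchmark at the I/O sizes SQL Server actually issues.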

• SLC is ready “out of the box.”

– Requires much less infrastructure on disk to support robust write environments.

• MLC needs some help.

– Requires lots of spare area and smarter controllers to handle extra ECC.

– eMLC has all management functions built onto the chip.

• Both configured similarly.

– RAID of chips.

– TRIM, GC and Wear-Leveling

Longevity differences between devices can be huge.

Consumer grade drives are consumable.

◦ Aren’t rated for full drive writes.

Desktop drives are usually tested on a fraction of drive capacity!

◦ Aren’t rated for continuous writes.

It may say three-year life span.

It could be much shorter; look at total writes.
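
Checking a life span claim against total writes is simple division. A minimal sketch; the rated total and the daily write volumes are hypothetical, so substitute the figure from your drive's data sheet and your own measured workload.

```python
def years_of_life(rated_total_writes_tb: float, daily_writes_gb: float) -> float:
    """Years until the rated total writes are used up at a steady daily rate."""
    days = rated_total_writes_tb * 1000.0 / daily_writes_gb
    return days / 365.0


# Hypothetical drive rated for 72 TB of total writes.
print(round(years_of_life(72, daily_writes_gb=20), 1))    # light desktop use
print(round(years_of_life(72, daily_writes_gb=500), 1))   # busy database server
```

The same drive that outlives a desktop can be used up in a matter of months under a write-heavy server load.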

• SAS is the king of your heavy workloads.

• Command Queuing

– SAS supports up to 2^16, usually capped at 64.

– SATA supports up to 32.

• Error recovery and detection.

– SMART isn’t.

– SCSI command set is better.

• Duplex

– SAS is full duplex and dual ported per drive.

– SATA is half duplex and single ported.

• Multi-path IO

– Native to SAS at the drive level.

– Available to SATA via expanders.

• Flash comes in lots of form factors.

• Standard 2.5” and 3.5” drives,

• Fibre Attached

• Texas Memory System RAM-SAN 620

• Violin Memory

• PCIe add-in cards.

• Few “native” cards.

• Fusion-io

• Texas Memory System RAM-SAN 20

• Bundled solutions.

• LSI SSS6200

• OCZ Z-Drive

• OCZ Revodrive

• PCIe To Disk

• 2.5” form factor and plugs

• Skips SAS/SATA for direct PCIe lanes.

You MUST understand your workloads.

◦ Monitor virtual file stats

http://sqlserverio.com/2011/02/08/gather-virtual-file-statistics-using-t-sql-tsql2sday-15/

Track random vs. sequential

Track size of transfers

◦ Capture IO Patterns

http://sqlserverio.com/2010/06/15/fundamentals-of-storage-systems-capturing-io-patterns/

◦ Benchmark!

http://sqlserverio.com/2010/06/15/fundamentals-of-storage-testing-io-systems/
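
Tracking the size of transfers comes down to differencing two snapshots of the file statistics counters. A minimal sketch, assuming the snapshots are dictionaries keyed by column names from SQL Server's `sys.dm_io_virtual_file_stats`; the `io_profile` function and the sample values are hypothetical, and collecting the snapshots is left out.

```python
def io_profile(before: dict, after: dict) -> dict:
    """Average transfer size and latency for one file between two snapshots."""
    delta = {key: after[key] - before[key] for key in after}
    reads, writes = delta["num_of_reads"], delta["num_of_writes"]
    return {
        "avg_read_kb": delta["num_of_bytes_read"] / reads / 1024 if reads else 0.0,
        "avg_write_kb": delta["num_of_bytes_written"] / writes / 1024 if writes else 0.0,
        "avg_read_ms": delta["io_stall_read_ms"] / reads if reads else 0.0,
        "avg_write_ms": delta["io_stall_write_ms"] / writes if writes else 0.0,
    }


# Made-up counter values for illustration only.
snap1 = {"num_of_reads": 1000, "num_of_bytes_read": 8_192_000,
         "num_of_writes": 500, "num_of_bytes_written": 4_096_000,
         "io_stall_read_ms": 2000, "io_stall_write_ms": 1500}
snap2 = {"num_of_reads": 3000, "num_of_bytes_read": 139_264_000,
         "num_of_writes": 1500, "num_of_bytes_written": 12_288_000,
         "io_stall_read_ms": 5000, "io_stall_write_ms": 3500}
print(io_profile(snap1, snap2))
```

The counters are cumulative, so only the difference between snapshots describes the workload during the interval you care about. These counters do not tell you random from sequential; that needs a trace of the I/O pattern.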

• From new

– Best possible performance.

– Drive will never be this fast again.

• Previous writes affect future reads.

– Large sequential writes nice for GC.

– Small random writes slow GC down.

– Wait for GC to catch up when benching drive.

• Give the GC time to settle in going from small random to large sequential or vice versa.

• Steady state is what we are after.

• Performance over time slows.

– Cells wear out.

• Causes multiple attempts to read or write

• ECC saves you but the IO is still spent.
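
One way to decide when a benchmark run has reached steady state is to watch for the results to stop drifting. A minimal sketch under assumptions of my own: the five-sample window and 10% tolerance are arbitrary illustration values, not a standard, and the IOPS samples are made up.

```python
def is_steady(samples, window: int = 5, tolerance: float = 0.10) -> bool:
    """True when the last `window` samples all sit within tolerance of their mean."""
    if len(samples) < window:
        return False
    recent = samples[-window:]
    mean = sum(recent) / window
    return all(abs(value - mean) <= tolerance * mean for value in recent)


# Hypothetical IOPS readings from a new drive under sustained random writes.
fresh = [90_000, 70_000, 50_000, 40_000, 35_000]
settled = fresh + [30_000, 30_500, 29_800, 30_200, 30_100]
print(is_steady(fresh))     # still falling from the fresh-out-of-box peak
print(is_steady(settled))   # results have flattened out
```

Only numbers collected after the check passes reflect what the drive will deliver in production.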

• Not all drives are equal.

• Understand drives are tuned for workloads.

– Desktop drives don’t favor 100% random writes…

– Enterprise drives are expected to get punished.

• Fix it with firmware.

– Most drives will have edge cases.

• OCZ and Intel drives suffered poor performance as the drives were used over time.

• Be wary of updates that erase your drive.

– Gives you a temporary performance boost.

• Flash read performance is great, sequential or random.

• Flash write performance is complicated, and can be a problem if you don’t manage it.

• Flash wears out over time.

– Not nearly the issue it used to be, but you must understand your write patterns.

– Plan for over-provisioning and TRIM support.

• It can have a huge impact on how much storage you actually buy.

– Flash can be error prone.

• Be aware that writes and reads can cause data corruption.

Solid State Storage Deep Dive

Wes Brown

Das könnte Ihnen auch gefallen

- Sqlrally Orlando Understanding Storage Systems and SQL ServerDokument38 SeitenSqlrally Orlando Understanding Storage Systems and SQL ServerJeyakumar NarasingamNoch keine Bewertungen

- Storage Through the Ages: A Brief History of Data Storage TechnologyDokument40 SeitenStorage Through the Ages: A Brief History of Data Storage TechnologytaleNoch keine Bewertungen

- Disksraid 09 PDFDokument44 SeitenDisksraid 09 PDFPankaj BharangarNoch keine Bewertungen

- 4- MemoryDokument41 Seiten4- MemoryAliaa TarekNoch keine Bewertungen

- Chapter 12Dokument23 SeitenChapter 12Hein HtetNoch keine Bewertungen

- Lecture #3-4Dokument78 SeitenLecture #3-4Basem HeshamNoch keine Bewertungen

- 11 MemoryDokument41 Seiten11 MemoryKhoa PhamNoch keine Bewertungen

- Challenges of SSD Forensic Analysis: by Digital AssemblyDokument44 SeitenChallenges of SSD Forensic Analysis: by Digital AssemblyMohd Asri XSxNoch keine Bewertungen

- Disk and RAID FundamentalsDokument35 SeitenDisk and RAID FundamentalsRajesh BhardwajNoch keine Bewertungen

- Chapter 5 Memory OrganizationDokument75 SeitenChapter 5 Memory Organizationendris yimerNoch keine Bewertungen

- 05-Chap6-External Memory LEC 1Dokument56 Seiten05-Chap6-External Memory LEC 1abdul shakoorNoch keine Bewertungen

- Chap 5 Memory System p2Dokument34 SeitenChap 5 Memory System p2bapdeptrai567Noch keine Bewertungen

- Common Exadata Mistakes: Andy Colvin Practice Director, Enkitec IOUG Collaborate 2014Dokument49 SeitenCommon Exadata Mistakes: Andy Colvin Practice Director, Enkitec IOUG Collaborate 2014Mohammad Abdul AzeezNoch keine Bewertungen

- Chapter 6 ReportDokument48 SeitenChapter 6 ReportShiina KawaiiNoch keine Bewertungen

- Challenges of SSD Forensic Analysis (37p)Dokument37 SeitenChallenges of SSD Forensic Analysis (37p)Akis KaragiannisNoch keine Bewertungen

- Seminar SSD Yash AgarwalDokument16 SeitenSeminar SSD Yash AgarwalkishanNoch keine Bewertungen

- Chapter 6 - External MemoryDokument50 SeitenChapter 6 - External MemoryHPManchesterNoch keine Bewertungen

- Ch02 Storage MediaDokument24 SeitenCh02 Storage MediaHidayatAsriNoch keine Bewertungen

- Flash Memory: Presented By: Amit Raj 09EE6406 Instrumentation EnggDokument21 SeitenFlash Memory: Presented By: Amit Raj 09EE6406 Instrumentation Enggrajelec100% (1)

- Week 9 Internal External MemoriesDokument43 SeitenWeek 9 Internal External Memoriesmuhammad maazNoch keine Bewertungen

- Everything You Need to Know About Solid State DrivesDokument24 SeitenEverything You Need to Know About Solid State DrivesBhavya AmbaliyaNoch keine Bewertungen

- Chapter 6Dokument27 SeitenChapter 6murad25.cseNoch keine Bewertungen

- 04 Cache Memory ComparcDokument47 Seiten04 Cache Memory ComparcMekonnen WubshetNoch keine Bewertungen

- Comarch - Week 5 MemoryDokument31 SeitenComarch - Week 5 MemoryT VinassaurNoch keine Bewertungen

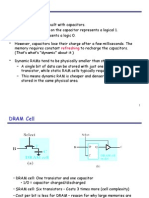

- Dynamic Memory Refreshes CapacitorsDokument8 SeitenDynamic Memory Refreshes CapacitorsNava KrishnanNoch keine Bewertungen

- Flash SeminarDokument21 SeitenFlash Seminarbandekhoda111100% (1)

- William Stallings Computer Organization and Architecture 8 Edition External MemoryDokument35 SeitenWilliam Stallings Computer Organization and Architecture 8 Edition External MemoryabbasNoch keine Bewertungen

- Secondary Storage IntroductionDokument82 SeitenSecondary Storage IntroductionharisiddhanthiNoch keine Bewertungen

- Magnetic DiskDokument15 SeitenMagnetic DiskAmit CoolNoch keine Bewertungen

- MySQL and Linux Tuning - Better TogetherDokument26 SeitenMySQL and Linux Tuning - Better TogetherOleksiy Kovyrin100% (1)

- Best Practices For PerformanceDokument0 SeitenBest Practices For PerformancedivandannNoch keine Bewertungen

- MemoryDokument97 SeitenMemorynerdmehNoch keine Bewertungen

- Memory Sub-System: CT101 - Computing SystemsDokument46 SeitenMemory Sub-System: CT101 - Computing SystemstopherskiNoch keine Bewertungen

- 03-Chap4-Cache Memory MappingDokument24 Seiten03-Chap4-Cache Memory Mappingabdul shakoorNoch keine Bewertungen

- Managing Database Storage with Disks and RAIDDokument47 SeitenManaging Database Storage with Disks and RAIDGopi BalaNoch keine Bewertungen

- William Stallings Computer Organization and Architecture 8 Edition External MemoryDokument57 SeitenWilliam Stallings Computer Organization and Architecture 8 Edition External MemoryTirusew AbereNoch keine Bewertungen

- Memory Hierarchy: Haresh Dagale Dept of ESEDokument32 SeitenMemory Hierarchy: Haresh Dagale Dept of ESEmailstonaikNoch keine Bewertungen

- Storing Data: Disks and Files: (R&G Chapter 9)Dokument39 SeitenStoring Data: Disks and Files: (R&G Chapter 9)raw.junkNoch keine Bewertungen

- Types of External Memory Covered in Chapter 6Dokument24 SeitenTypes of External Memory Covered in Chapter 6Pravin KatreNoch keine Bewertungen

- Memory TechnologyDokument6 SeitenMemory Technologymanishbhardwaj8131Noch keine Bewertungen

- Iseries Memory TuningDokument81 SeitenIseries Memory Tuningsparvez100% (1)

- Memories: Reviewing Memory Hierarchy ConceptsDokument37 SeitenMemories: Reviewing Memory Hierarchy ConceptsshubhamvslaviNoch keine Bewertungen

- 06 - External MemoryDokument47 Seiten06 - External MemoryAyush TripathiNoch keine Bewertungen

- A Level CS CH 3 9618Dokument14 SeitenA Level CS CH 3 9618calvin esauNoch keine Bewertungen

- CAODokument65 SeitenCAOPriya SinghNoch keine Bewertungen

- Semiconductor Memory Types and Operations ExplainedDokument33 SeitenSemiconductor Memory Types and Operations ExplainedHPManchester100% (1)

- FALLSEM2021-22 ITE2001 TH VL2021220105091 Reference Material II 01-10-2021 3.4TypesofMemoriesDokument18 SeitenFALLSEM2021-22 ITE2001 TH VL2021220105091 Reference Material II 01-10-2021 3.4TypesofMemoriesJai ShreeNoch keine Bewertungen

- William Stallings Computer Organization and Architecture 6th Edition Internal MemoryDokument27 SeitenWilliam Stallings Computer Organization and Architecture 6th Edition Internal MemoryAhmed Hassan MohammedNoch keine Bewertungen

- Computer Architecture and Organization: Lecture10: Rotating DisksDokument21 SeitenComputer Architecture and Organization: Lecture10: Rotating DisksMatthew R. PonNoch keine Bewertungen

- Raid 5Dokument26 SeitenRaid 5Ritu ShrivastavaNoch keine Bewertungen

- Operating Systems: - Redundant Array of Independent DisksDokument26 SeitenOperating Systems: - Redundant Array of Independent DisksAndrés PDNoch keine Bewertungen

- Group 6Dokument41 SeitenGroup 6Walid_Sassi_TunNoch keine Bewertungen

- Pre-Mid Assignment Computer Science11Dokument10 SeitenPre-Mid Assignment Computer Science11faryal ahmadNoch keine Bewertungen

- UNIT4 - Memory OrganizationDokument65 SeitenUNIT4 - Memory OrganizationParas DwivediNoch keine Bewertungen

- WINSEM2020-21 - SWE1005 - TH - VL2020210504111 - Reference - Material - I - 12-May-2021 - Device SubsystemsDokument25 SeitenWINSEM2020-21 - SWE1005 - TH - VL2020210504111 - Reference - Material - I - 12-May-2021 - Device SubsystemsSharmila BalamuruganNoch keine Bewertungen

- FS16 Saratech 04 PerformanceTuningDokument38 SeitenFS16 Saratech 04 PerformanceTuningStefano MilaniNoch keine Bewertungen

- COMPUTER COMPONENTSDokument31 SeitenCOMPUTER COMPONENTSGirish RaoNoch keine Bewertungen

- ROM MemoryDokument56 SeitenROM MemoryAndre Deyniel CabreraNoch keine Bewertungen

- Memory: ClassificationsDokument43 SeitenMemory: ClassificationsKumar ReddyNoch keine Bewertungen

- FreeBSD Mastery: Storage Essentials: IT Mastery, #4Von EverandFreeBSD Mastery: Storage Essentials: IT Mastery, #4Noch keine Bewertungen

- Call Time Capture: Mentions The Total Time Taken To Call A MethodDokument5 SeitenCall Time Capture: Mentions The Total Time Taken To Call A MethodJeyakumar NarasingamNoch keine Bewertungen

- Master The InterviewDokument1 SeiteMaster The InterviewJeyakumar NarasingamNoch keine Bewertungen

- MCM SQL PDFDokument8 SeitenMCM SQL PDFJeyakumar NarasingamNoch keine Bewertungen

- Understanding Storage Systems and SQL Server Ssday57Dokument34 SeitenUnderstanding Storage Systems and SQL Server Ssday57Jeyakumar NarasingamNoch keine Bewertungen

- Microsoft SQL Server Architecture: Tom Hamilton - America's Channel Database CSEDokument18 SeitenMicrosoft SQL Server Architecture: Tom Hamilton - America's Channel Database CSEJeyakumar NarasingamNoch keine Bewertungen

- 71 DR UthappaDokument2 Seiten71 DR UthappaJeyakumar NarasingamNoch keine Bewertungen

- C:a I Iqth. Iri Aidsnli: Tran3formDokument6 SeitenC:a I Iqth. Iri Aidsnli: Tran3formJeyakumar NarasingamNoch keine Bewertungen

- Index Numbers of Wholesale Price in IndiaDokument5 SeitenIndex Numbers of Wholesale Price in IndiaJeyakumar NarasingamNoch keine Bewertungen

- Economics PovertyDokument16 SeitenEconomics PovertyJeyakumar NarasingamNoch keine Bewertungen

- GS Economics OnlineDokument20 SeitenGS Economics OnlineJeyakumar NarasingamNoch keine Bewertungen

- Right to Information Key to Good GovernanceDokument22 SeitenRight to Information Key to Good Governancepannuks_73Noch keine Bewertungen

- Reading ComprehensionDokument5 SeitenReading ComprehensionJeyakumar NarasingamNoch keine Bewertungen

- Reading ComprehensionDokument5 SeitenReading ComprehensionJeyakumar NarasingamNoch keine Bewertungen

- (WWW - Entrance-Exam - Net) - All Bank PO PaperDokument13 Seiten(WWW - Entrance-Exam - Net) - All Bank PO PaperAditya Anil KhaitanNoch keine Bewertungen

- Instructions For Update Installation of Elsawin 4.10Dokument16 SeitenInstructions For Update Installation of Elsawin 4.10garga_cata1983Noch keine Bewertungen

- In Touch ArchestrADokument84 SeitenIn Touch ArchestrAItalo MontecinosNoch keine Bewertungen

- CV Manish IPDokument4 SeitenCV Manish IPManish KumarNoch keine Bewertungen

- Garmin Swim: Owner's ManualDokument12 SeitenGarmin Swim: Owner's ManualCiureanu CristianNoch keine Bewertungen

- Simple Linear Regression (Part 2) : 1 Software R and Regression AnalysisDokument14 SeitenSimple Linear Regression (Part 2) : 1 Software R and Regression AnalysisMousumi Kayet100% (1)

- Lit RevDokument9 SeitenLit RevBattuDedSec AC418Noch keine Bewertungen

- Systemd Vs SysVinitDokument1 SeiteSystemd Vs SysVinitAhmed AbdelfattahNoch keine Bewertungen

- SIEMplifying Security Monitoring For SMBsDokument9 SeitenSIEMplifying Security Monitoring For SMBsiopdescargo100% (1)

- Receiving Receipts FAQ'sDokument13 SeitenReceiving Receipts FAQ'spratyusha_3Noch keine Bewertungen

- IT 160 Final Lab Project - Chad Brown (VM37)Dokument16 SeitenIT 160 Final Lab Project - Chad Brown (VM37)Chad BrownNoch keine Bewertungen

- The Fluoride Ion Selective Electrode ExperimentDokument5 SeitenThe Fluoride Ion Selective Electrode Experimentlisaaliyo0% (1)

- READMEDokument2 SeitenREADMEWongRongJingNoch keine Bewertungen

- CAPTCHA PresentationDokument28 SeitenCAPTCHA Presentationbsbharath198770% (10)

- Industrial Robotics: Robot Anatomy, Control, Programming & ApplicationsDokument30 SeitenIndustrial Robotics: Robot Anatomy, Control, Programming & ApplicationsKiran VargheseNoch keine Bewertungen

- A corners-first solution method for Rubik's cubeDokument7 SeitenA corners-first solution method for Rubik's cubeVani MuthukrishnanNoch keine Bewertungen

- Optimize Oracle General Ledger average balances configurationDokument16 SeitenOptimize Oracle General Ledger average balances configurationSingh Anish K.Noch keine Bewertungen

- Android Manage Hotel BookingsDokument2 SeitenAndroid Manage Hotel BookingsShahjahan AlamNoch keine Bewertungen

- Punjab and Sind Bank account statement for Kirana storeDokument16 SeitenPunjab and Sind Bank account statement for Kirana storedfgdsgfsNoch keine Bewertungen

- Defcon 17 Sumit Siddharth SQL Injection WormDokument19 SeitenDefcon 17 Sumit Siddharth SQL Injection Wormabdel_lakNoch keine Bewertungen

- YouTube Brand Channel RedesignDokument60 SeitenYouTube Brand Channel Redesignchuck_nottisNoch keine Bewertungen

- Astral Column Pipe PricelistDokument4 SeitenAstral Column Pipe PricelistVaishamNoch keine Bewertungen

- Roland Mdx-650 Milling TutorialDokument28 SeitenRoland Mdx-650 Milling TutorialRavelly TelloNoch keine Bewertungen

- Create Article Master and Cost from SpecificationDokument159 SeitenCreate Article Master and Cost from SpecificationGurushantha DoddamaniNoch keine Bewertungen

- Swot MatrixDokument2 SeitenSwot MatrixAltaf HussainNoch keine Bewertungen

- SQL Language Quick Reference PDFDokument156 SeitenSQL Language Quick Reference PDFNguessan KouadioNoch keine Bewertungen

- Open Source For You 2016Dokument108 SeitenOpen Source For You 2016Eduardo Farías ReyesNoch keine Bewertungen

- Applying Graph Theory to Map ColoringDokument25 SeitenApplying Graph Theory to Map ColoringAnonymous BOreSFNoch keine Bewertungen

- Verifying The Iu-PS Interface: About This ChapterDokument8 SeitenVerifying The Iu-PS Interface: About This ChapterMohsenNoch keine Bewertungen

- Debugging RFC Calls From The XIDokument4 SeitenDebugging RFC Calls From The XIManish SinghNoch keine Bewertungen

- Data Center Design GuideDokument123 SeitenData Center Design Guidealaa678100% (1)